A Lightweight Robust Training Method for Defending Model Poisoning Attacks in Federated Learning Assisted UAV Networks

Abstract

1. Introduction

- We introduce FedULite, a computationally efficient FL framework for UAV-assisted networks. It uses unsupervised contrastive learning and lightweight architectures so that resource-constrained clients can learn robust representations within the computational and storage limits of UAVs.

- We develop a robust adaptive aggregation method at the server that combines cosine-similarity-based update filtering with dimension-wise aggregation under adaptive learning rates. It counters both traditional and adaptive model poisoning strategies, including stealthy and coordinated attacks.

- We validate FedULite experimentally, showing that it improves robustness and efficiency in UAV-assisted federated learning while converging reliably and resisting adversarial disruption across multiple datasets, attacks, and FL settings.
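The server-side idea in the second contribution (filter updates by cosine similarity, then aggregate each dimension with its own learning rate) can be illustrated with a minimal sketch. This is not the paper's algorithm: the coordinate-wise median reference, the `sim_threshold` parameter, and the sign-agreement scaling of the learning rate are all assumptions chosen for illustration, and `robust_aggregate` is a hypothetical name.

```python
import math
from statistics import median


def cosine(u, v):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u > 0 and norm_v > 0 else 0.0


def sign(x):
    """Return -1, 0, or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def robust_aggregate(updates, sim_threshold=0.0, server_lr=1.0):
    """Filter client updates by cosine similarity, then aggregate per dimension.

    Assumptions (not taken from the paper): the reference direction is the
    coordinate-wise median of all updates, and the per-dimension learning
    rate is scaled by how strongly the kept clients agree on the sign.
    """
    dim = len(updates[0])
    reference = [median(u[d] for u in updates) for d in range(dim)]

    # Step 1: keep only updates that point roughly along the reference.
    kept = [i for i, u in enumerate(updates) if cosine(u, reference) > sim_threshold]
    if not kept:
        return [0.0] * dim, kept

    # Step 2: average each dimension and shrink it when signs disagree.
    aggregated = []
    for d in range(dim):
        column = [updates[i][d] for i in kept]
        mean = sum(column) / len(column)
        agreement = abs(sum(sign(x) for x in column)) / len(column)
        aggregated.append(server_lr * agreement * mean)
    return aggregated, kept


# Three similar benign updates and one large update pointing the other way.
updates = [[1.0, 1.0], [1.0, 0.8], [0.9, 1.2], [-5.0, -5.0]]
aggregated, kept = robust_aggregate(updates)
print(kept, aggregated)
```

In this toy input the fourth update has negative cosine similarity with the median direction and is dropped before averaging, so its large magnitude does not affect the result.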

2. Related Work

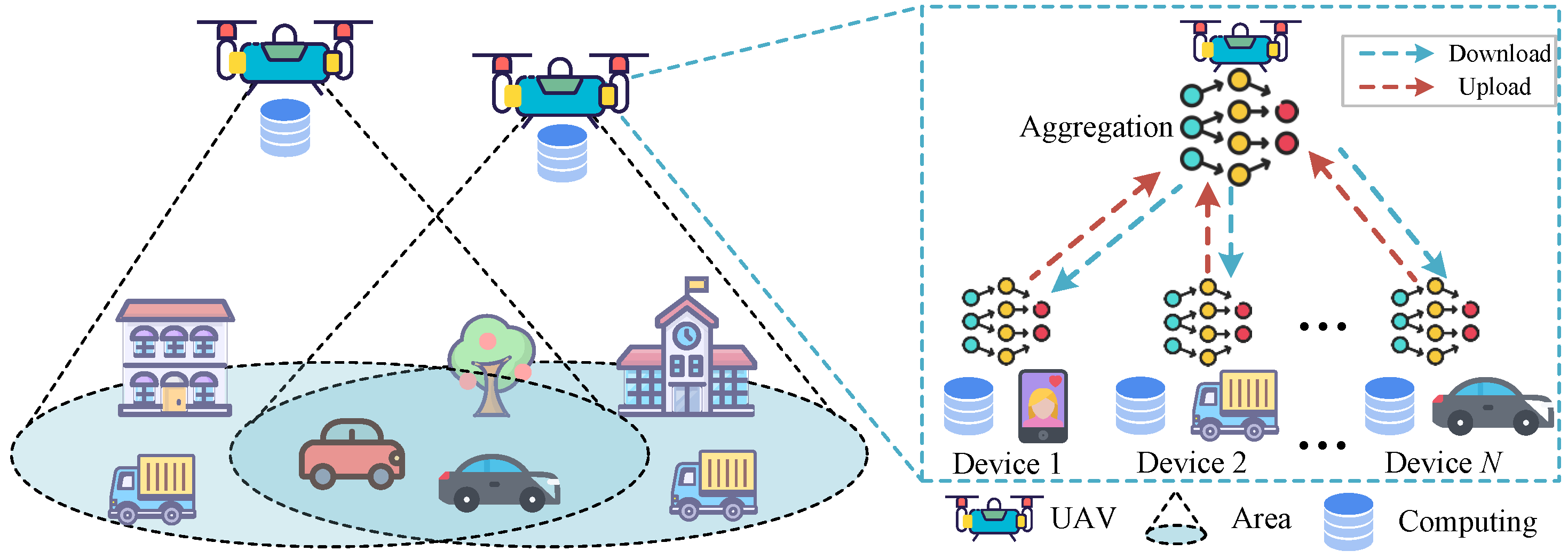

2.1. Federated Learning Assisted UAV Networks

2.2. Poisoning Attacks in Federated Learning

2.3. Poisoning Defenses in Federated Learning

3. Threat Model

4. Method

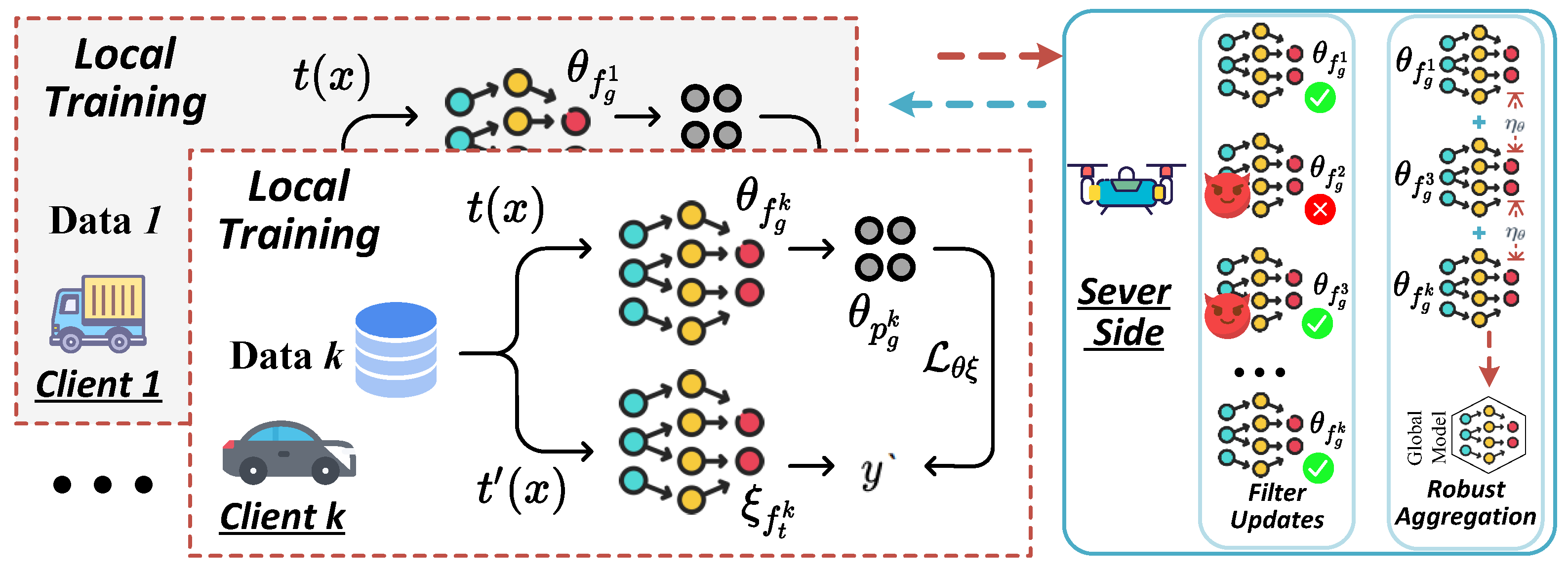

4.1. Overview of FedULite

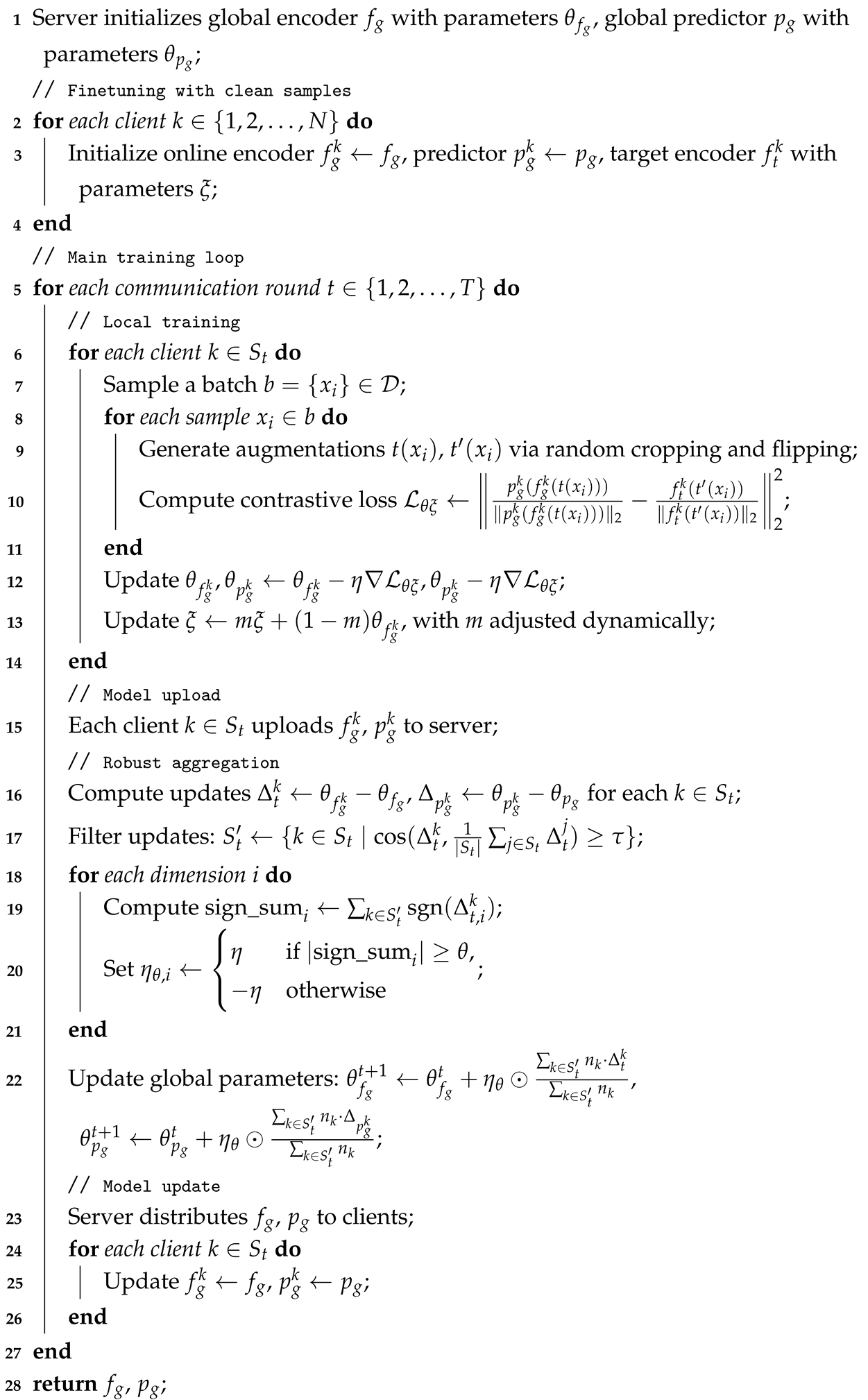

- Local Training: Each client performs unsupervised representation learning with a lightweight contrastive loss, training an online encoder, a predictor, and a target encoder, with anomaly detection to counter data poisoning.

- Model Aggregation: The server aggregates client models using a robust strategy that evaluates update consistency, producing an updated global encoder and global predictor.

- Model Update: The server distributes the updated global encoder and predictor, which clients adopt to update their local models.
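To make the local training step concrete, the sketch below shows one common way an online encoder, a predictor, and a target encoder interact. It assumes a BYOL-style objective: the predictor output is aligned with the target encoder output, and the target parameters follow the online parameters through an exponential moving average. The source does not confirm this exact loss or update rule, and the names `alignment_loss`, `ema_update`, and `momentum` are hypothetical.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length; an all-zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def alignment_loss(prediction, target):
    """Return 2 - 2 * cos(prediction, target).

    The value is 0 when the predictor output and the target-encoder output
    point in the same direction and 4 when they point in opposite directions.
    """
    p = l2_normalize(prediction)
    t = l2_normalize(target)
    return 2.0 - 2.0 * sum(a * b for a, b in zip(p, t))


def ema_update(target_params, online_params, momentum=0.99):
    """Move target parameters toward online parameters (assumed EMA rule)."""
    return [
        momentum * t + (1.0 - momentum) * o
        for t, o in zip(target_params, online_params)
    ]


# Two augmented views of one sample, already passed through the networks.
prediction_view_a = [0.9, 0.1]   # predictor(online_encoder(view_a))
target_view_b = [1.0, 0.0]       # target_encoder(view_b), treated as a constant
print(alignment_loss(prediction_view_a, target_view_b))

# After an optimizer step on the online branch, refresh the target branch.
print(ema_update([0.0, 0.0], [1.0, 2.0], momentum=0.9))
```

An objective of this form needs no negative pairs or labels, which is one reason such losses are attractive for memory-limited clients.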

4.2. Local Robust Training

Algorithm 1: FedULite process

Data: number of clients N, communication rounds T, local epochs

Result: global encoder, global predictor
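The inputs and outputs listed for Algorithm 1 suggest the usual federated round structure, which the following toy sketch illustrates. It is a skeleton under stated assumptions, not the FedULite procedure: each client minimizes a simple quadratic instead of a contrastive loss, plain averaging stands in for the robust aggregation rule, and a single flat parameter list stands in for the encoder and predictor. The names `local_update` and `run_rounds` are hypothetical.

```python
def local_update(params, client_optimum, local_epochs, lr=0.5):
    """Toy local training: gradient descent on 0.5 * (w - client_optimum)^2."""
    w = list(params)
    for _ in range(local_epochs):
        w = [wi - lr * (wi - ci) for wi, ci in zip(w, client_optimum)]
    return w


def run_rounds(client_optima, rounds, local_epochs):
    """Run `rounds` communication rounds over len(client_optima) clients."""
    dim = len(client_optima[0])
    global_params = [0.0] * dim
    for _ in range(rounds):
        # Model update: every client starts from the current global parameters.
        local_models = [
            local_update(global_params, optimum, local_epochs)
            for optimum in client_optima
        ]
        # Model aggregation: plain averaging is a placeholder for the
        # robust rule applied at the server.
        global_params = [sum(col) / len(local_models) for col in zip(*local_models)]
    return global_params


# N = 2 clients, T = 20 rounds, 2 local epochs per round.
print(run_rounds([[1.0], [3.0]], rounds=20, local_epochs=2))
```

With benign clients the global parameters settle near the average of the client optima; a robust aggregation rule replaces the averaging line when some clients may be malicious.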

4.3. Robust Adaptive Aggregation

5. Evaluation

5.1. Experimental Setup

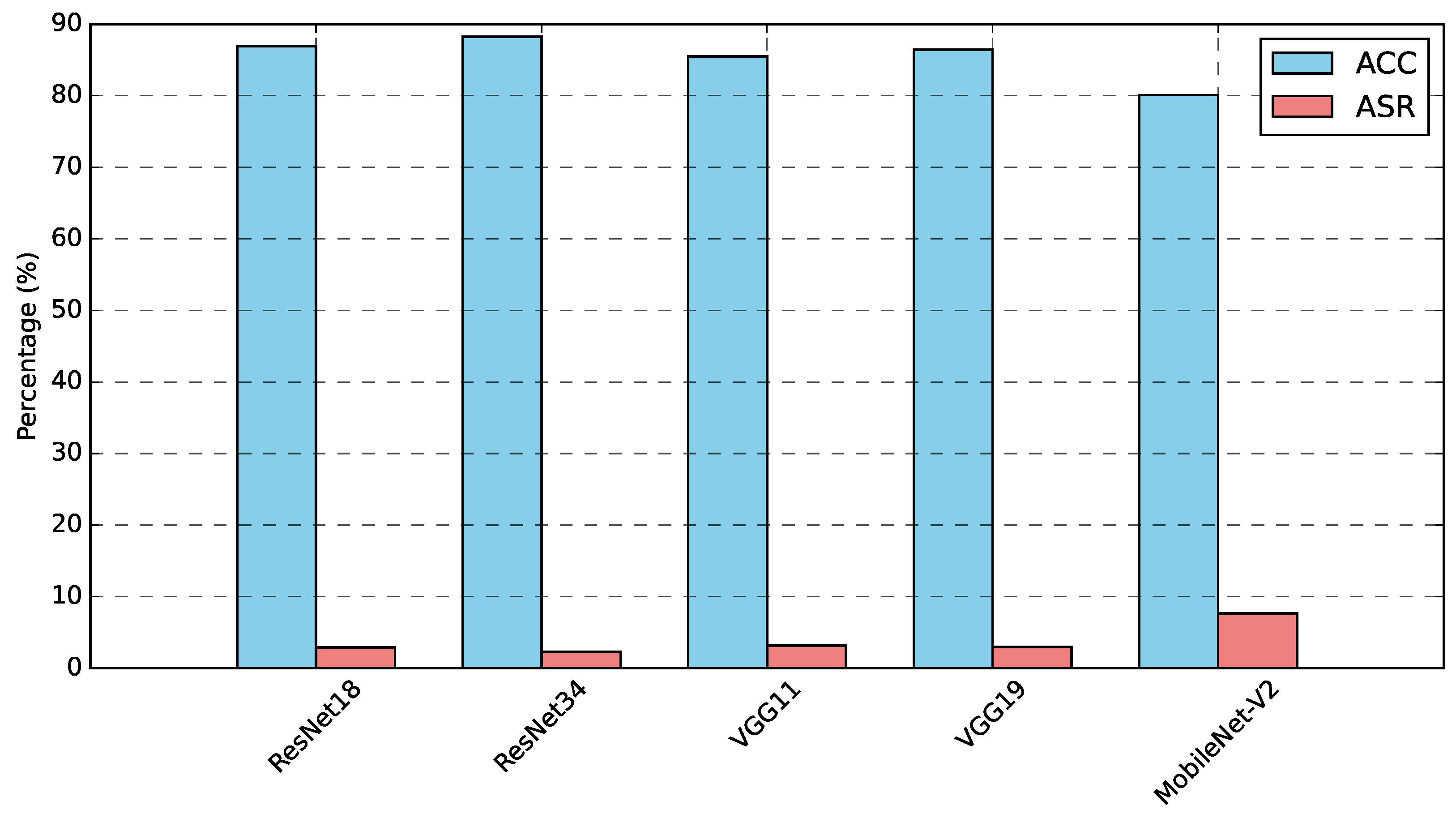

5.1.1. Datasets and Models

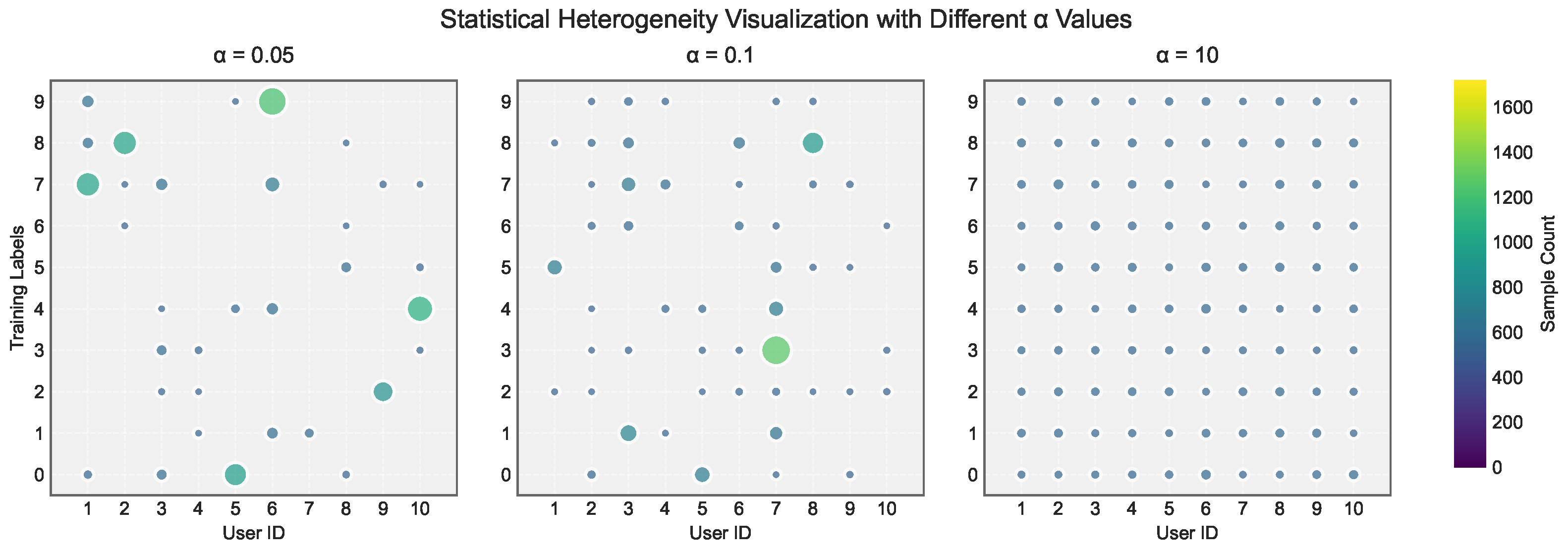

5.1.2. Federated Learning Settings

5.1.3. Attacks and Defenses Settings

5.1.4. Evaluation Metrics

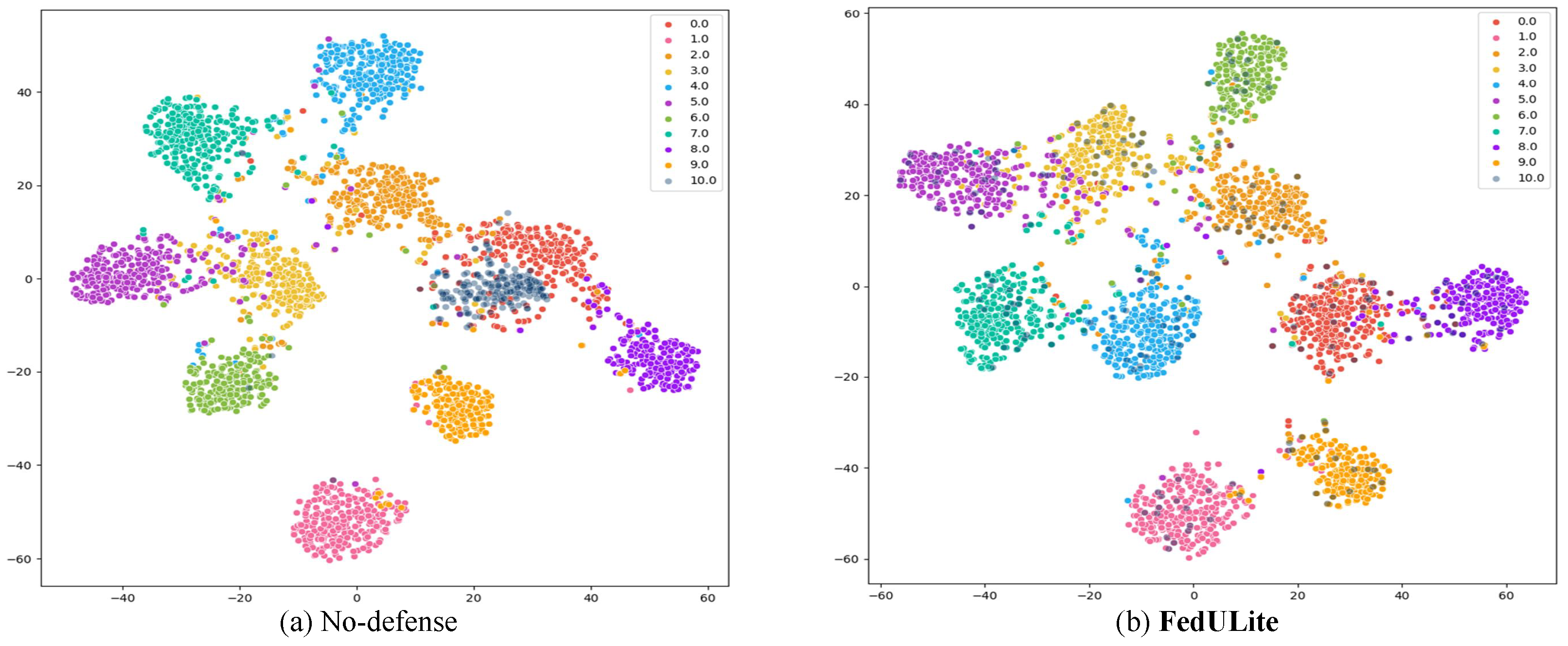

5.2. Experimental Results

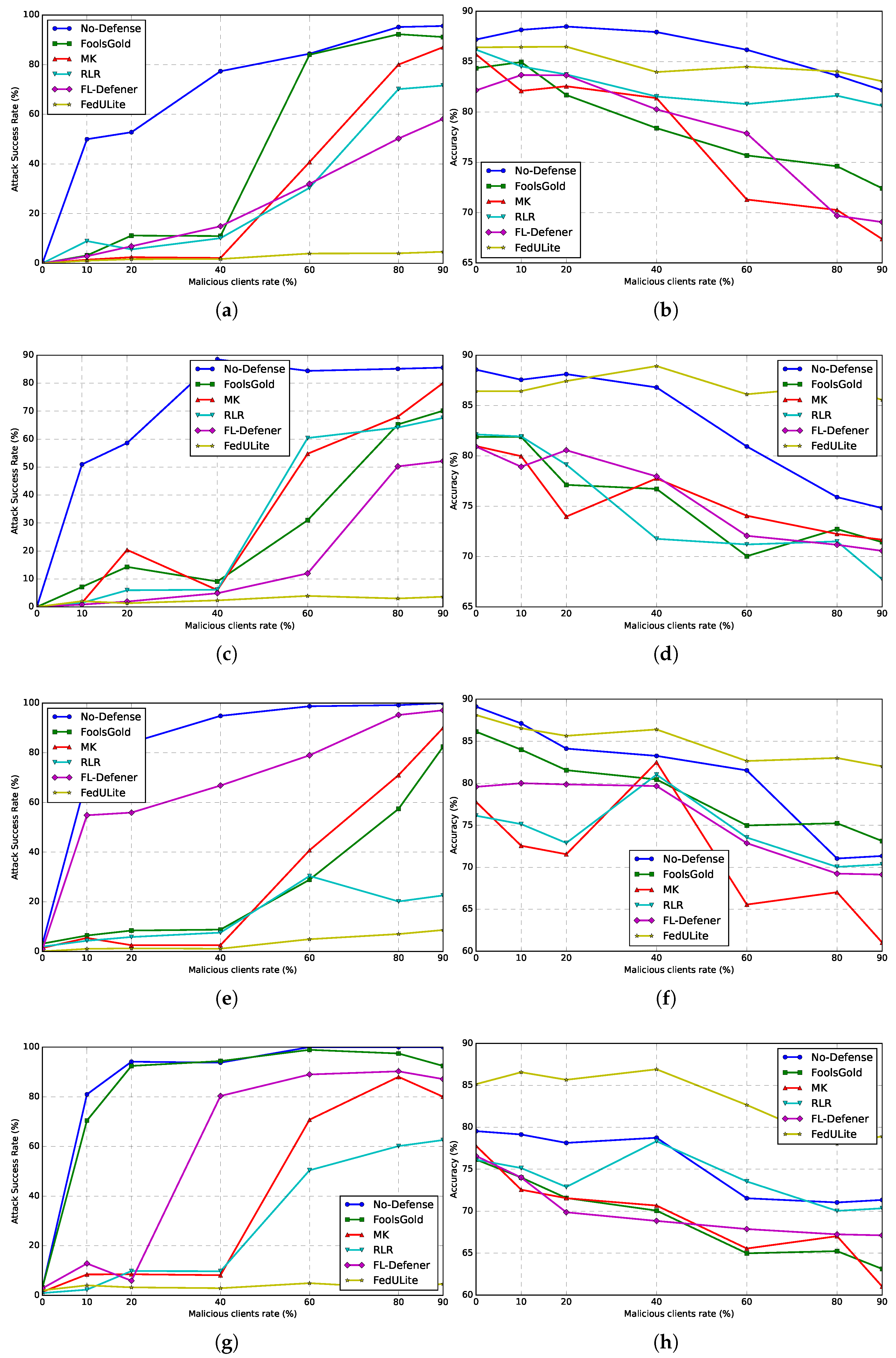

5.2.1. Comparison

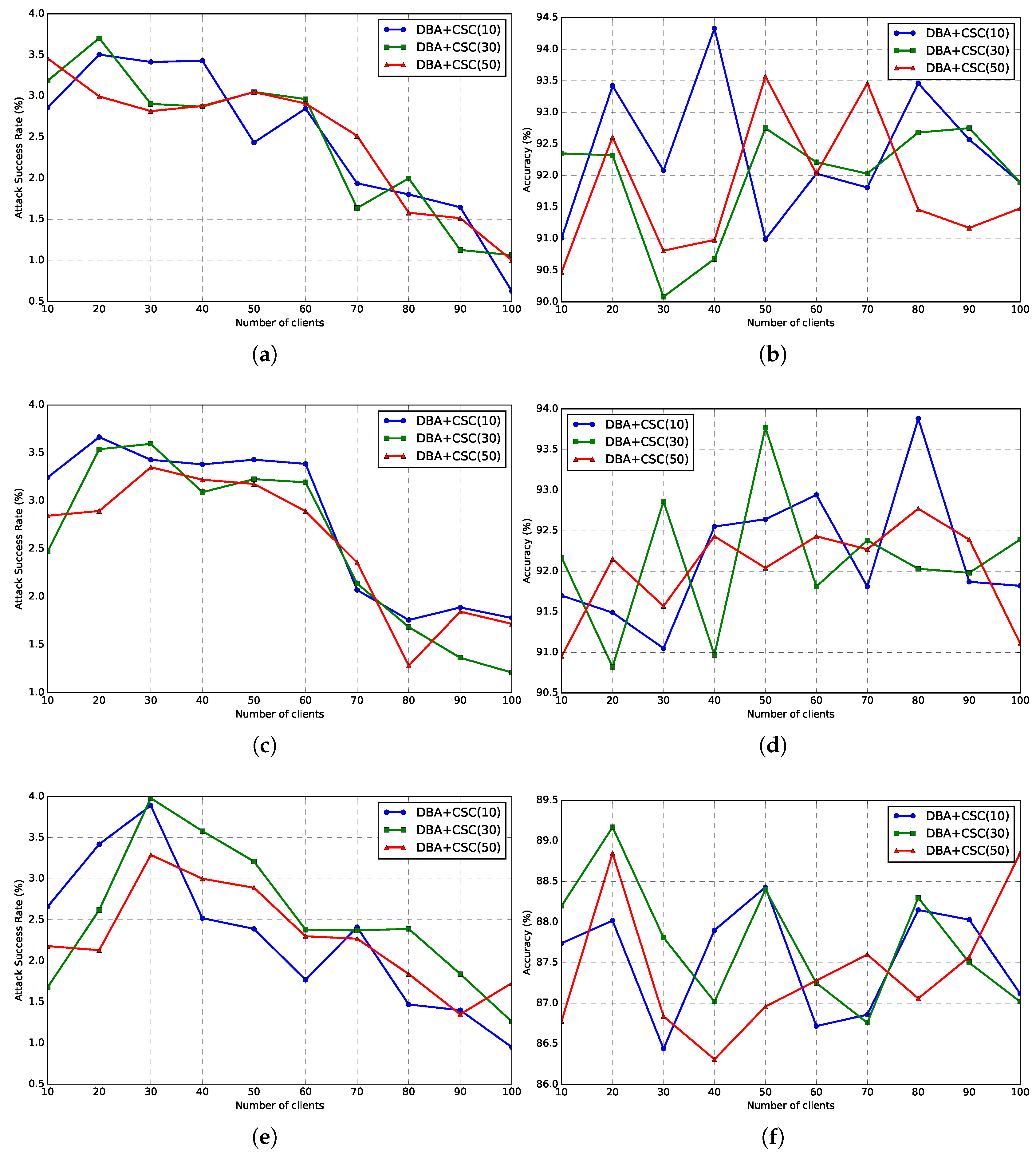

5.2.2. Different FL and Attack Settings

5.3. Ablation and Parameter Sensitivity Analysis

5.3.1. Robust Adaptive Aggregation

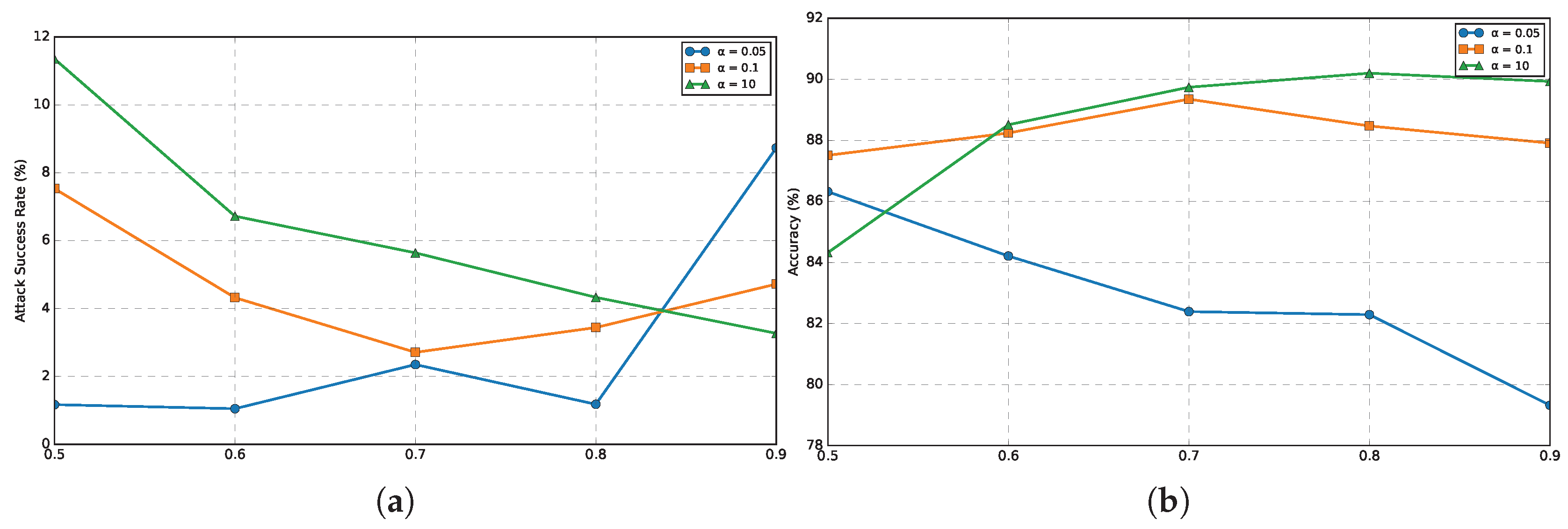

5.3.2. Impact of

5.3.3. Computational Overhead

5.3.4. Impact of Local Epoch

5.4. Adaptive Attack

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset | Attack | No-Defense ASR | No-Defense ACC | FoolsGold ASR | FoolsGold ACC | Multi-Krum ASR | Multi-Krum ACC | RLR ASR | RLR ACC | FL-Defender ASR | FL-Defender ACC | FedULite ASR | FedULite ACC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MNIST | CSC | 87.33 | 82.08 | 8.45 | 80.26 | 4.71 | 80.33 | 6.72 | 82.49 | 75.54 | 79.58 | 1.97 | 92.08 |
| MNIST | PGD | 89.88 | 91.94 | 13.06 | 82.37 | 4.58 | 88.46 | 0.46 | 84.21 | 73.13 | 86.81 | 2.41 | 93.66 |
| MNIST | CCA | 91.59 | 92.58 | 12.87 | 82.29 | 4.12 | 87.49 | 4.16 | 88.75 | 86.12 | 82.55 | 0.67 | 90.43 |
| MNIST | DBA | 99.1 | 90.27 | 96.92 | 78.44 | 6.91 | 88.08 | 5.6 | 87.96 | 87.48 | 82.86 | 4.33 | 90.2 |
| F-MNIST | CSC | 79.06 | 82.54 | 6.8 | 81.6 | 3.89 | 80.34 | 5.1 | 81.44 | 4.35 | 80.16 | 0.25 | 90.74 |
| F-MNIST | PGD | 90.54 | 90.17 | 12.13 | 79.35 | 2.2 | 88.68 | 2.69 | 86.57 | 2.96 | 82.44 | 2.4 | 91.12 |
| F-MNIST | CCA | 90.56 | 94.34 | 9.5 | 81.42 | 2.86 | 89.17 | 4.68 | 84.56 | 2.57 | 84.63 | 1.63 | 88.16 |
| F-MNIST | DBA | 99.11 | 89.95 | 97.7 | 79.41 | 7.42 | 86.32 | 7.32 | 83.84 | 10.69 | 82.82 | 3.26 | 89.7 |
| CIFAR-10 | CSC | 77.34 | 87.93 | 10.97 | 78.39 | 2.14 | 81.37 | 10.1 | 81.53 | 14.9 | 80.25 | 1.72 | 83.96 |
| CIFAR-10 | PGD | 88.5 | 86.8 | 9.08 | 76.72 | 6.01 | 77.76 | 6.16 | 71.76 | 4.88 | 77.96 | 2.34 | 88.92 |
| CIFAR-10 | CCA | 94.87 | 83.27 | 8.8 | 80.46 | 2.53 | 82.51 | 7.59 | 81.03 | 66.82 | 79.68 | 1.11 | 86.42 |
| CIFAR-10 | DBA | 93.74 | 78.75 | 94.36 | 70.07 | 8.14 | 70.67 | 9.74 | 78.34 | 80.32 | 68.84 | 2.91 | 86.91 |

| Dataset | Setting | No-Defense ASR | No-Defense ACC | FoolsGold ASR | FoolsGold ACC | Multi-Krum ASR | Multi-Krum ACC | RLR ASR | RLR ACC | FL-Defender ASR | FL-Defender ACC | FedULite ASR | FedULite ACC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MNIST | = 0.05 | 85.07 | 85.62 | 81.26 | 75.41 | 40.96 | 80.86 | 42.88 | 78.04 | 12.53 | 79.11 | 1.18 | 82.29 |
| MNIST | = 0.1 | 89.58 | 87.22 | 87.55 | 79.36 | 22.59 | 81.96 | 27.24 | 81.42 | 8.29 | 79.7 | 3.44 | 88.47 |
| MNIST | = 10 | 99.1 | 90.27 | 96.92 | 78.44 | 6.91 | 88.08 | 5.6 | 87.96 | 87.48 | 82.86 | 4.33 | 90.2 |
| E-MNIST | = 0.05 | 81.5 | 66.46 | 80.45 | 64.26 | 35.31 | 60.37 | 39.66 | 62.77 | 7.27 | 62.39 | 1.54 | 65.86 |
| E-MNIST | = 0.1 | 86.54 | 74.3 | 82.33 | 67.69 | 37.25 | 63.66 | 39.13 | 64.63 | 10.26 | 63.14 | 5.48 | 73.25 |
| E-MNIST | = 10 | 95.25 | 75.09 | 88.23 | 68.82 | 17.67 | 72.5 | 12.94 | 72.79 | 6.19 | 70.61 | 3.48 | 73.7 |

| Dataset | RAA-DFU ASR | RAA-DFU ACC | RAA-DRA ASR | RAA-DRA ACC | RAA-DALL ASR | RAA-DALL ACC | FedULite ASR | FedULite ACC |
|---|---|---|---|---|---|---|---|---|
| MNIST | 12.08 | 89.13 | 26.78 | 85.42 | 34.17 | 81.04 | 4.33 | 90.2 |
| F-MNIST | 14.37 | 86.61 | 32.02 | 84.45 | 43.55 | 80.13 | 3.26 | 89.7 |
| CIFAR-10 | 13.74 | 83.48 | 39.45 | 84.3 | 46.06 | 72.26 | 2.91 | 86.91 |

| Setting | Encoder | Predictor | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
| a = 0.05 | 0.48 | 0.51 | 0.46 | 0.52 | 0.49 | 0.37 | 0.54 | 0.29 |
| a = 0.1 | 0.37 | 0.41 | 0.42 | 0.46 | 0.36 | 0.28 | 0.36 | 0.26 |
| a = 10 | 0.36 | 0.39 | 0.35 | 0.37 | 0.32 | 0.26 | 0.31 | 0.25 |

| Method | MobileNetV2 Average Training Time (s/epoch) | MobileNetV2 Memory Usage (MB) | ResNet50 Average Training Time (s/epoch) | ResNet50 Memory Usage (MB) |
|---|---|---|---|---|
| FedAvg | 0.85 | 20 | 3.71 | 90 |
| FL-Defender | 1.32 | 28 | 5.82 | 135 |
| FedULite | 0.91 | 22 | 4.05 | 96 |

| Attack | Metric | No-Defense | E = 1 | E = 2 | E = 4 | E = 6 | E = 8 |
|---|---|---|---|---|---|---|---|
| CSC | ASR | 77.34 | 1.72 | 1.54 | 1.13 | 1.52 | 0.96 |
| CSC | ACC | 87.93 | 83.96 | 84.14 | 86.71 | 87.15 | 87.24 |
| PGD | ASR | 88.5 | 2.34 | 2.56 | 1.79 | 1.62 | 1.57 |
| PGD | ACC | 86.8 | 88.92 | 88.76 | 89.01 | 89.21 | 89.35 |
| CCA | ASR | 94.87 | 1.11 | 1.04 | 0.53 | 0 | 0 |
| CCA | ACC | 83.27 | 86.42 | 86.72 | 87.58 | 87.42 | 87.73 |
| DBA | ASR | 93.74 | 2.91 | 2.18 | 1.98 | 1.76 | 1.05 |
| DBA | ACC | 78.75 | 86.91 | 85.74 | 86.95 | 87.13 | 87.42 |

| Dataset (adaptive attack) | No-Defense ASR | No-Defense ACC | FedULite ASR | FedULite ACC |
|---|---|---|---|---|
| MNIST | 90.63 ± 0.98 | 90.18 ± 0.72 | 5.42 ± 1.32 | 90.72 ± 2.71 |
| F-MNIST | 88.92 ± 1.73 | 89.72 ± 1.89 | 3.58 ± 2.21 | 90.18 ± 1.98 |
| CIFAR-10 | 89.15 ± 2.15 | 83.24 ± 5.73 | 6.72 ± 1.54 | 88.74 ± 3.46 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, L.; Zhai, W.; Bu, X.; Sun, M.; Zhu, C. A Lightweight Robust Training Method for Defending Model Poisoning Attacks in Federated Learning Assisted UAV Networks. Drones 2025, 9, 528. https://doi.org/10.3390/drones9080528
