FAEM: Fast Autonomous Exploration for UAV in Large-Scale Unknown Environments Using LiDAR-Based Mapping

Abstract

1. Introduction

- (1) We introduce the occlusion-free ellipsoid, a novel concept enabling low-complexity, high-quality viewpoint generation via geometric constraint solving.

- (2) We propose a globally optimized hierarchical exploration framework. First, it performs globally guided topological kinodynamic path searching on an incremental roadmap, generating multiple candidate paths that satisfy kinodynamic constraints and span diverse topologies. It then selects the optimal global path for visiting high-gain viewpoints and generates highly maneuverable, energy-efficient flight trajectories along it, balancing exploration efficiency against computational cost. Compared with most existing methods, this framework substantially reduces both memory footprint and computation time.

- (3) We also introduce an adaptive dynamic replanning strategy. It combines a dynamic start-point selection mechanism with a real-time replanning policy to keep the flight trajectory continuous and stable, thereby improving exploration efficiency.

- (4)

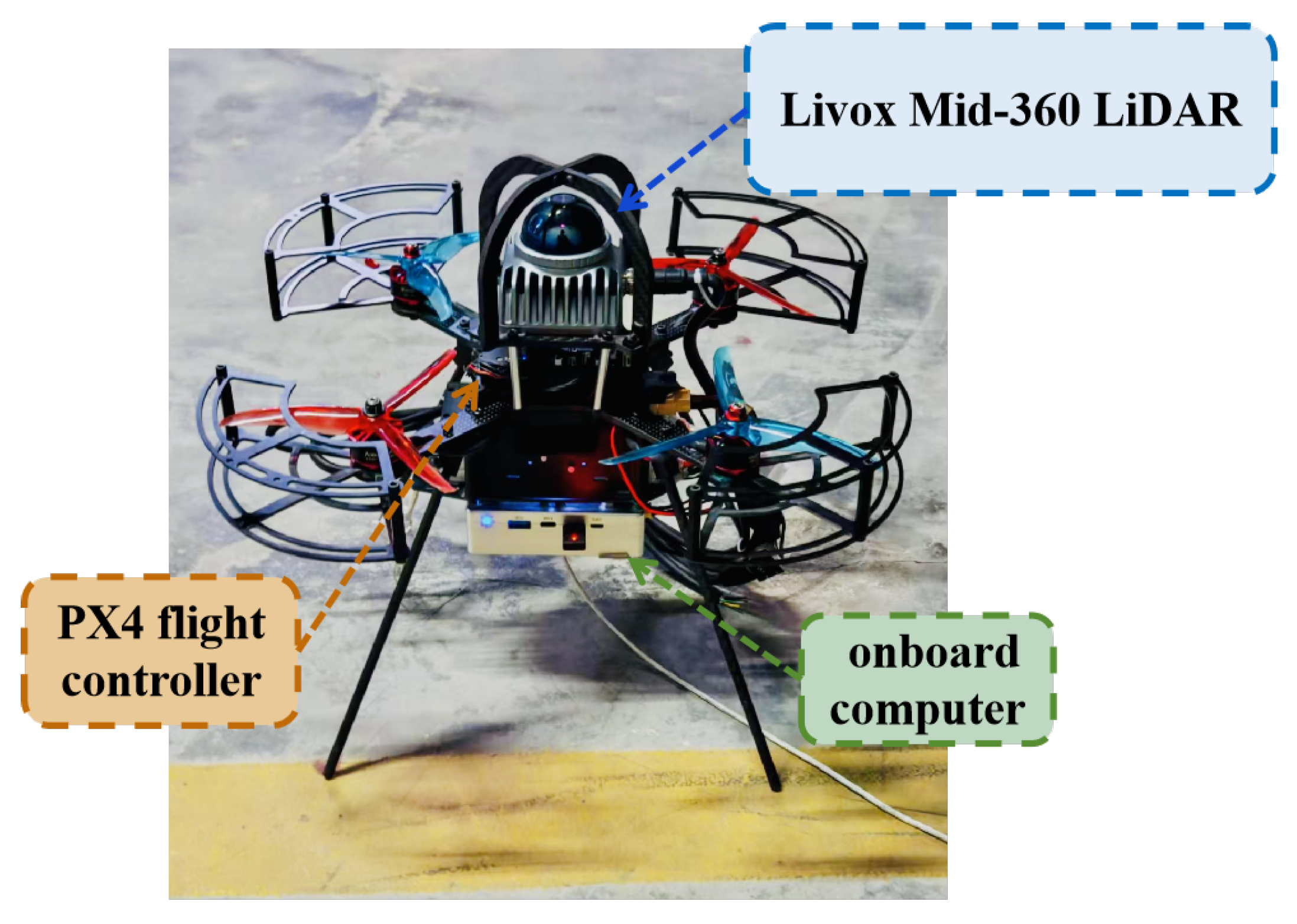

- The proposed method was successfully validated on an autonomous quadrotor platform equipped with LiDAR navigation. Extensive simulations and physical experiments validate its effectiveness, demonstrating significant advantages in memory usage optimization, exploration efficiency, and computational speed. The system also demonstrates excellent autonomous navigation capabilities in large-scale, complex, and cluttered scenarios.

2. Related Works

2.1. Sampling-Based Exploration Methods

2.2. Frontier-Based Exploration Algorithms

2.3. Hybrid Frontier-Based and Sampling-Based Algorithms

2.4. Quadrotor Trajectory Planning

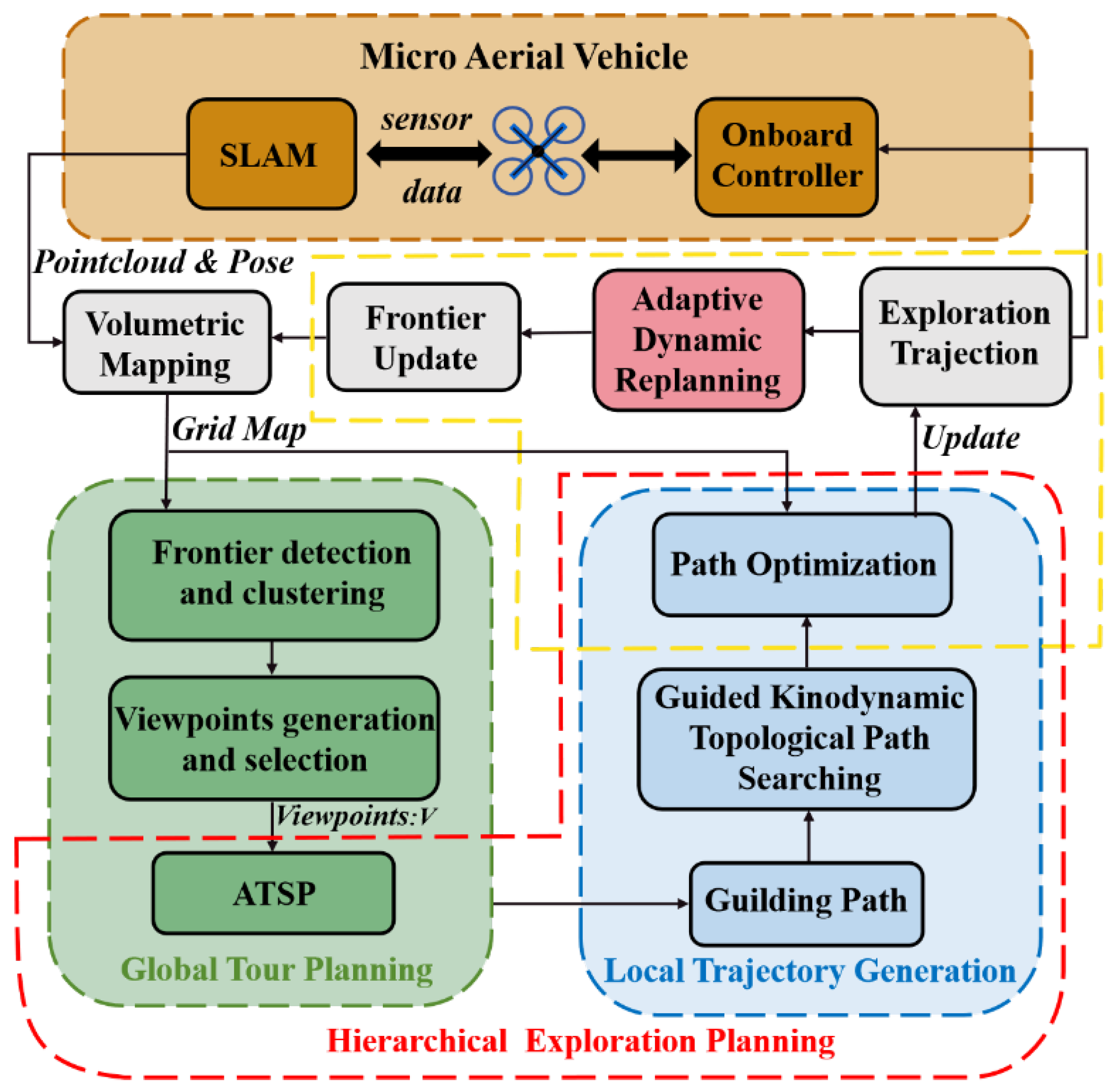

3. System Overview

4. Proposed Approach

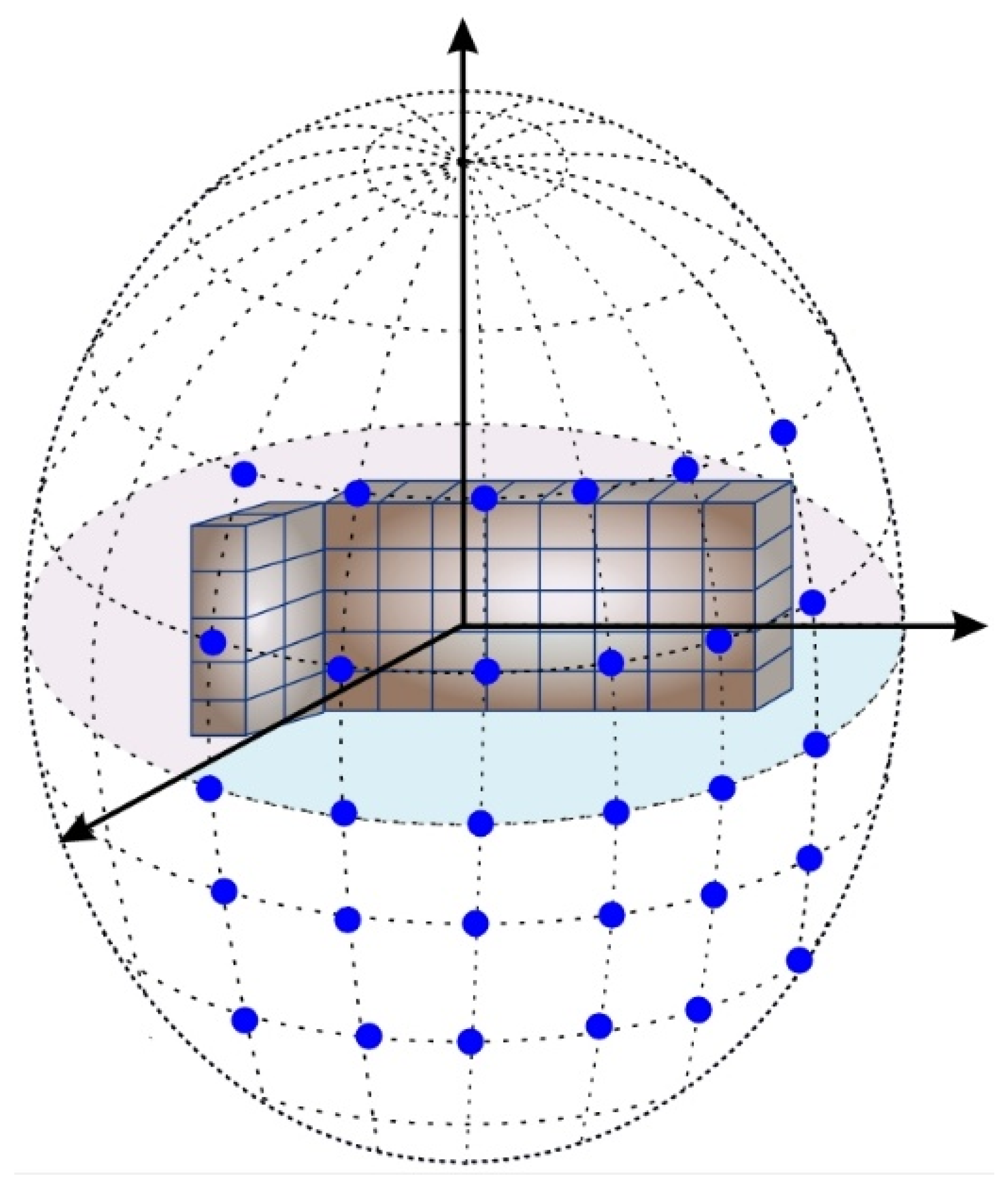

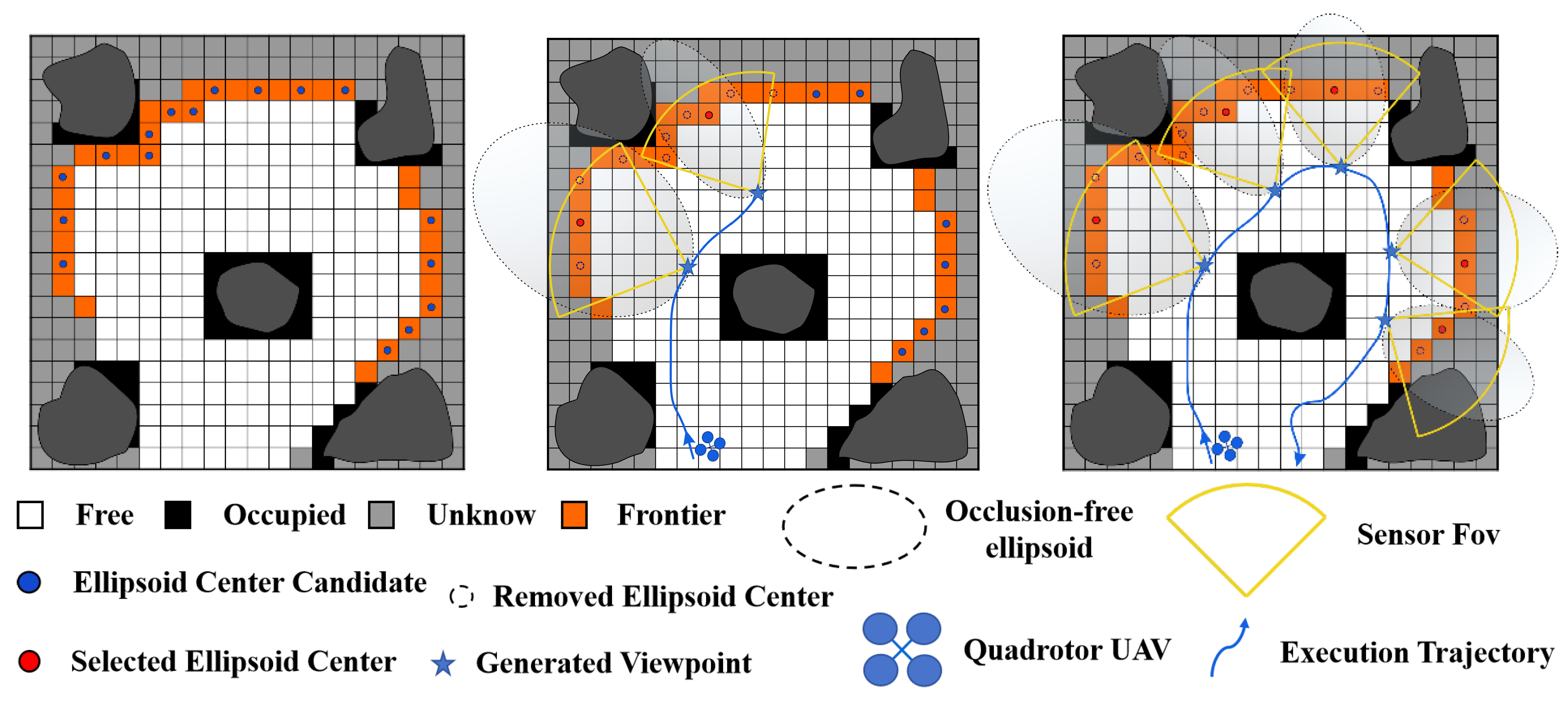

4.1. Occlusion-Free Ellipsoid

4.2. Viewpoints Generation

Algorithm 1: Generate viewpoints with ellipsoids
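Contribution (1) describes the occlusion-free ellipsoid only at a high level. The geometric property that makes such a region useful for viewpoint generation is convexity: if an ellipsoid contains no occupied space, the straight segment between a viewpoint inside it and any frontier point inside it also stays inside the ellipsoid and is therefore unobstructed. The Python sketch below illustrates this property under our own assumptions; it is not the authors' Algorithm 1. Obstacles are treated as occupied points (for example voxel centers), the ellipsoid is given by a center, a rotation matrix whose columns are its principal axes, and three semi-axis lengths, and sensor range and field of view are ignored. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]  # rows of a 3x3 rotation matrix


def to_local(p: Vec3, center: Vec3, rot: Mat3) -> Vec3:
    """Express p in the ellipsoid frame, i.e. R^T (p - center).

    Assumption: the columns of ``rot`` are the ellipsoid's principal axes
    written in world coordinates.
    """
    d = [p[k] - center[k] for k in range(3)]
    return tuple(sum(rot[j][i] * d[j] for j in range(3)) for i in range(3))


def inside(p: Vec3, center: Vec3, rot: Mat3, semi_axes: Vec3) -> bool:
    """True if p lies in the closed ellipsoid sum_i (x_i / a_i)^2 <= 1."""
    x = to_local(p, center, rot)
    return sum((x[i] / semi_axes[i]) ** 2 for i in range(3)) <= 1.0


def is_occlusion_free(center: Vec3, rot: Mat3, semi_axes: Vec3,
                      occupied: Sequence[Vec3]) -> bool:
    """Hypothetical test: no occupied point lies in the (closed) ellipsoid.

    Treating voxels as points is an approximation; a conservative variant
    would shrink the semi-axes by half a voxel before testing.
    """
    return not any(inside(q, center, rot, semi_axes) for q in occupied)


def visible_frontiers(viewpoint: Vec3, center: Vec3, rot: Mat3,
                      semi_axes: Vec3, occupied: Sequence[Vec3],
                      frontiers: Sequence[Vec3]) -> List[Vec3]:
    """Frontier points guaranteed to be in line of sight from the viewpoint.

    By convexity, the segment between two points of the ellipsoid stays in
    it, so if the ellipsoid is obstacle-free every such segment is clear.
    """
    if not inside(viewpoint, center, rot, semi_axes):
        raise ValueError("viewpoint must lie inside the ellipsoid")
    if not is_occlusion_free(center, rot, semi_axes, occupied):
        return []
    return [f for f in frontiers if inside(f, center, rot, semi_axes)]
```

For example, with an identity rotation, semi-axes (4, 2, 1) centered at the origin and obstacles at (5, 0, 0) and (0, 3, 0), a viewpoint at the origin is guaranteed to see the frontier points (3, 0, 0) and (0, 1.5, 0.5), while (0, 0, 2) falls outside the ellipsoid and is not certified. The check is cheap (a quadratic form per point), which is consistent with the low-complexity motivation stated in contribution (1).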

4.3. Global Tour Planning

5. Hierarchical Exploration Planning

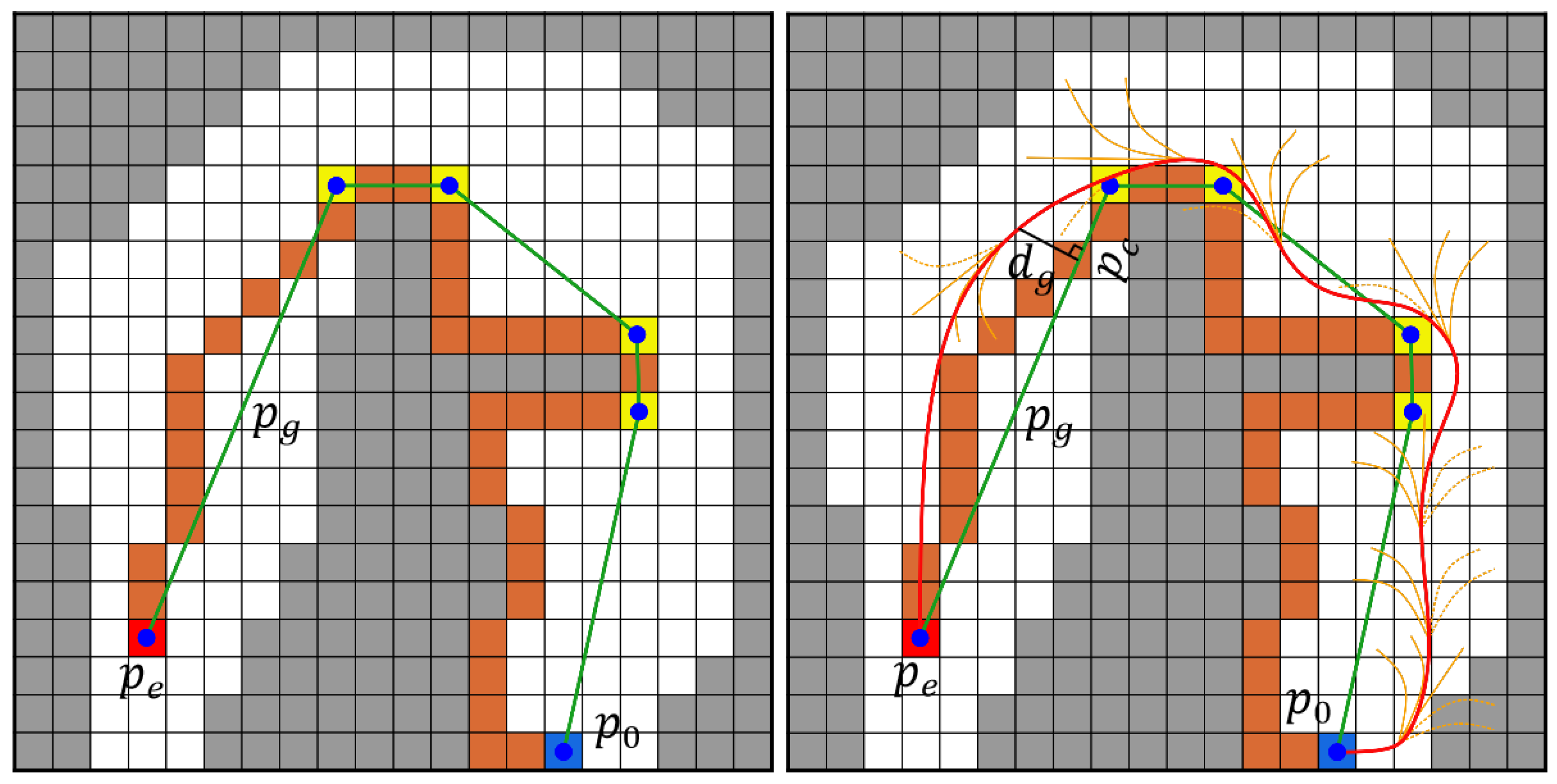

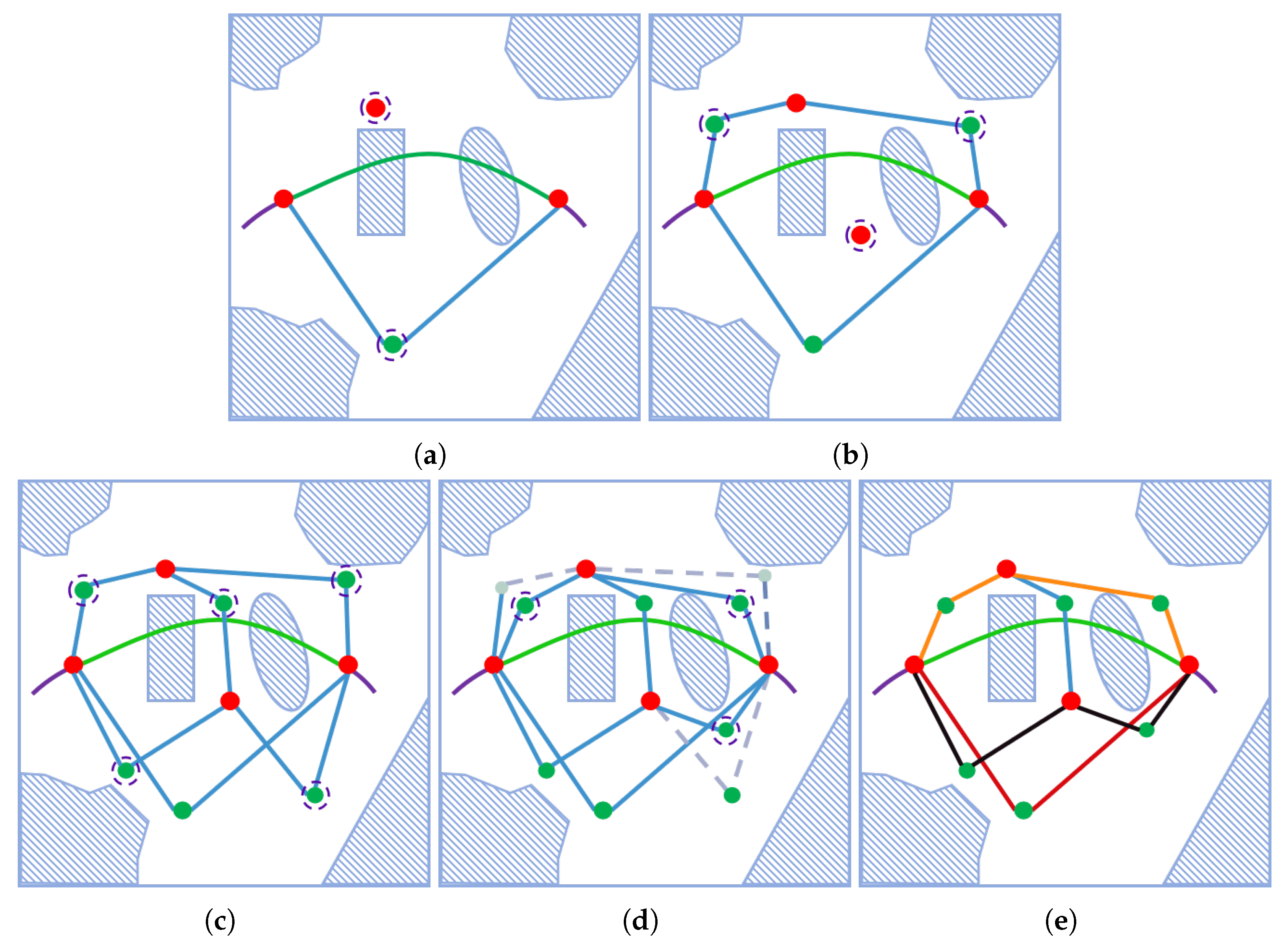

5.1. Global Kinodynamic Topological Path Searching

Algorithm 2: Kinodynamic Topological Roadmap
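Contribution (2) states that the roadmap search returns several candidate paths covering diverse topologies. One common way to decide whether two candidate paths are topologically redundant, used with visibility-based roadmaps, is a uniform visibility deformation check: sample both paths at the same normalized arc-length positions and test whether every pair of corresponding points can see each other. The Python sketch below assumes 3D polyline paths and spherical obstacles, and keeps only the shortest path of each class. It does not model kinodynamic constraints, incremental roadmap construction, or the paper's path-selection cost, and whether FAEM uses this exact equivalence test is an assumption; all names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Sphere = Tuple[Point, float]  # hypothetical obstacle model: (center, radius)


def path_length(path: Sequence[Point]) -> float:
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def resample(path: Sequence[Point], n: int) -> List[Point]:
    """Return n >= 2 points spaced uniformly in arc length along a polyline."""
    total = path_length(path)
    out, seg, acc = [], 0, 0.0  # acc = arc length at the start of segment seg
    for k in range(n):
        target = total * k / (n - 1)
        while seg < len(path) - 2 and acc + math.dist(path[seg], path[seg + 1]) < target:
            acc += math.dist(path[seg], path[seg + 1])
            seg += 1
        a, b = path[seg], path[seg + 1]
        seg_len = math.dist(a, b)
        t = 0.0 if seg_len == 0 else min(1.0, (target - acc) / seg_len)
        out.append(tuple(a[i] + t * (b[i] - a[i]) for i in range(3)))
    return out


def seg_point_dist(a: Point, b: Point, c: Point) -> float:
    """Euclidean distance from point c to segment ab."""
    ab = [b[i] - a[i] for i in range(3)]
    ac = [c[i] - a[i] for i in range(3)]
    denom = sum(x * x for x in ab)
    t = 0.0 if denom == 0 else max(0.0, min(1.0, sum(ab[i] * ac[i] for i in range(3)) / denom))
    return math.dist([a[i] + t * ab[i] for i in range(3)], c)


def segment_free(a: Point, b: Point, obstacles: Sequence[Sphere]) -> bool:
    return all(seg_point_dist(a, b, c) > r for c, r in obstacles)


def same_topology(p1, p2, obstacles, n: int = 20) -> bool:
    """Paths are treated as equivalent if all corresponding samples see each other."""
    return all(segment_free(a, b, obstacles) for a, b in zip(resample(p1, n), resample(p2, n)))


def distinct_paths(candidates, obstacles, n: int = 20):
    """Keep the shortest representative of each topology class."""
    kept = []
    for p in sorted(candidates, key=path_length):
        if not any(same_topology(p, k, obstacles, n) for k in kept):
            kept.append(p)
    return kept
```

With one spherical obstacle between start and goal, two detours passing on the same side collapse into one class, while a detour on the opposite side is kept as a distinct candidate. Keeping one representative per class bounds the number of candidates that a later, more expensive trajectory evaluation has to process.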

5.2. Local Trajectory Generation

5.3. Trajectory Optimization

5.4. Adaptive Dynamic Replanning
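Contribution (3) names two ingredients of the replanning strategy: dynamic start-point selection and a real-time replanning policy. A widely used way to keep trajectories continuous is to start the new plan not from the current state but from the state the vehicle will reach once planning finishes, so the new trajectory can be spliced onto the old one with matching position, velocity and acceleration. The Python sketch below shows that idea together with a simple trigger rule. The single-piece polynomial trajectory, the fixed planning budget and the three triggers are all assumptions made for illustration, not the rules used in FAEM; every name is hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PolyTraj:
    """Single-piece polynomial trajectory (hypothetical representation).

    coeffs[axis][k] is the coefficient of t**k for that axis; t is measured
    from the start of the trajectory and is valid on [0, duration].
    """
    coeffs: List[List[float]]
    duration: float

    def derivative(self, t: float, order: int) -> Vec3:
        values = []
        for c in self.coeffs:
            s = 0.0
            for k in range(order, len(c)):
                factor = 1.0
                for m in range(order):
                    factor *= k - m  # k * (k-1) * ... * (k-order+1)
                s += factor * c[k] * t ** (k - order)
            values.append(s)
        return tuple(values)

    def state(self, t: float) -> Tuple[Vec3, Vec3, Vec3]:
        """Position, velocity and acceleration at t (clamped to the trajectory)."""
        t = min(max(t, 0.0), self.duration)
        return self.derivative(t, 0), self.derivative(t, 1), self.derivative(t, 2)


def select_start(traj: PolyTraj, t_now: float, planning_budget: float):
    """Plan from where the vehicle will be when planning ends.

    The vehicle keeps flying the old trajectory for ``planning_budget``
    seconds, so starting there avoids a jump when the new plan takes over.
    """
    t_start = min(t_now + planning_budget, traj.duration)
    return t_start, traj.state(t_start)


def should_replan(traj: PolyTraj, t_now: float, remaining_threshold: float,
                  target_covered: bool, collision_ahead: bool) -> bool:
    """Hypothetical triggers: goal already explored, new obstacle, or near the end."""
    near_end = traj.duration - t_now < remaining_threshold
    return target_covered or collision_ahead or near_end
```

For instance, if the current trajectory is x(t) = t + 0.5t², y(t) = 2t, z(t) = 1 with a 4 s duration, t_now = 0.5 s and a 0.5 s planning budget, the new plan starts at t = 1 s from position (1.5, 2, 1), velocity (2, 2, 0) and acceleration (1, 0, 0). Choosing the budget is a trade-off: too short and planning may not finish in time, too long and the vehicle reacts late to new map information.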

6. Experimental Results

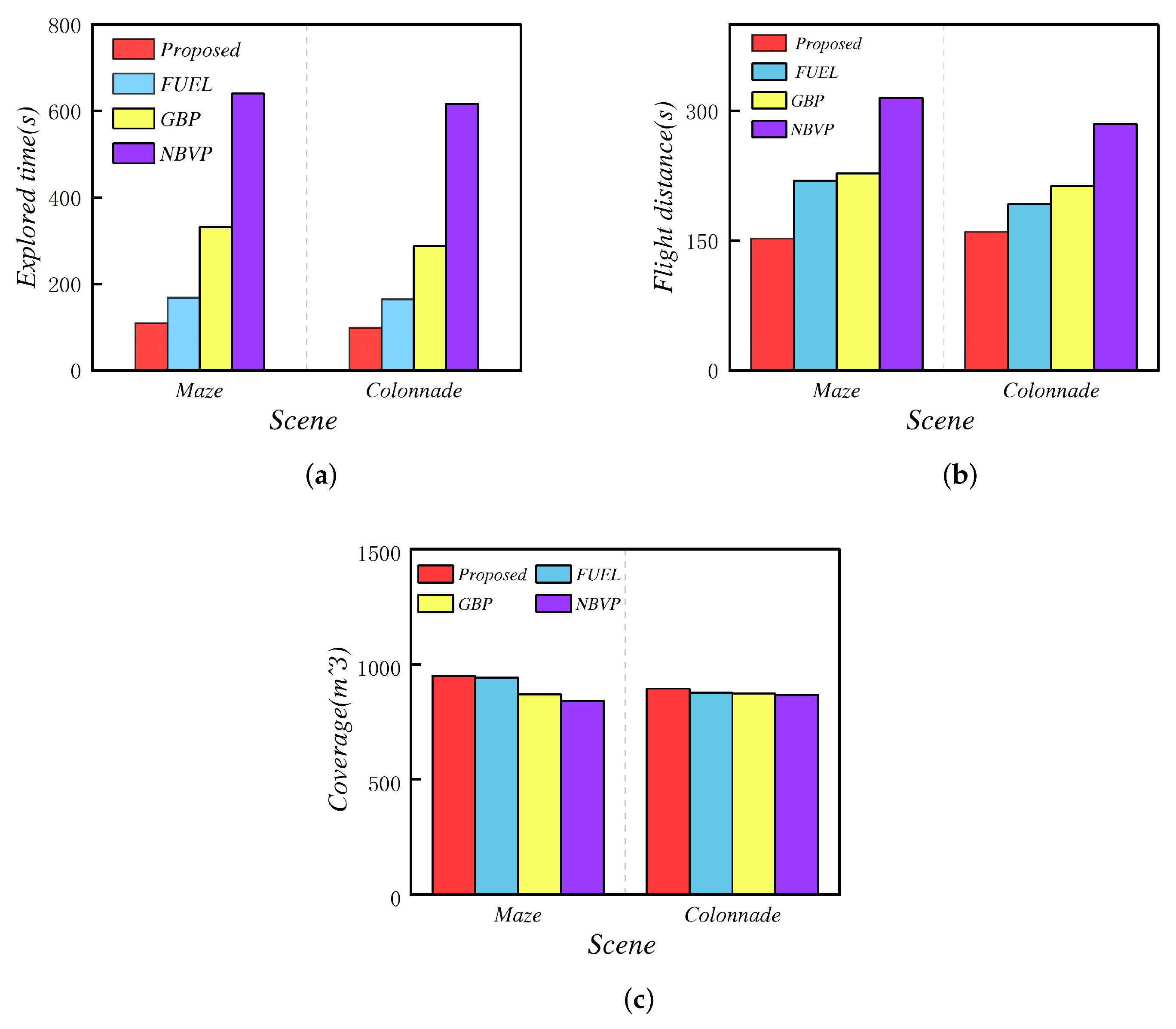

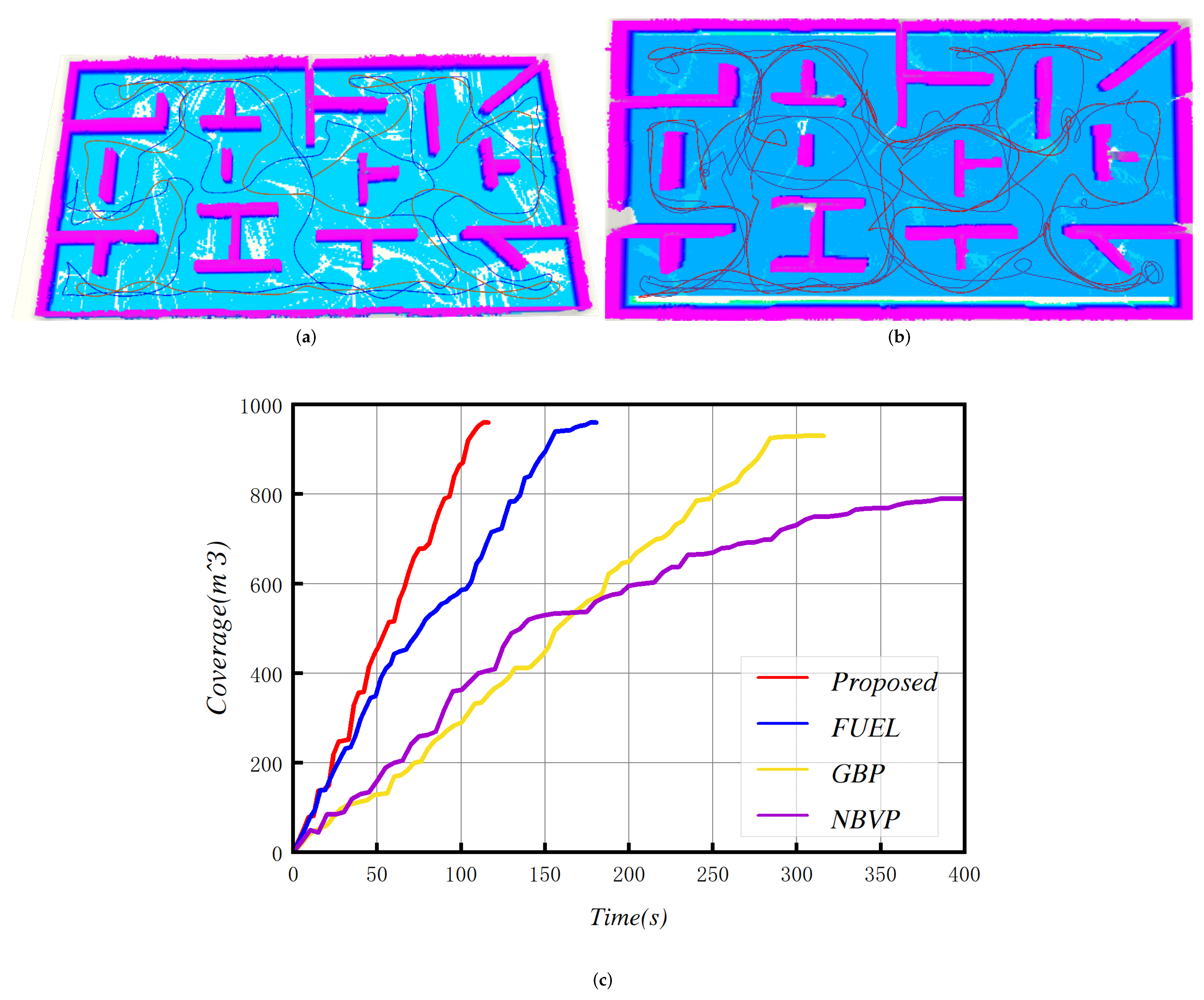

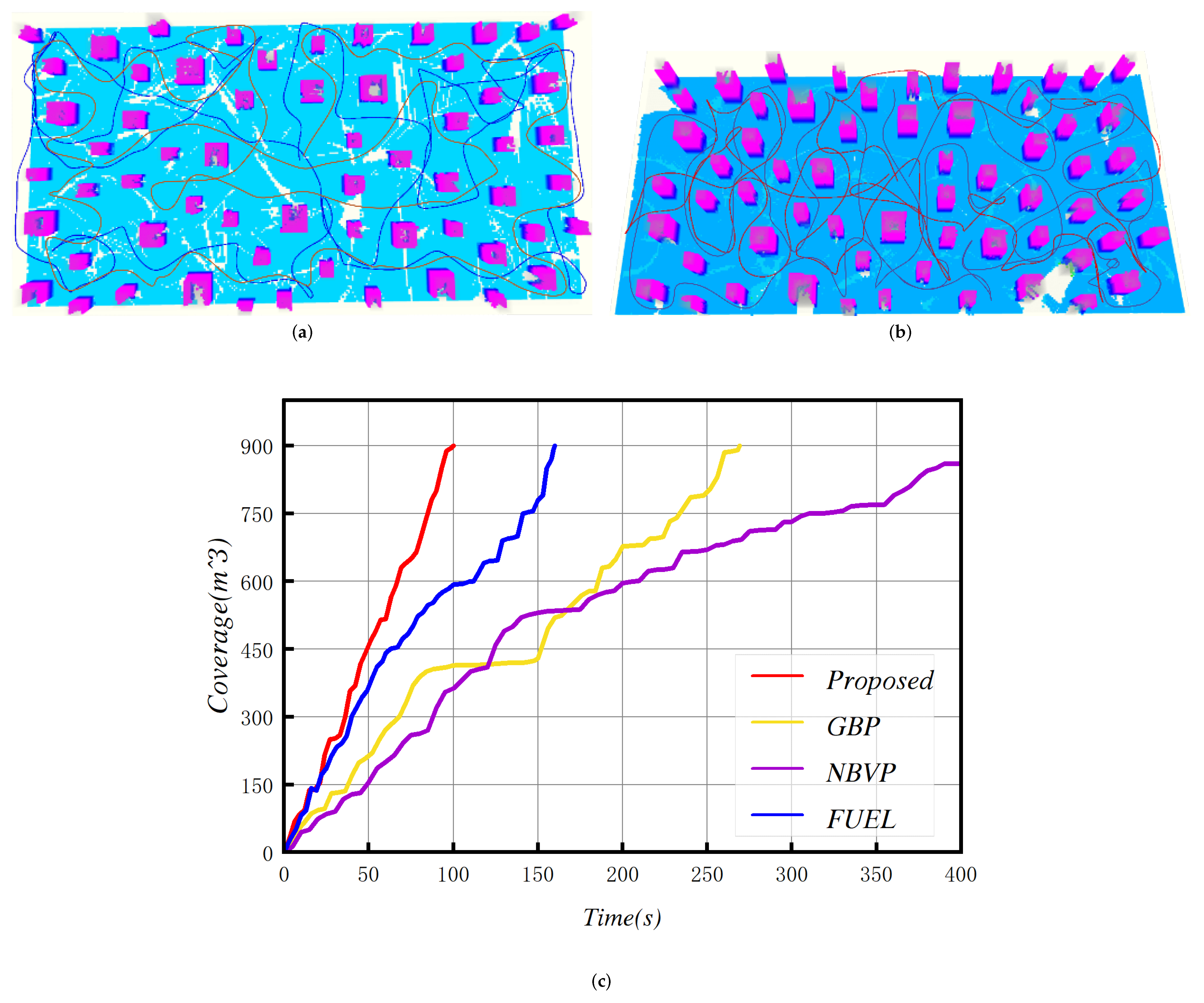

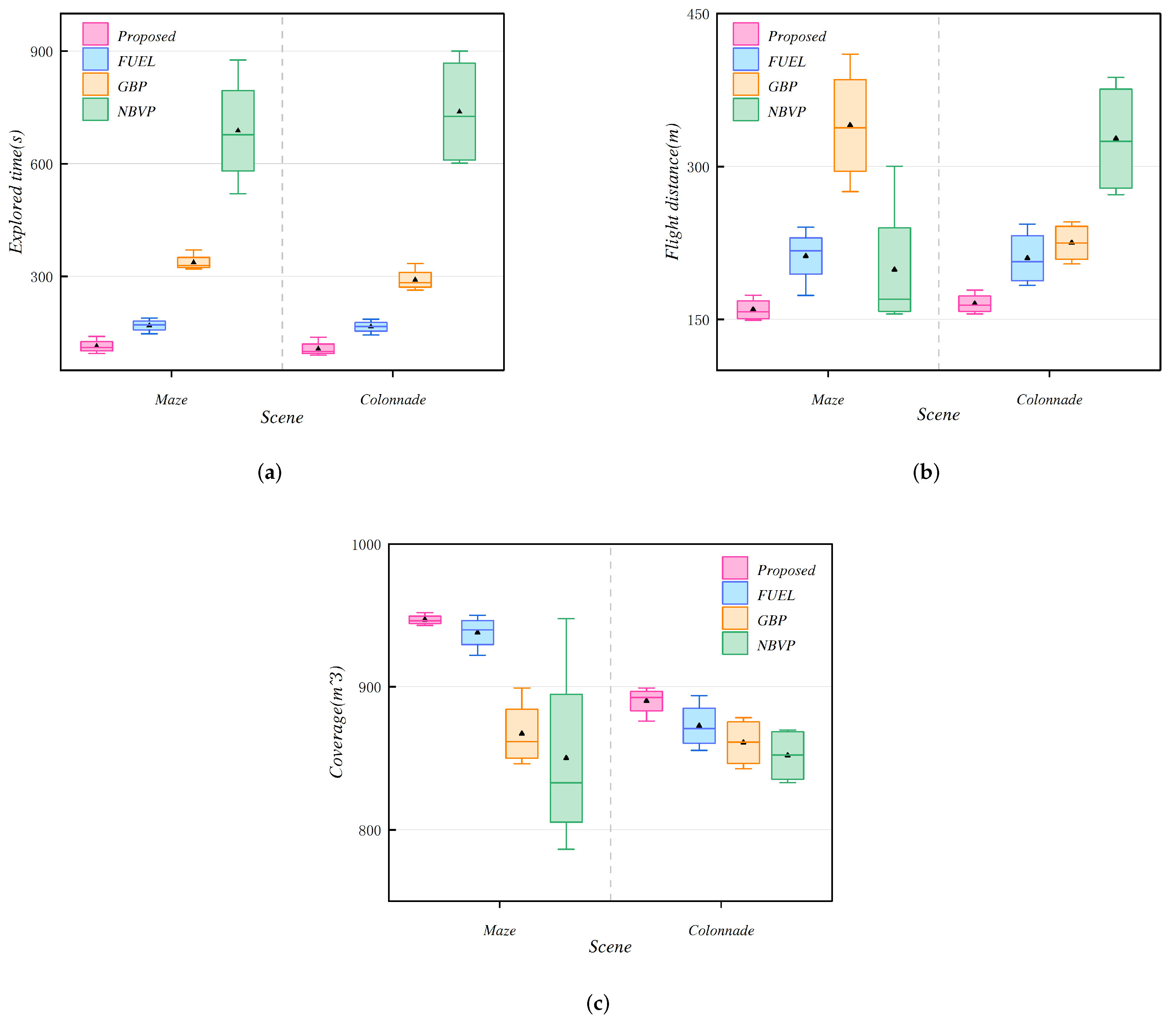

6.1. Benchmark Comparisons

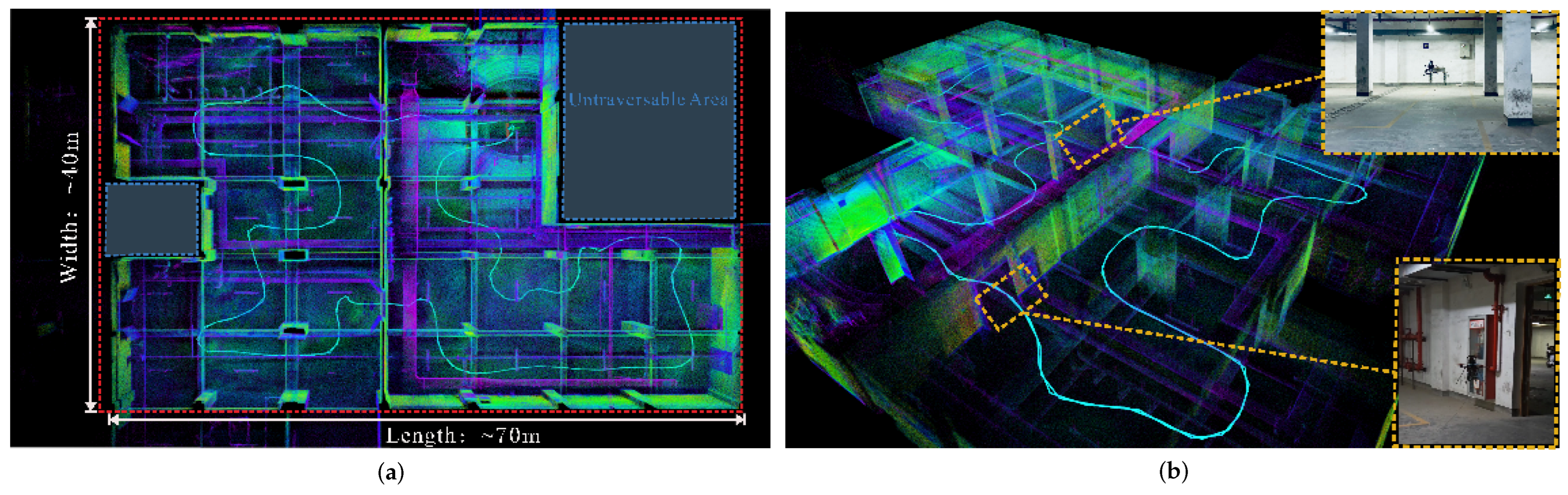

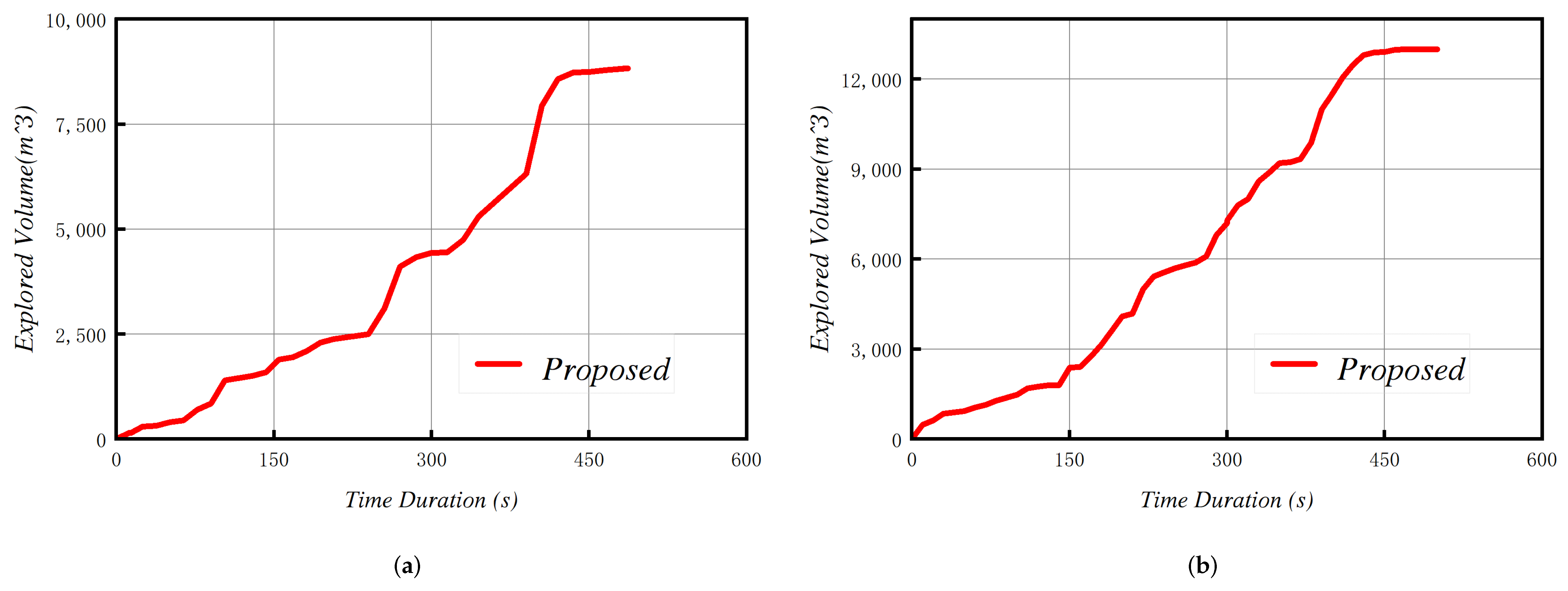

6.2. Physical Experiments

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Cieslewski, T.; Kaufmann, E.; Scaramuzza, D. Rapid exploration with multi-rotors: A frontier selection method for high speed flight. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2135–2142.

- Han, D.; Jiang, H.; Wang, L.; Zhu, X.; Chen, Y.; Yu, Q. Collaborative task allocation and optimization solution for unmanned aerial vehicles in search and rescue. Drones 2024, 8, 138.

- Zhang, C.; Zhou, W.; Qin, W.; Tang, W. A novel UAV path planning approach: Heuristic crossing search and rescue optimization algorithm. Expert Syst. Appl. 2023, 215, 119243.

- Zhang, M.; Li, W.; Wang, M.; Li, S.; Li, B. Helicopter-UAVs search and rescue task allocation considering UAVs operating environment and performance. Comput. Ind. Eng. 2022, 167, 107994.

- Faiz, T.I.; Vogiatzis, C.; Liu, J.; Noor-E-Alam, M. A robust optimization framework for two-echelon vehicle and UAV routing post-disaster humanitarian logistics operations. Networks 2024, 84, 200–219.

- Liu, Y.; Vogiatzis, C.; Yoshida, R.; Morman, E. Solving reward-collecting problems with UAVs: A comparison of online optimization and Q-learning. J. Intell. Robot. Syst. 2022, 104, 35.

- Schmid, L.; Pantic, M.; Khanna, R.; Ott, L.; Siegwart, R.; Nieto, J. An efficient sampling-based method for online informative path planning in unknown environments. IEEE Robot. Autom. Lett. 2020, 5, 1500–1507.

- Qin, H.; Meng, Z.; Meng, W.; Chen, X.; Sun, H.; Lin, F.; Ang, M.H. Autonomous exploration and mapping system using heterogeneous UAVs and UGVs in GPS-denied environments. IEEE Trans. Veh. Technol. 2019, 68, 1339–1350.

- Battulwar, R.; Winkelmaier, G.; Valencia, J.; Naghadehi, M.Z.; Peik, B.; Abbasi, B.; Parvin, B.; Sattarvand, J. A practical methodology for generating high-resolution 3D models of open-pit slopes using UAVs: Flight path planning and optimization. Remote Sens. 2020, 12, 2283.

- Petráček, P.; Krátký, V.; Petrlík, M.; Báča, T.; Kratochvíl, R.; Saska, M. Large-scale exploration of cave environments by unmanned aerial vehicles. IEEE Robot. Autom. Lett. 2021, 6, 7596–7603.

- Bircher, A.; Kamel, M.; Alexis, K.; Oleynikova, H.; Siegwart, R. Receding horizon “next-best-view” planner for 3D exploration. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1462–1468.

- Meng, Z.; Qin, H.; Chen, Z.; Chen, X.; Sun, H.; Lin, F.; Ang, M.H. A two-stage optimized next-view planning framework for 3-D unknown environment exploration, and structural reconstruction. IEEE Robot. Autom. Lett. 2017, 2, 1680–1687.

- Dang, T.; Tranzatto, M.; Khattak, S.; Mascarich, F.; Alexis, K.; Hutter, M. Graph-based subterranean exploration path planning using aerial and legged robots. J. Field Robot. 2020, 37, 1363–1388.

- Dharmadhikari, M.; Dang, T.; Solanka, L.; Loje, J.; Nguyen, H.; Khedekar, N.; Alexis, K. Motion primitives-based path planning for fast and agile exploration using aerial robots. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 179–185.

- Bircher, A.; Kamel, M.; Alexis, K.; Oleynikova, H.; Siegwart, R. Receding horizon path planning for 3D exploration and surface inspection. Auton. Robot. 2018, 42, 291–306.

- Duberg, D.; Jensfelt, P. UFOExplorer: Fast and scalable sampling-based exploration with a graph-based planning structure. IEEE Robot. Autom. Lett. 2022, 7, 2487–2494.

- Zhang, X.; Chu, Y.; Liu, Y.; Zhang, X.; Zhuang, Y. A novel informative autonomous exploration strategy with uniform sampling for quadrotors. IEEE Trans. Ind. Electron. 2022, 69, 13131–13140.

- Yamauchi, B. A frontier-based approach for autonomous exploration. In Proceedings of the 1997 IEEE International Symposium on Computational Intelligence in Robotics and Automation, Monterey, CA, USA, 10–11 July 1997; pp. 146–151.

- Zhao, Y.; Yan, L.; Xie, H.; Dai, J.; Wei, P. Autonomous exploration method for fast unknown environment mapping by using UAV equipped with limited FOV sensor. IEEE Trans. Ind. Electron. 2024, 71, 4933–4943.

- Yu, J.; Shen, H.; Xu, J.; Zhang, T. ECHO: An efficient heuristic viewpoint determination method on frontier-based autonomous exploration for quadrotors. IEEE Robot. Autom. Lett. 2023, 8, 5047–5054.

- Zhou, B.; Zhang, Y.; Chen, X.; Shen, S. FUEL: Fast UAV exploration using incremental frontier structure and hierarchical planning. IEEE Robot. Autom. Lett. 2021, 6, 779–786.

- Zhang, X.; Xu, X.; Liu, Y.; Wang, H.; Zhang, X.; Zhuang, Y. FGIP: A frontier-guided informative planner for UAV exploration and reconstruction. IEEE Trans. Ind. Inform. 2024, 20, 6155–6166.

- Selin, M.; Tiger, M.; Duberg, D.; Heintz, F.; Jensfelt, P. Efficient autonomous exploration planning of large-scale 3-D environments. IEEE Robot. Autom. Lett. 2019, 4, 1699–1706.

- Dai, A.; Papatheodorou, S.; Funk, N.; Tzoumanikas, D.; Leutenegger, S. Fast frontier-based information-driven autonomous exploration with an MAV. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation, Paris, France, 31 May–31 August 2020; pp. 9570–9576.

- Wang, C.; Ma, H.; Chen, W.; Liu, L.; Meng, M.Q.-H. Efficient autonomous exploration with incrementally built topological map in 3D environments. IEEE Trans. Instrum. Meas. 2020, 69, 9853–9865.

- Connolly, C. The determination of next best views. In Proceedings of the 1985 IEEE International Conference on Robotics and Automation, St. Louis, MO, USA, 25–28 March 1985; Volume 2, pp. 432–435.

- Ding, W.; Gao, W.; Wang, K.; Shen, S. An efficient B-spline-based kinodynamic replanning framework for quadrotors. IEEE Trans. Robot. 2019, 35, 1287–1306.

- Papachristos, C.; Khattak, S.; Alexis, K. Uncertainty-aware receding horizon exploration and mapping using aerial robots. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 4568–4575.

- Dang, T.; Papachristos, C.; Alexis, K. Visual saliency-aware receding horizon autonomous exploration with application to aerial robotics. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 2526–2533.

- Witting, C.; Fehr, M.; Bähnemann, R.; Oleynikova, H.; Siegwart, R. History-aware autonomous exploration in confined environments using MAVs. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–9.

- Wang, C.; Zhu, D.; Li, T.; Meng, M.Q.-H.; de Silva, C.W. Efficient autonomous robotic exploration with semantic road map in indoor environments. IEEE Robot. Autom. Lett. 2019, 4, 2989–2996.

- Dang, T.; Mascarich, F.; Khattak, S.; Papachristos, C.; Alexis, K. Graph-based path planning for autonomous robotic exploration in subterranean environments. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3105–3112.

- Keidar, M.; Kaminka, G.A. Efficient frontier detection for robot exploration. Int. J. Robot. Res. 2014, 33, 215–236.

- Quin, P.; Nguyen, D.D.K.; Vu, T.L.; Alempijevic, A.; Paul, G. Approaches for efficiently detecting frontier cells in robotics exploration. Front. Robot. AI 2021, 8, 616470.

- Quin, P.; Alempijevic, A.; Paul, G.; Liu, D. Expanding wavefront frontier detection: An approach for efficiently detecting frontier cells. In Proceedings of the 2014 Australasian Conference on Robotics and Automation, ACRA, Melbourne, Australia, 2–4 December 2014.

- Shen, S.; Michael, N.; Kumar, V. Autonomous indoor 3D exploration with a micro-aerial vehicle. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 9–15.

- Juliá, M.; Gil, A.; Reinoso, O. A comparison of path planning strategies for autonomous exploration and mapping of unknown environments. Auton. Robot. 2012, 33, 427–444.

- Zhu, F.; Ren, Y.; Kong, F.; Wu, H.; Liang, S.; Chen, N.; Xu, W.; Zhang, F. Decentralized LiDAR-inertial swarm odometry. arXiv 2022, arXiv:2209.06628.

- Wang, Z.; Zhou, X.; Xu, C.; Gao, F. Geometrically constrained trajectory optimization for multicopters. IEEE Trans. Robot. 2022, 38, 3259–3278.

- Kong, F.; Liu, X.; Tang, B.; Lin, J.; Ren, Y.; Cai, Y.; Zhu, F.; Chen, N.; Zhang, F. MARSIM: A light-weight point-realistic simulator for LiDAR-based UAVs. arXiv 2022, arXiv:2211.10716.

- Zhu, H.; Cao, C.; Xia, Y.; Scherer, S.; Zhang, J.; Wang, W. DSVP: Dual-stage viewpoint planner for rapid exploration by dynamic expansion. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems, Prague, Czech Republic, 27 September–1 October 2021; pp. 7623–7630.

- Respall, V.M.; Devitt, D.; Fedorenko, R.; Klimchik, A. Fast sampling-based next-best-view exploration algorithm for a MAV. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 89–95.

- Tao, Y.; Wu, Y.; Li, B.; Cladera, F.; Zhou, A.; Thakur, D.; Kumar, V. SEER: Safe efficient exploration for aerial robots using learning to predict information gain. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 1235–1241.

- Ratliff, N.; Zucker, M.; Bagnell, J.A.; Srinivasa, S. CHOMP: Gradient optimization techniques for efficient motion planning. In Proceedings of the IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 489–494.

- Kalakrishnan, M.; Chitta, S.; Theodorou, E.; Pastor, P.; Schaal, S. STOMP: Stochastic trajectory optimization for motion planning. In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 4569–4574.

- Oleynikova, H.; Burri, M.; Taylor, Z.; Nieto, J.; Siegwart, R.; Galceran, E. Continuous-time trajectory optimization for online UAV replanning. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Daejeon, Republic of Korea, 9–14 October 2016; pp. 5332–5339.

- Zucker, M.; Ratliff, N.; Dragan, A.D.; Pivtoraiko, M.; Klingensmith, M.; Dellin, C.M.; Bagnell, J.A.; Srinivasa, S.S. CHOMP: Covariant Hamiltonian optimization for motion planning. Int. J. Robot. Res. 2013, 32, 1164–1193.

- Gao, F.; Lin, Y.; Shen, S. Gradient-based online safe trajectory generation for quadrotor flight in complex environments. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 3681–3688.

- Zhou, B.; Pan, J.; Gao, F.; Shen, S. RAPTOR: Robust and perception-aware trajectory replanning for quadrotor fast flight. arXiv 2020, arXiv:2007.03465.

- Zhou, X.; Wang, Z.; Ye, H.; Xu, C.; Gao, F. EGO-Planner: An ESDF-Free Gradient-Based Local Planner for Quadrotors. IEEE Robot. Autom. Lett. 2021, 6, 478–485.

- Xu, W.; Cai, Y.; He, D.; Lin, J.; Zhang, F. FAST-LIO2: Fast direct LiDAR-inertial odometry. IEEE Trans. Robot. 2022, 38, 2053–2073.

- Tang, B.; Ren, Y.; Zhu, F.; He, R.; Liang, S.; Kong, F.; Zhang, F. Bubble Explorer: Fast UAV Exploration in Large-Scale and Cluttered 3D-Environments Using Occlusion-Free Spheres. arXiv 2023, arXiv:2304.00852.

- Mueller, M.W.; Hehn, M.; D’Andrea, R. A computationally efficient motion primitive for quadrocopter trajectory generation. IEEE Trans. Robot. 2015, 31, 1294–1310.

- Lin, S.; Kernighan, B.W. An effective heuristic algorithm for the traveling-salesman problem. Oper. Res. 1973, 21, 498–516.

- Ding, W.; Gao, W.; Wang, K.; Shen, S. Trajectory replanning for quadrotors using kinodynamic search and elastic optimization. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 7595–7602.

- Schmitzberger, E.; Bouchet, J.-L.; Dufaut, M.; Wolf, D.; Husson, R. Capture of homotopy classes with probabilistic road map. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, Switzerland, 30 September–4 October 2002; IEEE: Piscataway, NJ, USA, 2002; Volume 3, pp. 2317–2322.

- Blöchliger, F.; Fehr, M.; Dymczyk, M.; Schneider, T.; Siegwart, R. TopoMap: Topological mapping and navigation based on visual SLAM maps. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–9.

- Usenko, V.; Von Stumberg, L.; Pangercic, A.; Cremers, D. Real-time trajectory replanning for MAVs using uniform B-splines and a 3D circular buffer. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 215–222.

- Simeon, T.; Laumond, J.-P.; Nissoux, C. Visibility-based probabilistic roadmaps for motion planning. Adv. Robot. 2000, 14, 477–493.

- Ren, Y.; Zhu, F.; Liu, W.; Wang, Z.; Lin, Y.; Gao, F.; Zhang, F. Bubble Planner: Planning High-speed Smooth Quadrotor Trajectories using Receding Corridors. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 6332–6339.

- Tordesillas, J.; Lopez, B.T.; How, J.P. FASTER: Fast and safe trajectory planner for flights in unknown environments. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1934–1940.

- Zhu, F.; Ren, Y.; Zhang, F. Robust real-time LiDAR-inertial initialization. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 3948–3955.

| Scene | Method | Time Avg (s) | Time Std (s) | Time Max (s) | Time Min (s) | Distance Avg (m) | Distance Std (m) | Distance Max (m) | Distance Min (m) | Coverage Avg (m³) | Coverage Std (m³) | Coverage Max (m³) | Coverage Min (m³) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Maze | Proposed | 108.6 | 4.8 | 123.2 | 105 | 152.4 | 10 | 173.8 | 149.2 | 945.5 | 3.2 | 952 | 943.5 |
| Maze | FUEL | 168.4 | 7.3 | 177.3 | 157.4 | 219.4 | 11.2 | 237.4 | 215.2 | 942.7 | 0.9 | 953.1 | 937.1 |
| Maze | GBP | 331.1 | 11.1 | 370.4 | 327.5 | 228 | 5.6 | 248.5 | 224.8 | 869.3 | 11.5 | 899.2 | 853.7 |
| Maze | NBVP | 640.5 | 172.3 | 876.5 | 520.7 | 315.4 | 82.6 | 410.3 | 275.3 | 841.5 | 86.7 | 947.7 | 786.4 |
| Colonnade | Proposed | 98.7 | 1.1 | 107.2 | 96.4 | 160.2 | 6.8 | 170.2 | 155.4 | 894.5 | 2.3 | 899.7 | 890.4 |
| Colonnade | FUEL | 164.4 | 6.2 | 173.4 | 154.3 | 192.4 | 8.7 | 243.4 | 183.4 | 876.2 | 1.3 | 893.8 | 865.3 |
| Colonnade | GBP | 287.2 | 20.3 | 334.7 | 263.7 | 213.4 | 42.7 | 245.7 | 204.4 | 872.4 | 17.8 | 878.4 | 842.5 |
| Colonnade | NBVP | 617.5 | 168.4 | 900.4 | 601.7 | 284.9 | 32.4 | 387.4 | 272.4 | 867.2 | 78.3 | 869.7 | 837.2 |
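A compact way to read the benchmark table is to express the averages as relative reductions with respect to a baseline. The Python snippet below does this for the Proposed method against FUEL, using only the average columns copied from the table; it is plain arithmetic on the reported numbers and adds no new measurements. The helper name `reduction` is ours.

```python
# Average exploration time (s) and flight distance (m), copied from the table above.
averages = {
    "Maze": {"Proposed": (108.6, 152.4), "FUEL": (168.4, 219.4)},
    "Colonnade": {"Proposed": (98.7, 160.2), "FUEL": (164.4, 192.4)},
}


def reduction(ours: float, baseline: float) -> float:
    """Relative reduction of `ours` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - ours) / baseline


for scene, rows in averages.items():
    (t_ours, d_ours), (t_fuel, d_fuel) = rows["Proposed"], rows["FUEL"]
    print(f"{scene}: time -{reduction(t_ours, t_fuel):.1f}%, "
          f"distance -{reduction(d_ours, d_fuel):.1f}%")
```

With these values the average exploration time is about 35.5% lower than FUEL in Maze and 40.0% lower in Colonnade, and the average flight distance is about 30.5% and 16.7% lower, respectively. The same helper applies unchanged to the GBP and NBVP rows.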

| Scene | Exploration Time (s) | Flight Distance (m) | Coverage (m³) |
|---|---|---|---|
| Underground garage | 461 | 451.6 | 8957 |
| Forest | 473 | 303.5 | 13,042 |
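The field results can likewise be turned into a mean flight speed and a mean coverage rate by dividing by the exploration time. The snippet below computes both from the table; these are whole-run averages derived from the reported totals (they include any hovering or slow phases), not separately measured quantities, and `derived_rates` is a name we introduce.

```python
# (exploration time in s, flight distance in m, coverage in m^3), from the table above.
field_runs = {
    "underground garage": (461, 451.6, 8957),
    "forest": (473, 303.5, 13042),
}


def derived_rates(time_s: float, distance_m: float, coverage_m3: float):
    """Mean flight speed (m/s) and mean coverage rate (m^3/s) over a whole run."""
    return distance_m / time_s, coverage_m3 / time_s


for scene, run in field_runs.items():
    speed, rate = derived_rates(*run)
    print(f"{scene}: {speed:.2f} m/s, {rate:.1f} m^3/s")
```

This gives roughly 0.98 m/s and 19.4 m³/s in the underground garage and 0.64 m/s and 27.6 m³/s in the forest, so the forest run covered more volume per second despite a lower mean flight speed.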


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Wang, J.; Wang, S.; Wang, M.; Wang, T.; Feng, Z.; Zhu, S.; Zheng, E. FAEM: Fast Autonomous Exploration for UAV in Large-Scale Unknown Environments Using LiDAR-Based Mapping. Drones 2025, 9, 423. https://doi.org/10.3390/drones9060423
