Modeling and Simulation of Urban Laser Countermeasures Against Low-Slow-Small UAVs

Abstract

1. Introduction

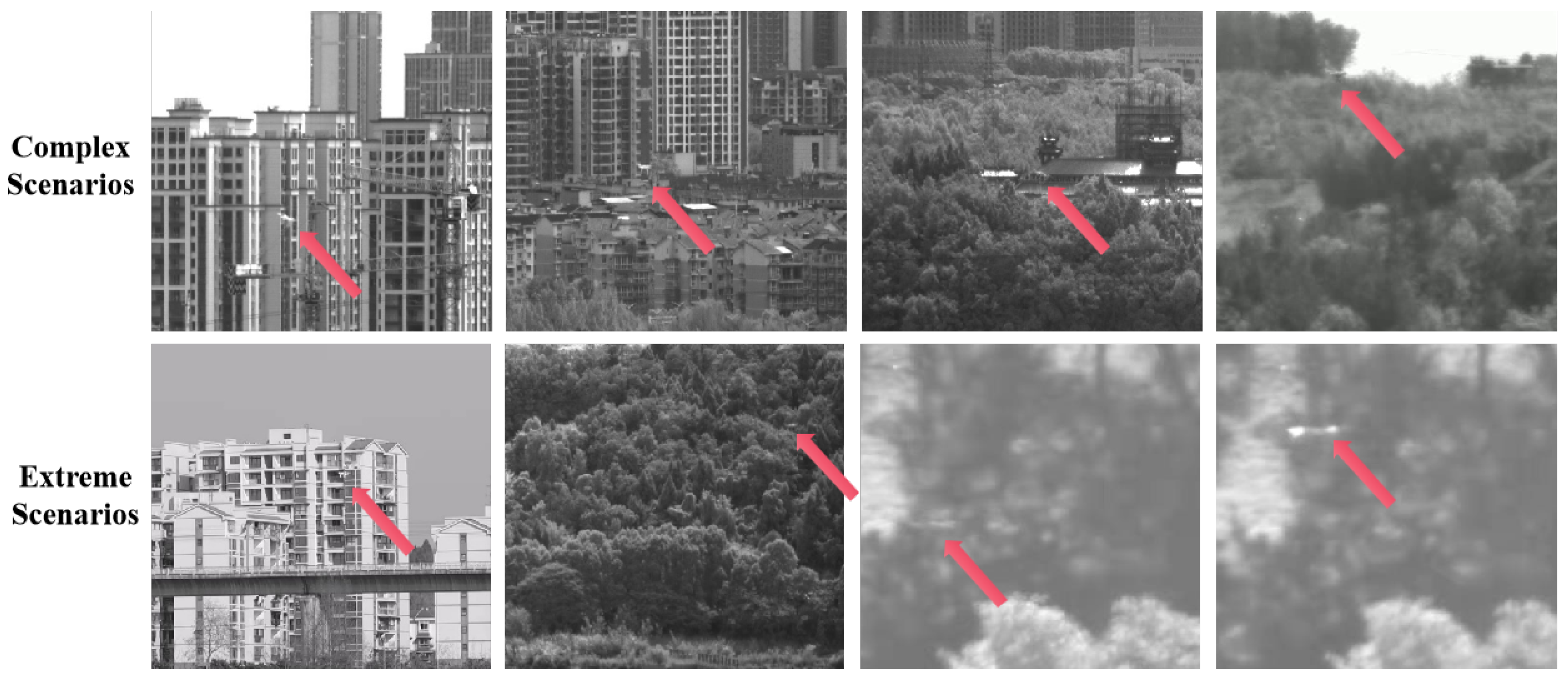

- Target Recognition and Tracking in Complex Environments: Low-altitude small targets have low brightness and contrast, and their imagery is often degraded by strong sunlit backgrounds and motion blur. These factors make it difficult for traditional feature extraction algorithms to distinguish targets from the background [6], and multi-frame saliency detection methods struggle in dynamic urban environments.

- Laser Transmission and Atmospheric Disturbance Effects: Atmospheric turbulence [7] and the absorption and scattering of laser energy by suspended particulates reduce target backscatter intensity, distort the laser spot, and ultimately degrade tracking accuracy and damage effectiveness.

- Robust Control for High-Speed Maneuvering Targets: The high maneuverability of UAVs requires ATP systems with faster dynamic response and greater resistance to interference. Traditional PID control algorithms lack sufficient stability in complex disturbance environments.

- Multi-Physics Coupled Simulation Challenges: No integrated simulation framework exists that covers laser transmission, optical system dynamics, control algorithms, and atmospheric effects together, which limits efficient multi-disciplinary simulation.

2. Related Work

2.1. Applications in UAV Simulation and Autonomous Control

2.2. Simulation-Based Target Detection and Reinforcement Learning

2.3. Vision-Based Anti-UAV Detection in Real-World Scenarios

2.4. Comparative Analysis of Laser Countermeasure Simulation Frameworks

2.5. Research Gaps and Novel Contributions

3. Laser Countermeasure Simulation Framework Based on Multimodal Collaboration

- Real-Time Interaction in Dynamic Engagement Scenarios: Supports multi-perspective collaborative control and data-driven target tracking, simulating the adversarial process between UAV evasive maneuvers and dynamic laser beam tuning.

- Multi-Source Heterogeneous Data Fusion: Integrates GIS terrain data, BIM building models, and real-time meteorological information to generate three-dimensional dynamic scenarios in urban canyon environments.

3.1. Construction of 3D GIS Panorama Model Library

- Lightweighting and LOD Optimization of Oblique Photogrammetric Models: To improve rendering efficiency in complex urban environments, a mesh simplification algorithm based on vertex clustering and edge collapse [1] is used, reducing the original triangle count by more than 70% (Equation (1)); each vertex cluster is represented by its centroid. The LOD hierarchy is then constructed from the viewpoint distance d and the screen-space error (SSE) (Equation (2)), which depends on the geometric error threshold, the focal length f, and the field-of-view angle.

- Geospatial Registration and Coordinate Transformation: To address the discrepancy between the local coordinate system and the global geodetic reference frame, a seven-parameter coordinate transformation model constrained by Ground Control Points (GCPs) is employed (Equation (3)). The transformation parameters are optimized using the Levenberg–Marquardt algorithm to achieve sub-meter positioning accuracy (Figure 3c).

- Dynamic Tile-Based Loading Mechanism: Based on the 3D Tiles specification, a spatial quadtree index is used to partition large-scale models (Equation (4)), where l denotes the tile level, bounded by a maximum level. By applying view frustum culling and occlusion culling algorithms [28], the rendering frame rate is raised to over 60 FPS (Figure 3a,b).
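The distance-driven LOD selection described above can be illustrated with a minimal sketch. It uses the screen-space error formulation commonly applied to 3D Tiles-style tilesets, which may differ from the paper's Equation (2); the function names, the 16-pixel threshold, the 1080-pixel viewport, and the 60° field of view are all assumptions made for illustration.

```python
import math


def screen_space_error(geometric_error, distance, screen_height_px, fov_y_rad):
    """Project a tile's geometric error (metres) onto the screen (pixels)."""
    if distance <= 0.0:
        return math.inf  # camera at or inside the tile: always refine
    return (geometric_error * screen_height_px) / (
        2.0 * distance * math.tan(fov_y_rad / 2.0)
    )


def select_lod(geometric_errors, distance, screen_height_px=1080,
               fov_y_rad=math.radians(60.0), max_sse_px=16.0):
    """Return the coarsest level whose SSE stays within the pixel threshold.

    geometric_errors is ordered coarse -> fine (decreasing error).
    All default values are illustrative assumptions.
    """
    for level, err in enumerate(geometric_errors):
        sse = screen_space_error(err, distance, screen_height_px, fov_y_rad)
        if sse <= max_sse_px:
            return level
    return len(geometric_errors) - 1  # nothing qualifies: use the finest level


if __name__ == "__main__":
    errors_m = [8.0, 4.0, 2.0, 1.0, 0.5]  # hypothetical per-level errors
    for d in (1000.0, 100.0, 10.0):
        print(f"distance {d:7.1f} m -> LOD level {select_lod(errors_m, d)}")
```

Distant tiles resolve to coarse levels and nearby tiles to fine ones, which is the behavior a viewpoint-distance LOD hierarchy is meant to produce.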

3.2. Dynamic Fusion Modeling of Targets and Environments

- Illumination Consistency Modeling: To simulate the skylight background interference described in the Introduction, a real-time illumination model based on atmospheric scattering is developed (Equation (5)) [29], expressed in terms of the total extinction coefficient, the optical path length R, and the scattering phase function.

- Terrain Dynamics Coupling: A method that couples a Digital Surface Model (DSM) with fluid dynamics is introduced to compute the aerodynamic interference force on the UAV (Equation (6)), which depends on the air density, the drag coefficient, and the local wind direction unit vector.

- Environmental Disturbances Across Multiple Time Scales: The impact of atmospheric turbulence on laser propagation is modeled using a stochastic differential equation (SDE) (Equation (7)), parameterized by the attenuation coefficient and the turbulence intensity parameter and driven by a Wiener process.
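As a rough illustration of how an SDE with an attenuation term and a Wiener-driven turbulence term can be simulated, the sketch below applies Euler–Maruyama integration. The specific form dI = -α·I·dt + σ·I·dW is an assumption chosen for illustration and is not taken from Equation (7); the function name and all parameter values are hypothetical.

```python
import math
import random


def simulate_intensity(i0, alpha, sigma, t_end, n_steps, seed=0):
    """Euler-Maruyama integration of dI = -alpha*I dt + sigma*I dW.

    alpha: attenuation coefficient (assumed units of 1/s)
    sigma: turbulence intensity parameter (assumed multiplicative noise)
    Returns the sampled path as a list of n_steps + 1 intensities.
    """
    rng = random.Random(seed)
    dt = t_end / n_steps
    path = [i0]
    for _ in range(n_steps):
        i = path[-1]
        dw = rng.gauss(0.0, math.sqrt(dt))  # Wiener increment ~ N(0, dt)
        path.append(i - alpha * i * dt + sigma * i * dw)
    return path


if __name__ == "__main__":
    calm = simulate_intensity(1.0, alpha=0.5, sigma=0.0, t_end=1.0, n_steps=1000)
    gusty = simulate_intensity(1.0, alpha=0.5, sigma=0.3, t_end=1.0, n_steps=1000)
    print(f"no turbulence:   I(1) = {calm[-1]:.4f}")
    print(f"with turbulence: I(1) = {gusty[-1]:.4f}")
```

With the noise term switched off, the path reduces to plain exponential attenuation, which gives a simple sanity check before turbulence is enabled.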

3.3. Multi-Level Collaborative Tracking Control Architecture

- Coarse-TV Tracking Control Law: The Coarse-TV (CTV) system uses a Proportional-Integral-Derivative (PID) controller acting on the tracking error for rapid response, with the control parameters tuned via the frequency-domain response method. The camera attitude is then propagated through a quaternion update equation.

- Precision-TV Fine-Tuning Strategy: The Precision-TV (PTV) system operates in parallel with the Coarse-TV (CTV) system, using a nonlinear PID compensation mechanism that incorporates a saturation function with preset parameters. A separate update equation governs the camera attitude fine-tuning.

- Kinematic Chain Transmission: The kinematic model of the camera platform is propagated on the Lie group SE(3), expressed in terms of an angular velocity twist and a translational velocity twist.
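The PID-with-saturation idea behind the coarse and fine tracking loops can be sketched as a discrete-time controller. This is a generic illustration, not the paper's control law: the class name, the gains, the output limit, and the conditional-integration anti-windup rule are all assumptions.

```python
from dataclasses import dataclass


def saturate(x, limit):
    """Clamp x to the symmetric interval [-limit, limit]."""
    return max(-limit, min(limit, x))


@dataclass
class SaturatedPID:
    kp: float
    ki: float
    kd: float
    out_limit: float  # actuator limit (hypothetical units, e.g. rad/s)
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, error, dt):
        """Advance one control period and return the saturated command."""
        candidate_integral = self.integral + error * dt
        derivative = (error - self.prev_error) / dt
        raw = (self.kp * error
               + self.ki * candidate_integral
               + self.kd * derivative)
        out = saturate(raw, self.out_limit)
        if out == raw:
            # Anti-windup: accept the new integral only when not saturated.
            self.integral = candidate_integral
        self.prev_error = error
        return out


if __name__ == "__main__":
    # Illustrative gains; the source tunes its gains in the frequency domain.
    pid = SaturatedPID(kp=2.0, ki=0.5, kd=0.0, out_limit=1.0)
    for err in (0.25, 0.10, 5.0):
        print(f"error {err:5.2f} -> command {pid.step(err, dt=0.01):.3f}")
```

Freezing the integrator while the output is clamped is one common way to keep a large transient miss distance from winding up the controller.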

4. Model Architecture

- Lightweight Feature Pyramid Reconstruction: Traditional detection models (e.g., FPN) use multilevel feature layers (P2–P5) for the detection of multiscale targets. Deeper layers (P4, P5) are more sensitive to large targets, while shallower layers (P2, P3) retain finer details of small targets. This work removes the P4 and P5 detection layers, retaining only P2 (1/4 resolution) and P3 (1/8 resolution) as input to the detection head. In the feature fusion process, ⊕ denotes element-wise addition and UpSample refers to bilinear interpolation upsampling.

- Dynamic Spatial Attention Mechanism (DSAM): To address image blurring and background confusion caused by high-speed UAV motion, the DSAM module is embedded in the D-Fine CCFM. This mechanism captures local context information along the height and width directions to generate spatial attention weights. As shown in Figure 5, given an input feature map, the DSAM computation proceeds as follows. For spatial attention, average pooling is applied along the width and height directions, and the two pooled descriptors are processed with grouped convolution and an activation. For channel attention, descriptors are generated through global average pooling on the input feature map and processed through two fully connected layers (implemented as 1 × 1 convolutions) with learnable parameters and a channel compression ratio r, using ReLU and Sigmoid as the nonlinear activations. Finally, the attention weights are fused, broadcast to the original spatial dimensions, and applied to the input feature map by element-wise multiplication (⊙).

- NWD Loss Function: To address the sensitivity of IoU to small target localization, the Normalized Wasserstein Distance (NWD) [2] is introduced as the regression loss. Detection boxes are modeled as Gaussian distributions, the NWD is defined from the Wasserstein distance between them, and the regression loss is derived from the NWD. Compared to traditional IoU loss, NWD exhibits a smooth response to small positional deviations. The model adopts a dynamic weight allocation strategy to jointly optimize detection and localization tasks, combining the NWD regression loss with the focal loss and the self-distillation loss under preset hyperparameter weights.
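To make the contrast between IoU and NWD concrete, the sketch below follows the commonly published NWD formulation: each box (cx, cy, w, h) is treated as a 2D Gaussian with mean (cx, cy) and covariance diag(w²/4, h²/4), the squared 2-Wasserstein distance reduces to a squared Euclidean distance between the vectors (cx, cy, w/2, h/2), and NWD = exp(-sqrt(W²)/C). Whether the paper uses exactly this form is an assumption, and the normalizing constant `c` below is a placeholder rather than the authors' setting.

```python
import math


def nwd(box_a, box_b, c=12.8):
    """Normalized Wasserstein Distance between two (cx, cy, w, h) boxes.

    c is a dataset-dependent normalizing constant (placeholder value).
    """
    cxa, cya, wa, ha = box_a
    cxb, cyb, wb, hb = box_b
    w2_squared = ((cxa - cxb) ** 2 + (cya - cyb) ** 2
                  + ((wa - wb) / 2.0) ** 2 + ((ha - hb) / 2.0) ** 2)
    return math.exp(-math.sqrt(w2_squared) / c)


def nwd_loss(pred, target, c=12.8):
    """Regression loss in the usual 1 - NWD form."""
    return 1.0 - nwd(pred, target, c)


def iou(box_a, box_b):
    """Plain IoU for (cx, cy, w, h) boxes, used here only for comparison."""
    ax1, ay1 = box_a[0] - box_a[2] / 2.0, box_a[1] - box_a[3] / 2.0
    ax2, ay2 = box_a[0] + box_a[2] / 2.0, box_a[1] + box_a[3] / 2.0
    bx1, by1 = box_b[0] - box_b[2] / 2.0, box_b[1] - box_b[3] / 2.0
    bx2, by2 = box_b[0] + box_b[2] / 2.0, box_b[1] + box_b[3] / 2.0
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union if union > 0.0 else 0.0


if __name__ == "__main__":
    target = (10.0, 10.0, 4.0, 4.0)  # a 4x4-pixel target
    for shift in (0.0, 2.0, 6.0):
        pred = (10.0 + shift, 10.0, 4.0, 4.0)
        print(f"shift {shift:3.1f} px  IoU={iou(pred, target):.3f}  "
              f"NWD={nwd(pred, target):.3f}")
```

For a 4 × 4 pixel target, a 6-pixel shift drives IoU to zero while NWD still decays smoothly, so the regression signal does not vanish once the boxes stop overlapping.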

5. Experiments and Results

5.1. Dataset

- Diverse backgrounds (open sky, forest, urban environments);

- Multiple motion patterns (level flight, complex maneuvers, rapid ascent/descent);

- Balanced representation of simulated and real-world scenarios.

5.2. Experiments

- D-Fine (Baseline): The original unmodified architecture employing standard IoU loss and a full feature pyramid (P3–P5 layers) for multi-scale detection. This model contains 4.0M parameters and serves as the reference benchmark.

- D-Fine-NWD: Enhanced from D-Fine by replacing IoU loss with Normalized Wasserstein Distance (NWD) loss. This modification specifically addresses the sensitivity of small target localization while retaining the complete P3–P5 feature pyramid structure. The NWD loss models bounding boxes as Gaussian distributions to improve sub-pixel accuracy.

- D-Fine-NWD-P2 (Proposed UAV-D-Fine): Built upon D-Fine-NWD with lightweight feature pyramid reconstruction, which:

  - removes the P4 and P5 detection layers;

  - retains only the high-resolution P2 (1/4 scale) and P3 (1/8 scale) features;

  - implements the optimized feature fusion described in Section 4.

- D-Fine-NWD-P2-DSAM (Enhanced UAV-D-Fine): Extends D-Fine-NWD-P2 by integrating the Dynamic Spatial Attention Mechanism (DSAM) into the feature fusion pathway. This architecture achieves an 85% parameter reduction relative to the D-Fine baseline (0.6 M vs. 4.0 M parameters) while maintaining detection performance.

5.3. UAV Tracking Test and Miss Distance Analysis Based on the Simulation System

5.4. Result Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

DURC Statement

Acknowledgments

Conflicts of Interest

References

- Mohamed, N.; Al-Jaroodi, J.; Jawhar, I.; Idries, A.; Mohammed, F. Unmanned aerial vehicles applications in future smart cities. Technol. Forecast. Soc. Chang. 2020, 153, 119293.

- Hu, N.; Li, Y.; Pan, W.; Shao, S.; Tang, Y.; Li, X. Geometric distribution of UAV detection performance by bistatic radar. IEEE Trans. Aerosp. Electron. Syst. 2023, 60, 2445–2452.

- Chae, M.H.; Park, S.O.; Choi, S.H.; Choi, C.T. Reinforcement learning-based counter fixed-wing drone system using GNSS deception. IEEE Access 2024, 12, 16549–16558.

- Kaushal, H.; Kaddoum, G. Applications of lasers for tactical military operations. IEEE Access 2017, 5, 20736–20753.

- Wu, J.; Huang, S.; Wang, X.; Kou, Y.; Yang, W. Study on the Performance of Laser Device for Attacking Miniature UAVs. Optics 2024, 5, 378–391.

- Jiang, N.; Wang, K.; Peng, X.; Yu, X.; Wang, Q.; Xing, J.; Li, G.; Guo, G.; Ye, Q.; Jiao, J.; et al. Anti-UAV: A large-scale benchmark for vision-based UAV tracking. IEEE Trans. Multimed. 2021, 25, 486–500.

- Weyrauch, T.; Vorontsov, M.A.; Carhart, G.W.; Beresnev, L.A.; Rostov, A.P.; Polnau, E.E.; Liu, J.J. Experimental demonstration of coherent beam combining over a 7 km propagation path. Opt. Lett. 2011, 36, 4455–4457.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Shah, S.; Dey, D.; Lovett, C.; Kapoor, A. Airsim: High-fidelity visual and physical simulation for autonomous vehicles. In Field and Service Robotics: Results of the 11th International Conference; Springer: Cham, Switzerland, 2018; pp. 621–635.

- Madaan, R.; Gyde, N.; Vemprala, S.; Brown, M.; Nagami, K.; Taubner, T.; Cristofalo, E.; Scaramuzza, D.; Schwager, M.; Kapoor, A. Airsim drone racing lab. In Proceedings of the NeurIPS 2019 Competition and Demonstration Track, Vancouver, BC, Canada, 8–14 December 2019; pp. 177–191.

- Chao, Y.; Dillmann, R.; Roennau, A.; Xiong, Z. E-DQN-Based Path Planning Method for Drones in Airsim Simulator under Unknown Environment. Biomimetics 2024, 9, 238.

- Shen, S.E.; Huang, Y.C. Application of reinforcement learning in controlling quadrotor UAV flight actions. Drones 2024, 8, 660.

- Shimada, T.; Nishikawa, H.; Kong, X.; Tomiyama, H. Pix2pix-based monocular depth estimation for drones with optical flow on airsim. Sensors 2022, 22, 2097.

- Mittal, P.; Sharma, A.; Singh, R. A simulated dataset in aerial images using simulink for object detection and recognition. Int. J. Cogn. Comput. Eng. 2022, 3, 144–151.

- Rui, C.; Youwei, G.; Huafei, Z.; Hongyu, J. A comprehensive approach for UAV small object detection with simulation-based transfer learning and adaptive fusion. arXiv 2021, arXiv:2109.01800.

- Wang, B.; Li, Q.; Mao, Q.; Wang, J.; Chen, C.P.; Shangguan, A.; Zhang, H. A Survey on Vision-Based Anti Unmanned Aerial Vehicles Methods. Drones 2024, 8, 518.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Khanam, R.; Hussain, M. Yolov11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Hu, Y.; Wu, X.; Zheng, G.; Liu, X. Object detection of UAV for anti-UAV based on improved YOLO v3. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; pp. 8386–8390.

- Cheng, Q.; Li, J.; Du, J.; Li, S. Anti-UAV Detection Method Based on Local-Global Feature Focusing Module. In Proceedings of the 2024 IEEE 7th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 15–17 March 2024; Volume 7, pp. 1413–1418.

- Li, Y.; Yuan, D.; Sun, M.; Wang, H.; Liu, X.; Liu, J. A global-local tracking framework driven by both motion and appearance for infrared anti-uav. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 3026–3035.

- Khalighi, M.A.; Uysal, M. Survey on free space optical communication: A communication theory perspective. IEEE Commun. Surv. Tutor. 2014, 16, 2231–2258.

- Berk, A.; Anderson, G.P.; Acharya, P.K.; Bernstein, L.S.; Muratov, L.; Lee, J.; Fox, M.J.; Adler-Golden, S.M.; Chetwynd, J.H.; Hoke, M.L.; et al. MODTRAN 5: A reformulated atmospheric band model with auxiliary species and practical multiple scattering options: Update. In Proceedings of the Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XI, Orlando, FL, USA, 28 March–1 April 2005; Volume 5806.

- Multiphysics, C. Introduction to Comsol Multiphysics®; COMSOL Multiphysics: Burlington, MA, USA, 1998; Volume 9, p. 32.

- Madenci, E.; Guven, I. The Finite Element Method and Applications in Engineering Using ANSYS®; Springer: New York, NY, USA, 2015.

- Coluccelli, N. Nonsequential modeling of laser diode stacks using Zemax: Simulation, optimization, and experimental validation. Appl. Opt. 2010, 49, 4237–4245.

- Hu, X.; Wang, W.; Li, J.; Gao, B.; Li, H. Research on a Method for Realizing Three-Dimensional Real-Time Cloud Rendering Based on Cesium. In Proceedings of the 2024 4th International Conference on Electronic Information Engineering and Computer Science (EIECS), Yanji, China, 27–29 September 2024; pp. 224–228.

- Zhuang, Z.; Guo, R.; Huang, Z.; Zhang, Y.; Tian, B. UAV Localization Using Staring Radar Under Multipath Interference. In Proceedings of the IGARSS 2022–2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 2291–2294.

- Jiang, W.; Liu, Z.; Wang, Y.; Lin, Y.; Li, Y.; Bi, F. Realizing Small UAV Targets Recognition via Multi-Dimensional Feature Fusion of High-Resolution Radar. Remote Sens. 2024, 16, 2710.

- Peng, Y.; Li, H.; Wu, P.; Zhang, Y.; Sun, X.; Wu, F. D-FINE: Redefine regression Task in DETRs as Fine-grained distribution refinement. arXiv 2024, arXiv:2410.13842.

| Models | Params (M) | GFLOPs | mAP@50 | mAP@50-95 | Miss Distance (px) | FPS (4080) |
|---|---|---|---|---|---|---|
| YOLOv11n | 2.9 | 7.6 | 0.985 | 0.781 | 4.19 | 375 |
| D-Fine | 4.0 | 7.0 | 0.988 | 0.786 | 4.15 | 390 |
| D-Fine-NWD | 4.0 | 7.0 | 0.994 | 0.797 | 3.85 | 390 |
| D-Fine-NWD-P2 | 0.6 | 9.1 | 0.994 | 0.804 | 2.98 | 433 |
| D-Fine-NWD-P2-DSAM | 0.6 | 9.3 | 0.994 | 0.810 | 2.84 | 423 |
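The relative changes implied by the table can be checked with a few lines of arithmetic. The sketch below uses only the D-Fine and D-Fine-NWD-P2-DSAM rows as printed; the dictionary layout and the helper name `relative_change` are illustrative choices, not part of the original study.

```python
# Values copied from the D-Fine and D-Fine-NWD-P2-DSAM rows of the table.
baseline = {"params_m": 4.0, "gflops": 7.0, "miss_px": 4.15, "fps": 390}
proposed = {"params_m": 0.6, "gflops": 9.3, "miss_px": 2.84, "fps": 423}


def relative_change(before, after):
    """Signed fractional change from 'before' to 'after'."""
    return (after - before) / before


changes = {key: relative_change(baseline[key], proposed[key]) for key in baseline}

if __name__ == "__main__":
    for key, value in changes.items():
        print(f"{key:9s} {value:+.1%}")
```

The parameter count falls by 85% and the miss distance by about 31.6%, while GFLOPs rise by roughly a third, which is consistent with the P2 branch operating on higher-resolution feature maps.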

| UAV Model | Coarse Track Miss Distance Sequence | Precise Track Miss Distance Sequence |
|---|---|---|
| DJI Phantom 4 |  |  |
| Mavic |  |  |
| Matrix 200 |  |  |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ye, Z.; You, J.; Gu, J.; Kou, H.; Li, G. Modeling and Simulation of Urban Laser Countermeasures Against Low-Slow-Small UAVs. Drones 2025, 9, 419. https://doi.org/10.3390/drones9060419