LSTM-H: A Hybrid Deep Learning Model for Accurate Livestock Movement Prediction in UAV-Based Monitoring Systems

Abstract

1. Introduction

2. Related Works

3. Mobility Prediction Models

This section reviews the four mobility prediction models against which LSTM-H is compared:

- Real-Time Kalman Filter (KF);

- Long Short-Term Memory (LSTM);

- Spatial–Temporal Attentive LSTM (STA-LSTM);

- Extended Kalman Filter with Hidden Markov Model (EKF-HMM).

3.1. Real-Time Kalman Filter (KF)

- Prediction: using the previous state estimate $\hat{x}_{t-1}$, the filter predicts the next state from the system's motion model: $\hat{x}_{t|t-1} = A\hat{x}_{t-1} + B u_t$, where $\hat{x}_{t|t-1}$ is the predicted state at time $t$, $A$ is the state transition matrix, $B$ is the control matrix, and $u_t$ is the control input.

- Correction: the predicted state is corrected using the observed state $z_t$, a step that accounts for measurement noise: $\hat{x}_t = \hat{x}_{t|t-1} + K_t\,(z_t - H\hat{x}_{t|t-1})$, where $K_t$ is the Kalman gain and $H$ is the observation matrix.
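
A minimal NumPy sketch of the two KF steps described above. The covariance propagation ($P_{t|t-1} = A P A^\top + Q$) and the gain formula are the standard textbook forms and are assumed here. The constant-velocity matrices, noise values, and observations in the usage block are hypothetical, chosen only for illustration.

```python
import numpy as np


def kf_predict(x, P, A, B, u, Q):
    """Prediction: x_{t|t-1} = A x_{t-1} + B u_t, plus the standard covariance update."""
    x_pred = A @ x + B @ u
    P_pred = A @ P @ A.T + Q          # covariance propagation (standard KF, assumed)
    return x_pred, P_pred


def kf_correct(x_pred, P_pred, z, H, R):
    """Correction: x_t = x_{t|t-1} + K_t (z_t - H x_{t|t-1})."""
    S = H @ P_pred @ H.T + R                  # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)       # Kalman gain K_t
    x = x_pred + K @ (z - H @ x_pred)
    P = (np.eye(len(x_pred)) - K @ H) @ P_pred
    return x, P


if __name__ == "__main__":
    # Hypothetical 2-D constant-velocity model: state = [x, y, vx, vy], dt = 1 s.
    dt = 1.0
    A = np.array([[1, 0, dt, 0],
                  [0, 1, 0, dt],
                  [0, 0, 1, 0],
                  [0, 0, 0, 1]], dtype=float)
    B = np.zeros((4, 2))                      # no control input in this toy example
    H = np.array([[1, 0, 0, 0],
                  [0, 1, 0, 0]], dtype=float)  # only the position is observed
    Q, R = 0.1 * np.eye(4), np.eye(2)
    x, P = np.zeros(4), np.eye(4)
    for z in ([1.0, 0.5], [2.1, 1.0], [2.9, 1.6]):
        x, P = kf_predict(x, P, A, B, np.zeros(2), Q)
        x, P = kf_correct(x, P, np.array(z), H, R)
    print(np.round(x, 2))
```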

3.2. Long Short-Term Memory (LSTM)

- The input gate ($i_t$) determines how much of the current input should update the memory cell. It is calculated as $i_t = \sigma(W_i x_t + U_i h_{t-1} + b_i)$, where $x_t$ is the input vector at time $t$ and $h_{t-1}$ is the hidden state from the previous time step; $W_i$ and $U_i$ are the weight matrices for the input and recurrent connections, respectively; and $b_i$ is the bias vector. The function $\sigma(\cdot)$ is the sigmoid activation function.

- The forget gate ($f_t$) controls the extent to which the previous memory cell content is retained. It is computed as $f_t = \sigma(W_f x_t + U_f h_{t-1} + b_f)$, where $W_f$ and $U_f$ are the weight matrices for the input and recurrent connections, and $b_f$ is the bias vector.

- The output gate ($o_t$) regulates how much of the memory cell content is passed to the hidden state. It is given by $o_t = \sigma(W_o x_t + U_o h_{t-1} + b_o)$, where $W_o$ and $U_o$ are the weight matrices for the input and recurrent connections, and $b_o$ is the bias vector.
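
A single LSTM time step can be sketched in NumPy as follows. The three gates follow the equations above. The candidate memory $\tanh(W_c x_t + U_c h_{t-1} + b_c)$ and the updates $c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$ and $h_t = o_t \odot \tanh(c_t)$ are not listed in the text; they are the standard LSTM formulation and are assumed here. The parameter names, initialisation, and toy inputs are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM step; p holds W_* (input), U_* (recurrent) and b_* (bias) arrays."""
    i_t = sigmoid(p["W_i"] @ x_t + p["U_i"] @ h_prev + p["b_i"])   # input gate
    f_t = sigmoid(p["W_f"] @ x_t + p["U_f"] @ h_prev + p["b_f"])   # forget gate
    o_t = sigmoid(p["W_o"] @ x_t + p["U_o"] @ h_prev + p["b_o"])   # output gate
    # Candidate memory and state updates: standard LSTM form (assumed, not from the text).
    g_t = np.tanh(p["W_c"] @ x_t + p["U_c"] @ h_prev + p["b_c"])
    c_t = f_t * c_prev + i_t * g_t
    h_t = o_t * np.tanh(c_t)
    return h_t, c_t


def init_params(n_in, n_hidden, rng):
    """Small random weights and zero biases for the gates i, f, o and candidate c."""
    p = {}
    for gate in "ifoc":
        p[f"W_{gate}"] = rng.normal(scale=0.1, size=(n_hidden, n_in))
        p[f"U_{gate}"] = rng.normal(scale=0.1, size=(n_hidden, n_hidden))
        p[f"b_{gate}"] = np.zeros(n_hidden)
    return p


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    params = init_params(n_in=2, n_hidden=8, rng=rng)   # input: an (x, y) position
    h, c = np.zeros(8), np.zeros(8)
    for pos in ([0.0, 0.0], [1.0, 0.5], [2.0, 1.1]):
        h, c = lstm_step(np.array(pos), h, c, params)
    print(h.shape)
```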

3.3. Spatial–Temporal Attentive LSTM (STA-LSTM)

- Attention score computation: for each time step $t$, an attention score $\alpha_t$ is computed from the LSTM hidden state $h_t$ and a learnable context vector $u$: $e_t = u^\top \tanh(W_a h_t + b_a)$ and $\alpha_t = \exp(e_t) / \sum_{k=1}^{T} \exp(e_k)$, where $W_a$ is a weight matrix, $b_a$ is a bias vector, and $T$ is the total number of time steps in the input sequence.

- Weighted hidden state: the hidden states are weighted by their corresponding attention scores to compute the context vector $c = \sum_{t=1}^{T} \alpha_t h_t$.

- Final prediction: the context vector $c$ is passed through a fully connected layer and an activation function $g(\cdot)$ to produce the final prediction $\hat{y} = g(W_y c + b_y)$, where $W_y$ is the weight matrix and $b_y$ is the bias vector of the output layer.
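
The attention pooling and output layer above can be sketched in NumPy as follows. The scoring function $u^\top \tanh(W_a h_t + b_a)$ is the usual additive form with a learned context vector and is assumed here. The identity output activation and the random weights are hypothetical choices for a coordinate-regression example.

```python
import numpy as np


def attention_pool(hs, W_a, b_a, u):
    """hs has shape (T, d): one LSTM hidden state h_t per row."""
    e = np.tanh(hs @ W_a.T + b_a) @ u        # e_t = u^T tanh(W_a h_t + b_a), shape (T,)
    e = e - e.max()                          # shift for numerical stability; softmax unchanged
    alpha = np.exp(e) / np.exp(e).sum()      # attention weights alpha_t over the T steps
    c = alpha @ hs                           # context vector c = sum_t alpha_t h_t
    return c, alpha


def predict(c, W_y, b_y):
    """Fully connected output layer; identity activation g assumed for regression."""
    return W_y @ c + b_y


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    T, d, k = 5, 8, 4
    hs = rng.normal(size=(T, d))             # stand-in for LSTM hidden states
    c, alpha = attention_pool(hs, rng.normal(size=(k, d)), np.zeros(k), rng.normal(size=k))
    y_hat = predict(c, rng.normal(size=(2, d)), np.zeros(2))   # predicted (x, y)
    print(alpha.round(3), y_hat.shape)
```

When all hidden states are identical, every score is equal, the weights are uniform, and $c$ reduces to that shared hidden state.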

3.4. Extended Kalman Filter with Hidden Markov Model (EKF-HMM)

3.4.1. Extended Kalman Filter (EKF)

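- Prediction: the next state is predicted from the non-linear state transition model $f$. The state evolves as $x_t = f(x_{t-1}) + w_t$, so the predicted state is $\hat{x}_{t|t-1} = f(\hat{x}_{t-1})$. Here, $w_t$ represents process noise, typically modeled as zero-mean Gaussian noise with covariance $Q$, i.e., $w_t \sim \mathcal{N}(0, Q)$.

- Correction: the predicted state is updated using the observed state $z_t$ through the non-linear observation model $h$: $\hat{x}_t = \hat{x}_{t|t-1} + K_t\,(z_t - h(\hat{x}_{t|t-1}))$, where $K_t$ is the Kalman gain, computed as $K_t = P_{t|t-1} H_t^\top (H_t P_{t|t-1} H_t^\top + R)^{-1}$. Here, $P_{t|t-1}$ is the predicted error covariance matrix, $H_t$ is the Jacobian matrix of $h$ evaluated at $\hat{x}_{t|t-1}$, and $R$ is the measurement noise covariance.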
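
A minimal EKF step in NumPy, following the prediction and correction equations above. Propagating the covariance with the Jacobian $F_t$ of $f$ is the standard EKF form and is assumed here, since the text does not state it. The motion and observation models are hypothetical: a stationary animal, and a UAV hovering at the origin that measures only range.

```python
import numpy as np


def ekf_step(x, P, z, f, F_jac, h, H_jac, Q, R):
    # Prediction through the non-linear motion model f
    x_pred = f(x)
    F = F_jac(x)                              # Jacobian of f at the previous estimate (assumed)
    P_pred = F @ P @ F.T + Q
    # Correction through the non-linear observation model h
    H = H_jac(x_pred)                         # H_t: Jacobian of h at x_{t|t-1}
    S = H @ P_pred @ H.T + R
    K = P_pred @ H.T @ np.linalg.inv(S)       # Kalman gain K_t
    x_new = x_pred + K @ (z - h(x_pred))
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new


# Hypothetical models for illustration only.
def motion_model(x):
    return x                                  # animal assumed stationary between steps


def motion_jacobian(x):
    return np.eye(len(x))


def range_model(x):
    return np.array([np.hypot(x[0], x[1])])   # distance from a UAV at the origin


def range_jacobian(x):
    return (x / np.hypot(x[0], x[1])).reshape(1, 2)


if __name__ == "__main__":
    x, P = np.array([3.0, 4.0]), np.eye(2)
    Q, R = 0.01 * np.eye(2), np.array([[0.25]])
    for z in (5.2, 5.1, 4.9):
        x, P = ekf_step(x, P, np.array([z]), motion_model, motion_jacobian,
                        range_model, range_jacobian, Q, R)
    print(np.round(x, 2))
```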

3.4.2. Hidden Markov Model (HMM)

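An HMM is characterized by:

- A finite set of hidden states $S = \{s_1, s_2, \dots, s_N\}$, with $q_t$ denoting the hidden state at time $t$.

- State transition probabilities $A = \{a_{ij}\}$, where $a_{ij} = P(q_{t+1} = s_j \mid q_t = s_i)$ represents the probability of transitioning from state $s_i$ to state $s_j$.

- Observation probabilities $B = \{b_j(o_t)\}$, where $b_j(o_t) = P(o_t \mid q_t = s_j)$ represents the likelihood of observing $o_t$ given state $s_j$.

- An initial state distribution $\pi = \{\pi_i\}$, where $\pi_i = P(q_1 = s_i)$.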
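
Given the components $(A, B, \pi)$ listed above, the belief over hidden states can be tracked with the normalised forward algorithm. The NumPy sketch below assumes discrete observation symbols. The two behaviour states and the probabilities in the usage block are hypothetical and are not taken from the study.

```python
import numpy as np


def hmm_filter(obs, A, B, pi):
    """Normalised forward algorithm: row t is P(q_t = s_i | o_1, ..., o_t).

    A[i, j] = a_ij, B[j, k] = b_j(k) for discrete observation symbol k, pi[i] = pi_i.
    """
    alpha = pi * B[:, obs[0]]                 # initialise with pi_i * b_i(o_1)
    alpha = alpha / alpha.sum()
    beliefs = [alpha]
    for o in obs[1:]:
        alpha = (alpha @ A) * B[:, o]         # propagate with a_ij, weight by b_j(o_t)
        alpha = alpha / alpha.sum()           # normalise to avoid numerical underflow
        beliefs.append(alpha)
    return np.array(beliefs)


if __name__ == "__main__":
    # Hypothetical states: 0 = resting, 1 = moving.
    # Hypothetical symbols: 0 = short step length, 1 = long step length.
    A = np.array([[0.9, 0.1], [0.2, 0.8]])
    B = np.array([[0.7, 0.3], [0.1, 0.9]])
    pi = np.array([0.5, 0.5])
    print(hmm_filter([0, 0, 1, 1, 1], A, B, pi).round(3))
```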

3.4.3. Integration of EKF and HMM

4. Methodology

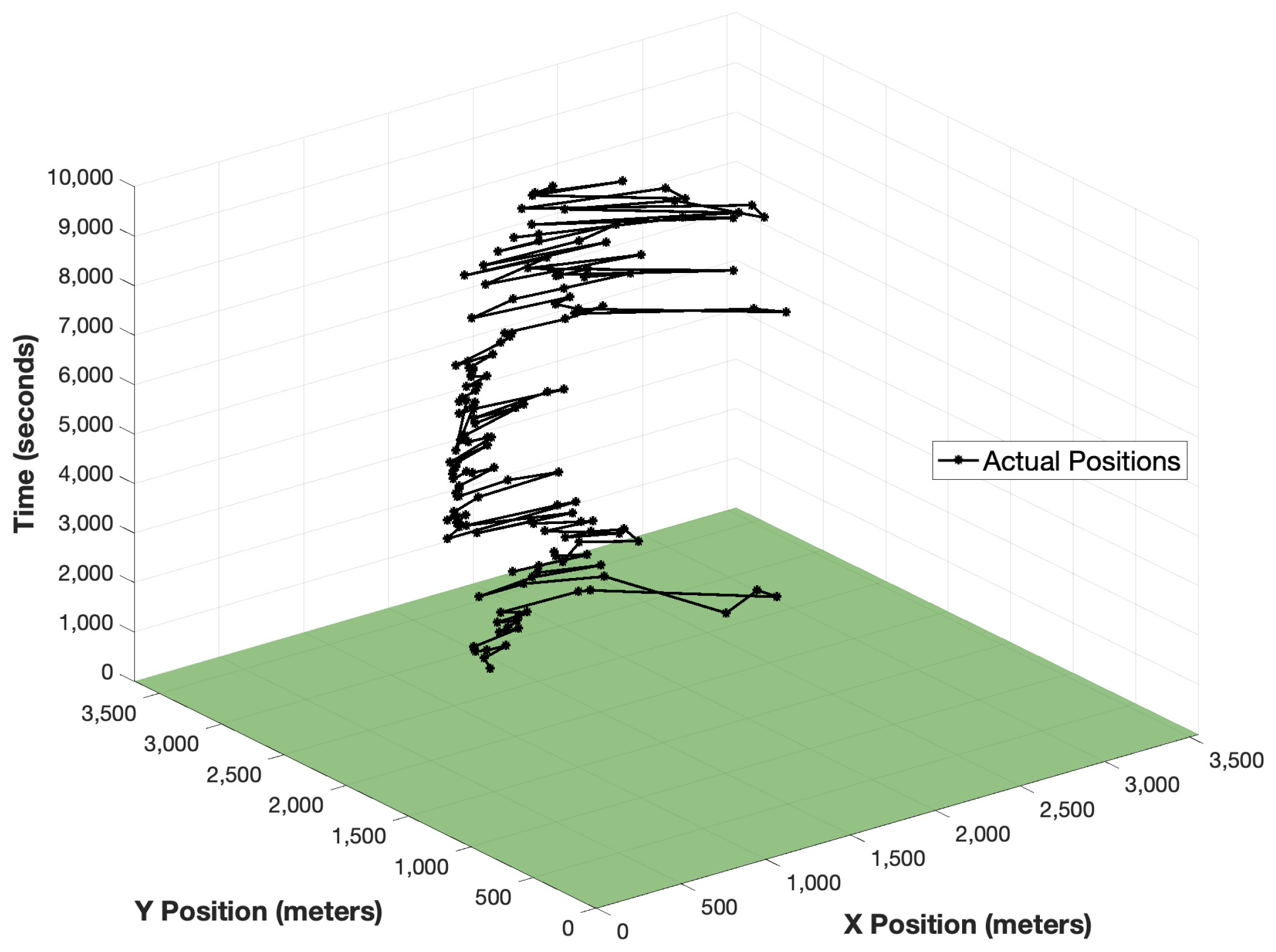

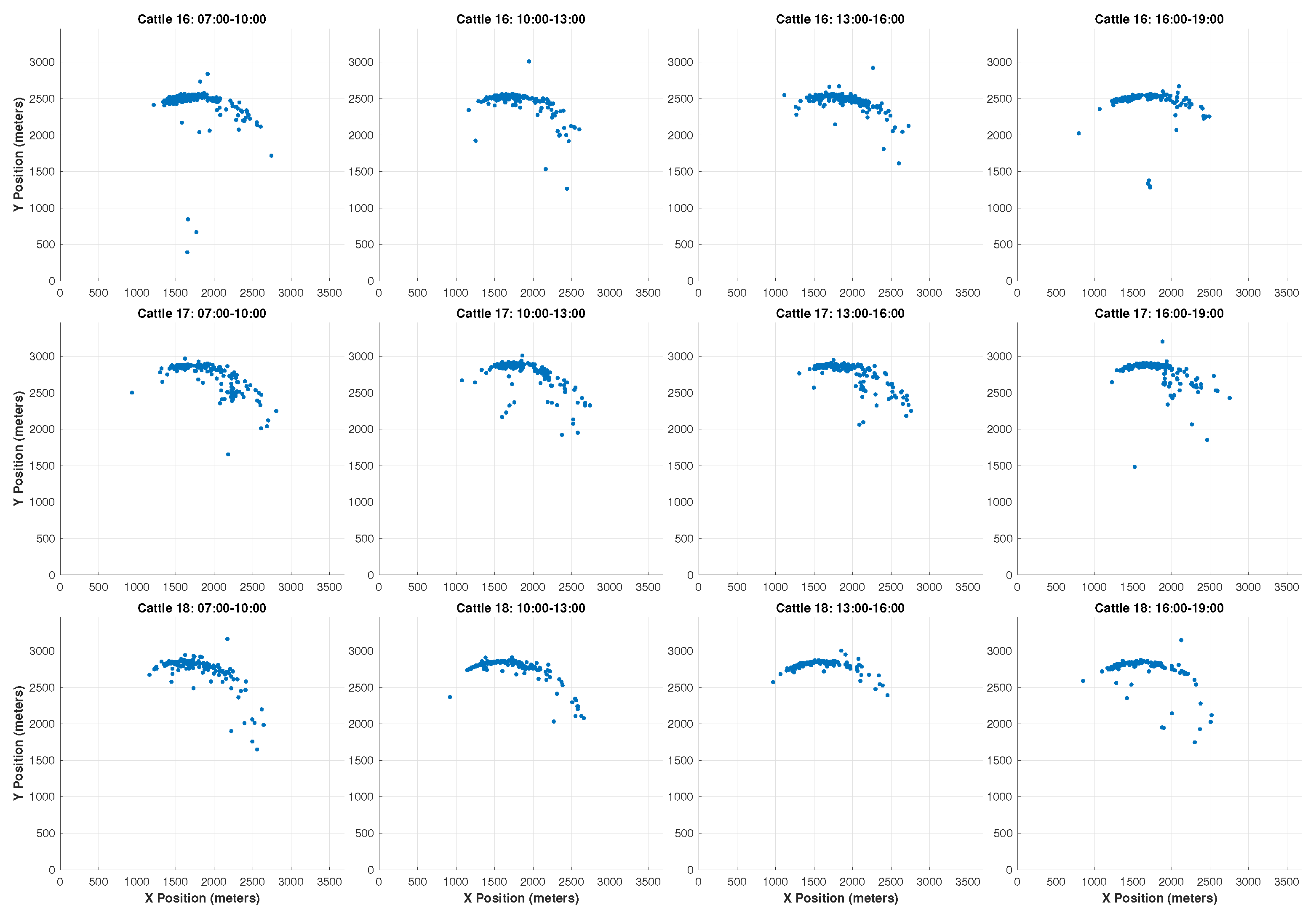

4.1. Dataset Description

- Timestamp: the time at which the livestock position was recorded (ISO 8601 format, e.g., YYYY-MM-DD HH:mm:ss.SSS).

- X coordinate: the livestock's position along the X axis of the ground plane, in meters, within the farm boundary.

- Y coordinate: the livestock's position along the Y axis of the ground plane, in meters, within the farm boundary.

- Z coordinate: excluded; only the planar X and Y coordinates are used.
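
A short sketch of loading records in the format described above, using only the Python standard library. The CSV layout and the column names `timestamp`, `x`, `y`, `z` are hypothetical assumptions; the timestamp pattern mirrors YYYY-MM-DD HH:mm:ss.SSS, and the Z column is read but discarded.

```python
import csv
import io
from datetime import datetime

TIME_FORMAT = "%Y-%m-%d %H:%M:%S.%f"   # YYYY-MM-DD HH:mm:ss.SSS


def load_positions(text):
    """Return chronologically sorted (timestamp, x, y) tuples; Z is ignored."""
    rows = []
    for rec in csv.DictReader(io.StringIO(text)):   # hypothetical column names
        ts = datetime.strptime(rec["timestamp"], TIME_FORMAT)
        rows.append((ts, float(rec["x"]), float(rec["y"])))
    rows.sort(key=lambda r: r[0])
    return rows


if __name__ == "__main__":
    sample = (
        "timestamp,x,y,z\n"
        "2024-05-01 10:00:01.500,12.5,40.0,0.0\n"
        "2024-05-01 10:00:00.250,12.0,39.5,0.0\n"
    )
    for ts, x, y in load_positions(sample):
        print(ts.isoformat(), x, y)
```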

4.2. Synthetic Data Generation

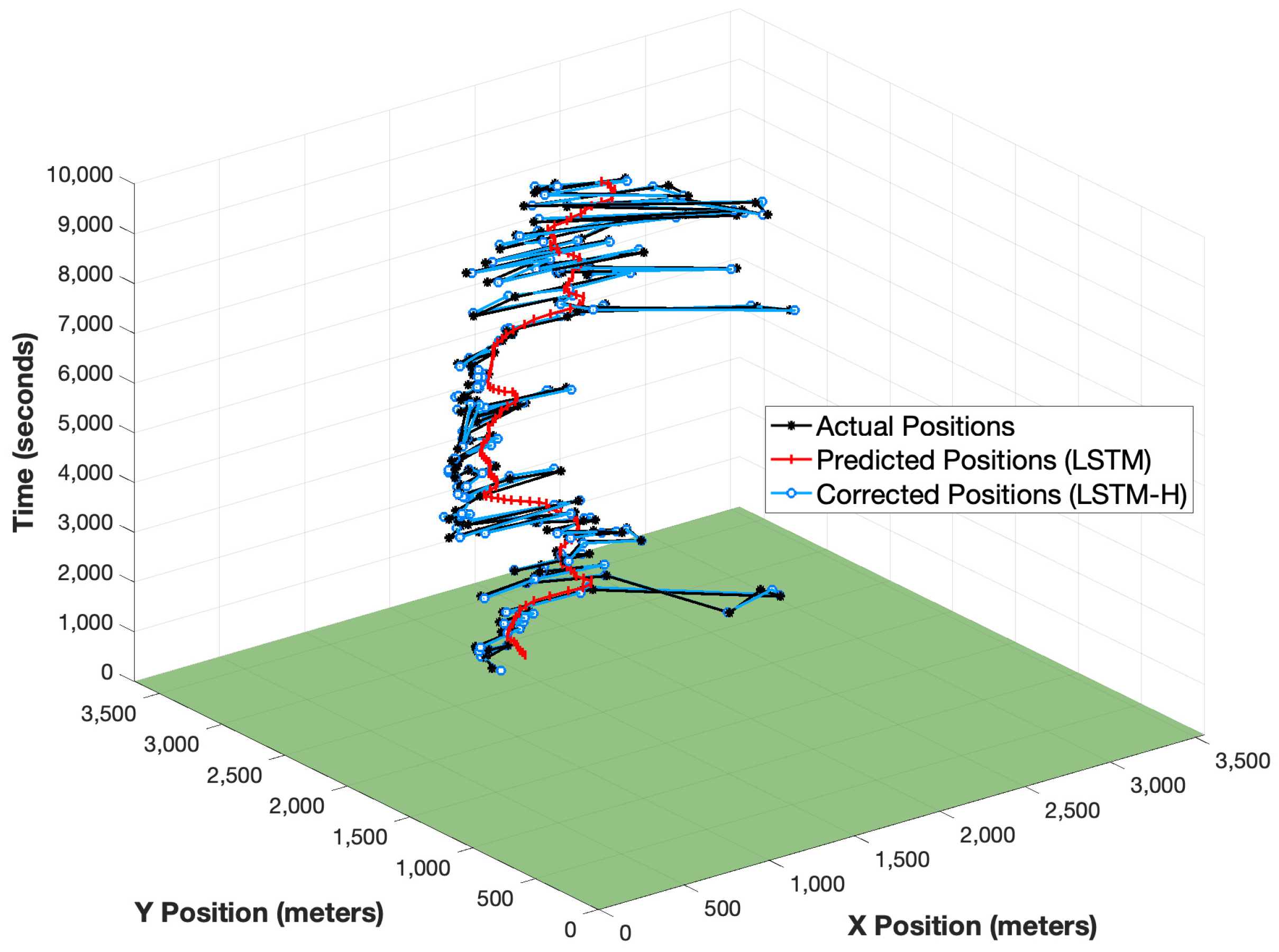

4.3. Proposed LSTM-Hybrid Model

- Prediction step: the KF takes the LSTM-predicted position, $\hat{x}^{\mathrm{LSTM}}_{t-1}$, and projects it forward using the system's motion model: $\hat{x}_{t|t-1} = A\,\hat{x}^{\mathrm{LSTM}}_{t-1} + B u_t$, where $\hat{x}_{t|t-1}$ is the predicted state at time $t$, $A$ is the state transition matrix, $B$ is the control matrix, and $u_t$ is the control input. In this way, the LSTM prediction serves as the initial state for the KF.

- Correction step: the KF refines the predicted state by incorporating noisy real-time observations $z_t$, such as UAV-collected positions: $\hat{x}_t = \hat{x}_{t|t-1} + K_t\,(z_t - H\hat{x}_{t|t-1})$, where $K_t$ is the Kalman gain, $H$ is the observation matrix, and $z_t$ is the observed position. The Kalman gain determines the relative weight given to the observation versus the prediction and is computed as $K_t = P_{t|t-1} H^\top (H P_{t|t-1} H^\top + R)^{-1}$, where $P_{t|t-1}$ is the error covariance matrix and $R$ is the measurement noise covariance.

| Parameter | Value | Rationale |
|---|---|---|
| Max Epochs | 200 | Ensures convergence without overfitting, validated by monitoring validation loss. |
| Mini-Batch Size | 64 | Balances GPU memory usage and stable gradient updates for the dataset size. |
| Initial Learning Rate | 0.002 | Enables rapid initial learning, with a drop factor of 0.005 every 80 epochs for stability. |
| Learning Rate Drop Factor | 0.005 | Facilitates fine-tuning in later training stages to capture complex temporal patterns. |
| Learning Rate Drop Period | 80 | Allows sufficient epochs per learning rate for stable convergence. |
| Gradient Threshold | 1.2 | Prevents gradient explosion, ensuring stable training. |
| L2 Regularization | 0.002 | Reduces overfitting, validated through cross-validation. |
| Variance Coefficient | 0.4 | Scales error calculations to match synthetic noise level (5% of coordinate range). |
| Process Noise Covariance (Q) | [1, 1] | Reflects expected movement variability, prioritizing LSTM predictions. |
| Measurement Noise Covariance (R) | [1, 1] | Matches synthetic noise variance, correcting noisy UAV observations. |
| Control Noise | 0.1 | Ensures filter stability for constant velocity model. |
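
The three learning-rate entries in the table combine into a step-decay schedule. The sketch below assumes the rate is multiplied by the drop factor at the end of every drop period (0-based epochs). Under that reading, the rate is 0.002 for the first 80 epochs, $1\times10^{-5}$ for the next 80, and $5\times10^{-8}$ for the final 40.

```python
def learning_rate(epoch, initial=0.002, drop_factor=0.005, drop_period=80):
    """Step decay: multiply the rate by drop_factor every drop_period epochs.

    `epoch` is 0-based; the defaults are the values listed in the table.
    """
    return initial * drop_factor ** (epoch // drop_period)


if __name__ == "__main__":
    for e in (0, 79, 80, 159, 160, 199):
        print(e, learning_rate(e))
```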

Algorithm 1: LSTM-hybrid model workflow

- Input: historical livestock positions and noisy real-time observations.
- Output: corrected livestock position predictions.
- Train the LSTM model on historical movement data.
- For each time step $t$:
  - Predict the livestock's position using the LSTM.
  - Use the LSTM prediction as the initial state for the KF.
  - Perform the KF prediction step to estimate the next state.
  - Perform the KF correction step using the real-time noisy observations.
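
The loop in Algorithm 1 can be sketched in NumPy as follows, under several stated assumptions. `lstm_predict` is a hypothetical callable standing in for the trained LSTM, and the control input is taken as zero. The state is the (x, y) position with $A = H = I$. $Q$ and $R$ are identity matrices, reading the table's [1, 1] entries as diagonal values. Feeding each corrected estimate back into the history is also an assumption, since Algorithm 1 does not say what the LSTM receives at the next step.

```python
import numpy as np


def lstm_h_track(history, observations, lstm_predict, A, H, Q, R, P0):
    """Run the LSTM-then-KF loop over a sequence of noisy (x, y) observations."""
    history = [np.asarray(p, dtype=float) for p in history]
    P = P0
    estimates = []
    for z in observations:
        x_lstm = lstm_predict(np.array(history))   # LSTM position prediction
        x_pred = A @ x_lstm                        # KF prediction from the LSTM state (u_t = 0)
        P_pred = A @ P @ A.T + Q
        S = H @ P_pred @ H.T + R
        K = P_pred @ H.T @ np.linalg.inv(S)        # Kalman gain
        x_t = x_pred + K @ (np.asarray(z, dtype=float) - H @ x_pred)   # KF correction
        P = (np.eye(len(x_t)) - K @ H) @ P_pred
        estimates.append(x_t)
        history.append(x_t)                        # assumption: corrected estimate fed back
    return np.array(estimates)


if __name__ == "__main__":
    def extrapolate(hist):
        # Hypothetical stand-in for a trained LSTM: constant-velocity extrapolation.
        return hist[-1] + (hist[-1] - hist[-2])

    I2 = np.eye(2)
    obs = [[2.2, 1.1], [2.9, 1.4], [4.1, 2.1]]
    est = lstm_h_track([[0.0, 0.0], [1.0, 0.5]], obs, extrapolate, I2, I2, I2, I2, I2)
    print(est.round(2))
```

With $Q = R = I$, the gain starts at $2/3$ per axis, so the first corrected position is pulled two thirds of the way from the LSTM prediction toward the observation.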

5. Results

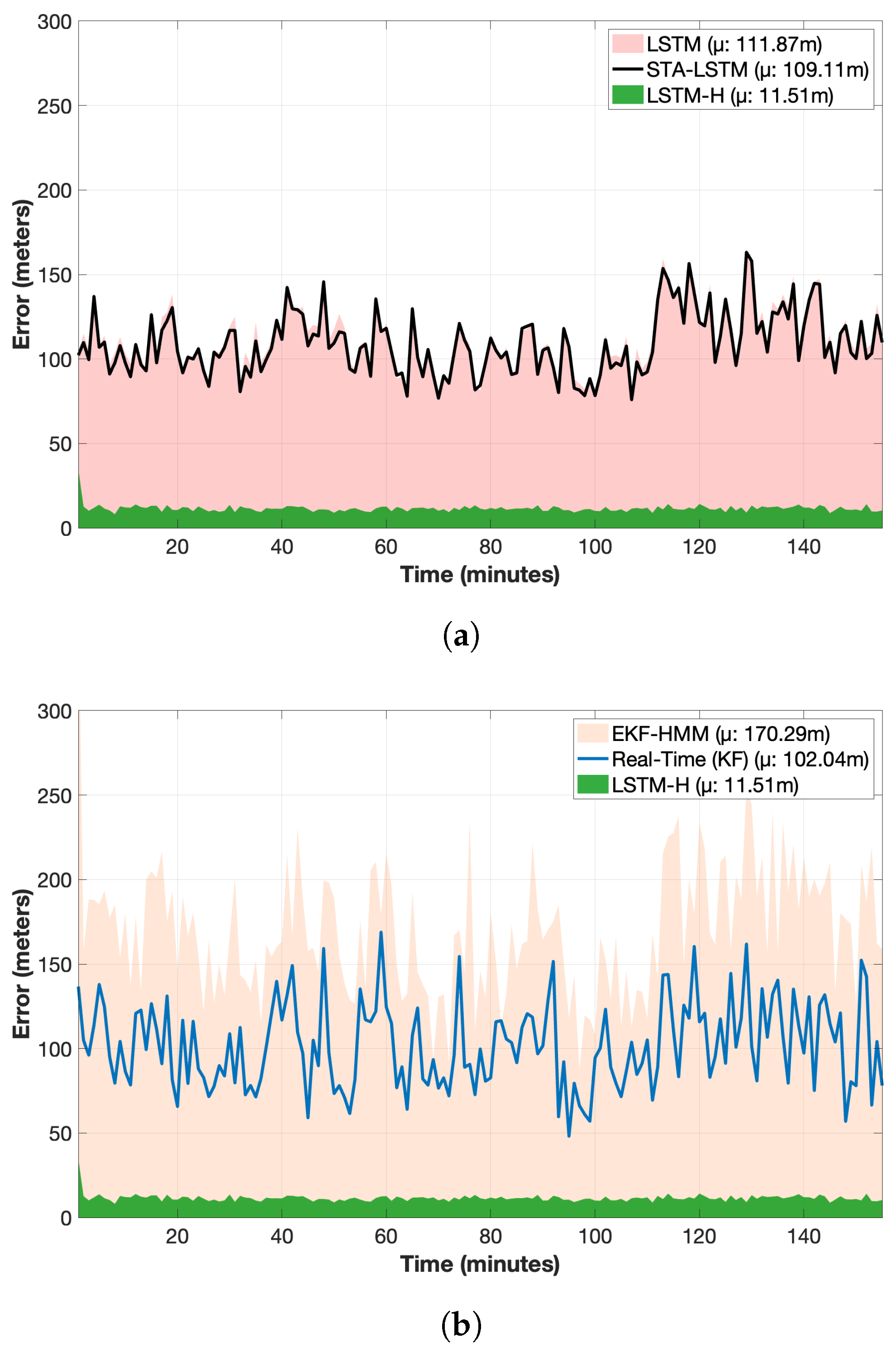

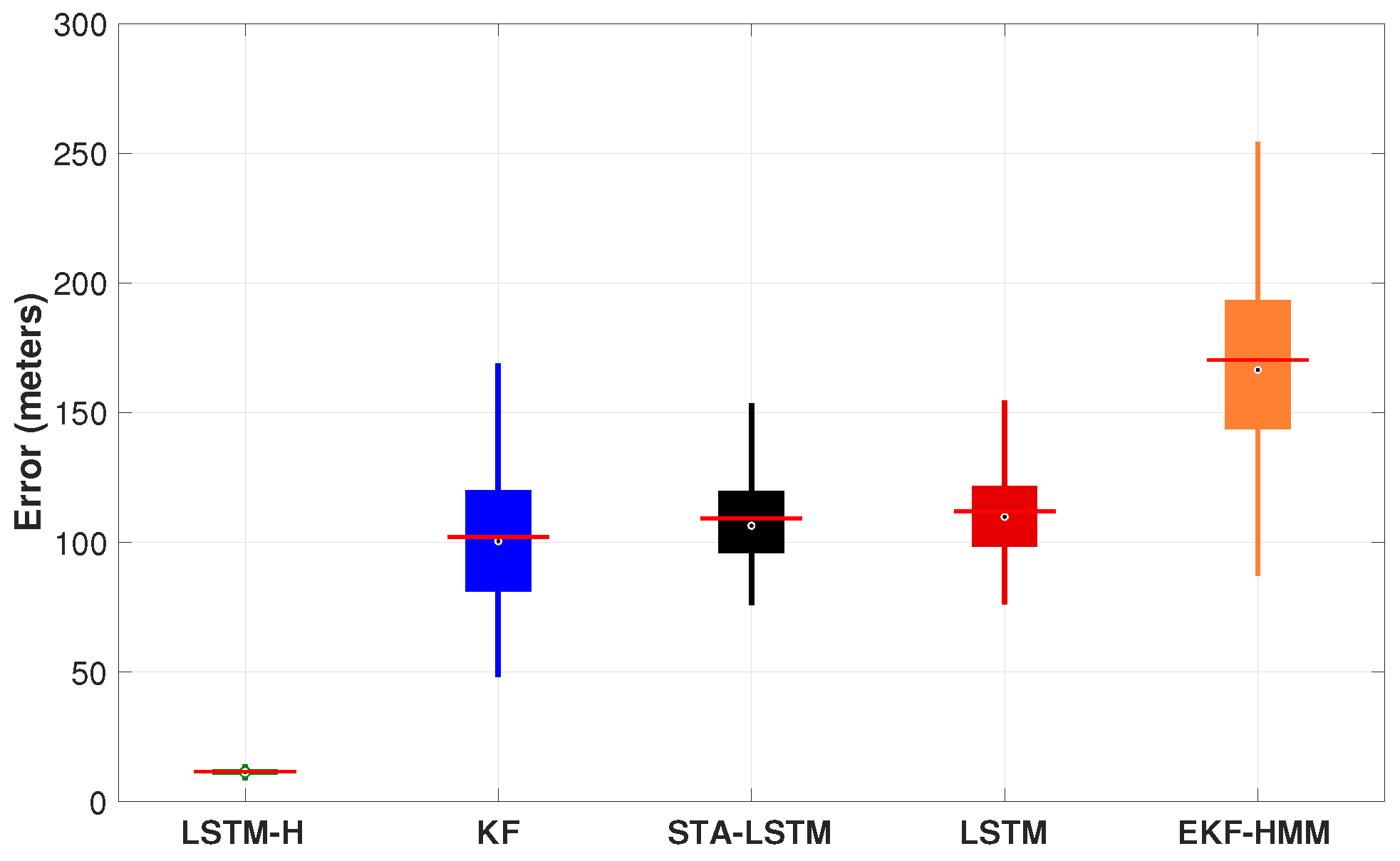

5.1. First-Step Prediction Accuracy Comparison

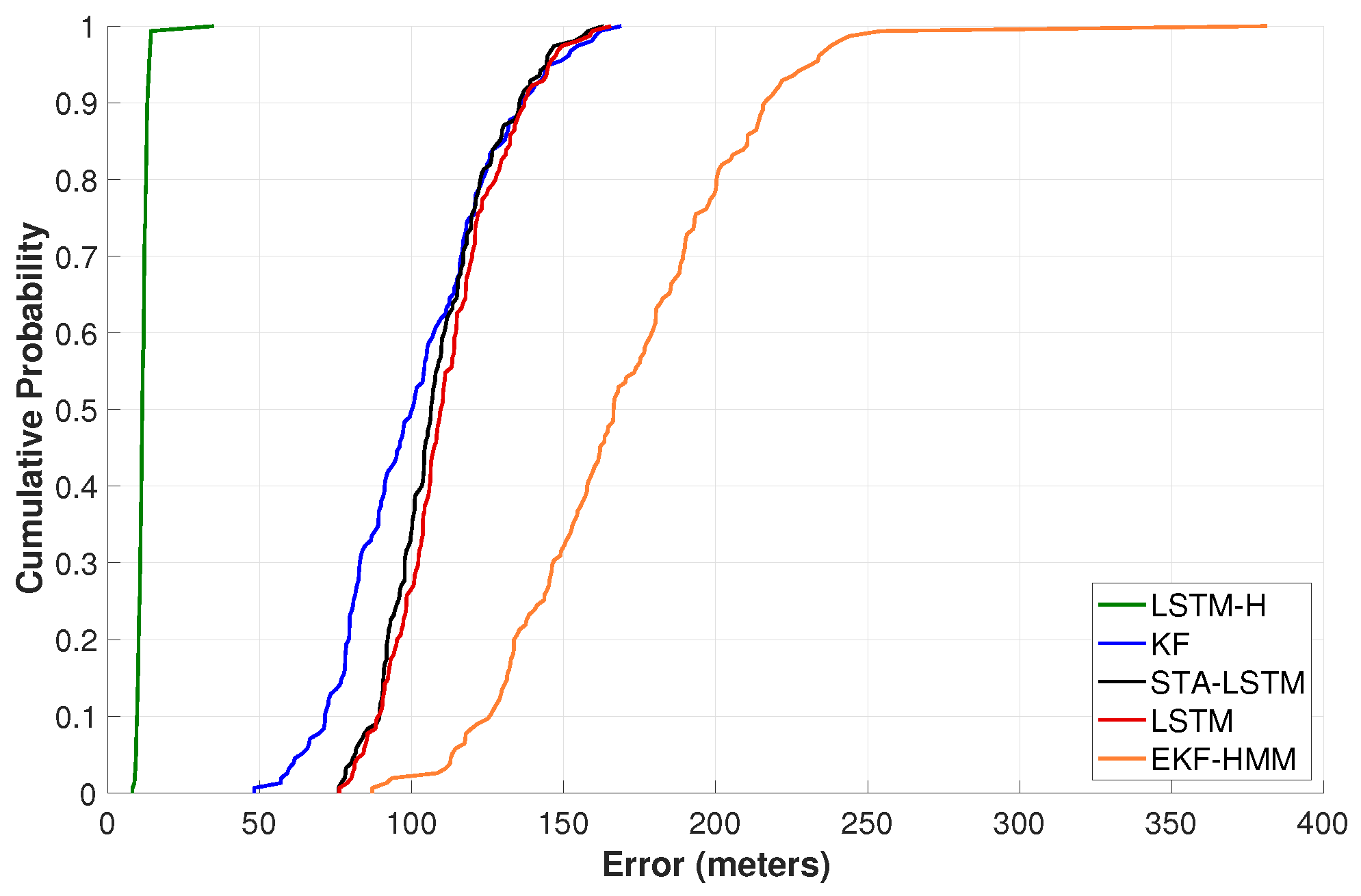

5.2. Error Distribution and Cumulative Analysis

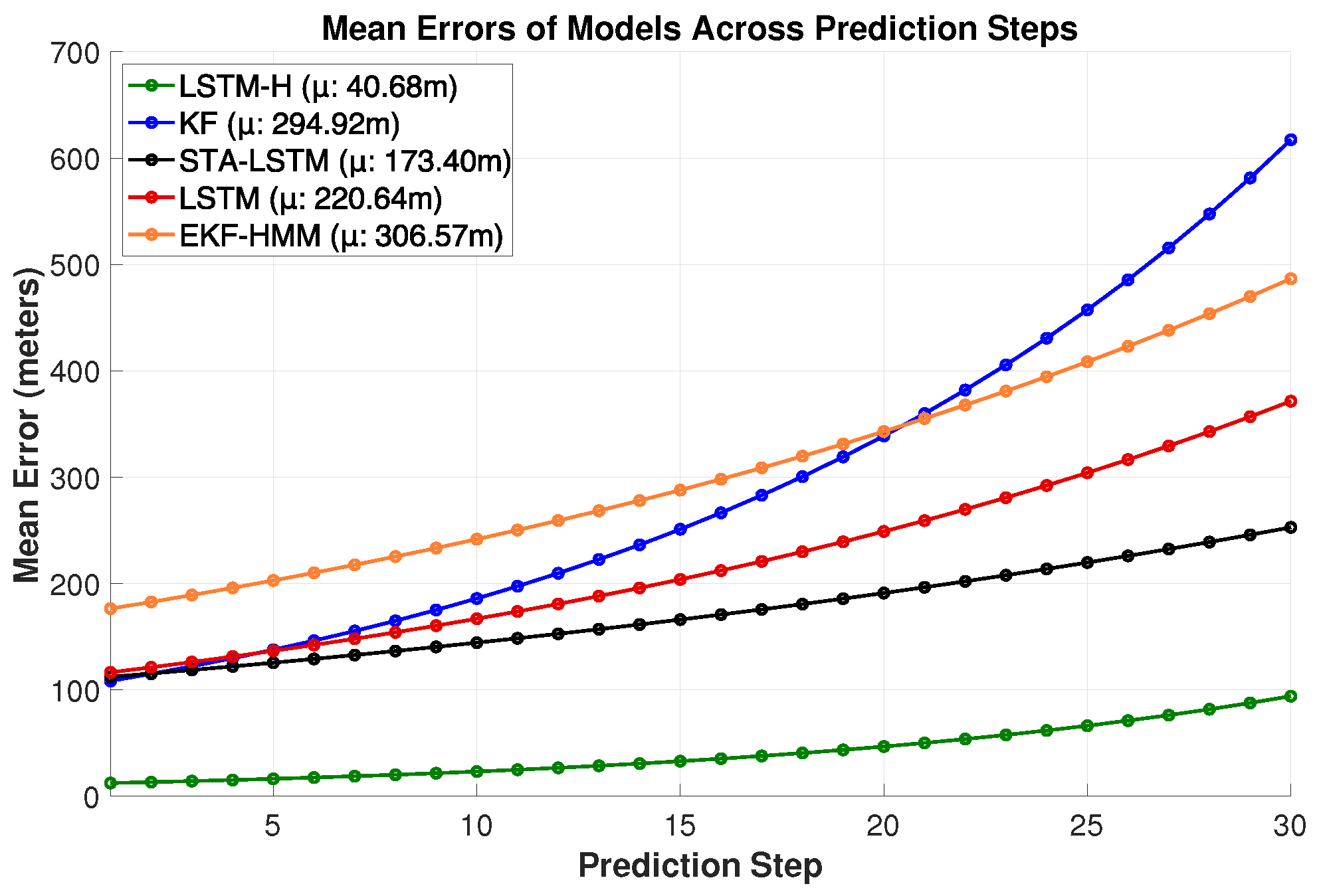

5.3. Prediction Accuracy over 30 Steps

5.4. Summary of Model Performance

6. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

DURC Statement

Conflicts of Interest


| Model | Step-1 error (m) | Ratio to LSTM-H | Error over steps 1–30 (m) | Ratio to LSTM-H |
|---|---|---|---|---|
| LSTM-H | 11.51 | - | 40.68 | - |
| LSTM | 111.87 | 9.7× | 220.64 | 5.4× |
| STA-LSTM | 109.11 | 9.5× | 173.40 | 4.3× |
| KF | 102.04 | 8.9× | 294.92 | 7.2× |
| EKF-HMM | 170.29 | 14.8× | 306.57 | 7.5× |
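
Each ratio column in the table above divides a model's error by the LSTM-H error for the same horizon. The short check below reproduces the reported ratios, rounded to one decimal, from the listed errors.

```python
# Errors in metres, copied from the table: (step 1, steps 1-30).
ERRORS = {
    "LSTM-H": (11.51, 40.68),
    "LSTM": (111.87, 220.64),
    "STA-LSTM": (109.11, 173.40),
    "KF": (102.04, 294.92),
    "EKF-HMM": (170.29, 306.57),
}


def ratios_to_baseline(errors, baseline="LSTM-H"):
    """Return each model's error divided by the baseline error, rounded to 0.1."""
    b1, b30 = errors[baseline]
    return {
        model: (round(e1 / b1, 1), round(e30 / b30, 1))
        for model, (e1, e30) in errors.items()
        if model != baseline
    }


if __name__ == "__main__":
    for model, (r1, r30) in ratios_to_baseline(ERRORS).items():
        print(f"{model}: {r1}x (step 1), {r30}x (steps 1-30)")
```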

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bokani, A.; Yadegaridehkordi, E.; Kanhere, S.S. LSTM-H: A Hybrid Deep Learning Model for Accurate Livestock Movement Prediction in UAV-Based Monitoring Systems. Drones 2025, 9, 346. https://doi.org/10.3390/drones9050346