1. Introduction

In recent years, drone technology has rapidly become an important tool in fields such as intelligent video surveillance [1,2], human–computer interaction [3], aviation reconnaissance [4], precision guidance [5], and military strikes [6], thanks to the small size, low cost, and flexible movement of drones. As drone application scenarios continue to expand, autonomous navigation and target tracking have become key technologies for improving overall performance. Robust target tracking algorithms not only allow drones to recognize and localize specific targets stably in complex environments, but also provide real-time decision support during tasks such as search and rescue and border patrol. Research on unmanned aerial vehicle target tracking technology therefore has broad application prospects [7].

However, in practice, the complexity of application scenarios and the limitations of onboard platforms [8] mean that precise and robust tracking with drones still faces significant challenges. First, unmanned aerial vehicles (UAVs) are highly maneuverable, so during tracking they encounter complex and dynamic backgrounds that make precise target localization difficult. Second, in some application scenarios a drone views the target from changing angles, which alters the target’s appearance and requires the tracker to be highly adaptable. In addition, rapid motion and changes in perspective can cause significant changes in target scale during tracking, and the accuracy of scale estimation directly affects tracking accuracy. UAV target tracking algorithms therefore also require accurate scale estimation.

In recent years, scholars have conducted in-depth research and made significant progress in improving UAV target tracking algorithms. Deep learning-based methods perform well in complex scenarios by learning high-level semantic features of targets, but their tracking accuracy depends heavily on training with large-scale annotated datasets, and the distribution of the training data limits the model’s generalization ability. Tuning the parameters of neural networks is also relatively cumbersome. In contrast, discriminative correlation filter (DCF)-based methods extract the target’s features from the first frame of the image sequence and use an online learning mechanism to update the target appearance model in real time, so they can adapt to dynamic changes in target appearance without relying on pre-training datasets. They also have relatively few parameters, each with a clear physical meaning, so researchers can adjust them sensibly according to the requirements of a specific scene. These characteristics make DCF algorithms well suited to UAV target tracking.

The core idea of a DCF-based tracker is to train a filter on the search area by minimizing the sum of squared errors between the ideal output and the actual output. The filter is then correlated with the search area of the next frame to obtain a response map, and the position of the maximum of the response map is taken as the center of the target. MOSSE (Minimum Output Sum of Squared Error) [9] was the first method to apply correlation filtering to tracking. It converts spatial-domain correlation into element-wise multiplication in the frequency domain, which significantly reduces the computational complexity and achieves a tracking speed of 669 fps, making it the pioneering work on DCF tracking. Subsequently, CSK (Circulant Structure with Kernels) [10] cyclically shifted the training samples to obtain negative samples and exploited the diagonalization of circulant matrices in the Fourier domain to relate correlation filtering to the Fourier transform, further improving the performance of the DCF tracker. KCF (Kernelized Correlation Filter) [11] then introduced multi-channel HOG (Histogram of Oriented Gradients) features into DCF and formulated target tracking as a ridge regression problem, laying the foundation for subsequent DCF tracking algorithms.
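The train-then-correlate loop described above can be illustrated with a minimal sketch. It is not the implementation from [9]: it assumes a 1-D signal in place of an image patch, a single training sample, a naive DFT in place of an FFT, and hypothetical names (`train_filter`, `locate`, `gaussian_label`, the regularizer `lam`) chosen only for this example.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform of a sequence."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft(); returns complex values."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def gaussian_label(n, center, sigma=1.0):
    """Ideal output: a circular Gaussian peak centered on the target."""
    def circ_dist(i):
        d = abs(i - center)
        return min(d, n - d)
    return [math.exp(-0.5 * (circ_dist(i) / sigma) ** 2) for i in range(n)]


def train_filter(patch, center, lam=1e-2):
    """Closed-form least-squares filter, solved independently per frequency.

    Returns the conjugate filter H* = (G . conj(F)) / (F . conj(F) + lam),
    where F and G are the transforms of the patch and the ideal output.
    `lam` is an assumed small regularizer that avoids division by zero.
    """
    F = dft(patch)
    G = dft(gaussian_label(len(patch), center))
    return [g * f.conjugate() / (f * f.conjugate() + lam) for f, g in zip(F, G)]


def locate(h_conj, patch):
    """Correlate via element-wise product in the frequency domain.

    Returns the index of the response maximum and the response itself.
    """
    F = dft(patch)
    response = [v.real for v in idft([f * h for f, h in zip(F, h_conj)])]
    peak = max(range(len(response)), key=response.__getitem__)
    return peak, response
```

If the filter is trained on a patch whose target sits at index 5 and is then applied to the same patch circularly shifted by three samples, the response peak moves by the same three samples. This is the property a DCF tracker relies on to read the target displacement from the response map.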

After KCF, research on DCF trackers mainly focused on fusing different features [12,13,14], establishing more robust appearance models [6,15,16], improving scale estimation strategies [17,18], and optimizing model update mechanisms [9,19]. Although these studies have improved tracker accuracy to varying degrees, several issues remain when they are applied to UAV target tracking:

(1) UAV scenes often involve highly dynamic, complex backgrounds and therefore require features with stronger discriminative and representational abilities. Discriminative ability refers to how well a feature distinguishes the target from the background in the spatial dimension, while representational ability refers to how well a feature adapts to changes in target appearance in the temporal dimension. However, many trackers simply stack multiple features or fuse them in fixed proportions, without exploring the spatial and temporal relationships between features or fusing them adaptively across scenarios. The advantages of the different features therefore cannot be fully exploited, which limits tracker performance.

(2) Most existing improvements to the DCF filter model are based on spatial and temporal regularization terms. The spatial regularization term effectively suppresses the boundary effects caused by cyclic shifts, while the temporal regularization term enhances the temporal continuity of the filter and prevents the filter model from being distorted by factors such as occlusion. Both effectively improve tracker performance, but there is still room for improvement. The spatial regularization term does not pay enough attention to the target, leaving the tracker susceptible to background clutter, similar objects, and other interference in complex and changing scenes. The temporal regularization term uses only the filter from the adjacent frame, without exploiting earlier historical information or the rate of change of the filter, which limits the tracker’s resistance to distortion.

(3) Improvements to the scale filter in DCF algorithms mostly focus on aspect ratio adaptation and ignore the discontinuous scale resolution caused by multi-scale sampling. In some scenarios, a drone must track and aim at a target as it moves from far to near, and the target scale changes slowly during this process. When the true target scale falls between two fixed scale levels, limited computational accuracy causes the estimated scale to switch back and forth between the two levels, producing jitter in the target center and bounding box and seriously affecting tracker robustness.

In addition, many scholars have recently introduced saliency detection into DCF trackers, using it to select feature channels or to construct spatial regularization terms. Many studies have shown that a reasonable use of saliency information can improve tracking accuracy. However, most of these studies use saliency information to improve only one aspect of the filter; few introduce it into multiple aspects simultaneously, so saliency information remains underutilized.

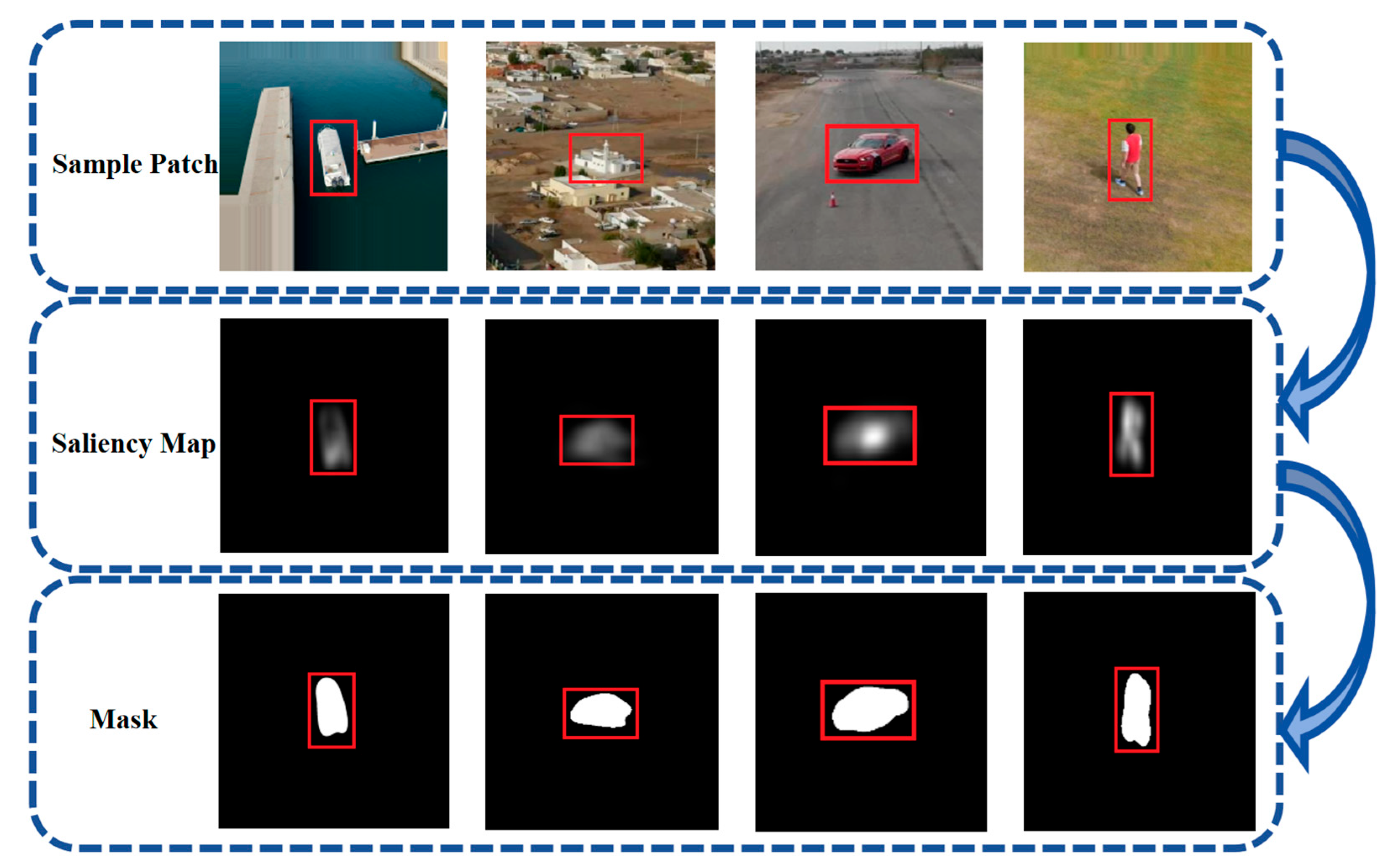

In response to the above issues, this paper proposes a robust UAV target tracking algorithm based on saliency detection (SDBCF), which integrates saliency information into multiple modules, such as feature fusion, model construction, and scale estimation, to comprehensively improve the robustness of the tracker. The main contributions of this paper are as follows:

- (1)

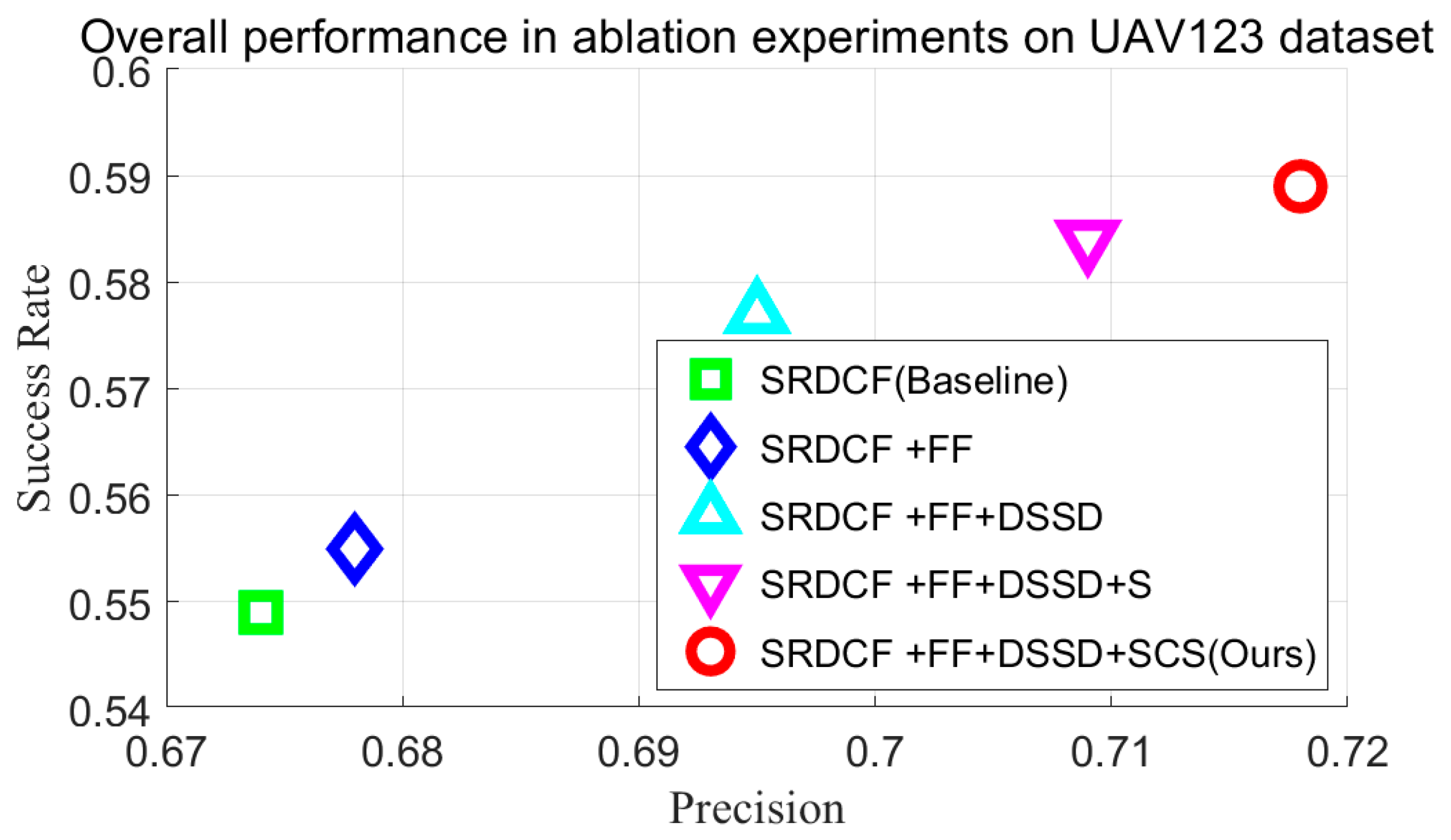

A dynamic feature fusion scheme is proposed. Based on the saliency information and historical information of features, the discriminative and representational abilities of each feature are quantified, enabling the dynamic fusion of features in different scenarios and improving the robustness of the tracker in scenarios such as background clutter and deformation.

- (2) A robust filter model is constructed. Saliency detection guides the filter model to focus on learning target information in the spatial dimension while still learning background information appropriately, which enhances the discriminative ability of the filter in scenarios such as background clutter. A second-order differential regularization term constrains the temporal rate of change of the filter, improving its robustness in scenarios such as occlusion and camera motion. The model is solved with the alternating direction method of multipliers (ADMM), which improves the tracking speed.

- (3) A high-precision scale estimation strategy is proposed. Saliency detection is used to adapt to changes in the target’s aspect ratio, and interpolation is used to construct a continuous scale space for estimating continuous changes in target scale, which improves the robustness of the tracker in scale-change scenarios.

- (4)

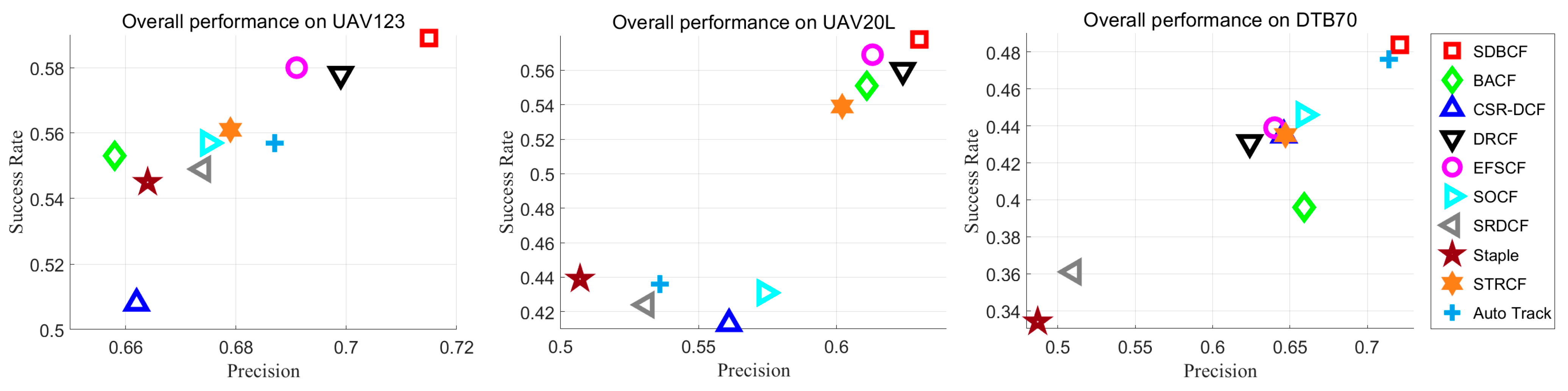

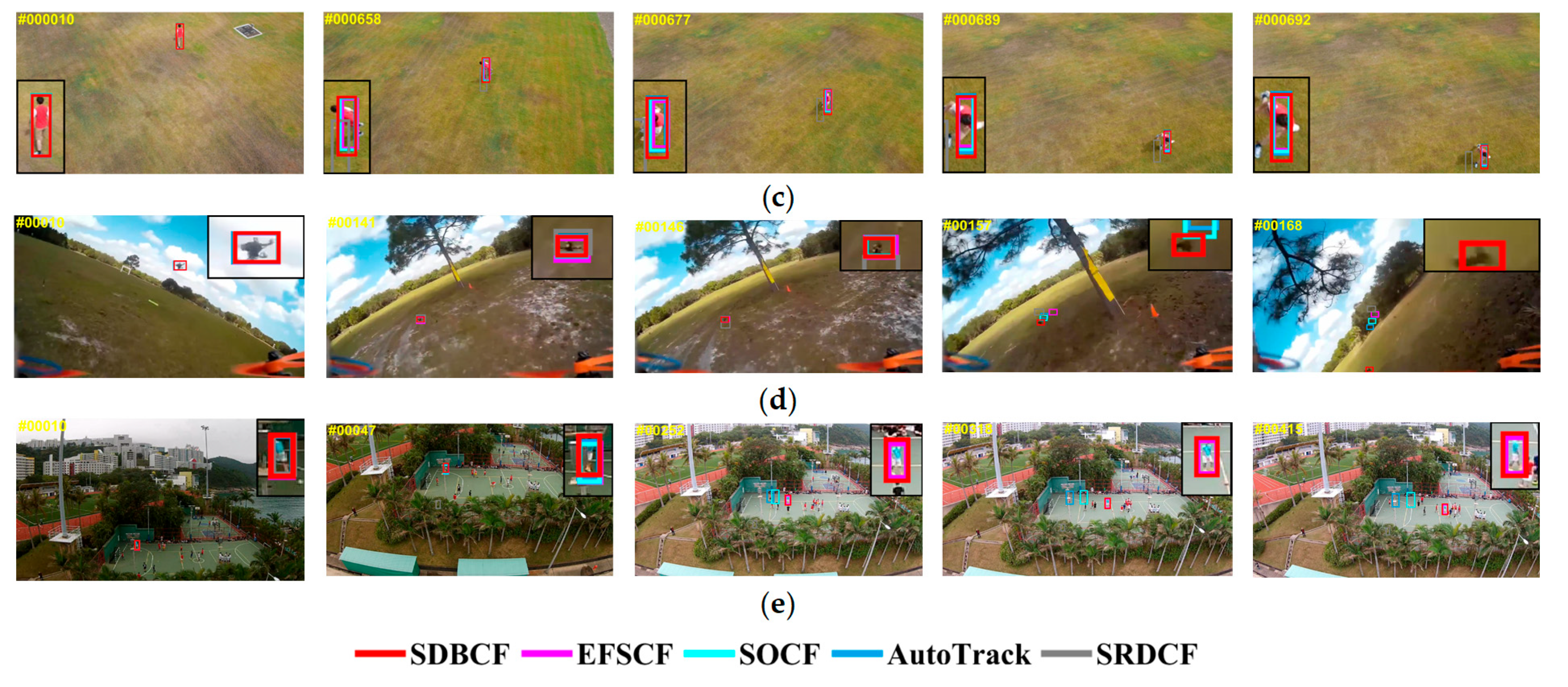

The performance of SDBCF was tested on the UAV123, UAV20L, and DTB70 datasets. The experimental results showed that the tracking accuracy and success rate of the tracker exceeded those of the existing mainstream trackers, and it exhibited superior performance in terms of various attributes, such as background clutter, occlusion, similar object interference, aspect ratio changes, scale changes, and camera motion, proving the effectiveness of the proposed method.
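The idea of a continuous scale space in contribution (3) can be sketched as follows. The interpolation scheme actually used by SDBCF is not given in this section, so the example assumes a simple three-point parabolic fit over the peak responses of a conventional discrete scale pyramid. The function name `continuous_scale` and the scale step of 1.02 are illustrative assumptions.

```python
def continuous_scale(scale_responses, step=1.02):
    """Refine the best discrete scale by fitting a parabola to its neighbors.

    scale_responses[i] is the peak filter response at scale
    step ** (i - mid), where mid is the index of the unscaled sample.
    Returns a scale factor that may lie between two discrete levels.
    """
    n = len(scale_responses)
    mid = n // 2
    best = max(range(n), key=scale_responses.__getitem__)
    offset = 0.0
    if 0 < best < n - 1:
        left, center, right = scale_responses[best - 1:best + 2]
        denom = left - 2.0 * center + right
        if denom < 0.0:  # strict local maximum: the parabola opens downward
            # Vertex of the parabola through (-1, left), (0, center), (1, right).
            offset = 0.5 * (left - right) / denom
    return step ** (best - mid + offset)
```

With a purely discrete search, the estimate can only take the values `step ** k` for integer `k`, so a slowly growing target makes it flip between neighboring levels. The fractional `offset` lets the estimate vary smoothly between levels instead.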

Next, Section 2 reviews the research status of the DCF algorithm, and Section 3 introduces the tracking framework and specific details of SDBCF. Section 4 presents the experimental results and an analysis of SDBCF and the comparative algorithms. Finally, Section 5 presents the conclusions of this article and the outlook for future work.

2. Related Work

This section briefly reviews the DCF trackers most relevant to our work, including trackers that optimize filter models, trackers that fuse features, trackers based on saliency detection, and trackers that optimize scale estimation strategies.

In recent years, researchers have made many attempts to optimize filter models and improve the robustness of filters in complex scenarios, with many impressive results. ARCF [20] adds a response map regularization term to the DCF framework, which constrains the response maps of adjacent frames to suppress filter distortion caused by factors such as occlusion, thereby improving the robustness of the tracker. RTSBACF [21] adds a sample suppression term to BACF to suppress disturbed information and proposes a sample compensation strategy to compensate for undisturbed information, effectively reducing background interference while fully utilizing undisturbed target and background information. BAASR [22] introduces background-aware information into the objective function and develops a novel spatial regularization term. This term integrates both the appearance information of the target and the reliability of the tracking results, and obtains spatial weight values through an energy function, effectively improving the tracking precision of the filter. SOCF [23] uses a spatial disturbance suppression strategy, which first uses the temporal information in the historical response maps to detect disturbances in the background. A spatial disturbance map is then constructed to suppress negative samples in the disturbed area, effectively separating the target from the background and making the filter focus more on the target itself during training. LDECF [24] proposes a dynamic sensitivity regularization term that constrains the forward-tracking and historical backtracking responses of the filter between consecutive frames, making the filter more flexible so that it can adapt both to rapid changes in the target and to highly dynamic backgrounds.

The adaptive fusion of features, which aims to describe the target more comprehensively and accurately, is a current research hotspot in DCF algorithms. LSVKSCF [25] uses a multi-expert feature fusion strategy in which multiple features are randomly combined and the target is tracked and predicted separately with each combination. The mutual overlap rate of the predicted bounding boxes and the self-overlap rate between historical frames are used to evaluate each feature, thereby achieving adaptive feature fusion. UOT [26] uses a two-step feature compression mechanism that reduces feature dimensionality by compressing redundant features and combines the compressed features based on the PSR (Peak-to-Sidelobe Ratio) [9] to characterize the target accurately. Zhang et al. [27] proposed a feature fusion method that first enhances the features using the PSR, making the response map smoother and its peaks more prominent, and then fuses the different features based on the enhanced responses so that the fused features better describe the target. SCSTCF [28] uses a binary linear programming feature fusion method that presets three fusion weights, fuses the response maps separately for each weight, and selects the weight that minimizes the L2 norm of the difference between the response maps before and after fusion as the final fusion weight, improving the adaptability of the tracker. DSTRCFT [29] uses a multi-memory tracking framework that combines different features to track targets in parallel and uses memory evaluation based on reliability scores to select the best memory for the final tracking result.
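Several of the methods above rely on the PSR as a confidence measure for a response map. A minimal sketch of the measure, and of one way it could weight per-feature response maps, is given below. The PSR definition follows the usual peak-minus-sidelobe-mean over sidelobe-standard-deviation form. The exclusion window size and the normalized-PSR weighting rule in `fuse_by_psr` are assumptions for illustration and are not taken from any of the cited trackers.

```python
import math


def peak_to_sidelobe_ratio(response, exclude=1):
    """PSR = (peak - mean(sidelobe)) / std(sidelobe).

    `response` is a 2-D list. The sidelobe is every cell outside the
    (2 * exclude + 1)-wide square window centered on the peak.
    """
    rows, cols = len(response), len(response[0])
    peak, pr, pc = max((response[r][c], r, c)
                       for r in range(rows) for c in range(cols))
    sidelobe = [response[r][c]
                for r in range(rows) for c in range(cols)
                if abs(r - pr) > exclude or abs(c - pc) > exclude]
    mean = sum(sidelobe) / len(sidelobe)
    var = sum((v - mean) ** 2 for v in sidelobe) / len(sidelobe)
    return (peak - mean) / (math.sqrt(var) + 1e-12)


def fuse_by_psr(responses):
    """Weight each feature's response map by its normalized PSR.

    Assumed fusion rule for illustration only. It expects at least one
    map with a nonzero PSR, otherwise the normalization divides by zero.
    """
    scores = [peak_to_sidelobe_ratio(r) for r in responses]
    total = sum(scores)
    weights = [s / total for s in scores]
    rows, cols = len(responses[0]), len(responses[0][0])
    fused = [[sum(w * r[i][j] for w, r in zip(weights, responses))
              for j in range(cols)] for i in range(rows)]
    return fused, weights
```

A response map with a sharp peak over a quiet sidelobe receives a larger weight than one with the same peak over a noisy sidelobe, so the more confident feature dominates the fused response.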

To improve the performance of trackers in complex scenes, many scholars have introduced image segmentation into target tracking in order to learn target and non-target regions separately. Saliency detection is the most common approach. DRCF [30] constructs a saliency-based dual regularized correlation filter tracker that integrates saliency information into both the spatial regularization and ridge regression terms to impose stronger penalties on non-target regions. SDCS-CF [31] uses a pixel-level saliency map generation method to adjust the weights of search area features, thereby achieving robust tracking and improving resistance to background interference. Zhang et al. [32] integrated a saliency enhancement term into the DCF framework to emphasize target information, enabling the tracker to highlight the target during tracking while suppressing background noise. TRSADCF [33] uses saliency information to distinguish between target and non-target regions in the response map, thereby improving the filter response in the target region. CSCF [34] combines saliency information with filters and integrates them into the DCF framework, improving tracking performance in complex scenes involving deformation, motion blur, and background clutter.

The result of scale estimation has a significant impact on tracker performance. When the tracking box is too large, the template contains too much background information; when it is too small, the template loses some target features, making it difficult to learn the appearance of the target fully and ultimately leading to tracking failure. Improving the accuracy of scale estimation is therefore also a research focus for DCF trackers. PSACF [35] uses a scale-adaptive method based on probability estimation, which estimates the target scale with a Gaussian distribution model and introduces the assumption of global and local scale consistency to recover the target scale. This method can handle scale changes without using multi-scale features. He et al. [36] proposed a variable scale learning method that introduces a variable scale factor, overcoming the limitation of the fixed scale factors in commonly used multi-scale search strategies, and uses multiple aspect ratios to compensate for the shortcomings of a constant aspect ratio. Combining variable scale factors with multiple aspect ratios yields a variable-scale, variable-aspect-ratio estimation method. FAST [37] proposes a scale search scheme that integrates the Average Peak-to-Correlation Energy (APCE) [38] into the displacement filter framework to achieve robust and accurate scale estimation. MCPF [37] uses a particle sampling strategy for target scale estimation, effectively addressing the problem of large scale variations. Chen et al. [39] proposed a two-dimensional scale space search method that estimates the width and height of the target separately, improving tracker performance when the target undergoes large changes in scale and aspect ratio.
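For reference, the APCE measure mentioned above can be computed from a response map as sketched below. The formula follows the standard definition (squared peak-to-minimum range divided by the mean squared deviation from the minimum). How FAST integrates it into the scale search is not described in this section and is not reproduced here. The function name `apce` and the small stabilizing constant are assumptions.

```python
def apce(response):
    """Average peak-to-correlation energy of a 2-D response map.

    APCE = (F_max - F_min)^2 / mean((F - F_min)^2). A single sharp peak
    gives a high value, while multiple peaks or a fluctuating map give
    a lower one.
    """
    values = [v for row in response for v in row]
    f_max, f_min = max(values), min(values)
    energy = sum((v - f_min) ** 2 for v in values) / len(values)
    return (f_max - f_min) ** 2 / (energy + 1e-12)
```

A map with one dominant peak scores higher than a map in which a second peak of equal height appears, which is why the measure is commonly used to judge whether a response is reliable.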

Compared with existing research, SDBCF is distinguished by taking the complexity and variability of drone target tracking scenarios fully into account and by using saliency methods to optimize multiple modules of the tracker collaboratively, thereby comprehensively enhancing robustness in complex environments. Specifically, in the feature fusion module, SDBCF explores each feature in both the spatial and temporal dimensions and fuses multiple features adaptively, enhancing the comprehensiveness and adaptability of the feature representation. In model construction, SDBCF uses saliency information to suppress interference from non-target areas and constrains the temporal rate of change of the filter to avoid model distortion, yielding a more robust filter model. In scale estimation, SDBCF not only adapts to changes in the target’s aspect ratio but also accurately estimates continuous changes in target scale, significantly improving the accuracy and flexibility of scale estimation.
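To make the phrase "constraints on the temporal rate of change in the filter" concrete, the sketch below contrasts a conventional first-order temporal penalty with a generic second-order difference penalty. The exact regularization term used by SDBCF is defined in Section 3 and is not reproduced here. The discrete second difference, the flattened-list representation of the filter, and the function names are assumptions for illustration.

```python
def first_order_penalty(h_t, h_prev):
    """Squared norm of h_t - h_prev: penalizes any change between frames."""
    return sum((a - b) ** 2 for a, b in zip(h_t, h_prev))


def second_order_penalty(h_t, h_prev, h_prev2):
    """Squared norm of h_t - 2 * h_prev + h_prev2.

    This is the discrete second difference over three consecutive filters.
    It penalizes changes in the rate of change rather than change itself.
    """
    return sum((a - 2.0 * b + c) ** 2 for a, b, c in zip(h_t, h_prev, h_prev2))
```

For a filter that drifts steadily, for example `[0, 0]`, `[1, 2]`, `[2, 4]` over three frames, the first-order penalty is nonzero while the second-order penalty is zero. A sudden jump, such as one caused by occlusion, is penalized by both, which is the intuition behind using earlier history and the filter change rate.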

5. Conclusions

This paper proposed SDBCF, a robust UAV target tracking algorithm based on saliency detection. Because UAV target tracking is easily affected by background clutter, similar object interference, camera motion, occlusion, scale changes, and other factors, improvement strategies for SRDCF were proposed in three areas: feature fusion, model construction, and scale estimation. In feature fusion, SDBCF fully exploits the spatial and temporal information of features to fuse them dynamically in complex scenes. In model construction, SDBCF integrates a dynamic spatial regularization term and a second-order differential regularization term into the DCF framework, improving the robustness of the filter in scenarios such as background clutter, similar object interference, and occlusion. In scale estimation, SDBCF adapts to changes in the target’s aspect ratio and can continuously estimate changes in target scale, effectively improving the tracker’s ability to cope with scale changes. SDBCF uses saliency detection to connect these modules, making full use of the saliency information. Comparative experiments on the UAV123, UAV20L, and DTB70 datasets showed that SDBCF achieved the best DP and AUC scores on all three datasets, with improvements of (4.1%, 5.0%), (9.9%, 15.4%), and (21.1%, 12.3%) over SRDCF, respectively. When facing similar object interference, background clutter, occlusion, scale variation, aspect ratio variation, camera motion, and similar challenges, the tracking performance of SDBCF is significantly better than that of the other tracking methods, demonstrating its superiority.

Although the tracking performance of SDBCF has improved significantly, SDBCF uses only handcrafted features and has a limited ability to represent targets, resulting in poor performance in, for example, low-resolution scenarios. As a next step, we will therefore consider introducing deep features into the feature fusion module to further improve tracking performance without significantly affecting tracking speed. The experiments also showed that SDBCF has poor robustness in scenarios such as complete occlusion and targets moving out of view. This is because SDBCF has no reliability detection or re-detection module and therefore cannot respond to tracking failures in a timely manner. We will also consider adding such modules. In addition, the scale estimation strategy proposed for SDBCF is in fact a general scale estimation method for DCF trackers, so we will conduct further experiments to investigate the performance of the scale estimation module on other trackers.