Abstract

Owing to their high efficiency and real-time performance, discriminative correlation filter (DCF) trackers have been widely applied in unmanned aerial vehicle (UAV) tracking. However, existing trackers remain insufficiently robust in complex scenes involving background clutter, occlusion, camera motion, and scale variation. To address this problem, this paper proposes a robust UAV target tracking algorithm based on saliency detection (SDBCF). Using saliency detection, the DCF tracker is optimized in three aspects to enhance its robustness in complex scenes: feature fusion, filter-model construction, and scale estimation. Firstly, this paper analyzes the features in both the spatial and temporal dimensions, evaluates the representational and discriminative abilities of different features, and achieves adaptive feature fusion. Secondly, this paper constructs a dynamic spatial regularization term using a mask that fits the target, and integrates it with a second-order differential regularization term into the DCF framework to construct a novel filter model, which is solved using the ADMM method. Thirdly, this paper uses saliency detection to supervise the aspect ratio of the target, and trains a scale filter in the continuous domain to improve the tracker’s adaptability to scale variations. Finally, comparative experiments were conducted with various DCF trackers on three UAV datasets: UAV123, UAV20L, and DTB70. The DP and AUC scores of SDBCF on the three datasets were (71.5%, 58.9%), (63.0%, 57.8%), and (72.1%, 48.4%), respectively. The experimental results indicate that SDBCF achieves superior performance.

1. Introduction

In recent years, drone technology has rapidly emerged as an important tool in various fields such as intelligent video surveillance [1,2], human–computer interaction [3], aviation reconnaissance [4], precision guidance [5], military strikes [6], etc., thanks to its advantages of a small size, a low cost, and flexible movement. With the continuous expansion of drone application scenarios, its autonomous navigation and target-tracking capabilities have become key technologies for improving overall performance. Robust target tracking algorithms not only ensure the stable recognition and localization of specific targets by drones in complex environments, but also provide real-time decision support during tasks such as search and rescue and border patrol. Therefore, research on unmanned aerial vehicle target tracking technology has broad application prospects [7].

However, in practice, achieving precise and robust tracking with drones remains challenging because of the complexity of application scenarios and the limitations of onboard platforms [8]. Firstly, unmanned aerial vehicles (UAVs) are highly maneuverable, so during tracking tasks they face complex and dynamic backgrounds, which makes precise target localization difficult. Secondly, in certain application scenarios, drones image the target from different angles, which changes the appearance of the target and requires trackers to have strong adaptability. In addition, rapid motion and changes in perspective can cause significant scale changes in the target during tracking, and the accuracy of scale estimation directly affects the tracking accuracy. Therefore, UAV target tracking algorithms also require high-precision scale estimation.

In recent years, scholars have conducted in-depth research and made significant progress in improving the performance of unmanned aerial vehicle target tracking algorithms. Although deep learning-based methods have demonstrated excellent performance in complex scenarios through learning the high-level semantic features of targets, their tracking accuracy is highly dependent on the training of large-scale annotated datasets, and the distribution characteristics of the training data limit the model’s generalization ability. In addition, the parameter debugging process of neural networks is relatively cumbersome. In contrast, the DCF-based method obtains the features of the target through the first frame of the image sequence and utilizes an online learning mechanism to update the target appearance model in real time, which means it can adapt to dynamic changes in the target appearance without relying on pre-trained datasets. At the same time, the number of parameters is relatively low and the physical meaning is clear. Researchers can adjust the parameters reasonably according to specific scene requirements. The above characteristics make the DCF algorithm more applicable in the field of unmanned aerial vehicle target tracking.

The core idea of a DCF-based tracker is to train a filter on the search area by minimizing the sum of squared errors between the ideal output and the actual output. Then, the filter is correlated with the search area of the next frame to obtain a response map. The position corresponding to the maximum value of the response map is the center of the target. MOSSE (Minimum Output Sum of Squared Error) [9] was the first technique to apply correlation filtering to the tracking field, converting time-domain correlation operations into frequency-domain element-wise multiplication, significantly reducing the computational complexity and achieving a tracking speed of 669 fps. This is the pioneering work of the DCF tracking algorithm. Subsequently, CSK (Circular Structure with Kernels) [10] cyclically shifted the training samples to obtain negative samples and established a relationship between correlation filtering and Fourier transform using the diagonalization of cyclic matrices in the Fourier domain, further improving the performance of the DCF tracker. Subsequently, KCF (Kernelized Correlation Filter) [11] introduced multi-channel HOG (Histogram of Oriented Gradient) features into DCF and transformed the problem of target tracking into a ridge regression problem, laying the foundation for the development of subsequent DCF tracking algorithms.
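The train-then-correlate loop described above can be illustrated with a minimal single-channel sketch in the style of MOSSE. It is not the implementation of any tracker cited here: the function names (`gaussian_label`, `train_filter`, `detect`), the label width `sigma`, and the regularizer `lam` are illustrative assumptions.

```python
import numpy as np

def gaussian_label(h, w, sigma=2.0):
    """Ideal output: a Gaussian peaked at the patch centre."""
    ys, xs = np.mgrid[0:h, 0:w]
    cy, cx = h // 2, w // 2
    return np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2 * sigma ** 2))

def train_filter(patch, label, lam=1e-2):
    """Closed-form least-squares filter in the Fourier domain.

    Returns the conjugate filter H* = (Y . conj(X)) / (X . conj(X) + lam),
    computed element-wise, so no spatial-domain correlation is needed.
    """
    X = np.fft.fft2(patch)
    Y = np.fft.fft2(label)
    return (Y * np.conj(X)) / (X * np.conj(X) + lam)

def detect(filter_hat, patch):
    """Correlate the filter with a new patch and return (response, peak)."""
    response = np.real(np.fft.ifft2(filter_hat * np.fft.fft2(patch)))
    peak = np.unravel_index(np.argmax(response), response.shape)
    return response, peak
```

If the next-frame patch is a circular shift of the training patch, the response peak moves from the patch centre by the same shift, which is how the target displacement is read off the response map.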

After KCF, research on DCF trackers mainly focused on fusing different features [12,13,14], establishing more robust appearance models [6,15,16], improving scale estimation strategies [17,18], optimizing model update mechanisms [9,19], and so on. Although these studies have improved the accuracy of trackers to varying degrees, there are still some issues when applied to the field of UAV target tracking, as follows: (1) UAV scenes often face highly dynamic changes and high-complexity background environments, and therefore require feature representations with higher discriminability and stronger representation capabilities. Discriminative ability refers to the ability of features to distinguish between targets and backgrounds in the spatial dimension, while representational ability refers to the ability of features to adapt to changes in the appearance of targets in the temporal dimension. However, many trackers simply stack multiple features or fuse them in fixed proportions, without exploring the spatial and temporal connections between different features and achieving the adaptive fusion of multiple features in different scenarios. Therefore, the advantages of different features cannot be fully utilized, which, to some extent, limits the performance of trackers. (2) Most existing DCF methods for improving tracker models are based on spatial and temporal regularization terms. The spatial regularization term can effectively suppress the boundary effects caused by cyclic operations, while the temporal regularization term can enhance the temporal continuity of the filter and avoid distortion of the filter model due to factors such as occlusion. Both effectively improve the performance of the tracker. However, there is still room for improvement in existing methods. 
The spatial regularization term does not pay enough attention to the target, making the tracker susceptible to interference from background clutter, similar objects, and other factors in complex and changing scenes. The temporal regularization term only utilizes the information of adjacent frames of the filter, without fully utilizing earlier historical information and the information of the filter change rate, which, to some extent, limits the anti-distortion ability of the tracker. (3) The improvement in scale filters achieved using the DCF algorithm mostly focuses on implementing aspect ratio adaptation, ignoring the problem of discontinuous scale resolution caused by multi-scale sampling. In some scenarios, drones need to track and aim at targets ranging from far to near, and the scale of the targets will slowly change during this process. When the target scale is scaled between two fixed scale resolutions, due to computational accuracy, the estimated scale of the filter will switch back and forth between the two scales, causing jitter in the center and frame of the target and seriously affecting the robustness of the tracker. In addition, recently, many scholars have introduced saliency detection into DCF trackers, used it to select feature channels, or used it to construct spatial regularization terms. Many studies have shown that the reasonable use of saliency information can improve the tracking accuracy of filters. However, most of these studies utilize saliency information to improve one aspect of the filter, and few scholars have introduced saliency information into multiple aspects of the filter simultaneously to integrate the performance of the tracker, resulting in the underutilization of saliency information.

In response to the above issues, this paper proposes a robust UAV target tracking algorithm based on saliency detection (SDBCF), which integrates saliency information into multiple modules, namely feature fusion, model construction, and scale estimation, to comprehensively improve the robustness of the tracker. The main contributions of this paper are as follows:

- (1) A dynamic feature fusion scheme is proposed. Based on the saliency information and historical information of features, the discriminative and representational abilities of each feature are quantified, enabling the dynamic fusion of features in different scenarios and improving the robustness of the tracker in scenarios such as background clutter and deformation.

- (2) A robust filter model is constructed. By utilizing saliency detection, the filter model is guided to focus on learning target information in the spatial dimension, while also reasonably learning background information, which enhances the discriminative ability of the filter in scenarios such as background clutter. A second-order differential regularization term constrains the temporal rate of change of the filter, which improves the robustness of the filter in scenarios such as occlusion and camera motion. Solving the model with the ADMM method improves the tracking speed.

- (3) A high-precision scale estimation strategy is proposed. The aspect ratio of the target is adapted using saliency detection, and a continuous scale space is constructed by interpolation to estimate continuous scale changes in the target, which improves the robustness of the tracker in scale-change scenarios.

- (4) The performance of SDBCF was tested on the UAV123, UAV20L, and DTB70 datasets. The experimental results showed that the tracking accuracy and success rate of the tracker exceeded those of the existing mainstream trackers, and that it exhibited superior performance on various attributes, such as background clutter, occlusion, similar object interference, aspect ratio changes, scale changes, and camera motion, proving the effectiveness of the proposed method.

Next, in Section 2, this paper first reviews the research status of the DCF algorithm, and then introduces the tracking framework and specific details of SDBCF in Section 3. Section 4 presents the experimental results and an analysis of SDBCF, as well as comparative algorithms. Finally, the conclusion of this article and the outlook for the future are presented.

2. Related Work

This section provides a brief review of the most relevant DCF trackers to our work, including trackers that optimize filter models, trackers that fuse features, trackers based on saliency detection, and trackers that optimize scale estimation strategies.

In recent years, researchers have made many attempts to optimize filter models and improve the robustness of filters in complex scenarios and have achieved many impressive results. ARCF [20] adds a response graph regularization term to the DCF framework, which constrains the response graphs between adjacent frames to suppress filter distortion caused by factors such as occlusion, thereby improving the robustness of the tracker. RTSBACF [21] includes a sample suppression term based on BACF to suppress interfered-with information, and proposed a sample compensation strategy to compensate for undisturbed information, effectively reducing background interference and fully utilizing undisturbed target and background information. BAASR [22] introduces background-aware information into the objective function and develops a novel spatial regularization term. This regularization term integrates both the appearance information of the target and the reliability of the tracking results, and obtains spatial weight values through an energy function, effectively improving the tracking precision of the filter. SOCF [23] uses a spatial disturbance suppression strategy, which first utilizes the temporal information in the historical response map to detect disturbance information in the background. Then, a spatial disturbance map is constructed to suppress negative samples in the disturbance area, effectively separating the target from the background and making the filter focus more on the target itself during training. LDECF [24] proposes a dynamic sensitivity regularization term that constrains the forward-tracking and historical backtracking response of the filter between consecutive frames, making the filter more flexible. This can not only adapt to rapid changes in the target, but can also adapt to highly dynamic background environments.

The adaptive fusion of features is one of the current research hotspots in DCF algorithms, with the aim of providing a more comprehensive and accurate description of the target. LSVKSCF [25] proposes a multi-expert feature fusion strategy. In this method, multiple features are randomly combined, and the target is tracked and predicted separately with each combination. The mutual overlap rate of the predicted bounding boxes and the self-overlap rate between historical frames are used to evaluate each feature, thereby achieving an adaptive fusion of features. UOT [26] uses a two-step feature compression mechanism that reduces feature dimensionality by compressing redundant features, while combining multiple compressed features based on the PSR (Peak to Sidelobe Ratio) [9] to accurately characterize the target. Zhang et al. [27] proposed a feature fusion method that first enhances the features using the PSR, making the response map smoother and the peaks more prominent. Then, different features are fused based on the enhanced response, so that the fused features can better describe the target. SCSTCF [28] uses a binary linear programming feature fusion method, which presets three fusion weights, fuses the response maps separately for each weight, and selects the weight with the minimum L2 norm of the difference between the response maps before and after fusion as the final fusion weight, improving the adaptability of the tracker. DSTRCFT [29] uses a multi-memory tracking framework that combines different features to track targets in parallel, and uses memory evaluation based on reliability scores to select the best memory for the final tracking results.

In order to improve the performance of trackers in complex scenes, many scholars have introduced image segmentation into the field of target tracking, which is used to learn target and non-target regions separately. Saliency detection is the most common method. DRCF [30] constructs a saliency-based dual regularized correlation filter tracker, integrating saliency information into both the spatial regularization and ridge regression terms to impose stronger penalties on non-target regions. SDCS-CF [31] uses a pixel-level saliency map generation method to adjust the weights of search area features, thereby achieving robust tracking and improving resistance to background interference. Zhang et al. [32] integrated a saliency enhancement term in the DCF framework to emphasize target information, enabling the tracker to effectively highlight the target during tracking while suppressing background noise. TRSADCF [33] uses saliency information to distinguish between target and non-target regions in the response map, thereby improving the filter response in the target region. CSCF [34] combines saliency information with filters and integrates them into the DCF framework, improving the tracking performance of trackers in complex scenes such as deformation, motion blur, and background clutter.

The results of scale estimation have a significant impact on the performance of trackers. When the tracking box is too large, the template will contain too much background information; when the tracking box is too small, the template will lose some target features, making it difficult for it to fully learn the appearance of the target, ultimately leading to tracking failure. Therefore, improving the accuracy of scale estimation is also a research focus of DCF trackers. PSACF [35] uses a scale adaptive method based on probability estimation, which utilizes a Gaussian distribution model to estimate the target scale and introduces the assumption of global and local scale consistency to restore the target scale. This method can handle scale changes without using multi-scale features. He et al. [36] proposed a variable scale learning method that introduces a variable scale factor, overcoming the limitations of fixed scale factors in the commonly used multi-scale search strategies. Then, multi-scale aspect ratios compensate for the shortcomings of constant aspect ratios. By combining variable scale factors with multiple aspect ratios, a variable scale aspect ratio estimation method is implemented. FAST [37] proposed a scale search scheme that integrates Average Peak Correlation Energy (APCE) [38] into the displacement filter framework to achieve robust and accurate scale estimation. MCPF [37] utilizes a particle sampling strategy for the scale estimation of targets, effectively addressing the problem of large-scale variations. Chen et al. [39] proposed a two-dimensional scale space search method that detects the width and height of the target separately, improving the performance of the tracker in scenarios with large-scale and vertical/horizontal changes in the target.

Compared to the existing research, the uniqueness of SDBCF lies in its deep integration of the complexity and variability of drone target tracking scenarios, and the implementation of the collaborative optimization of multiple modules of the tracker through saliency methods, thereby comprehensively enhancing the robustness of the tracker in complex environments. Specifically, in the feature fusion module, SDBCF fully explores the spatial and temporal dimensions of each feature, achieving the adaptive fusion of multiple features, and thereby enhancing the comprehensiveness and adaptability of feature representation. In terms of model construction, SDBCF effectively suppresses interference from non-target areas using saliency information, and by imposing constraints on the temporal rate of change in the filter, it effectively avoids model distortion and constructs a more robust filter model. In terms of scale estimation, the SDBCF algorithm not only adapts to changes in the aspect ratio of the target, but also accurately estimates continuous changes in the target scale, significantly improving the accuracy and flexibility of scale estimation.

3. Proposed Method

In this section, we first review the basic framework of the DCF tracker. Then, we provide a detailed introduction to the SDBCF we propose.

3.1. Conventional Correlation Filter Learning

3.1.1. Displacement Filter

Traditional DCF trackers typically consist of a displacement filter and a scale filter. Firstly, the displacement filter is used to estimate the target position, and then the scale filter is used to estimate the target scale. Our displacement filter is optimized based on SRDCF. SRDCF is typically trained by minimizing the objective function in Equation (1):

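$$
E(f_t) = \frac{1}{2}\left\| \sum_{k=1}^{K} x_t^{k} \star f_t^{k} - y \right\|_2^2 + \frac{1}{2}\sum_{k=1}^{K} \left\| w \odot f_t^{k} \right\|_2^2 \tag{1}
$$

where $x_t \in \mathbb{R}^{M \times N \times K}$ is the sample feature of size $M \times N$ extracted in frame $t$, $K$ represents the number of feature channels, $y$ is the expected Gaussian-shaped response, $f_t^{k}$ represents the filter of the $k$-th channel trained in frame $t$, and $\star$ represents the discrete cyclic correlation operator. The second term is the spatial regularization term, $w$ is the weight of the bowl-shaped static spatial regularization term, and $\odot$ represents the Hadamard product.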

3.1.2. Scale Filter

Most DCF tracking algorithms use a one-dimensional scale filter to estimate the scale of the target. Specifically, let represent the initial frame target size, while represents the number of scale pyramids. For each , an image block of size is extracted from the position estimated by the displacement filter as the center to form a scale pyramid. represents the scale factor between feature layers. All image blocks are scaled to size ; -dimensional features are extracted separately, and used as the values of training sample at scale , where is the number of samples contained in the corresponding features of each image block. After convolving the scale filter with the features of each scale to obtain the response values, the final response function can be obtained by summing the response values along the interior of each scale. The scale filter is achieved by minimizing the objective function in Equation (2):

where is the expected output response and is the regularization parameter that controls overfitting.
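The scale pyramid described above can be sketched as follows, using the notation common for one-dimensional scale filters: a target size, a number of scale levels, and a scale factor raised to integer powers centred on zero. The function name `scale_pyramid_sizes` and the defaults `num_scales=33` and `scale_step=1.02` are assumptions chosen for illustration, not values stated in this paper.

```python
import numpy as np

def scale_pyramid_sizes(target_size, num_scales=33, scale_step=1.02):
    """Return the scale factors a^n and the patch sizes a^n * target_size.

    The exponents n are centred on zero, so the middle level reproduces
    the current target size.
    """
    exponents = np.arange(num_scales) - (num_scales - 1) / 2.0
    factors = scale_step ** exponents
    sizes = np.outer(factors, np.asarray(target_size, dtype=float))
    return factors, sizes
```

Each patch is then resized to a common size before feature extraction, so the scale filter sees one feature vector per level.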

3.2. Robust UAV Target Tracking Algorithm Based on Saliency Detection

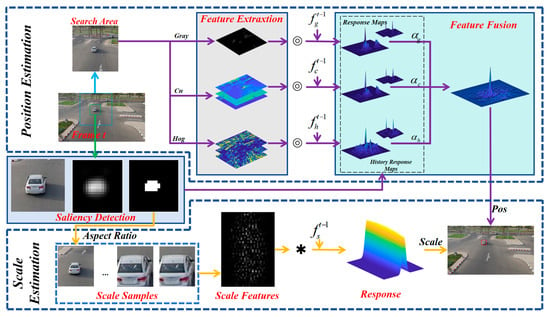

The tracking flow of SDBCF is shown in Figure 1, which is divided into two parts: position estimation and scale estimation. In the frame of the image sequence, we first extract features from the search area and perform saliency detection around the target to obtain a mask, which fits the appearance of the target. Then, the features are correlated with the displacement filter trained in frame to obtain the response map corresponding to each feature. The response maps are fused using the spatial and temporal information of features and the target position is estimated based on the fused response map. Subsequently, near the estimated target position, image blocks of different scales are extracted based on the aspect ratio provided by the mask map to obtain discrete scale features. These features are interpolated to extend to the continuous domain and convolved with the scale filter trained in frame to obtain the response map of the continuous domain, which is used to estimate the target scale.

Figure 1. Flowchart of the proposed algorithm. The purple line represents the process related to position estimation, and the yellow line represents the process related to scale estimation.

The objective function of SDBCF is shown in Equation (3):

In the formula, is the dynamic spatial regularization term. is the weight to be optimized, is the reference weight during the training process, is the mask image that fits the target, is the static spatial regularization term inherited from the SRDCF tracker, and is the spatial regularization term parameter. is a second-order differential regularization term, where , . is the coefficient of the differential regularization term.

Here, we did not directly involve the dynamic spatial regularization term obtained by multiplying the mask with the static spatial regularization term in the filter training. Instead, we added it as a reference term to the objective function, which allows the filter to appropriately learn some background information during the training process, thereby improving the robustness of the tracker. Meanwhile, unlike typical temporal regularization terms, second-order differential regularization terms suppress the rate of change in the filter and have a stronger inhibitory effect on sudden changes in the appearance model caused by factors such as occlusion and camera motion, which can further enhance the robustness of the filter.
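The difference between a typical temporal regularization term and a second-order one can be made explicit. The following is a sketch under assumed notation, not a reproduction of Equation (3): $f_t$ denotes the filter in frame $t$, and $\mu$ is a hypothetical coefficient.

```latex
% First-order (typical temporal regularization): penalizes the change of the filter
\frac{\mu}{2}\left\| f_t - f_{t-1} \right\|_2^2

% Second-order: penalizes the change of the change, with
% \Delta f_t = f_t - f_{t-1} and \Delta f_{t-1} = f_{t-1} - f_{t-2}
\frac{\mu}{2}\left\| \Delta f_t - \Delta f_{t-1} \right\|_2^2
  = \frac{\mu}{2}\left\| f_t - 2 f_{t-1} + f_{t-2} \right\|_2^2
```

Under this form, a filter that drifts at a steady rate incurs no penalty, whereas an abrupt jump, such as one caused by occlusion, is penalized, and the term draws on two historical frames rather than one.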

3.2.1. Saliency Detection

We use the method proposed in reference [40] to perform saliency detection on the target and generate a target mask image. This method starts from the principle of natural image statistics, analyzes the logarithmic spectrum of the image to be detected, obtains spectral residuals, and then converts the spectral residuals to the spatial domain to obtain saliency maps.

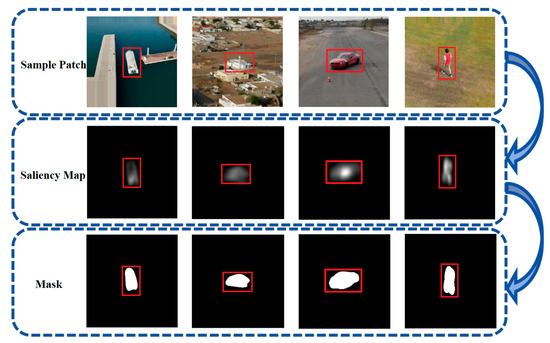

Saliency detection is performed on the search area to obtain the initial saliency map. A cosine window is then applied to the initial saliency map, suppressing salient regions far from the center of the target, to obtain the saliency map of the current frame. This saliency map is then binarized to obtain a mask that fits the appearance of the target. Figure 2 shows some of the detection results.

Figure 2. Mask generation process. From top to bottom: sample patch, saliency map, and mask. From left to right: sequences boat1, building4, car16, and person5 in the UAV123 dataset. The red box represents the target area.
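The mask pipeline above (spectral residual saliency, cosine window, binarization) can be sketched in NumPy. Several details are assumptions: a box blur stands in for the smoothing filters, the threshold `threshold_ratio` is a hypothetical relative threshold because the binarization rule is not specified here, and all function names are illustrative.

```python
import numpy as np

def box_blur(img, k=3):
    """k x k mean filter with edge padding (k must be odd)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def spectral_residual_saliency(gray):
    """Saliency from the residual of the log-amplitude spectrum."""
    spectrum = np.fft.fft2(gray.astype(float))
    log_amp = np.log(np.abs(spectrum) + 1e-8)
    phase = np.angle(spectrum)
    # Spectral residual: log spectrum minus its local average.
    residual = log_amp - box_blur(log_amp, 3)
    # Back to the spatial domain, keeping the original phase.
    saliency = np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2
    return box_blur(saliency, 5)  # box blur used here as a simple smoother

def saliency_mask(gray, threshold_ratio=0.5):
    """Cosine-window the saliency map, normalize it, and binarize it."""
    sal = spectral_residual_saliency(gray)
    h, w = sal.shape
    window = np.outer(np.hanning(h), np.hanning(w))  # suppresses far regions
    sal = sal * window
    sal = sal / (sal.max() + 1e-12)
    return sal >= threshold_ratio
```

Because the cosine window is zero on the border, salient distractors at the edge of the search area cannot enter the mask, which matches the suppression step described above.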

3.2.2. Feature Fusion

In order to better utilize different features, SDBCF fully explores the spatial and temporal information of features, dynamically fuses them based on their discriminative and representational abilities in different scenarios, and maximizes the potential of multiple features in the tracking process. Specifically, SDBCF trains one filter each on grayscale features, HOG features, and CN (color names) features, denoted $f_{gray}$, $f_{hog}$, and $f_{cn}$, respectively. In each frame, $f_{gray}$, $f_{hog}$, and $f_{cn}$ perform correlation operations with the search area to obtain the corresponding response maps $R_{gray}$, $R_{hog}$, and $R_{cn}$. Then, $R_{gray}$, $R_{hog}$, and $R_{cn}$ are fused and the target is located based on the fused response map. The fusion process is shown in Equation (4):

$$
R = \gamma_{gray} R_{gray} + \gamma_{hog} R_{hog} + \gamma_{cn} R_{cn} \tag{4}
$$

where $R$ represents the fused response map, while $\gamma_{gray}$, $\gamma_{hog}$, and $\gamma_{cn}$ represent the fusion coefficients corresponding to $R_{gray}$, $R_{hog}$, and $R_{cn}$, respectively. As mentioned earlier, the fusion coefficients are related to both the discriminative and representational abilities of the features.

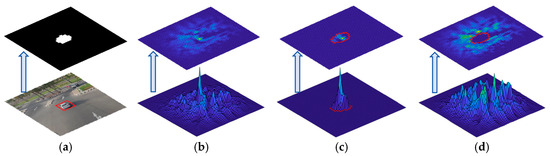

Discriminative Ability

When evaluating the discriminative ability of features, we used the mask generated by saliency detection (Figure 3a) to divide the feature response (Figure 3b) into the target area (Figure 3c) and non-target area (Figure 3d), and determined the discriminative ability score for each feature based on the response ratio of the target area to the non-target area.

Figure 3. (a) Generated mask for search area; (b) search area response map; (c) target area response map; (d) non-target area response map.

Obviously, the higher the response ratio between the target area and the non-target area, the stronger the discriminative ability of the feature, and a higher discriminative score should be assigned to the feature. The discriminative ability score for each feature is as follows:

represents the variable representing grayscale features, hog features, or cn features, represents the target area, represents the non target area, represents the response value of the target area, and represents the response value of the non-target area. Their definitions are shown in Equations (6) and (7):

In the formula, represents the response value at the corresponding position in the response map, and represents the number of pixels in the corresponding area. It is worth noting that we only counted the positive values in the response graph here, as negative response values are meaningless in correlation filtering tracking [41].
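A minimal sketch of the discriminative score follows, assuming it is the ratio of the mean positive response inside the mask to the mean positive response outside it, with each mean taken over all pixels of its region. The function name `discriminative_score` and the small constant `eps` are illustrative, and the exact form of the paper's score may differ.

```python
import numpy as np

def discriminative_score(response, mask, eps=1e-12):
    """Ratio of mean positive response in the target vs. non-target area."""
    positive = np.clip(response, 0.0, None)      # negative responses are ignored
    n_target = max(int(mask.sum()), 1)           # pixels in the target area
    n_background = max(int((~mask).sum()), 1)    # pixels in the non-target area
    target_mean = positive[mask].sum() / n_target
    background_mean = positive[~mask].sum() / n_background
    return target_mean / (background_mean + eps)
```

A feature whose response is concentrated on the target and nearly flat elsewhere receives a large score, while a feature that also responds strongly to background clutter receives a score close to 1.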

Representational Ability

During the continuous appearance changes in the target, if a certain feature can always accurately predict the target position, it has strong robustness and a correspondingly strong representation ability. Therefore, we combined the historical information of the features and evaluated the representational ability of each feature based on the consistency between its predicted position and the final predicted position of the target. Specifically, we used , , and to predict the target position separately, and then compared the predicted results with the final predicted results of the target. The smaller the error, the higher the consistency between the feature prediction results and the final prediction results; thus a larger representation score would be assigned. Here, we use the center distance error to measure the prediction error:

represents the variable representing grayscale features, hog features, or cn features, represents the predicted positions of three features, and represents the final predicted position. The consistency between each feature prediction position and the final prediction position is expressed as follows:

The representational ability of each feature can be expressed as follows:

In the formula, represents the weight of adjusting the proportion of historical consistency and current frame consistency; represents the number of historical frames involved in the consistency evaluation.

After obtaining the discriminative and representational scores for each feature, the fusion coefficient is expressed as follows:

is the weight coefficient of the fusion of the discriminative ability score and the representational ability score. The fusion coefficient of each feature can be normalized to obtain the final fusion coefficient.
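The scoring steps of this subsection can be sketched as follows. Only the center distance error and the final normalization are taken directly from the text. The exponential mapping in `consistency`, the weighted average in `representational_score`, and the linear combination in `fusion_coefficients` are assumptions made for illustration, as are the parameter names `scale`, `omega`, and `eta`.

```python
import numpy as np

def center_distance(pred, final):
    """Euclidean distance between a feature's prediction and the final position."""
    return float(np.hypot(pred[0] - final[0], pred[1] - final[1]))

def consistency(pred, final, scale=10.0):
    """Assumed mapping: smaller error gives consistency closer to 1."""
    return float(np.exp(-center_distance(pred, final) / scale))

def representational_score(history, current, omega=0.5):
    """Blend the mean consistency of past frames with the current frame."""
    past = float(np.mean(history)) if len(history) else current
    return omega * past + (1.0 - omega) * current

def fusion_coefficients(disc_scores, rep_scores, eta=0.5):
    """Combine both scores per feature and normalize so they sum to 1."""
    disc = np.asarray(disc_scores, dtype=float)
    rep = np.asarray(rep_scores, dtype=float)
    disc = disc / disc.sum()   # put both scores on a comparable scale
    rep = rep / rep.sum()
    raw = eta * disc + (1.0 - eta) * rep
    return raw / raw.sum()
```

In use, `history` would hold the consistency values of one feature over the most recent frames, and the three resulting coefficients would weight the grayscale, HOG, and CN response maps.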

3.2.3. Algorithm Optimization

To solve the objective function (3), the relaxation variable , Lagrange multiplier , and step parameter are introduced to obtain the augmented Lagrange function of Equation (3):

If is defined as the dual variable of scaling, the above equation can be transformed into

Using the alternating direction method of multipliers (ADMM), the above equation can be decomposed into the following subproblems to obtain a solution:

Among them, is the number of iterations.

(1) Subproblem : using Parseval’s theorem and the convolution theorem, this can be transformed into the Fourier domain:

Here, for easier explanation, superscript is omitted. represents complex conjugation, and represents discrete Fourier transform (DFT) with symmetry.

It can be observed that the row and column elements of the ideal Gaussian Fourier transform are only related to the row and column elements corresponding to the overall channel and the feature sample . Let , concatenate the elements on all channels together, and decompose the above equation into independent subproblems, each of which takes the following form:

By taking the derivative of and setting it to 0, taking the identity matrix as , and using the Sherman–Morrison formula, we can obtain the following solution:

(2) Subproblem : since subproblem does not involve convolution operations in the time domain, the derivative of can be directly set to zero and solved in the time domain. Its closed form solution is as follows:

(3) Subproblem :

(4) Iterative update: in each iteration, is updated using the last line of Equation (14). Step parameter is updated as follows:

is the maximum value of and is the step size factor.
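Two generic ingredients of this ADMM solution can be checked numerically: the Sherman–Morrison formula, which turns the per-pixel linear system into an O(K) computation over K channels, and the capped geometric growth of the step parameter. The sketch below is not the paper's closed-form solution. It solves a generic system of the form (ρI + x xᴴ) g = b, and the names `solve_rank_one` and `next_step` and the default values of `beta` and `rho_max` are illustrative assumptions.

```python
import numpy as np

def solve_rank_one(x, b, rho):
    """Solve (rho * I + x x^H) g = b with the Sherman-Morrison formula.

    (rho*I + x x^H)^{-1} = (1/rho) * (I - x x^H / (rho + x^H x)),
    so no K x K matrix is ever formed or inverted.
    """
    xhb = np.vdot(x, b)          # x^H b (vdot conjugates its first argument)
    xhx = np.vdot(x, x).real     # x^H x, real and non-negative
    return (b - x * (xhb / (rho + xhx))) / rho

def next_step(rho, beta=10.0, rho_max=1e4):
    """Grow the step parameter geometrically, capped at rho_max."""
    return min(beta * rho, rho_max)
```

The rank-one structure arises because, at each frequency, the data term couples the channels only through the sample vector, so the system matrix is a scaled identity plus an outer product.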

3.2.4. Object Localization and Model Update

SDBCF trains and updates , , and separately. In each frame, the optimal filter obtained from the previous frame is used to calculate the response map , as shown in Equation (21):

is the inverse Fourier transform, . Then, using Equation (4), the response map is fused to obtain the final response map . The position corresponding to the maximum value of is the center of the target. The filter is updated using Equation (22):

is the learning rate.
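The localization and update steps can be sketched as follows. This is a simplified illustration, not the paper's implementation: it assumes the response is the inverse FFT of the channel-wise sum of the conjugated filter times the feature spectrum, and that the model update is a linear interpolation with the learning rate. The template itself stands in for a trained filter.

```python
import numpy as np

def response_map(filter_hat, feat_hat):
    """Correlation response from Fourier-domain arrays of shape (H, W, channels)."""
    spectrum = np.sum(np.conj(filter_hat) * feat_hat, axis=2)
    return np.real(np.fft.ifft2(spectrum))

def locate_peak(response):
    """Row and column of the maximum response value."""
    row, col = np.unravel_index(np.argmax(response), response.shape)
    return int(row), int(col)

def update_model(old_model, new_model, learning_rate):
    """Linear interpolation between the previous model and the new estimate."""
    return (1.0 - learning_rate) * old_model + learning_rate * new_model

# Toy example: the "target" is a random template circularly shifted by (3, 5).
rng = np.random.default_rng(1)
template = rng.standard_normal((16, 16, 2))
shifted = np.roll(template, shift=(3, 5), axis=(0, 1))
filter_hat = np.fft.fft2(template, axes=(0, 1))
feat_hat = np.fft.fft2(shifted, axes=(0, 1))
peak = locate_peak(response_map(filter_hat, feat_hat))
```

In this toy setting the peak of the response map recovers the displacement of the template, which is the quantity the tracker uses to re-center the target.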

3.2.5. Scale Estimation Strategy

To improve the robustness of the tracker in scenarios with continuous scale changes, the scale must be estimated continuously rather than on a fixed grid of scale levels. Reference [13] proposed a theoretical framework that obtains a continuous-domain response function and achieves sub-pixel localization of the target. Taking inspiration from reference [13], SDBCF trains a scale filter in the continuous domain that maps scale samples to response functions in the continuous domain, thus removing the limitation of a fixed scale resolution.

Aspect Ratio Adaptive

After obtaining the target mask image through saliency detection in Section 3.2.1, we took the minimum bounding rectangle for the target area in the mask image and then used the aspect ratio of the minimum bounding rectangle as the aspect ratio of the current frame target. It is worth mentioning that to prevent failure in saliency detection, we also added an anomaly detection step. When the new aspect ratio and the aspect ratio of the previous frame met , we updated the aspect ratio of the target. Otherwise, we did not update it.
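A minimal sketch of this step is given below. It assumes an axis-aligned bounding rectangle and a relative-change test against a threshold; the actual acceptance condition is not reproduced in this text, so `max_change` and the test itself are hypothetical.

```python
import numpy as np

def mask_aspect_ratio(mask):
    """Width / height of the axis-aligned bounding box of nonzero pixels, or None."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return None  # empty mask: saliency detection found nothing
    height = rows[-1] - rows[0] + 1
    width = cols[-1] - cols[0] + 1
    return float(width / height)

def update_aspect_ratio(prev_ratio, mask, max_change=0.2):
    """Accept the new ratio only if it changes by at most `max_change` (relative).

    ASSUMPTION: the anomaly check is a relative-change threshold.
    """
    new_ratio = mask_aspect_ratio(mask)
    if new_ratio is None:
        return prev_ratio
    change = abs(new_ratio - prev_ratio) / prev_ratio
    return new_ratio if change <= max_change else prev_ratio

mask = np.zeros((10, 10), dtype=bool)
mask[2:6, 1:9] = True  # a 4-row by 8-column salient region
```

For example, `update_aspect_ratio(1.8, mask)` accepts the new ratio of 2.0, while `update_aspect_ratio(1.0, mask)` rejects it as an implausible jump and keeps 1.0.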

Training Continuous Scale Filters

To overcome the limited scale resolution, the filter is trained in the continuous domain to achieve more accurate scale estimation. Reference [13] proposed a theoretical framework that extends feature maps of different resolutions to a continuous spatial domain of the same period through interpolation, achieving the fusion of multi-resolution features. Inspired by reference [13], we interpolated the scale samples and extended them to the continuous spatial domain. At the same time, we trained a two-dimensional scale filter in the continuous domain to map the scale samples to a response function on the continuous domain, thereby estimating continuous scale changes.

Firstly, we assumed there were two variables , , and the space where the square integrable periodic function with periods and corresponding to the two variables was located is . Let be the standard orthogonal basis of , where . For the complex valued function within , its Fourier coefficients were defined as . For clarity, square brackets were used for functions with discrete domains. Any can be represented by its Fourier series as . For function , there is , where the bar represents complex conjugation. The Fourier coefficients satisfy Parseval’s theorem , where , . Fourier coefficients satisfy the following convolution properties: . Among them, represents the continuous convolution operator.
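Parseval's theorem and the convolution property can be checked numerically on sampled periodic signals. The sketch below uses a one-dimensional discrete analogue, with DFT coefficients scaled by the number of samples, rather than the two-variable continuous setting described above; all names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 64
g = rng.standard_normal(n)
h = rng.standard_normal(n)

# Fourier coefficients of the sampled periodic signals (DFT scaled by 1/n).
g_hat = np.fft.fft(g) / n
h_hat = np.fft.fft(h) / n

# Parseval: the inner product is the same in both domains.
inner_signal = np.mean(g * h)
inner_fourier = np.sum(g_hat * np.conj(h_hat)).real

# Convolution property: coefficients of the periodic convolution are products.
conv = np.array([sum(g[j] * h[(m - j) % n] for j in range(n)) / n
                 for m in range(n)])
conv_hat = np.fft.fft(conv) / n
```

Here `inner_signal` matches `inner_fourier`, and `conv_hat` matches `g_hat * h_hat` up to floating-point error, which is what allows the training objective to be rewritten coefficient by coefficient.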

Feature sample is composed of a discrete scale pyramid as a function indexed by the discrete variable . To interpolate feature sample , an interpolation operator of is defined:

The continuous domain represents the spatial range of feature samples and scalar represent the size of the space. In practice, are arbitrary; they only represent the scaling of the coordinate system. is the interpolation function. Here, the interpolation function is the superposition of the scaled and translated versions of the cubic interpolation kernel function :
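The interpolation operator can be illustrated in one dimension. The sketch below assumes the Keys cubic convolution kernel with parameter -0.5 and periodic wrap-around; the paper's kernel definition is not reproduced in this text, so the kernel choice, the function names, and the sample values are assumptions.

```python
import numpy as np

def cubic_kernel(s, a=-0.5):
    """Keys cubic convolution kernel (ASSUMED choice), supported on |s| < 2."""
    s = np.abs(np.asarray(s, dtype=float))
    near = (a + 2) * s**3 - (a + 3) * s**2 + 1
    far = a * s**3 - 5 * a * s**2 + 8 * a * s - 4 * a
    return np.where(s <= 1, near, np.where(s < 2, far, 0.0))

def interpolate(samples, t, period=1.0):
    """Evaluate the periodic interpolant of `samples` at positions `t`.

    Sample n sits at n * period / N. Requires at least four samples so that
    the wrapped kernel support does not overlap itself.
    """
    samples = np.asarray(samples, dtype=float)
    n = samples.size
    spacing = period / n
    t = np.atleast_1d(np.asarray(t, dtype=float))
    grid = np.arange(n) * spacing
    # Signed distance from each query point to each sample, wrapped to one period.
    diff = t[:, None] - grid[None, :]
    diff = (diff + period / 2) % period - period / 2
    return cubic_kernel(diff / spacing) @ samples

samples = np.array([1.0, 3.0, 2.0, 5.0, 4.0, 0.0, 2.0, 1.0])
grid = np.arange(samples.size) / samples.size
```

Evaluating `interpolate(samples, grid)` returns the original samples, while any other position in the period yields a smoothly interpolated value, which is what makes a continuous response function possible.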

Define the linear convolution operator . This operator maps sample to a response function on a continuous field. We estimate the target scale by maximizing the response function. Because the response value is defined in a continuous spatial domain, our method can break the limitations of a fixed and discontinuous scale resolution, thereby estimating the continuous changes in the target scale and improving the accuracy of scale estimation. The definition of continuous correlation operator is as follows:

is the scale filter to be trained and represents the continuous convolution operator. is trained by minimizing the objective function in Equation (27):

Here, is the expected response output. is defined as the periodic repetition of Gaussian function centered around , where is the scale of the target in the previous frame, is the center point of the scale sample. Its Fourier coefficient is as follows:

Equation (27) is minimized in the Fourier domain. The Fourier coefficients of the interpolated feature map are , where represents the discrete Fourier transform of , and and represent the Fourier coefficients of and . The Fourier coefficients of Equation (26) are

Using Parseval’s theorem, the objective function in the Fourier domain can be expanded:

For scale filter , we can equivalently solve for Fourier coefficients . In the experiment, we need a finite set of parameters to represent . Therefore, for coefficient , we assume that when and , . and determine the number of filter coefficients that need to be calculated during the training process. We take , . Finally, the solution of the scale filter obtained is as follows:

Scale Estimation and Model Update

In frame , we use the target position estimated by the displacement filter as the center and the scale of frame as the reference to adjust the aspect ratio of the target based on the saliency detection results of frame . Then, we extract the discrete scale feature and use the scale filter trained in frame and Equation (31) to calculate the Fourier coefficients of the response function . In order to obtain the maximum response value on interval , a rough initial estimate is first found through a grid search, where the response value is calculated at discrete position and and . Then, the obtained maximum value is used for the iterative optimization initialization of the Fourier series expansion and the maximum response value is determined using Newton’s method, where corresponds to the precise scale.
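The coarse-to-fine maximization can be sketched in one dimension. The code below assumes a real-valued response given by a finite Fourier series, finds the best grid point, and then refines it with Newton's method on the first derivative. The paper's response depends on two variables, so this is a simplified illustration with hypothetical names and coefficients.

```python
import numpy as np

def fourier_series(coeffs, ks, t, period=1.0, order=0):
    """Real part of the order-th derivative of sum_k coeffs[k] exp(i 2 pi k t / period)."""
    w = 2j * np.pi * ks / period
    return float(np.real(np.sum(coeffs * w**order * np.exp(w * t))))

def maximize_response(coeffs, ks, period=1.0, grid_size=32, newton_iters=5):
    # Coarse estimate: best value on a uniform grid.
    grid = np.arange(grid_size) * period / grid_size
    values = [fourier_series(coeffs, ks, t, period) for t in grid]
    t = grid[int(np.argmax(values))]
    # Refinement: Newton's method on the derivative of the response.
    for _ in range(newton_iters):
        d1 = fourier_series(coeffs, ks, t, period, order=1)
        d2 = fourier_series(coeffs, ks, t, period, order=2)
        if d2 >= 0:  # not locally concave: keep the current estimate
            break
        t = t - d1 / d2
    return t % period

# Synthetic response with a known maximum at 0.3173 (illustrative).
true_peak = 0.3173
ks = np.array([-2, -1, 1, 2])
amps = np.array([0.15, 0.5, 0.5, 0.15])
coeffs = amps * np.exp(-2j * np.pi * ks * true_peak)
estimate = maximize_response(coeffs, ks)
```

The grid alone can only resolve the peak to one grid step, whereas the Newton refinement recovers the off-grid maximum, which mirrors how a continuous response yields a finer scale estimate than a fixed set of scale levels.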

The updated scale filter is shown in Equation (32):

is the learning rate of the scale filter.

4. Experiments

This section provides a detailed presentation and analysis of the experimental details and results. Section 4.1 introduces the experimental parameters, Section 4.2 presents the experimental dataset and evaluation indicators, Section 4.3 presents the experimental results and a detailed analysis of the results, and Section 4.4 presents the ablation experiment, demonstrating the contributions of each module to SDBCF.

4.1. Experimental Details and Parameters

All experiments were implemented in MATLAB R2021a and run on a PC equipped with an Intel(R) Core(TM) i7-12700H CPU @ 2.30 GHz and 16 GB of RAM.

In the experiment, the parameters in the displacement filter were as follows: spatial regularization coefficient , differential regularization coefficient , initial value of step parameter set to , maximum value set to , step size factor set to , ADMM iteration number set to 2, and learning rate in model update set to . In the feature fusion module, , , . In the scale estimation module, the threshold for aspect ratio change rate was taken as , . In the scale filter, , scale factor , and . The learning rate of the scale filter was set to . The remaining parameters were the same as the SRDCF [15] tracker settings.

4.2. The Dataset and Evaluation Metrics

4.2.1. The Dataset

To verify the effectiveness of the proposed algorithm, the UAV datasets UAV123 [42], UAV20L [42], and DTB70 [43] were used to test and analyze the trackers. The UAV123 dataset contains 123 image sequences annotated with 12 attributes common in UAV scenarios: aspect ratio change (ARC), camera motion (CM), background clutter (BC), fast motion (FM), illumination variation (IV), full occlusion (FOC), low resolution (LR), out of view (OV), similar object interference (SOB), partial occlusion (POC), scale variation (SV), and viewpoint change (VC). UAV20L is a selection of 20 long sequences from the UAV123 dataset, with longer time spans and more complex target motion trajectories. The DTB70 dataset consists of 70 image sequences annotated with 11 attributes. Unlike UAV123, DTB70 combines full occlusion and partial occlusion into one occlusion (OCC) attribute and adds a deformation (DEF) attribute. These three datasets are widely used open benchmarks for UAV target tracking algorithms.

4.2.2. Evaluation Metrics

The experiments used the One-Pass Evaluation (OPE) protocol from the OTB benchmark [44], which measures the performance of each candidate tracker on the three datasets using its success rate curve, the area under that curve (AUC), and distance precision (DP). The success rate is defined as the percentage of frames whose intersection over union (IoU) between the predicted bounding box and the ground-truth bounding box exceeds a given threshold . For , different values between 0 and 1 were taken to plot the success rate curve, and the area under the success rate curve (AUC) was used to rank the trackers. Distance precision is defined as the proportion of frames in a video sequence in which the Euclidean distance between the predicted target center and the ground-truth target center is less than a given threshold. The common threshold of 20 pixels was used to rank the trackers. Additionally, the tracking speed was measured as the number of frames processed per second (fps).
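These metrics can be sketched as follows. The code assumes boxes in (x, y, width, height) form and approximates the AUC by averaging the success rate over evenly spaced thresholds, as OTB-style toolkits commonly do; the number of thresholds and the function names are illustrative choices.

```python
import numpy as np

def iou(box_a, box_b):
    """Intersection over union of two (x, y, width, height) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0

def success_auc(pred, gt, thresholds=None):
    """Mean success rate over IoU thresholds (approximate area under the curve)."""
    if thresholds is None:
        thresholds = np.linspace(0.0, 1.0, 21)
    overlaps = np.array([iou(p, g) for p, g in zip(pred, gt)])
    return float(np.mean([(overlaps > t).mean() for t in thresholds]))

def distance_precision(pred, gt, threshold=20.0):
    """Fraction of frames whose center location error is below `threshold` pixels."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    pred_centers = pred[:, :2] + pred[:, 2:] / 2
    gt_centers = gt[:, :2] + gt[:, 2:] / 2
    errors = np.linalg.norm(pred_centers - gt_centers, axis=1)
    return float((errors < threshold).mean())

# Two-frame toy sequence: one perfect prediction, one complete miss.
gt_boxes = [(0, 0, 10, 10), (0, 0, 10, 10)]
pred_boxes = [(0, 0, 10, 10), (30, 0, 10, 10)]
dp = distance_precision(pred_boxes, gt_boxes)
auc = success_auc(pred_boxes, gt_boxes)
```

In the toy sequence half of the frames are tracked correctly, so the distance precision at 20 pixels is 0.5 and the averaged success rate is slightly below 0.5 because no overlap can exceed a threshold of 1.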

4.3. Experimental Results and Analysis

This section compares SDBCF with various representative correlation filter trackers and provides a detailed analysis, including a quantitative comparison, a qualitative analysis, a tracking speed evaluation, and a key parameter analysis.

4.3.1. Quantitative Comparison

This paper selects nine representative DCF trackers for comparative experiments, chosen to cover the aspects of Feature Representation (FR), Dynamic Feature Fusion (DFF), Scale Estimation (SE), Dynamic Spatial Regularization (DSR), and Baseline (B): BACF [45], STRCF [16], SRDCF [15], CSR-DCF [46], DRCF [30], AutoTrack [6], Staple [47], EFSCF [48], and SOCF [23]. The specific details are shown in Table 1.

Table 1.

Tracker detail comparison table.

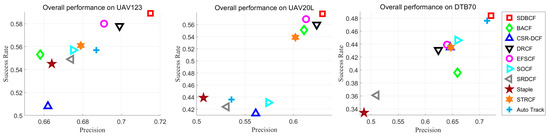

By conducting experimental evaluations of the UAV123, UAV20L, and DTB70 datasets, the corresponding distance accuracy and success rates were obtained, as shown in Figure 4 and Table 2.

Figure 4.

OPE results of 10 algorithms on the UAV123, UAV20L, and DTB70 datasets.

Table 2.

DP and AUC scores of 10 trackers on the UAV123, UAV20L, and DTB70 datasets.

As shown in Table 2, SDBCF achieves the highest success rate and precision on the UAV123, UAV20L, and DTB70 datasets. On the UAV123 dataset, the DP and AUC scores of SDBCF were 71.5% and 58.9%, improvements of 4.1% and 5.0% over SRDCF. On the UAV20L dataset, the DP and AUC scores of SDBCF were 63.0% and 57.8%, improvements of 9.9% and 15.4% over SRDCF. On the DTB70 dataset, the DP and AUC scores of SDBCF were 72.1% and 48.4%, improvements of 21.1% and 12.3% over SRDCF.

This paper analyzes the tracking performance of various algorithms on different attributes on the UAV123, UAV20L, and DTB70 datasets, as shown in Table 3, Table 4, Table 5, Table 6, Table 7 and Table 8.

Table 3.

DP scores of 10 trackers under the partial attributes of the UAV123 dataset.

Table 4.

AUC scores of 10 trackers under the partial attributes of the UAV123 dataset.

Table 5.

DP scores of 10 trackers under the partial attributes of the UAV20L dataset.

Table 6.

AUC scores of 10 trackers under the partial attributes of the UAV20L dataset.

Table 7.

DP scores of 10 trackers under the partial attributes of the DTB70 dataset.

Table 8.

AUC scores of 10 trackers under the partial attributes of the DTB70 dataset.

Table 3 and Table 4 quantitatively display the DP and AUC scores of the 10 trackers for certain attributes in the UAV123 dataset, with SDBCF being either optimal or suboptimal. Compared to SRDCF, SDBCF shows varying degrees of improvement in DP and AUC scores across various attributes. Under the scale change attribute, the DP score and AUC score increased by 5.1% and 3.8%, respectively. In the aspect ratio variation scenario, the DP score and AUC score increased by 4.9% and 3.6%, respectively. Regarding the camera motion attribute, the DP score and AUC score of SDBCF increased by 5.4% and 5.9%, respectively. Regarding the partial occlusion attribute, the DP score and AUC score of SDBCF increased by 5.5% and 3.7%, respectively. Regarding the similar target interference attributes, the DP score and AUC score of SDBCF increased by 7.7% and 7.0%, respectively. Regarding the background clutter attribute, the DP score and AUC score of SDBCF increased by 8.7% and 7.2%, respectively.

Table 5 and Table 6 show the DP and AUC scores, respectively, of the 10 trackers for certain attributes in the UAV20L dataset. As on the UAV123 dataset, SDBCF exhibited significant performance improvements over SRDCF across multiple attributes. For the scale change attribute, the DP score and AUC score increased by 10.9% and 14.3%, respectively. In the aspect ratio variation scenario, the DP score and AUC score increased by 13.2% and 14.3%, respectively. For the camera motion attribute, the DP score and AUC score of SDBCF increased by 12.9% and 13.2%, respectively. Regarding the partial occlusion attribute, the DP score and AUC score of SDBCF increased by 9.4% and 17.4%, respectively. For the similar target interference attribute, the DP score and AUC score of SDBCF increased by 5.9% and 13.6%, respectively. For the background clutter attribute, the DP score and AUC score of SDBCF increased by 22.2% and 15.9%, respectively.

Table 7 and Table 8 show the DP and AUC scores of the 10 trackers focusing on particular attributes when using the DTB70 dataset. The performance of SDBCF was optimal or suboptimal across multiple attributes. For the scale change attribute, the DP score and AUC score increased by 21.6% and 13.2%, respectively. In the aspect ratio variation scenario, the DP score and AUC score increased by 26.2% and 13.5%, respectively. Regarding the camera motion attribute, the DP score and AUC score of SDBCF increased by 19.6% and 10.4%, respectively. For the occlusion attribute, the DP score and AUC score of SDBCF increased by 15.9% and 10.5%, respectively. For the similar target interference attributes, the DP score and AUC score of SDBCF increased by 20.0% and 10.0%, respectively. For the background clutter attribute, the DP score and AUC score of SDBCF increased by 24.8% and 13.8%, respectively.

The performance improvement attained by SDBCF for the scale variation and aspect ratio variation attributes is mainly due to the scale estimation strategy. The aspect ratio adaptive module based on saliency detection enables the tracker to adapt to changes in the appearance of the target, and the continuous-domain scale filter adapts to continuous changes in the target scale, so SDBCF estimates the scale more accurately. The improvement for the background clutter attribute is mainly attributed to the dynamic fusion of features and the construction of a robust appearance model. The feature fusion module measures both the spatial and temporal information of features, allowing different features to adjust their weights adaptively in different scenarios so that SDBCF obtains a more effective feature representation in complex scenes. The dynamic spatial regularization term, which incorporates target saliency information, lets the tracker focus on learning target information during training and suppress interference from the background. During tracking, background noise caused by factors such as changes in drone viewpoint and rapid motion may distort the filter; the second-order differential regularization makes the filter vary more smoothly over time and alleviates the impact of this distortion. In addition, the improvements of SDBCF over SRDCF are larger on the UAV20L and DTB70 datasets than on UAV123, which suggests that SDBCF copes better with complex scenes and is more robust in scenarios such as scale changes and background clutter.

4.3.2. Qualitative Analysis

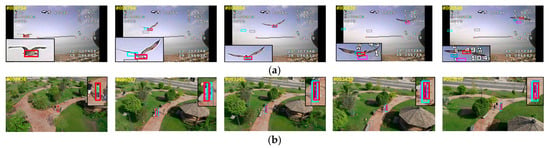

To verify the robustness of SDBCF in complex scenes, four trackers (SRDCF, AutoTrack, EFSCF, and SOCF) were selected and their performance on five challenging video sequences was compared with that of SDBCF. Figure 5 shows the tracking results of the five trackers, with small targets enlarged, cropped, and displayed in the upper right or lower left corner of the corresponding image.

Figure 5.

Partial tracking results of the five trackers on different sequences. (a) Bird; (b) Group3; (c) Person7; (d) ChasingDrones; (e) Basketball.

In the scenarios with background clutter (Group3, ChasingDrones, and Basketball) and similar object interference (Group3, Gull1, and Motor2), the feature fusion module combines the discriminative and representational abilities of the features to separate the target from the background as clearly as possible. The dynamic spatial regularization term further suppresses interference in non-target areas during training, enabling SDBCF to better resist background clutter and similar targets. In the scenarios with deformation (Bird, Group3, Person7, and Basketball), viewpoint change (ChasingDrones), and fast motion (Bird, Person7, and ChasingDrones), the feature fusion module adjusts the fusion ratio of the features so that the target is described by the most representative combination, enabling SDBCF to better adapt to changes in the appearance of the target. In occlusion scenarios (Group3 and Basketball), the second-order differential regularization term suppresses distortion of the appearance model by constraining the temporal rate of change of the filter, thereby alleviating the interference of occlusion and other factors. As Figure 5 shows, when multiple interferences occur simultaneously, the comparative trackers all experienced a certain degree of drift or even tracking failure, while SDBCF was able to track the target stably and continuously.

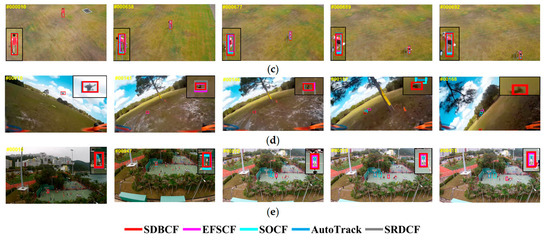

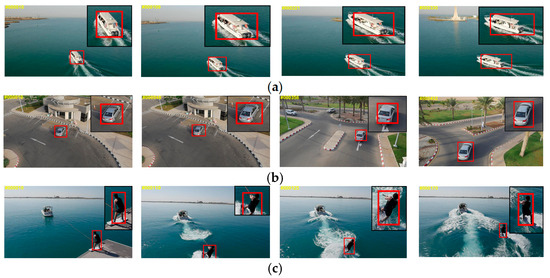

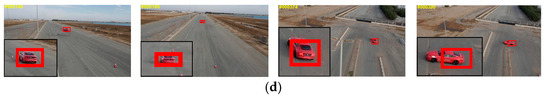

In addition, to verify the accuracy of scale estimation, the tracking results of SDBCF on sequences with significant scale variations are shown in Figure 6.

Figure 6.

Partial tracking results of SDBCF on several sequences: (a) Boat3; (b) Car8; (c) wakeboard1; (d) Car16.

From Figure 6, it can be seen that SDBCF has good adaptability to changes in the aspect ratio of the target and can still generate the smallest rectangular box around the target, even when there are significant changes in its appearance.

4.3.3. Tracking Speed Evaluation

The tracking speed of 10 algorithms was tested on the UAV123, UAV20L, and DTB70 datasets, and the tracking results are shown in Table 9.

Table 9.

Real-time analysis of 10 trackers.

According to Table 9, the average tracking speed of SDBCF on the three datasets is 34.86 fps. Although SDBCF trained three filters, its tracking speed is still better than that of SRDCF. This is mainly due to the use of the ADMM method in the solution process of SDBCF for the filters.

4.3.4. Parameter Analysis

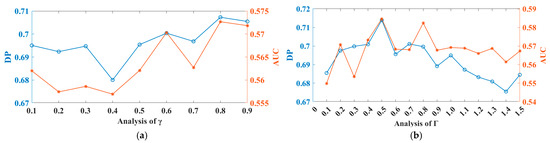

In this section, we take the UAV123 dataset as an example to conduct a sensitivity analysis of two parameters: the weight coefficient a for fusing the discriminative ability score with the representational ability score, and the second-order difference regularization coefficient.

Within the range of , candidate values were sampled with a stride of 0.1, giving nine values in total. The experimental results are shown in Figure 7a. When a = 0.8, both the DP score and AUC score of SDBCF reach their maximum values. This suggests that, on the dataset we chose, the discriminative ability of the features is more important than their representational ability.

Figure 7.

Sensitivity analysis of the key parameters in SDBCF: (a) fusion weight coefficient analysis results; (b) differential regularization coefficient analysis results.

Within the range of , candidate values were sampled with a stride of 0.1, giving 15 values in total. The experimental results are shown in Figure 7b. When the value of is small, the constraint on the rate of change in the filter between adjacent frames is weak, so the filter cannot effectively suppress distortion caused by interference factors. When the value of is large, the constraint on the rate of change in the filter between adjacent frames is stronger, but this also limits, to a certain extent, the adaptability of the filter to changes in the appearance of the target. From the experimental results, it can be seen that both the DP score and AUC score of SDBCF are optimal when .

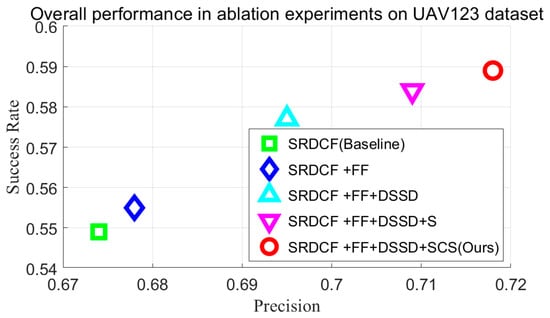

4.4. Ablation Experiment

In order to verify the effectiveness of the proposed method, ablation experiments were designed in this paper.

We used “FF” to represent the feature fusion module, “DSSD” to represent the dynamic spatial regularization term and the second-order differential regularization term, “S” to represent the aspect ratio adaptive module based on saliency detection, and “SCS” to represent the aspect ratio adaptive module together with the continuous scale filter. Comparative experiments were conducted on the UAV123 dataset, and the experimental results are shown in Table 10 and Figure 8. According to Table 10 and Figure 8, each module improves the performance of the tracker. The feature fusion module increased the DP score and AUC score of SRDCF by 0.4% and 0.6%, respectively. The dynamic spatial regularization and second-order differential regularization terms further improved the DP score and AUC score by 1.7% and 2.2%, respectively. The introduction of the aspect ratio adaptive module further improved the DP score and AUC score of the tracker by 1.4% and 0.7%, respectively. The introduction of the continuous scale filter increased the DP score and AUC score of the tracker by 0.6% and 0.5%, respectively.

Table 10.

Results of ablation experiments on the UAV123 dataset.

Figure 8.

Overall performance of the five algorithms in ablation experiments on the UAV123 dataset.

In summary, all modules of SDBCF improved the performance of the tracker, demonstrating the rationality of the SDBCF design.

5. Conclusions

This paper proposed SDBCF, a robust UAV target tracking algorithm based on saliency detection. As UAV target tracking is easily affected by background clutter, similar object interference, camera motion, occlusion, scale changes, and other factors, improvement strategies for SRDCF were proposed, focusing on three aspects: feature fusion, model construction, and scale estimation. In terms of feature fusion, SDBCF exploits the spatial and temporal information of features, achieving the dynamic fusion of features in complex scenes. In terms of model construction, SDBCF integrates a dynamic spatial regularization term and a second-order differential regularization term into the DCF framework, improving the robustness of the filter in scenarios such as background clutter, similar object interference, and occlusion. In terms of scale estimation, SDBCF adapts to changes in the target aspect ratio and can continuously estimate changes in target scale, improving the tracker’s ability to cope with scale changes. SDBCF uses saliency detection to connect these modules. Comparative experiments conducted on the UAV123, UAV20L, and DTB70 datasets showed that SDBCF had the best DP and AUC scores on all three datasets, with improvements of (4.1%, 5.0%), (9.9%, 15.4%), and (21.1%, 12.3%) compared to SRDCF, respectively. When facing similar object interference, background clutter, occlusion, scale variation, aspect ratio variation, camera motion, etc., the tracking performance of SDBCF is better than that of the compared tracking methods.

Although the tracking performance of SDBCF has significantly improved, SDBCF only uses handcrafted features and has a limited ability to represent targets, resulting in poor performance in, for example, low-resolution scenarios. Therefore, in the next step, we will consider introducing deep features into the feature fusion module to further improve the tracking performance of SDBCF without significantly affecting the tracking speed. At the same time, it was found during the experiments that SDBCF had poor robustness in scenarios such as full occlusion and objects being out of view. This is because SDBCF has no reliability detection or redetection module, and therefore cannot respond to tracking failures in a timely manner. We will therefore also consider adding such modules. In addition, the scale estimation strategy proposed for SDBCF is in principle applicable to DCF trackers in general. The next step will therefore be to conduct experiments that investigate the performance of the scale estimation module on other trackers.

Author Contributions

Conceptualization, H.W. and W.W.; methodology, H.W.; software, H.W., G.C. and X.L.; validation, H.W. and G.C.; formal analysis, G.C.; investigation, H.W.; resources, H.W., X.L. and W.W.; data curation, H.W. and W.W.; writing—original draft preparation, H.W.; writing—review and editing, W.W.; visualization, H.W. and G.C.; supervision, W.W. and X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhang, J.; Zhang, X.; Huang, Z.; Cheng, X.; Feng, J.; Jiao, L. Bidirectional Multiple Object Tracking Based on Trajectory Criteria in Satellite Videos. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–14. [Google Scholar]

- Siddharth, R.; Aghila, G. A Fog-Assisted Framework for Intelligent Video Preprocessing in Cloud-based Video Surveillance as a Service. IEEE Trans. Sustain. Comput. 2022, 7, 1. [Google Scholar]

- Bu, Y.; Xie, L.; Gong, Y.; Wang, C.; Yang, L.; Liu, J.; Lu, S. RF-Dial: Rigid Motion Tracking and Touch Gesture Detection for Interaction via RFID Tags. IEEE Trans. Mob. Comput. 2022, 21, 1061–1080. [Google Scholar]

- Stodola, P. Improvement in the model of cooperative aerial reconnaissance used in the tactical decision support system. J. Def. Model. Simul. 2017, 14, 483–492. [Google Scholar]

- Mu, L.; Xie, G.; Yu, X.; Wang, B.; Zhang, Y. Robust Guidance for a Reusable Launch Vehicle in Terminal Phase. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 1996–2011. [Google Scholar] [CrossRef]

- Li, Y.; Fu, C.; Ding, F.; Huang, Z.; Lu, G. AutoTrack: Towards High-Performance Visual Tracking for UAV with Automatic Spatio-Temporal Regularization. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020. [Google Scholar]

- Luo, W.; Biao, L.; Ruigang, F. Infrared Ground Multi-object Tracking Method Based on Improved ByteTrack Algorithm. Comput. Sci. 2023, 50, 176–183. [Google Scholar] [CrossRef]

- Xie, Z.; Xu, Z.; Han, S.; Zhu, J.; Huang, X. Modulus Constrained Minimax Radar Code Design Against Target Interpulse Fluctuation. IEEE Trans. Veh. Technol. 2023, 72, 13671–13676. [Google Scholar]

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010. [Google Scholar]

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the Circulant Structure of Tracking-by-detection with Kernels. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012. [Google Scholar]

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596. [Google Scholar]

- Danelljan, M.; Shahbaz Khan, F.; Felsberg, M.; Van de Weijer, J. Adaptive Color Attributes for Real-Time Visual Tracking. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014. [Google Scholar]

- Danelljan, M.; Robinson, A.; Khan, F.S.; Felsberg, M. Beyond correlation filters: Learning continuous convolution operators for visual tracking. In Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016. [Google Scholar]

- Wang, N.; Zhou, W.G.; Tian, Q.; Hong, R.C.; Wang, M.; Li, H.Q. Multi-Cue Correlation Filters for Robust Visual Tracking. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Danelljan, M.; Hager, G.; Khan, F.S.; Felsberg, M. Learning Spatially Regularized Correlation Filters for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015. [Google Scholar]

- Li, F.; Tian, C.; Zuo, W.; Zhang, L.; Yang, M.H. Learning Spatial-Temporal Regularized Correlation Filters for Visual Tracking. arXiv 2018, arXiv:1803.08679. [Google Scholar]

- Li, Y.; Zhu, J. A Scale Adaptive Kernel Correlation Filter Tracker with Feature Integration. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014. [Google Scholar]

- Danelljan, M.; Häger, G.; Khan, F.; Felsberg, M. Accurate Scale Estimation for Robust Visual Tracking. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014. [Google Scholar]

- Jiang, B.; Luo, R.; Mao, J.; Xiao, T.; Jiang, Y. Acquisition of localization confidence for accurate object detection. In Proceedings of the IEEE European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 816–832. [Google Scholar]

- Huang, Z.; Fu, C.; Li, Y.; Lin, F.; Lu, P. Learning aberrance repressed correlation filters for realtime uav tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; p. 28912900. [Google Scholar]

- Xing, W.; Zhang, H.; Wu, Y.; Li, Y.; Yuan, D. Redefined target sample-based background-aware correlation filters for object tracking. Appl. Intell. 2023, 53, 11120–11141. [Google Scholar] [CrossRef]

- Zhang, J.; Yuan, T.; He, Y.; Wang, J. A background-aware correlation filter with adaptive saliency-aware regularization for visual tracking. Neural Comput. Appl. 2022, 34, 6359–6376. [Google Scholar] [CrossRef]

- Ma, S.; Zhao, B.; Hou, Z.; Yu, W.; Pu, L.; Yang, X. SOCF: A correlation filter for real-time UAV tracking based on spatial disturbance suppression and object saliency-aware. Expert Syst. Appl. 2024, 238, 122131. [Google Scholar]

- Yu, Y.-F.; Chen, Z.; Zhang, Y.; Zhang, C.; Ding, W. Learning Dynamic-Sensitivity Enhanced Correlation Filter with Adaptive Second-Order Difference Spatial Regularization for UAV Tracking. IEEE Trans. Intell. Transp. Syst. 2025, 1–20. [Google Scholar] [CrossRef]

- Elayaperumal, D.; Joo, Y.H. Learning spatial variance-key surrounding-aware tracking via multi-expert deep feature fusion. Inf. Sci. 2023, 629, 502–519. [Google Scholar]

- Li, J.; Xue, C.; Luo, X.; Fu, Y.; Lin, B. Robust underwater object tracking with image enhancement and two-step feature compression. Complex Intell. Syst. 2025, 11, 154. [Google Scholar]

- Zhang, Y.; Zheng, Y. Object Tracking in UAV Videos by Multi-Feature Correlation Filters with Saliency Proposals. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 5538–5548. [Google Scholar]

- Zhang, J.; Feng, W.; Yuan, T.; Wang, J.; Sangaiah, A.K. SCSTCF: Spatial-Channel Selection and Temporal Regularized Correlation Filters for visual tracking. Appl. Soft Comput. 2022, 118, 108485. [Google Scholar]

- Moorthy, S.; Joo, Y.H. Learning dynamic spatial-temporal regularized correlation filter tracking with response deviation suppression via multi-feature fusion. Neural Netw. 2023, 167, 360–379. [Google Scholar] [CrossRef]

- Fu, C.; Xu, J.; Lin, F.; Guo, F.; Liu, T.; Zhang, Z. Object Saliency-Aware Dual Regularized Correlation Filter for Real-Time Aerial Tracking. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8940–8951. [Google Scholar]

- Yang, P.; Wang, Q.; Dou, J.; Dou, L. SDCS-CF: Saliency-driven localization and cascade scale estimation for visual tracking. J. Vis. Commun. Image Represent. 2024, 98, 104040. [Google Scholar]

- Zhang, W. Saliency-enhanced background-aware correlation filters with dual temporal regularization for unmanned aerial vehicle tracking. J. Electron. Imaging 2024, 33, 023017. [Google Scholar] [CrossRef]

- Arthanari, S.; Elayaperumal, D.; Joo, Y.H. Learning temporal regularized spatial-aware deep correlation filter tracking via adaptive channel selection. Neural Netw. 2025, 186, 107210. [Google Scholar] [CrossRef] [PubMed]

- Wang, F.; Yin, S.; Mbelwa, J.T.; Sun, F. Context and saliency aware correlation filter for visual tracking. Multimedia Tools Appl. 2022, 81, 27879–27893. [Google Scholar] [CrossRef]

- He, H.; Chen, Z.; Li, Z.; Liu, X.; Liu, H. Scale-Aware Tracking Method with Appearance Feature Filtering and Inter-Frame Continuity. Sensors 2023, 23, 7516. [Google Scholar] [CrossRef]

- He, X.; Zhao, L.; Chen, C.Y.C. Variable scale learning for visual object tracking. J. Ambient Intell. Humaniz. Comput. 2023, 14, 3315–3330. [Google Scholar] [CrossRef]

- Ma, H.; Lin, Z.; Acton, S.T. FAST: Fast and Accurate Scale Estimation for Tracking. IEEE Signal Process. Lett. 2020, 27, 161–165. [Google Scholar] [CrossRef]

- Wu, Y.; Lim, J.; Yang, M.-H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848. [Google Scholar] [CrossRef]

- Chen, F.; Wang, X. Adaptive spatial-temporal regularization for correlation filters based visual object tracking. Symmetry 2021, 13, 1665. [Google Scholar] [CrossRef]

- Hou, X.; Zhang, L. Saliency detection: A spectral residual approach. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007. [Google Scholar]

- Nai, K.; Li, Z.; Wang, H. Dynamic feature fusion with spatial-temporal context for robust object tracking. Pattern Recognit. 2022, 130, 108775. [Google Scholar] [CrossRef]

- Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for UAV tracking. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Amsterdam, The Netherlands, 2016; pp. 445–461. [Google Scholar]

- Li, S.; Yeung, D.-Y. Visual Object Tracking for Unmanned Aerial Vehicles: A Benchmark and New Motion Models. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence (AAAI-2017), San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Wu, Y.; Lim, J.; Yang, M.-H. Online Object Tracking: A Benchmark. In Proceedings of the 2013 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013. [Google Scholar]

- Galoogahi, H.K.; Fagg, A.; Lucey, S. Learning Background-Aware Correlation Filters for Visual Tracking. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar]

- Lukežič, A.; Vojíř, T.; Zajc, L.Č.; Matas, J.; Kristan, M. Discriminative correlation filter tracker with channel and spatial reliability. Int. J. Comput. Vis. 2018, 126, 671–688. [Google Scholar] [CrossRef]

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H. Staple: Complementary Learners for Real-Time Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Wen, J.; Chu, H.; Lai, Z.; Xu, T.; Shen, L. Enhanced robust spatial feature selection and correlation filter learning for UAV tracking. Neural Netw. 2023, 161, 39–54. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).