Enhancing Integrated Navigation with a Self-Attention LSTM Hybrid Network for UAVs in GNSS-Denied Environments

Abstract

1. Introduction

2. Estimation Model

2.1. Aerodynamics of UAVs

2.2. State Dynamics

2.3. Observation Model

2.4. Moving Horizon Estimation

3. Methodology

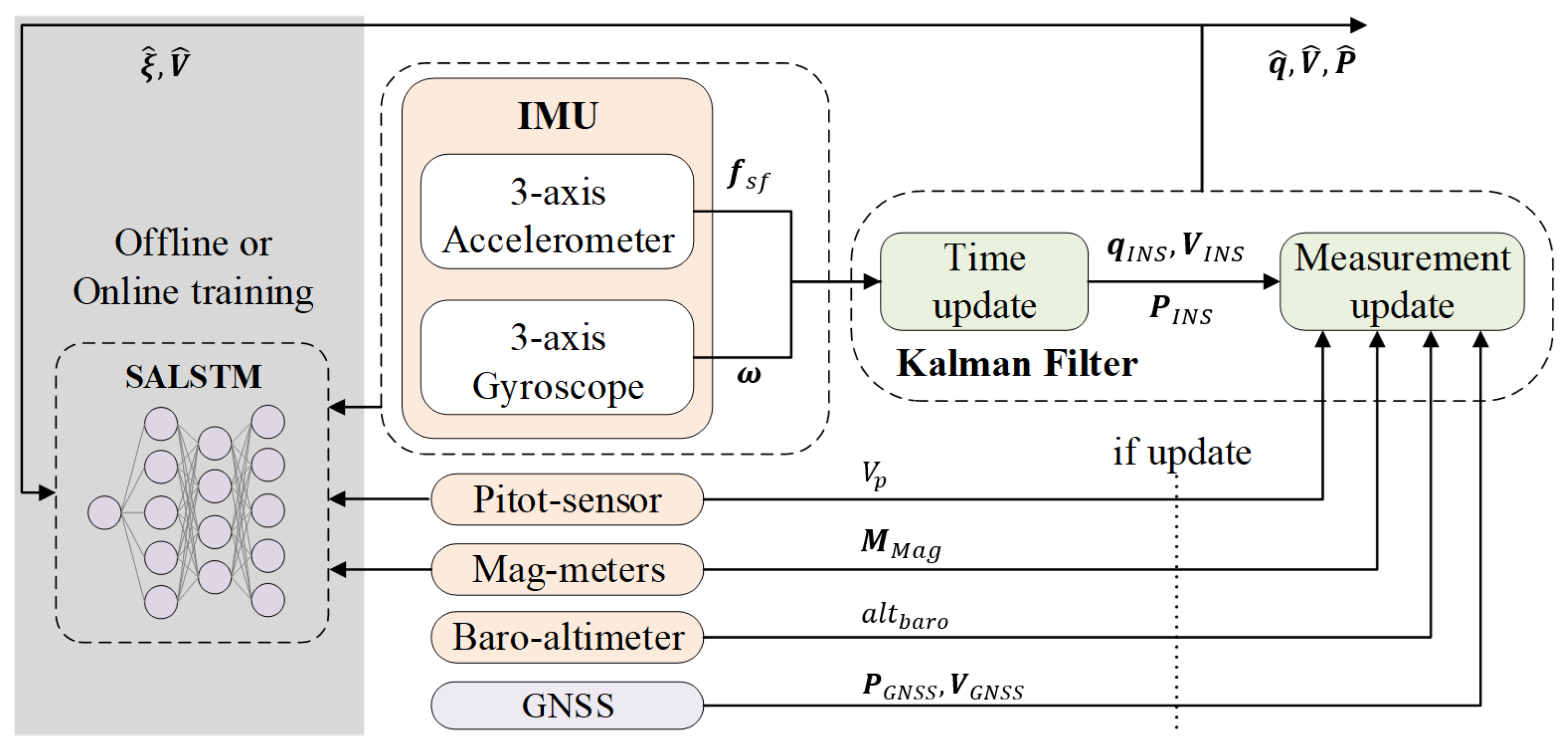

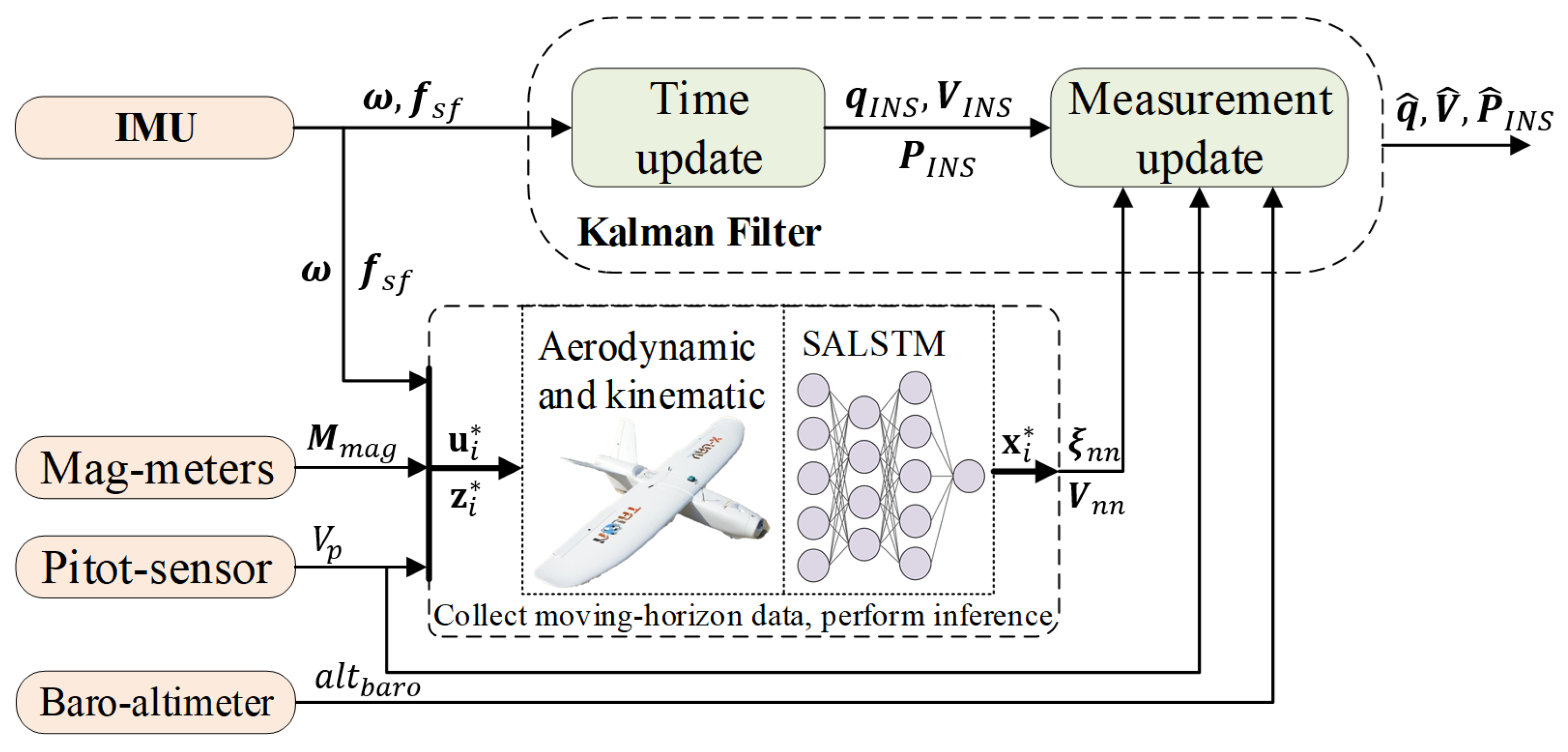

3.1. Integrated Navigation Framework

3.2. Data Fusion Methodology

3.2.1. System Dynamics

3.2.2. Measurement Model

3.2.3. EKF Implementation

3.3. SALSTM Network Design

3.3.1. SALSTM Architecture

3.3.2. LSTM Component

3.3.3. Self-Attention Component

4. Experimental Section

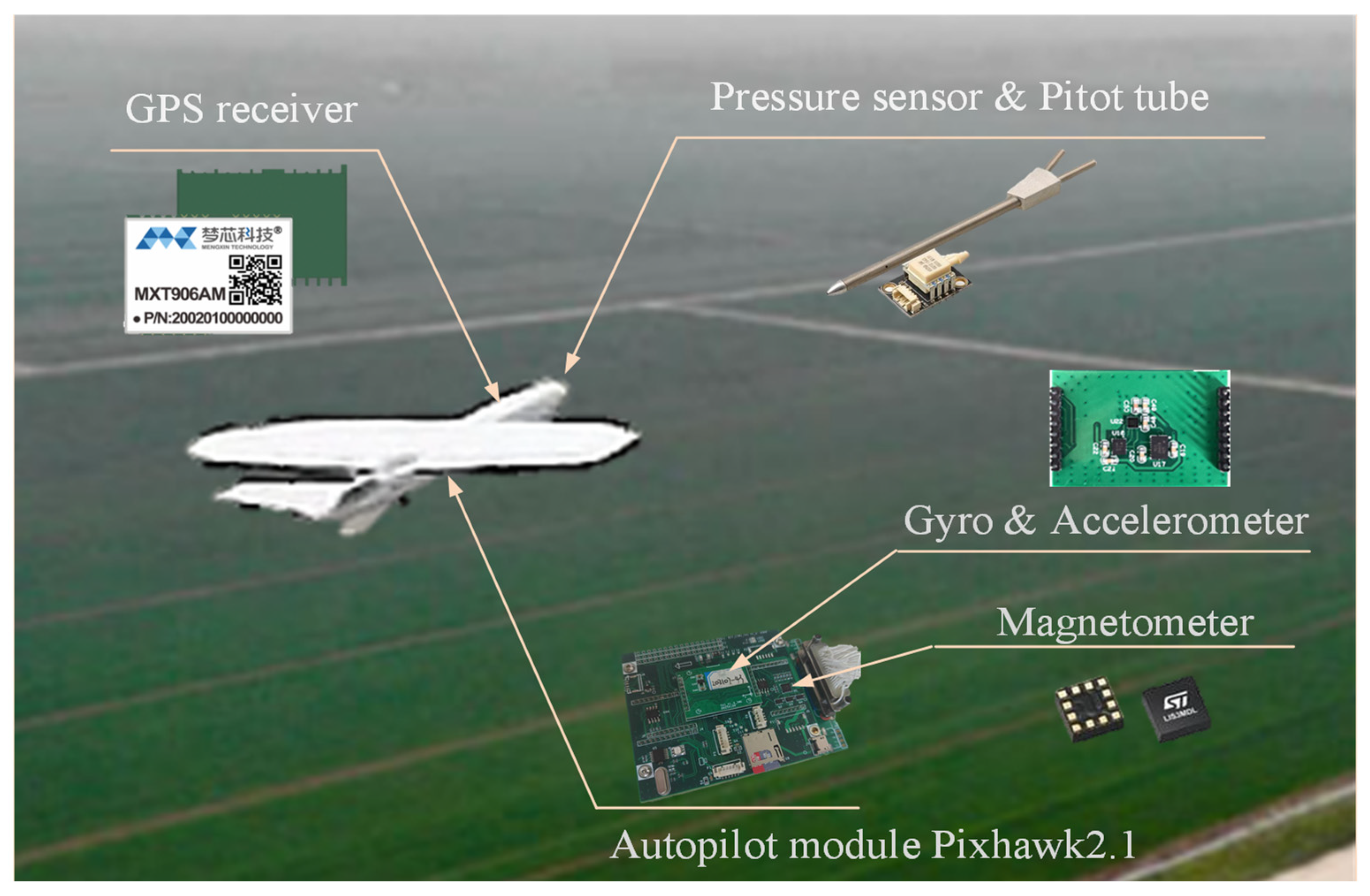

4.1. Hardware Configuration

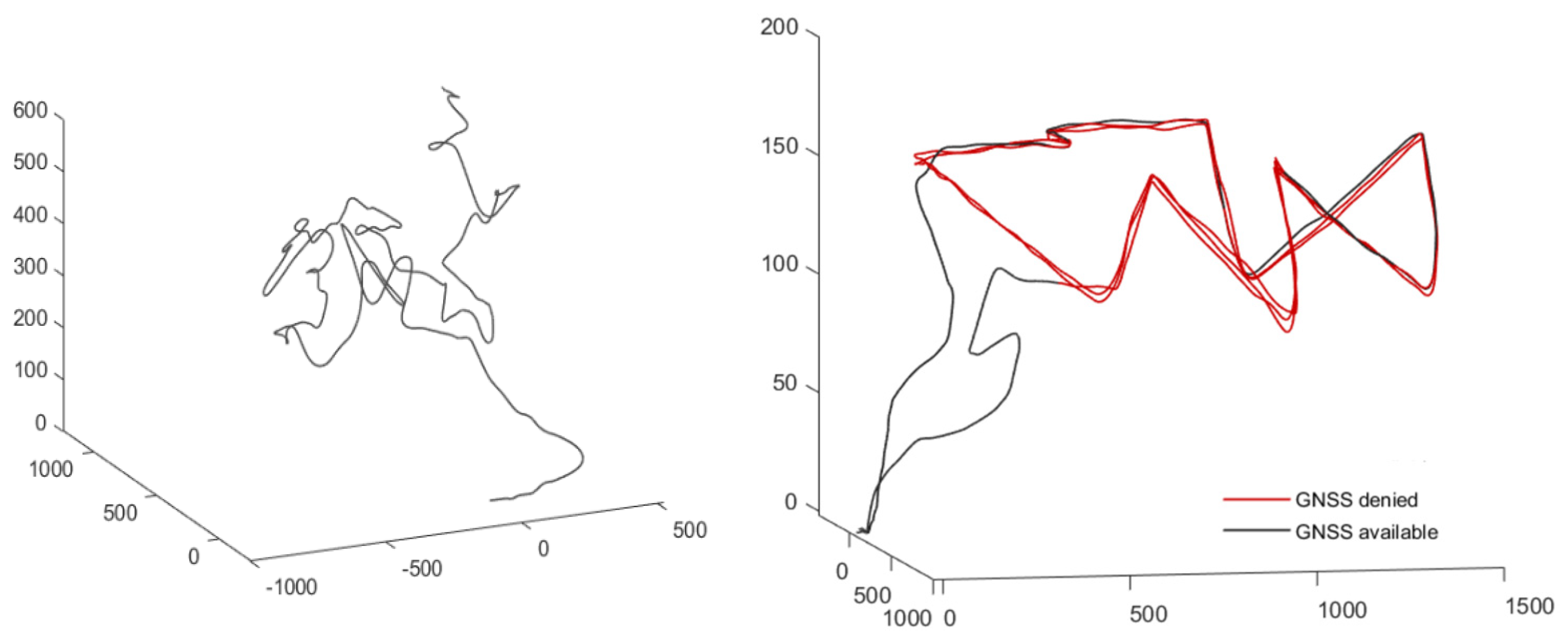

4.2. Field Test Setup

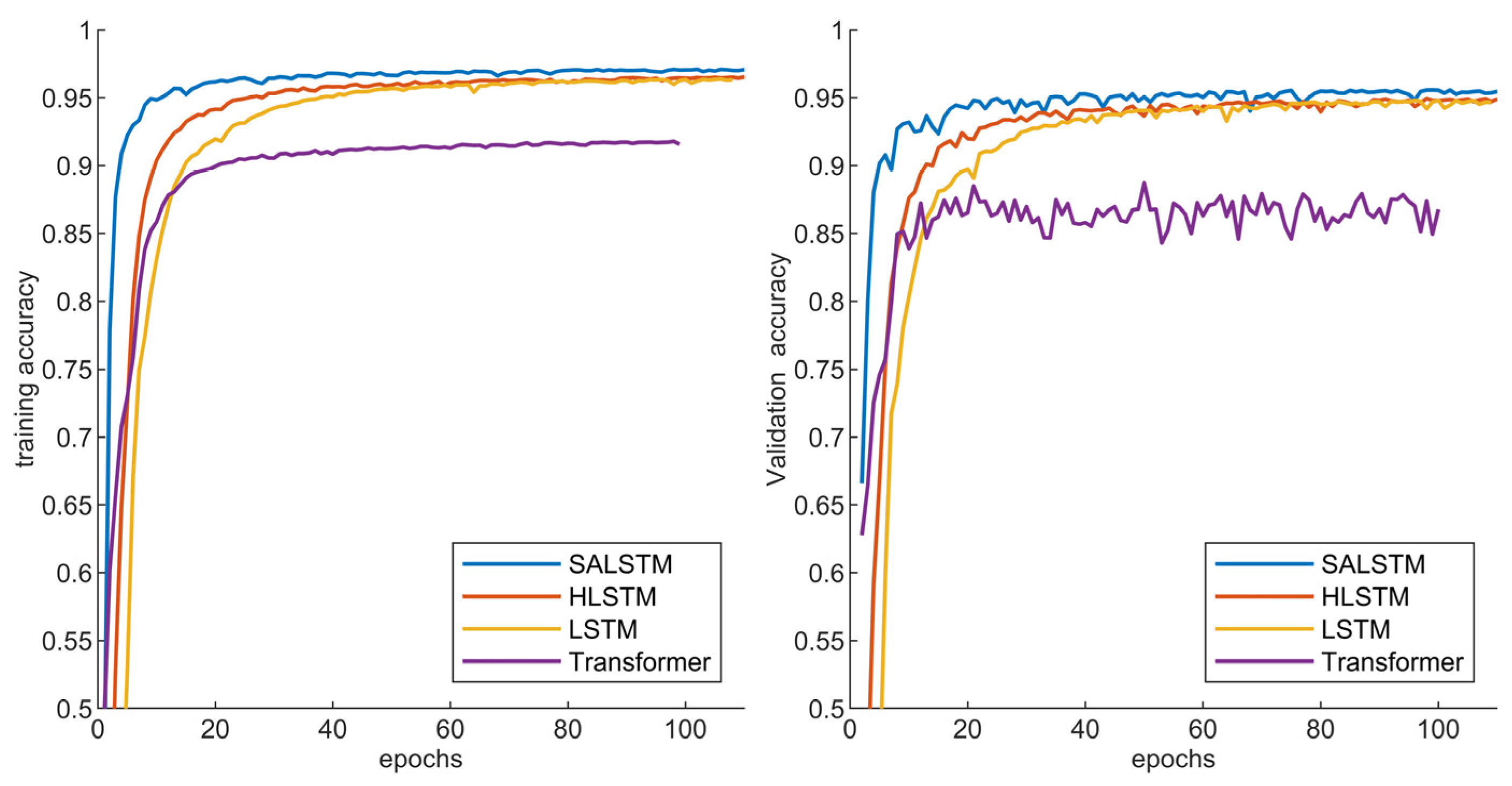

4.3. Model Pre-Training

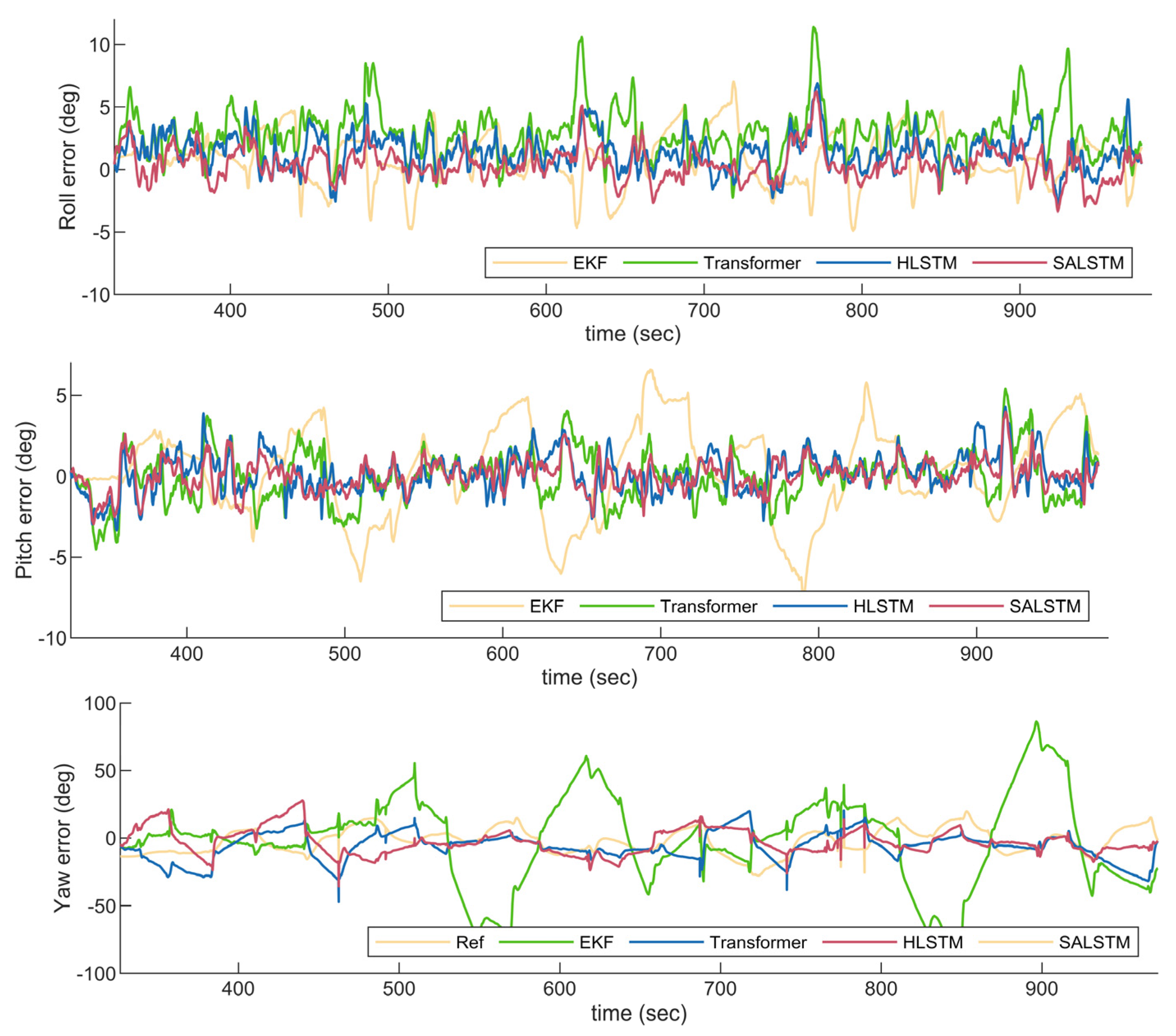

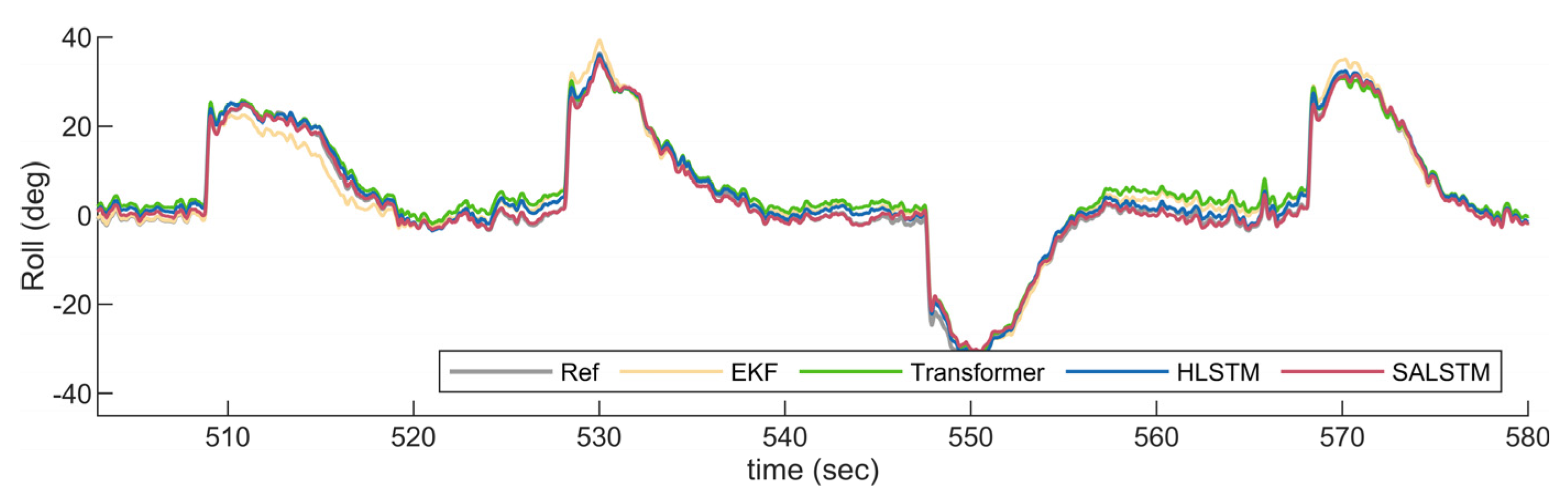

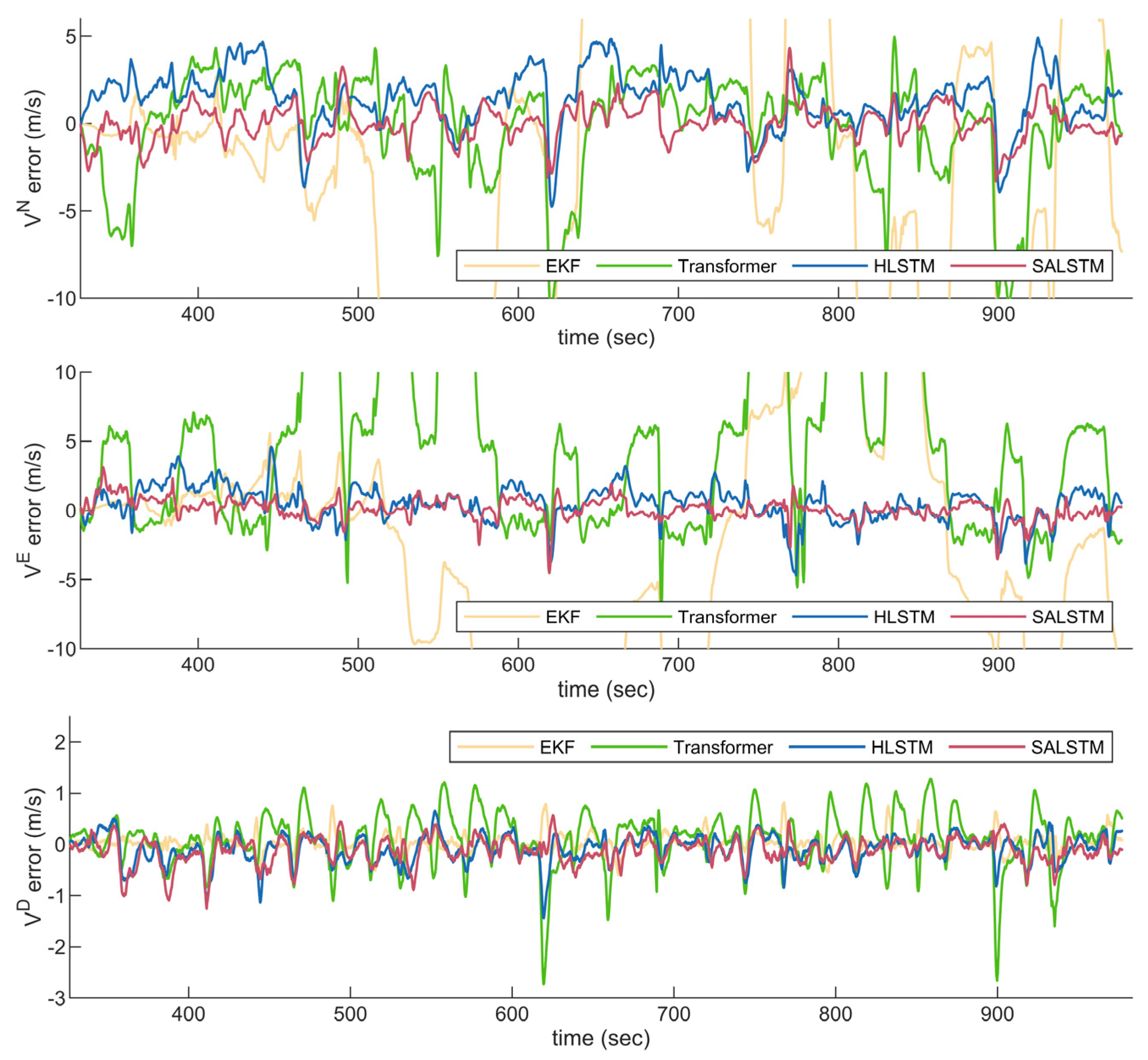

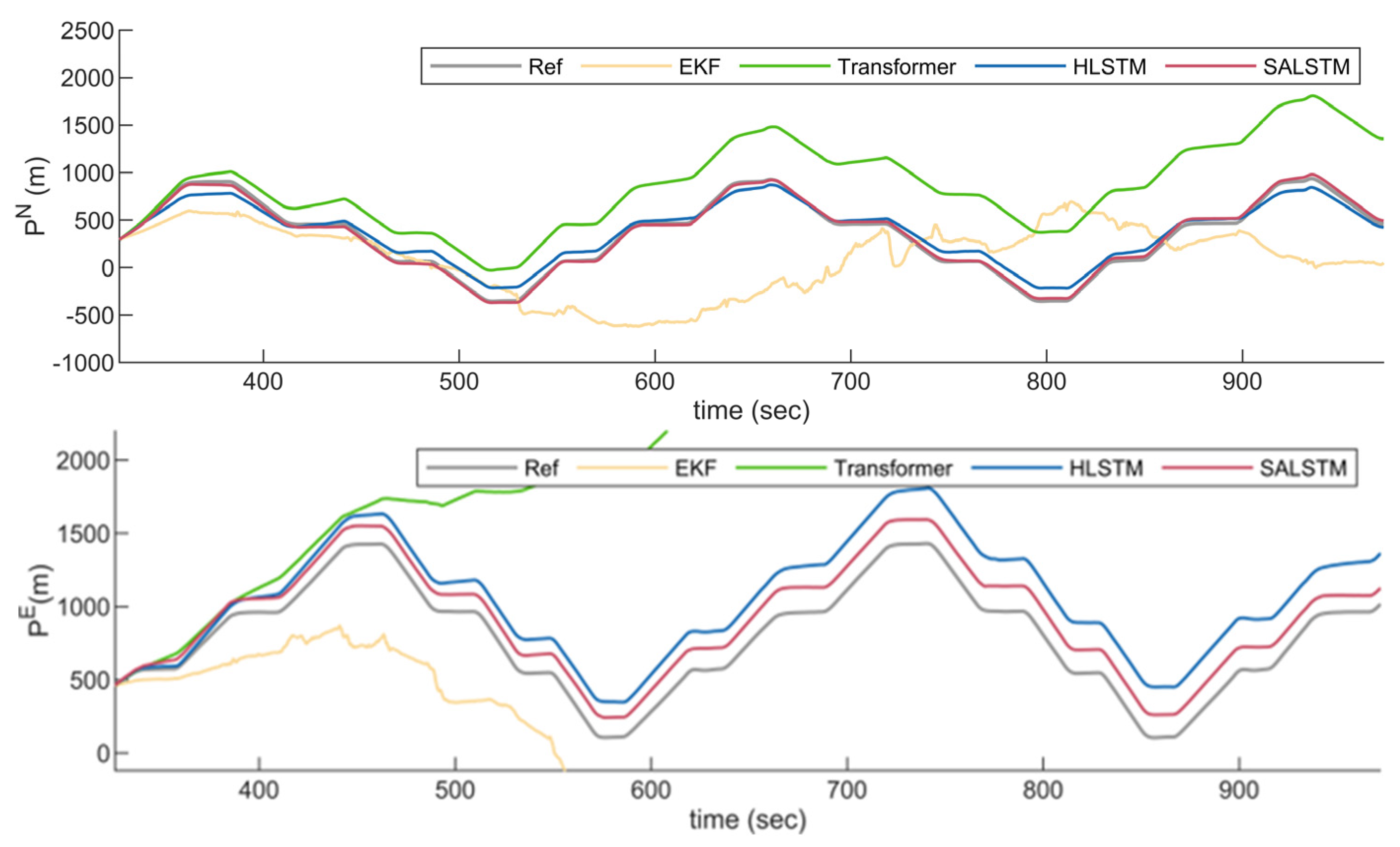

4.4. Test Results

5. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Nomenclature

| Symbol | Description |
|---|---|
| | Aerodynamic force |
| | Ground velocity |
| | UAV mass |
| | Specific force |
| | Acceleration |
| | Rotation matrix |
| | Gravitational acceleration |
| | Thrust |
| | Gravity |
| | Dynamic pressure |
| | Reference area |
| | Aerodynamic derivatives |
| | Angle of attack |
| | Sideslip angle |
| | Airspeed |
| | Air density |
| | Wind velocity |
| | Geomagnetic vector |
| | Sample time interval |
| | Pitot airspeed |
| | Quaternion |
| | Position in north–east–down frame |
| | Lift, drag, and lateral forces |
| | Euler attitude |
| | Relative velocity in the body frame |
| | Angular rates |
| | Magnetic field intensity measured by the magnetometer |
| Superscripts: | |
| | Values in the body frame |
| | Values in the navigation frame |
| | Values in the wind frame |
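The nomenclature relates airspeed, angle of attack, sideslip angle and dynamic pressure to the relative velocity in the body frame, that is, ground velocity minus wind velocity, rotated into the body frame. The short Python sketch below evaluates the standard textbook relations so the dependencies are easy to see. It is not taken from the paper. The ZYX (yaw-pitch-roll) rotation convention, the sea-level air density of 1.225 kg/m³, and the incompressible Pitot relation V = √(2Δp/ρ) are all assumptions. The function names are hypothetical.

```python
import math

RHO_SEA_LEVEL = 1.225  # kg/m^3, ISA sea-level density (assumed default)


def rot_body_to_ned(roll: float, pitch: float, yaw: float) -> list:
    """Rotation matrix from body to NED frame for a ZYX (yaw-pitch-roll) sequence."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cp * cy, sr * sp * cy - cr * sy, cr * sp * cy + sr * sy],
        [cp * sy, sr * sp * sy + cr * cy, cr * sp * sy - sr * cy],
        [-sp, sr * cp, cr * cp],
    ]


def air_data(v_ground_ned, wind_ned, roll, pitch, yaw, rho=RHO_SEA_LEVEL):
    """Return (airspeed, angle of attack, sideslip angle, dynamic pressure).

    Assumes a nonzero airspeed (sideslip divides by it)."""
    r = rot_body_to_ned(roll, pitch, yaw)
    # Relative (air-mass) velocity in NED: ground velocity minus wind velocity.
    v_rel_ned = [vg - vw for vg, vw in zip(v_ground_ned, wind_ned)]
    # Rotate into the body frame with the transpose: v_body = R^T v_ned.
    u, v, w = (sum(r[i][j] * v_rel_ned[i] for i in range(3)) for j in range(3))
    airspeed = math.sqrt(u * u + v * v + w * w)
    alpha = math.atan2(w, u)           # angle of attack
    beta = math.asin(v / airspeed)     # sideslip angle
    q_bar = 0.5 * rho * airspeed ** 2  # dynamic pressure
    return airspeed, alpha, beta, q_bar


def pitot_airspeed(delta_p: float, rho: float = RHO_SEA_LEVEL) -> float:
    """Incompressible Pitot relation V = sqrt(2 * delta_p / rho)."""
    return math.sqrt(2.0 * delta_p / rho)


# Example: 20 m/s northward ground speed, 5 m/s eastward wind, 0.1 rad nose-up pitch.
va, alpha, beta, q_bar = air_data([20.0, 0.0, 0.0], [0.0, 5.0, 0.0], 0.0, 0.1, 0.0)
print(f"airspeed={va:.2f} m/s, alpha={math.degrees(alpha):.2f} deg, "
      f"beta={math.degrees(beta):.2f} deg, q={q_bar:.1f} Pa")
```

In the example, the eastward wind shows up as a negative sideslip. The nose-up pitch over a horizontal ground track appears entirely as angle of attack.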

Appendix A


| Sensor | Type | Noise/Error | Frequency |
|---|---|---|---|
| BMI160 | Gyroscope/accelerometer | Gyro: 0.014°/s/√Hz; accel: 150 µg/√Hz | 250 Hz |
| MXT906AM | GPS receiver | Position: 2.5 m; velocity: 0.1 m/s | 5 Hz |
| LIS3MDL | Magnetometer | 3.2 mG | / |
| MS5611 | Barometer | 0.027 mbar | 1024 Hz |
| MS5525 | Pressure sensor & Pitot tube | 0.84 Pa | 100 Hz |
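The BMI160 noise figures in the sensor table are continuous-time densities. A discrete-time EKF, however, needs per-sample standard deviations for its noise covariance. A common convention treats the density N as white noise averaged over one sample period Δt = 1/f_s, which gives σ_d = N·√f_s. This convention is assumed here, because the paper's own filter tuning is not given in this section. The sketch applies it to the tabulated values. `discrete_noise_std` is a hypothetical helper.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2 (converts micro-g to m/s^2)


def discrete_noise_std(density: float, rate_hz: float) -> float:
    """Per-sample std of white noise with density `density` (units/sqrt(Hz))
    sampled at `rate_hz`, using sigma_d = N * sqrt(f_s) = N / sqrt(dt)."""
    return density * math.sqrt(rate_hz)


IMU_RATE_HZ = 250.0          # BMI160 output rate from the sensor table
GYRO_DENSITY = 0.014         # deg/s/sqrt(Hz)
ACCEL_DENSITY = 150e-6 * G0  # 150 ug/sqrt(Hz) converted to m/s^2/sqrt(Hz)

gyro_std = discrete_noise_std(GYRO_DENSITY, IMU_RATE_HZ)    # deg/s
accel_std = discrete_noise_std(ACCEL_DENSITY, IMU_RATE_HZ)  # m/s^2

# Diagonal entries of a per-sample noise covariance are the squared stds.
gyro_var_rad = math.radians(gyro_std) ** 2  # (rad/s)^2
accel_var = accel_std ** 2                  # (m/s^2)^2

print(f"gyro  std: {gyro_std:.4f} deg/s  var: {gyro_var_rad:.3e} (rad/s)^2")
print(f"accel std: {accel_std:.5f} m/s^2 var: {accel_var:.3e} (m/s^2)^2")
```

With the tabulated values, this gives roughly 0.22 °/s for the gyroscope and 0.023 m/s² for the accelerometer per 250 Hz sample. Some references scale by the noise bandwidth rather than f_s. That choice changes σ_d by a constant factor, so these numbers are better read as a tuning starting point than as final covariances.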

| Model | Cost Function | Batch Size | Dropout | Learning Rate | Activation Function | Optimizer |
|---|---|---|---|---|---|---|
| Transformer | MSE | 300 | 0.2 | 0.01 | GELU, Softmax | Adam |
| LSTM, HLSTM | MSE | 300 | 0.2 | 0.01 | tanh, sigmoid | Adam |
| SALSTM | MSE | 300 | 0.2 | 0.01 | Softmax, tanh, sigmoid | Adam |
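The hyperparameter table lists Softmax among the SALSTM activation functions, alongside the LSTM's tanh and sigmoid. In scaled dot-product self-attention (Vaswani et al., 2017), Softmax converts the similarity scores between time steps into weights that sum to one. The dependency-free sketch below shows that step on a short sequence standing in for LSTM hidden states. It is a generic illustration of the technique, not the authors' SALSTM layer. The projection matrices and input values are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def self_attention(h: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product self-attention over a sequence h (T x d).

    Returns (context vectors T x d_v, attention weights T x T)."""
    q, k, v = matmul(h, wq), matmul(h, wk), matmul(h, wv)
    d_k = len(wk[0])
    scores = matmul(q, transpose(k))  # similarity of every step with every step
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Hypothetical 3-step sequence of 2-D hidden states and identity projections.
hidden = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
context, attn = self_attention(hidden, eye, eye, eye)
for t, row in enumerate(attn):
    print(f"step {t}: weights {[round(x, 3) for x in row]}")
```

Each row of `attn` indicates how strongly one time step draws on every other step. A trained model would learn `wq`, `wk` and `wv` rather than using the identity.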

| Model | Transformer | LSTM | HLSTM | SALSTM |
|---|---|---|---|---|
| Total params | 187,229 | 177,600 | 190,290 | 166,243 |
| Inference time (s) | 0.042 | 0.044 | 0.047 | 0.044 |

| State | EKF | Transformer | HLSTM | SALSTM |
|---|---|---|---|---|
| Roll | 2.16 | 3.52 | 1.72 | 1.36 |
| Pitch | 2.79 | 1.52 | 1.17 | 0.98 |
| Yaw | 9.55 | 32.50 | 11.97 | 9.39 |
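The attitude table is easier to compare when each learning-based model is expressed as a relative change against the EKF baseline. The sketch assumes the values are error statistics, where lower is better. The table states neither the metric (for example RMSE or maximum error) nor the units, so the percentages carry the same ambiguity. The dictionary simply copies the tabulated numbers, and `relative_reduction` is a hypothetical helper.

```python
# Attitude-error values copied from the table above (units as reported there).
errors = {
    "Roll": {"EKF": 2.16, "Transformer": 3.52, "HLSTM": 1.72, "SALSTM": 1.36},
    "Pitch": {"EKF": 2.79, "Transformer": 1.52, "HLSTM": 1.17, "SALSTM": 0.98},
    "Yaw": {"EKF": 9.55, "Transformer": 32.50, "HLSTM": 11.97, "SALSTM": 9.39},
}


def relative_reduction(baseline: float, value: float) -> float:
    """Percentage by which `value` is below `baseline` (negative if above)."""
    return 100.0 * (baseline - value) / baseline


for state, row in errors.items():
    for model in ("Transformer", "HLSTM", "SALSTM"):
        pct = relative_reduction(row["EKF"], row[model])
        print(f"{state:5s} {model:11s} vs EKF: {pct:7.1f}%")
```

Under this reading, the SALSTM roll value is about 37% below the EKF value, its pitch value about 65% below, and its yaw value less than 2% below. The Transformer's roll and yaw values come out above the EKF values, which shows up as negative reductions.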

| State | EKF | Transformer | HLSTM | SALSTM |
|---|---|---|---|---|
| | 20.05 | 3.04 | 2.14 | 1.02 |
| | 30.16 | 9.00 | 1.33 | 1.06 |
| | 0.17 | 0.53 | 0.28 | 0.27 |

| State | EKF | Transformer | HLSTM | SALSTM |
|---|---|---|---|---|
| | 461.37 | 574.44 | 84.75 | 26.84 |
| | 1477.98 | 2164.73 | 279.39 | 138.51 |
| | 6.83 | 6.54 | 7.10 | 7.16 |

| State | EKF | Transformer | HLSTM | SALSTM |
|---|---|---|---|---|
| | 991.58 | 897.83 | 139.60 | 35.27 |
| | 2525.42 | 3614.90 | 357.24 | 158.67 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Z.; Shen, X.; Li, J.; Li, J.; Wu, X.; Yang, Y. Enhancing Integrated Navigation with a Self-Attention LSTM Hybrid Network for UAVs in GNSS-Denied Environments. Drones 2025, 9, 279. https://doi.org/10.3390/drones9040279

Wang Z, Shen X, Li J, Li J, Wu X, Yang Y. Enhancing Integrated Navigation with a Self-Attention LSTM Hybrid Network for UAVs in GNSS-Denied Environments. Drones. 2025; 9(4):279. https://doi.org/10.3390/drones9040279

Chicago/Turabian Style: Wang, Ziyi, Xiaojun Shen, Jie Li, Juan Li, Xueyong Wu, and Yu Yang. 2025. "Enhancing Integrated Navigation with a Self-Attention LSTM Hybrid Network for UAVs in GNSS-Denied Environments" Drones 9, no. 4: 279. https://doi.org/10.3390/drones9040279

APA Style: Wang, Z., Shen, X., Li, J., Li, J., Wu, X., & Yang, Y. (2025). Enhancing Integrated Navigation with a Self-Attention LSTM Hybrid Network for UAVs in GNSS-Denied Environments. Drones, 9(4), 279. https://doi.org/10.3390/drones9040279