Abstract

Multi-UAV path planning algorithms are crucial for the successful design and operation of unmanned aerial vehicle (UAV) networks. While many network researchers have proposed UAV path planning algorithms to improve system performance, an in-depth review of multi-UAV path planning has not been fully explored yet. The purpose of this study is to survey, classify, and compare the existing multi-UAV path planning algorithms proposed in the literature over the last eight years in various scenarios. After detailing classification, we compare various multi-UAV path planning algorithms based on time consumption, computational cost, complexity, convergence speed, and adaptability. We also examine multi-UAV path planning approaches, including metaheuristic, classical, heuristic, machine learning, and hybrid methods. Finally, we identify several open research problems for further investigation. More research is required to design smart path planning algorithms that can re-plan pathways on the fly in real complex scenarios. Therefore, this study aims to provide insight into the multi-UAV path planning algorithms for network researchers and engineers to contribute further to the design of next-generation UAV systems.

1. Introduction

Path planning for swarms of unmanned aerial vehicles (UAVs) is significantly complex in terms of navigation and management. Multi-UAV path planning combines individual UAV route planning, which helps to finish the operation in a complicated environment [1]. The task entails identifying the optimal route for UAVs to travel from their initial location to their destination, considering different constraints and goals. Due to their limited energy capacity, UAVs require an energy-efficient method to ensure the network’s durability and mission completion [2,3]. The system first collects environmental data from its surroundings before efficiently determining a route for all aerial vehicles. Using swarms reduces flight duration, leading to lower operational expenses [4]. The field of swarm UAVs is incredibly intriguing and offers numerous important advantages [5]. Swarm robots can function effectively in large numbers so that the system can handle the failure of individual robots. Even if one robot malfunctions or is removed from the swarm, the rest of the robots can continue to operate effectively and complete the mission, usually without a significant decrease in performance [6,7]. Such a swarm reduces the requirement for human involvement in hazardous situations [8]. Recent approaches concentrate on achieving autonomous control across the entire swarm. Efficient calculation of flight trajectories with minimal cost is essential [9]. Low-altitude UAVs frequently navigate places highly inhabited by obstacles such as buildings, trees, power lines, and infrastructure. Because of these obstructions, UAVs must perform precise maneuvers to prevent collisions [10]. The flight path calculations must account for these obstacles and the anticipated locations of all UAVs in the swarm to avoid collisions among fleet members. Using multiple Unmanned Aerial Vehicles (UAVs) offers clear benefits compared to a single-UAV system. 
A multi-UAV system is particularly valuable in extensive or widespread disaster scenarios, where a single UAV’s capabilities may be insufficient due to limited range and coverage. Real-time data sharing among UAVs enables a more thorough understanding of the operational environment, helps to identify and avoid potential hazards, and improves overall flight safety [11]. Advanced sensors and processing power are necessary for real-time detection of obstacles. Finding an efficient route is essential for multi-UAV scenarios involving high speed, distance coverage, and other operational limitations [12]. Many important factors need to be considered when planning a route for a swarm of UAVs. These include navigating obstacles, efficient communication, teamwork, prioritizing safety and dependability, energy conservation, flexibility in changing surroundings, simplified system design, reduced computational demands, and the capability to operate at various altitudes. The subsections below discuss the research challenges, scope, and contributions of this study, in addition to providing a summary of existing algorithms.

1.1. Research Challenges

Determining an obstacle-free route in an unfamiliar area is challenging for a multi-UAV path planning algorithm. Network researchers are currently showing great interest in multi-UAV path planning. There are numerous ongoing research studies, and experts are emphasizing the need for additional analysis in diverse environments. When planning a path for swarm UAVs, factors such as energy efficiency, effectiveness, control, cooperation, and safety must be considered. These factors play a crucial role in achieving the primary objective of operating a swarm of UAVs [13]. Intelligent multi-UAV path planning algorithms must be capable of preventing collisions between UAVs [14,15]. A crucial component of path planning algorithms involves lowering the system’s computational cost [16]. A slow convergence speed makes an algorithm inefficient for UAVs that must complete their tasks [17]. Low algorithmic complexity also helps in finding the best route [18].

1.2. Research Scope and Contribution

In this research, we address the following two research questions.

Research Question 1: What comprehensive review paper can be formulated on multi-UAV path planning? To address this research question, we conducted a comprehensive literature survey by selecting more than 100 existing relevant articles published in academic journals and conference proceedings.

Research Question 2: What can be done to classify the existing research on multi-UAV path planning approaches? The aim of this research paper is to provide a comprehensive review of various approaches to multi-UAV (swarm) path planning. The research on swarm UAV path planning is vital for the advancement of autonomous systems and the improvement of their effectiveness in real-world applications. The expected outcomes include improved algorithms for path planning, a better understanding of multi-UAV coordination, and practical solutions that enhance operational efficiency and effectiveness. By organizing research into these categories, researchers can clearly understand multi-UAV path planning, identify gaps in current knowledge, and highlight areas for future exploration. Research into adaptive path planning can improve the system’s handling ability in dynamic environments. Thus, in this paper, we detail its relevance and potential impact, as well as the benefits it offers to both the wider academic and industrial communities for implementations.

The primary motivation for this paper is to assemble various existing algorithms used in research on multi-UAV path planning in various scenarios together in one paper. This research can help network engineers and researchers select the best algorithm for their required missions, comparing the exploration and exploitation of all the methods. This work presents the results of research and analysis on multi-UAV path planning conducted over the last eight years (2017 to 2024) using well-known databases such as Google Scholar, Science Direct, Scopus, IEEE Xplore, Elsevier Science Direct, the MDPI platform, and the Wiley Online Library. Search terms used in this paper include machine learning for multi-UAV path planning, hybrid approach for multi-UAV path planning, heuristics for multi-UAV path planning, meta-heuristics for multi-UAV path planning, survey, review, and overview of multi-UAV path planning, as well as classical approaches for multi-UAV path planning. The main contributions of this paper are outlined as follows:

- We critically review more than 100 published papers on UAV path planning. To this end, we carefully selected relevant refereed articles from scholarly journals and conference proceedings.

- We classify the existing research on UAV path planning based on multi-UAV algorithms and features. To this end, we focus on a survey of various approaches, including metaheuristic, classical, heuristic, machine learning, and hybrid methods. This significant work contributes to the design and development of next-generation UAV systems.

- We identify and discuss open research problems, including multi-UAV path planning, focusing on machine learning and hybrid algorithms for practical application scenarios such as disaster rescue and recovery management.

1.3. Summary of Existing Algorithms

A summary of existing algorithms is presented in Table 1. The selected algorithms are presented in column 1. Columns 2, 3, 4, 5, 6, and 7 show the problems addressed, limitations, environmental type, extensive area scalability, scenario, and corresponding references, respectively. Many route planning algorithms ignore some parameters. The Hexagonal Area Search Deep Q-Network (HAS-DQN) is shown in the first row of Table 1. It addresses the problems of energy efficiency and overlapping coverage during route planning, but it overlooks the computation of complexity and costs. This approach can be used to collect data in a dynamic environment that is not scalable over a large area. The different degrees of multi-UAV height are not considered, but the whale-inspired deep Q-network (WDQN) focuses on issues associated with complicated dynamic environments. This technique can be utilized for emergency rescue. However, it is not scalable over a wide area. The multi-objective deep reinforcement learning (MODRL) technique does not account for communication between UAVs and the GCS. This method can be used in catastrophes and mountainous situations and is scalable over a wide area. The distributed formation algorithm (row 4) considers the overfitting of complicated problems, but the energy efficiency aspect is not considered. This technique is applicable in 270 unseen instances and is not scalable in vast areas. The DQN method (Row 5) ignores convergence speed, but it considers battery constraints. This approach can be used to gather data in restricted areas; however, it is not scalable to huge areas. The Genetic Algorithm (GA) and homotopic algorithm consider UAVs’ safe return from a limited area, but energy efficiency is not considered (see row 6). This approach can be used to recover other UAVs using a controller UAV and is scalable over a wide area. 
Multi-objective particle swarm optimization with multi-mode collaboration based on reinforcement learning (MCMOPSO–RL) does not consider system resource requirements (see row 7). This technique can control many static barriers in a complex situation and is scalable over a wide region.

Table 1.

Summary of existing algorithms.

Fermat point-based grouping particle swarm optimization (FP-GPSO) (row 8) considers optimal path finding but excludes communication between UAVs and the GCS. Although the experiment only covers a short area, this algorithm allows swarms to fly over a long distance with both carrier and parasite UAVs. The hybrid improved particle swarm optimization (PSO) model and the Gaussian pseudospectral method (GPM) consider flight time; however, as indicated in Table 1, row 9, UAV height and system resource requirements are not considered. This technique can shorten flying times in an environment with numerous obstacles, but it is not designed for large-area scalability. Row 10 (Table 1) shows that the hybrid method that pairs model predictive control (MPC) with particle swarm optimization (PSO) considers search with shifting targets but not energy efficiency or UAV height. This approach can be used to find moving targets; however, it is not designed for broad area scalability. The hybrid Spatial Refined Voting Mechanism (SPVM) and Particle Swarm Optimization (PSO) algorithms examine concerns about convergence and local optima, but energy efficiency is not addressed (see row 11). This algorithm improves search quality in the 4D environment, avoids dangers and obstacles, and is scalable in vast areas. The hybrid PPSwarm algorithm, which pairs Particle Swarm Optimization (PSO) with Rapidly exploring Random Trees (RRT) (row 12), considers path discovery in narrow passageways but neither height nor energy efficiency. This approach can be applied to tiny passages with static impediments and is scalable in significant areas. Table 1, row 13, illustrates that a bioinspired neural network and improved Harris hawk optimization (BINN-HHO) were employed to evaluate static obstacle avoidance in a mountain scenario, with moving obstacles omitted.
This technique is scalable over a wide area and can be used in mountainous regions with long, unstable paths and poor moving-obstacle avoidance capabilities. Comprehensive learning dynamic multi-swarm particle swarm optimization (CL-DMSPSO) handles issues in complex environments, but it does not address communication between UAVs and the GCS (Table 1, row 14). This technique can reduce path length, avoid collisions in dynamic environments, and scale across a wide area. Table 1, row 15, reveals that the Non-Dominated Sorting Genetic Algorithm multi-objective Evolutionary algorithm (NSGA multi-objective EA) includes convergence time and communication between UAVs and the GCS but not barriers in an unknown environment. This algorithm does not scale well in extensive areas and can be utilized for data collection, communication with the Ground Control Station (GCS), and surveillance in dynamic settings.

1.4. Paper Organization

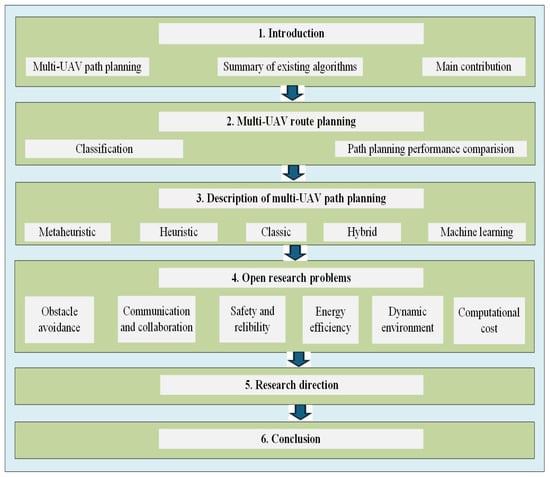

The structure and organization of this paper are shown in Figure 1. The multi-UAV path planning algorithms are discussed in Section 2, which focuses on classifying and comparing multi-UAV path planning systems. Section 3 explores various approaches, including metaheuristic, heuristic, classic, machine learning, and hybrid algorithms. The open research problems are discussed in Section 4. The research directions are highlighted in Section 5, and a brief conclusion in Section 6 ends the paper.

Figure 1.

The structure and organization of this paper.

2. Multi-UAV Path Planning Algorithms

A multi-UAV path planning model minimizes the path length, minimizes collisions with obstacles and between UAVs, reduces communication requirements for UAVs, improves communication quality in complex environments, simplifies system complexity, and shares real-time data between UAVs and the GCS [32]. The subsections below discuss the classification of multi-UAV path planning and compare existing path planning algorithms.
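The objectives listed above are often folded into a single cost that a planner minimizes. The following is only a minimal sketch under our own assumptions: the function names, the weights `w_len` and `w_col`, the circular obstacle model, and the time-synchronized waypoint lists are hypothetical and are not taken from any of the surveyed papers. It combines total path length with a penalty for obstacle and inter-UAV separation violations.

```python
import math

def path_length(path):
    """Sum of straight-line distances between consecutive waypoints."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))

def swarm_cost(paths, obstacles, safe_dist=1.0, w_len=1.0, w_col=100.0):
    """Weighted cost of a set of UAV paths (illustrative assumption).

    paths     : one waypoint list per UAV; waypoint k of every path is
                assumed to be reached at the same time step
    obstacles : list of (centre, radius) circles
    """
    length = sum(path_length(p) for p in paths)
    violations = 0
    # Penalize waypoints that fall inside an obstacle.
    for p in paths:
        for point in p:
            for centre, radius in obstacles:
                if math.dist(point, centre) < radius:
                    violations += 1
    # Penalize pairs of UAVs that are too close at the same time step.
    for i in range(len(paths)):
        for j in range(i + 1, len(paths)):
            for a, b in zip(paths[i], paths[j]):
                if math.dist(a, b) < safe_dist:
                    violations += 1
    return w_len * length + w_col * violations
```

A large `w_col` relative to `w_len` makes any collision dominate the cost, so a minimizer prefers longer but safe routes. Other objectives named in the text, such as communication quality, would enter as further weighted terms.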

2.1. Classification of Multi-UAV Path Planning Algorithms

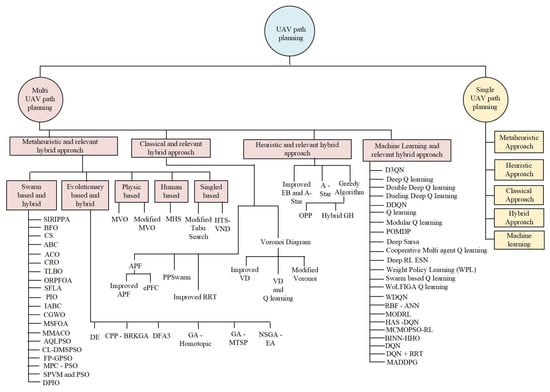

The classification of multi-UAV path planning is shown in Figure 2. Numerous algorithms exist to plan routes for swarm UAVs. Multi-UAV operations utilize metaheuristic, heuristic, classical, machine learning, and hybrid techniques. Metaheuristic algorithms encompass swarm-based, evolutionary, physical, human, and single-UAV-based approaches. Swarm-based algorithms include the Secondary Immune Responses based Immune Path Planning Algorithm (SIRIPPA), Artificial Bee Colony (ABC), Ant Colony Optimization (ACO), Fermat point-based grouping particle swarm optimization (FP-GPSO), Coral Reef Optimization (CRO), Teaching–Learning-Based Optimization (TLBO), the Optimal reference point-based Fruit Fly Optimization Algorithm (ORPFOA), Cuckoo Search (CS), Bacteria Foraging Optimization (BFO), the Shuffled Frog Leaping Algorithm (SFLA), Pigeon-Inspired Optimization (PIO), the Multiple Swarm Fruit Fly Optimization Algorithm (MSFOA), Improved Artificial Bee Colony (IABC), Maximum–minimum ant colony optimization (MMACO), Chaotic Gray Wolf Optimization (CGWO), and comprehensive learning dynamic multi-swarm particle swarm optimization (CL-DMSPSO). The Multi-Verse Optimizer (MVO) is a physics-based metaheuristic technique [34,35]. The Modified Harmony Search and Improved Harmony Search Algorithm are human-based metaheuristic algorithms [36]. Genetic Algorithm–Multiple Traveling Salesman Problem (GA-MTSP) and the Coverage Path Planning–Biased Random Key Genetic Algorithm (CPP-BRKGA) are instances of hybrid algorithms that are based on evolutionary biology. Adaptive Q-Learning-based Particle Swarm Optimization (AQLPSO) is a hybrid algorithm based on swarms. The Improved Artificial Potential Field (IAPF), Artificial Potential Field (APF), and Improved Rapidly Exploring Random Tree (IRRT) are classical approaches [37]. PPSwarm (PSO: Particle Swarm Optimization; RRT: Rapidly Exploring Random Trees) is an example of a hybrid classical algorithm.
There are various forms of heuristics, including the Greedy Algorithm, A-star, Optimal Path Planning (OPP), and Hybrid Greedy Heuristic (GH). An example of a hybrid heuristic approach is the A-Star algorithm with an elastic band. One area of machine learning is reinforcement learning. Path planning techniques for swarm UAV operations heavily rely on reinforcement learning. The Hexagonal Area Search Deep Q Network (HAS-DQN), Q learning with Win-or-Learn Fast policy and IGA (WoLFIGA), multi-objective deep reinforcement learning (MODRL), Partially Observable Markov Decision Process (POMDP), Deep Reinforcement Learning Echo State Network (Deep-RL-ESN), Deep Sarsa algorithms, whale-inspired deep Q network (WDQN), multi-objective particle swarm optimization algorithm with multi-mode collaboration based on reinforcement learning (MCMOPSO-RL), Dueling double deep Q-networks (D3QN), and Radial Basis Functions–Artificial Neural Network (RBF-ANN) are instances of hybrid algorithms for machine learning [21,38,39].

Figure 2.

Classification of multi-UAV path planning algorithms.

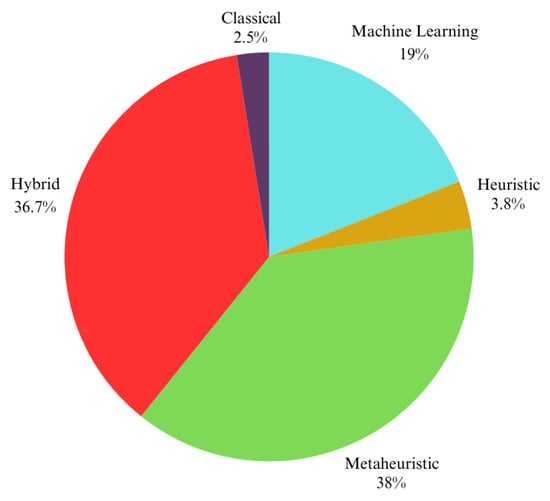

Figure 3 displays the annual published articles on multi-UAV path planning algorithms from 2021 to 2025. According to the statistics, metaheuristic-based approaches account for the greatest share of multi-UAV path planning algorithms, followed by hybrid algorithms, machine learning-based algorithms, heuristic-based algorithms, and classical algorithms. Google Scholar, Science Direct, Scopus, IEEE Xplore, Elsevier Science Direct, the MDPI platform, and the Wiley Online Library are the sources of these data.

Figure 3.

Published papers on multi-UAV path planning algorithms.

2.2. Existing Path Planning Algorithms

Table 2 compares various multi-UAV route planning strategies based on UAV time consumption, computational cost, system complexity, convergence speed, adaptability in challenging environments, path smoothness, availability of an online algorithm, extensive area scalability, and obstacle avoidance capability. The algorithms are compared using experimental data from several publications. For example, hybrid genetic algorithms and MTSP reduce flight paths but are not cost-effective. Although they can scale over a vast region, path smoothness and obstacle avoidance capacity were not taken into account in this study, and they are not online algorithms. The discrete pigeon-inspired optimization (DPIO) algorithm is simple to implement, but it requires more time to run. The study did not consider path smoothness and obstacle avoidance capacity. Despite the algorithm’s ability to scale across a large area, it is not an online algorithm. The Chaotic Gray Wolf Optimization (CGWO) algorithm provides lower system complexity and cost, but its convergence is slow. Path smoothness and obstacle avoidance capability were taken into account in the study; although it can scale across a wide region, it is not an online algorithm. Maximum–minimum ant colony optimization (MMACO) converges quickly. However, the complexity of the system is considerable. Path smoothness was not taken into account in the study; although it can scale across a wide region and has obstacle avoidance capability, it is not an online algorithm. Although improved PSO algorithms speed up convergence, they are unable to adapt to dynamic conditions. Path smoothness and obstacle avoidance capability were taken into account in this study; nonetheless, it is not an online method and is not scalable over a vast region. Although it performs better, the hybrid DQN and RRT algorithm slows down as the number of obstacles increases. The study did not consider obstacle avoidance and path smoothness.
It is a scalable online method that works over a wide geographic area. The deep reinforcement learning algorithm has a fast convergence speed, but the computing cost is high. The study considered path smoothness and obstacle avoidance capabilities; it is an online approach that is scalable across a large geographic area. Although the reinforcement learning algorithm has a straightforward system, it takes a long time to compute. It is not an online technique, and the smoothness of the route is not considered. It cannot be scaled over a large geographical area. However, it is capable of avoiding obstacles. The system complexity and computational cost of the deep reinforcement learning algorithm are significant. It cannot avoid obstacles and does not consider the smoothness of the path. It is scalable across a wide geographic area and is an online method. The Improved Artificial Bee Colony (IABC) algorithm has low system complexity and cost. It does not take path smoothness into account, and it is not scalable across a wide geographic area. This method is online and can avoid obstacles. The improved artificial potential field (IAPF) algorithm requires substantial time, has high system complexity, and is costly, but it converges quickly. It is not an online algorithm and does not consider path smoothness. It can avoid obstacles and is scalable over a wide geographic area. Multi-Agent Deep Deterministic Policy Gradient (MADDPG) can plan a path for a swarm of UAVs in a complicated environment. In addition to not being an online algorithm, it does not consider path smoothness. It is also not scalable over a wide geographic area and cannot avoid obstructions. A performance comparison of existing multi-UAV path planning algorithms is presented next.

Table 2.

Performance comparison of existing multi-UAV path planning algorithms.

3. Description of Multi-UAV Path Planning Approaches

An individual aerial vehicle can perform limited operational tasks due to its limited energy and strength. In a complex environment, a multi-UAV system is required to complete the task with adequate strength, accuracy, and flexibility [50]. Several multi-UAV path planning algorithms exist, including metaheuristic, heuristic, classical, machine learning, and hybrid approaches. Each category offers several algorithms that can be used to plan multi-UAV routes. The subsections below discuss the multi-UAV path planning categories and some of the algorithms from each category.

3.1. Metaheuristic Approach

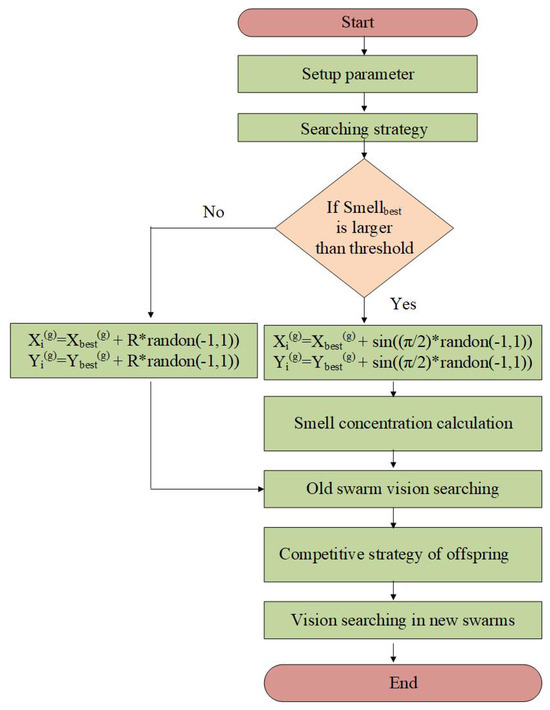

The metaheuristic technique is a prominent approach to multi-UAV path planning. There are numerous types of these strategies, including swarm-based, evolutionary, physics-based, human-based, and single-UAV-based algorithms. Swarm-based algorithms include PSO, FOA, ACO, and ABC. Many hybrid algorithms are now widely utilized in conjunction with metaheuristic algorithms. The multiple swarm fruit fly optimization algorithm (MSFOA) for multi-UAV path planning is shown in Figure 4. Fruit flies have excellent vision and a keen sense of smell. They use their sense of smell to identify scents in the air and their sense of sight to find food and other flies. MSFOA plans the multi-UAV route using the fruit fly concept. The fruit fly population size, maximum number of iterations, swarm size, threshold, and genetic coefficient of hybridization are all set up during the initialization step of the MSFOA process. The MSFOA flow chart tracks the x and y coordinates of the g-th old swarm’s location, the x and y coordinates of the i-th fruit fly in the g-th old swarm, and the smell concentration of the original fruit fly swarm site. Equations (1) and (2) are used if the value is greater than the threshold. If not, Equations (3) and (4) are used. After that, the algorithm computes the smell and performs the vision search in the old swarms. As part of the competitive tactics of the offspring phase, the procedure determines the x and y coordinates of the i-th fruit fly in the g-th new swarm. It then performs the vision search in the new swarm. The competitive process is then repeated. The optimal path for the multi-UAV system is then determined [57].
SIRIPPA is modeled on the secondary immune response in biology, which is characterized by its speed and effectiveness. Based on previous contacts with obstacles or targets, the SIRIPPA algorithm can change its trajectories for multi-UAV scenarios. SIRIPPA divides multi-UAV route planning into two immunity phases. During the primary phase, this algorithm constructs an immune model using previous information to avoid obstacles efficiently. Each UAV remembers past encounters, such as obstacle locations and successful paths. In the second phase, the algorithm utilizes this memory to adjust future paths, steering clear of previously encountered obstacles. Combining these two phases, SIRIPPA enhances multi-UAV route planning [58]. Biological systems also inspire another algorithm called Symbiotic Artificial Immune System (SAIS), which can effectively handle large population sizes. This method demonstrates superior performance in finding an optimal solution. The SCPIO algorithm was created to minimize overall costs by implementing a timestamp segmentation method that enhances the coordination time, convergence abilities, and velocity of multiple UAVs [59]. Inspired by mutant pigeons, the optimization algorithm can reduce system complexity, ultimately enhancing global search capabilities. It balances exploitation and exploration, resulting in increased stability. Employing a timestamp segmentation method minimizes system costs and guarantees obstacle-free route planning for multiple aerial paths [60]. The ORPFOA multi-UAV route planning algorithm shows improved convergence speed, with low cost and time consumption required to complete the operation [61]. By employing quintic Pythagorean hodograph (PH) curve techniques, the DCPSO algorithm can overcome curvature obstacles.

Figure 4.

Flow chart illustrating the multiple-swarm fruit fly optimization algorithm.

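Equations (1)–(4) cover the two cases of the MSFOA update: Equations (1) and (2) apply if the value is greater than the threshold, and Equations (3) and (4) apply otherwise. In these equations, R is the dwindled parameter of the search step.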

This system possesses curvature continuity and rational intrinsic properties, making it adept at efficiently resolving the issue of local optima. Enhancing GWO techniques can result in improved energy and cost efficiency through the development of population initialization, enhancement of the decay factor, and unique position updating. These techniques can effectively prevent constraints in prohibited areas. The enhanced artificial bee colony algorithm improves system convergence and reduces computational requirements. This approach is efficient in emergency medical rescue operations in highly obstacle-ridden and catastrophic environments, reducing system complexity and improving efficiency [62]. The Cooperative Co-Evolutionary Algorithm (CCEA) enhances the convergence speed by minimizing the evolution process at each stage, thereby reducing system complexity. An analysis conducted with 500 aerial iterations revealed superior results. This algorithm is designed to operate effectively in complex and dynamic environments.

A multi-objective evolutionary algorithm achieves an improved convergence speed using weighted random techniques, leading to enhanced search capability. The algorithm simplifies the system, reducing computational demands. Despite experimenting with various conditions, the results remain unsatisfactory in complex environments. Further research is necessary to enhance the algorithm’s performance in complex settings [63]. The efficient management of obstacles can be achieved by employing a progressive weight vector system in a multi-objective evolutionary algorithm [64]. Enhanced multi-UAV route planning convergence speed can be accomplished by implementing techniques to improve the initial population. Accurate and productive scheduling can be achieved using a genetic algorithm. Efficient resolution of the problem of UAV safety and security can be achieved by employing a homotopic UAV recovery technique.
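To make the smell and vision phases of fruit fly optimization described earlier in this subsection more concrete, the following is a minimal sketch of a basic single-swarm search loop. It is our simplified illustration and not the MSFOA of [57]: it uses one swarm, evaluates candidate positions directly with a user-supplied cost function instead of a smell concentration judgment value, and keeps the search step fixed. The names `foa_minimize`, `search_step`, and `swarm_size` are hypothetical.

```python
import random

def foa_minimize(cost, start, search_step=1.0, swarm_size=20,
                 iterations=100, seed=0):
    """Basic fruit-fly-style search for a 2D minimum (illustrative only)."""
    rng = random.Random(seed)
    best_x, best_y = start
    best_cost = cost(best_x, best_y)
    for _ in range(iterations):
        # Smell phase: flies scatter randomly around the swarm location.
        flies = [(best_x + search_step * rng.uniform(-1.0, 1.0),
                  best_y + search_step * rng.uniform(-1.0, 1.0))
                 for _ in range(swarm_size)]
        # Vision phase: the swarm flies to the best fly if it improves.
        candidate = min(flies, key=lambda p: cost(p[0], p[1]))
        candidate_cost = cost(candidate[0], candidate[1])
        if candidate_cost < best_cost:
            best_x, best_y = candidate
            best_cost = candidate_cost
    return (best_x, best_y), best_cost
```

In a path planning setting, the two coordinates would typically be replaced by a vector of waypoint coordinates and `cost` by a route cost. A shrinking search step and several cooperating swarms are the kinds of refinements that multi-swarm variants add.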

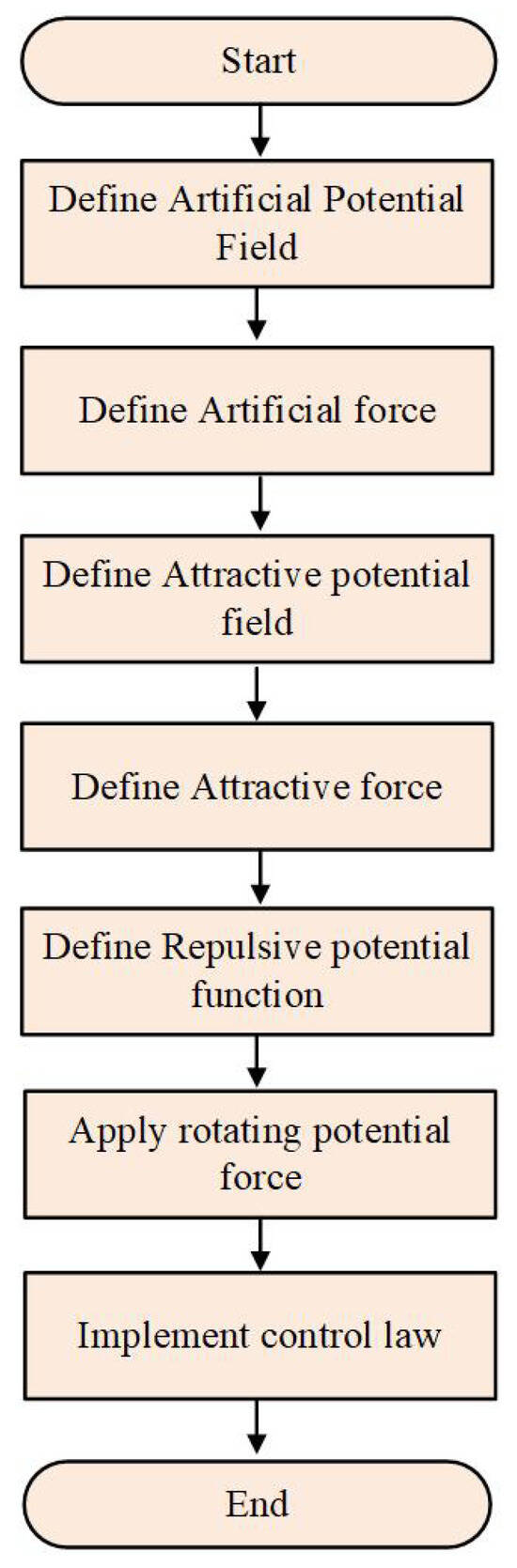

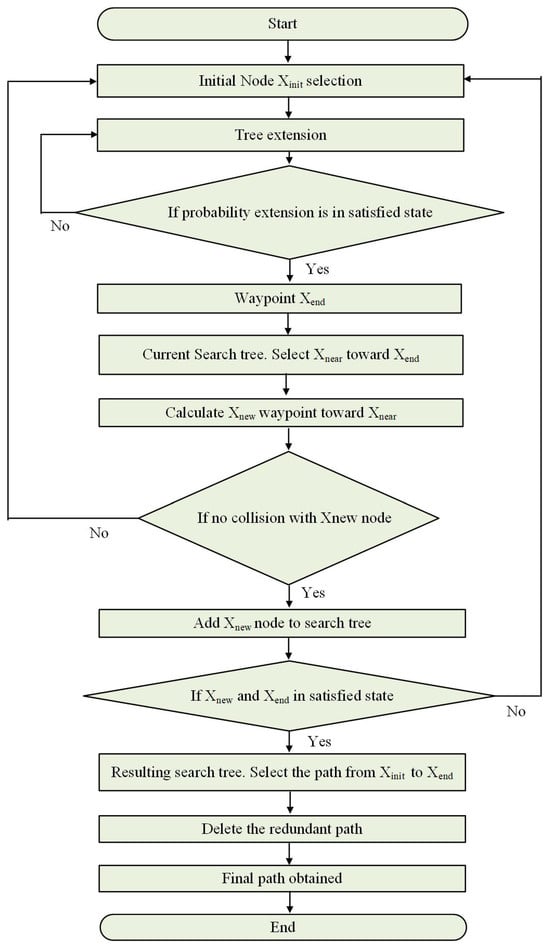

3.2. Classical Approach

Classical algorithms use global path planning, which can be applied to known and unknown environments [65]. Very few classical approaches are used in multi-UAV path planning. Examples of classical approaches include APF, improved RRT, and the Voronoi diagram. A flow chart of an improved APF-based multi-UAV path planning algorithm is shown in Figure 5. The artificial potential field is defined at the first stage of the process. Then, the force of artificial potential is determined. Next, a function for a desirable artificial potential field is defined. The force of attraction is also described. The function of repulsive potential is then defined. A spinning potential force is then used. A control law is implemented to enhance convergence and stability. Then, the UAV arrives at its target [66]. The Artificial Potential Field (APF) method enhances the attractive potential field function, repulsive field function, and temporary virtual target strategy to avoid constraints and collisions. The system has the capability to function in a dynamically constrained environment. In future experiments, energy efficiency needs to be taken into consideration when using this technique. Using Voronoi graph techniques can enhance the convergence speed of swarm UAV route planning. Region decomposition techniques help in reducing system complexity and computational requirements. These techniques can transform a multi-UAV system into a single-UAV system. Further research should experiment with this method in complex and constrained environments [67]. The Improved Rapidly Exploring Random Tree (IRRT) algorithm improves system efficiency by eliminating redundant nodes on the path, making it more cost-effective. This system can navigate constraints in a dynamic environment. A flow chart of an improved RRT-based multi-UAV path planning algorithm is shown in Figure 6. In the first stage, the principal node attaches to the tree. After that, it extends the tree.
If the probability of extension is satisfied, then it selects the waypoint and searches the tree until the node closest to the waypoint is located. It then determines the direction of the new node relative to this nearest node. If the new node causes no collision, it is added to the search tree. Otherwise, the waypoint selection is repeated. If the distance condition between the new node and the goal is met, the search tree is complete, and the node selection operation starts to select the route from the start node to the goal node. The final multi-UAV path is discovered by removing the superfluous path segments [68].

Figure 5.

Flow chart illustrating an improved artificial potential field algorithm.
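The attractive and repulsive forces that underlie the APF method can be sketched for a single UAV as follows. This is a textbook-style illustration under our own assumptions, not the improved APF of [66]: it omits the spinning potential force, the temporary virtual target strategy, and the control law, and the gains `k_att` and `k_rep`, the influence radius, and the fixed step size are hypothetical values.

```python
import math

def apf_step(pos, goal, obstacles, k_att=1.0, k_rep=1.0,
             influence=2.0, step=0.1):
    """Move one fixed-length step along the total potential-field force."""
    # Attractive force pulls the UAV straight toward the goal.
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    # Repulsive force pushes away from obstacles inside the influence radius.
    for ox, oy in obstacles:
        d = math.hypot(pos[0] - ox, pos[1] - oy)
        if 0.0 < d < influence:
            mag = k_rep * (1.0 / d - 1.0 / influence) / d ** 2
            fx += mag * (pos[0] - ox) / d
            fy += mag * (pos[1] - oy) / d
    norm = math.hypot(fx, fy)
    if norm == 0.0:
        return pos  # forces cancel: the UAV is stuck in a local minimum
    return (pos[0] + step * fx / norm, pos[1] + step * fy / norm)

def apf_path(start, goal, obstacles, goal_tol=0.2, max_steps=1000):
    """Follow the field until the goal tolerance or the step limit is hit."""
    path = [start]
    while math.dist(path[-1], goal) > goal_tol and len(path) <= max_steps:
        path.append(apf_step(path[-1], goal, obstacles))
    return path
```

For a swarm, the positions of the other UAVs can be appended to `obstacles` at every step so that fleet members repel one another. The plain method can stall where the forces cancel, which is the kind of local-minimum problem that improved variants are designed to address.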

Figure 6.

Flow chart of an improved rapidly exploring random trees algorithm.
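The tree-growing and redundant-node removal steps described for the improved RRT can be sketched as follows. This is a minimal 2D illustration under our own assumptions rather than the algorithm of [68]: obstacles are circles, the goal bias, step length, and tolerance are hypothetical values, and the pruning is a simple greedy shortcut between mutually visible nodes.

```python
import math
import random

def collides(p, obstacles):
    """True if point p lies inside any circular obstacle (centre, radius)."""
    return any(math.dist(p, c) <= r for c, r in obstacles)

def segment_free(a, b, obstacles, samples=10):
    """Check evenly spaced points on segment a-b for collisions."""
    return not any(
        collides((a[0] + (b[0] - a[0]) * t / samples,
                  a[1] + (b[1] - a[1]) * t / samples), obstacles)
        for t in range(samples + 1))

def rrt(start, goal, obstacles, bounds=(0.0, 10.0), step=0.5,
        goal_tol=0.5, goal_bias=0.1, max_iter=5000, seed=1):
    """Grow a tree from start; return a node list to the goal region or None."""
    rng = random.Random(seed)
    parent = {start: None}
    for _ in range(max_iter):
        # Select a waypoint: the goal with small probability, else random.
        sample = goal if rng.random() < goal_bias else (
            rng.uniform(*bounds), rng.uniform(*bounds))
        nearest = min(parent, key=lambda n: math.dist(n, sample))
        d = math.dist(nearest, sample)
        if d == 0.0:
            continue
        # Extend from the nearest node toward the waypoint by at most `step`.
        scale = min(step, d) / d
        new = (nearest[0] + (sample[0] - nearest[0]) * scale,
               nearest[1] + (sample[1] - nearest[1]) * scale)
        if new in parent or not segment_free(nearest, new, obstacles):
            continue
        parent[new] = nearest
        if math.dist(new, goal) <= goal_tol:
            path = [new]
            while parent[path[-1]] is not None:
                path.append(parent[path[-1]])
            return path[::-1]
    return None

def prune(path, obstacles):
    """Drop redundant nodes by jumping to the farthest visible node."""
    pruned, i = [path[0]], 0
    while i < len(path) - 1:
        j = len(path) - 1
        while j > i + 1 and not segment_free(path[i], path[j], obstacles, 50):
            j -= 1
        pruned.append(path[j])
        i = j
    return pruned
```

Calling `prune(rrt(start, goal, obstacles), obstacles)` gives a shorter waypoint list for one UAV. A multi-UAV planner would additionally need to check each new node against the planned positions of the other UAVs.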

3.3. Heuristic Approach

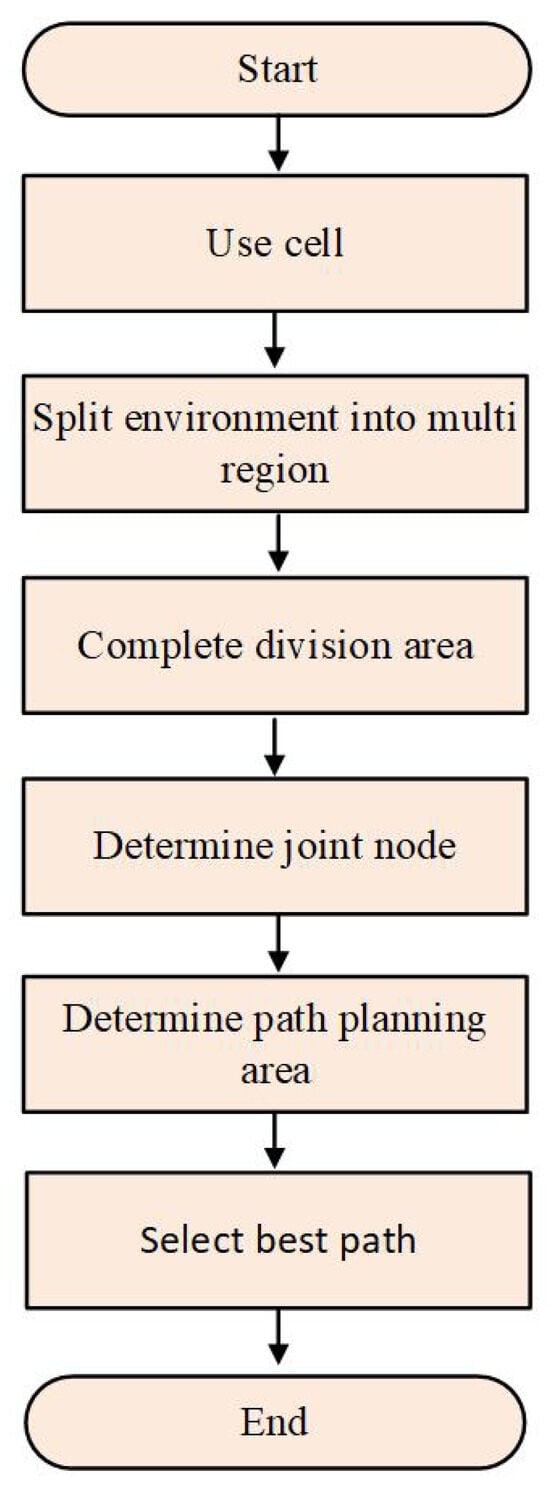

Heuristic algorithms are simple to run but have large computational overhead when used on a large scale. These algorithms can be divided into coverage path planning and optimal path planning algorithms [69]. Very few heuristic techniques are used in multi-UAV path planning. Examples of heuristic algorithms include A-star, the greedy algorithm, hybrid greedy heuristic (GH), and optimal path planning (OPP), which are all used in multi-UAV path planning. Hybrid enhanced heuristic algorithms include the elastic band and A-star algorithms. Elastic bands are utilized in this hybrid approach for general route planning. To smooth the initial path, the A-star algorithm employs local route planning [70]. Figure 7 shows a flow chart of the enhanced A* algorithm. The UAV region in this algorithm is a cell divided into multiple regions. Each UAV searches and covers the area that corresponds to the division. The system then determines the area for coverage for each UAV. The algorithm chooses the best route through the joint node [71].

Figure 7.

Flow chart of an enhanced A* algorithm.
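For reference, the basic A* search that the enhanced variants build on can be sketched on an occupancy grid. This is the textbook algorithm, not the region-division scheme of [71]; the 4-connected grid, unit move cost, and Manhattan heuristic are assumptions made for the example.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid where 0 is free and 1 is blocked."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {}
    g = {start: 0}
    while open_heap:
        _, cost, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = [cur]
            while cur in came_from:
                cur = came_from[cur]
                path.append(cur)
            return path[::-1]
        if cost > g[cur]:
            continue  # stale heap entry; a cheaper route to cur was already found
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable


grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
```

In a multi-UAV setting, one simple option is to run such a search once per UAV inside its assigned region, which is one way the computational load can be kept proportional to region size rather than to the whole map.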

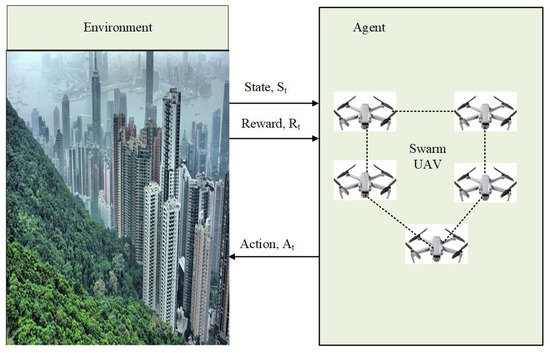

3.4. Machine Learning Approach

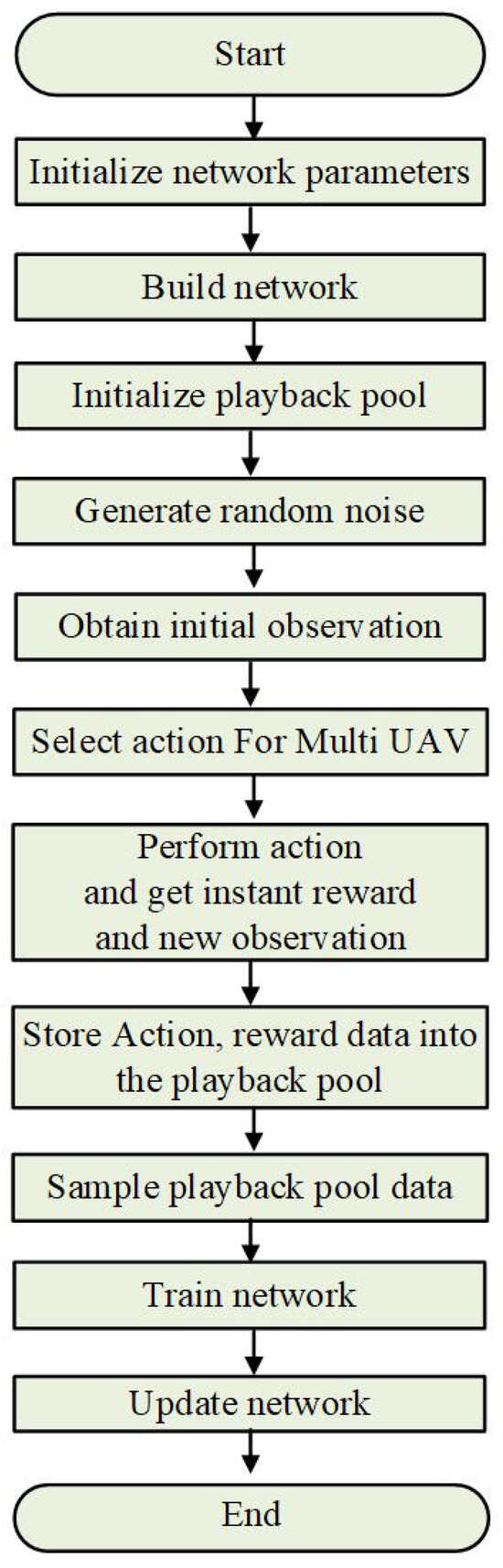

Machine learning is a prominent approach used in multi-UAV path planning. Numerous machine learning methods address a range of concerns associated with multi-UAV path planning, and many hybrid algorithms that incorporate machine learning perform well in this domain. Reinforcement learning is a prominent strategy among machine learning algorithms, and Q-learning is a prominent reinforcement learning technique. Combining Q-learning with various hybrid algorithms enhances multi-UAV path planning operations. Deep reinforcement learning combines reinforcement learning techniques with deep neural networks. The Deep Q Network (DQN) is a hybrid method that combines reinforcement learning and deep learning approaches. Examples of deep learning algorithms include artificial neural networks (ANNs) and convolutional neural networks (CNNs). Deep Q-learning, dueling deep Q-learning, and dueling double deep Q-learning are examples of deep reinforcement learning techniques. Figure 8 shows the process of a reinforcement learning technique. The decision-making process in reinforcement learning uses several key elements, namely the agent, environment, state, action, reward, policy, and value function, to plan a route. Reinforcement learning utilizes Markov decision processes to determine the multi-UAV path in a given environment. Figure 9 shows a flow chart of a deep reinforcement learning-based multi-UAV search technique. After the settings are initialized, the parameters for the critic and target actor networks are created. The playback pool is then initialized. After random noise is created for exploration, an action is chosen for each UAV and the first observation is obtained. The system then carries out the actions, earns an immediate reward, and obtains a new observation. The playback pool stores the actions, rewards, and observations. Finally, data sampled from the playback pool are used to train and update the networks [72,73]. 
The Double Deep Deterministic Policy Gradient (DDPG) algorithm performs better than the retrace mechanism in addressing energy consumption and convergence issues. Q-learning is a widely used reinforcement learning algorithm [74]. However, its overestimation issue can impair the system’s efficiency [75]. Q-learning performs well in balancing exploitation and exploration [76]. In swarm UAV operations, Q-learning suffers from high energy consumption [77,78]. Many other algorithms have been introduced to address the issues with Q-learning, such as deep Q-learning, which combines Q-learning with neural networks [79]. The adaptive conversion speed Q-learning (ACSQL) algorithm demonstrates improved performance in maximizing exploration efficiency [75]. A Q-learning-based method for planning a path that covers an entire area has also been proposed. This method integrates reinforcement learning to improve the ability to avoid constraints by leveraging global environmental data [80]. Computational performance is enhanced by combining the Q-learning algorithm with complete-coverage path planning [81]. Dueling deep Q-learning utilizes both global and local paths. The C51 Dueling Deep Q Network algorithm can generate multiple UAV route plans in a restricted area and improves system performance using Q-distribution techniques. An improved dueling deep Q network further enhances system performance by preventing the repetition of paths [82].
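The tabular Q-learning update and its balance between exploration and exploitation can be illustrated on a toy problem. The one-dimensional corridor, the reward values, and the hyperparameters below are all assumptions chosen for the example; none of them come from the surveyed papers.

```python
import random


def train_q_table(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Epsilon-greedy tabular Q-learning on a corridor; the goal is the last cell."""
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]  # actions: 0 = left, 1 = right
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # step limit per episode
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore
            else:
                a = max(range(2), key=lambda act: q[s][act])  # exploit
            s_next = max(0, s - 1) if a == 0 else s + 1
            done = s_next == goal
            reward = 1.0 if done else -0.01  # small step cost, bonus at the goal
            # Q-learning target: bootstrap from the best next action unless terminal.
            target = reward if done else reward + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
            if done:
                break
    return q


q_table = train_q_table()
policy = ["right" if row[1] > row[0] else "left" for row in q_table[:-1]]
print(policy)
```

The `max(q[s_next])` term in the target is the source of the overestimation issue mentioned above, since the same noisy estimates are used both to choose and to evaluate the next action. Double Q-learning variants separate those two roles.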

Figure 8.

Reinforcement learning algorithm.

Figure 9.

Flow chart illustrating a deep reinforcement learning-based algorithm.
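The playback pool used in the flow above (commonly called an experience replay buffer) can be sketched as a fixed-capacity store with uniform random sampling. The class and method names are hypothetical, and uniform sampling is an assumption; the cited works may use a different sampling scheme.

```python
import random
from collections import deque


class ReplayPool:
    """Fixed-capacity store of (obs, action, reward, next_obs) transitions."""

    def __init__(self, capacity, seed=None):
        # A bounded deque silently drops the oldest transition when full.
        self._items = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def store(self, obs, action, reward, next_obs):
        self._items.append((obs, action, reward, next_obs))

    def sample(self, batch_size):
        """Uniformly sample up to batch_size transitions without replacement."""
        k = min(batch_size, len(self._items))
        return self._rng.sample(list(self._items), k)

    def __len__(self):
        return len(self._items)


pool = ReplayPool(capacity=3, seed=0)
for step in range(5):
    pool.store(obs=step, action=0, reward=0.0, next_obs=step + 1)
print(len(pool), pool.sample(2))
```

Sampling at random from stored transitions, rather than training on them in the order they were collected, reduces the correlation between consecutive training samples.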

3.5. Hybrid Approach

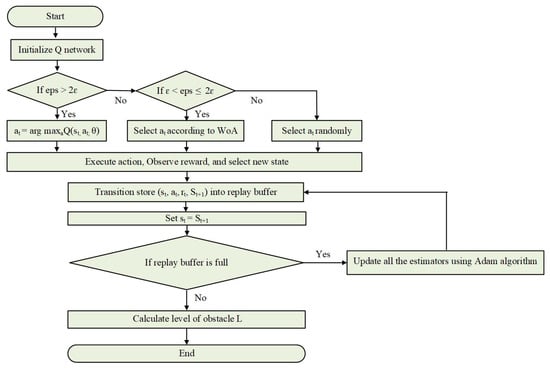

A hybrid algorithm is a valuable method in multi-UAV path planning. For example, a deep Q network approach uses a Markov decision process to model the path planning problem, taking into account path length, energy consumption, and obstacle avoidance. A deep neural network is used to approximate the Q-value function. A flow chart of the WDQN hybrid algorithm is shown in Figure 10. This multi-UAV hybrid route planning algorithm, called the whale-inspired deep Q-network (WDQN), combines the whale optimization algorithm (WoA) and the deep Q network (DQN). Incorporating WoA during DQN training balances exploitation and exploration in the DQN model, which improves learning efficiency and convergence speed. This algorithm offers improved obstacle-free paths in dynamic environments.

In the Q-value function, the quantities involved are the Q-network weight, the state at time t, and the action at time t. The Q network is initialized in stage one.

Figure 10.

Flow chart illustrating the whale-inspired deep Q-network hybrid algorithm.

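- If the greedy condition on eps is satisfied, Equation (5) is applied to select the action.

- Otherwise, if the second condition on eps is satisfied, the action is selected according to WoA.

- Otherwise, the action is selected randomly.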

Here, the selection depends on the greedy probability, and eps is a random number in (0, 1). First, the system performs the action, receives the reward, and observes the new state. The transition is then recorded in the replay buffer, and the current state is set to the new state. If the buffer is full, all the estimators are updated using the Adam optimizer to determine the obstruction level [20].

A technique for controlling UAV swarms, called position-based graph attention multi-agent potential field function learning, is based on multi-agent reinforcement learning (MARL). It gathers local data around each UAV to calculate the motion of multiple UAVs. An RL algorithm is used to navigate around obstacles and ensure cooperative motion in swarm UAV operations. The graph attention mechanism (GAT) enhances the ability of multiple UAVs to operate in dynamic environments [83].

Using a hybrid algorithm increases the system’s complexity, but a hybrid algorithm is necessary to enhance the system’s performance and capability. For instance, in multi-UAV route planning, maximum–minimum ant colony optimization (MMACO) combined with differential evolution (DE) successfully found the global optimal solution. These techniques use multiple colonies and sub-colonies to address the issue of global optima in multi-UAV route planning, enabling information sharing to improve overall system performance. A hybrid algorithm that combines the machine learning-based Q-learning algorithm with the metaheuristic-based PSO algorithm (also known as adaptive Q-learning-based particle swarm optimization) can improve system performance and convergence speed with lower system costs. This hybrid algorithm calculates the state, selects the action with the maximum Q value, and updates the Q value according to the reward gained from the specific task. Employing the PSO algorithm can enhance the system’s performance in a dynamic environment [84,85,86]. 
Another example of a hybrid algorithm combines the Voronoi diagram, a classical approach, with the machine learning-based Q-learning method and is effective in dynamic environments. This approach improves the initial Q value and reward process and is more effective in avoiding obstacles [87]. A hybrid method that combines metaheuristic-based hybrid tabu search with metaheuristic-based variable neighborhood descent (HTS-VND) improves computing performance and is efficient in data collection scenarios [88].
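The three-branch action selection described for the whale-inspired deep Q-network earlier in this subsection (greedy choice, WoA-guided choice, or random choice) can be sketched as follows. The exact conditions are not recoverable from the text, so the thresholds `p_greedy` and `p_woa` and the `woa_proposal` callable are hypothetical placeholders, and the greedy branch simply takes the action with the largest Q-value.

```python
import random


def select_action(q_values, woa_proposal, eps, p_greedy=0.7, p_woa=0.2, rng=None):
    """Pick an action index by one of three branches, depending on eps in (0, 1).

    p_greedy and p_woa are assumed thresholds, not values from the source.
    """
    rng = rng or random
    if eps < p_greedy:
        # Greedy branch: action with the highest estimated Q-value.
        return max(range(len(q_values)), key=q_values.__getitem__)
    if eps < p_greedy + p_woa:
        # Metaheuristic branch: defer to a WoA-style proposal (placeholder callable).
        return woa_proposal()
    # Fallback branch: uniform random exploration.
    return rng.randrange(len(q_values))


q_values = [0.1, 0.9, 0.3]
print(select_action(q_values, lambda: 2, eps=0.10))  # greedy branch
print(select_action(q_values, lambda: 2, eps=0.80))  # WoA branch
print(select_action(q_values, lambda: 2, eps=0.95))  # random branch
```

Routing part of the exploration budget through a metaheuristic proposal, instead of leaving it purely random, is one way such a hybrid can steer exploration toward promising actions.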

3.6. Summary of Multi-UAV Path Planning Approaches

This study discusses metaheuristic, heuristic, classical, machine learning, and hybrid algorithms for multi-UAV path planning. Metaheuristic algorithms are classified into several groups, including swarm, evolutionary, physical, human, and single-UAV-based approaches. Swarm algorithms optimize the path using computational approaches inspired by the collective behavior of bees, birds, and flies. Figure 2 illustrates many hybrid algorithms based on the swarm algorithm used in multi-UAV path planning. Many hybrid algorithms incorporate evolutionary algorithms to handle multi-UAV path planning issues. While the classical approach offers very few algorithms for multi-UAV path planning, the heuristic and hybrid approaches are also used. Various machine learning algorithms are applied as well. For instance, reinforcement learning and neural networks are used to solve some crucial path planning issues in complicated environments. Open research problems are discussed next.

4. Open Research Problems

Route planning for multi-UAVs is a complex task. Average height, energy consumption, obstacle avoidance capabilities, system complexity, convergence speed, and adaptability in dynamic environments influence the system development and implementation costs in a multi-UAV operation. The critical components of multi-UAV route planning are obstacle avoidance, communication and collaboration, safety and reliability, dynamic environments, energy efficiency, system complexity, computational cost, and requirements. Each of these important components is discussed in the subsections below.

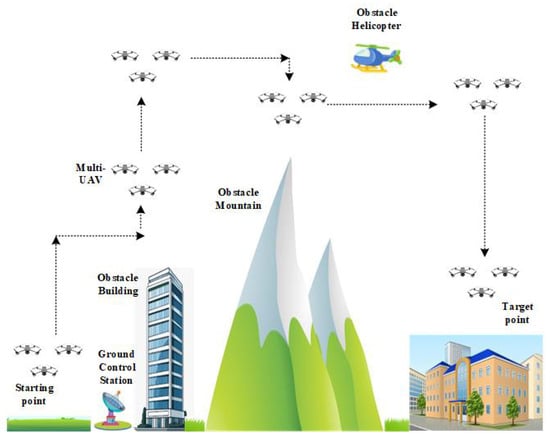

4.1. Obstacle Avoidance

Intelligent algorithms must be capable of adjusting real-time paths and addressing changing circumstances. The multi-UAV static and dynamic obstacle avoidance situation is depicted in Figure 11. Capturing images with a high overlap ratio, then using computer software to produce a 3D map of a target region is known as 3D map reconstruction for UAV path planning [89]. It is difficult to accurately construct a 3D map in a defective conflict warning area while avoiding collisions and ensuring the safety of the planned path [90]. When developing a multi-UAV route planning system, it is important to ensure that the algorithms can identify and avoid obstacles in intricate and congested environments. Path planning is crucial for determining the optimal obstacle-free route for a multiple-UAV operation. The use of 3D maps to facilitate the movement of various UAVs amidst fixed obstacles such as trees, buildings, poles, and towers, as well as dynamic obstacles like birds and planes, needs to be considered [91]. Research on high-altitude areas encompassing forests and high-rise buildings in urban areas must be considered [92]. For instance, multi-UAV operations alongside helicopters can be used in war zones. This paper aims to assist researchers in locating information on the creation of sophisticated sensing and perception methods and real-time obstacle avoidance algorithms.

Figure 11.

Multi-UAV obstacle avoidance scenario.

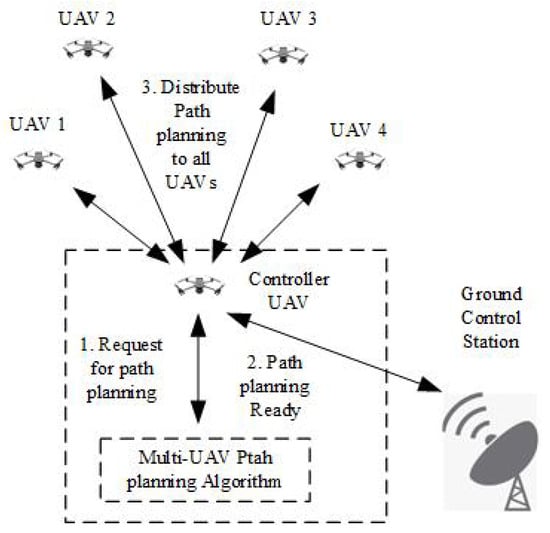

4.2. Communication and Collaboration

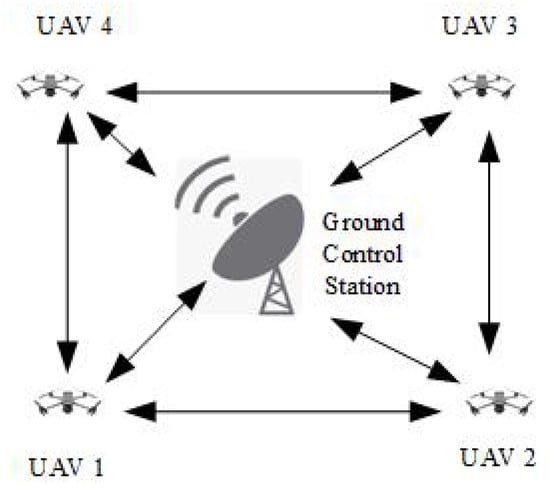

Managing UAV communications is crucial for preventing collisions and ensuring efficient coordination. As shown in Figure 12, the controller UAV communicates and collaborates with the GCS and other UAVs in a leader–follower model. New algorithms need to be developed to optimize coverage time. The system must also monitor the connectivity between UAVs and the ground control station. To guarantee redundancy, continuous multi-hop connectivity of many UAVs is necessary. Effective resource utilization is essential in order to avoid communication losses. Algorithms should be able to manage seamless communication for numerous aerial vehicles in a complex environment. Facilitating the sharing of drone data, position, and status is also required. One drone needs to act as controller to maintain the formation during swarm operations. The remaining airborne vehicles obey the commands of the controller [93]. In the event of controller failure, another drone is capable of carrying out the controller role. Decentralization of the controlling system should be possible with an advanced algorithm in the future [33,94]. The algorithm should be able to reduce communication time and packet loss. For instance, an attention mechanism in multi-agent reinforcement learning reduces communication delays. A consensus-based communication system can minimize information losses in multi-UAV path planning [95].

Figure 12.

Multi-UAV leader–follower model.

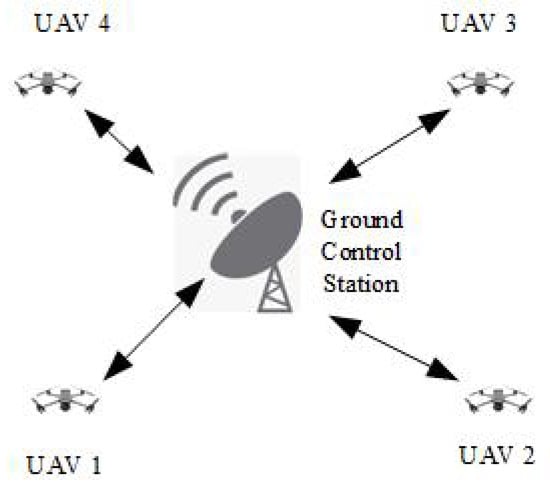

In a distributed scenario, each UAV controls key operations, makes decisions, and communicates with other UAVs to achieve the desired goals (Figure 13). Each UAV and the GCS communicate simultaneously.

Figure 13.

Multi-UAV distributed control scenario.

Figure 14 illustrates a decentralized situation where each UAV uses a path planning algorithm and communicates with the GCS.

Figure 14.

Multi-UAV decentralized control scenario.

4.3. Safety and Reliability

The safety and dependability of the swarm’s operation must be ensured, particularly in situations with potential malfunctions or unforeseen behavior. Multiple UAVs offer the advantages of a higher resource delivery capacity, strengthened flight security, and lower mission costs relative to a single-UAV system. Various key factors, such as environmental conditions (e.g., weather and terrain); mission complexity; and UAV performance in terms of maximum flight range, fuel consumption, and typical rescue capabilities, have significant impacts on rescue missions. When employing a multi-UAV system for disaster rescue, it is essential to allocate UAVs and combination modes and plan their flight paths more effectively. This survey paper aims to assist network researchers in developing robust algorithms that integrate redundancy and fault tolerance to manage UAV failures and ensure mission success [96,97].

4.4. Dynamic Environments

Adapting path planning algorithms for dynamic environments involves handling changing obstacles or target locations [98]. Planning UAV trajectories is difficult due to unfamiliar environments with static and dynamic obstacles. Multi-UAV path planning involves creating the best possible route for each UAV within the UAV swarm while considering constraints related to mission execution time and avoiding collisions [99]. This paper aims to facilitate the development of adaptive algorithms capable of real-time path planning in response to changing environmental conditions.

4.5. Energy Efficiency

UAVs need to balance energy consumption while still effectively planning their paths. The primary goal of multi-UAV path planning is to complete the coverage task as quickly as possible. Developing a path planning algorithm for swarm UAVs that minimizes path cost and achieves faster convergence speed is crucial [6]. Path planning should consider energy-efficient algorithms for optimization of flight paths to increase UAVs’ operational range and duration. In a multi-UAV operation, UAVs must communicate with one another to share tasks, coverage, surveillance, and real-time data collection. All UAVs need to interact with one another to avoid collisions and optimize trajectories. Developing decentralized algorithms so that each UAV can plan its routing in a multi-UAV scenario while communicating and conserving energy is a priority [100]. An example of an energy-efficient strategy is the energy-saving path planning algorithm based on reinforcement learning (ESPP-RL). This approach and dynamic battery management are used to determine the most energy-efficient flight path for UAVs [101]. A multi-UAV collaborative search algorithm based on the binary search algorithm (MUCS-BSAE) is used to spread a single UAV task to several UAVs, lowering the single UAV’s energy consumption and improving the system’s energy efficiency [102].

4.6. System Complexity and Computational Cost

Computational complexity increases with the number of UAVs, as well as the complexity of the environment. As the number of UAVs grows, the problem becomes more complicated due to the increased number of possible interactions and collision avoidance scenarios. Each UAV must map the environment, process images, communicate with the ground control station, navigate the path, and avoid collisions with other UAVs. These duties require a lot of processing power [103]. One example of reducing computational cost and system complexity is the cooperative co-evolutionary algorithm (CCEA), which reduces problem difficulty by dividing high-dimensional variables into sub-variables for collaborative optimization [104]. Achieving high-quality solutions requires substantial computational resources, so research on scalable algorithms that can handle large numbers of UAVs more efficiently is crucial. With a decentralized approach, each UAV can plan a path independently while coordinating with other UAVs, which offers a scalable solution. Developing algorithms that can handle real-time constraints while still providing high-quality paths is a key area of focus. Leveraging parallel and distributed computing resources can help address the computational challenges: algorithms that can be effectively parallelized or distributed across multiple processors can reduce overall computation time. By combining various planning approaches, one can balance computational efficiency and solution quality. Incorporating machine learning techniques in UAVs can help to improve path planning algorithms, consequently reducing computational costs and enhancing adaptability to dynamic environments. For instance, a Q-learning algorithm can use exploitation and exploration to reduce system complexity and decision-making time [87]. Similarly, metaheuristic and classical algorithms utilize exploitation and exploration to handle massive and complex areas [105]. 
The ability of a UAV to maintain communication while consuming minimal bandwidth is essential. The algorithm’s ability to respond quickly to changing circumstances is required for successful deployment [106]. The algorithm must be capable of maintaining safety to avoid collisions among UAVs or with other objects. This survey paper can help network engineers and researchers in developing smart multi-UAV algorithms that support low complexity and computational costs.
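The growth in possible interactions mentioned above can be made concrete: a naive conflict check compares every pair of UAVs, so a fleet of n UAVs needs n(n-1)/2 checks per time step. The sketch below counts those checks and runs a brute-force separation test; the function names, the 3D sample positions, and the minimum separation are assumptions made for the example.

```python
import math
from itertools import combinations


def pair_count(n):
    """Number of distinct UAV pairs that a naive conflict check must examine."""
    return n * (n - 1) // 2


def conflicting_pairs(positions, min_sep):
    """Return index pairs of UAVs closer than min_sep (brute force over all pairs)."""
    return [
        (i, j)
        for (i, p), (j, q) in combinations(enumerate(positions), 2)
        if math.dist(p, q) < min_sep
    ]


for n in (10, 100, 1000):
    print(n, pair_count(n))

fleet = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (50.0, 50.0, 10.0)]
print(conflicting_pairs(fleet, min_sep=5.0))
```

Growing the fleet by a factor of ten multiplies the number of pairwise checks by roughly one hundred, which is one reason decentralized schemes that only check nearby neighbors scale better than a central all-pairs check.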

4.7. Summary of Open Research Issues

This section summarizes the above discussion on open research problems and highlights some practical issues and implications. Multi-UAV algorithms must be designed to re-plan pathways in a complicated environment to overcome static and dynamic impediments. Each UAV should be able to communicate with the control station and other UAVs with ease and redundancy. Algorithms need to be able to protect swarm UAVs from any challenging situations. The system ought to overcome various hurdles in real-world practical applications. Energy efficiency aspects need to be looked at to provide swarm UAVs with sufficient resources to complete the task. Costs and complexity must be carefully considered in system design and implementation. To this end, advanced machine learning techniques must be integrated into system design to enhance path planning, reduce computational costs, and improve adaptability to dynamic environments. Another design aspect is to use parallel and distributed computing resources to overcome computational challenges. Future research directions are discussed next.

5. Future Research Directions

Path planning is an essential part of any multi-UAV operation. An obstacle-free path is crucial to ensure UAVs are safe and sound. In this section, we list and describe five future research directions based on their importance, focusing on technological maturity, research challenges, and resource requirements.

1. Metaheuristic approach: The most widely used algorithms for multi-UAV path planning are metaheuristic algorithms, which are technologically mature, especially in the areas of essential path planning and coordination for specific mission types. However, challenges remain with respect to decentralization, real-time optimization, and scalability. For instance, Levy flight-based multi-population gray wolf optimization (LM-GWO) is a hybrid metaheuristic algorithm that reduces energy consumption. However, this hybrid metaheuristic algorithm is not decentralized, and it is not an online algorithm [107].

2. Hybrid algorithm: Many network researchers have focused on developing hybrid multi-UAV path planning algorithms. The technology is mature, with significant progress made in recent years, particularly in collaborative strategies and real-time adaptation. However, hybrid algorithms can be computationally expensive and complex. The need for real-time adjustments and large-scale coordination can overload processing resources, particularly in large fleets. While hybrid multi-UAV path planning algorithms are often tested by simulation, replicating the complexities of real-world situations in a simulated setting is challenging. Further research is required to ensure that these algorithms perform well in real application scenarios. For instance, HIPSO-MSOS is a hybrid algorithm that combines improved particle swarm optimization (IPSO) and modified symbiotic organism search (MSOS) to lower the cost of UAV coordination. However, the system complexity of this algorithm must be decreased. This hybrid algorithm can be used to solve the recovery problem by controlling aircraft for a long-range UAV swarm with good performance [108]. One hybrid algorithm that combines the genetic algorithm and the homotopic algorithm is the evolutionary-based metaheuristic algorithm described in Table 1, row 6. This hybrid algorithm solves the recovery problem by controlling aircraft in a long-range UAV swarm with excellent performance. It needs to be evaluated in challenging real-world settings so that the controller UAV can carry out the aerial recovery framework properly.

3. Machine learning algorithms: Machine learning algorithms are used for multi-UAV path planning purposes. Even in hybrid algorithms, machine learning algorithms are used with other algorithms. The integration of machine learning in multi-UAV systems is maturing rapidly in several key areas, particularly in autonomous navigation, fault detection, and environmental perception. However, the training of ML algorithms, deep learning, and reinforcement learning requires powerful computing resources. Once trained, the system requires real-time computational resources for decision making, coordination, and path planning. As a result, energy consumption and running expenses can increase, particularly for large fleets with numerous UAVs flying at once [109]. A double deep Q network (DDQN) can solve both local and global optimal actions, although this method disregards variables like processing cost, massive scalability, UAV heights, and distributed vs. decentralized methodology [98]. The multi-agent reinforcement learning (MARL) system can safely collect data without understanding urban surroundings [79]. The C51-Duel-IP algorithm can plan routes in real time in distributed mode; however, the smoothness of the path has not been considered [110].

4. Heuristic approach: Heuristic algorithms in multi-UAV path planning have received little attention. Heuristic algorithms are increasingly integrated with real-time systems for applications like search and rescue, surveillance, and military operations. However, heuristic algorithms, particularly in large-scale scenarios, struggle with scalability, real-time execution, and avoiding collisions between UAVs. One such heuristic-based hybrid algorithm combines the improved elastic band and A-star algorithms, which boosts its efficiency. However, to prevent collisions between UAVs, research must be carried out in a complex three-dimensional environment, and the height of UAVs must be specified [70,111].

5. Classical approach: Classical algorithms in multi-UAV route planning have received little attention. Classical algorithms can be used in simple scenarios such as small fleets. However, their application to multi-UAV path planning in large-scale, dynamic, and real-time scenarios is still limited with respect to scalability, coordination, communication, and decentralization. For instance, the combination of Theta star (Theta*) and Artificial Potential Field (APF), which is used to increase search speed and UAV safety, is an example of a hybrid classical approach. However, the system must be more scalable across a wide area, and this algorithm does not consider real-time route planning [112].

6. Conclusions

This paper presents a survey, classification, and comparison of multi-UAV path planning algorithms. Multi-UAV path planning is significant when designing and implementing a multi-UAV system. We thoroughly reviewed 127 published papers on UAV path planning, selected from various scholarly journals and conference proceedings. We analyzed multiple approaches to multi-UAV path planning, including classical, heuristic, metaheuristic, machine learning, and hybrid approaches. Our analysis is based on system complexity, computational cost, time consumption, and adaptability to changing environments. We have summarized numerous discussions, emphasizing the most widely used multi-UAV methods. A review of the literature reveals that the following challenges and specific areas need more research in the field: (i) Algorithms should be enhanced to handle a larger number of UAVs efficiently. (ii) System efficiency needs to be increased to enable real-time path planning. (iii) Algorithms should be developed that can better adapt to dynamic environments, such as changing obstacles or fluctuating weather conditions. (iv) Coordination and communication among unmanned vehicles can be enhanced to optimize group behavior and task allocation. (v) Intelligent algorithms should be developed to optimize and balance multiple objectives, such as minimizing risk, conserving energy, and reducing travel time. Finally, (vi) a multi-UAV path planning system should be developed to minimize system complexity and costs.

Author Contributions

Conceptualization, M.R. and N.I.S.; Methodology, M.R. and N.I.S.; Software, N.I.S.; Validation, M.R. and N.I.S.; Formal analysis, M.R.; Investigation, M.R. and N.I.S.; Resources, N.I.S. and R.L.; Data curation, M.R.; Writing—original draft, M.R.; Writing—review & editing, M.R., N.I.S. and R.L.; Visualization, M.R.; Supervision, N.I.S. and R.L.; Project administration, N.I.S. and R.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Acronym

| Acronym | Explanation |
| --- | --- |
| ABC | Artificial Bee Colony |
| APF | Artificial Potential Field |
| AQLPSO | Adaptive Q-Learning-based Particle Swarm Optimization |
| ACO | Ant Colony Optimization |
| BFO | Bacterial Foraging Optimization |
| BINN-HHO | Bioinspired neural network and improved Harris hawk optimization |
| BRKGA | Biased Random Key Genetic Algorithm |
| CGWO | Chaotic Gray Wolf Optimization |
| CL-DMSPSO | Comprehensive learning dynamic multi-swarm particle swarm optimization |
| CNN | Convolutional Neural Network |
| CPP | Coverage Path Planning |
| CRO | Coral Reef Optimization |
| CS | Cuckoo Search |
| Deep RL ESN | Deep Reinforcement Learning Echo State Network |
| DDPG | Deep deterministic policy gradient algorithm |
| DE | Differential Evolution |
| Distributed formation algorithm 3 | |
| DPIO | Discrete pigeon-inspired optimization |
| DQN | Deep Q-network |
| DDQN | Double deep Q-network |
| D3QN | Dueling double deep Q-network |
| DQN - RRT | Deep Q-network–Rapidly exploring Random Tree |
| EB-A* | Elastic band–A-Star |
| ePFC | Extended potential field controller |
| FP-GPSO | Fermat point-based grouping particle swarm optimization |
| GA | Genetic Algorithm |
| GA-Homotopic | Genetic Algorithm and Homotopic Algorithm |
| GCS | Ground control station |
| GH | Greedy Heuristic |
| GP | Gaussian Process |
| HAS-DQN | Hexagonal Area Search Deep Q-Network |
| HTS-VND | Hybrid tabu search-variable neighborhood descent |
| Hybrid GH | Hybrid Greedy Heuristic |
| Improved PSO and GPM | Improved particle swarm optimization and Gaussian pseudo-spectral method |
| Improved EB and A-Star | Improved Elastic Band and A-Star Algorithm |
| IVD | Improved Voronoi Diagram |
| IABC | Improved Artificial Bee Colony |
| IAPF | Improved Artificial Potential Field |
| IPSO | Improved Particle Swarm Optimization |
| LM-GWO | Levy flight-based multi-population grey wolf optimisation |
| MADDPG | Multi-Agent Deep Deterministic Policy Gradient |
| MCMOPSO-RL | Multi-objective particle swarm optimization with multi-mode collaboration based on reinforcement learning |
| MHS | Modified Harmony Search |
| MMACO | Maximum minimum ant colony optimization |
| MODRL | Multi-objective deep reinforcement learning |
| MPC and PSO | Model predictive control and particle swarm optimization |
| MSFOA | Multiple Swarm Fruit Fly Optimization Algorithm |
| MTSP | Multiple Traveling Salesman Problem |
| MVD | Modified Voronoi Diagram |
| MVO | Multi-Verse Optimizer |
| MUCS-BSAE | Multi-UAV collaborative search algorithm based on the binary search algorithm |
| NSGA multi-objective EA | Non-Dominated Sorting Genetic Algorithm multi-objective Evolutionary algorithms |
| OPP | Optimal Path Planning |
| ORPFOA | Optimal reference point Fruit Fly Optimization Algorithm |
| PDE | Partial Differential Equation |
| PIO | Pigeon Inspired Optimization |
| POMDP | Partially Observable Markov Decision Process |
| PPSwarm | PSO (Particle Swarm Optimization) + RRT (Rapidly exploring Random Tree) |
| RBF-ANN | Radial Basis Functions Artificial Neural Network |
| RGV | Reduced Visibility Graph |
| RRT | Rapidly exploring Random Tree |
| SFLA | Shuffled Frog Leaping Algorithm |
| SIRIPPA | Secondary Immune Responses-based Immune Path Planning Algorithm |
| SPVM and PSO | Spatial Refined Voting Mechanism and Particle Swarm Optimization |
| TLBO | Teaching Learning Based Optimization |
| UAV | Unmanned aerial vehicle |
| VD | Voronoi Diagram |
| WoLFIGA | Win or learn fast policy with Infinitesimal gradient ascent |
| WPL | Weighted Policy Learner |
| WDQN | Whale inspired deep Q-network |

References

- Wu, Y.; Nie, M.; Ma, X.; Guo, Y.; Liu, X. Co-Evolutionary Algorithm-Based Multi-Unmanned Aerial Vehicle Cooperative Path Planning. Drones 2023, 7, 606. [Google Scholar] [CrossRef]

- Sarkar, N.I.; Gul, S. Artificial intelligence-based autonomous UAV networks: A survey. Drones 2023, 7, 322. [Google Scholar] [CrossRef]

- Abid, M.; El Kafhali, S.; Amzil, A.; Hanini, M. Optimization of UAV Flight Paths in Multi-UAV Networks for Efficient Data Collection. Arab. J. Sci. Eng. 2024, 1–26. [Google Scholar] [CrossRef]

- Puente-Castro, A.; Rivero, D.; Pazos, A.; Fernandez-Blanco, E. A review of artificial intelligence applied to path planning in UAV swarms. Neural Comput. Appl. 2022, 34, 153–170. [Google Scholar]

- Zhang, S.; Liu, W.; Ansari, N. Completion time minimization for data collection in a UAV-enabled IoT network: A deep reinforcement learning approach. IEEE Trans. Veh. Technol. 2023, 72, 14734–14742. [Google Scholar]

- Xu, C.; Xu, M.; Yin, C. Optimized multi-UAV cooperative path planning under the complex confrontation environment. Comput. Commun. 2020, 162, 196–203. [Google Scholar]

- Shiri, H.; Park, J.; Bennis, M. Massive Autonomous UAV Path Planning: A Neural Network Based Mean-Field Game Theoretic Approach. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM), Waikoloa, HI, USA, 9–13 December 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Anastasiou, A.; Kolios, P.; Panayiotou, C.; Papadaki, K. Swarm path planning for the deployment of drones in emergency response missions. In Proceedings of the 2020 IEEE International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 1–4 September 2020; pp. 456–465. [Google Scholar]

- Ait Saadi, A.; Soukane, A.; Meraihi, Y.; Benmessaoud Gabis, A.; Mirjalili, S.; Ramdane-Cherif, A. UAV Path Planning Using Optimization Approaches: A Survey. Arch. Comput. Methods Eng. 2022, 29, 4233–4284.

- Rocha, L.; Vivaldini, K. Analysis and contributions of classical techniques for path planning. In Proceedings of the 2021 IEEE Latin American Robotics Symposium (LARS), 2021 Brazilian Symposium on Robotics (SBR), and 2021 Workshop on Robotics in Education (WRE), Natal, Brazil, 11–15 October 2021; pp. 54–59.

- Aljalaud, F.; Kurdi, H.; Youcef-Toumi, K. Bio-inspired multi-UAV path planning heuristics: A review. Mathematics 2023, 11, 2356.

- Yan, C.; Xiang, X.; Wang, C. Towards real-time path planning through deep reinforcement learning for a UAV in dynamic environments. J. Intell. Robot. Syst. 2020, 98, 297–309.

- Chronis, C.; Anagnostopoulos, G.; Politi, E.; Garyfallou, A.; Varlamis, I.; Dimitrakopoulos, G. Path planning of autonomous UAVs using reinforcement learning. J. Phys. Conf. Ser. 2023, 2526, 012088.

- Li, L.; Wang, L.; Hou, J.; Ma, J.; Liu, Y. Multi-UAV Path Planning Based on DRL for Data Collection in UAV-Assisted IoT. In Proceedings of the 2024 14th International Conference on Information Science and Technology (ICIST), Chengdu, China, 6–9 December 2024; pp. 566–573.

- López, B.; Muñoz, J.; Quevedo, F.; Monje, C.A.; Garrido, S.; Moreno, L.E. Path Planning and Collision Risk Management Strategy for Multi-UAV Systems in 3D Environments. Sensors 2021, 21, 4414.

- Pérez-Carabaza, S.; Besada-Portas, E.; López-Orozco, J.A. Minimizing the searching time of multiple targets in uncertain environments with multiple UAVs. Appl. Soft Comput. 2024, 155, 111471.

- Shi, J.; Tan, L.; Zhang, H.; Lian, X.; Xu, T. Adaptive multi-UAV path planning method based on improved gray wolf algorithm. Comput. Electr. Eng. 2022, 104, 108377.

- Madridano, Á.; Al-Kaff, A.; Martín, D.; de la Escalera, A. Trajectory planning for multi-robot systems: Methods and applications. Expert Syst. Appl. 2021, 173, 114660.

- Zhu, X.; Wang, L.; Li, Y.; Song, S.; Ma, S.; Yang, F.; Zhai, L. Path planning of multi-UAVs based on deep Q-network for energy-efficient data collection in UAVs-assisted IoT. Veh. Commun. 2022, 36, 100491.

- Wang, W.; Zhang, G.; Da, Q.; Lu, D.; Zhao, Y.; Li, S.; Lang, D. Multiple Unmanned Aerial Vehicle Autonomous Path Planning Algorithm Based on Whale-Inspired Deep Q-Network. Drones 2023, 7, 572.

- Liu, X.; Chai, Z.Y.; Li, Y.L.; Cheng, Y.Y.; Zeng, Y. Multi-objective deep reinforcement learning for computation offloading in UAV-assisted multi-access edge computing. Inf. Sci. 2023, 642, 119154.

- Stolfi, D.H.; Danoy, G. An Evolutionary Algorithm to Optimise a Distributed UAV Swarm Formation System. Appl. Sci. 2022, 12, 10218.

- Zhou, C.; Kadhim, K.M.R.; Zheng, X. Multi-UAVs path planning for data harvesting in adversarial scenarios. Comput. Commun. 2024, 221, 42–53.

- Liu, Y.; Qi, N.; Yao, W.; Zhao, J.; Xu, S. Cooperative Path Planning for Aerial Recovery of a UAV Swarm Using Genetic Algorithm and Homotopic Approach. Appl. Sci. 2020, 10, 4154.

- Zhang, X.; Xia, S.; Li, X.; Zhang, T. Multi-objective particle swarm optimization with multi-mode collaboration based on reinforcement learning for path planning of unmanned air vehicles. Knowl.-Based Syst. 2022, 250, 109075.

- Li, Y.; Zhang, L.; Cai, B.; Liang, Y. Unified path planning for composite UAVs via Fermat point-based grouping particle swarm optimization. Aerosp. Sci. Technol. 2024, 148, 109088.

- Shao, S.; He, C.; Zhao, Y.; Wu, X. Efficient Trajectory Planning for UAVs Using Hierarchical Optimization. IEEE Access 2021, 9, 60668–60681.

- Hu, X.; Liu, Y.; Wang, G. Optimal search for moving targets with sensing capabilities using multiple UAVs. J. Syst. Eng. Electron. 2017, 28, 526–535.

- Liu, Y.; Zhang, X.; Zhang, Y.; Guan, X. Collision free 4D path planning for multiple UAVs based on spatial refined voting mechanism and PSO approach. Chin. J. Aeronaut. 2019, 32, 1504–1519.

- Meng, Q.; Chen, K.; Qu, Q. PPSwarm: Multi-UAV Path Planning Based on Hybrid PSO in Complex Scenarios. Drones 2024, 8, 192.

- Li, S.; Zhang, R.; Ding, Y.; Qin, X.; Han, Y.; Zhang, H. Multi-UAV Path Planning Algorithm Based on BINN-HHO. Sensors 2022, 22, 9786.

- Xu, L.; Cao, X.; Du, W.; Li, Y. Cooperative path planning optimization for multiple UAVs with communication constraints. Knowl.-Based Syst. 2023, 260, 110164.

- Güven, İ.; Yanmaz, E. Multi-objective path planning for multi-UAV connectivity and area coverage. Ad Hoc Netw. 2024, 160, 103520.

- Wang, Z.; Sun, G.; Zhou, K.; Zhu, L. A parallel particle swarm optimization and enhanced sparrow search algorithm for unmanned aerial vehicle path planning. Heliyon 2023, 9, e14784.

- Cho, S.; Park, H.; Lee, H.; Shim, D.; Kim, S.Y. Coverage Path Planning for Multiple Unmanned Aerial Vehicles in Maritime Search and Rescue Operations. Comput. Ind. Eng. 2021, 161, 107612.

- Cui, Y.; Dong, W.; Hu, D.; Liu, H. The Application of Improved Harmony Search Algorithm to Multi-UAV Task Assignment. Electronics 2022, 11, 1171.

- Yuan, X.; Feng, Z. A Study of Path Planning for Multi-UAVs in Random Obstacle Environment Based on Improved Artificial Potential Field Method. In Proceedings of the 2022 China Automation Congress (CAC), Xiamen, China, 25–27 November 2022; pp. 5241–5245.

- Bui, D.N.; Phung, M.D. Radial basis function neural networks for formation control of unmanned aerial vehicles. Robotica 2024, 42, 1842–1860.

- Jing, W.; Deng, D.; Wu, Y.; Shimada, K. Multi-UAV Coverage Path Planning for the Inspection of Large and Complex Structures. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 1480–1486.

- Pehlivanoglu, Y.V.; Pehlivanoglu, P. An enhanced genetic algorithm for path planning of autonomous UAV in target coverage problems. Appl. Soft Comput. 2021, 112, 107796.

- Li, M.; Sun, P.F.; Jeon, S.W.; Zhao, X.; Jin, H. Multi-UAV Path Planning with Genetic Algorithm. In Proceedings of the 2023 14th International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 11–13 October 2023; pp. 918–920.

- Ntakolia, C.; Platanitis, K.S.; Kladis, G.P.; Skliros, C.; Zagorianos, A.D. A Genetic Algorithm enhanced with Fuzzy-Logic for multi-objective Unmanned Aircraft Vehicle path planning missions. In Proceedings of the 2022 International Conference on Unmanned Aircraft Systems (ICUAS), Dubrovnik, Croatia, 21–24 June 2022; pp. 114–123.

- Zhong, Y.; Wang, L.; Lin, M.; Zhang, H. Discrete pigeon-inspired optimization algorithm with Metropolis acceptance criterion for large-scale traveling salesman problem. Swarm Evol. Comput. 2019, 48, 134–144.

- Wang, Y.; Zhang, T.; Cai, Z.; Zhao, J.; Wu, K. Multi-UAV coordination control by chaotic grey wolf optimization based distributed MPC with event-triggered strategy. Chin. J. Aeronaut. 2020, 33, 2877–2897.

- Ali, Z.; Han, Z.; Zhengru, D. Path planning of multiple UAVs using MMACO and DE algorithm in dynamic environment. Meas. Control 2023, 56, 002029402091572.

- Deng, L.; Chen, H.; Zhang, X.; Liu, H. Three-Dimensional Path Planning of UAV Based on Improved Particle Swarm Optimization. Mathematics 2023, 11, 1987.

- Castro, G.G.R.d.; Berger, G.S.; Cantieri, A.; Teixeira, M.; Lima, J.; Pereira, A.I.; Pinto, M.F. Adaptive Path Planning for Fusing Rapidly Exploring Random Trees and Deep Reinforcement Learning in an Agriculture Dynamic Environment UAVs. Agriculture 2023, 13, 354.

- Xue, Y.; Chen, W. Multi-Agent Deep Reinforcement Learning for UAVs Navigation in Unknown Complex Environment. IEEE Trans. Intell. Veh. 2024, 9, 2290–2303.

- Zourari, A.; Youssefi, M.A.; Youssef, Y.; Dakir, R. Reinforcement Q-Learning for Path Planning of Unmanned Aerial Vehicles (UAVs) in Unknown Environments. Int. Rev. Autom. Control (IREACO) 2023, 16, 253–259.

- Zhao, X.; Yang, R.; Zhong, L.; Hou, Z. Multi-UAV Path Planning and Following Based on Multi-Agent Reinforcement Learning. Drones 2024, 8, 18.

- Frattolillo, F.; Brunori, D.; Iocchi, L. Scalable and Cooperative Deep Reinforcement Learning Approaches for Multi-UAV Systems: A Systematic Review. Drones 2023, 7, 236.

- Xing, X.; Zhou, Z.; Li, Y.; Xiao, B.; Xun, Y. Multi-UAV Adaptive Cooperative Formation Trajectory Planning Based on An Improved MATD3 Algorithm of Deep Reinforcement Learning. IEEE Trans. Veh. Technol. 2024, 73, 12484–12499.

- Baldazo, D.; Parras, J.; Zazo, S. Decentralized Multi-Agent Deep Reinforcement Learning in Swarms of Drones for Flood Monitoring. In Proceedings of the 2019 27th European Signal Processing Conference (EUSIPCO), A Coruna, Spain, 2–6 September 2019; pp. 1–5.

- Tian, G.; Zhang, L.; Bai, X.; Wang, B. Real-time Dynamic Track Planning of Multi-UAV Formation Based on Improved Artificial Bee Colony Algorithm. In Proceedings of the 2018 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; pp. 10055–10060.

- Wu, X.; Wu, S.; Yuan, S.; Wang, X.; Zhou, Y. Multi-UAV Path Planning with Collision Avoidance in 3D Environment Based on Improved APF. In Proceedings of the 2023 9th International Conference on Control, Automation and Robotics (ICCAR), Beijing, China, 21–23 April 2023; pp. 221–226.

- Wang, L.; Wang, K.; Pan, C.; Xu, W.; Aslam, N.; Hanzo, L. Multi-Agent Deep Reinforcement Learning-Based Trajectory Planning for Multi-UAV Assisted Mobile Edge Computing. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 73–84.

- Shi, K.; Zhang, X.; Xia, S. Multiple Swarm Fruit Fly Optimization Algorithm Based Path Planning Method for Multi-UAVs. Appl. Sci. 2020, 10, 2822.

- Jiang, Y.; Zhang, L.; Yuan, M.; Shen, Y. A Novel Online Path Planning Algorithm for Multi-Robots Based on the Secondary Immune Response in Dynamic Environments. Electronics 2024, 13, 562.

- Zhang, D.; Duan, H. Social-class pigeon-inspired optimization and time stamp segmentation for multi-UAV cooperative path planning. Neurocomputing 2018, 313, 229–246.

- Yu, Y.; Deng, Y.; Duan, H. Multi-UAV Cooperative Path Planning via Mutant Pigeon Inspired Optimization with Group Learning Strategy. In Advances in Swarm Intelligence; Springer: Cham, Switzerland, 2021; pp. 195–204.

- Li, K.; Ge, F.; Han, Y.; Wang, Y.; Xu, W. Path planning of multiple UAVs with online changing tasks by an ORPFOA algorithm. Eng. Appl. Artif. Intell. 2020, 94, 103807.

- Liu, H.; Ge, J.; Wang, Y.; Li, J.; Ding, K.; Zhang, Z.; Guo, Z.; Li, W.; Lan, J. Multi-UAV Optimal Mission Assignment and Path Planning for Disaster Rescue Using Adaptive Genetic Algorithm and Improved Artificial Bee Colony Method. Actuators 2022, 11, 4.

- Ramirez Atencia, C.; Del Ser, J.; Camacho, D. Weighted strategies to guide a multi-objective evolutionary algorithm for multi-UAV mission planning. Swarm Evol. Comput. 2019, 44, 480–495.

- Zhang, W.; Peng, C.; Yuan, Y.; Cui, J.; Qi, L. A novel multi-objective evolutionary algorithm with a two-fold constraint-handling mechanism for multiple UAV path planning. Expert Syst. Appl. 2024, 238, 121862.

- Yang, L.; Li, P.; Qian, S.; Quan, H.; Miao, J.; Liu, M.; Hu, Y.; Memetimin, E. Path Planning Technique for Mobile Robots: A Review. Machines 2023, 11, 980.

- Pan, Z.; Zhang, C.; Xia, Y.; Xiong, H.; Shao, X. An Improved Artificial Potential Field Method for Path Planning and Formation Control of the Multi-UAV Systems. IEEE Trans. Circuits Syst. II Express Briefs 2021, 69, 1129–1133.

- Wang, J.; Wang, R. Multi-UAV Area Coverage Track Planning Based on the Voronoi Graph and Attention Mechanism. Appl. Sci. 2024, 14, 7844.

- Zu, W.; Fan, G.; Gao, Y.; Ma, Y.; Zhang, H.; Zeng, H. Multi-UAVs Cooperative Path Planning Method based on Improved RRT Algorithm. In Proceedings of the 2018 IEEE International Conference on Mechatronics and Automation (ICMA), Changchun, China, 5–8 August 2018; pp. 1563–1567.

- Fu, Z.; Yu, J.; Xie, G.; Chen, Y.; Mao, Y. A Heuristic Evolutionary Algorithm of UAV Path Planning. Wirel. Commun. Mob. Comput. 2018, 2018, 2851964.

- Lin, J.; Ye, F.; Yu, Q.; Xing, J.; Quan, Z.; Sheng, C. Research on Multi-UAVs Route Planning Based on the Integration of Improved Elastic Band and A-Star Algorithm. In Proceedings of the IEEE International Conference on Unmanned Systems (ICUS), Guangzhou, China, 28–30 October 2022.

- Du, Y. Multi-UAV Search and Rescue with Enhanced A* Algorithm Path Planning in 3D Environment. Int. J. Aerosp. Eng. 2023, 2023, 8614117.