Consensus-Based Formation Control for Heterogeneous Multi-Agent Systems in Complex Environments

Abstract

1. Introduction

2. Methods

2.1. Graph Theory

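- The Laplacian matrix $L$ has a zero eigenvalue, whose corresponding eigenvector is $\mathbf{1}_N$, and all its nonzero eigenvalues are positive real numbers.

- $L$ is a symmetric positive semidefinite matrix and satisfies $L\mathbf{1}_N = \mathbf{0}_N$.

- The eigenvalues of $L$ can be ordered as $0 = \lambda_1 \le \lambda_2 \le \dots \le \lambda_N$. The second smallest eigenvalue, $\lambda_2$, is commonly defined as the algebraic connectivity of the graph and satisfies the following: $\lambda_2 = \min_{x \neq 0,\ \mathbf{1}_N^{\top} x = 0} \dfrac{x^{\top} L x}{x^{\top} x}$.

- For any vector $x = [x_1, \dots, x_N]^{\top}$, the following holds: $x^{\top} L x = \dfrac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} a_{ij} (x_i - x_j)^2$, where $a_{ij}$ is the weight of the edge between agents $i$ and $j$.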
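The properties listed above are the standard ones of a graph Laplacian, and they can be checked numerically. The following is a minimal sketch, assuming an undirected graph with a symmetric adjacency matrix. The 4-agent path graph, the test vector `x`, and the helper name `laplacian` are illustrative assumptions and are not taken from the paper.

```python
import numpy as np


def laplacian(adjacency: np.ndarray) -> np.ndarray:
    """Return L = D - A for a symmetric, nonnegative adjacency matrix."""
    degree = np.diag(adjacency.sum(axis=1))
    return degree - adjacency


# Hypothetical undirected path graph 0-1-2-3 with unit edge weights.
A = np.array([
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
])
L = laplacian(A)
n = A.shape[0]

# eigh is meant for symmetric matrices and returns eigenvalues in ascending order.
eigenvalues, _ = np.linalg.eigh(L)
algebraic_connectivity = eigenvalues[1]  # second smallest eigenvalue

# The all-ones vector lies in the null space of L.
row_sum_residual = np.abs(L @ np.ones(n)).max()

# Quadratic form versus the pairwise-difference sum.
x = np.array([0.3, -1.2, 2.0, 0.5])
quad_form = x @ L @ x
pairwise_sum = 0.5 * sum(
    A[i, j] * (x[i] - x[j]) ** 2 for i in range(n) for j in range(n)
)

print("eigenvalues:", eigenvalues)
print("algebraic connectivity:", algebraic_connectivity)
print("max |L @ 1|:", row_sum_residual)
print("x^T L x:", quad_form, "pairwise sum:", pairwise_sum)
```

A strictly positive `algebraic_connectivity` indicates that the example graph is connected; removing an edge from `A` so that the graph splits would drive it to zero.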

2.2. Multi-Consensus Theory

2.3. Stability Theory

- The connectivity preserving potential function is designed as follows:

- Another point to note, the agent spacing of the expected formation should be set between . In other words, the states of agent and agent need to satisfy the following equation: .

- The initial value of the Lyapunov function is a constant;

- The relationship between neighboring agents needs to satisfy .

3. Modeling and Analysis

3.1. Problem Analysis in Complex Environments

3.2. Formation Control Modeling

3.3. Heterogeneous Multi-Intelligent-Agent Formation Collaborative Obstacle Avoidance Controller

3.3.1. Controller Design

- Let be the set of state geometries of the smart agent, then is the set of formation queueing functions, where . The position component of is , and the velocity component is . The goal of the system is to make all agents’ states θi(t) converge to a common trajectory hi(t), i.e., .
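To illustrate the convergence goal described above, the sketch below simulates a generic textbook consensus-based formation protocol. It is not the controller designed in this paper: it assumes single-integrator agents, a fixed undirected topology, and constant formation offsets `h` (position only, no velocity component). The function name `simulate_formation`, the ring topology, the gain, and the initial states are all hypothetical.

```python
import numpy as np


def simulate_formation(A, theta0, h, gain=1.0, dt=0.01, steps=2000):
    """Euler-integrate d(theta_i)/dt = -gain * sum_j a_ij [(theta_i - h_i) - (theta_j - h_j)].

    A      : (N, N) symmetric adjacency matrix (assumed fixed topology)
    theta0 : (N, 2) initial agent positions
    h      : (N, 2) constant formation offsets (assumption of this sketch)
    """
    L = np.diag(A.sum(axis=1)) - A
    theta = np.asarray(theta0, dtype=float).copy()
    for _ in range(steps):
        # Stacked form of the protocol: theta_dot = -gain * L (theta - h).
        theta = theta - dt * gain * (L @ (theta - h))
    return theta


# Hypothetical 4-agent ring topology 0-1-2-3-0.
A = np.array([
    [0.0, 1.0, 0.0, 1.0],
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [1.0, 0.0, 1.0, 0.0],
])
# Desired square formation, expressed as offsets from a common reference point.
h = np.array([[1.0, 1.0], [-1.0, 1.0], [-1.0, -1.0], [1.0, -1.0]])
theta0 = np.array([[0.0, 0.0], [4.0, 1.0], [-2.0, 3.0], [1.0, -5.0]])

theta_final = simulate_formation(A, theta0, h)

# Formation error: spread of (theta_i - h_i) across agents; zero means the
# formation is achieved.
formation_error = np.ptp(theta_final - h, axis=0).max()
print("final positions:\n", theta_final)
print("formation error:", formation_error)
```

Because the Laplacian of an undirected graph has zero column sums, this particular protocol preserves the average of `theta - h`, so the formation settles around a reference point fixed by the initial conditions. Tracking a time-varying trajectory or handling heterogeneous dynamics would require additional terms that this sketch does not attempt.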

3.3.2. Proof of Stability

- Definition of degree matrix :

- The Lyapunov function is chosen as a quadratic function of the agent states:
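As a point of reference for the two items above, the standard textbook forms of the degree matrix and of a quadratic Lyapunov candidate for consensus dynamics can be written as follows. This is only a sketch under stated assumptions: an undirected graph with symmetric weights $a_{ij}$, single-integrator disagreement dynamics $\dot{x} = -Lx$, and the candidate $V$ shown here. The symbols and the candidate may differ from those used in the paper's proof.

```latex
% Degree matrix and Laplacian (standard definitions)
D = \operatorname{diag}(d_1, \dots, d_N), \qquad
d_i = \sum_{j=1}^{N} a_{ij}, \qquad
L = D - A

% Quadratic Lyapunov candidate (assumed form)
V(x) = \tfrac{1}{2}\, x^{\top} L x
     = \tfrac{1}{4} \sum_{i=1}^{N} \sum_{j=1}^{N} a_{ij} (x_i - x_j)^2 \;\ge\; 0

% Derivative along \dot{x} = -Lx, using L = L^{\top}
\dot{V}(x) = x^{\top} L \dot{x} = -x^{\top} L^{2} x = -\lVert L x \rVert^{2} \;\le\; 0
```

Under these assumptions $V$ is nonincreasing, and $\dot{V} = 0$ only when $Lx = 0$, which for a connected graph means all components of $x$ are equal.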

4. Experiment and Simulation

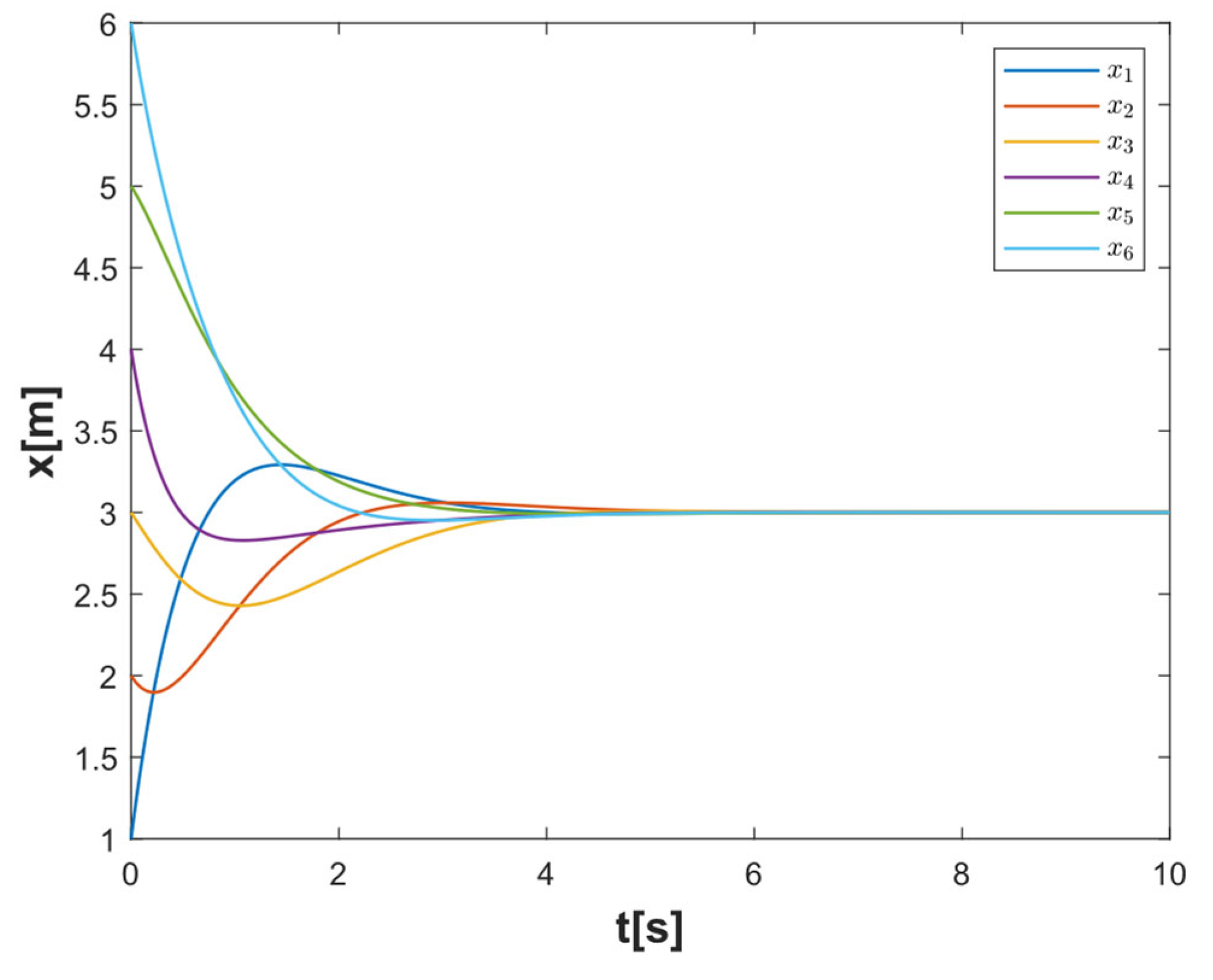

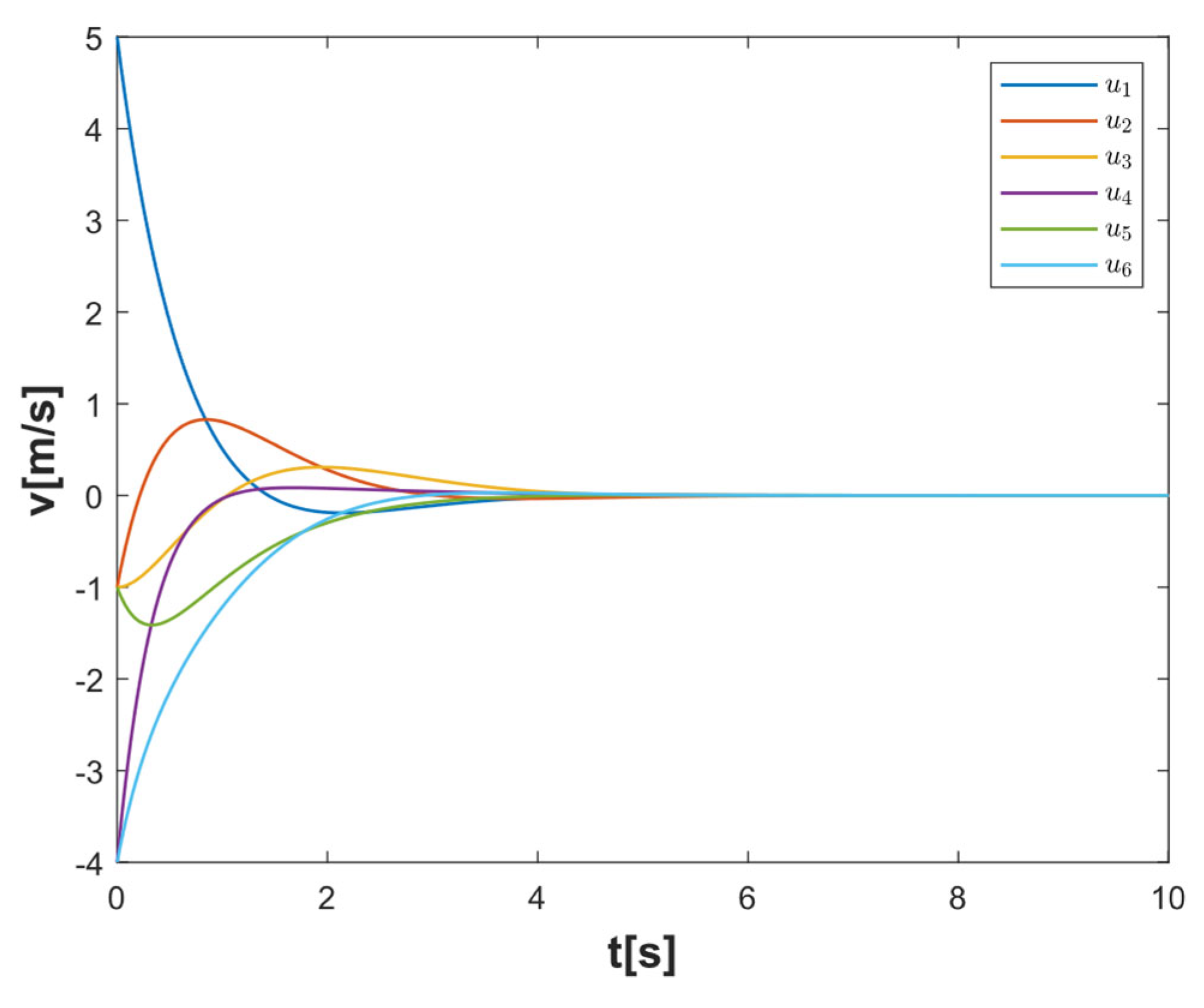

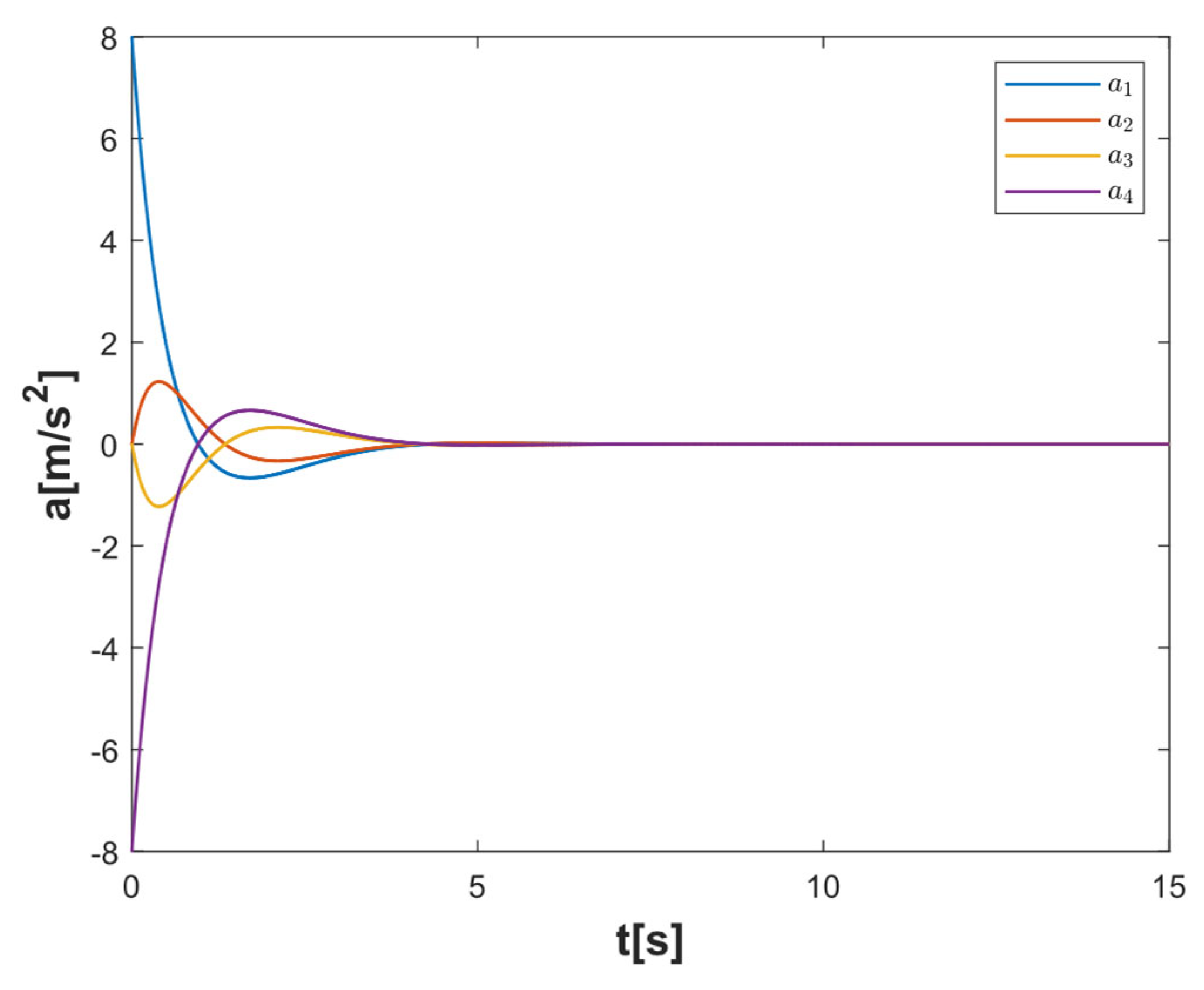

4.1. Simulation Verification of System Consistency

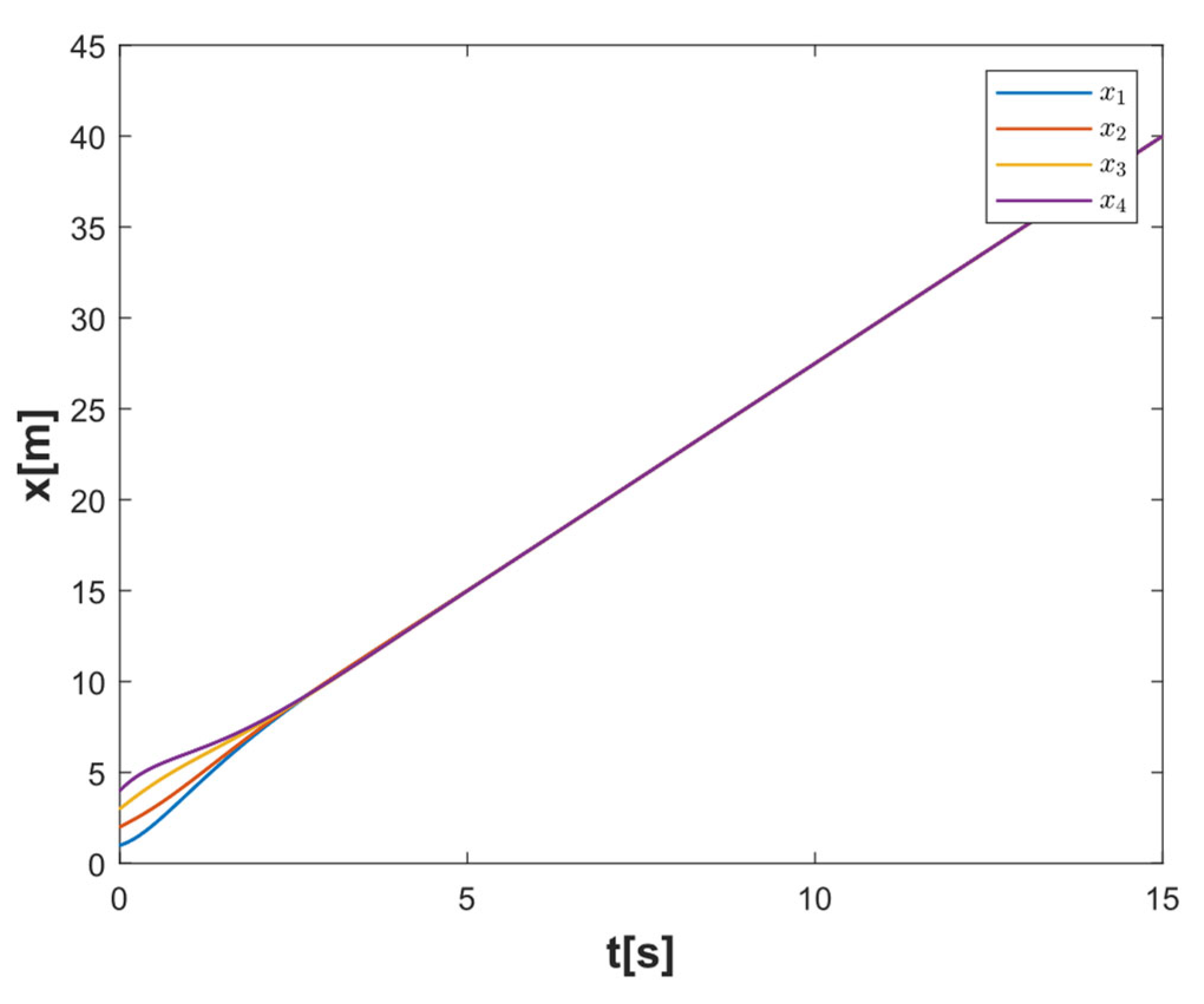

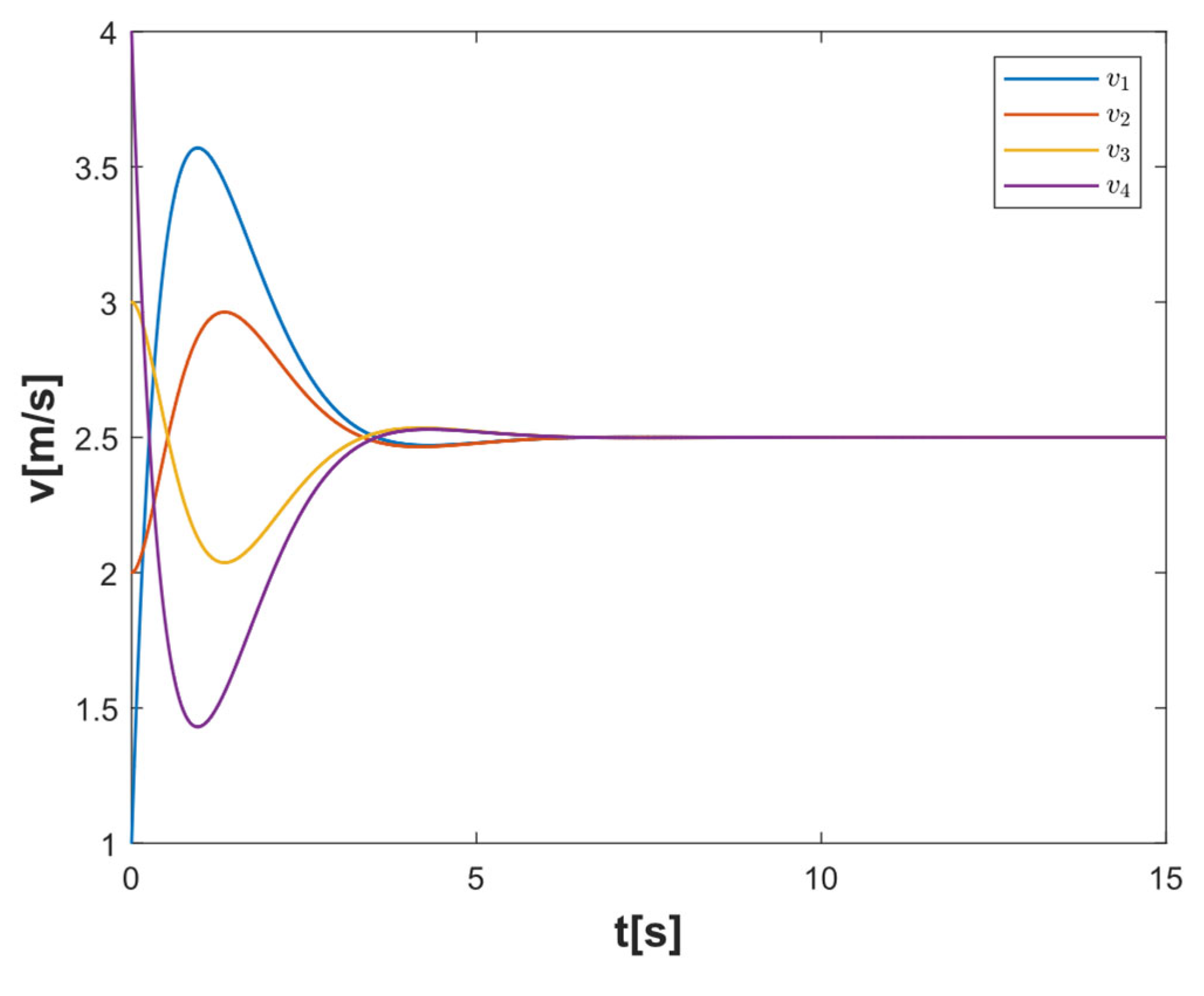

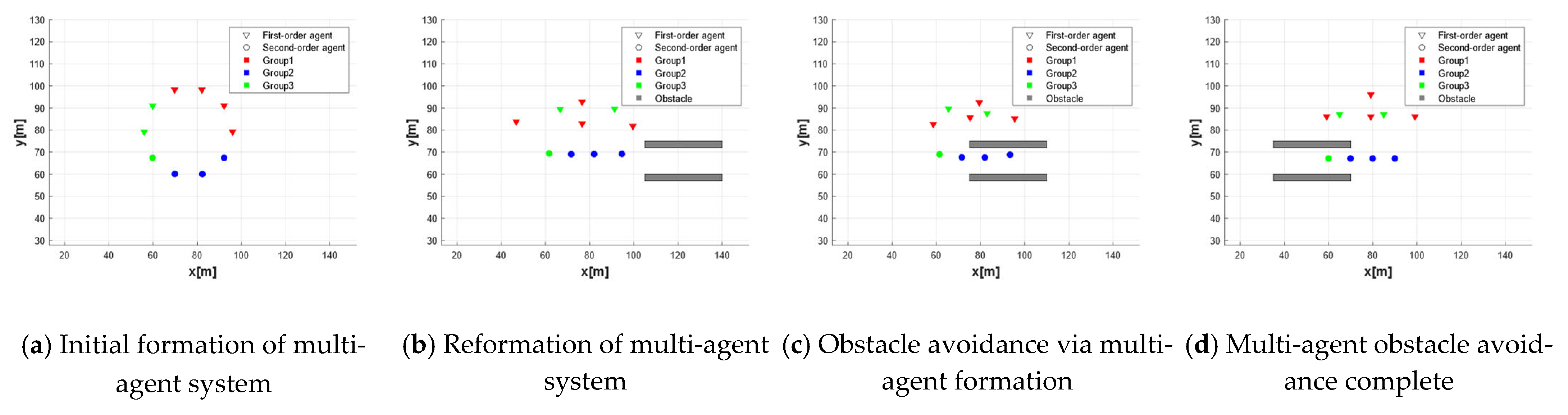

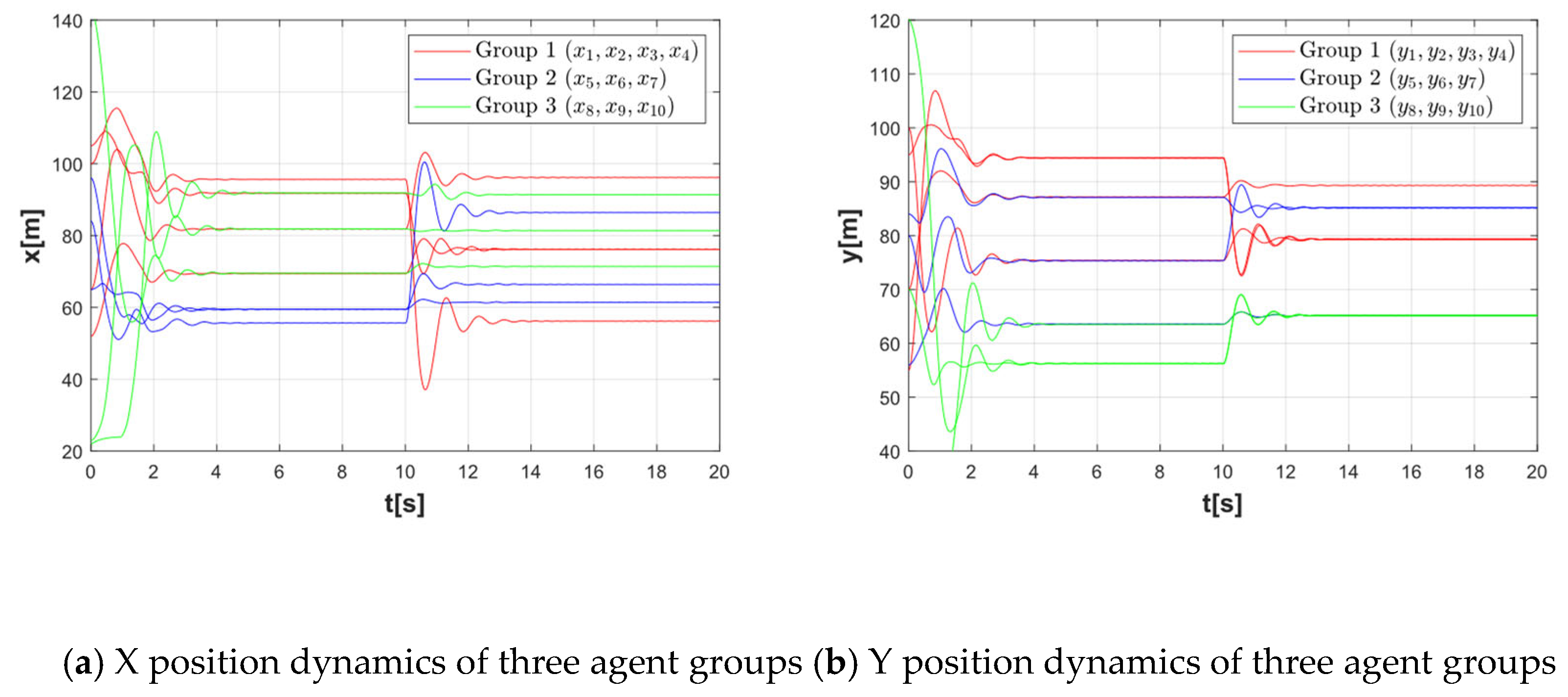

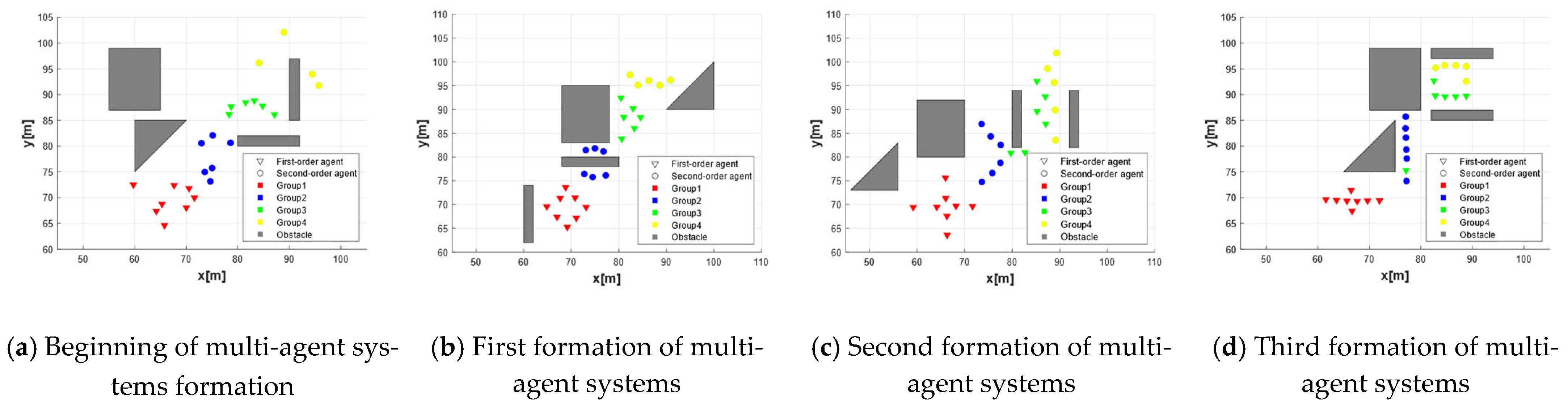

4.2. Formation and Obstacle Avoidance Performance Simulation Verification

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Criterion | Centralized Control | Distributed Control | Proposed Method |
|---|---|---|---|
| Scalability | not suitable for large-scale systems | better than centralized but still limited | scalable to large multi-agent systems |
| Fault Tolerance | single point of failure | resilient to some faults | robust against faults and jamming |
| Flexibility | requires centralized coordination | agents work independently but with fixed rules | dynamic subgrouping and real-time adaptability |
| System Stability | precise control | difficult to guarantee under changing conditions | ensures stability with time-varying topologies |
| Complexity of Environment | struggles with dynamic and complex environments | can handle complex environments but with limited real-time response | effective in complex and dynamic environments (urban flight, mountainous terrain) |

| Parameter | Value |
|---|---|
| | 50 m |
| | 40 m |
| | 5 m |
| | 0.5 m |
| | (8, 2) |
| | (1.5, 1.5) |
| | (1, 1.1, 1.2) |
| | 0.6 |
| | 0.4 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chang, X.; Yang, Y.; Zhang, Z.; Jiao, J.; Cheng, H.; Fu, W. Consensus-Based Formation Control for Heterogeneous Multi-Agent Systems in Complex Environments. Drones 2025, 9, 175. https://doi.org/10.3390/drones9030175