A Multi-Drone System Proof of Concept for Forestry Applications

Abstract

1. Introduction

1.1. The OPENSWARM EU Project

1.2. Research Question and Objectives

- Integrate and enhance state-of-the-art open source frameworks for:

- Develop and apply an auto-tuning particle swarm optimisation (PSO) algorithm to enhance flight control and stabilisation;

- Conduct field experiments in real-world forest environments to evaluate the system’s performance under operational conditions;

- Assess the potential scalability and adaptability of the system for broader forestry management applications, namely by benefiting from the OPENSWARM code base.

1.3. Organisation of the Article

2. Related Work

2.1. Drone-Based Applications in Forestry

2.2. Single and Multi-Drone SLAM

2.3. Autonomous Navigation with Forestry Drones

2.4. Swarm Robotics with Drones

3. Multi-Drone PoC System Integration

- UAV-specific Modules: Each drone is equipped with an onboard system that includes controllers, state estimators, and planners. These components ensure precise trajectory tracking and obstacle avoidance. The dataflow through sensors, such as LiDAR, GNSS, and IMU, supports real-time state estimation and navigation;

- Mapping and Localisation Framework: The system employs a distributed SLAM framework. It includes modules like loop closure, pose graph optimisation, and keyframe selection to enable robust map generation and localisation;

- Global Map Service: A centralised mapping service aggregates data from individual drones to build a comprehensive global map, facilitating coordinated navigation and task execution;

- Swarm Formation and Communication: The system uses a formation tracker and path generator to manage the movements of multiple drones. Communication is facilitated through a peer-to-peer network, enabling seamless lightweight data sharing and synchronisation among drones;

- Hardware Integration: The architecture incorporates sensors and actuators to provide environmental awareness and ensure smooth execution of control commands. These include 3D LiDAR, IMU, and GNSS modules;

- Wireless Network: The wireless network forms the backbone for inter-drone communication, ensuring efficient collaboration within the swarm.
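To make the role of the Global Map Service more concrete, the following is a minimal sketch (not the project's implementation) of how per-drone point clouds, assumed to be already expressed in a shared frame, could be aggregated and thinned with a voxel grid. The function name `merge_clouds`, the 0.5 m voxel size, and the sample points are illustrative assumptions.

```python
import numpy as np

def merge_clouds(clouds, voxel_size=0.5):
    """Concatenate point clouds (N_i x 3 arrays in a shared frame) and keep
    one representative point, the centroid, per occupied voxel."""
    points = np.vstack(clouds)
    # Integer voxel index of every point.
    keys = np.floor(points / voxel_size).astype(np.int64)
    # Group points that fall into the same voxel.
    _, inverse, counts = np.unique(
        keys, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.reshape(-1)
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    return sums / counts[:, None]

# Hypothetical clouds from two drones that overlap near the origin.
drone_a = np.array([[0.1, 0.1, 0.1], [0.2, 0.2, 0.2], [3.0, 0.0, 1.0]])
drone_b = np.array([[0.3, 0.1, 0.4], [3.1, 0.2, 1.1]])
global_map = merge_clouds([drone_a, drone_b])
```

Averaging per voxel keeps the aggregated map bounded in size as more drones contribute overlapping scans, which is one plausible reason to centralise this step.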

3.1. Planning, Navigation, and Control

3.1.1. MRS UAV System

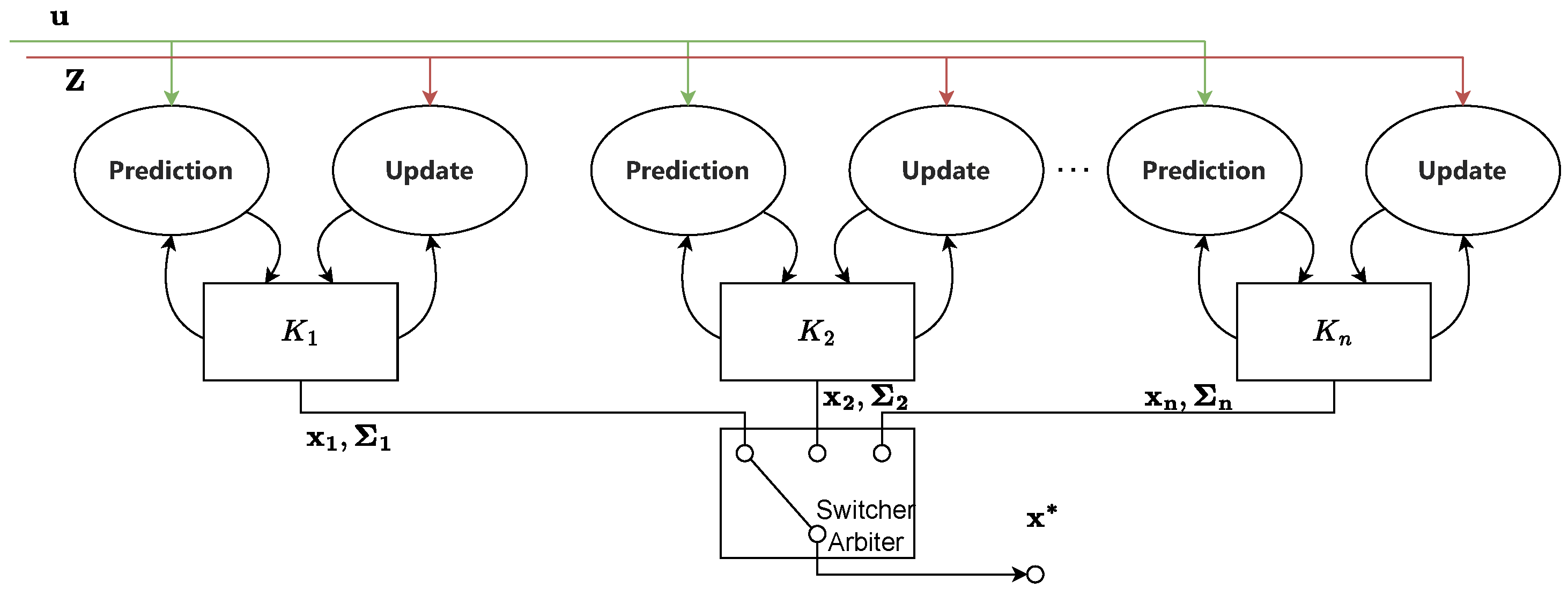

- Multi-Frame Localisation: This component provides drone state estimation using multiple sensors across various reference frames. It ensures accurate localisation even in GNSS-denied environments by fusing data from the low-level controller and related sensors (IMU, barometer, GNSS, etc.), as well as the output from SLAM methods.

- Feedback Control: The system features advanced feedback control mechanisms, including Model Predictive Control (MPC) and SE(3) geometric tracking, which enable precise and aggressive manoeuvring. These controllers are designed to handle noisy state estimates and ensure stability during complex flight operations.

- Modular Software Architecture: The MRS UAV System is modular, facilitating easy integration and customisation. This modularity allows for the incorporation of new control methods, sensor systems, and mission-specific functionalities.

- Realistic Simulations: The framework includes simulations of drones, allowing researchers to test and validate their algorithms in a controlled virtual environment before deploying them in real-world scenarios.

- Robust Localisation: The multi-frame localisation capability ensures the accurate positioning of multiple drones individually in dense forest environments, where GNSS signals are weak or obstructed. This is crucial for tasks, such as forest mapping and monitoring.

- Precise Control: The advanced feedback control mechanisms enable drones to navigate through complex and cluttered environments, such as forests, with high precision and stability.

- Scalability and Flexibility: The modular architecture allows for easy integration of additional sensors and control algorithms, making the system highly adaptable to various forestry applications.

- Comprehensive Testing: The simulation environment enables thorough testing and validation of algorithms, ensuring reliable performance in real-world forestry missions.

3.1.2. State Estimation

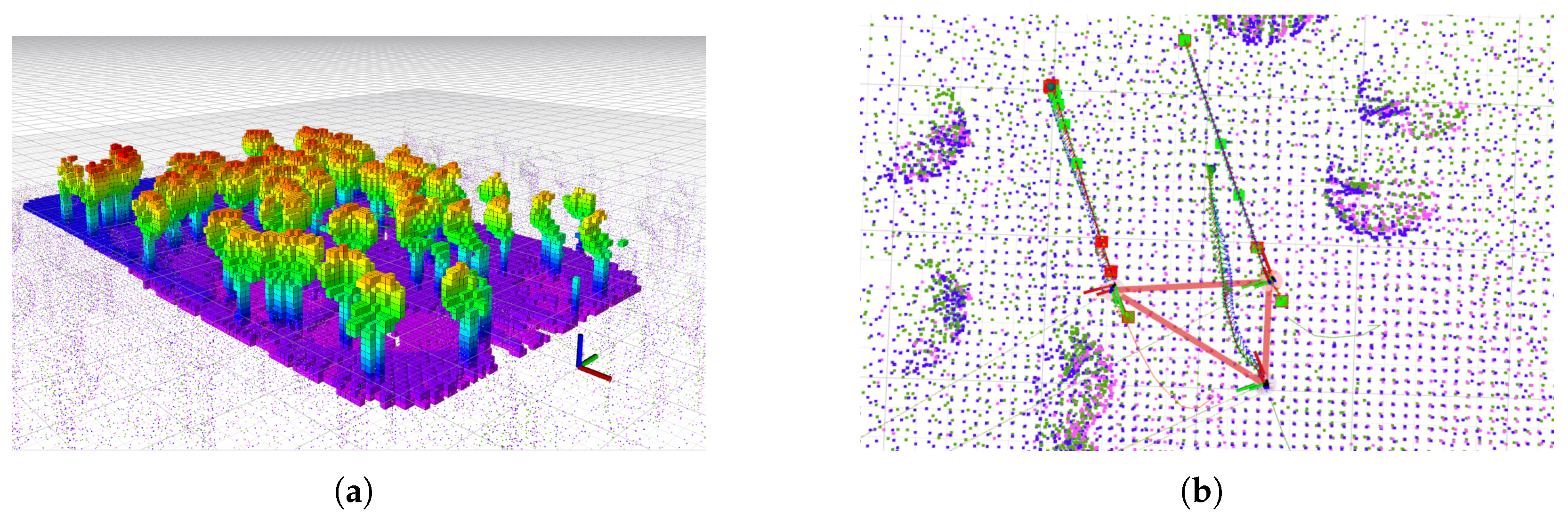

3.1.3. Octomap Planner

3.2. Localisation and Mapping

- Single-robot Front-end: It interfaces with single-robot front-ends based on various LiDAR odometry methods to ensure accurate pose estimation and local map generation;

- Distributed Loop Closure: It facilitates inter-robot collaboration by identifying overlapping areas between maps generated by different drones, using lightweight descriptors for efficient place recognition;

- Distributed Back-end: It integrates these loop closures into a pose graph optimisation process, refining the global map and ensuring consistent and accurate localisation across the swarm.
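As an illustration of what a lightweight place-recognition descriptor can look like, the sketch below builds a simplified polar height grid in the spirit of Scan Context and reduces it to a rotation-invariant ring key. It is not the DCL-SLAM implementation; the bin counts, the 80 m range, and all function names are assumptions made for this example.

```python
import numpy as np

def scan_descriptor(points, num_rings=20, num_sectors=60, max_range=80.0):
    """Polar grid (rings x sectors) holding the maximum point height per cell.
    Heights are assumed non-negative; empty cells stay at zero."""
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    r = np.hypot(x, y)
    keep = r < max_range
    ring = np.minimum(
        (r[keep] / max_range * num_rings).astype(int), num_rings - 1
    )
    theta = np.arctan2(y[keep], x[keep]) + np.pi  # shifted to [0, 2*pi]
    sector = np.minimum(
        (theta / (2.0 * np.pi) * num_sectors).astype(int), num_sectors - 1
    )
    desc = np.zeros((num_rings, num_sectors))
    np.maximum.at(desc, (ring, sector), z[keep])
    return desc

def ring_key(desc):
    """Mean height per ring; a yaw rotation only shifts sectors, so this
    summary is (approximately) invariant to the drone's heading."""
    return desc.mean(axis=1)

def key_distance(key_a, key_b):
    """Small distance suggests the two scans may show the same place."""
    return float(np.linalg.norm(key_a - key_b))

# Synthetic scan and the same scan seen after a 90 degree yaw rotation.
rng = np.random.default_rng(0)
scan = rng.uniform([-50.0, -50.0, 0.0], [50.0, 50.0, 10.0], size=(2000, 3))
rotated = np.column_stack([-scan[:, 1], scan[:, 0], scan[:, 2]])
same_place = key_distance(ring_key(scan_descriptor(scan)),
                          ring_key(scan_descriptor(rotated)))
```

Only the short ring key would need to be exchanged between drones to shortlist candidate overlaps, which keeps the communication load low before any full scan is shared.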

3.2.1. Single-Robot Front-End

- Preprocessing: This step involves extracting features from raw LiDAR scans. These features include edge and planar points, which are critical for accurate pose estimation.

- IMU Integration: IMU measurements are integrated to provide high-frequency motion estimates, which can be used to predict the drone’s pose between LiDAR scans.

- Factor Graph Optimisation: LIO-SAM constructs a factor graph that incorporates LiDAR, IMU, and GNSS measurements. The factor graph is optimised using an iterative smoothing algorithm, which refines the pose estimates by minimising the overall error.

- Loop Closure: The framework also includes a loop closure mechanism to detect and correct drift by recognising previously visited locations. This enhances the long-term accuracy and consistency of the map.

3.2.2. Distributed Loop Closure

3.2.3. Distributed Back-End

- Odometry factors: these are constraints based on the incremental movement of a robot (using LiDAR odometry);

- Intra-robot loop closure factors: these help to correct drift within a single robot’s trajectory;

- Inter-robot loop closure factors: these constraints come from loop closures between different robots.
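The interplay of the three factor types can be illustrated with a deliberately simplified, translation-only pose graph solved by linear least squares. This is a sketch under strong assumptions: real back-ends optimise full 6-DoF poses with nonlinear solvers, and the pose indices, measurements, and weights below are invented for the example.

```python
import numpy as np

def solve_pose_graph(factors, num_poses):
    """Each factor is (i, j, measured displacement from pose i to pose j,
    weight). Returns the least-squares 2D position of every pose."""
    rows, rhs = [], []
    # Prior factor anchoring pose 0 at the origin (removes gauge freedom).
    anchor = np.zeros(num_poses)
    anchor[0] = 1.0
    rows.append(anchor)
    rhs.append(np.zeros(2))
    for i, j, meas, weight in factors:
        row = np.zeros(num_poses)
        row[i], row[j] = -weight, weight
        rows.append(row)
        rhs.append(weight * np.asarray(meas, dtype=float))
    A, b = np.vstack(rows), np.vstack(rhs)
    positions, *_ = np.linalg.lstsq(A, b, rcond=None)
    return positions

# Robot A owns poses 0..2, robot B owns poses 3..4 (hypothetical numbering).
odometry = [(0, 1, [1.0, 0.0], 1.0), (1, 2, [1.1, 0.0], 1.0),
            (3, 4, [0.0, 1.0], 1.0)]
intra_loops = [(0, 2, [2.0, 0.0], 2.0)]   # robot A revisits its start area
inter_loops = [(1, 4, [0.0, 0.5], 2.0)]   # robot B recognises robot A's map
positions = solve_pose_graph(odometry + intra_loops + inter_loops, num_poses=5)
```

In this toy graph the intra-robot loop closure pulls robot A's drifting odometry (1.0 + 1.1) back towards the measured 2.0, while the inter-robot loop closure is the only constraint that places robot B's poses in robot A's frame.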

3.2.4. Advancements in Frameworks

- Enable IMU frequency configuration: Add support for IMU nominal frequency parameter to ensure synchronisation with system timing, essential for accurate localisation.

- Refine GNSS factor and loop closure: Correct GNSS factor loop and adjust loop closure topic names to ensure robust localisation and prevent data processing errors. GPS factors have been added to the PGO module.

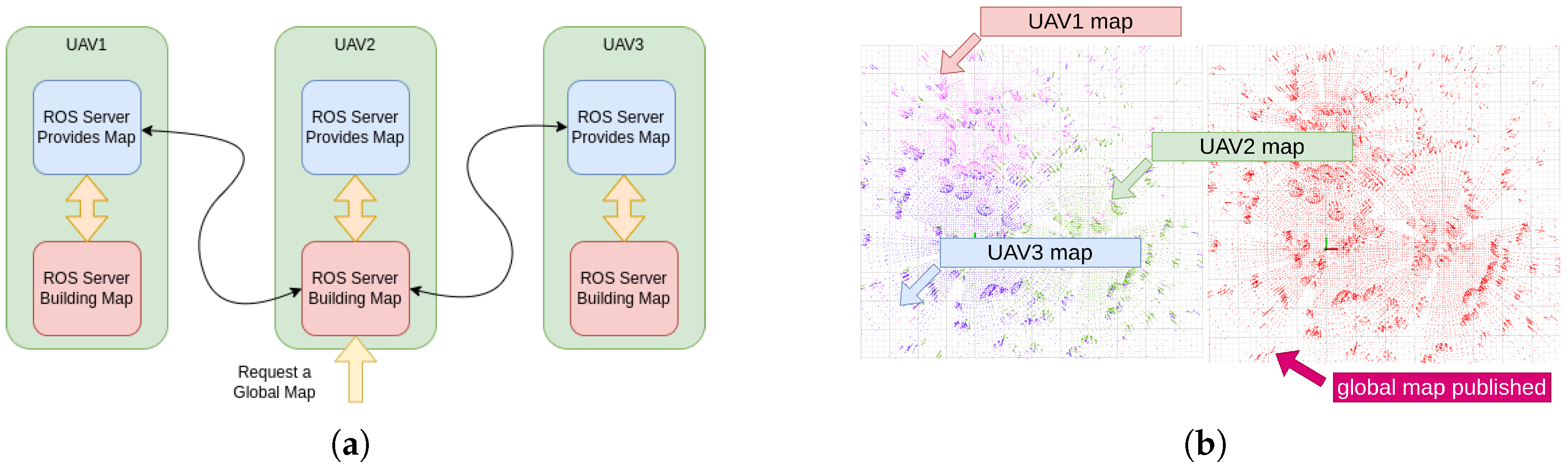

- Add support for global map publishing and search for initial pose: Include functions for loading and publishing global maps, alongside initial pose search using scan context descriptors (SCD), to improve mapping capabilities.

- Implement node management: Add functionality for node termination, restart, and action server with feedback, allowing dynamic control and monitoring of the SLAM process.

- Incorporate covariance monitoring and odometry information: Integrate covariance thresholds and odometry covariance data to enhance state estimation reliability.

- Update graph management and localisation modes: Add save/load functions for factor graphs and introduce various localisation modes to support flexible and adaptable mapping solutions.

- Enable global map service and consistent naming conventions: Integrate a global map service and standardise naming and frames for compatibility with the MRS UAV System, facilitating a unified spatial understanding across the swarm.

- Enhanced Coverage: By utilising multiple drones, DCL-SLAM can cover larger areas more efficiently than single-robot SLAM systems. This is crucial for extensive forest mapping and monitoring tasks.

- Improved Accuracy: The collaborative nature of DCL-SLAM allows for the sharing and fusion of data from multiple sources, leading to more accurate and robust localisation and mapping.

- Scalability: DCL-SLAM’s distributed architecture makes it highly scalable, allowing for the addition of more drones to the network without significant performance degradation.

- Resilience to Environmental Changes: The framework’s ability to integrate data from multiple drones helps to quickly adapt to dynamic environmental changes, such as moving obstacles and varying lighting conditions in forests.

- High Accuracy and Robustness: The integration of LiDAR and IMU data provided by LIO-SAM ensures high accuracy in pose estimation, even in environments with limited GNSS signals, such as dense forests.

3.3. Formation Flight

3.3.1. Swarm Formation

- An edge connecting vertices $i$ and $j$ implies that robots $i$ and $j$ can measure the geometric distance between each other;

- All vertices are interconnected;

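- Each edge of the graph G is associated with a non-negative weight, which is given by $w_{ij} = \lVert \mathbf{p}_i - \mathbf{p}_j \rVert^{2}$, where $\mathbf{p}_i$ is the position of robot $i$ and $\lVert \cdot \rVert$ denotes the Euclidean norm.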

- Formation Similarity Metric: This metric uses undirected graphs to represent the formation of drones, with vertices representing individual drones and edges representing the distances between them. The Laplacian matrix of the graph [52] is used to measure the similarity between the current and desired formations, ensuring that the swarm maintains its shape while avoiding obstacles.

- Optimisation Framework: The framework formulates the trajectory generation as an unconstrained optimisation problem. The cost function includes terms for control effort, total time, obstacle avoidance, formation similarity, swarm reciprocal avoidance, dynamic feasibility, and uniform distribution of constraint points. The optimisation process simultaneously balances these factors to generate collision-free trajectories that preserve the formation.

- Distributed Architecture: The framework is designed for distributed implementation, where each drone independently calculates its trajectory based on local information and shared data from other drones. This approach enhances scalability and robustness, allowing the swarm to adapt to dynamic environments and maintain formation in real time.
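A Laplacian-based formation similarity metric can be sketched as follows. The example assumes a complete graph with squared Euclidean distances as edge weights and compares symmetric normalised Laplacians with a Frobenius norm; these choices, the function names, and the sample formation are assumptions for illustration and may differ from the exact formulation used in the system.

```python
import numpy as np

def normalized_laplacian(positions):
    """Symmetric normalised Laplacian of the complete formation graph with
    edge weights w_ij = ||p_i - p_j||^2 (assumed weighting)."""
    diff = positions[:, None, :] - positions[None, :, :]
    adjacency = np.sum(diff ** 2, axis=-1)   # zero diagonal by construction
    degree = adjacency.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(degree)
    laplacian = np.diag(degree) - adjacency
    return d_inv_sqrt[:, None] * laplacian * d_inv_sqrt[None, :]

def formation_error(current, desired):
    """Squared Frobenius distance between the two normalised Laplacians."""
    delta = normalized_laplacian(current) - normalized_laplacian(desired)
    return float(np.sum(delta ** 2))

# Hypothetical three-drone formation with staggered heights.
desired = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 2.0], [1.0, 2.0, 4.0]])
yaw = np.deg2rad(30.0)
rot = np.array([[np.cos(yaw), -np.sin(yaw), 0.0],
                [np.sin(yaw), np.cos(yaw), 0.0],
                [0.0, 0.0, 1.0]])
# Same shape after rotation, uniform scaling, and translation.
moved = 1.5 * desired @ rot.T + np.array([10.0, -3.0, 5.0])
# One drone pushed 3 m sideways, e.g., to avoid a tree.
distorted = desired + np.array([[0.0, 0.0, 0.0], [3.0, 0.0, 0.0], [0.0, 0.0, 0.0]])

error_moved = formation_error(moved, desired)
error_distorted = formation_error(distorted, desired)
```

Because the weights depend only on inter-drone distances and the Laplacian is normalised by the degrees, the metric ignores translation, rotation, and uniform scaling of the swarm and penalises only changes in shape, which is what allows the formation to squeeze through gaps without the cost exploding.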

3.3.2. Swarm-MRS Bridge

3.4. Global Map Service

3.5. Flight Control Optimisation

3.5.1. Control Parameters

3.5.2. Particle Swarm Optimisation (PSO)

3.5.3. Auto-Tuning Algorithm

Algorithm 1. Auto-tuning algorithm for drone flight control using PSO.

3.6. Wireless Communication

4. Experimental Results

4.1. Drone Configuration: Scout v3

Technical Specifications

4.2. Flight Control Optimisation

4.2.1. FCO Experimental Protocol

- I. Initial Setup:
  - Select an appropriate test environment with minimal external disturbances and obstacles.
  - Ensure all safety measures are in place, including the presence of safety personnel and emergency procedures.
  - Prepare the drone by fully charging the batteries and verifying the proper functioning of all sensors and communication systems.
  - Perform pre-flight checks to ensure the drone is ready for take-off, including verifying the integrity of the propulsion system, control surfaces, and sensor calibration.

- II. Execution of Iterations: For each iteration, follow these steps:
  - (a) Take-off: Safely take off the drone and bring it to a designated hover position at a predefined altitude.
  - (b) Evaluation of Particles: Run the algorithm online to sequentially evaluate the solutions of all particles within the population. Each particle updates the control parameters accordingly. The drone attempts to maintain a stable hover while executing the control parameters associated with each particle.
  - (c) Record best particle: Record the best known global position g among all particles, i.e., the one with the best value of the fitness function f.
  - (d) Landing: After evaluating all particles, safely land the drone and power it down.

- III. Battery Swapping/Recharging:
  - Swap the depleted battery with a fully charged one or recharge the battery to ensure uninterrupted operation for the next iteration.
  - Allow the drone to cool down if necessary to prevent overheating.

- IV. Iteration Resumption:
  - Resume the operation for the next iteration by repeating steps II and III until the algorithm converges to an optimal solution or the predefined number of iterations is reached.
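The iteration loop of this protocol follows the usual structure of particle swarm optimisation, sketched below as a plain PSO. This is not the authors' auto-tuning algorithm: any adaptive elements are not reproduced, the fitness function is a synthetic stand-in for a hover-error metric measured in flight, and the gain bounds, swarm size, and coefficients are hypothetical.

```python
import numpy as np

def hover_cost(gains):
    """Stand-in fitness f. In the field this would be an error metric
    recorded while the drone hovers with `gains`; the optimum is invented."""
    target = np.array([3.0, 0.5, 1.2])
    return float(np.sum((gains - target) ** 2))

def pso(fitness, lower, upper, num_particles=10, iterations=50,
        inertia=0.7, c_personal=1.5, c_global=1.5, seed=0):
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    x = rng.uniform(lower, upper, size=(num_particles, lower.size))
    v = np.zeros_like(x)
    p_best = x.copy()
    p_cost = np.array([fitness(p) for p in x])
    g = p_best[np.argmin(p_cost)].copy()      # best known global position
    g_cost = float(p_cost.min())
    for _ in range(iterations):               # one flight per iteration
        for k in range(num_particles):        # particles evaluated in turn
            r1, r2 = rng.random(lower.size), rng.random(lower.size)
            v[k] = (inertia * v[k]
                    + c_personal * r1 * (p_best[k] - x[k])
                    + c_global * r2 * (g - x[k]))
            x[k] = np.clip(x[k] + v[k], lower, upper)  # keep gains in bounds
            cost = fitness(x[k])
            if cost < p_cost[k]:
                p_best[k], p_cost[k] = x[k].copy(), cost
                if cost < g_cost:
                    g, g_cost = x[k].copy(), cost
    return g, g_cost

best_gains, best_cost = pso(hover_cost, lower=[0.0, 0.0, 0.0],
                            upper=[10.0, 2.0, 5.0])
```

Clipping each candidate to the bounds matters more on a real airframe than in simulation, since every particle is flown and an out-of-range gain set could destabilise the hover.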

4.2.2. Results and Discussion

4.3. Collaborative Forestry Mapping

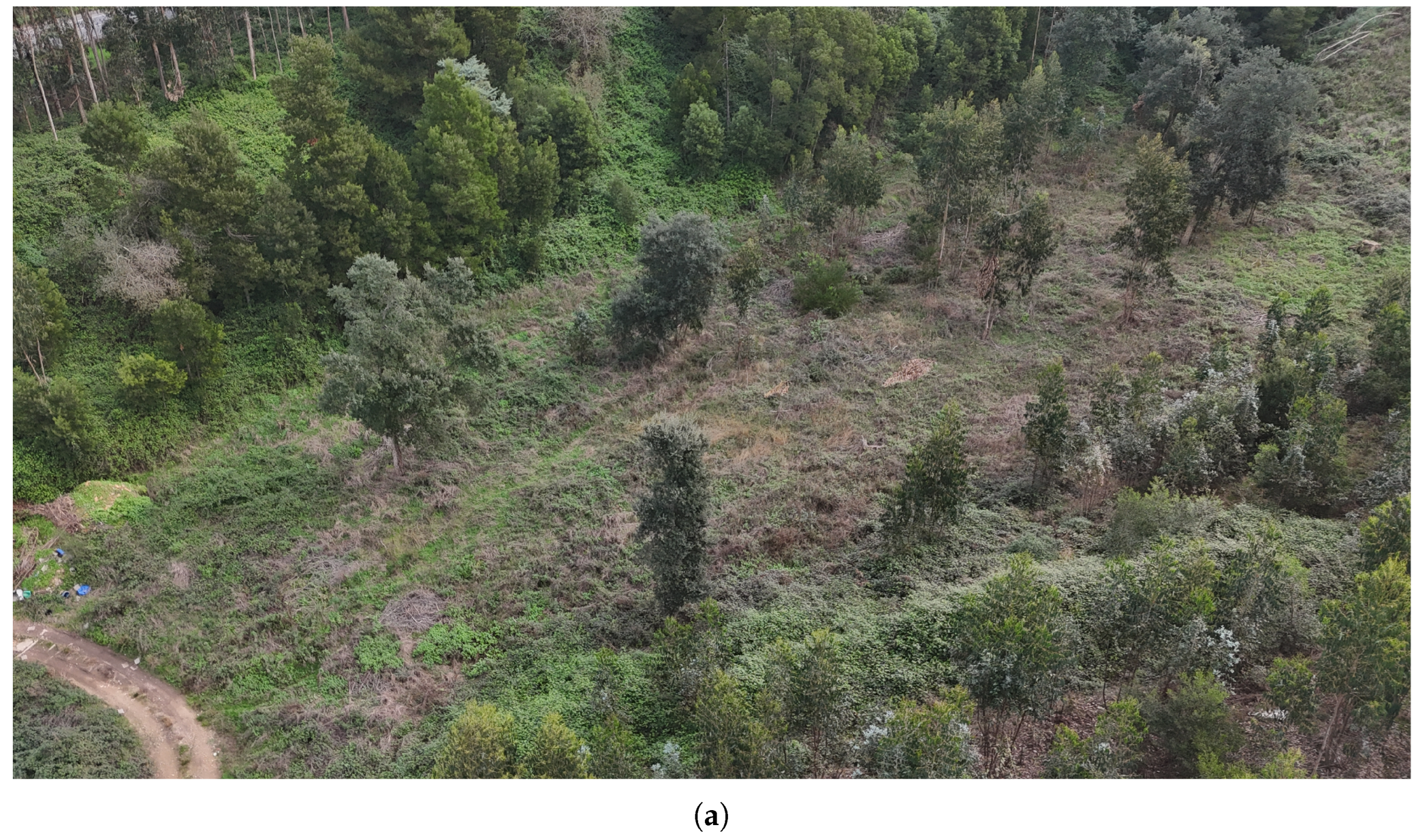

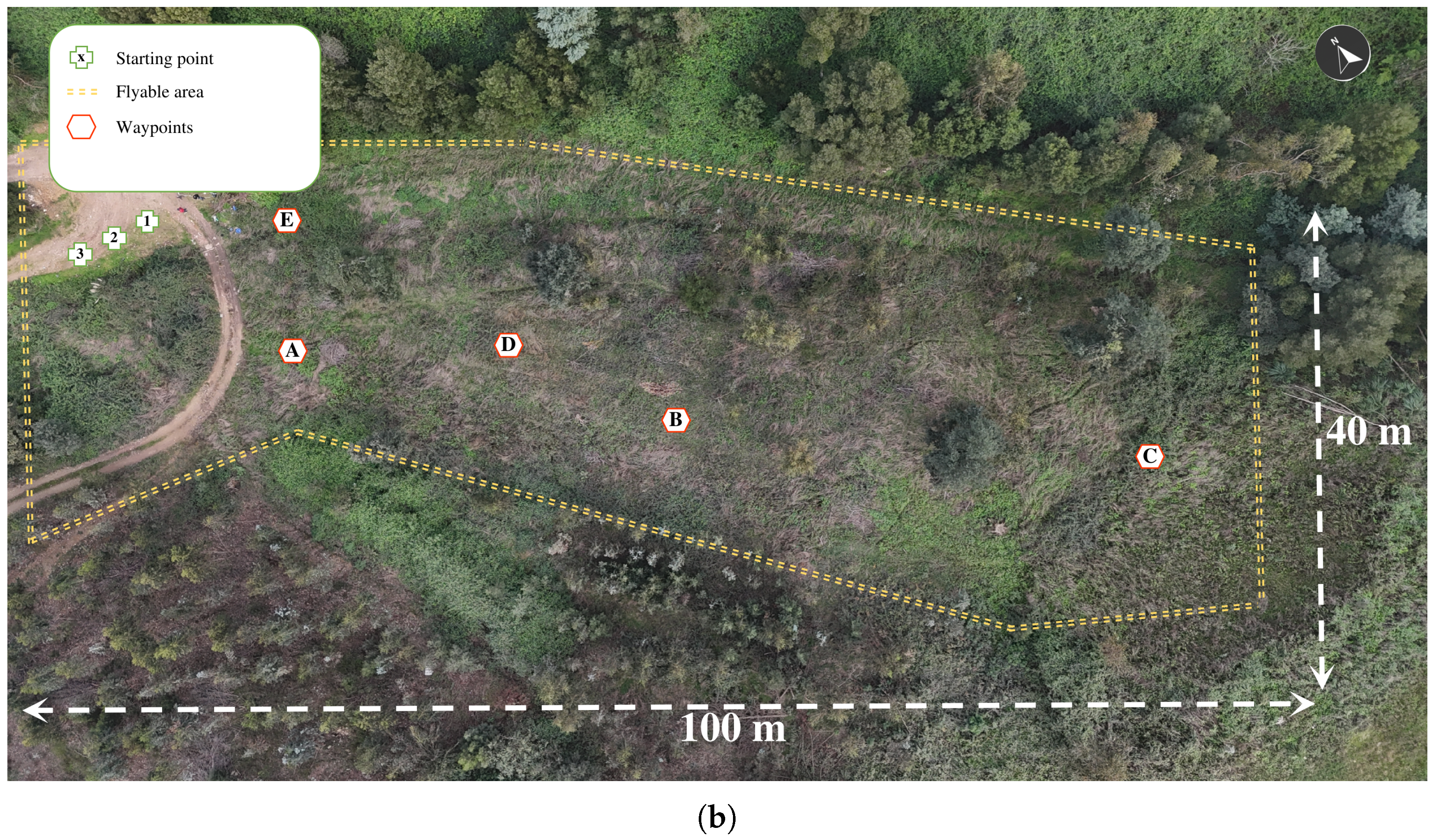

4.3.1. Forest Site Description

4.3.2. Experimental Protocol

- I.

- Initial Setup:

- As a safety mechanism, every drone was equipped with a radio receiver running an open-source radio control 2.4 GHz link connected to the lowest-level FCU. A single centralised radio control was used as a transmitter to broadcast safety commands, which was utilised solely for triggering the take-off and executing emergency landings in case of any malfunction or unexpected issue.

- Based on the MRS UAV System, a set of predefined launch files was configured to streamline the deployment of all necessary ROS packages, ensuring seamless integration and initialisation of the multi-drone system functionalities.

- II.

- Mission planning:

- A collaborative flight strategy was devised using waypoint-based (Figure 9b) path planning to distribute tasks among drones while ensuring minimal overlap and avoiding potential collisions.

- III.

- Software configurations:

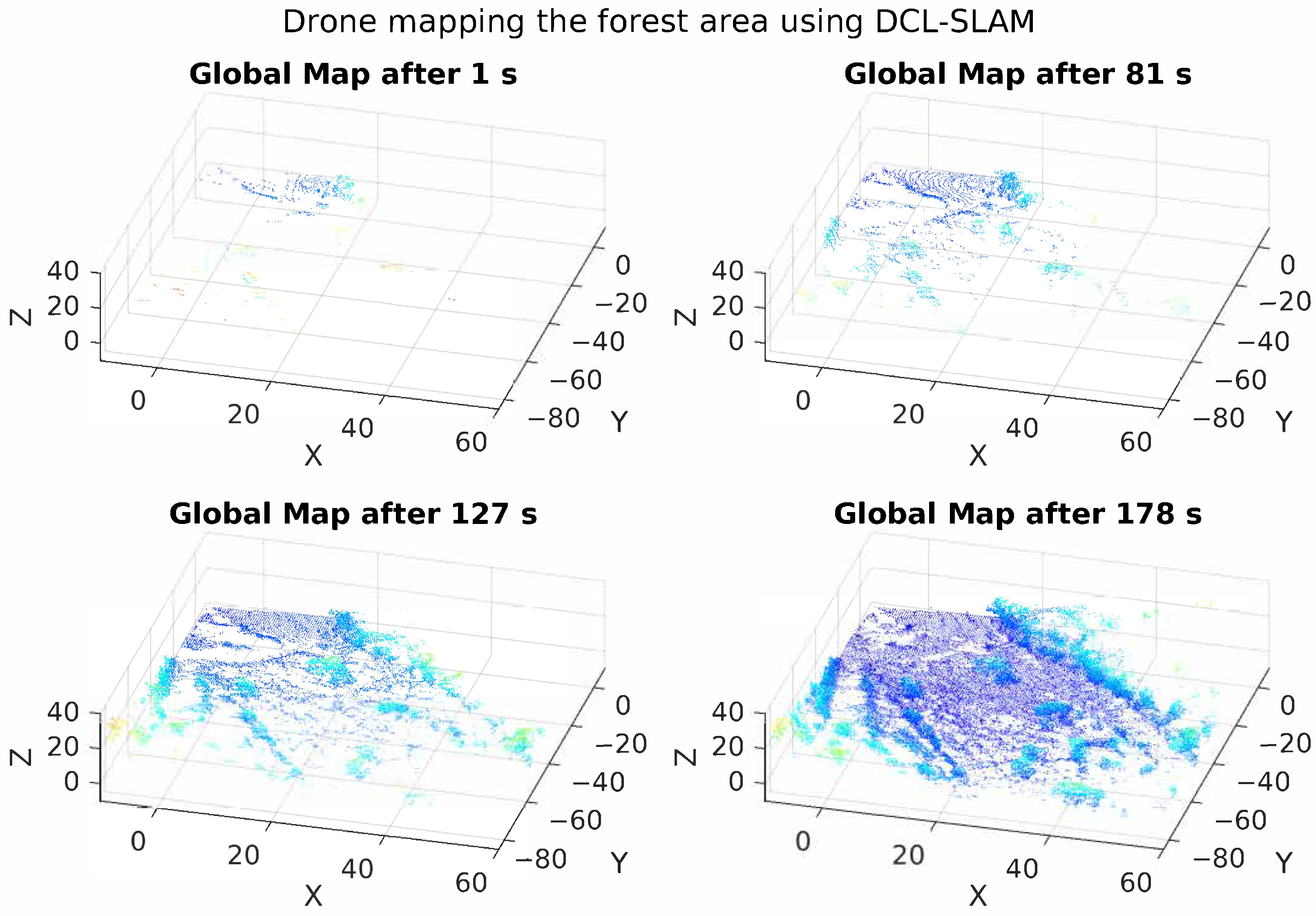

- The system utilised a fleet of three Scout v3 drones (drones , , and ), using the LiDAR to generate a detailed 3D representation of the forest structure, which was further processed using DCL-LIO-SLAM and MRS OctoMap to create an efficient volumetric map for path planning, GNSS for georeferencing, and communication modules for real-time data sharing and coordination.

- IV.

- Field deployment:

- A multi-agent framework facilitates autonomous task distribution and path optimisation, while a wireless network enables data transmission between the drones;

- Field operations were conducted using a swarm formation (Section 3.3.1) to optimise coverage and efficiency during the forestry mapping missions. The drones should try to maintain a triangle formation and keep a height difference between them of 2 m. The drone was chosen as the leader of the formation.

- V.

- Data Acquisition:

- During the data acquisition phase, each drone recorded its own set of data in a standardised ROS bag file format, capturing synchronised streams from the onboard sensors. These bag files served as comprehensive logs of the drones’ flight activities and sensor outputs. The decentralised recording approach ensured that data from each drone were securely stored locally, reducing the risk of data loss during multi-drone operations.

- VI.

- Post-processing:

- Following the missions, the bag files were retrieved and analysed in post-processing. This analysis involved extracting key metrics, such as point cloud data for forest structure. The use of individual bag files enabled detailed performance evaluations for each drone and facilitated the integration of datasets to construct a cohesive and high-resolution map of the forest site.
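The triangle formation with a 2 m height separation used during field deployment can be expressed as target positions relative to the leader. The sketch below is one possible interpretation, assuming an equilateral triangle with the leader at the front vertex and each follower stacked 2 m above the previous drone; the 4 m side length, the frame convention, and the function name are hypothetical.

```python
import numpy as np

def triangle_formation(leader_xyz, heading_rad, side=4.0, height_step=2.0):
    """Return a 3x3 array of target positions: leader first, then two
    followers behind it on an equilateral triangle of edge `side`."""
    back = side * np.sqrt(3.0) / 2.0
    # Offsets in the leader's frame: x forward, y left, z up.
    body = np.array([[0.0, 0.0, 0.0],
                     [-back, side / 2.0, height_step],
                     [-back, -side / 2.0, 2.0 * height_step]])
    c, s = np.cos(heading_rad), np.sin(heading_rad)
    yaw = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    # Rotate the offsets into the world frame and shift to the leader.
    return np.asarray(leader_xyz, dtype=float) + body @ yaw.T

# Leader at 8 m altitude, heading 90 degrees (hypothetical values).
targets = triangle_formation([10.0, 5.0, 8.0], np.deg2rad(90.0))
```

Separating the drones vertically as well as horizontally gives an extra safety margin if the horizontal formation has to compress between trees.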

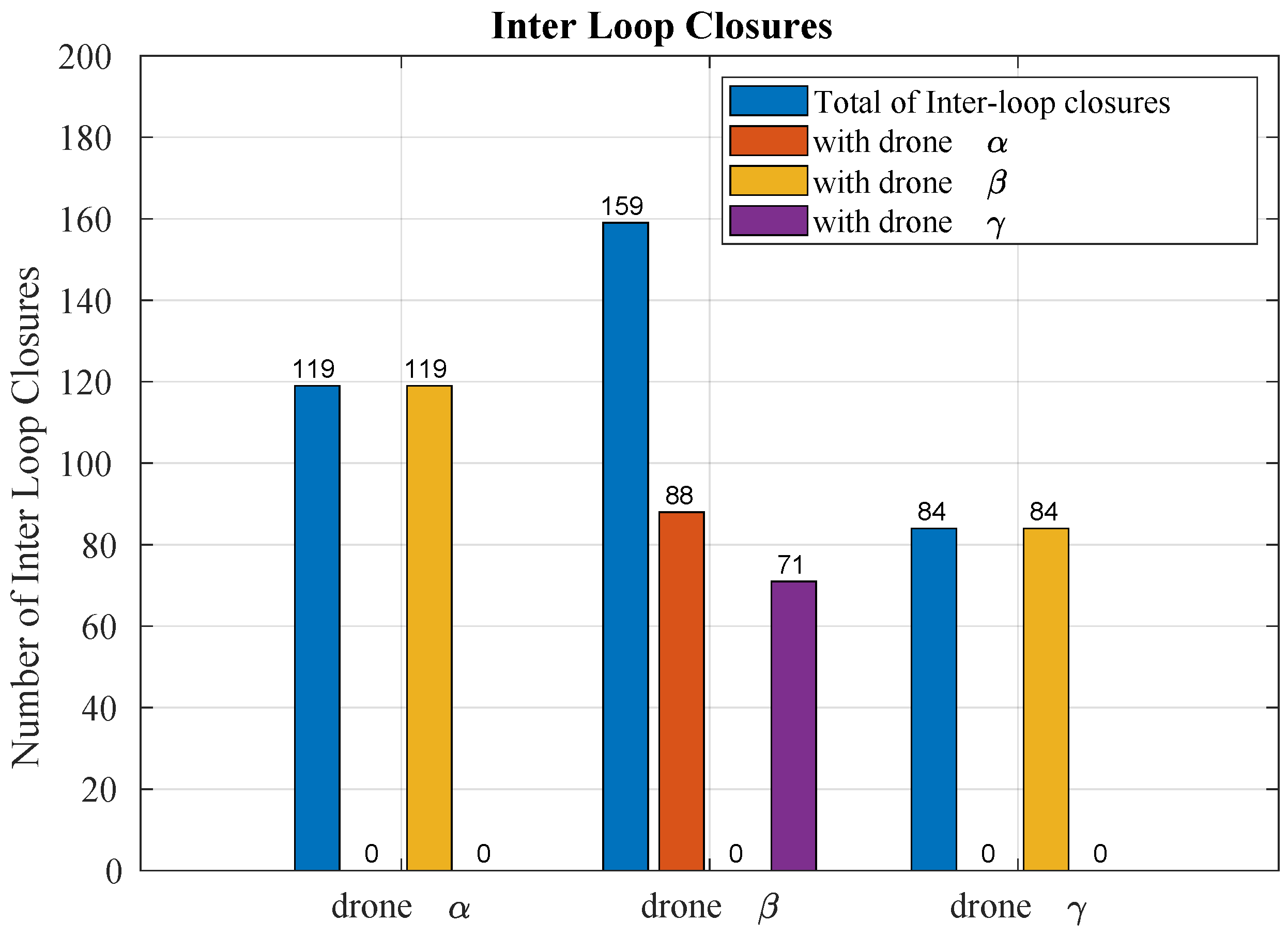

4.3.3. Results and Discussion

5. Implications and Future Work

5.1. Integration with OPENSWARM Code Base

- Application Performance Monitoring: Monitoring and managing the performance of applications running on the drones is essential for optimising their operation. We propose to enable the OPENSWARM telemetry framework through existing packages, such as rosmon (https://github.com/xqms/rosmon, accessed on 20 January 2025) or diagnostics (https://github.com/ros/diagnostics, accessed on 20 January 2025), to create a comprehensive monitoring solution.

- Collaborative AI Task Distribution: Efficient task distribution among swarm members is critical for maximising collective performance. In this work, we integrate a Swarm Formation package as a preliminary step. Future improvements could include implementing self-organising maps (SOMs) for coordinating task allocation dynamically based on real-time environmental inputs and drone capabilities [59].

- Energy-Aware Collaborative Task Scheduler: Efficient scheduling of tasks based on available energy resources ensures optimal resource utilisation across the swarm. This capability will be achieved through an energy-aware task scheduler that evaluates each drone’s current energy levels in real time, dynamically assigning tasks that align with each unit’s energy availability and the mission’s overall energy budget.
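As a purely illustrative sketch of the energy-aware scheduling idea (it is not part of the OPENSWARM code base, and every name and number here is hypothetical), a greedy scheduler could assign the most expensive task first to the drone with the largest usable energy budget while keeping a reserve for the return flight:

```python
def assign_tasks(tasks, energy, reserve=0.2):
    """tasks: task name -> estimated energy cost (Wh).
    energy: drone name -> remaining battery energy (Wh).
    A fraction `reserve` of each battery is never allocated."""
    budget = {drone: (1.0 - reserve) * wh for drone, wh in energy.items()}
    plan = {}
    # Costliest tasks first, so they are placed while budgets are largest.
    for name, cost in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        drone = max(budget, key=budget.get)
        if budget[drone] < cost:
            plan[name] = None          # no drone can afford this task
            continue
        plan[name] = drone
        budget[drone] -= cost
    return plan, budget

tasks = {"map_north": 30.0, "map_south": 25.0, "relay": 10.0}
energy = {"uav1": 60.0, "uav2": 50.0, "uav3": 20.0}
plan, budget = assign_tasks(tasks, energy)
```

A real scheduler would also have to re-plan as energy estimates change in flight; the greedy rule is only meant to show how per-drone energy levels and a mission-level budget can enter the assignment.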

5.2. Potential Improvements

- Enhanced Sensor Fusion Techniques: Although the integration of LiDAR, IMU, and GNSS data has proven effective in operating in forestry scenarios, further advancements in sensor fusion techniques could improve the system’s accuracy and robustness. Incorporating additional sensors, such as multispectral cameras, can provide richer environmental data, aiding in tasks, such as species identification and health monitoring of forest areas. Advanced algorithms, like deep learning-based sensor fusion, could be explored to optimally combine data from these diverse sensors.

- Adaptive Control Algorithms: While the current SE(3) control framework offers robust performance, adaptive control algorithms could be developed to dynamically adjust control parameters in real time based on environmental conditions and mission requirements. Techniques like model predictive control and reinforcement learning could be employed to enhance the adaptability and efficiency of the flight control system, ensuring stable operation even in rapidly changing forest environments.

- Advanced Collaborative Algorithms: Enhancing the collaborative capabilities of the drone swarm through more sophisticated algorithms for task allocation and coordination can improve the overall efficiency and effectiveness of the system. Exploring game theory-based approaches, market-based mechanisms, and advanced AI-driven strategies for dynamic task assignment can optimise resource utilisation and mission success rates.

- Robust Communication Networks: Although dependable WiFi networking has been integrated into the system, it was not the focus of this work. Further improvements in communication protocols and ad hoc infrastructure could therefore enhance reliability and data throughput. Research into multi-hop communication networks, adaptive bandwidth allocation, and fault-tolerant communication strategies could ensure continuous and stable connectivity among drones, even in challenging environments with high signal attenuation.

- Scalability and Modular Architecture: To support larger and more diverse drone swarms, the system architecture could be made more modular and scalable. Implementing microservice architecture and containerisation (e.g., using Singularity as an approach already adopted by the authors) could facilitate easier deployment, scaling, and maintenance of the system. This approach would allow new functionalities to be added or existing ones to be updated without disrupting the overall system operation.

- User-Friendly Interfaces and Tools: Developing intuitive user interfaces and tools for mission planning, monitoring, and analysis could improve the usability of the system for end-users, such as forest managers and researchers. Graphical interfaces for swarm programming, real-time visualisation of drone data, and easy-to-use mission planning tools would enhance user experience and operational efficiency.

- Migrating from ROS1 to ROS2: Migration is particularly beneficial for multi-robot systems [60]. ROS2 eliminates the need for the central ROS Master node, enabling inherently distributed communication. This avoids the bottlenecks and single points of failure that hinder scalability and robustness in ROS1, which this work overcomes by adopting the nimbro_network package. Additionally, ROS2 integrates the Data Distribution Service (DDS) as its communication middleware, which offers enhanced support for real-time systems and quality-of-service configurations. However, the migration would require extra effort to improve data exchange over high-latency, low-quality, dynamic networks, for which ROS2 is not yet well prepared.

5.3. Broader Applications

- Agriculture: In agriculture, the multi-drone system can be utilised for precision farming practices [61]. Drones equipped with multispectral cameras can monitor crop health, detect pest infestations, and assess soil moisture levels. The system’s ability to perform collaborative mapping and real-time data analysis can optimise irrigation schedules, apply fertilisers precisely, and improve overall crop yields.

- Disaster Response and Management: During natural disasters, such as wildfires, earthquakes, and floods, the multi-drone system can play a critical role in search and rescue operations [62]. Drones can quickly cover large areas to locate survivors, assess damage, and provide real-time situational awareness to emergency responders. The collaborative capabilities of the system ensure efficient coordination and resource allocation, enhancing the effectiveness of disaster response efforts.

- Environmental Monitoring and Conservation: Beyond forestry, the multi-drone system can be employed for broader environmental monitoring and conservation efforts [63]. Drones can track wildlife populations, monitor habitats, and assess the health of ecosystems. They can also detect and track illegal activities, such as poaching and logging, providing crucial data for conservation authorities. The system’s real-time mapping and monitoring capabilities can support efforts to protect endangered species and preserve biodiversity.

- Urban Planning and Infrastructure Inspection: In urban areas, the multi-drone system can assist in planning and managing infrastructure [64]. Drones can conduct detailed inspections of buildings, bridges, and other critical infrastructure, identifying structural issues and maintenance needs. The system’s ability to create accurate 3D maps can aid in urban planning and development projects, ensuring efficient use of space and resources. Drones can also monitor traffic patterns and air quality, contributing to smarter and more sustainable cities.

- Logistics and Supply Chain Management: The logistics industry can benefit from the multi-drone system through enhanced delivery and inventory management [65]. Drones can autonomously transport goods between warehouses, distribution centres, and customer locations, reducing delivery times and operational costs. In large warehouses, drones can perform inventory checks and manage stock levels, improving supply chain efficiency. The collaborative nature of the system ensures seamless coordination and optimisation of logistics operations.

- Energy Sector: In the energy sector, drones can be used for inspecting and maintaining infrastructure such as power lines, wind turbines, and solar panels [66]. The system’s ability to navigate complex environments and perform detailed inspections can help identify faults and schedule maintenance activities, reducing downtime and improving the reliability of energy supply. Drones can also monitor pipeline networks and detect leaks, enhancing the safety and efficiency of energy distribution.

- Public Safety and Law Enforcement: Law enforcement agencies can leverage the multi-drone system for surveillance, crowd monitoring, and crime prevention [67]. Drones can provide real-time aerial views of public events, monitor traffic violations, and assist in tracking suspects. The system’s advanced capabilities in real-time data analysis and collaborative operations can enhance the effectiveness of public safety measures and support law enforcement activities.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CSLAM | Collaborative Simultaneous Localization and Mapping |
| DARPA | Defense Advanced Research Projects Agency |
| DDS | Data Distribution Service |
| DCL-SLAM | Distributed Collaborative LiDAR Simultaneous Localization and Mapping |
| DLIO | Direct LiDAR-Inertial Odometry |
| DLO | Direct LiDAR Odometry |
| FCU | Flight Control Unit |
| FCO | Flight Controller Optimizer |
| GICP | Generalized Iterative Closest Point |
| GNSS | Global Navigation Satellite System |
| ICP | Iterative Closest Point |
| iG-LIO | Incremental Generalized Iterative Closest Point LiDAR-Inertial Odometry |
| IMU | Inertial Measurement Unit |
| LiDAR | Light Detection and Ranging |
| Light-LOAM | Lightweight LiDAR Odometry and Mapping |
| LoRa | Long Range (wireless communication protocol) |
| LIO | LiDAR-Inertial Odometry |
| LIO-SAM | LiDAR-Inertial Odometry Smoothing and Mapping |
| LSD-SLAM | Large-Scale Direct Simultaneous Localization and Mapping |
| MAVROS | MAVLink Extending ROS |
| MPC | Model Predictive Control |
| MRS UAV | Multi-Robot System Unmanned Aerial Vehicle |
| OPENSWARM | Open-source collaborative framework for Swarm Robotics |
| ORB-SLAM | Oriented FAST and Rotated BRIEF Simultaneous Localization and Mapping |
| PGO | Pose Graph Optimization |
| PoC | Proof of Concept |
| PSO | Particle Swarm Optimization |
| PX4 | Open-source Flight Stack for UAVs |
| RMSE | Root Mean Square Error |
| ROS | Robot Operating System |
| RTK | Real-Time Kinematic (positioning) |
| SCD | Scan Context Descriptors |
| SLAM | Simultaneous Localization and Mapping |
| SOM | Self-Organizing Map |
| SR-LIVO | Sparse Robust LiDAR-Inertial Visual Odometry |
| TCP | Transmission Control Protocol |
| UDP | User Datagram Protocol |
| UAV | Unmanned Aerial Vehicle |

References

- Partheepan, S.; Sanati, F.; Hassan, J. Autonomous unmanned aerial vehicles in bushfire management: Challenges and opportunities. Drones 2023, 7, 47.

- Merz, M.; Pedro, D.; Skliros, V.; Bergenhem, C.; Himanka, M.; Houge, T.; Matos-Carvalho, J.P.; Lundkvist, H.; Cürüklü, B.; Hamrén, R.; et al. Autonomous UAS-based agriculture applications: General overview and relevant European case studies. Drones 2022, 6, 128.

- Zhang, J.; Hu, J.; Lian, J.; Fan, Z.; Ouyang, X.; Ye, W. Seeing the forest from drones: Testing the potential of lightweight drones as a tool for long-term forest monitoring. Biol. Conserv. 2016, 198, 60–69.

- Ferreira, J.F.; Portugal, D.; Andrada, M.E.; Machado, P.; Rocha, R.P.; Peixoto, P. Sensing and Artificial Perception for Robots in Precision Forestry: A Survey. Robotics 2023, 12, 139.

- Ecke, S.; Dempewolf, J.; Frey, J.; Schwaller, A.; Endres, E.; Klemmt, H.J.; Tiede, D.; Seifert, T. UAV-based forest health monitoring: A systematic review. Remote Sens. 2022, 14, 3205.

- Baca, T.; Petrlik, M.; Vrba, M.; Spurny, V.; Penicka, R.; Hert, D.; Saska, M. The MRS UAV system: Pushing the frontiers of reproducible research, real-world deployment, and education with autonomous unmanned aerial vehicles. J. Intell. Robot. Syst. 2021, 102, 26.

- Zhong, S.; Qi, Y.; Chen, Z.; Wu, J.; Chen, H.; Liu, M. DCL-SLAM: A distributed collaborative LIDAR SLAM framework for a robotic swarm. IEEE Sens. J. 2023, 24, 4786–4797.

- Quan, L.; Yin, L.; Xu, C.; Gao, F. Distributed swarm trajectory optimization for formation flight in dense environments. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 4979–4985.

- Ahmed, F.; Mohanta, J.; Keshari, A.; Yadav, P.S. Recent advances in unmanned aerial vehicles: A review. Arab. J. Sci. Eng. 2022, 47, 7963–7984.

- Banu, T.P.; Borlea, G.F.; Banu, C. The use of drones in forestry. J. Environ. Sci. Eng. B 2016, 5, 557–562.

- Trybała, P.; Morelli, L.; Remondino, F.; Farrand, L.; Couceiro, M.S. Under-Canopy Drone 3D Surveys for Wild Fruit Hotspot Mapping. Drones 2024, 8, 577.

- Tang, L.; Shao, G. Drone remote sensing for forestry research and practices. J. For. Res. 2015, 26, 791–797.

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447.

- Mokroš, M.; Tabačák, M.; Lieskovskỳ, M.; Fabrika, M. Unmanned aerial vehicle use for wood chips pile volume estimation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 953–956.

- Kinaneva, D.; Hristov, G.; Raychev, J.; Zahariev, P. Early forest fire detection using drones and artificial intelligence. In Proceedings of the 2019 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 20–24 May 2019; pp. 1060–1065.

- Getzin, S.; Wiegand, K.; Schöning, I. Assessing biodiversity in forests using very high-resolution images and unmanned aerial vehicles. Methods Ecol. Evol. 2012, 3, 397–404.

- Chen, K.; Lopez, B.T.; Agha-mohammadi, A.a.; Mehta, A. Direct LiDAR Odometry: Fast Localization With Dense Point Clouds. IEEE Robot. Autom. Lett. 2022, 7, 2000–2007.

- Chen, K.; Nemiroff, R.; Lopez, B.T. Direct LiDAR-Inertial Odometry: Lightweight LIO with Continuous-Time Motion Correction. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 3983–3989.

- Bai, C.; Xiao, T.; Chen, Y.; Wang, H.; Zhang, F.; Gao, X. Faster-LIO: Lightweight Tightly Coupled Lidar-Inertial Odometry Using Parallel Sparse Incremental Voxels. IEEE Robot. Autom. Lett. 2022, 7, 4861–4868.

- He, D.; Xu, W.; Chen, N.; Kong, F.; Yuan, C.; Zhang, F. Point-LIO: Robust High-Bandwidth Light Detection and Ranging Inertial Odometry. Adv. Intell. Syst. 2023, 5, 2200459.

- Chen, Z.; Xu, Y.; Yuan, S.; Xie, L. iG-LIO: An Incremental GICP-based Tightly-coupled LiDAR-inertial Odometry. IEEE Robot. Autom. Lett. 2024, 9, 1883–1890.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332.

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 834–849.

- Yi, S.; Lyu, Y.; Hua, L.; Pan, Q.; Zhao, C. Light-LOAM: A Lightweight LiDAR Odometry and Mapping Based on Graph-Matching. IEEE Robot. Autom. Lett. 2024, 9, 3219–3226.

- Yuan, Z.; Deng, J.; Ming, R.; Lang, F. SR-LIVO: LiDAR-Inertial-Visual Odometry and Mapping With Sweep Reconstruction. IEEE Robot. Autom. Lett. 2024, 9, 5110–5117.

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-coupled lidar inertial odometry via smoothing and mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5135–5142.

- Garforth, J.; Webb, B. Visual appearance analysis of forest scenes for monocular SLAM. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 1794–1800.

- Tomaštík, J.; Saloň, Š.; Tunák, D.; Chudỳ, F.; Kardoš, M. Tango in forests—An initial experience of the use of the new Google technology in connection with forest inventory tasks. Comput. Electron. Agric. 2017, 141, 109–117. [Google Scholar] [CrossRef]

- Chang, Y.; Ebadi, K.; Denniston, C.E.; Ginting, M.F.; Rosinol, A.; Reinke, A.; Palieri, M.; Shi, J.; Chatterjee, A.; Morrell, B.; et al. LAMP 2.0: A Robust Multi-Robot SLAM System for Operation in Challenging Large-Scale Underground Environments. arXiv 2022, arXiv:2205.13135. [Google Scholar] [CrossRef]

- Trujillo, J.C.; Munguia, R.; Guerra, E.; Grau, A. Cooperative monocular-based SLAM for multi-UAV systems in GPS-denied environments. Sensors 2018, 18, 1351. [Google Scholar] [CrossRef]

- Mahdoui, N.; Frémont, V.; Natalizio, E. Communicating multi-uav system for cooperative SLAM-based exploration. J. Intell. Robot. Syst. 2020, 98, 325–343. [Google Scholar] [CrossRef]

- Schmuck, P.; Chli, M. Multi-UAV collaborative monocular SLAM. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3863–3870. [Google Scholar]

- Tian, Y.; Liu, K.; Ok, K.; Tran, L.; Allen, D.; Roy, N.; How, J.P. Search and rescue under the forest canopy using multiple UAVs. Int. J. Robot. Res. 2020, 39, 1201–1221. [Google Scholar] [CrossRef]

- Cui, J.Q.; Lai, S.; Dong, X.; Liu, P.; Chen, B.M.; Lee, T.H. Autonomous navigation of UAV in forest. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 726–733. [Google Scholar]

- Balamurugan, G.; Valarmathi, J.; Naidu, V. Survey on UAV navigation in GPS denied environments. In Proceedings of the 2016 International Conference on Signal Processing, Communication, Power and Embedded System (SCOPES), Paralakhemundi, India, 3–5 October 2016; pp. 198–204. [Google Scholar]

- Zhilenkov, A.A.; Chernyi, S.G.; Sokolov, S.S.; Nyrkov, A.P. Intelligent autonomous navigation system for UAV in randomly changing environmental conditions. J. Intell. Fuzzy Syst. 2020, 38, 6619–6625. [Google Scholar] [CrossRef]

- Wang, C.; Liu, P.; Zhang, T.; Sun, J. The adaptive vortex search algorithm of optimal path planning for forest fire rescue UAV. In Proceedings of the 2018 IEEE 3rd Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 October 2018; pp. 400–403. [Google Scholar]

- Pestana, J.; Mellado-Bataller, I.; Sanchez-Lopez, J.L.; Fu, C.; Mondragón, I.F.; Campoy, P. A general purpose configurable controller for indoors and outdoors GPS-denied navigation for multirotor unmanned aerial vehicles. J. Intell. Robot. Syst. 2014, 73, 387–400. [Google Scholar] [CrossRef]

- Brambilla, M.; Ferrante, E.; Birattari, M.; Dorigo, M. Swarm robotics: A review from the swarm engineering perspective. Swarm Intell. 2013, 7, 1–41. [Google Scholar] [CrossRef]

- Şahin, E. Swarm robotics: From sources of inspiration to domains of application. In Proceedings of the International Workshop on Swarm Robotics, Santa Monica, CA, USA, 17 July 2004; pp. 10–20. [Google Scholar]

- Alsammak, I.L.H.; Mahmoud, M.A.; Aris, H.; AlKilabi, M.; Mahdi, M.N. The use of swarms of unmanned aerial vehicles in mitigating area coverage challenges of forest-fire-extinguishing activities: A systematic literature review. Forests 2022, 13, 811. [Google Scholar] [CrossRef]

- Weinstein, A.; Cho, A.; Loianno, G.; Kumar, V. Visual Inertial Odometry Swarm: An Autonomous Swarm of Vision-Based Quadrotors. IEEE Robot. Autom. Lett. 2018, 3, 1801–1807. [Google Scholar] [CrossRef]

- Madridano, Á.; Al-Kaff, A.; Flores, P.; Martín, D.; de la Escalera, A. Software architecture for autonomous and coordinated navigation of uav swarms in forest and urban firefighting. Appl. Sci. 2021, 11, 1258. [Google Scholar] [CrossRef]

- Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F. Fault-tolerant cooperative navigation of networked UAV swarms for forest fire monitoring. Aerosp. Sci. Technol. 2022, 123, 107494. [Google Scholar] [CrossRef]

- Gupta, L.; Jain, R.; Vaszkun, G. Survey of important issues in UAV communication networks. IEEE Commun. Surv. Tutorials 2015, 18, 1123–1152. [Google Scholar] [CrossRef]

- Venturini, F.; Mason, F.; Pase, F.; Chiariotti, F.; Testolin, A.; Zanella, A.; Zorzi, M. Distributed Reinforcement Learning for Flexible and Efficient UAV Swarm Control. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 955–969. [Google Scholar] [CrossRef]

- Peng, Q.; Wu, H.; Xue, R. Review of Dynamic Task Allocation Methods for UAV Swarms Oriented to Ground Targets. Complex Syst. Model. Simul. 2021, 1, 163–175. [Google Scholar] [CrossRef]

- Mohan, M.; Richardson, G.; Gopan, G.; Aghai, M.M.; Bajaj, S.; Galgamuwa, G.P.; Vastaranta, M.; Arachchige, P.S.P.; Amorós, L.; Corte, A.P.D.; et al. UAV-supported forest regeneration: Current trends, challenges and implications. Remote Sens. 2021, 13, 2596. [Google Scholar] [CrossRef]

- Hornung, A.; Wurm, K.M.; Bennewitz, M.; Stachniss, C.; Burgard, W. OctoMap: An efficient probabilistic 3D mapping framework based on octrees. Auton. Robot. 2013, 34, 189–206. [Google Scholar] [CrossRef]

- Carlone, L.; Aragues, R.; Castellanos, J.A.; Bona, B. A fast and accurate approximation for planar pose graph optimization. Int. J. Robot. Res. 2014, 33, 965–987. [Google Scholar] [CrossRef]

- Bondy, J.A.; Murty, U.S.R. Graph Theory; Springer: New York, NY, USA, 2008. [Google Scholar]

- Zhang, Z. Iterative closest point (ICP). In Computer Vision: A Reference Guide; Springer: New York, NY, USA, 2021; pp. 718–720. [Google Scholar]

- Couceiro, M.; Ghamisi, P. Fractional-Order Darwinian PSO. In Fractional Order Darwinian Particle Swarm Optimization: Applications and Evaluation of an Evolutionary Algorithm; Springer International Publishing: Cham, Switzerland, 2016; pp. 11–20. [Google Scholar] [CrossRef]

- Lee, T.; Leok, M.; McClamroch, N.H. Geometric tracking control of a quadrotor UAV on SE(3). In Proceedings of the 49th IEEE Conference on Decision and Control (CDC), Atlanta, GA, USA, 15–17 December 2010; pp. 5420–5425. [Google Scholar] [CrossRef]

- Kennedy, J.; Eberhart, R.C. Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; pp. 1942–1948. [Google Scholar]

- Imran, M.; Hashim, R.; Khalid, N.E.A. An Overview of Particle Swarm Optimization Variants. Procedia Eng. 2013, 53, 491–496. [Google Scholar] [CrossRef]

- Trybala, P.; Morelli, L.; Remondino, F.; Couceiro, M.S. Towards robotization of foraging wild fruits: A multi-camera drone for mapping berries under canopy. In Proceedings of the European Robotics Forum (ERF 2024), Rimini, Italy, 13–15 March 2024. [Google Scholar]

- Chen, B.W.; Rho, S. Autonomous tactical deployment of the UAV array using self-organizing swarm intelligence. IEEE Consum. Electron. Mag. 2020, 9, 52–56. [Google Scholar] [CrossRef]

- Castilho, J.P.C. ROS 2.0–Study and Evaluation of ROS 2 in comparison with ROS 1. Master’s Thesis, University of Coimbra, Coimbra, Portugal, 2022. [Google Scholar]

- Hermanus, D.R.; Supangkat, S.H.; Hidayat, F. Designing an Advanced Situational Awareness Platform Using Intelligent Multi-Drones for Smart Farming Towards Agriculture 5.0. In Proceedings of the 2024 International Conference on ICT for Smart Society (ICISS), Bandung, Indonesia, 4–5 September 2024; pp. 1–6. [Google Scholar] [CrossRef]

- Mohd Daud, S.M.S.; Mohd Yusof, M.Y.P.; Heo, C.C.; Khoo, L.S.; Chainchel Singh, M.K.; Mahmood, M.S.; Nawawi, H. Applications of drone in disaster management: A scoping review. Sci. Justice 2022, 62, 30–42. [Google Scholar] [CrossRef]

- Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Pajuelo Madrigal, V.; Mallinis, G.; Ben Dor, E.; Helman, D.; Estes, L.; Ciraolo, G.; et al. On the use of unmanned aerial systems for environmental monitoring. Remote Sens. 2018, 10, 641. [Google Scholar] [CrossRef]

- Muñoz, J.; López, B.; Quevedo, F.; Monje, C.A.; Garrido, S.; Moreno, L.E. Multi UAV Coverage Path Planning in Urban Environments. Sensors 2021, 21, 7365. [Google Scholar] [CrossRef] [PubMed]

- Kim, K.; Kim, S.; Kim, J.; Jung, H. Drone-Assisted Multimodal Logistics: Trends and Research Issues. Drones 2024, 8, 468. [Google Scholar] [CrossRef]

- Shafiee, M.; Zhou, Z.; Mei, L.; Dinmohammadi, F.; Karama, J.; Flynn, D. Unmanned Aerial Drones for Inspection of Offshore Wind Turbines: A Mission-Critical Failure Analysis. Robotics 2021, 10, 26. [Google Scholar] [CrossRef]

- Quamar, M.M.; Al-Ramadan, B.; Khan, K.; Shafiullah, M.; El Ferik, S. Advancements and Applications of Drone-Integrated Geographic Information System Technology—A Review. Remote Sens. 2023, 15, 5039. [Google Scholar] [CrossRef]

| Technical Specification | Description |
|---|---|
| Energy autonomy | 2 LiPo 6S batteries of 22.2 V and 4500 mAh |
| Sensing payload | 3D LiDAR (16-channel), 9-DoF IMU, GNSS-RTK, and an optional rear camera (e.g., GoPro) |
| Communication technologies | Dual-band Wireless-AC WiFi 5 (802.11ac), 2.4 GHz radio controller system, and an LTE Cat4 cellular mobile router |
| ROS integration | Ubuntu 20.04 with ROS Noetic Ninjemys on a NUC10i7FNKN (tenth-generation i7 processor, 6 cores @ 4.70 GHz) |
| Durability and maneuverability | 800 mm carbon fiber hexacopter frame in X shape, KV380 motors, and a weight of 5 kg |

| Parameter | Initial Value |
|---|---|
| Number of parameters | 11 |
| Number of sample points (n) | 1500 |
| Population size | 15 |
| Inertia weight | 1.0 |
| Cognitive coefficient | 1.5 |
| Social coefficient | 1.5 |
| Number of generations | 30 |
| Stagnation value | 10 |
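
The hyperparameters in the table above could drive a standard global-best PSO loop. The following is a minimal sketch under stated assumptions, not the auto-tuning implementation used in this work: the cost function, the search bounds, the velocity clamping, and the reading of the stagnation value as an early-stopping threshold are all hypothetical choices made for illustration.

```python
import random

# Hyperparameters taken from the table above.
N_PARAMS = 11        # number of controller parameters being tuned
POP_SIZE = 15        # particles in the swarm
INERTIA = 1.0        # inertia weight (initial value)
COGNITIVE = 1.5      # cognitive coefficient
SOCIAL = 1.5         # social coefficient
N_GENERATIONS = 30
STAGNATION = 10      # ASSUMPTION: stop after this many generations without improvement


def pso(cost, lower, upper, seed=0):
    """Global-best PSO minimising `cost` inside the box [lower, upper]."""
    rng = random.Random(seed)
    dim = len(lower)
    span = [u - l for l, u in zip(lower, upper)]
    pos = [[l + rng.random() * s for l, s in zip(lower, span)]
           for _ in range(POP_SIZE)]
    vel = [[0.0] * dim for _ in range(POP_SIZE)]
    pbest = [p[:] for p in pos]
    pbest_cost = [cost(p) for p in pos]
    g = min(range(POP_SIZE), key=pbest_cost.__getitem__)
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]
    history = [gbest_cost]
    stalled = 0
    for _ in range(N_GENERATIONS):
        improved = False
        for i in range(POP_SIZE):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v = (INERTIA * vel[i][d]
                     + COGNITIVE * r1 * (pbest[i][d] - pos[i][d])
                     + SOCIAL * r2 * (gbest[d] - pos[i][d]))
                # ASSUMPTION: clamp velocity and position to keep particles in the box.
                v = max(-span[d], min(span[d], v))
                vel[i][d] = v
                pos[i][d] = max(lower[d], min(upper[d], pos[i][d] + v))
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c
                    improved = True
        history.append(gbest_cost)
        stalled = 0 if improved else stalled + 1
        if stalled >= STAGNATION:
            break
    return gbest, gbest_cost, history


# Placeholder cost: squared distance to an arbitrary target vector.
# A real tuning run would score a flight or simulation instead.
target = [1.0] * N_PARAMS
best, best_cost, history = pso(
    lambda x: sum((a - b) ** 2 for a, b in zip(x, target)),
    lower=[0.0] * N_PARAMS,
    upper=[20.0] * N_PARAMS,
)
print(f"best cost after {len(history) - 1} generations: {best_cost:.4f}")
```

In an actual tuning run, the placeholder cost would be replaced by a measure of controller performance, and each particle would encode one candidate set of the 11 parameters.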

| SE(3) Parameter | MRS Supersoft Value | Optimised Value |
|---|---|---|
| Position gain | 3.0 | 1.05 |
| Velocity gain | 2.0 | 0.73 |
| Acceleration gain | 0.3 | 0.29 |
| Body integral gain | 0.1 | 0.10 |
| World integral gain | 0.1 | 0.09 |
| Position z gain | 15.0 | 5.11 |
| Velocity z gain | 8.0 | 3.14 |
| Acceleration z gain | 1.0 | 0.30 |
| Pitch/roll attitude gain | 5.0 | 1.57 |
| Yaw attitude gain | 5.0 | 1.47 |
| Mass estimator | 5.0 | 0.49 |

| Drone | Loop Closures (Total) | Inter Loop (Total) | Inter Loop (%) | Intra Loop (Total) | Intra Loop (%) |
|---|---|---|---|---|---|
| | 259 | 119 | 45.9 | 140 | 54.1 |
| | 215 | 159 | 73.9 | 56 | 26.1 |
| | 218 | 84 | 38.5 | 134 | 61.5 |

| Formation | Formation Similarity Distance (Equation (7)) | Metric A Value (m²) | Metric A Difference (%) | Metric B Value (m²) | Metric B Difference (%) |
|---|---|---|---|---|---|
| A | 0.37 | 17.40 | 8.75 | 21.96 | 7.65 |
| B | 0.20 | 6.82 | −57.38 | 12.05 | −40.93 |
| C | 0.12 | 14.30 | −10.63 | 22.28 | 9.22 |
| D | 0.30 | 16.49 | 3.06 | 28.62 | 40.29 |
| E | 0.48 | 26.02 | 62.63 | 36.01 | 76.52 |
| F | 0.16 | 11.51 | −28.06 | 24.35 | 19.36 |
| Reference value | 0.00 | 16.00 | - | 20.40 | - |
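
The difference columns in the table above are consistent with a relative deviation from the reference value, that is, 100 × (value − reference) / reference. The short sketch below recomputes them under that assumption; the function and variable names are hypothetical and only serve the illustration.

```python
# Reference values (m²) from the last row of the table.
REF_METRIC_A = 16.00
REF_METRIC_B = 20.40

# Measured values (m²) per formation: (Metric A, Metric B).
formations = {
    "A": (17.40, 21.96),
    "B": (6.82, 12.05),
    "C": (14.30, 22.28),
    "D": (16.49, 28.62),
    "E": (26.02, 36.01),
    "F": (11.51, 24.35),
}


def percent_difference(value, reference):
    """Relative deviation of `value` from `reference`, in percent."""
    return 100.0 * (value - reference) / reference


differences = {
    name: (percent_difference(a, REF_METRIC_A), percent_difference(b, REF_METRIC_B))
    for name, (a, b) in formations.items()
}

for name, (diff_a, diff_b) in differences.items():
    print(f"Formation {name}: Metric A {diff_a:+.2f} %, Metric B {diff_b:+.2f} %")
```

A negative difference means the measured value is below the reference, as for formation B on both metrics.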

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Araújo, A.G.; Pizzino, C.A.P.; Couceiro, M.S.; Rocha, R.P. A Multi-Drone System Proof of Concept for Forestry Applications. Drones 2025, 9, 80. https://doi.org/10.3390/drones9020080