Effectiveness of Unmanned Aerial Vehicle-Based LiDAR for Assessing the Impact of Catastrophic Windstorm Events on Timberland

Highlights

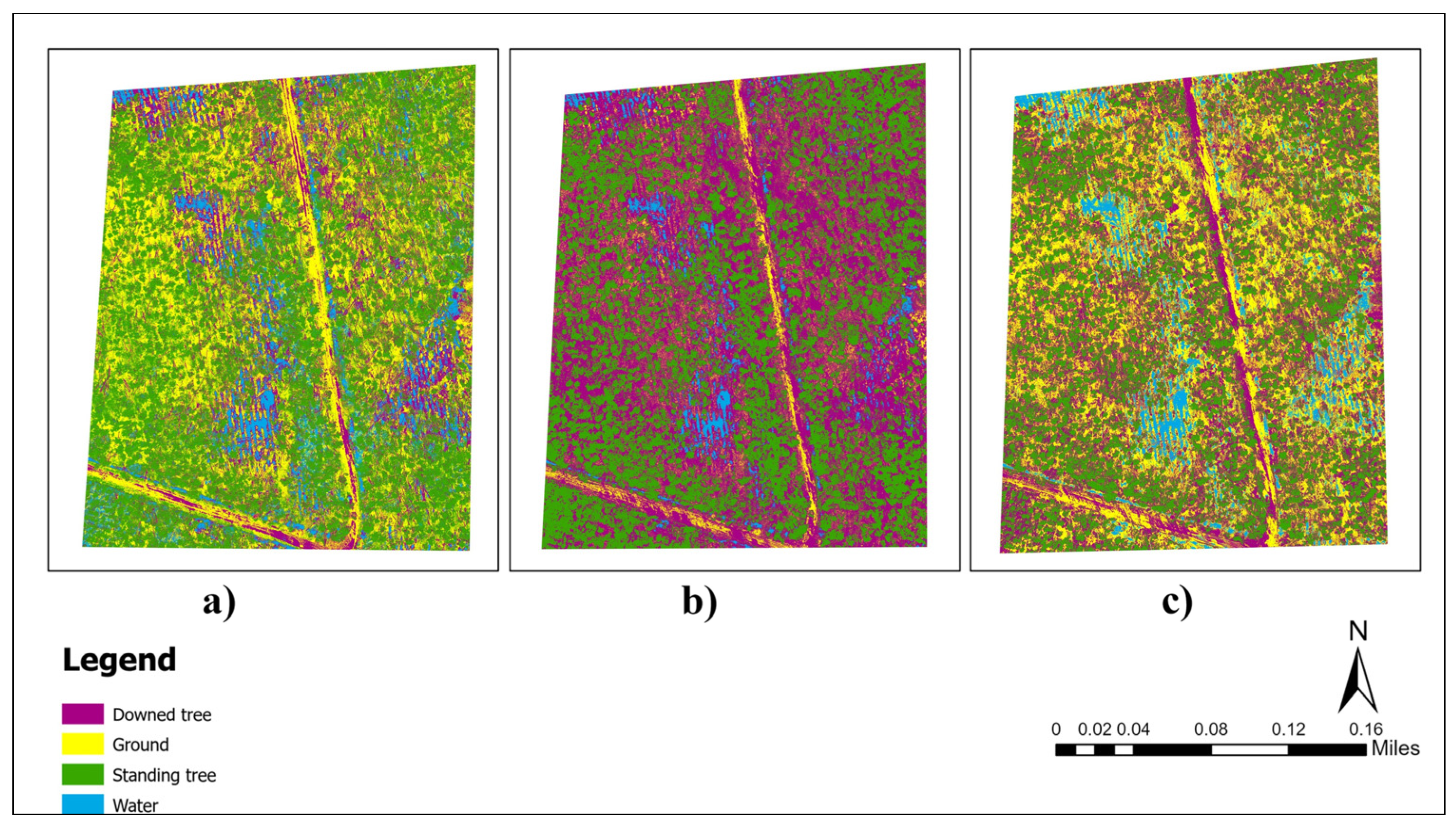

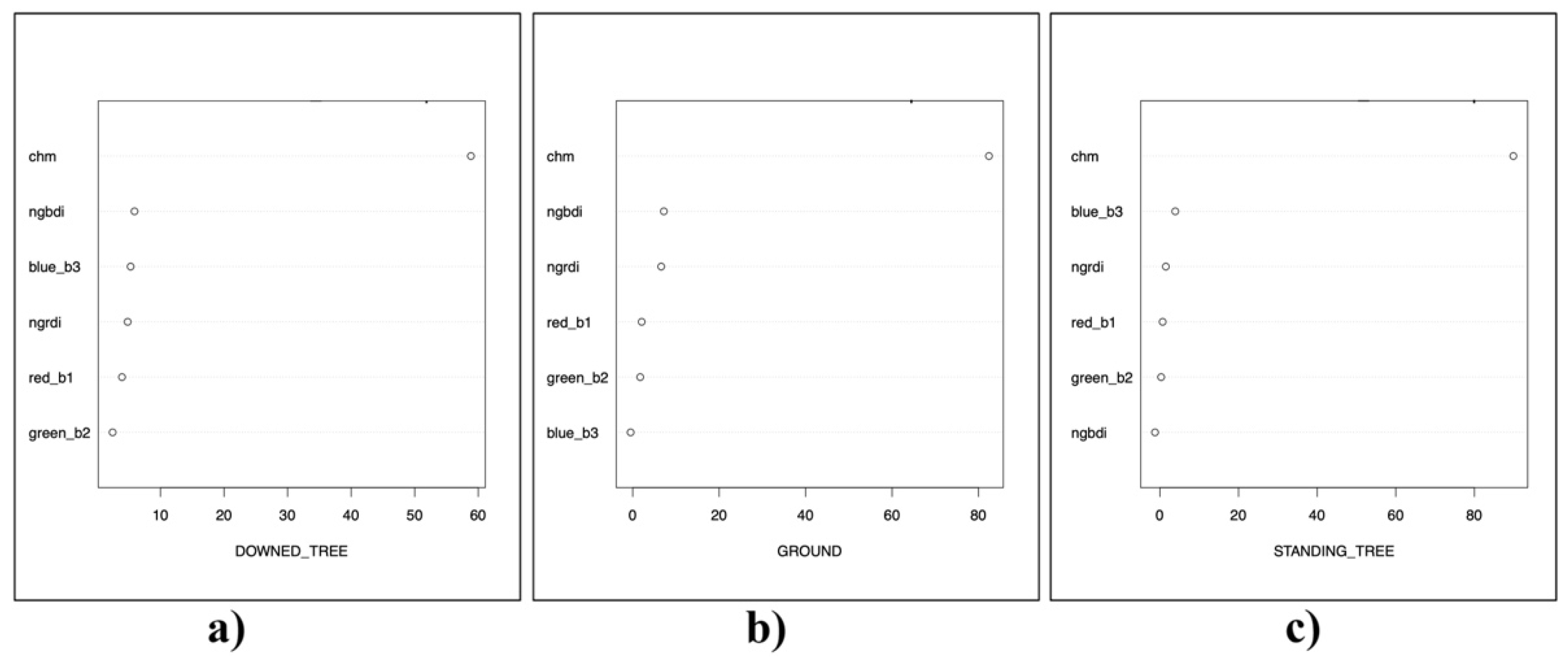

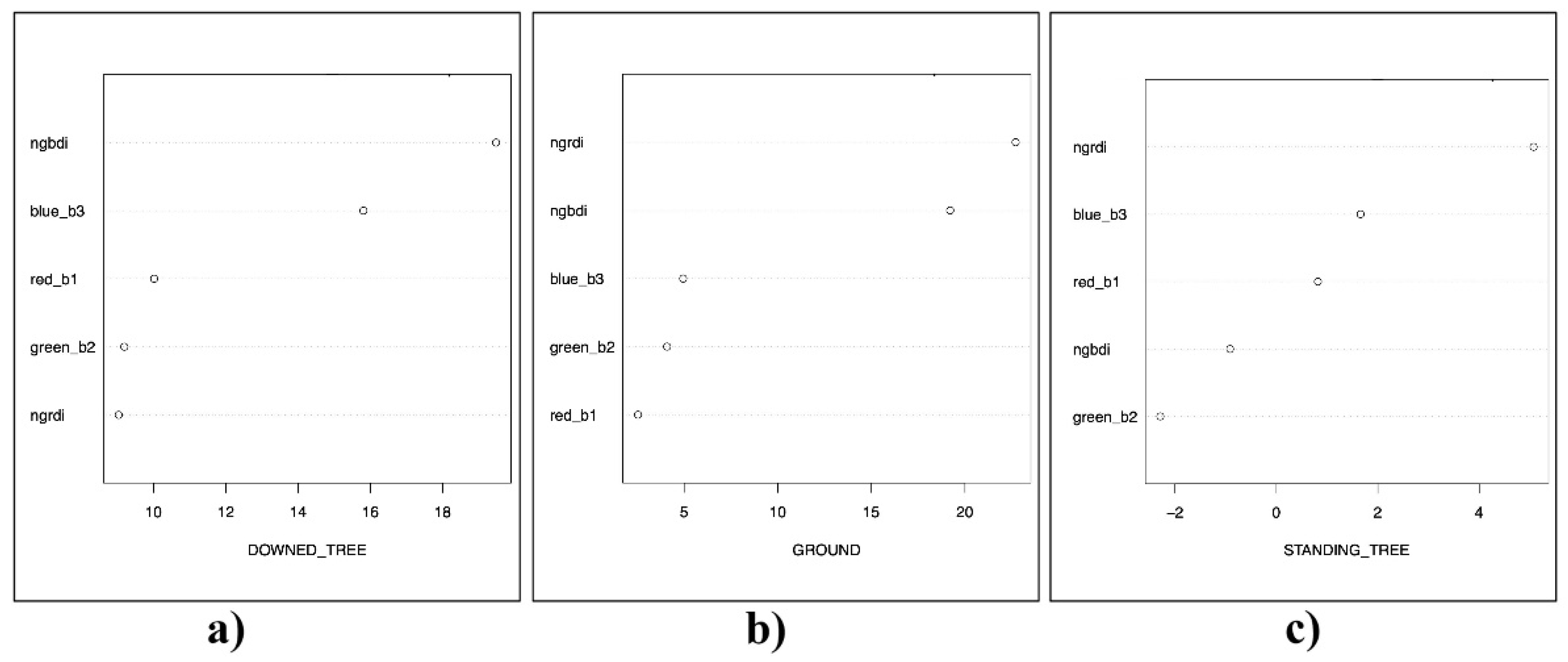

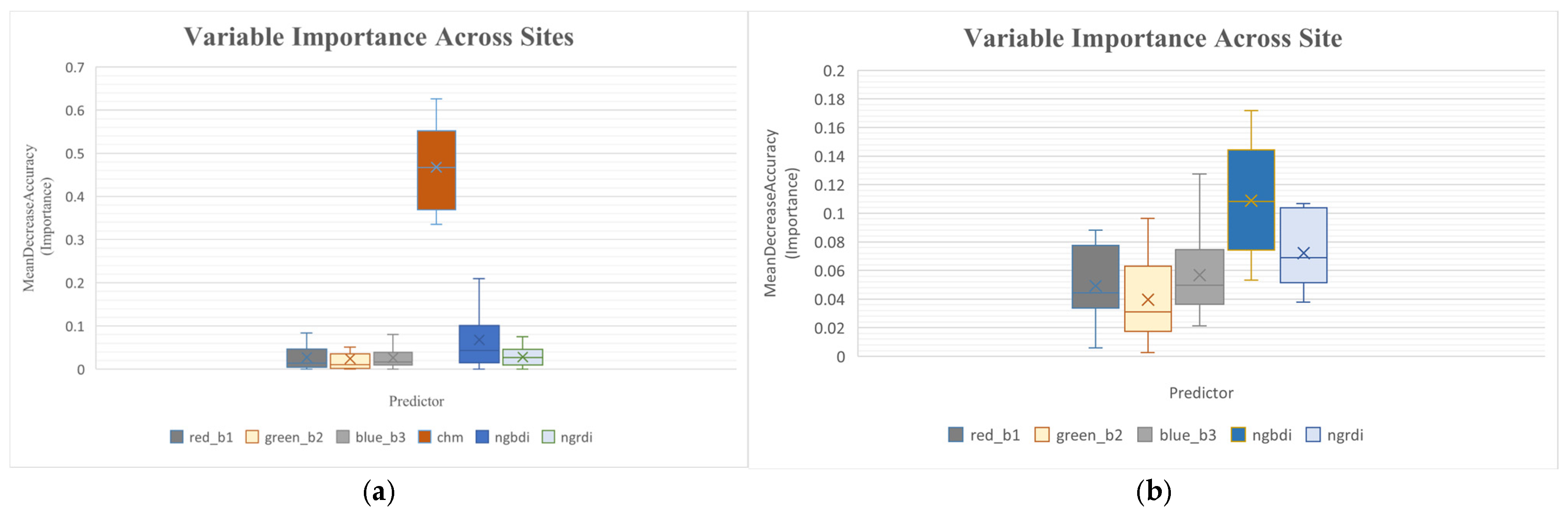

- UAV-LiDAR combined with RGB imagery significantly improved mapping of windstorm-damaged forests, achieving higher classification accuracy.

- Random Forest classifier outperformed Maximum Likelihood and Decision Tree methods.

- Incorporating LiDAR-derived canopy height models is crucial for accurate assessment of windstorm damage in forestland.

- UAV-LiDAR integrated with UAV imagery enables efficient and scalable mapping for post-windstorm forest damage assessment and planning.

Abstract

1. Introduction

2. Materials and Methods

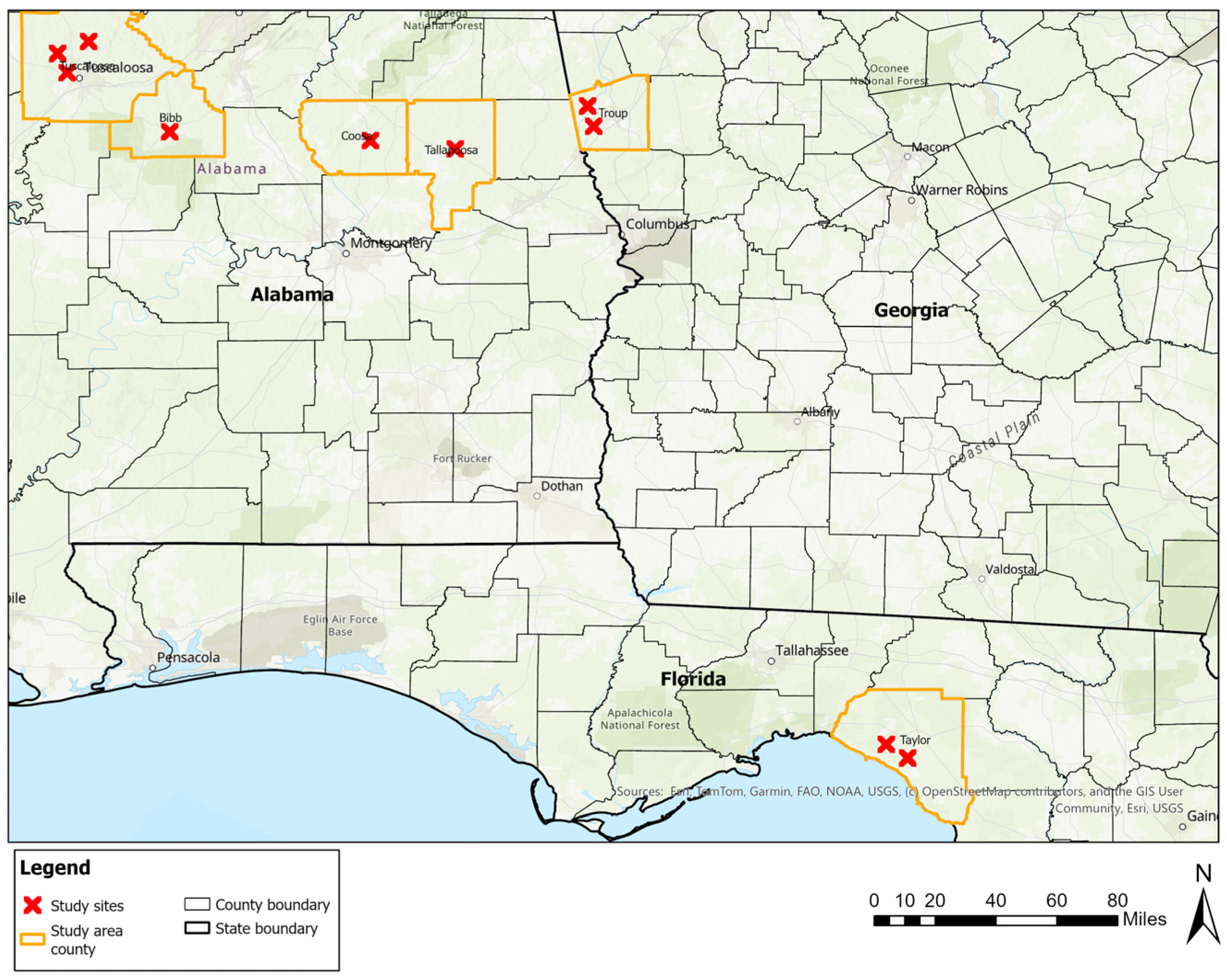

2.1. Study Area

2.2. Data Acquisition

2.2.1. Forest Inventory

2.2.2. Remote Sensing Data Collection

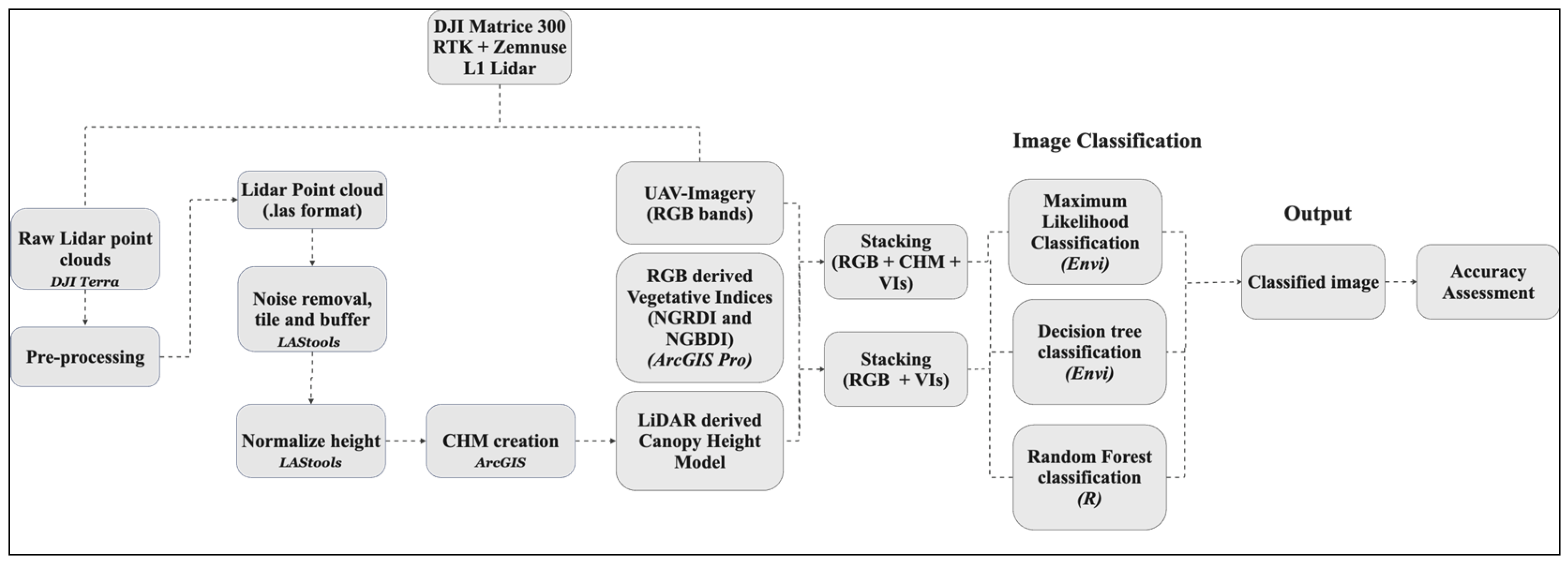

2.3. Data Processing

2.3.1. LiDAR Data Pre-Processing and Derived Products

2.3.2. RGB Imagery Processing and Derived Products

2.4. Image Classification Scheme

2.4.1. Definition of Classification Criteria and Training Sample Collection

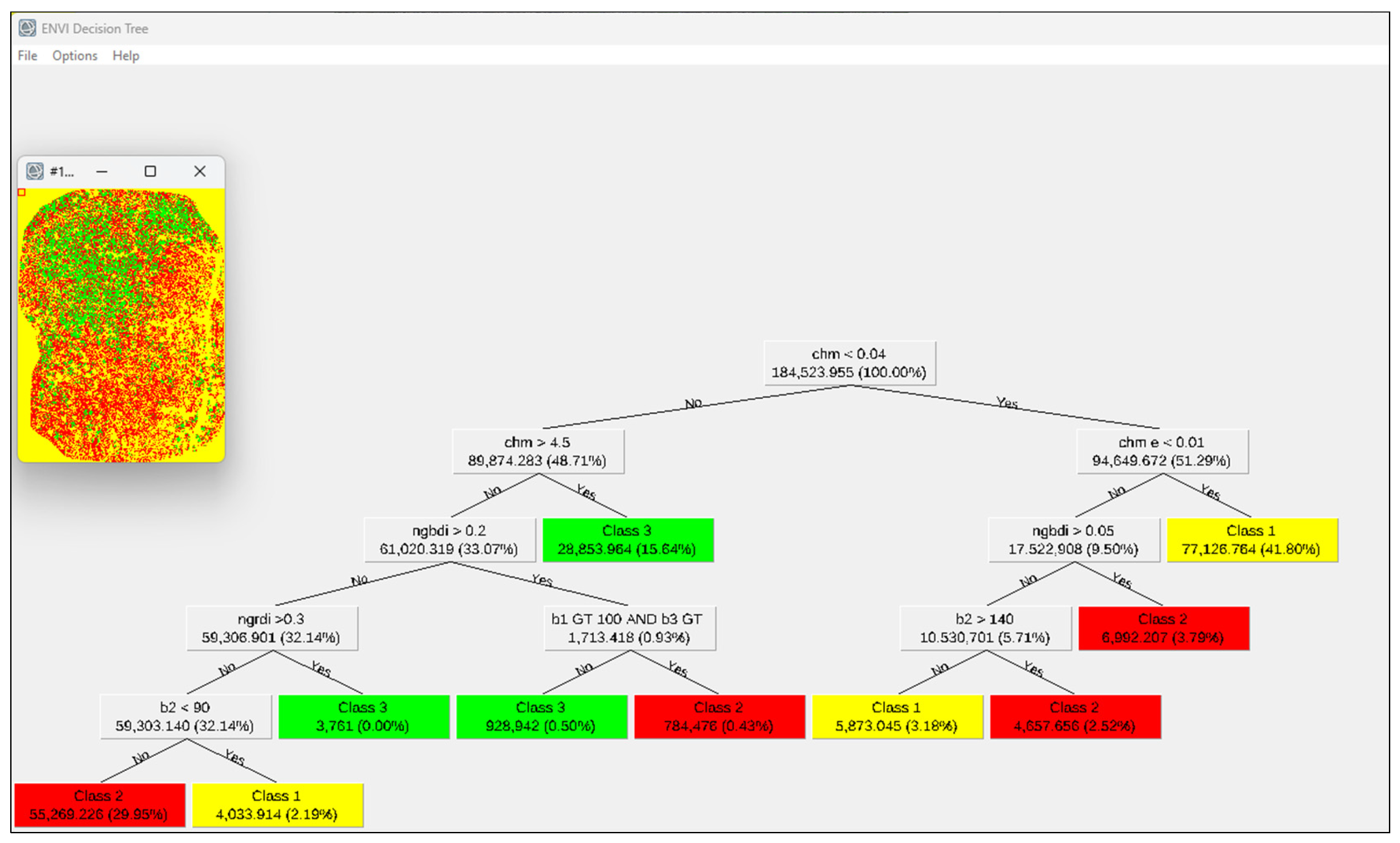

2.4.2. Image Classification

2.4.3. Accuracy Assessment

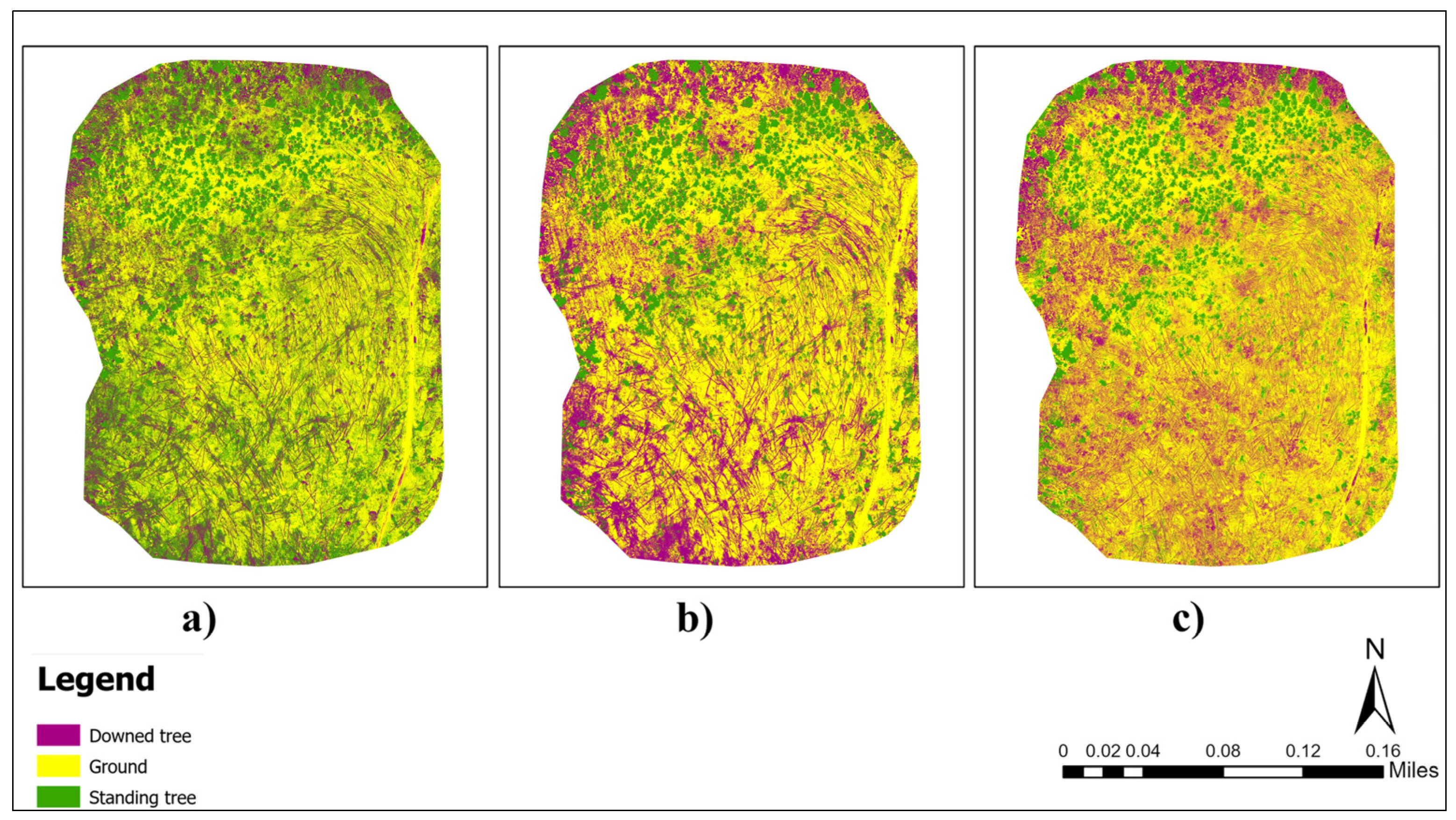

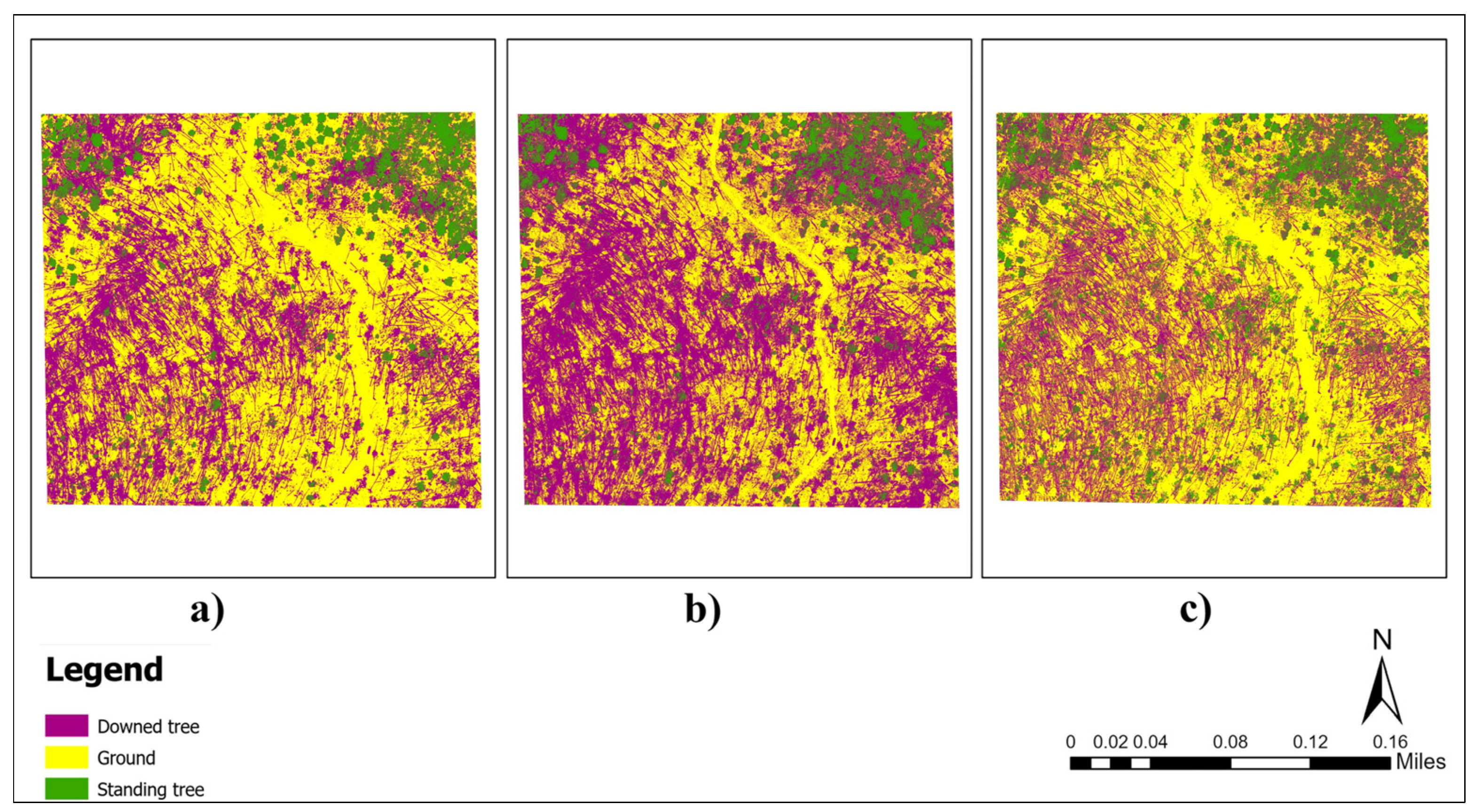

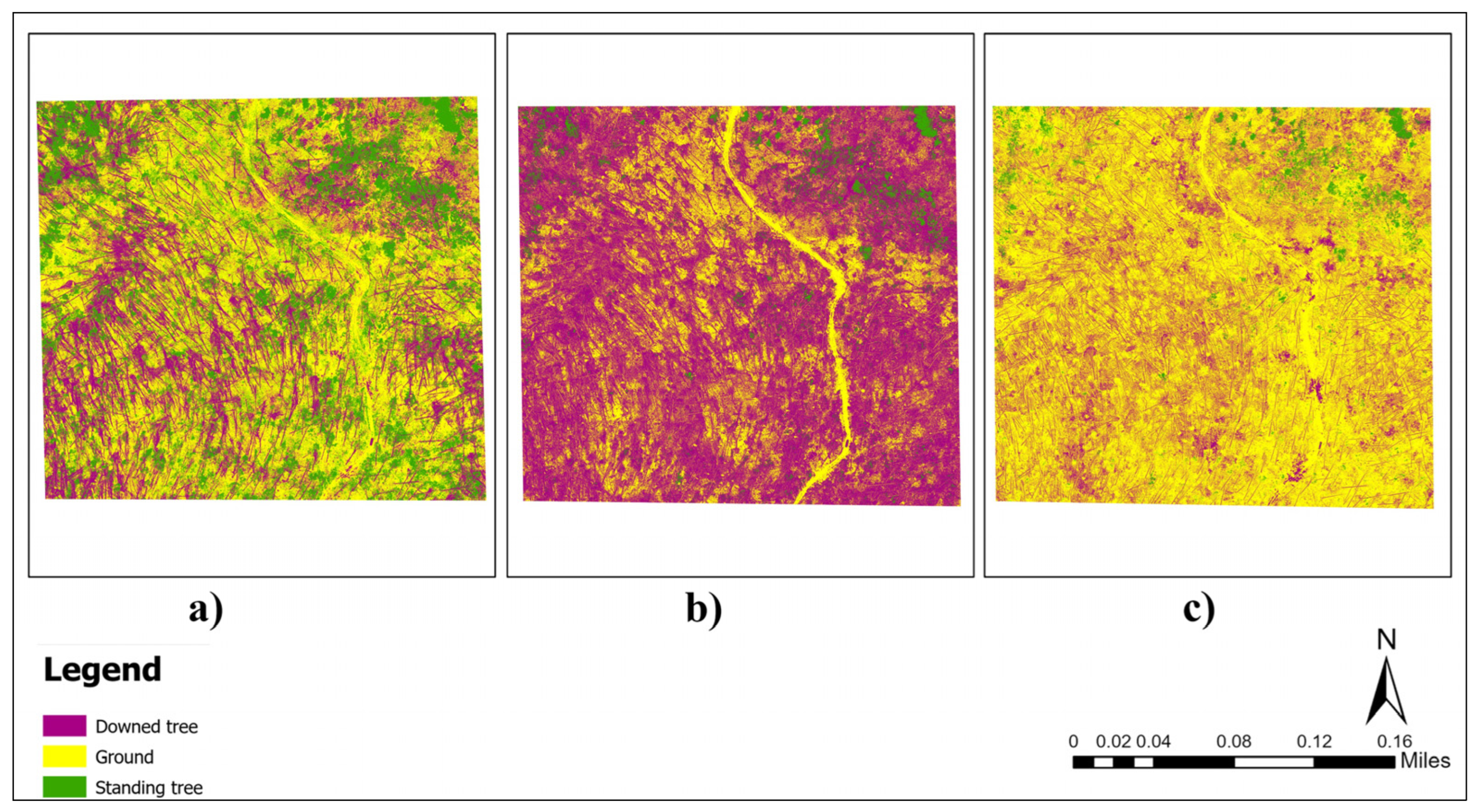

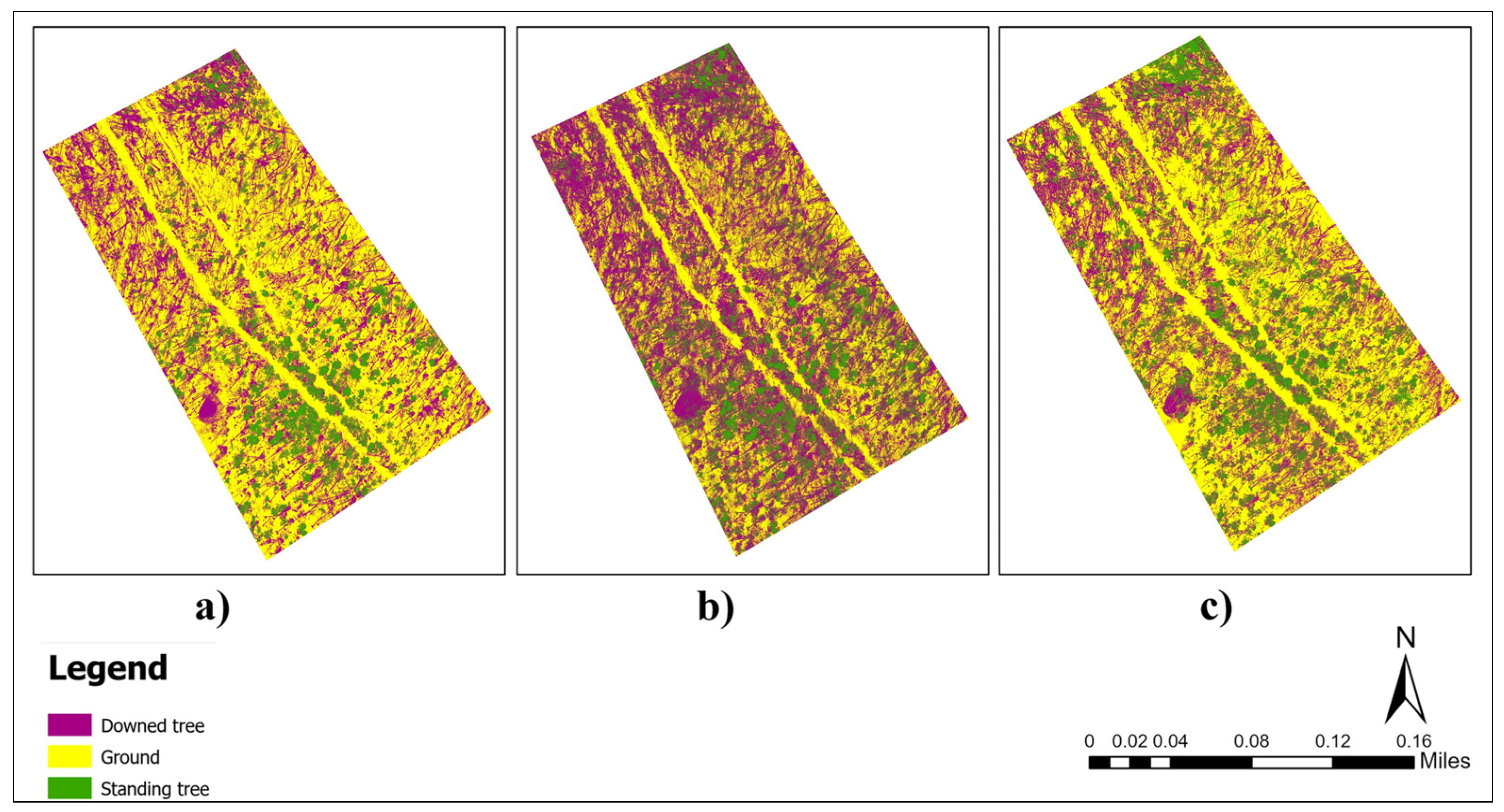

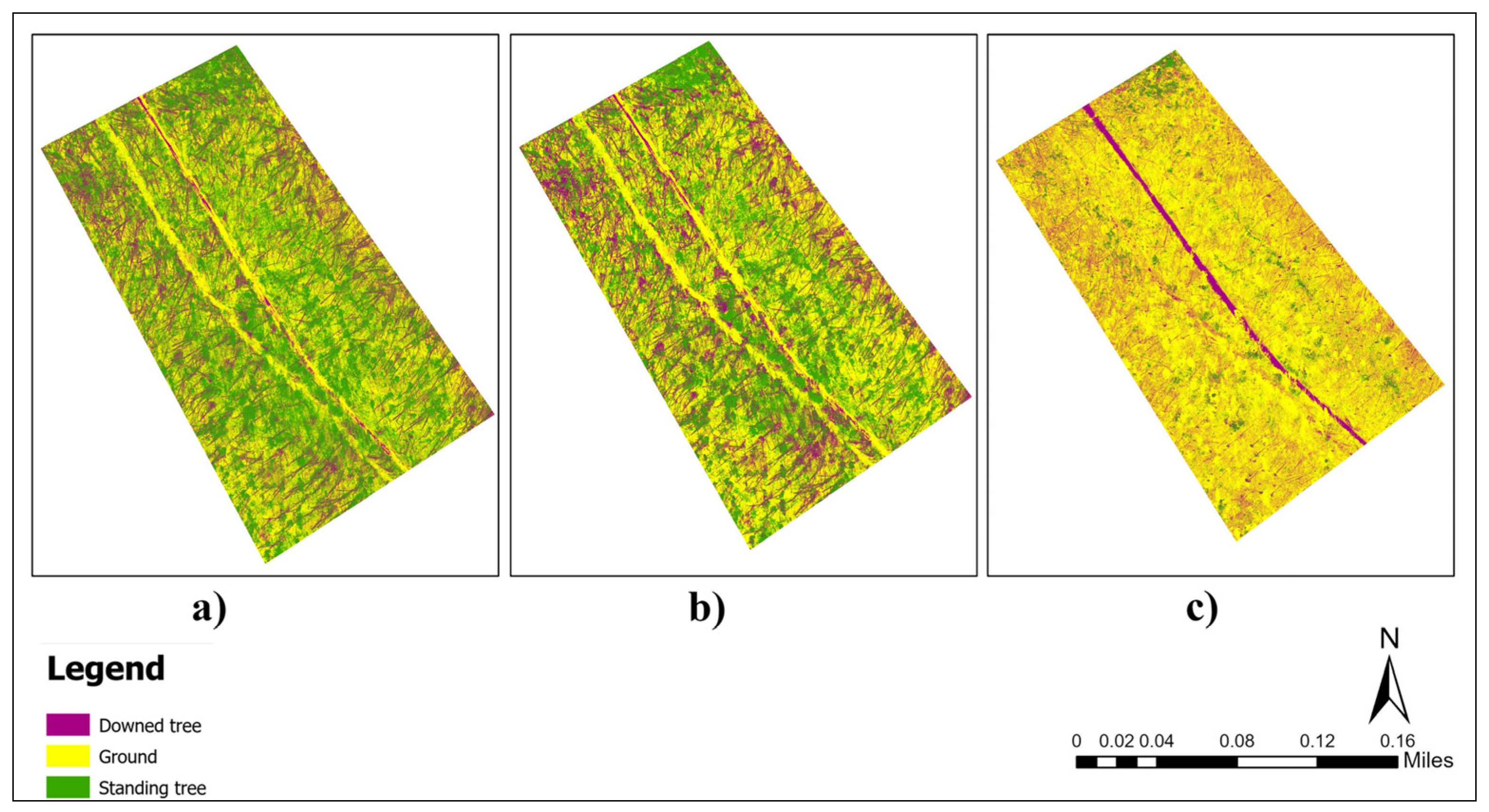

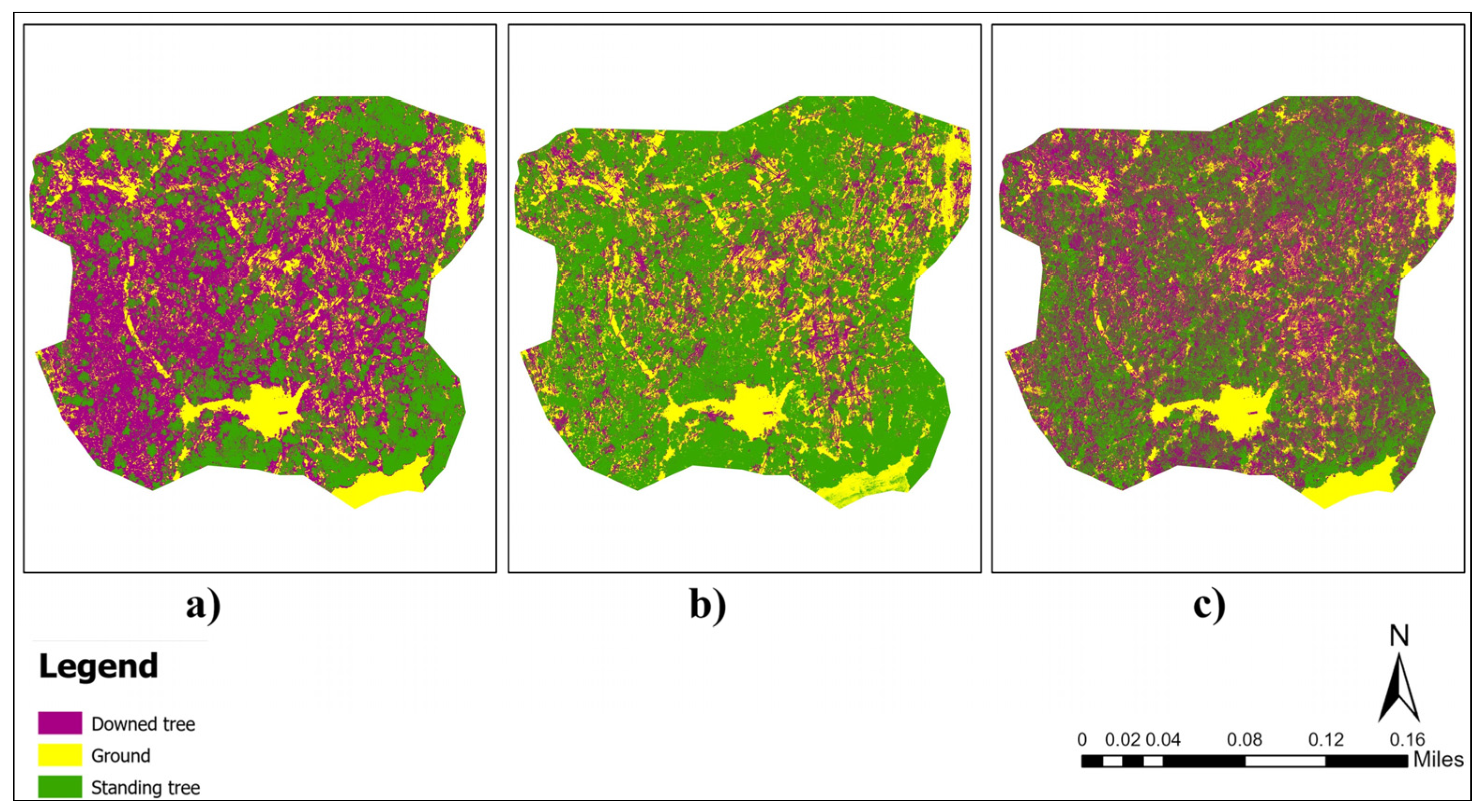

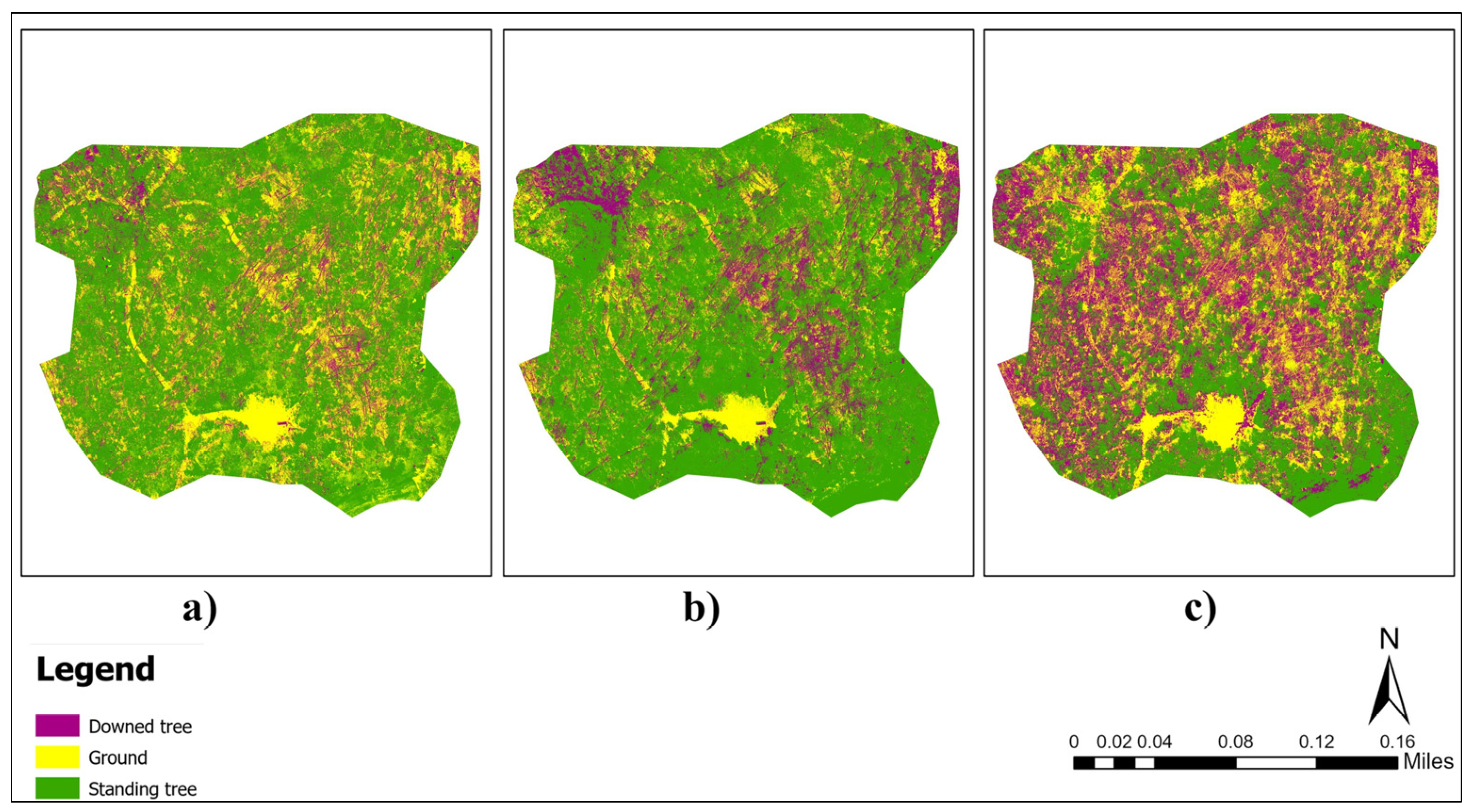

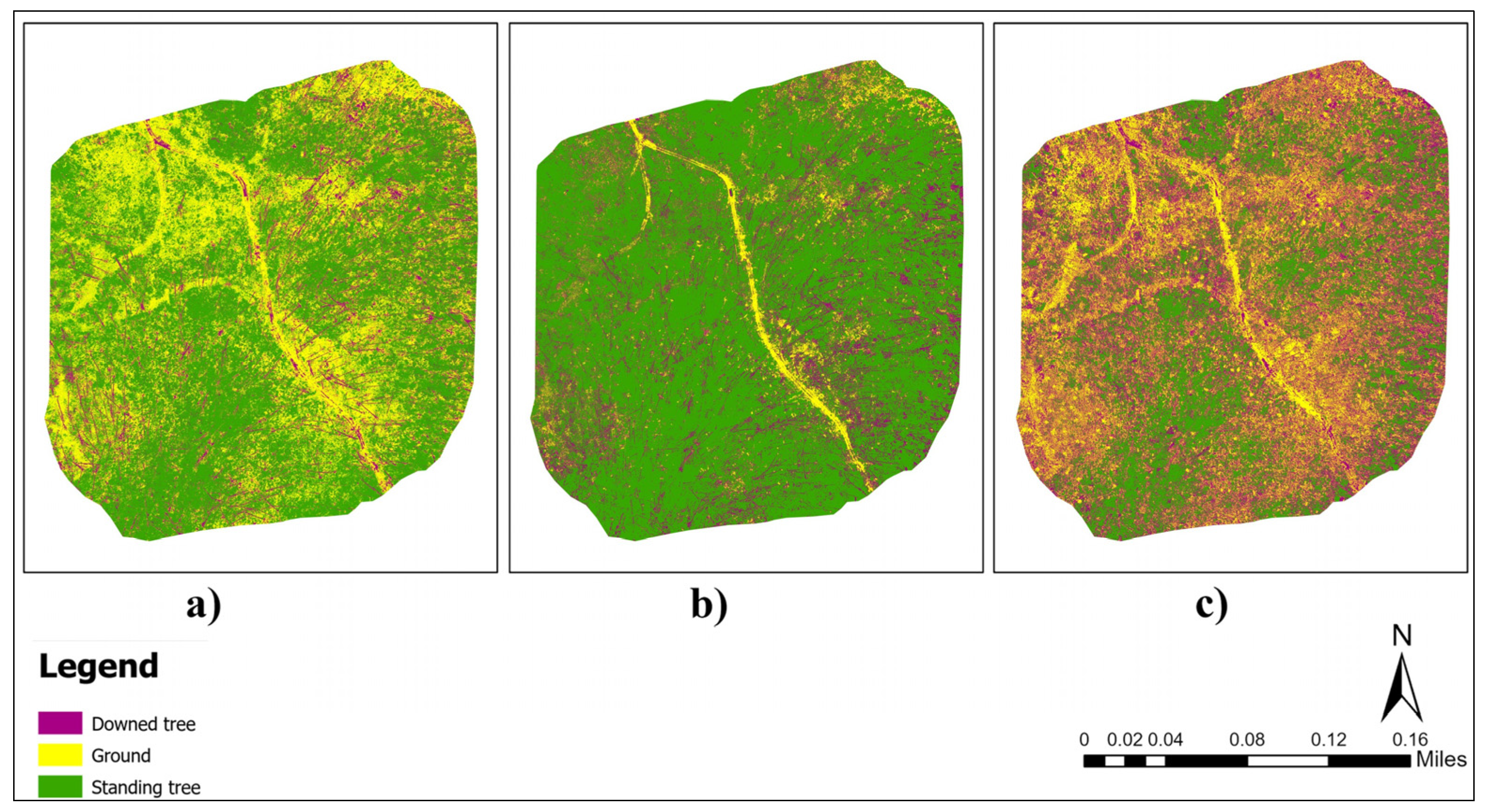

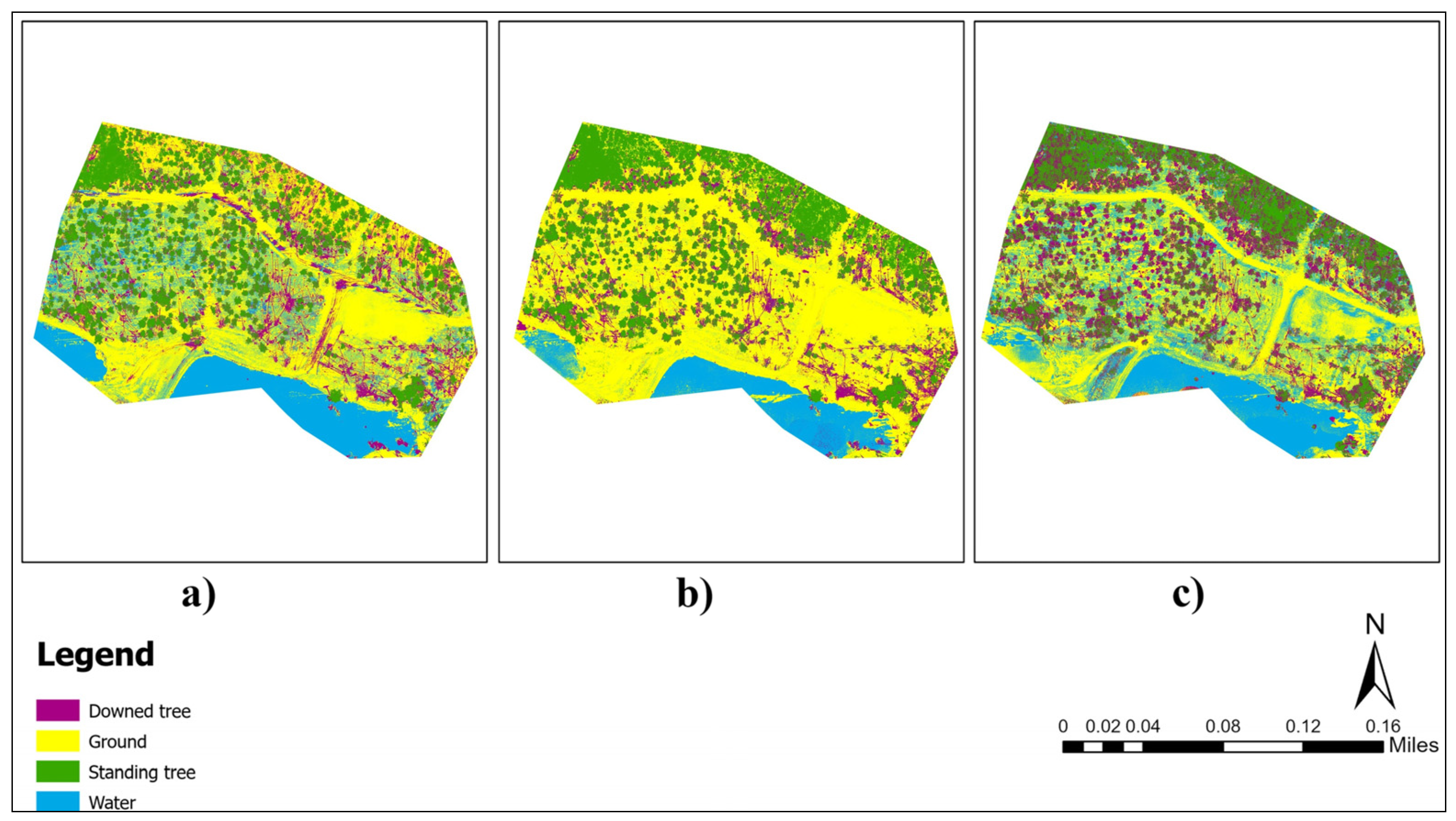

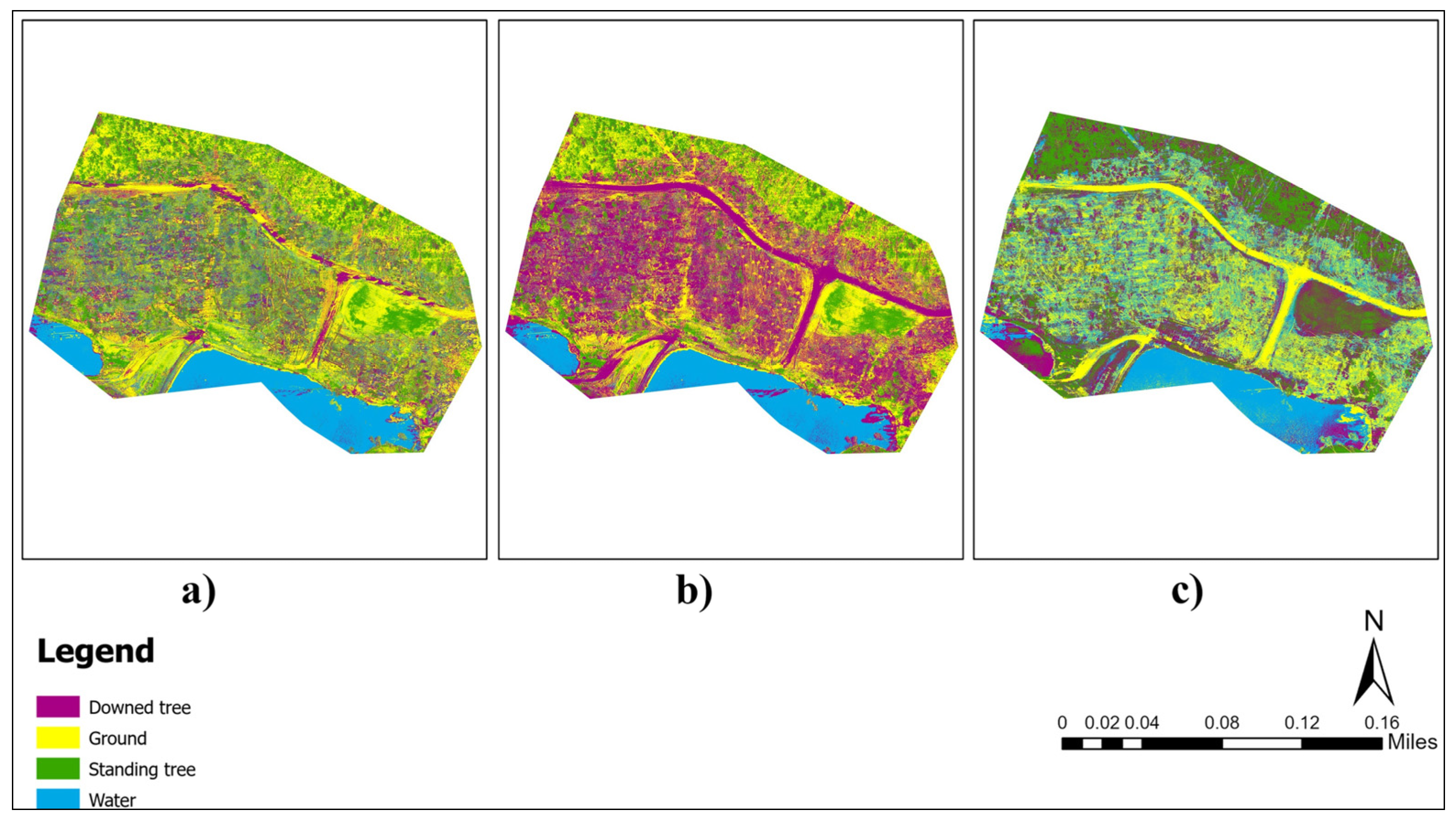

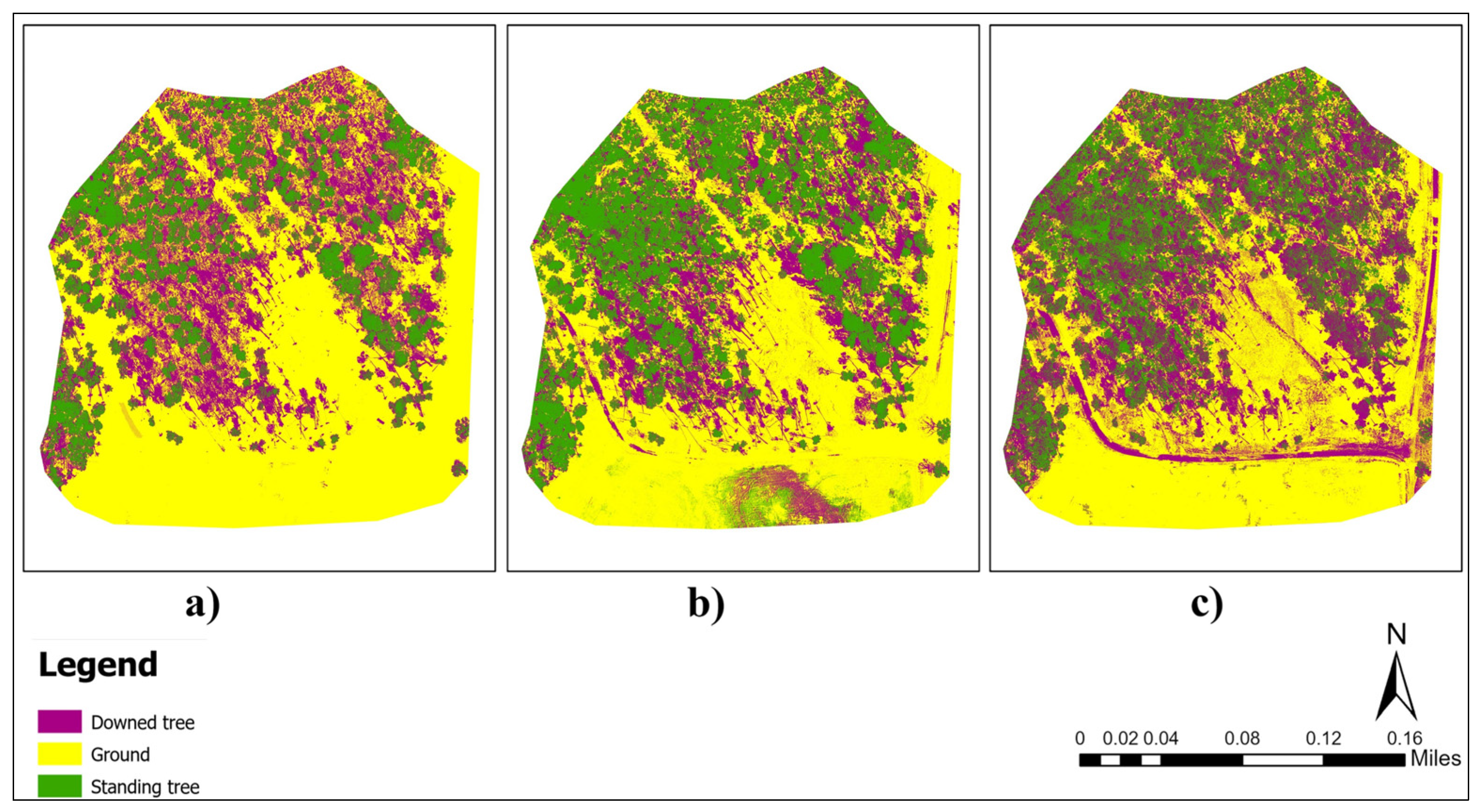

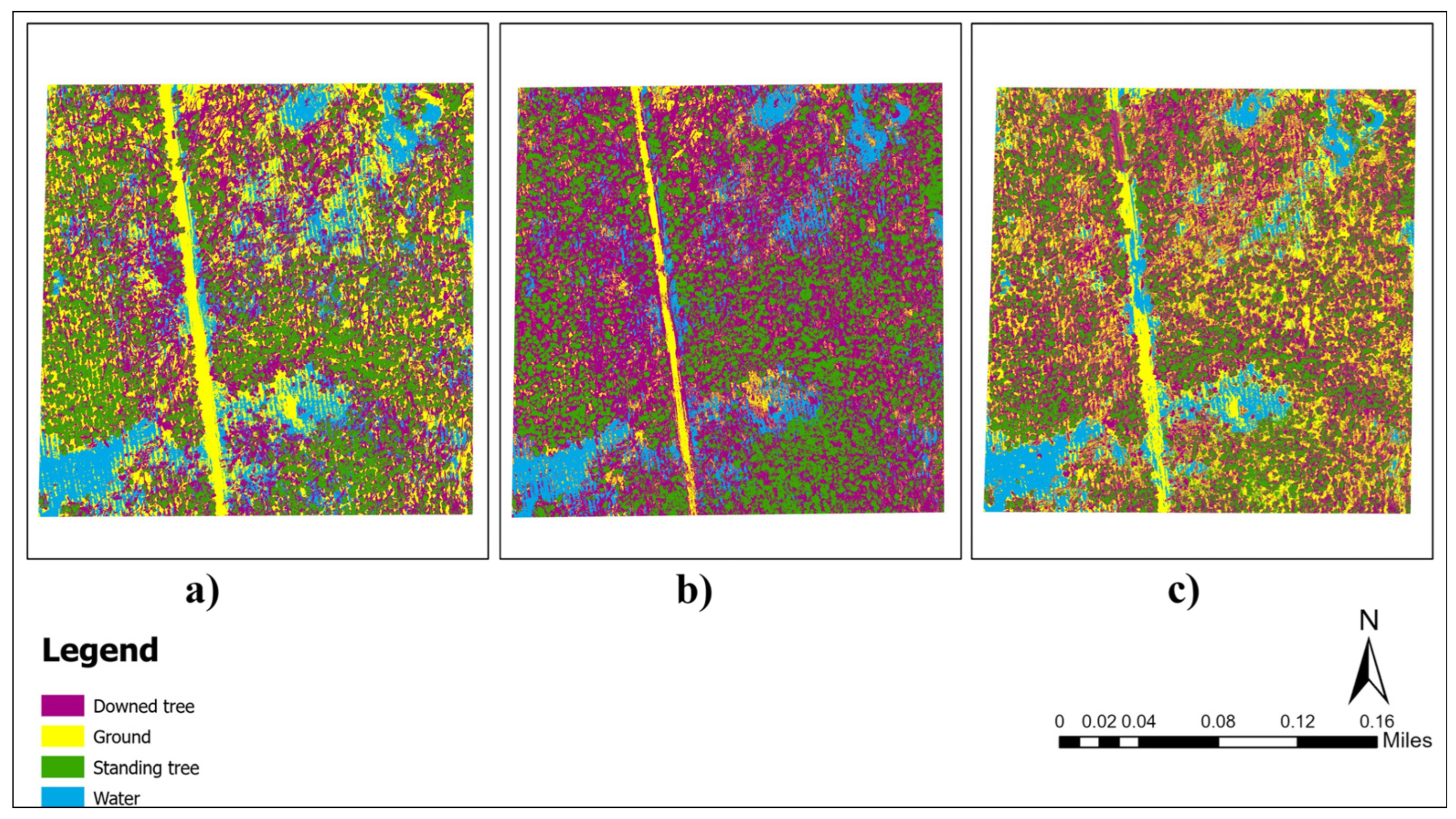

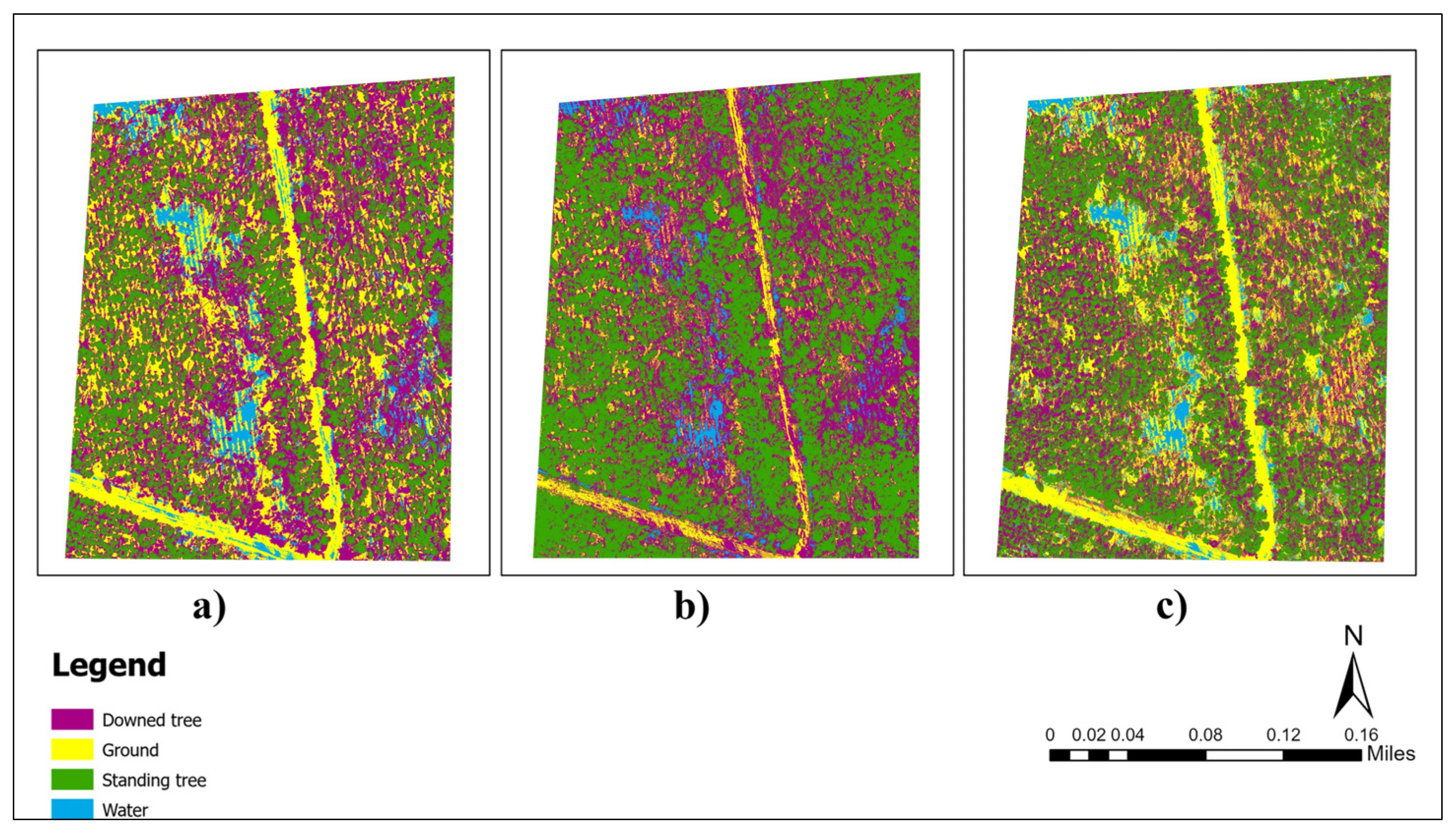

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| US | United States |
| UAVs | Unmanned Aerial Vehicles |
| LiDAR | Light Detection and Ranging |
| RF | Random Forest |
| ML | Maximum Likelihood |
| DT | Decision Tree |
| CHM | Canopy Height Model |
| SAR | Synthetic Aperture Radar |
| NWS | National Weather Service |
| DAT | Damage Assessment Toolkit |
| NOAA | National Oceanic and Atmospheric Administration |
| NHC | National Hurricane Center |
| Dbh | Diameter at breast height |
| RTK | Real-Time Kinematic |
| PPK | Post-Processed Kinematic |
| ALDOT | Alabama Department of Transportation |
| FDOT | Florida Department of Transportation |
| NGRDI | Normalized Green Red Difference Index |
| NGBDI | Normalized Green Blue Difference Index |
| ROI | Regions of Interest |
| OA | Overall Accuracy |
| PA | Producer’s Accuracy |
| SVM | Support Vector Machines |
| UA | User’s Accuracy |
| GEOBIA | Geographic Object-Based Image Analysis |
| OBIA | Object-Based Image Analysis |

Appendix A

Appendix B

| Site Number | Mean Point Density (points/m²) | Mean Point Spacing (m) |
|---|---|---|
| 1 | 1057.43 | 0.03 |
| 2 | 948.01 | 0.03 |
| 3 | 713.57 | 0.03 |
| 4 | 651.36 | 0.03 |
| 5 | 1048.69 | 0.03 |
| 6 | 663.75 | 0.03 |
| 7 | 978.93 | 0.03 |
| 8 | 848.01 | 0.03 |
| 9 | 1191.07 | 0.02 |
| 10 | 1589.61 | 0.02 |

| Site Number | Training ROIs | Validation ROIs | Total |
|---|---|---|---|
| 1 | 20 × 3 | 14 × 3 | 102 |
| 2 | 20 × 3 | 14 × 3 | 102 |
| 3 | 20 × 3 | 14 × 3 | 102 |
| 4 | 20 × 4 | 14 × 4 | 136 |
| 5 | 20 × 3 | 14 × 3 | 102 |
| 6 | 20 × 3 | 14 × 3 | 102 |
| 7 | 20 × 4 | 14 × 4 | 136 |
| 8 | 20 × 3 | 14 × 3 | 102 |
| 9 | 20 × 4 | 14 × 4 | 136 |
| 10 | 20 × 4 | 14 × 4 | 136 |
| Total | 680 | 476 | 1156 |


| Vegetation Index | Formula |
|---|---|
| Normalized Green Red Difference Index (NGRDI) | (G − R)/(G + R) |
| Normalized Green Blue Difference Index (NGBDI) | (G − B)/(G + B) |
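Both indices in the table above are normalized band differences, so they can be computed per pixel with a few array operations. The following is a minimal sketch, assuming the red (R), green (G) and blue (B) bands are already available as numeric arrays of equal shape. The function names and the choice to return NaN where the denominator is zero are illustrative assumptions, not details taken from the study.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b) element-wise, with NaN where a + b == 0."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    total = a + b
    # Pre-fill with NaN so pixels with a zero denominator stay undefined.
    out = np.full(total.shape, np.nan)
    np.divide(a - b, total, out=out, where=total != 0)
    return out


def ngrdi(red, green):
    """Normalized Green Red Difference Index: (G - R) / (G + R)."""
    return normalized_difference(green, red)


def ngbdi(green, blue):
    """Normalized Green Blue Difference Index: (G - B) / (G + B)."""
    return normalized_difference(green, blue)


if __name__ == "__main__":
    # Hypothetical 2-pixel bands; the second pixel is fully dark.
    red = np.array([50, 0])
    green = np.array([150, 0])
    blue = np.array([100, 0])
    print(ngrdi(red, green))
    print(ngbdi(green, blue))
```

Casting to floating point before the division avoids the integer overflow that can occur when 8-bit image bands are added directly.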

| State | Site | With CHM: RF OA (%) | With CHM: RF k | With CHM: ML OA (%) | With CHM: ML k | With CHM: DT OA (%) | With CHM: DT k | Without CHM: RF OA (%) | Without CHM: RF k | Without CHM: ML OA (%) | Without CHM: ML k | Without CHM: DT OA (%) | Without CHM: DT k |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AL | Site 1 | 95.04 | 0.93 | 91.39 | 0.87 | 92.87 | 0.89 | 76.50 | 0.65 | 82.54 | 0.74 | 77.97 | 0.67 |
| AL | Site 2 | 94.79 | 0.92 | 91.32 | 0.87 | 89.87 | 0.85 | 86.45 | 0.80 | 82.16 | 0.73 | 75.37 | 0.63 |
| AL | Site 3 | 98.63 | 0.98 | 93.34 | 0.90 | 91.05 | 0.87 | 71.32 | 0.56 | 80.68 | 0.71 | 58.04 | 0.39 |
| AL | Site 4 | 98.08 | 0.97 | 82.78 | 0.77 | 81.24 | 0.75 | 80.52 | 0.74 | 66.69 | 0.56 | 69.07 | 0.59 |
| AL | Site 5 | 93.93 | 0.91 | 88.45 | 0.83 | 86.89 | 0.80 | 73.46 | 0.60 | 62.98 | 0.45 | 59.32 | 0.39 |
| AL | Site 6 | 95.45 | 0.93 | 91.06 | 0.87 | 94.99 | 0.92 | 78.14 | 0.67 | 67.59 | 0.52 | 75.16 | 0.63 |
| GE | Site 7 | 92.02 | 0.89 | 86.69 | 0.82 | 56.59 | 0.42 | 69.41 | 0.59 | 72.50 | 0.63 | 33.23 | 0.11 |
| GE | Site 8 | 96.43 | 0.95 | 86.94 | 0.80 | 76.42 | 0.65 | 76.83 | 0.65 | 72.29 | 0.59 | 45.36 | 0.18 |
| FL | Site 9 | 93.16 | 0.91 | 91.55 | 0.89 | 77.69 | 0.70 | 83.01 | 0.77 | 76.33 | 0.68 | 70.86 | 0.61 |
| FL | Site 10 | 87.64 | 0.84 | 91.66 | 0.89 | 70.15 | 0.60 | 79.94 | 0.73 | 82.68 | 0.77 | 60.69 | 0.48 |
| Average | | 94.52 | 0.92 | 89.52 | 0.85 | 81.78 | 0.75 | 77.56 | 0.68 | 74.64 | 0.64 | 62.51 | 0.47 |
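The OA and k columns above summarize each classification's confusion matrix. As a minimal sketch of how such values are typically derived, the function below computes overall accuracy and a kappa coefficient from a square confusion matrix. It assumes that k denotes Cohen's kappa, which the table itself does not spell out, and the function name and example matrix are hypothetical rather than taken from the study.

```python
import numpy as np


def overall_accuracy_and_kappa(confusion):
    """Return (overall accuracy in percent, Cohen's kappa) for a square matrix.

    The result is the same whether rows hold reference or predicted labels,
    because both statistics are symmetric in the two margins.
    """
    cm = np.asarray(confusion, dtype=np.float64)
    n = cm.sum()
    # Observed agreement: share of samples on the diagonal.
    p_observed = np.trace(cm) / n
    # Chance agreement: sum over classes of (row total * column total) / n^2.
    p_expected = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2
    kappa = (p_observed - p_expected) / (1.0 - p_expected)
    return p_observed * 100.0, kappa


if __name__ == "__main__":
    # Hypothetical 2-class confusion matrix with 100 validation samples.
    example = [[45, 5],
               [10, 40]]
    oa, k = overall_accuracy_and_kappa(example)
    print(f"OA = {oa:.2f}%, kappa = {k:.2f}")
```

Kappa discounts the agreement expected by chance, which is why a classifier can show a moderate OA but a much lower k when one class dominates the validation samples.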

With CHM

| Method | Site | PA (%) (Standing Tree) | UA (%) (Standing Tree) | PA (%) (Ground) | UA (%) (Ground) | PA (%) (Downed Tree) | UA (%) (Downed Tree) | PA (%) (Water) | UA (%) (Water) |
|---|---|---|---|---|---|---|---|---|---|
| Random Forest (RF) | 1 | 98.17 | 97.19 | 95.43 | 94.11 | 91.44 | 93.68 | | |
| Random Forest (RF) | 2 | 88.40 | 98.08 | 99.23 | 97.97 | 96.87 | 89.33 | | |
| Random Forest (RF) | 3 | 97.30 | 100.00 | 100.00 | 97.78 | 98.36 | 98.36 | | |
| Random Forest (RF) | 4 | 99.76 | 98.58 | 99.75 | 95.51 | 96.49 | 98.72 | 96.22 | 99.74 |
| Random Forest (RF) | 5 | 97.00 | 98.48 | 99.27 | 88.45 | 85.68 | 95.92 | | |
| Random Forest (RF) | 6 | 95.37 | 98.10 | 95.98 | 96.44 | 95.03 | 92.12 | | |
| Random Forest (RF) | 7 | 99.75 | 100.00 | 84.08 | 92.10 | 86.03 | 96.69 | 98.07 | 82.02 |
| Random Forest (RF) | 8 | 99.31 | 100.00 | 98.29 | 91.67 | 91.80 | 97.76 | | |
| Random Forest (RF) | 9 | 98.53 | 95.93 | 100.00 | 98.76 | 74.39 | 98.07 | 100.00 | 98.07 |
| Random Forest (RF) | 10 | 71.53 | 97.03 | 100.00 | 70.92 | 80.25 | 90.93 | 99.75 | 99.75 |
| Random Forest (RF) | Average | 94.51 | 98.34 | 97.20 | 92.37 | 89.63 | 95.16 | 98.51 | 94.89 |
| Maximum Likelihood (ML) | 1 | 98.83 | 91.51 | 80.67 | 100.00 | 94.18 | 85.14 | | |
| Maximum Likelihood (ML) | 2 | 100.00 | 92.55 | 83.21 | 98.86 | 90.91 | 84.09 | | |
| Maximum Likelihood (ML) | 3 | 94.29 | 92.35 | 91.94 | 99.73 | 93.97 | 88.17 | | |
| Maximum Likelihood (ML) | 4 | 99.52 | 76.01 | 67.48 | 79.31 | 84.00 | 81.75 | 79.90 | 99.38 |
| Maximum Likelihood (ML) | 5 | 100.00 | 76.63 | 96.33 | 98.99 | 69.42 | 95.02 | | |
| Maximum Likelihood (ML) | 6 | 98.84 | 92.22 | 84.63 | 97.02 | 89.62 | 85.19 | | |
| Maximum Likelihood (ML) | 7 | 100.00 | 96.67 | 96.52 | 67.36 | 66.18 | 99.63 | 84.30 | 96.14 |
| Maximum Likelihood (ML) | 8 | 100.00 | 94.55 | 70.22 | 89.56 | 89.46 | 78.12 | | |
| Maximum Likelihood (ML) | 9 | 98.53 | 94.58 | 91.71 | 98.92 | 93.41 | 77.69 | 82.60 | 100.00 |
| Maximum Likelihood (ML) | 10 | 100.00 | 94.27 | 88.10 | 97.37 | 92.00 | 78.80 | 86.21 | 100.00 |
| Maximum Likelihood (ML) | Average | 99.00 | 90.13 | 85.08 | 92.71 | 86.31 | 85.36 | 83.25 | 98.88 |
| Decision Tree (DT) | 1 | 98.33 | 95.47 | 89.63 | 94.62 | 90.41 | 88.59 | | |
| Decision Tree (DT) | 2 | 98.54 | 81.62 | 95.20 | 97.30 | 75.68 | 93.05 | | |
| Decision Tree (DT) | 3 | 87.39 | 86.09 | 99.24 | 98.01 | 85.48 | 87.89 | | |
| Decision Tree (DT) | 4 | 65.46 | 77.21 | 94.62 | 87.95 | 73.00 | 63.76 | 92.21 | 98.66 |
| Decision Tree (DT) | 5 | 92.75 | 81.18 | 98.04 | 91.55 | 70.15 | 88.65 | | |
| Decision Tree (DT) | 6 | 96.06 | 95.62 | 95.98 | 96.90 | 93.00 | 92.58 | | |
| Decision Tree (DT) | 7 | 37.35 | 68.16 | 66.67 | 53.39 | 59.56 | 45.08 | 62.80 | 70.84 |
| Decision Tree (DT) | 8 | 57.83 | 94.01 | 81.39 | 93.18 | 90.63 | 60.00 | | |
| Decision Tree (DT) | 9 | 68.30 | 84.24 | 84.42 | 76.36 | 79.27 | 64.74 | 78.92 | 91.74 |
| Decision Tree (DT) | 10 | 88.32 | 78.40 | 47.35 | 77.83 | 80.75 | 54.29 | 62.56 | 82.74 |
| Decision Tree (DT) | Average | 79.03 | 84.20 | 85.25 | 86.71 | 79.79 | 73.86 | 74.12 | 85.99 |

Without CHM

| Methods | Site | PA (%) (Standing Tree) | UA (%) (Standing Tree) | PA (%) (Ground) | UA (%) (Ground) | PA (%) (Downed Tree) | UA (%) (Downed Tree) | PA (%) (Water) | UA (%) (Water) |
|---|---|---|---|---|---|---|---|---|---|
| Random Forest (RF) | 1 | 76.64 | 66.32 | 59.81 | 94.64 | 93.53 | 76.73 | | |
| | 2 | 100.00 | 73.80 | 95.81 | 95.24 | 63.75 | 98.60 | | |
| | 3 | 70.33 | 74.57 | 81.46 | 66.34 | 62.54 | 73.19 | | |
| | 4 | 42.28 | 75.27 | 95.58 | 69.98 | 85.24 | 79.94 | 97.35 | 98.51 |
| | 5 | 85.89 | 66.82 | 61.28 | 76.12 | 74.14 | 79.88 | | |
| | 6 | 91.84 | 71.75 | 77.47 | 85.20 | 64.88 | 79.85 | | |
| | 7 | 63.92 | 61.96 | 52.60 | 56.17 | 66.18 | 75.08 | 94.03 | 81.73 |
| | 8 | 89.69 | 81.07 | 52.04 | 80.93 | 91.12 | 71.30 | | |
| | 9 | 90.83 | 97.15 | 61.45 | 75.09 | 82.22 | 76.32 | 99.69 | 84.74 |
| | 10 | 100.00 | 99.70 | 38.64 | 71.20 | 82.13 | 58.04 | 100.00 | 97.08 |
| | Average | 81.14 | 76.84 | 67.61 | 77.09 | 76.57 | 76.89 | 97.77 | 90.52 |
| Maximum Likelihood (ML) | 1 | 88.56 | 79.48 | 80.63 | 97.94 | 78.36 | 73.60 | | |
| | 2 | 88.62 | 94.74 | 79.64 | 82.87 | 78.35 | 70.99 | | |
| | 3 | 80.08 | 85.47 | 92.71 | 70.11 | 69.35 | 90.32 | | |
| | 4 | 64.31 | 71.58 | 47.81 | 64.06 | 61.01 | 43.16 | 93.79 | 99.37 |
| | 5 | 72.67 | 53.66 | 37.88 | 64.15 | 79.60 | 73.47 | | |
| | 6 | 98.54 | 63.41 | 40.38 | 79.46 | 65.48 | 67.69 | | |
| | 7 | 58.86 | 74.70 | 44.22 | 65.95 | 90.17 | 60.23 | 95.17 | 92.80 |
| | 8 | 96.88 | 73.29 | 33.79 | 82.12 | 90.26 | 68.18 | | |
| | 9 | 98.22 | 97.36 | 37.15 | 93.66 | 95.36 | 57.63 | 73.99 | 84.75 |
| | 10 | 92.66 | 100.00 | 69.32 | 71.00 | 72.33 | 64.69 | 97.30 | 100.00 |
| | Average | 83.94 | 79.37 | 56.35 | 77.13 | 78.03 | 67.01 | 90.06 | 94.23 |
| Decision Tree (DT) | 1 | 93.43 | 77.11 | 72.64 | 92.02 | 67.66 | 67.66 | | |
| | 2 | 82.46 | 97.81 | 85.03 | 61.21 | 58.54 | 77.11 | | |
| | 3 | 48.58 | 95.22 | 69.60 | 44.21 | 60.68 | 52.27 | | |
| | 4 | 44.92 | 81.56 | 53.35 | 55.96 | 84.23 | 56.49 | 93.20 | 94.03 |
| | 5 | 55.56 | 64.01 | 40.39 | 65.02 | 82.47 | 54.36 | | |
| | 6 | 80.76 | 89.64 | 61.54 | 83.90 | 84.23 | 60.60 | | |
| | 7 | 33.86 | 40.68 | 34.97 | 30.40 | 4.91 | 4.42 | 58.81 | 65.92 |
| | 8 | 42.81 | 80.59 | 39.51 | 47.39 | 53.87 | 33.57 | | |
| | 9 | 89.35 | 96.49 | 41.34 | 49.50 | 57.73 | 52.58 | 100.00 | 87.53 |
| | 10 | 87.16 | 57.81 | 52.21 | 62.54 | 53.60 | 54.87 | 50.75 | 73.16 |
| | Average | 65.89 | 78.09 | 55.06 | 59.22 | 60.79 | 51.39 | 75.69 | 80.16 |
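The effect of adding the CHM can be read off the Average rows of the two tables. The following is a minimal sketch, not part of the original analysis, that subtracts the "Without CHM" averages from the "With CHM" averages to give the change in percentage points for each method and class. The names `COLUMNS`, `AVG_WITH_CHM`, `AVG_WITHOUT_CHM` and `chm_gain` are illustrative assumptions; the numbers are copied from the Average rows above.

```python
# Column order of the accuracy tables above.
COLUMNS = [
    "PA Standing Tree", "UA Standing Tree",
    "PA Ground", "UA Ground",
    "PA Downed Tree", "UA Downed Tree",
    "PA Water", "UA Water",
]

# "Average" rows copied from the With CHM and Without CHM tables (percent).
AVG_WITH_CHM = {
    "RF": [94.51, 98.34, 97.20, 92.37, 89.63, 95.16, 98.51, 94.89],
    "ML": [99.00, 90.13, 85.08, 92.71, 86.31, 85.36, 83.25, 98.88],
    "DT": [79.03, 84.20, 85.25, 86.71, 79.79, 73.86, 74.12, 85.99],
}
AVG_WITHOUT_CHM = {
    "RF": [81.14, 76.84, 67.61, 77.09, 76.57, 76.89, 97.77, 90.52],
    "ML": [83.94, 79.37, 56.35, 77.13, 78.03, 67.01, 90.06, 94.23],
    "DT": [65.89, 78.09, 55.06, 59.22, 60.79, 51.39, 75.69, 80.16],
}


def chm_gain(method):
    """Return {column: with-CHM minus without-CHM average} in percentage points."""
    with_chm = AVG_WITH_CHM[method]
    without_chm = AVG_WITHOUT_CHM[method]
    return {
        column: round(w - wo, 2)
        for column, w, wo in zip(COLUMNS, with_chm, without_chm)
    }


if __name__ == "__main__":
    for method in AVG_WITH_CHM:
        print(method, chm_gain(method))
```

A negative value means the average was lower with the CHM than without it. Water was mapped at only four sites (4, 7, 9 and 10), so its averages rest on fewer rows than the other classes.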

| | Ground Truth (Pixels) | | | | |
|---|---|---|---|---|---|
| Class | Standing Tree | Downed Tree | Ground | Water | Total |
| Standing tree | 401 | 17 | 0 | 0 | 418 |
| Downed tree | 6 | 305 | 0 | 0 | 311 |
| Ground | 0 | 5 | 398 | 0 | 403 |
| Water | 0 | 83 | 0 | 408 | 491 |
| Total | 407 | 410 | 398 | 408 | 1623 |

| Class | Commission (%) | Omission (%) | Commission (Pixels) | Omission (Pixels) |
|---|---|---|---|---|
| Standing tree | 4.07 | 1.47 | 17/418 | 6/407 |
| Downed tree | 1.93 | 25.61 | 6/311 | 105/410 |
| Ground | 1.24 | 0.00 | 5/403 | 0/398 |
| Water | 16.90 | 0.00 | 83/491 | 0/408 |
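The commission and omission errors in the table above follow directly from the confusion matrix that precedes it. As a minimal sketch, assuming rows hold the classified pixels and columns hold the ground-truth pixels (which is what the pixel fractions in the table imply), the percentages can be recomputed as shown here. The names `CLASSES`, `MATRIX` and `per_class_errors` are hypothetical and chosen only for illustration.

```python
# Confusion matrix copied from the table above.
# Rows: classified class; columns: ground-truth class (pixels).
CLASSES = ["Standing tree", "Downed tree", "Ground", "Water"]
MATRIX = [
    [401, 17, 0, 0],
    [6, 305, 0, 0],
    [0, 5, 398, 0],
    [0, 83, 0, 408],
]


def per_class_errors(matrix, classes):
    """Return commission, omission, UA and PA (all in percent) per class."""
    size = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[r][c] for r in range(size)) for c in range(size)]
    results = {}
    for i, name in enumerate(classes):
        correct = matrix[i][i]
        # Commission: pixels assigned to this class that belong to another class.
        commission = 100.0 * (row_totals[i] - correct) / row_totals[i]
        # Omission: ground-truth pixels of this class assigned elsewhere.
        omission = 100.0 * (col_totals[i] - correct) / col_totals[i]
        results[name] = {
            "commission": commission,
            "omission": omission,
            "ua": 100.0 - commission,  # user's accuracy
            "pa": 100.0 - omission,    # producer's accuracy
        }
    return results


if __name__ == "__main__":
    for name, values in per_class_errors(MATRIX, CLASSES).items():
        print(name, {key: round(value, 2) for key, value in values.items()})
```

User's accuracy is the complement of commission error and producer's accuracy is the complement of omission error, so the same function yields the PA and UA values for this matrix. The sketch assumes every class has at least one classified and one ground-truth pixel; an empty row or column would need a guard against division by zero.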

| | Ground Truth (Pixels) | | | | |
|---|---|---|---|---|---|
| Class | Standing Tree | Downed Tree | Ground | Water | Total |
| Standing tree | 307 | 8 | 1 | 0 | 316 |
| Downed tree | 2 | 319 | 96 | 1 | 418 |
| Ground | 25 | 48 | 220 | 0 | 293 |
| Water | 4 | 13 | 41 | 322 | 380 |
| Total | 338 | 388 | 358 | 323 | 1407 |

| Class | Commission (%) | Omission (%) | Commission (Pixels) | Omission (Pixels) |
|---|---|---|---|---|
| Standing tree | 2.85 | 9.17 | 9/316 | 31/338 |
| Downed tree | 23.68 | 17.78 | 99/418 | 69/388 |
| Ground | 24.91 | 38.55 | 73/293 | 138/358 |
| Water | 15.26 | 0.31 | 58/380 | 1/323 |
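Overall accuracy and the kappa coefficient can also be recomputed from the two confusion matrices above. The sketch below assumes the standard Cohen's kappa definition, $\kappa = (p_o - p_e) / (1 - p_e)$, where $p_o$ is the observed agreement and $p_e$ the agreement expected by chance; this excerpt does not state which formula the authors used, so treat that as an assumption. The names `MATRIX_A`, `MATRIX_B` and `overall_accuracy_and_kappa` are hypothetical.

```python
# Confusion matrices copied from the tables above.
# Rows: classified class; columns: ground-truth class (pixels).
# Class order: standing tree, downed tree, ground, water.
MATRIX_A = [  # first confusion matrix
    [401, 17, 0, 0],
    [6, 305, 0, 0],
    [0, 5, 398, 0],
    [0, 83, 0, 408],
]
MATRIX_B = [  # second confusion matrix
    [307, 8, 1, 0],
    [2, 319, 96, 1],
    [25, 48, 220, 0],
    [4, 13, 41, 322],
]


def overall_accuracy_and_kappa(matrix):
    """Return (overall accuracy as a fraction, Cohen's kappa)."""
    size = len(matrix)
    total = sum(sum(row) for row in matrix)
    diagonal = sum(matrix[i][i] for i in range(size))
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[r][c] for r in range(size)) for c in range(size)]
    observed = diagonal / total
    # Chance agreement: sum over classes of (row total * column total) / N^2.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa


if __name__ == "__main__":
    for label, matrix in (("first", MATRIX_A), ("second", MATRIX_B)):
        accuracy, kappa = overall_accuracy_and_kappa(matrix)
        print(f"{label}: OA = {100 * accuracy:.2f}%, kappa = {kappa:.2f}")
```

Under this definition the two matrices give about 93.16% with kappa 0.91 and 83.01% with kappa 0.77, which agree with two of the value pairs listed in the Site 9 row of the overall accuracy table.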

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Badal, D.; Cristan, R.; Narine, L.L.; Kumar, S.; Rijal, A.; Parajuli, M. Effectiveness of Unmanned Aerial Vehicle-Based LiDAR for Assessing the Impact of Catastrophic Windstorm Events on Timberland. Drones 2025, 9, 756. https://doi.org/10.3390/drones9110756
