A Randomized Controlled Trial of ABCD-IN-BARS Drone-Assisted Emergency Assessments

Abstract

1. Introduction

2. Methods

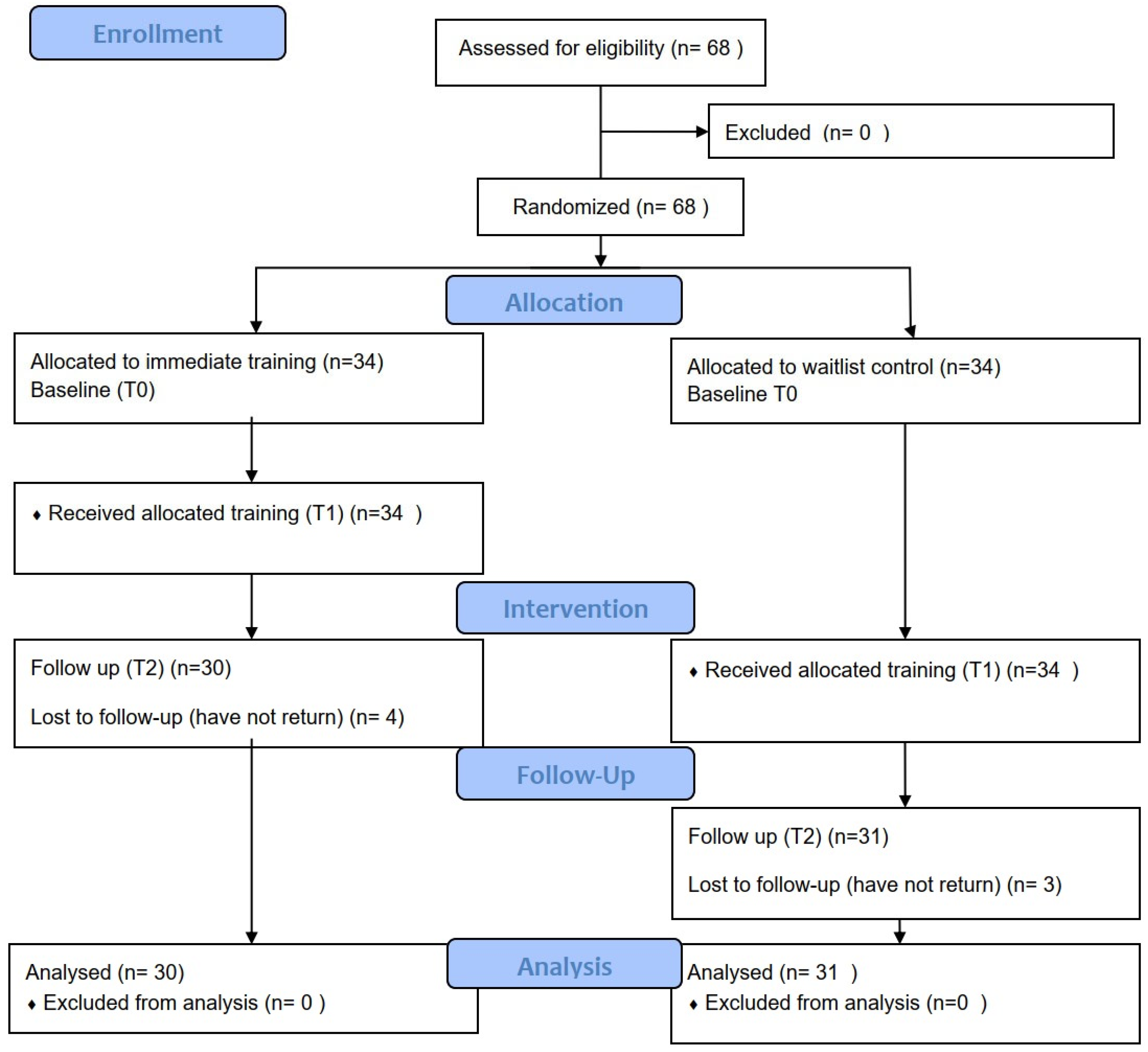

2.1. Study Design and Participation

2.2. Intervention Protocol

2.2.1. Training

2.2.2. Device

2.2.3. Drone In-Flight Patient Assessment Development

2.2.4. ABCD-IN-BARS Protocols

2.2.5. Airway (A)

2.2.6. Breathing (B)

2.2.7. Circulation (C)

2.2.8. Disability (D)

2.2.9. Injuries (I)

2.2.10. Neck (N)

2.2.11. Back (B)

2.2.12. Abdomen (A)

2.2.13. Range of Motion (R)

2.2.14. Stand (S)

2.3. Study Outcome Measures

2.4. Sample Size

2.5. Statistical Analysis and Randomization

2.6. Ethical Considerations

3. Results

3.1. Assessment Efficiency and Skill Retention

3.2. Provider Confidence and Protocol Acceptance

4. Discussion

4.1. Comparison with ABCDE Literature and Protocol Efficacy

4.2. Alignment with Existing Evidence

4.3. Limitations and Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Construct | Operational Definition | Measured Items |
|---|---|---|
| Perceived Usefulness (PU) | The pilot’s belief that using a drone with the MARS guide to assist in patient assessment would improve the assessment process. | PU1: MARS reduced operation time; PU2: The drone allows the pilot to assess the patient at a difficult-to-access location |
| Perceived Ease of Use (EU) | The degree of ease the pilot experiences when using a drone with the MARS guide. | EU1: MARS would be clear and understandable; EU2: I would find it easy to learn MARS; EU3: I would be able to use MARS easily and become proficient in achieving my desired outcomes |
| Intention to Use (IU) | Intention to use can reveal possible challenges in drone-assisted patient assessment and offer useful insight into what works and what does not; such feedback supports a better understanding of how users engage with the system. | IU1: I intend to use MARS to perform patient assessment; IU2: Use of drones is important in patient assessment; IU3: I am confident in using drones in patient assessment with the MARS guide |

| Characteristic | Cohort A (Immediate) (n = 30) | Cohort B (Wait List) (n = 31) | p Value |
|---|---|---|---|
| Age, years, mean (SD) | 44.23 (11.68) | 41.32 (11.9) | 0.34 |
| Gender, male, n | 27 | 29 | 0.62 |
| Gender, female, n | 3 | 2 | |
| Previous drone experience, yes, n | 15 | 15 | 0.9 |
| Previous drone experience, no, n | 15 | 16 | |
| Baseline unstructured assessment duration, s, mean (SD) | 192.84 (87.8) | 194.52 (82.77) | 0.99 |
| Post-training (T1) duration, s, mean (SD) | 230.8 (39.28) | 221.55 (47.81) | 0.41 |
| 3-month follow-up (T2) duration, s, mean (SD) | 226.7 (38.42) | 224.48 (50.31) | 0.85 |
| Simulated injured scenario, n | 17 | 14 | 0.37 |
| Missed diagnosis, n (% of injured scenarios) | 6 (35.29%) | 5 (35.71%) | 0.9 |
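Several of the between-cohort p values in the cohort comparison table can be approximated from the reported summary statistics alone. The sketch below assumes an unpaired Welch t-test for the continuous rows and a Pearson chi-square test without continuity correction for the 2 × 2 count rows; the source does not state which tests were used, and the helper names (`welch_t_test`, `chi_square_2x2_p`, `t_two_sided_p`) are hypothetical. It relies only on the Python standard library, evaluating the Student t tail through the regularized incomplete beta function.

```python
import math


def _betacf(a: float, b: float, x: float, max_iter: int = 200, eps: float = 3e-14) -> float:
    """Continued fraction for the regularized incomplete beta (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Each iteration applies one even and one odd term of the fraction.
        for aa in (
            m * (b - m) * x / ((qam + m2) * (a + m2)),
            -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2)),
        ):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def reg_inc_beta(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    # Evaluate the fraction where it converges fastest; otherwise use
    # the symmetry I_x(a, b) = 1 - I_{1-x}(b, a).
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def t_two_sided_p(t: float, df: float) -> float:
    """P(|T| >= |t|) for Student's t distribution with df degrees of freedom."""
    return reg_inc_beta(df / 2.0, 0.5, df / (df + t * t))


def welch_t_test(m1: float, s1: float, n1: int, m2: float, s2: float, n2: int):
    """Unpaired Welch t-test from means, SDs and group sizes; returns (t, df, p)."""
    v1, v2 = s1 * s1 / n1, s2 * s2 / n2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df, t_two_sided_p(t, df)


def chi_square_2x2_p(a: int, b: int, c: int, d: int) -> float:
    """Pearson chi-square p value (1 df, no continuity correction) for [[a, b], [c, d]]."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom chi2 = Z**2, so P(X >= chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2.0))


# Cohort A (n = 30) vs Cohort B (n = 31): mean, SD and n for each cohort.
continuous_rows = {
    "Age": (44.23, 11.68, 30, 41.32, 11.9, 31),
    "Post-training (T1) duration": (230.8, 39.28, 30, 221.55, 47.81, 31),
    "3-month follow-up (T2) duration": (226.7, 38.42, 30, 224.48, 50.31, 31),
}
for label, stats in continuous_rows.items():
    t, df, p = welch_t_test(*stats)
    print(f"{label}: t = {t:.2f}, df = {df:.1f}, p = {p:.2f}")

# Table rows are cohorts (A, B); columns are the two categories.
# Non-injured counts are derived as 30 - 17 = 13 and 31 - 14 = 17.
print(f"Previous drone experience: p = {chi_square_2x2_p(15, 15, 15, 16):.2f}")
print(f"Simulated injured scenario: p = {chi_square_2x2_p(17, 13, 14, 17):.2f}")
```

With these inputs the age, T1 and T2 rows come out at roughly 0.34, 0.41 and 0.85, and the two count rows at roughly 0.90 and 0.37, in line with the reported values. A pooled-variance t-test gives almost identical results here because the two cohorts are nearly equal in size.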

| Component | Post-test, non-injured, mean (s) | SD | Post-test, injured, mean (s) | SD | 3-month, non-injured, mean (s) | SD | 3-month, injured, mean (s) | SD | Paired t-test p value (post vs. 3 months) | 95% CI of difference (post − 3 months), s |
|---|---|---|---|---|---|---|---|---|---|---|
| General | 13.6 | 2.16 | 13.9 | 2.4 | 13.8 | 2.23 | 14.16 | 2.38 | 0.47 | −0.80 to 0.38 |
| Airway | 7.17 | 2.23 | 6.87 | 1.82 | 6.9 | 2.1 | 7.06 | 1.67 | 0.85 | −0.33 to 0.39 |
| Breathing | 14.93 | 3.25 | 13.55 | 2.73 | 15.67 | 3.09 | 13.45 | 2.19 | 0.28 | −0.88 to 0.25 |
| Circulation | 61.23 | 17.5 | 60.97 | 16.29 | 58.03 | 12.42 | 61 | 16.05 | 0.59 | −4.13 to 7.34 |
| Disability | 8.1 | 1.63 | 9.32 | 2.07 | 8.23 | 1.48 | 9.65 | 2.06 | 0.24 | −0.62 to 0.16 |
| Injury | 10.93 | 1.78 | 25.74 | 3.8 | 10.83 | 1.44 | 26.23 | 4.06 | 0.4 | −0.65 to 0.27 |
| Neck | 17.3 | 1.92 | 20.06 | 2.7 | 17.87 | 1.96 | 20.1 | 2.74 | 0.3 | −0.86 to 0.27 |
| Back | 11.2 | 1.96 | 12.81 | 1.99 | 11.23 | 1.74 | 12.71 | 1.7 | 0.89 | −0.43 to 0.49 |
| Abdomen | 17.5 | 3.24 | 19.61 | 3.29 | 17.37 | 3.48 | 19.55 | 2.86 | 0.78 | −0.60 to 0.80 |
| Range of Motion | 23.47 | 4.5 | 38.48 | 4.65 | 22.97 | 4.38 | 37 | 5.65 | 0.02 | 0.19 to 1.81 |
| Stand | 14.07 | 2.12 | 27.68 | 6.54 | 14.63 | 1.71 | 27.71 | 6.19 | 0.4 | −0.99 to 0.40 |
| Total | 188.33 | 17.2 | 262.65 | 26.97 | 189.10 | 19.31 | 260.87 | 31.35 | 0.85 | −4.91 to 5.96 |
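As a consistency check on the post-test versus 3-month comparison, the sketch below recomputes the pooled mean difference from the subgroup means and backs an approximate p value out of each reported 95% CI. It assumes, without confirmation from the source, that each CI is symmetric around the mean difference, that the difference is post-test minus 3-month follow-up pooled over all 61 participants (30 non-injured and 31 injured, derived from the cohort table), and it uses a normal approximation in place of the t distribution, so the recovered p values should agree with the paired t-test column only to within about 0.02. The names `pooled_difference` and `p_from_ci` are hypothetical.

```python
import math

# Group sizes derived from the cohort table: 17 + 14 = 31 participants ran the
# simulated injured scenario, leaving 13 + 17 = 30 in the non-injured scenario.
N_NON_INJURED, N_INJURED = 30, 31

# Per component: post non-injured, post injured, 3-month non-injured,
# 3-month injured (mean seconds), CI lower, CI upper, reported p value.
ROWS = {
    "General": (13.6, 13.9, 13.8, 14.16, -0.80, 0.38, 0.47),
    "Circulation": (61.23, 60.97, 58.03, 61.0, -4.13, 7.34, 0.59),
    "Range of Motion": (23.47, 38.48, 22.97, 37.0, 0.19, 1.81, 0.02),
    "Total": (188.33, 262.65, 189.10, 260.87, -4.91, 5.96, 0.85),
}


def pooled_difference(post_non: float, post_inj: float, fu_non: float, fu_inj: float) -> float:
    """Mean post-test minus 3-month time across all participants, in seconds."""
    total = N_NON_INJURED + N_INJURED
    return (N_NON_INJURED * (post_non - fu_non) + N_INJURED * (post_inj - fu_inj)) / total


def p_from_ci(lower: float, upper: float, z_crit: float = 1.959964):
    """Approximate two-sided p from a symmetric 95% CI (normal approximation).

    Returns (estimate, p): the estimate is the CI midpoint and the standard
    error is the CI half-width divided by the 97.5th normal percentile.
    """
    estimate = (lower + upper) / 2.0
    se = (upper - lower) / (2.0 * z_crit)
    return estimate, math.erfc(abs(estimate / se) / math.sqrt(2.0))


for name, (post_non, post_inj, fu_non, fu_inj, ci_lo, ci_hi, reported_p) in ROWS.items():
    diff = pooled_difference(post_non, post_inj, fu_non, fu_inj)
    midpoint, approx_p = p_from_ci(ci_lo, ci_hi)
    print(f"{name}: pooled diff = {diff:.2f} s, CI midpoint = {midpoint:.2f} s, "
          f"approx p = {approx_p:.3f} (reported {reported_p})")
```

For Range of Motion the pooled difference is about 1.0 s, matching the CI midpoint, and the approximate p value is roughly 0.016, which rounds to the reported 0.02; a positive difference means the 3-month times were shorter than the post-test times.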

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chan, C.K.J.; Tung, F.L.N.; Ho, S.Y.J.; Yip, J.; Tsui, Z.; Yip, A. A Randomized Controlled Trial of ABCD-IN-BARS Drone-Assisted Emergency Assessments. Drones 2025, 9, 687. https://doi.org/10.3390/drones9100687
