Abstract

Despite the ecological importance of giant clams (Tridacninae), their effective management and conservation are challenging owing to their widespread distribution and labour-intensive monitoring methods. In this study, we present an alternative approach to detecting and mapping clam density at Pioneer Bay on Goolboddi (Orpheus) Island on the Great Barrier Reef using drone data with a combination of deep learning tools and a geographic information system (GIS). We trained and evaluated 11 models using YOLOv5 (You Only Look Once, version 5) with varying numbers of input image tiles and augmentations (mean average precision, mAP: 63–83%). We incorporated the Slicing Aided Hyper Inference (SAHI) library to detect clams across orthomosaics, eliminating duplicate counts of clams straddling multiple tiles, and further applied our models in three other geographic locations on the Great Barrier Reef, demonstrating their transferability. Finally, by linking detections with their original geographic coordinates, we illustrate the workflow required to quantify animal densities, mapping up to seven clams per square meter in Pioneer Bay. Our workflow brings together several otherwise disparate steps to create an end-to-end approach for detecting and mapping animals with aerial drones. This provides ecologists and conservationists with clear, actionable quantitative and visual insights from drone mapping data.

Keywords:

YOLOv5; GIS; giant clam; wildlife monitoring; SAHI; deep learning; drone; artificial intelligence; geospatial analysis

1. Introduction

Giant clams (Tridacninae) are large marine bivalves that inhabit coral reefs across the Indo-Pacific and Red Sea [1]. Some individuals live for up to 100 years, and these molluscs play many important roles in coral reef ecosystems [2,3]. These roles range from contributing carbonate material [4,5] to providing food, structures, and shelter for many other organisms [2,6,7], while the clams also filter large volumes of water, thereby preventing detrimental algal blooms on reefs [3,8]. Despite their ecological importance, overfishing and habitat destruction threaten Tridacninae species all over the world.

Out of the 12 known species of tridacnines, nine are currently listed as threatened on the International Union for Conservation of Nature (IUCN) Red List, while insufficient data are available to assess the remaining three, more recently described, species. Furthermore, this global classification was last updated in 1996 and may not accurately reflect their status in individual countries, such as Singapore, where some species are presumed to be nationally extinct [9]. While regional efforts to promote cooperation and collaboration among nations towards sustainably managing giant clam fisheries have been limited, local initiatives have been taken to reduce exploitation.

Local sustainability initiatives, such as restocking efforts in Southeast Asia using mariculture, have commenced, but the success of these programs has varied between localities [10]. Restocking programs often do not have a set of protocols for fisheries officers and managers to follow, nor do they tend to be accompanied by regular monitoring to ascertain the success of such efforts over time. This problem is well summarized by Moore [10] (p. 138), highlighting that “poor survey and reporting protocols, together with poor funding for monitoring, have limited assessment of some reintroduction and restocking programs even to the point of failing to report successful results.” Despite these challenges, several studies have successfully assessed giant clam population distributions in various locations.

Several population and distribution pattern research projects have been conducted on tridacnines in various locations including Mauritius, the Philippines, Indonesia, and French Polynesia [11,12,13,14,15,16]. These surveys were all conducted by direct visual assessment, where researchers in the field counted the number of giant clams along transect lines or in quadrats [3]. These traditional methods are time-consuming, expensive, and cover only a small area, highlighting the need for more efficient ways to assess giant clam populations.

The availability of user-friendly drones (UAVs, or uncrewed aerial vehicles [17]) on the market has revolutionized wildlife monitoring. These non-invasive tools have an advantage over traditional monitoring methods in that they can observe wildlife in remote areas that would otherwise prove challenging or costly for experts to evaluate on site [18,19,20,21,22,23]. However, with increasingly detailed cameras and vast coverage, drones produce an overwhelming amount of data [24]. Navigating this imagery to monitor wildlife manually is not only labour-intensive but also prone to oversight and human error.

As a result, growing research now focuses on automated or semi-automated techniques that delegate these tasks to computers, broadly termed artificial intelligence (AI) [25,26,27,28,29,30,31,32]. With rapid technological advancements and increased computing power, this field now equips conservationists with sophisticated and adaptable tools for automated data analysis, including wildlife monitoring. Yet, a prevailing gap exists: while many studies have developed AI algorithms to detect objects from aerial imagery [28,30,33,34,35,36,37,38,39,40], there is a shortfall in integrating these algorithms into user-friendly platforms and workflows for further geospatial analysis. The challenge now extends beyond creating effective identification algorithms to determining and operationalising their practical applications. Whether data are visualised on maps or as tabulated spreadsheets, stakeholders need a clear and/or visual understanding of these data. Yet, challenges remain in achieving this clarity, especially given the nature and volume of data that drones generate, and the lack of interoperability between feature detection algorithms and geospatial software.

The large dimensions of drone-derived orthomosaics present a challenge for data processing. These high-spatial-resolution datasets are too large, in both pixel dimensions and file size, to be passed directly to object detection models, which expect comparatively small inputs. Orthomosaics are therefore commonly tiled into smaller chunks that the algorithm can manage more readily without compromising detail. However, when an object straddles the boundary between two tiles, it is detected separately in each tile and is therefore counted as two distinct entities, presenting a challenge for counting features of interest. A more automated and accurate technique moves overlapping tiles across the large image as a sliding window and then applies Non-Maximum Suppression (NMS) to resolve overlapping bounding boxes around a detected object [41,42]. By selecting the bounding box with the highest confidence score and suppressing others that overlap it significantly, NMS ensures that each object is detected only once, providing cleaner and more accurate results. Recent studies have integrated NMS to create annotated orthomosaics [43], yet these methods can be computationally heavy and not user-friendly. As an alternative, the Slicing Aided Hyper Inference (SAHI) library was developed [44]. This tool facilitates object detection on large imagery by automating the overlapping tiling, the detections, the NMS step, and the management of results [44,45]. Despite these advancements, a gap remains in combining these tools into a streamlined process tailored for specific ecological applications, such as monitoring an animal species in the wild.
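For readers unfamiliar with NMS, the greedy procedure described above can be sketched in a few lines of plain Python. This is an illustrative re-implementation, not the code used inside SAHI or YOLOv5; boxes are assumed to be `(x_min, y_min, x_max, y_max)` tuples in pixels, and the 0.5 intersection-over-union (IoU) threshold is an arbitrary example value.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of the boxes kept, highest score first."""
    # Visit boxes from most to least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Suppress remaining boxes that overlap the kept box too strongly.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Two overlapping detections of the same clam from adjacent tiles, plus one other clam.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

The two overlapping boxes share an IoU of 81/119 (about 0.68), so the lower-scoring one is suppressed and a single detection per clam remains.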

To test these tools and help streamline a workflow for conservationists, our research examines giant clams on the Australian Great Barrier Reef. These endangered species, often subject to inadequate monitoring [10], provide an optimal subject for our study as their size and distinct features make them readily identifiable from low-altitude drone imagery. With giant clams as our focal point, we aim to develop a workflow that incorporates deep learning and spatial analysis to detect and map the clams within drone imagery. To achieve this, we (1) collect, process, and identify the minimum amount of training data necessary to develop a model that remains versatile and adaptable across conditions beyond its training environment; (2) apply object detection algorithms to large orthomosaics using the SAHI library; and (3) develop a pathway between object detection and further geospatial analysis in a GIS to map clam density.

2. Methods

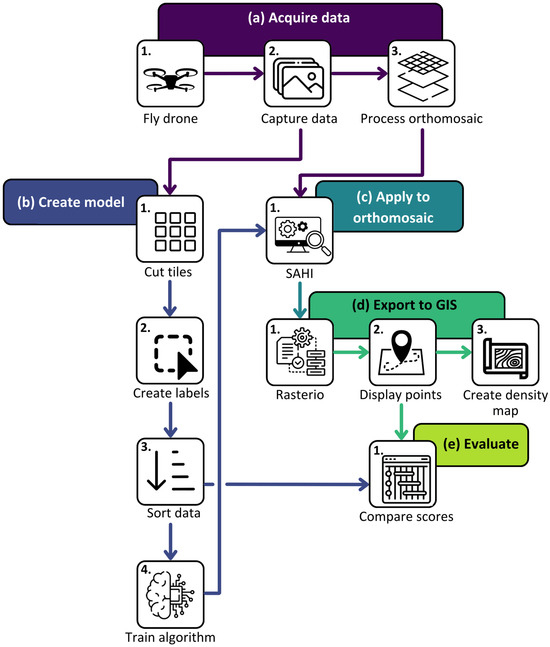

This study encompasses five components (Figure 1), described in more detail in subsequent sections. In brief, (a) drone data were captured, stored, and processed to create orthomosaics that we accessed via GeoNadir; (b) we tiled the orthomosaics into manageable sizes, labelled the clams, performed augmentations, and trained a deep learning model within YOLOv5; (c) we applied the deep learning models to complete, untiled orthomosaics using SAHI; (d) we transformed clam detections into georeferenced observations and mapped clam density in ArcGIS Pro version 3.3.1; and (e) we examined detection accuracy and confidence scores.

Figure 1.

Our workflow consists of five stages, to acquire drone mapping data, train the clam detection model, apply to drone orthomosaics, export to GIS for geospatial analysis, and final evaluation.

2.1. Study Sites

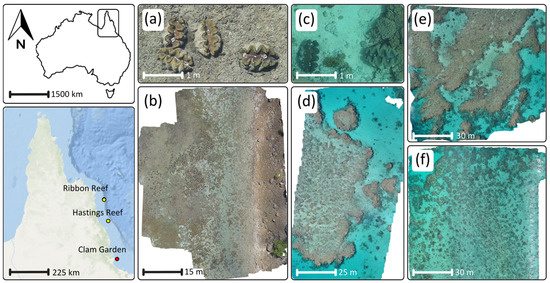

Training data were collected over the ‘clam garden’ inside Pioneer Bay (18°36′30.7′′ S 146°29′18.1′′ E) at Goolboddi (Orpheus) Island, located in the Palm Islands region in north Queensland, Australia, between September 2021 and October 2022 (Figure 2a,b). The garden was originally created as a nursery and studied in the 1980s [46,47]. Today, hundreds of protected clams, reaching up to 130 cm in length, are scattered across the garden. This location presents an optimal setting for collecting data to train a clam-detection algorithm, as the high abundance of clams and the variety of environmental factors, including tidal fluctuations, water turbidity, and sun glint, offer a rich dataset from which the algorithm can learn. Although the clam density at this location is higher than that found in the wild, this is not a concern, since the objective of this study is to collect enough clam images for algorithm training. We sourced five drone orthomosaics over the clam garden for training purposes (orthomosaics 1–5, Figure 3). Each dataset was captured on a different date and under different environmental conditions, introducing variation into the training data to produce a more robust detection model. All data were sourced from GeoNadir (https://www.geonadir.com), an open repository with readily accessible drone data from around the world.

Figure 2.

Locations of areas of interest for this study. (a) Close up image of a clam used for training data; (b) one of the five orthomosaics at the Goolboddi clam garden; (c) close up image of clams in ‘the wild’ (unseen data) used to test algorithm versatility; (d) South Ribbon Reef orthomosaic; (e) North Ribbon Reef orthomosaic; and (f) Hastings Reef orthomosaic. Basemap attribution for northern Queensland: ArcGIS—ESRI’s World Imagery.

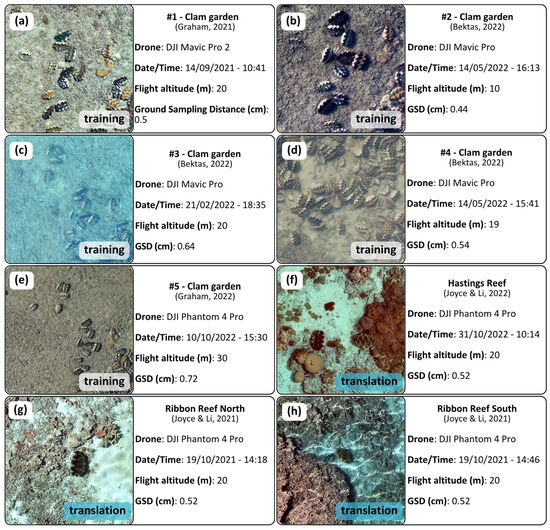

Figure 3.

Datasets with collection parameters and sample images: (a–e) Clam garden location used for training, testing, augmentations, and density mapping; and (f–h) additional translation sites with ‘unseen’ data [48,49,50,51,52,53,54].

To evaluate the algorithm’s adaptability, we also needed to apply it to unfamiliar imagery. We obtained three additional orthomosaics from two reefs: two from Ribbon Reef (15°20′35.0′′ S 145°46′36.6′′ E and 15°21′41.8′′ S 145°46′57.0′′ E) and one from Hastings Reef (16°31′26.4′′ S 146°00′11.0′′ E) (Figure 2c–f). These orthomosaics acted as the benchmark for our models, assessing their proficiency in identifying previously unseen clams. Compiling the data for this project involved multiple drone models and researchers (Figure 3). This diversity of data supports the goal of building a robust detection model: by training on a wide range of data from various sources, we expect the model to be better able to detect giant clams in diverse environmental conditions and from different drone types.

2.2. Algorithm Development and Training

We chose You Only Look Once version 5 (YOLOv5) to train our models as it represents a balanced trade-off between detection speed and accuracy [43]. This state-of-the-art object detection algorithm offers an ‘off-the-shelf’ solution that is quick and easy to deploy without architectural customization. When training models, it is recommended to use small square images with dimensions that are multiples of 32, as the model architecture downscales the input by factors of two at multiple stages. Although YOLOv5 accommodates various input sizes, we established two main tile sizes, 640 × 640 and 832 × 832 pixels, to determine the optimal balance between speed and accuracy while adequately representing the spatial scale of the clams.
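The multiple-of-32 rule follows from the largest stride of the standard YOLOv5 detection heads (32 pixels, i.e. five halvings, 2^5 = 32). The hypothetical helper below sketches the arithmetic of rounding a requested size up to that stride; as we understand it, YOLOv5 makes a similar adjustment internally when given a non-conforming image size, but this code is not taken from the YOLOv5 repository.

```python
import math


def round_up_to_stride(size: int, stride: int = 32) -> int:
    """Return the smallest multiple of `stride` that is >= `size`."""
    return math.ceil(size / stride) * stride


# The two tile sizes used here already satisfy the constraint, giving
# 20 x 20 and 26 x 26 cells on the stride-32 feature map.
for tile in (640, 832):
    print(tile, round_up_to_stride(tile), tile // 32)

print(round_up_to_stride(650))  # 672
```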

To create the necessary tiles, we used the Python ‘pillow’ library [55]. Tiling preserves the original high resolution of the images, which is critical for detecting small objects [56]. Directly shrinking large images to fit the input size of the model would result in a loss of detail for the contained objects, compromising our ability to identify them [55].
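A minimal sketch of native-resolution tiling with Pillow follows. The function name and the choice to pad edge tiles with black (rather than discard them or shift them inward) are assumptions for illustration, not necessarily how the tiles in this study were produced.

```python
from PIL import Image


def tile_image(img, tile=640):
    """Yield ((left, top), crop) for non-overlapping tile x tile crops.

    Crops are taken at native resolution (no resizing). Where a crop box
    extends past the image border, Pillow fills the missing area with
    zeros (black), so every tile has the same size.
    """
    width, height = img.size
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            yield (left, top), img.crop((left, top, left + tile, top + tile))


# Synthetic 1500 x 1000 image: 3 columns x 2 rows of 640-pixel tiles.
demo = Image.new("RGB", (1500, 1000), (30, 90, 120))
tiles = list(tile_image(demo, 640))
print(len(tiles), tiles[-1][0], tiles[-1][1].size)  # 6 (1280, 640) (640, 640)
```

Keeping the `(left, top)` offsets makes it possible to map tile-level detections back to orthomosaic pixel positions. Very large orthomosaics may trigger Pillow's decompression-bomb safeguard (`Image.MAX_IMAGE_PIXELS`), in which case windowed reading of the GeoTIFF (for example with rasterio) is a possible alternative.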

We annotated each image tile using Roboflow’s workflow interface (www.roboflow.com), demarcating giant clams using the bounding box tool. We opted for bounding boxes over instance segmentation as our primary concern was the object’s presence or absence rather than specific dimensions. This bounding box labelling method also offered a significant advantage in terms of speed compared to instance segmentation.

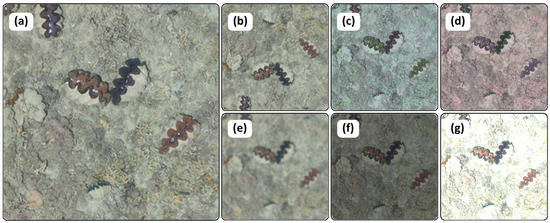

To create a robust model that was not specialized on a single dataset, we employed various data augmentations. Augmentations expand the training dataset, furnishing the model with a broader range of examples to learn from and reducing the chance of overfitting [57]. We chose to apply four augmentations within the Roboflow workflow: flipping, hue, blur, and brightness (Figure 4). While there is no established empirical study or definitive ‘best practice’ to guide the choice of augmentations for specific applications, we followed the lead of Suto [58] and chose options representing a broad range of conditions that could realistically occur across our imagery. We expected these to reflect the different scenarios in which clams could be found; in particular, the hue shift of ±68° added a blue or pink tint resembling that of the testing reefs, and the brightness change of ±40% represented the maximum brightness variation under which we would likely capture data.
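The four augmentations can be approximated with Pillow as in the sketch below. This is not Roboflow's implementation: the random ranges mirror the values above (±68° hue, up to 2.5 pixels of blur, ±40% brightness), but Pillow's Gaussian blur radius may not correspond exactly to Roboflow's blur setting, and the helper names are hypothetical. In a real pipeline, flips must also be applied to the bounding-box labels.

```python
import random

from PIL import Image, ImageEnhance, ImageFilter, ImageOps


def shift_hue(img, degrees):
    """Rotate the hue of an RGB image by `degrees` (Pillow stores hue as 0-255)."""
    h, s, v = img.convert("HSV").split()
    offset = round(degrees / 360 * 256)
    h = h.point(lambda x: (x + offset) % 256)
    return Image.merge("HSV", (h, s, v)).convert("RGB")


def augment(img, rng=random):
    """Apply flip, hue, blur and brightness changes in combination."""
    img = img.convert("RGB")
    if rng.random() < 0.5:
        img = ImageOps.mirror(img)  # horizontal flip
    if rng.random() < 0.5:
        img = ImageOps.flip(img)  # vertical flip
    img = shift_hue(img, rng.uniform(-68, 68))
    img = img.filter(ImageFilter.GaussianBlur(radius=rng.uniform(0, 2.5)))
    return ImageEnhance.Brightness(img).enhance(rng.uniform(0.6, 1.4))


# Two augmented copies of one training tile (three images in total).
tile = Image.new("RGB", (640, 640), (200, 60, 60))
copies = [augment(tile, random.Random(seed)) for seed in (1, 2)]
```

Because every parameter is drawn independently for each copy, several adjustments are combined in a single augmented image, in line with the combined (rather than isolated) augmentations described below.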

Figure 4.

Four augmentations applied: (a) Original image; (b) vertical and/or horizontal flip; (c) hue (−68°); (d) hue (+68°); (e) blur (up to 2.5 pixels); (f) brightness (−40%); and (g) brightness (+40%).

We partitioned our total labelled image tiles into distinct training and validation subsets with a 70%/30% split based on the original labelled images. For example, if we had 100 labelled images, 70 were allocated for training and 30 for validation. The augmentations were then applied exclusively to the 70 images used for training (validation images stayed the same). In Roboflow, the augmentation process triples the number of training images, so with 70 labelled images, we generated 210 total images for training: 70 original images plus 140 augmented images. To further clarify this process, the augmentations were not applied in isolation (e.g., 30 images for hue adjustment, 30 for brightness, and so on). Instead, the augmentations were applied in combination, resulting in a diverse set of augmented images where multiple parameters were adjusted simultaneously.
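The split-then-augment bookkeeping can be sketched as follows. The function is hypothetical and only tracks which images go where (it generates no pixels); the 70/30 split and threefold multiplication follow the description above, while shuffling with a fixed seed is our assumption.

```python
import random


def split_then_augment(images, train_frac=0.7, copies_per_image=3, seed=0):
    """Split labelled images first, then expand only the training subset.

    Returns (train, val). Each training entry is (image_name, k), where
    k == 0 is the original and k >= 1 marks an augmented variant.
    """
    shuffled = list(images)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    train_images, val = shuffled[:n_train], shuffled[n_train:]
    train = [(name, k) for name in train_images for k in range(copies_per_image)]
    return train, val


train, val = split_then_augment([f"tile_{i:03d}.jpg" for i in range(100)])
print(len(train), len(val))  # 210 30
```

Splitting before augmenting ensures that no augmented copy of a validation image leaks into the training set, which would otherwise inflate validation scores.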

Dividing our labelled data in this manner, with augmentations applied only to the training subset, supports robust models with strong generalization capabilities, because validation is performed on images that were neither augmented nor seen during training [45]. The total number of labelled images needed to train a robust algorithm cannot be fixed, as it depends on several factors, including image quality, model architecture, and task complexity. Nevertheless, previous research has shown that for detecting a single class with relatively distinct features, a few hundred to a few thousand images are sufficient [30,59]. We built and evaluated 11 different models, driven by varying numbers of images and augmentations (Table 1), to determine the minimal amount of training data required to construct a robust algorithm. Once the datasets were generated in Roboflow, we exported them in the required YOLOv5 format to train the models in a Google Colab session.
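For reference, the YOLOv5 label format stores one text file per image with one row per object, `class x_center y_center width height`, all normalised by the image dimensions. Roboflow performs this conversion on export; the hypothetical helper below only illustrates what each row contains, assuming pixel boxes given as `(x_min, y_min, x_max, y_max)` and a single class (clam = 0).

```python
def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label row."""
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# A clam occupying the box (160, 320)-(480, 640) in a 640 x 640 tile.
print(to_yolo_line(0, (160, 320, 480, 640), 640, 640))
# 0 0.500000 0.750000 0.500000 0.500000
```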

Table 1.

Total number of training images used for each detection model. For models 9 to 11, the added 832 × 832-pixel images were resized in Roboflow to fit within a 640 × 640 tile.

We trained our models without modifying the architecture from the original GitHub repository. Given the intense computational requirements of these models, we ran them in Google Colab sessions powered by V100 GPUs. The models were trained using the YOLOv5 ‘small’ variant (YOLOv5s) with a batch size of eight for 300 epochs. Once training was complete, all results and weights were saved locally.
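The training configuration above corresponds roughly to the following invocation of `train.py` from the YOLOv5 repository, built here as an argument list. The dataset path `clams/data.yaml` is a hypothetical placeholder for the file Roboflow exports, 640 pixels is one of the two tile sizes used, and the flag names should be checked against the version of the repository in use.

```python
import sys


def yolov5_train_command(data_yaml, img=640, batch=8, epochs=300, weights="yolov5s.pt"):
    """Build the command for YOLOv5's train.py (run from the repository root)."""
    return [
        sys.executable, "train.py",
        "--img", str(img),
        "--batch-size", str(batch),
        "--epochs", str(epochs),
        "--data", data_yaml,
        "--weights", weights,  # pretrained 'small' checkpoint
    ]


cmd = yolov5_train_command("clams/data.yaml")
print(" ".join(cmd[1:]))
# train.py --img 640 --batch-size 8 --epochs 300 --data clams/data.yaml --weights yolov5s.pt
```

Inside a clone of the repository, the list can be launched with `subprocess.run(cmd, check=True)`; keeping it as a list avoids shell-quoting problems with paths.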

2.3. Model Application

After creating the models based on the clam garden, we tested their generalization abilities using the two unseen orthomosaics from Ribbon Reef and the one from Hastings Reef. To manage the large orthomosaics, we used the SAHI library [44]. This allowed us to detect clams across the entire orthomosaic using a sliding window approach, rather than from discrete tiles, accounting for clams that straddle more than one tile. We configured SAHI with an overlap ratio of 0.3 for both height and width. This means that, for a 640 × 640-pixel window, adjacent windows overlap by 192 pixels (30% of 640), so the window advances by 448 pixels at each step. We deployed the model locally (Table 2) with a confidence threshold of 10% to observe the kinds of detections it identified. Additionally, we used greedy non-maximum merging (GREEDYNMM) for post-processing, with a match threshold of 0.75, so that bounding boxes overlapping by more than 75% were merged into a single detection. The working environment was set up in Anaconda (23.2.1) using a variety of libraries (Table 3).
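To make the overlap arithmetic concrete, the sketch below reproduces, along one image axis, how we understand SAHI to place overlapping windows: consecutive windows share int(0.3 × 640) = 192 pixels, so each advances by 448 pixels, and the final window is shifted back to end exactly at the image border. It is a simplified re-implementation for illustration only; in SAHI itself these settings are passed as `slice_height`, `slice_width`, `overlap_height_ratio`, and `overlap_width_ratio` to `get_sliced_prediction`.

```python
def window_starts(length, window=640, overlap_ratio=0.3):
    """Start offsets of overlapping windows along one axis of an image.

    Consecutive windows overlap by int(overlap_ratio * window) pixels; the
    last window is shifted back so that it ends exactly at the image edge.
    """
    if length <= window:
        return [0]
    step = window - int(overlap_ratio * window)
    starts = list(range(0, length - window, step))
    starts.append(length - window)
    return starts


print(window_starts(2000))  # [0, 448, 896, 1344, 1360]
```

The total number of windows for an orthomosaic of width `w` and height `h` is then `len(window_starts(w)) * len(window_starts(h))`.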

Table 2.

Computer component specifications.

Table 3.

Anaconda libraries used for analysis.

2.4. Geospatial Analysis

After processing an orthomosaic, the algorithm saved the detection labels in a “coco_detection” text file. We then transferred these data to an Excel sheet, where each row represented a distinct detection point with its x and y image (pixel) coordinates. We translated these image coordinates into geographic coordinates (longitude and latitude) using the ‘rasterio’ library, which applies the georeferencing stored in the orthomosaic to each pixel position. We then imported the spreadsheet into ArcGIS Pro and plotted the detections as points using the longitude and latitude information. By overlaying these points on the georeferenced orthomosaics, we could assess the accuracy of our model visually, as well as by examining the detections and their corresponding confidence scores in the point attribute table.
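The pixel-to-map conversion rests on the orthomosaic's affine geotransform, which rasterio exposes as `dataset.transform` and applies in `dataset.xy(row, col)`. The dependency-free sketch below shows the underlying arithmetic with a GDAL-ordered six-element geotransform (obtainable in rasterio via `dataset.transform.to_gdal()`) and takes the centre of each COCO-style `[x_min, y_min, width, height]` box as the clam's position. The transform values in the example are invented, and if the orthomosaic uses a projected CRS (e.g., UTM), the output is in eastings and northings, so a further reprojection (e.g., with pyproj) would be needed to obtain longitude and latitude.

```python
def bbox_centre(bbox):
    """Centre (col, row) of a COCO bbox [x_min, y_min, width, height] in pixels."""
    x_min, y_min, width, height = bbox
    return x_min + width / 2, y_min + height / 2


def pixel_to_map(col, row, geotransform):
    """Apply a GDAL-ordered geotransform to a (col, row) pixel position.

    geotransform = (x_origin, pixel_width, x_rotation,
                    y_origin, y_rotation, pixel_height)
    For north-up rasters the rotations are 0 and pixel_height is negative.
    `col`/`row` are continuous pixel coordinates measured from the raster's
    top-left corner, so no half-pixel offset is added here.
    """
    x0, pw, rx, y0, ry, ph = geotransform
    return x0 + col * pw + row * rx, y0 + col * ry + row * ph


# Hypothetical north-up orthomosaic with 1 cm pixels in a UTM-style CRS.
gt = (500000.0, 0.01, 0.0, 8000000.0, 0.0, -0.01)
col, row = bbox_centre([100, 200, 40, 60])
print(pixel_to_map(col, row, gt))  # approximately (500001.2, 7999997.7)
```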

To calculate the density of clams per square meter, we conducted a spatial join between a 1 × 1 m polygon fishnet and the point detections, counting the detections that fell within each cell.
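Outside a GIS, the same per-cell count can be approximated by binning detection points into a regular grid. This sketch assumes point coordinates in a projected CRS measured in metres (in longitude and latitude, a 1 × 1 cell would not cover one square metre) and anchors the grid at coordinate zero, whereas an ArcGIS fishnet is anchored at its chosen origin; the function name is hypothetical.

```python
import math
from collections import Counter


def counts_per_cell(points, cell_size=1.0):
    """Count points falling in each cell_size x cell_size grid cell.

    Returns {(i, j): count} for occupied cells only. With cell_size = 1 m,
    each count is directly a density in clams per square metre.
    """
    return dict(Counter(
        (math.floor(x / cell_size), math.floor(y / cell_size)) for x, y in points
    ))


detections = [(0.2, 0.3), (0.8, 0.9), (0.1, 0.99), (1.5, 0.5)]
density = counts_per_cell(detections)
print(density)  # {(0, 0): 3, (1, 0): 1}
print(max(density.values()))  # 3
```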

3. Results

3.1. Algorithm Development and Training

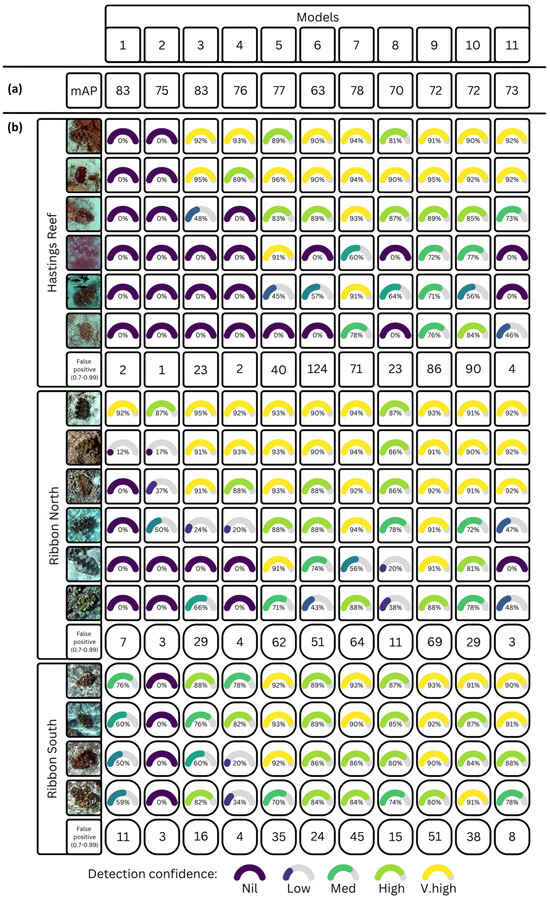

The mean average precision (mAP@50-95%) scores range from 63% (model six) to 83% (models one and three), with the remaining models between 70% and 77% (Figure 5a). However, while this metric is commonly used to report on algorithm effectiveness, it does not tell the complete story. When applying the algorithms to the three unseen datasets from Hastings and Ribbon Reefs, we see that detection ability, confidence scores, and false detections vary widely (Figure 5b). So, despite having the highest mAP score, model one failed to detect any clams on Hastings Reef and missed most of those on Ribbon North. Yet, if keeping the number of false positives low is the priority, model one remains strong (n = 20 false positives).

Figure 5.

(a) Mean average precision (mAP@50-95%) of the different models at Pioneer Bay; and (b) detection confidence scores on each clam in the unseen data at Hastings Reef, Ribbon North, and Ribbon South, including the count of false positives at each site where the confidence in detection was greater than 70%.

Model nine performs best, with high-confidence detections across Hastings and Ribbon Reefs. However, it also has the highest combined number of false detections (n = 206), meaning that it is likely to considerably overestimate the presence of clams. Yet, with only 600 labelled image tiles and no augmentations, it is a cost-efficient option. Model 11 shows more promising results, with very few false positive detections (n = 15); however, it struggles to detect some of the more distorted clams on Ribbon North and Hastings Reef. Model 10 detects all clams at the translation sites but, as with model nine, also produces a high number of false positives (n = 157).

3.2. Model Application

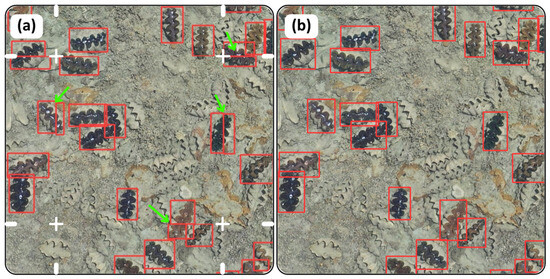

The SAHI library produced more accurate counts than tiling the orthomosaic and reconstructing the results, because with the tiling approach, clams that straddle more than one tile are counted more than once (Figure 6). By contrast, running SAHI on the orthomosaic yielded the correct number of detections, with no duplicates at tile boundaries. For example, Figure 6 shows 26 clams from orthomosaic 1 of the clam garden, but the tiling method over-counts them (n = 31) due to duplication.

Figure 6.

Comparison between (a) the results from the detection algorithm after reconstructing tiles; and (b) applying the SAHI script with the detection algorithm. The white lines on (a) represent where the tiles are sectioned, and the green arrows indicate clams that straddle tiles and are therefore counted twice. Note: Only live clams, identifiable by their colourful mantles, are of interest and counted. The other clams visible in the images are deceased, with only their calcium carbonate shells remaining.

While SAHI generated better results, we also compared processing speed between models 2, 4, 6, and 8, which ran on an 832 × 832-pixel sliding window, and the other models, which used a 640 × 640-pixel window. On average, across all models and all three orthomosaics, the 640 × 640-pixel window took 58% longer to run than the 832 × 832-pixel window, as it had to process on average 71% more tiles.
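The difference in tile count is close to what window geometry predicts. With a 0.3 overlap ratio, a window of side $w$ advances by a stride $s = w - \lfloor 0.3w \rfloor$, giving $s_{640} = 448$ and $s_{832} = 583$ pixels. Because the number of windows covering a given area scales with $1/s^2$, a back-of-the-envelope estimate that ignores edge windows is:

$$
\frac{N_{640}}{N_{832}} \approx \left(\frac{s_{832}}{s_{640}}\right)^{2} = \left(\frac{583}{448}\right)^{2} \approx 1.69
$$

that is, about 69% more windows for the smaller size, in line with the 71% average observed. The runtime increase (58%) is smaller than the increase in tiles, plausibly because each larger window costs more to process.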

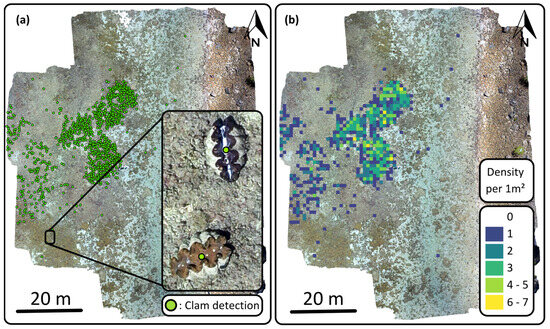

3.3. Geospatial Analysis

Once the different models were created and applied to large orthomosaic images, we successfully used the rasterio library to export the detections into ArcGIS Pro (Figure 7). The script placed each point at the centre of the detected object for greater accuracy. Using the 1 × 1 m fishnet, we found that clam density varied across the garden, reaching up to seven clams per square meter.

Figure 7.

Clam garden orthomosaic number one with (a) point detection results and (b) associated density map.

4. Discussion

4.1. Algorithm Development and Training

Our analysis of the 11 models highlights a range of efficiencies in identifying giant clams in unseen drone imagery. We found that with only 600 labelled image tiles, as in model nine, a model can achieve good adaptability to unseen data, whereas other configurations favour high precision in densely populated areas. However, while some models showed a clear advantage over others, relying on mean average precision (mAP) as the sole metric to compare their efficacy is flawed. It became evident in our study that mAP does not necessarily reflect the true utility of a model, especially given our aim of transferring the model to unseen data in a different geographic location. It is widely accepted that the mAP score is heavily influenced by the dataset’s difficulty, meaning a high mAP on an easy dataset does not necessarily mean the model will perform well on a challenging dataset [60,61,62]. Finding alternative methods to represent model results is crucial for determining their applicability to real-world scenarios. As demonstrated in our study, visual tabulation of detections offers valuable insights into a model’s performance beyond traditional metrics. This aids in determining which models are best suited for unseen applications, especially when the primary objective is adaptability.

While many studies delve deeper into enhancing a model’s adaptability [63,64,65,66,67,68], these often involve significant changes such as altered model architectures. Such complex modifications might be beyond the skillset of conservationists, whose primary aims are to census/monitor wildlife. While we also detail a complete workflow to detect and map features, sourcing and implementing the number of different packages used to achieve this goal is not straightforward. For less technical users, it is likely that it will remain a challenge to access and integrate each of these steps.

For studies tasked with manually surveying rare and sparse objects in extensive high-spatial-resolution imagery, such as bird nests in cities or whales in satellite imagery [69,70,71,72], and where the primary effort is spent detecting rather than counting, our method offers a viable solution. Searching for clams in our ‘translation’ (unseen) datasets was somewhat of a ‘needle in a haystack’ effort. Detecting other similarly hard-to-spot objects in extensive imagery therefore requires a flexible algorithm. Despite having limited training data and rudimentary model architectures, we demonstrated how to craft an adaptable algorithm to fit this purpose. However, this flexibility comes at the cost of a potential surge in false positives, so a subsequent filtering step might be necessary.
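One simple form of such a filtering step is to discard low-confidence detections before counting. The sketch below assumes COCO-style prediction records with a `score` field, as exported by SAHI; the 0.7 cut-off mirrors the threshold used when tallying false positives in Figure 5 but is otherwise an arbitrary choice, and raising it trades fewer false positives for more missed clams. The bounding boxes in the example are invented.

```python
def filter_by_score(detections, min_score=0.7):
    """Split detections into (kept, rejected) by confidence score."""
    kept = [d for d in detections if d["score"] >= min_score]
    rejected = [d for d in detections if d["score"] < min_score]
    return kept, rejected


predictions = [
    {"bbox": [10, 10, 40, 40], "score": 0.92},
    {"bbox": [300, 120, 35, 30], "score": 0.41},
    {"bbox": [510, 600, 50, 45], "score": 0.75},
]
kept, rejected = filter_by_score(predictions)
print(len(kept), len(rejected))  # 2 1
```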

The workflow presented in this study holds value for future studies focused on species or objects that are uniform in appearance, like clams. It is also particularly helpful that these animals are not mobile. In studies that involve counting large congregations of wildlife or objects that have similar attributes and cluster densely [19,73], our method offers speed and precision without demanding vast adaptability. In essence, our research bridges the gap between intricate AI advancements and practical conservation needs, offering tools that are both effective and accessible to those in the field.

4.2. Model Application

The SAHI library was effective in applying our models to orthomosaics without losing detail or multiplying the count of objects that straddle more than one tile. Previous studies have used methods such as counting tile averages to circumvent this issue [30,74,75], making the process more complicated and subject to error. With our approach, we show that the process can be both simpler and more accurate. Earlier works also used methods similar to SAHI but had to run their own custom scripts to apply the algorithms and then run non-maximum suppression [43,45]. Using YOLOv5 with SAHI makes the process smoother and more user-friendly. Bomantara et al. [76] used this combination for detecting artificial seed-like objects in UAV imagery but concluded their research upon confirming a functional algorithm. We have taken this approach to the next stage by providing the steps required to extract and visualize detected objects within a GIS environment for further analysis.

4.3. Geospatial Analysis

This research advanced beyond merely detecting objects by exporting these detections and integrating them into a GIS. Historically, some studies have opted for alternate strategies to generate density maps from orthomosaics [43,77]. For instance, Li et al. [30] detected sea cucumbers in discrete drone images, then manually tallied these detections. They subsequently georeferenced the individual images, crafting density maps in ArcGIS that showcased sea cucumber distribution, avoiding the use of an orthomosaic. While the approach of these authors provides a foundational method, our study offers a finer and more detailed automated analysis. Varga’s [45] study on beach footprints was able to generate density maps from drone detections, but this involved using custom scripts for their data. Our workflow simplifies this process with readily available libraries. We have illustrated a pathway for conservationists: acquiring data, developing and testing algorithms, and applying them in practical scenarios, culminating in readily interpretable results.

Our method offers more than just a structured workflow for data collection and analysis; it also successfully crafts an algorithm to detect giant clams. Traditional studies often rely on divers conducting transect counts [11,12,78], but with our approach, data collection could be more efficient and comprehensive over time, provided the conditions are appropriate for data capture. For optimal data capture, calm and clear oceanic conditions are essential, preferably at low tide while avoiding the period of high sunglint in the middle of the day [79]. Turbidity and rough water, which hinder visibility, are common limitations when deploying drones in marine settings. Some of these challenges can also be overcome by deploying remotely operated surface and underwater vehicles to capture the data, which could then be processed using the method described above.

4.4. Future Work

Our presented workflow offers a preliminary structure for conservationists aiming to enhance their analytical efficiency using drone mapping data. However, there are areas that warrant further research.

(1) A key area to consider is the model’s adaptability. Specifically, there is a need to address the high number of false positives from adaptable models. Our tests with null imagery (images containing no clams) reduced these false positives, but also led to some clams being missed. Exploring different data augmentations and adjusted sets of null imagery may help achieve a better balance between these outcomes. Future research might also explore workflows that make domain adaptation techniques easier to apply, such as modifying select hyperparameters to bolster transfer learning, as highlighted in studies like the DAYOLOv5 approach for industrial defect inspection [65].

(2) In this study, we used drone mapping data that were readily available and had been captured by other people for their own purposes, unrelated to our work. This means that we did not test or evaluate optimal capture parameters for our feature of interest. In particular, the flight altitude greatly affects the ground sample distance (GSD), or spatial detail, of the resulting data. As a compromise between capturing broad areas efficiently and retaining the spatial detail required for the detection model to work, it would be valuable to determine the altitude threshold beyond which the algorithm loses its detection capability. This could be achieved with a desktop study by systematically degrading or resampling the orthomosaics to coarser (larger) GSDs to simulate higher flight altitudes. Studies could further consider tiles of varied sizes, beyond the two tile sizes we explored, providing insights into the trade-offs between algorithm speed and accuracy.

(3) If the size, shape, or orientation of the object to be detected is important, moving from bounding boxes to instance segmentation will be required. This would also allow detections to be converted into georeferenced polygons, enabling further spatial analyses using GIS, and is particularly helpful for estimating overall coverage of detected features, or dimensions of individuals.

(4) Now that we have illustrated a complete workflow that addresses detecting features in an orthomosaic and further analysing them in a GIS, we encourage further studies to refine and simplify this process for non-technically trained analysts. We recognise that the number of libraries and software packages to source and implement may be outside the expertise of many who would otherwise find this workflow useful. Having a single tool that enables users to detect and map in this way will be a welcome improvement.

5. Conclusions

Our study tackled the challenge of analysing large drone orthomosaics to map giant clams on the Great Barrier Reef. We developed and evaluated 11 YOLOv5 models trained with varying numbers of labelled image tiles and augmentations, and determined that a minimum of 600 labelled tiles was required to detect giant clams in unseen data. We achieved higher accuracies when using the SAHI library to detect clams across an entire orthomosaic rather than in discrete tiles, because tile-by-tile detection tended to duplicate counts of clams that straddled multiple tiles. We successfully applied our model to unseen data from two different coral reefs and accurately detected the clams in those locations. With an effective way to detect clams across broad areas, we extended the workflow to derive geographically referenced information for each clam detection for further analysis in a GIS. This allowed us to visualize and quantify giant clam densities reaching up to seven individuals per square meter at Pioneer Bay on Goolboddi (Orpheus) Island. This study focused on providing an end-to-end workflow for analysing drone data to count and map giant clams, linking together a range of tools, software packages, and libraries to achieve this goal. Our workflow provides a blueprint for more efficient and accurate wildlife monitoring using drone data and artificial intelligence, with broader potential applications in conservation.
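The slicing-and-merging step that removed duplicate counts can be illustrated without SAHI itself. The sketch below is a simplified stand-in, not SAHI's implementation (SAHI's `get_sliced_prediction` handles this internally with its own configurable postprocessing). It tiles an image with overlapping windows, shifts each tile's boxes into orthomosaic pixel coordinates, and greedily merges boxes that overlap strongly. Overlap is measured as intersection over the smaller box (IoS), because a clam cut by a tile edge yields a truncated box whose IoU with the full box can be low. Tile size, overlap ratio, threshold, and the example boxes are all hypothetical.

```python
def slice_windows(width, height, tile=640, overlap_ratio=0.2):
    """Overlapping (x0, y0, x1, y1) windows covering a width x height image."""
    step = max(1, int(tile * (1 - overlap_ratio)))

    def starts(length):
        if length <= tile:
            return [0]
        # Regular steps, plus one final window flush with the far edge.
        return list(range(0, length - tile, step)) + [length - tile]

    return [(x, y, min(x + tile, width), min(y + tile, height))
            for y in starts(height) for x in starts(width)]


def ios(a, b):
    """Intersection area divided by the area of the smaller box."""
    ix = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    smaller = min((a[2] - a[0]) * (a[3] - a[1]), (b[2] - b[0]) * (b[3] - b[1]))
    return ix * iy / smaller if smaller > 0 else 0.0


def merge_tile_detections(tile_results, match_threshold=0.5):
    """Shift per-tile boxes to global pixel coordinates and merge duplicates.

    tile_results: list of ((x_offset, y_offset), [(x0, y0, x1, y1, score), ...]).
    Returns merged (x0, y0, x1, y1, score) boxes, highest score first.
    """
    boxes = [(x0 + ox, y0 + oy, x1 + ox, y1 + oy, s)
             for (ox, oy), dets in tile_results
             for x0, y0, x1, y1, s in dets]
    boxes.sort(key=lambda b: b[4], reverse=True)
    merged = []
    for box in boxes:
        for i, kept in enumerate(merged):
            if ios(box, kept) >= match_threshold:
                # Same clam seen in two tiles: grow the kept box to cover both.
                merged[i] = (min(kept[0], box[0]), min(kept[1], box[1]),
                             max(kept[2], box[2]), max(kept[3], box[3]), kept[4])
                break
        else:
            merged.append(box)
    return merged


windows = slice_windows(1000, 640)  # two 640 px tiles overlapping by 280 px
tile_results = [((0, 0), [(600, 100, 640, 150, 0.9)]),      # clam cut by tile 1's edge
                ((360, 0), [(240, 100, 290, 150, 0.8),      # same clam, whole, in tile 2
                            (500, 300, 540, 340, 0.7)])]    # a second clam
merged = merge_tile_detections(tile_results)
```

Growing the kept box to the union, rather than discarding the lower-scoring duplicate, preserves the full extent of a clam split across tiles. The cost is that in dense patches two genuinely adjacent clams with overlapping boxes could be fused, so the match threshold deserves tuning against labelled data.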

Author Contributions

Conceptualization, O.D. and K.E.J.; methodology, O.D. and K.E.J.; data processing, O.D.; formal analysis, O.D.; writing—original draft preparation, O.D.; writing—review and editing, K.E.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

All data used for this research are publicly available at www.geonadir.com (see the reference list for details). The labelled data are publicly available on www.roboflow.com: https://universe.roboflow.com/clams/detecting-giant-clams-from-drone-imagery-on-the-great-barrier-reef, accessed on 10 August 2024. Requests for the specific code used in this study can be directed to the authors.

Acknowledgments

We thank Joan Li for technical support, and those who generously make their drone mapping data findable, accessible, interoperable, and reusable (FAIR) on GeoNadir.

Conflicts of Interest

K.E.J. is the co-founder of GeoNadir.

References

- Watson, S.A.; Neo, M.L. Conserving threatened species during rapid environmental change: Using biological responses to inform management strategies of giant clams. Conserv. Physiol. 2021, 9, coab082.

- Neo, M.L.; Eckman, W.; Vicentuan, K.; Teo, S.L.-M.; Todd, P.A. The ecological significance of giant clams in coral reef ecosystems. Biol. Conserv. 2015, 181, 111–123.

- Neo, M.L.; Wabnitz, C.C.C.; Braley, R.D.; Heslinga, G.A.; Fauvelot, C.; Van Wynsberge, S.; Andréfouët, S.; Waters, C.; Tan, A.S.-H.; Gomez, E.D.; et al. Giant Clams (Bivalvia: Cardiidae: Tridacninae): A Comprehensive Update of Species and Their Distribution, Current Threats and Conservation Status. Oceanogr. Mar. Biol. Annu. Rev. 2017, 55, 2–303.

- Mallela, J.; Perry, C. Calcium carbonate budgets for two coral reefs affected by different terrestrial runoff regimes, Rio Bueno, Jamaica. Coral Reefs 2007, 26, 129–145.

- Rossbach, S.; Anton, A.; Duarte, C.M. Drivers of the abundance of Tridacna spp. Giant clams in the red sea. Front. Mar. Sci. 2021, 7, 592852.

- Calumpong, H.P. The Giant Clam: An Ocean Culture Manual; Australian Centre for International Agricultural Research: Canberra, Australia, 1992.

- Govan, H.; Fabro, L.; Ropeti, E. Controlling Predators of Cultured Tridacnid Clams; ACIAR: Canberra, Australia, 1993.

- Klumpp, D.; Griffiths, C. Contributions of phototrophic and heterotrophic nutrition to the metabolic and growth requirements of four species of giant clam (Tridacnidae). Mar. Ecol. Prog. Ser. 1994, 115, 103–115.

- Neo, M.L.; Todd, P.A. Conservation status reassessment of giant clams (Mollusca: Bivalvia: Tridacninae) in Singapore. Nat. Singap. 2013, 6, 125–133.

- Moore, D. Farming Giant Clams in 2021: A Great Future for the ‘Blue Economy’ of Tropical Islands. In Aquaculture: Ocean Blue Carbon Meets UN-SDGS; Springer: Berlin/Heidelberg, Germany, 2022; pp. 131–153.

- Andréfouët, S.; Friedman, K.; Gilbert, A.; Remoissenet, G. A comparison of two surveys of invertebrates at Pacific Ocean islands: The giant clam at Raivavae Island, Australes Archipelago, French Polynesia. ICES J. Mar. Sci. 2009, 66, 1825–1836.

- Andréfouët, S.; Gilbert, A.; Yan, L.; Remoissenet, G.; Payri, C.; Chancerelle, Y. The remarkable population size of the endangered clam Tridacna maxima assessed in Fangatau Atoll (Eastern Tuamotu, French Polynesia) using in situ and remote sensing data. ICES J. Mar. Sci. 2005, 62, 1037–1048.

- Friedman, K.; Teitelbaum, A. Re-Introduction of Giant Clams in the Indo-Pacific. Global Reintroduction Perspectives: Re-Introduction Case-Studies from around the Globe; Soorae, P., Ed.; IUCN/SSC Re-Introduction Specialist Group: Abu Dhabi, United Arab Emirates, 2008; pp. 4–10.

- Gomez, E.D.; Mingoa-Licuanan, S.S. Achievements and lessons learned in restocking giant clams in the Philippines. Fish. Res. 2006, 80, 46–52.

- Naguit, M.R.A.; Rehatta, B.M.; Calumpong, H.P.; Tisera, W.L. Ecology and genetic structure of giant clams around Savu Sea, East Nusa Tenggara province, Indonesia. Asian J. Biodivers. 2013, 3, 174–194.

- Ramah, S.; Taleb-Hossenkhan, N.; Todd, P.A.; Neo, M.L.; Bhagooli, R. Drastic decline in giant clams (Bivalvia: Tridacninae) around Mauritius Island, Western Indian Ocean: Implications for conservation and management. Mar. Biodivers. 2019, 49, 815–823.

- Joyce, K.E.; Anderson, K.; Bartolo, R.E. Of Course We Fly Unmanned—We’re Women! Drones 2021, 5, 21.

- Chabot, D.; Dillon, C.; Ahmed, O.; Shemrock, A. Object-based analysis of UAS imagery to map emergent and submerged invasive aquatic vegetation: A case study. J. Unmanned Veh. Syst. 2016, 5, 27–33.

- Drever, M.C.; Chabot, D.; O’Hara, P.D.; Thomas, J.D.; Breault, A.; Millikin, R.L. Evaluation of an unmanned rotorcraft to monitor wintering waterbirds and coastal habitats in British Columbia, Canada. J. Unmanned Veh. Syst. 2015, 3, 256–267.

- Kelaher, B.P.; Peddemors, V.M.; Hoade, B.; Colefax, A.P.; Butcher, P.A. Comparison of sampling precision for nearshore marine wildlife using unmanned and manned aerial surveys. J. Unmanned Veh. Syst. 2019, 8, 30–43.

- Pomeroy, P.; O’connor, L.; Davies, P. Assessing use of and reaction to unmanned aerial systems in gray and harbor seals during breeding and molt in the UK. J. Unmanned Veh. Syst. 2015, 3, 102–113.

- Oleksyn, S.; Tosetto, L.; Raoult, V.; Joyce, K.E.; Williamson, J.E. Going Batty: The Challenges and Opportunities of Using Drones to Monitor the Behaviour and Habitat Use of Rays. Drones 2021, 5, 12.

- Williamson, J.E.; Duce, S.; Joyce, K.E.; Raoult, V. Putting sea cucumbers on the map: Projected holothurian bioturbation rates on a coral reef scale. Coral Reefs 2021, 40, 559–569.

- Joyce, K.E.; Fickas, K.C.; Kalamandeen, M. The unique value proposition for using drones to map coastal ecosystems. Camb. Prism. Coast. Futures 2023, 1, e6.

- Badawy, M.; Direkoglu, C. Sea turtle detection using faster r-cnn for conservation purpose. In Proceedings of the 10th International Conference on Theory and Application of Soft Computing, Computing with Words and Perceptions-ICSCCW-2019, Prague, Czech Republic, 27–28 August 2019; pp. 535–541.

- Dujon, A.M.; Ierodiaconou, D.; Geeson, J.J.; Arnould, J.P.; Allan, B.M.; Katselidis, K.A.; Schofield, G. Machine learning to detect marine animals in UAV imagery: Effect of morphology, spacing, behaviour and habitat. Remote Sens. Ecol. Conserv. 2021, 7, 341–354.

- Gray, P.C.; Chamorro, D.F.; Ridge, J.T.; Kerner, H.R.; Ury, E.A.; Johnston, D.W. Temporally Generalizable Land Cover Classification: A Recurrent Convolutional Neural Network Unveils Major Coastal Change through Time. Remote Sens. 2021, 13, 3953.

- Gray, P.C.; Fleishman, A.B.; Klein, D.J.; McKown, M.W.; Bezy, V.S.; Lohmann, K.J.; Johnston, D.W. A convolutional neural network for detecting sea turtles in drone imagery. Methods Ecol. Evol. 2019, 10, 345–355.

- Hopkinson, B.M.; King, A.C.; Owen, D.P.; Johnson-Roberson, M.; Long, M.H.; Bhandarkar, S.M. Automated classification of three-dimensional reconstructions of coral reefs using convolutional neural networks. PLoS ONE 2020, 15, e0230671.

- Li, J.Y.; Duce, S.; Joyce, K.E.; Xiang, W. SeeCucumbers: Using Deep Learning and Drone Imagery to Detect Sea Cucumbers on Coral Reef Flats. Drones 2021, 5, 28.

- Saqib, M.; Khan, S.D.; Sharma, N.; Scully-Power, P.; Butcher, P.; Colefax, A.; Blumenstein, M. Real-time drone surveillance and population estimation of marine animals from aerial imagery. In Proceedings of the 2018 International Conference on Image and Vision Computing New Zealand (IVCNZ), Auckland, New Zealand, 19–21 November 2018; pp. 1–6.

- Harasyn, M.L.; Chan, W.S.; Ausen, E.L.; Barber, D.G. Detection and tracking of belugas, kayaks and motorized boats in drone video using deep learning. Drone Syst. Appl. 2022, 10, 77–96.

- Barbedo, J.G.A.; Koenigkan, L.V.; Santos, T.T.; Santos, P.M. A study on the detection of cattle in UAV images using deep learning. Sensors 2019, 19, 5436.

- Borowicz, A.; Le, H.; Humphries, G.; Nehls, G.; Höschle, C.; Kosarev, V.; Lynch, H.J. Aerial-trained deep learning networks for surveying cetaceans from satellite imagery. PLoS ONE 2019, 14, e0212532.

- Green, K.M.; Virdee, M.K.; Cubaynes, H.C.; Aviles-Rivero, A.I.; Fretwell, P.T.; Gray, P.C.; Johnston, D.W.; Schönlieb, C.B.; Torres, L.G.; Jackson, J.A. Gray whale detection in satellite imagery using deep learning. Remote Sens. Ecol. Conserv. 2023, 9, 829–840.

- Nategh, M.N.; Zgaren, A.; Bouachir, W.; Bouguila, N. Automatic counting of mounds on UAV images: Combining instance segmentation and patch-level correction. In Proceedings of the 2022 21st IEEE International Conference on Machine Learning and Applications (ICMLA), Nassau, Bahamas, 12–14 December 2022; pp. 375–381.

- Psiroukis, V.; Espejo-Garcia, B.; Chitos, A.; Dedousis, A.; Karantzalos, K.; Fountas, S. Assessment of different object detectors for the maturity level classification of broccoli crops using uav imagery. Remote Sens. 2022, 14, 731.

- Puliti, S.; Astrup, R. Automatic detection of snow breakage at single tree level using YOLOv5 applied to UAV imagery. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102946.

- Veeranampalayam Sivakumar, A.N. Mid to Late Season Weed Detection in Soybean Production Fields Using Unmanned Aerial Vehicle and Machine Learning; University of Nebraska: Lincoln, NE, USA, 2019.

- Yildirim, E.; Nazar, M.; Sefercik, U.G.; Kavzoglu, T. Stone Pine (Pinus pinea L.) Detection from High-Resolution UAV Imagery Using Deep Learning Model. In Proceedings of the IGARSS 2022–2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 441–444.

- Hosang, J.; Benenson, R.; Schiele, B. Learning non-maximum suppression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4507–4515.

- Van Etten, A. You only look twice: Rapid multi-scale object detection in satellite imagery. arXiv 2018, arXiv:1805.09512.

- Hofinger, P.; Klemmt, H.-J.; Ecke, S.; Rogg, S.; Dempewolf, J. Application of YOLOv5 for Point Label Based Object Detection of Black Pine Trees with Vitality Losses in UAV Data. Remote Sens. 2023, 15, 1964.

- Akyon, F.C.; Altinuc, S.O.; Temizel, A. Slicing aided hyper inference and fine-tuning for small object detection. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 966–970.

- Varga, L. Identifying Anthropogenic Pressure on Beach Vegetation by Means of Detecting and Counting Footsteps on UAV Images. Master’s Thesis, Utrecht University, Utrecht, The Netherlands, 2023.

- Lucas, J.; Lindsay, S.; Braley, R.; Whitford, J. Density of clams and depth reduce growth in grow-out culture of Tridacna gigas. In ACIAR Proceedings: Proceedings of the 7th International Coral Reef Symposium, Guam, Micronesia, 21–26 June 1992; Australian Centre for International Agricultural Research: Canberra, Australia, 1993; p. 67.

- Moorhead, A. Giant clam aquaculture in the Pacific region: Perceptions of value and impact. Dev. Pract. 2018, 28, 624–635.

- Graham, E. Orpheus Clam Farm 2022. Available online: https://data.geonadir.com/image-collection-details/1495?workspace=37b63ceb-e6c1-45d2-afa9-0e6dabc03a49-8369 (accessed on 15 August 2024).

- Bektas, T. Pioneer Bay, Orpheus Island Clam Gardens Section. Available online: https://data.geonadir.com/image-collection-details/963?workspace=37b63ceb-e6c1-45d2-afa9-0e6dabc03a49-8369 (accessed on 15 August 2024).

- Bektas, T. Pioneer Bay Clam Gardens. Available online: https://data.geonadir.com/image-collection-details/964?workspace=37b63ceb-e6c1-45d2-afa9-0e6dabc03a49-8369 (accessed on 15 August 2024).

- Bektas, T. Clam Gardens/Coastal Substrate. Available online: https://data.geonadir.com/image-collection-details/652?workspace=37b63ceb-e6c1-45d2-afa9-0e6dabc03a49-8369 (accessed on 15 August 2024).

- Joyce, K.E.; Li, J.Y. Ribbon 5 North Oct 2021. Available online: https://data.geonadir.com/image-collection-details/457?workspace=37b63ceb-e6c1-45d2-afa9-0e6dabc03a49-8369 (accessed on 15 August 2024).

- Joyce, K.E.; Li, J.Y. Ribbon 5 Middle Oct 2021. Available online: https://data.geonadir.com/image-collection-details/456?workspace=37b63ceb-e6c1-45d2-afa9-0e6dabc03a49-8369 (accessed on 15 August 2024).

- Joyce, K.E.; Li, J.Y. West Hastings Reef Flat. Available online: https://data.geonadir.com/image-collection-details/1560?workspace=37b63ceb-e6c1-45d2-afa9-0e6dabc03a49-8369 (accessed on 15 August 2024).

- Clark, J.A. Pillow (PIL Fork) 10.4.0 Documentation. Available online: https://pillow.readthedocs.io/en/stable/ (accessed on 15 August 2024).

- Ozge Unel, F.; Ozkalayci, B.O.; Cigla, C. The power of tiling for small object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Kaur, P.; Khehra, B.S.; Mavi, E.B.S. Data Augmentation for Object Detection: A Review. In Proceedings of the 2021 IEEE International Midwest Symposium on Circuits and Systems (MWSCAS), Lansing, MI, USA, 9–11 August 2021; pp. 537–543.

- Suto, J. Improving the generalization capability of YOLOv5 on remote sensed insect trap images with data augmentation. Multimed. Tools Appl. 2024, 83, 27921–27934.

- Norouzzadeh, M.S.; Nguyen, A.; Kosmala, M.; Swanson, A.; Palmer, M.S.; Packer, C.; Clune, J. Automatically identifying, counting, and describing wild animals in camera-trap images with deep learning. Proc. Natl. Acad. Sci. USA 2018, 115, E5716–E5725.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Reinke, A.; Tizabi, M.D.; Baumgartner, M.; Eisenmann, M.; Heckmann-Nötzel, D.; Kavur, A.E.; Rädsch, T.; Sudre, C.H.; Acion, L.; Antonelli, M. Understanding metric-related pitfalls in image analysis validation. arXiv 2023, arXiv:2302.01790.

- Reinke, A.; Tizabi, M.D.; Sudre, C.H.; Eisenmann, M.; Rädsch, T.; Baumgartner, M.; Acion, L.; Antonelli, M.; Arbel, T.; Bakas, S. Common limitations of image processing metrics: A picture story. arXiv 2021, arXiv:2104.05642.

- Zhou, H.; Jiang, F.; Lu, H. SSDA-YOLO: Semi-supervised domain adaptive YOLO for cross-domain object detection. Comput. Vis. Image Underst. 2023, 229, 103649.

- Zhao, T.; Zhang, G.; Zhong, P.; Shen, Z. DMDnet: A decoupled multi-scale discriminant model for cross-domain fish detection. Biosyst. Eng. 2023, 234, 32–45.

- Li, C.; Yan, H.; Qian, X.; Zhu, S.; Zhu, P.; Liao, C.; Tian, H.; Li, X.; Wang, X.; Li, X. A domain adaptation YOLOv5 model for industrial defect inspection. Measurement 2023, 213, 112725.

- Lai, J.; Liang, Y.; Kuang, Y.; Xie, Z.; He, H.; Zhuo, Y.; Huang, Z.; Zhu, S.; Huang, Z. IO-YOLOv5: Improved Pig Detection under Various Illuminations and Heavy Occlusion. Agriculture 2023, 13, 1349.

- Kim, J.; Huh, J.; Park, I.; Bak, J.; Kim, D.; Lee, S. Small object detection in infrared images: Learning from imbalanced cross-domain data via domain adaptation. Appl. Sci. 2022, 12, 11201.

- Gheisari, M.; Baghshah, M.S. Joint predictive model and representation learning for visual domain adaptation. Eng. Appl. Artif. Intell. 2017, 58, 157–170.

- Blight, L.K.; Bertram, D.F.; Kroc, E. Evaluating UAV-based techniques to census an urban-nesting gull population on Canada’s Pacific coast. J. Unmanned Veh. Syst. 2019, 7, 312–324.

- Charry, B.; Tissier, E.; Iacozza, J.; Marcoux, M.; Watt, C.A. Mapping Arctic cetaceans from space: A case study for beluga and narwhal. PLoS ONE 2021, 16, e0254380.

- Oosthuizen, W.C.; Krüger, L.; Jouanneau, W.; Lowther, A.D. Unmanned aerial vehicle (UAV) survey of the Antarctic shag (Leucocarbo bransfieldensis) breeding colony at Harmony Point, Nelson Island, South Shetland Islands. Polar Biol. 2020, 43, 187–191.

- Cubaynes, H.C.; Fretwell, P.T.; Bamford, C.; Gerrish, L.; Jackson, J.A. Whales from space: Four mysticete species described using new VHR satellite imagery. Mar. Mammal Sci. 2019, 35, 466–491.

- Hodgson, J.C.; Baylis, S.M.; Mott, R.; Herrod, A.; Clarke, R.H. Precision wildlife monitoring using unmanned aerial vehicles. Sci. Rep. 2016, 6, 22574.

- Zhang, L.; Zhou, X.; Li, B.; Zhang, H.; Duan, Q. Automatic shrimp counting method using local images and lightweight YOLOv4. Biosyst. Eng. 2022, 220, 39–54.

- Lyons, M.B.; Brandis, K.J.; Murray, N.J.; Wilshire, J.H.; McCann, J.A.; Kingsford, R.T.; Callaghan, C.T. Monitoring large and complex wildlife aggregations with drones. Methods Ecol. Evol. 2019, 10, 1024–1035.

- Bomantara, Y.A.; Mustafa, H.; Bartholomeus, H.; Kooistra, L. Detection of Artificial Seed-like Objects from UAV Imagery. Remote Sens. 2023, 15, 1637.

- Natesan, S.; Armenakis, C.; Vepakomma, U. Individual tree species identification using Dense Convolutional Network (DenseNet) on multitemporal RGB images from UAV. J. Unmanned Veh. Syst. 2020, 8, 310–333.

- Braley, R.D. A population study of giant clams (Tridacninae) on the Great Barrier Reef over three-decades. Molluscan Res. 2023, 43, 77–95.

- Joyce, K.; Duce, S.; Leahy, S.; Leon, J.; Maier, S. Principles and practice of acquiring drone-based image data in marine environments. Mar. Freshw. Res. 2018, 70, 952–963.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).