1. Introduction

With the increasing demand for electricity, there has been a surge in the construction of overhead transmission lines [1,2,3], which makes their protection an important task [4,5,6]. As the network expands, incidents involving foreign objects such as bird nests and kites coming into contact with these lines have also been increasing [7,8,9]. Foreign objects on power lines pose a significant risk of causing tripping incidents, which can severely impact the secure and stable operation of the power grid [10,11,12]. Timely automatic detection of foreign objects can provide useful information for managers and maintenance workers to handle these hidden hazards.

Traditional methods rely on hand-crafted features. Tiand et al. [13] proposed an insulator detection method based on feature fusion and a Support Vector Machine (SVM) classifier. However, its reliance on manually designed and selected features restricts its generalization capability and detection accuracy. Song et al. [14] introduced a local contour detection method. Although it improves detection speed, it does not consider the physical relationship between power lines and foreign objects, which may limit its overall performance in practical applications. In summary, hand-crafted feature-based methods are easy to implement and perform well in detecting foreign objects on transmission lines in simple scenarios, but they exhibit limitations in complex scenarios.

Currently, AI-based methods are being applied to object detection with unmanned aerial vehicles [15,16,17,18,19,20]. They can automatically learn features and identify the positions of foreign objects. AI-based drone inspection has become an effective means of recognizing foreign objects on transmission lines [21,22,23]. Researchers around the globe have proposed several methods for detecting foreign objects [24,25,26]. Depending on the number of detection stages, these methods can be categorized into end-to-end detectors and multi-stage detectors.

End-to-End Detectors. Li et al. [27] proposed a lightweight model that leverages YOLOv3, MobileNetV2, and depthwise separable convolutions. They considered various foreign objects such as smoke and fire. The method achieves a smaller model size and higher detection speed than existing models. However, its detection accuracy needs improvement, especially for small-sized objects. Wu et al. [28] introduced an improved YOLOX technique for foreign object detection on transmission lines. It enhances recognition accuracy by embedding the convolutional block attention module and optimizes bounding-box regression with the Generalized Intersection over Union (GIoU) loss. The results show that the enhanced YOLOX improves the mean average precision (mAP) by 4.24%. However, the lack of a detailed classification of foreign objects hinders the implementation of corresponding removal measures. Wang et al. [29] proposed an improved model based on YOLOv8m. They introduced a global attention module to focus on obstructed foreign objects and replaced the Spatial Pyramid Pooling Fast (SPPF) module with the Spatial Pyramid Pooling and Cross Stage Partial Connection (SPPCSPC) module to augment the model’s multi-scale feature extraction capability. Ji et al. [30] designed a Multi-Fusion Mixed Attention Module-YOLO (MFMAM-YOLO) to efficiently identify foreign objects on transmission lines in complex environments, providing a valuable tool for maintaining the integrity of transmission lines. Yang et al. [31] developed a foreign object recognition algorithm based on the Denoising Convolutional Neural Network (DnCNN) and YOLOv8. They considered various types of foreign objects such as bird nests, kites, and balloons, and achieved an mAP of 82.9%. However, there is significant room for improvement in detection performance, and the diversity of categories in the dataset needs to be enriched.
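The GIoU loss used by Wu et al. [28] can be illustrated with a small example. Following the standard definition, GIoU subtracts from the IoU the fraction of the smallest enclosing box C that is not covered by the union of the two boxes, GIoU = IoU - |C \ (A ∪ B)| / |C|, and the regression loss is 1 - GIoU. The minimal Python sketch below assumes axis-aligned boxes given as (x1, y1, x2, y2) with positive area; the function names are illustrative and are not taken from [28] or from the YOLOX code base.

```python
def giou(box_a, box_b):
    """Generalized IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    if area_a <= 0 or area_b <= 0:
        raise ValueError("boxes must have positive area")

    # Intersection rectangle, clamped to zero when the boxes do not overlap.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = area_a + area_b - inter
    iou = inter / union

    # Area of the smallest axis-aligned box C enclosing both inputs.
    enclose = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))

    # GIoU = IoU - |C \ (A u B)| / |C|; the result lies in (-1, 1].
    return iou - (enclose - union) / enclose


def giou_loss(pred, target):
    """Regression loss 1 - GIoU; unlike 1 - IoU it still varies for disjoint boxes."""
    return 1.0 - giou(pred, target)


# Identical boxes give zero loss; disjoint boxes still receive a graded penalty.
print(giou_loss((0, 0, 1, 1), (0, 0, 1, 1)))
print(giou_loss((0, 0, 1, 1), (2, 0, 3, 1)))
```

For disjoint boxes plain IoU is zero regardless of distance, whereas the enclosing-box term grows as the boxes move apart, which is why GIoU gives a useful gradient early in training.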

Multi-Stage Detectors. Guo et al. [32] first constructed a dataset of transmission line images and then used the Faster Region-based Convolutional Neural Network (Faster R-CNN) to detect foreign objects such as fallen objects, kites, and balloons. Compared with end-to-end detection, it can handle foreign objects of various shapes and sizes, demonstrating superior versatility and adaptability. Chen et al. [33] employed Mask R-CNN for detecting foreign objects on transmission lines, and the method showed strong performance in speed, efficiency, and precision. Dong et al. [34] explored the detection of overhead transmission lines in aerial images captured by Unmanned Aerial Vehicles (UAVs). The proposed method combines a shifted window and a balanced feature pyramid with Cascade R-CNN to enhance feature representation capability. It improves mAP50, small-object mAP, and medium-object mAP over the baseline by 7.8%, 11.8%, and 5.5%, respectively.
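Several of the results above are reported as mAP50, i.e., the average precision (AP) of each class at an IoU threshold of 0.5, averaged over classes. As an illustration, the sketch below computes a PASCAL VOC-style, all-point interpolated AP for one class: detections are ranked by confidence, greedily matched to unused ground-truth boxes, and the monotone precision envelope is integrated over recall. This is a simplified assumption, not the evaluation code of the cited works; toolkits differ in details (COCO, for example, samples 101 recall points), so numbers from different tools are not directly comparable. All function names are hypothetical.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_thr=0.5):
    """All-point interpolated AP for ONE class.

    detections:   list of (image_id, score, box)
    ground_truth: dict mapping image_id -> list of boxes
    """
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}

    # Match detections, highest score first, to the best-overlapping ground-truth box.
    hits = []
    for img, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_thr and not used[img][best_j]:
            used[img][best_j] = True
            hits.append(1)  # true positive
        else:
            hits.append(0)  # false positive: too little overlap, or box already matched

    # Cumulative precision and recall along the ranked list.
    recall, precision, tp = [], [], 0
    for rank, hit in enumerate(hits, start=1):
        tp += hit
        recall.append(tp / n_gt)
        precision.append(tp / rank)

    # Make precision non-increasing from right to left, then integrate over recall.
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


def map50(per_class):
    """Mean of per-class AP at IoU 0.5; per_class maps class -> (detections, ground_truth)."""
    aps = [average_precision(dets, gts, 0.5) for dets, gts in per_class.values()]
    return sum(aps) / len(aps)


# Two ground-truth boxes; a false positive is ranked between the two correct hits.
gt = {"img1": [(0, 0, 10, 10), (20, 20, 30, 30)]}
dets = [("img1", 0.9, (0, 0, 10, 10)), ("img1", 0.8, (50, 50, 60, 60)),
        ("img1", 0.7, (20, 20, 30, 30))]
print(average_precision(dets, gt))
```

In this toy case the false positive at rank 2 lowers precision before the second box is recalled, so AP ends up below 1 even though every object is eventually found.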

Both end-to-end and multi-stage algorithms obtain good results on their respective datasets. Multi-stage detection algorithms demonstrate clear advantages in detection accuracy, but they require substantial computational resources and detection time. In contrast, end-to-end detectors use lighter models and aim to achieve a trade-off between detection accuracy and speed. Our work belongs to the category of end-to-end detectors.

Datasets are crucial for training foreign object detection models for transmission lines. Existing datasets can be categorized into two groups: simulated datasets [35,36] and realistic datasets [7,37,38,39]. Simulated datasets are generated with data augmentation techniques. Ge et al. [35] augmented their database using image enhancement techniques and manually labeled a dataset comprising 3685 images of bird nests on transmission lines. Similarly, Bi et al. [36] created a synthetic dataset of bird nests on transmission towers under different environmental conditions, comprising 2864 training images and 716 test images. Although these datasets simulate changes in environmental conditions through data augmentation, they primarily cover a single category of foreign objects. Realistic datasets are collected from real-world scenarios. Ning et al. [37] expanded the category variety in their dataset of 1400 images, which covers construction vehicles, flames, and cranes. Zhang et al. [38] established a dataset of 20,000 images, including categories such as wildfires, additional objects impacting power lines, tower cranes, and regular cranes. Liu et al. [7] created a dataset of 2496 aerial images covering four categories: bird nests, hanging objects, wildfires, and smoke. Yu et al. [39] considered five types of construction vehicles, along with bird nests, in drone-view images. Li et al. [40] created a dataset including four classes: bird nests, balloons, kites, and trash.

Existing datasets are not open source and are limited in the variety of foreign objects and scenarios they cover, as well as in image quality. This significantly impedes research progress in this field.

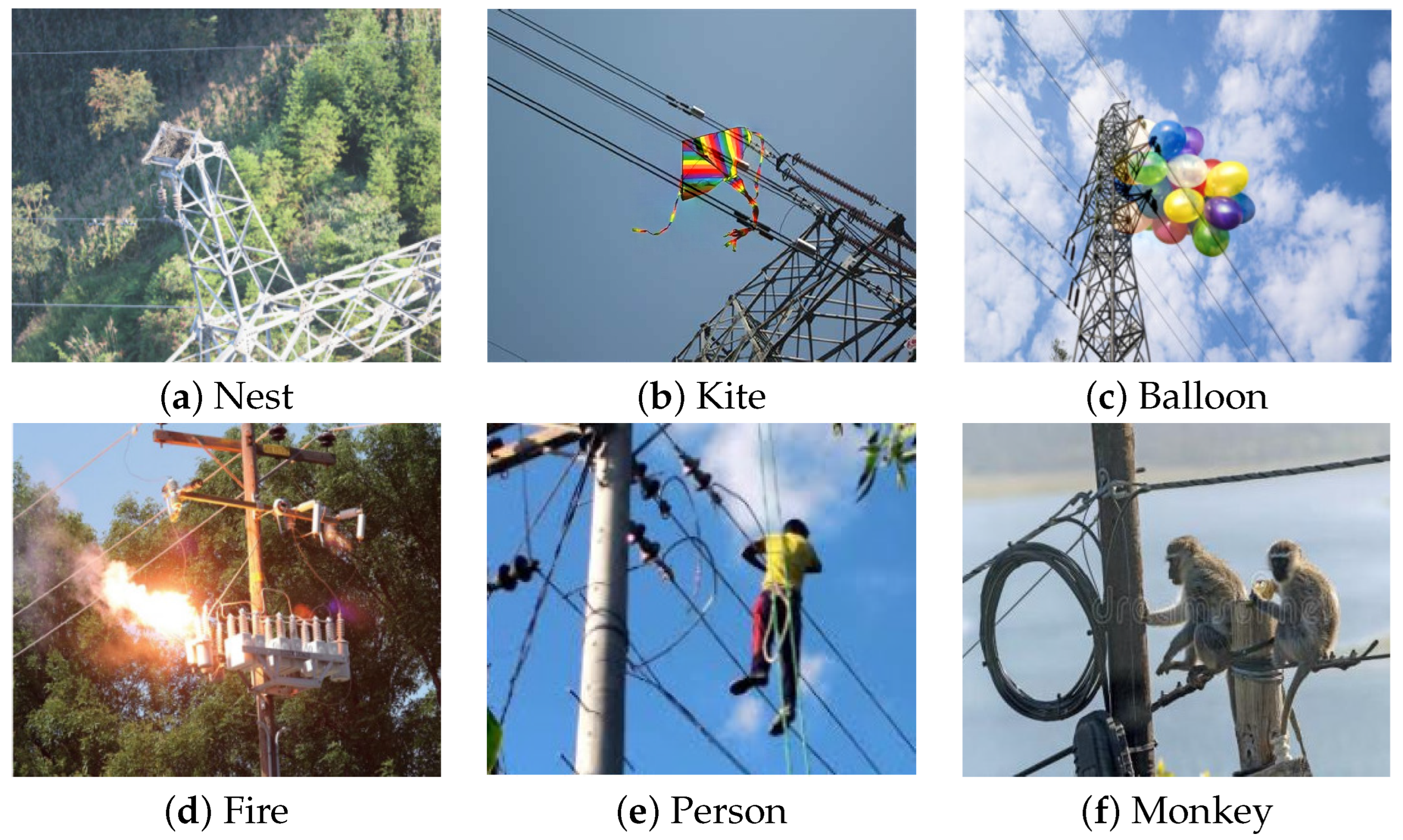

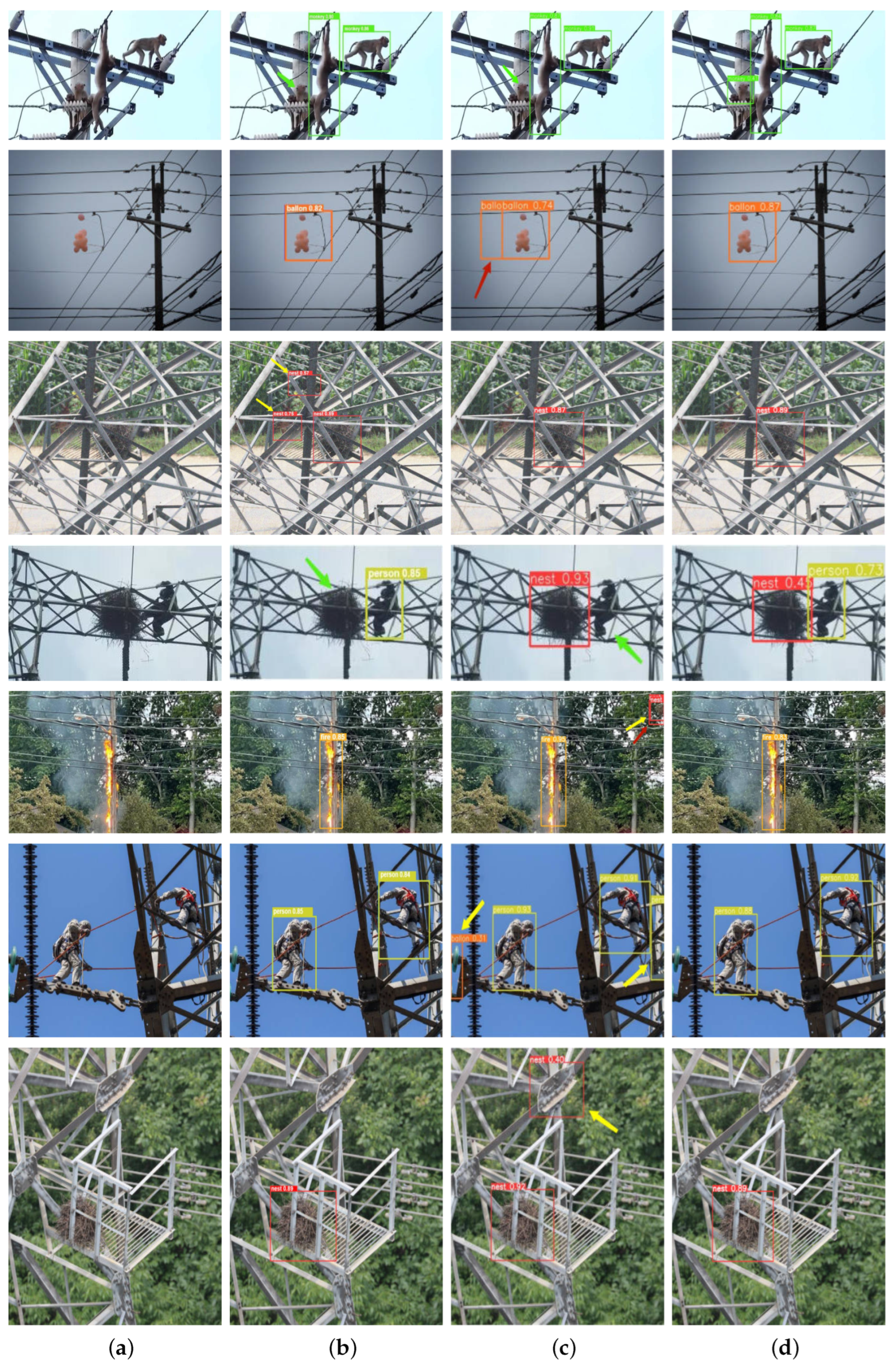

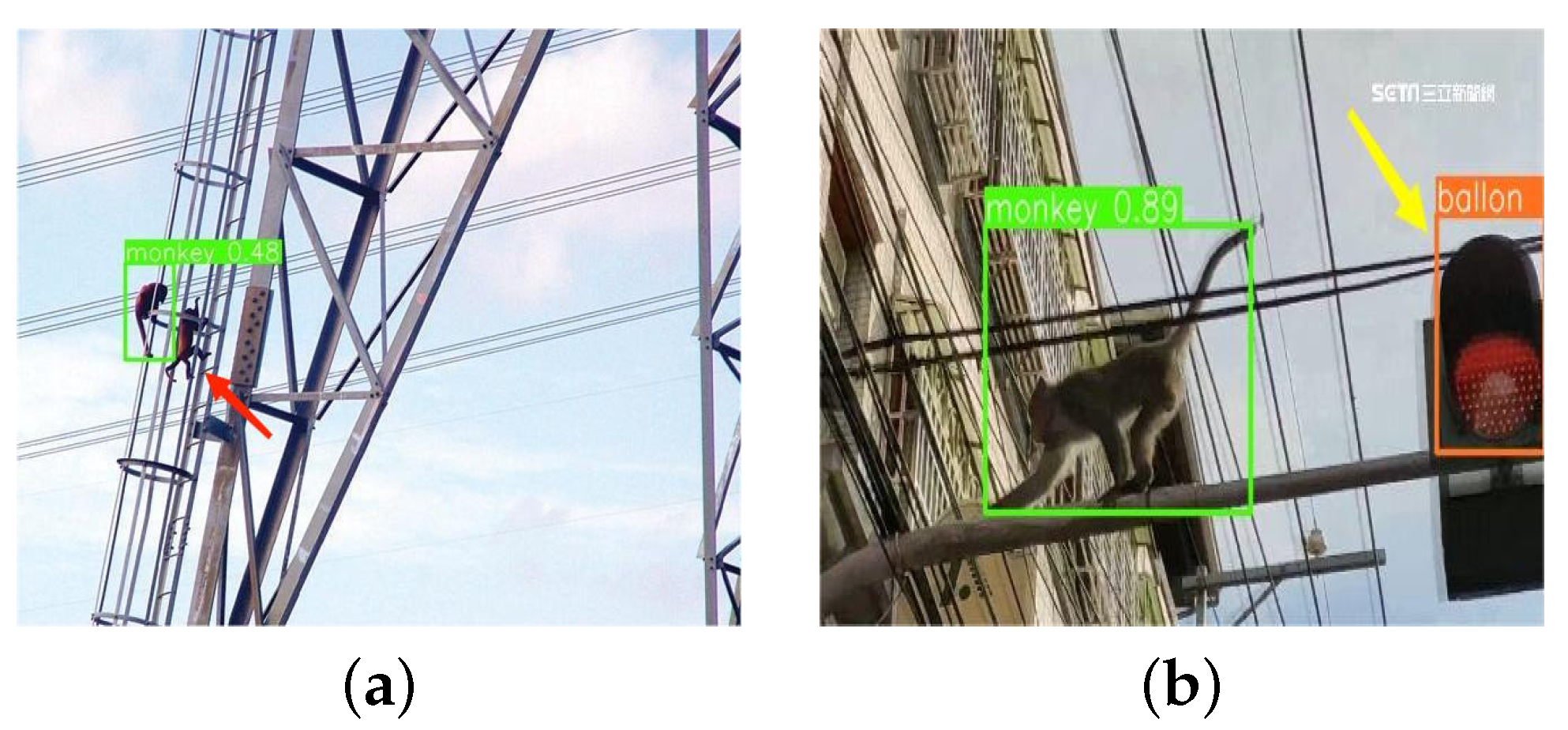

The motivation of this paper is to propose a deep learning-based approach for automatic foreign object detection. Some detection results are presented in Figure 1. Given the lack of available datasets, we create a comprehensive dataset to facilitate model training for foreign object detection on power transmission lines.

The main contributions of this paper are summarized as follows:

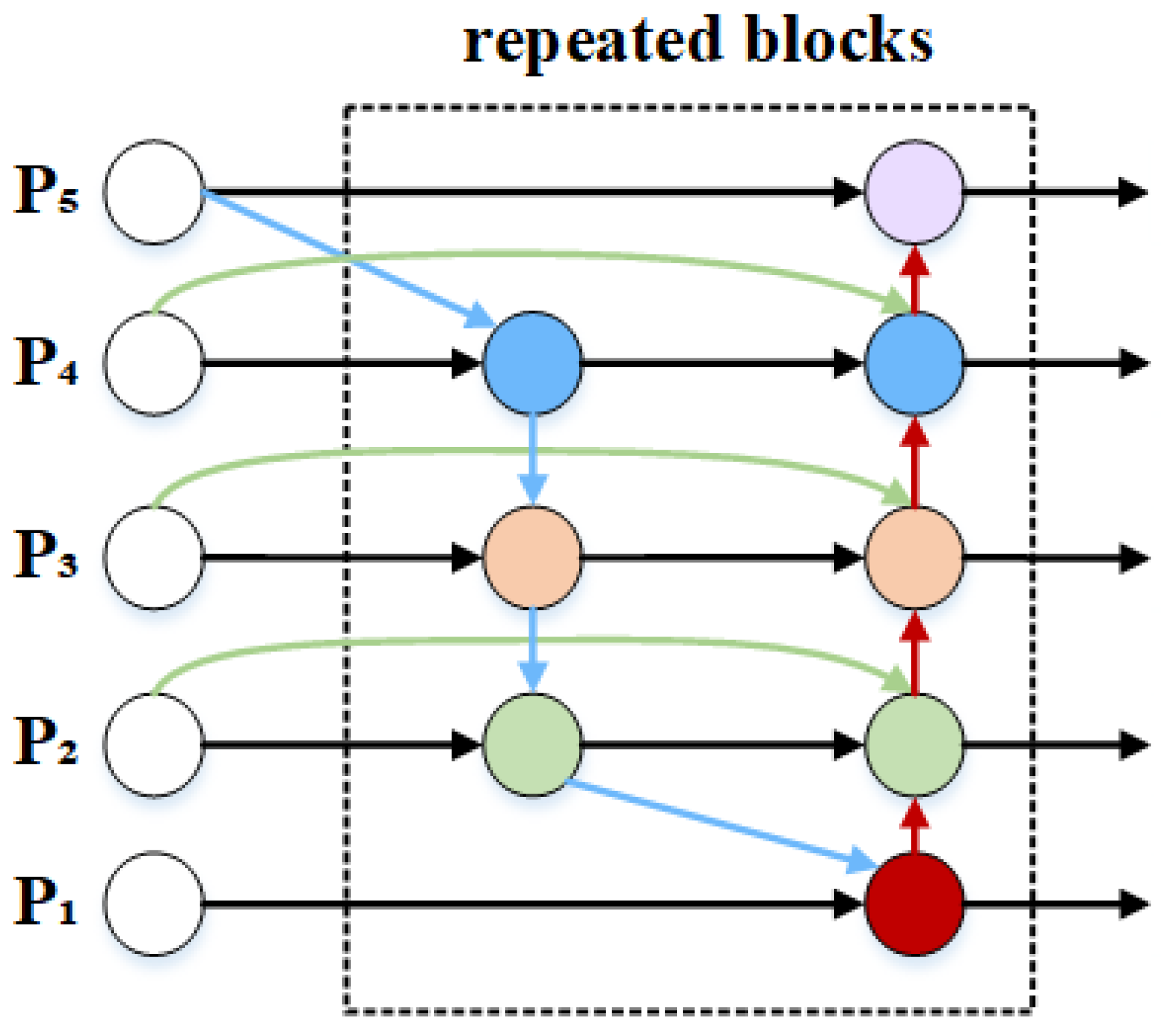

We propose an end-to-end method, YOLOv8_BiFPN, to detect foreign objects on power transmission lines. It integrates a weighted bidirectional cross-scale connection structure into the detection head of the YOLOv8 network and significantly outperforms the baseline model.

We create a dataset of foreign objects on transmission lines from a drone perspective, named FOTL_Drone. It contains 1495 annotated images covering six distinct types of foreign objects, making it, to our knowledge, the most comprehensive dataset currently available for detecting foreign objects on transmission lines.

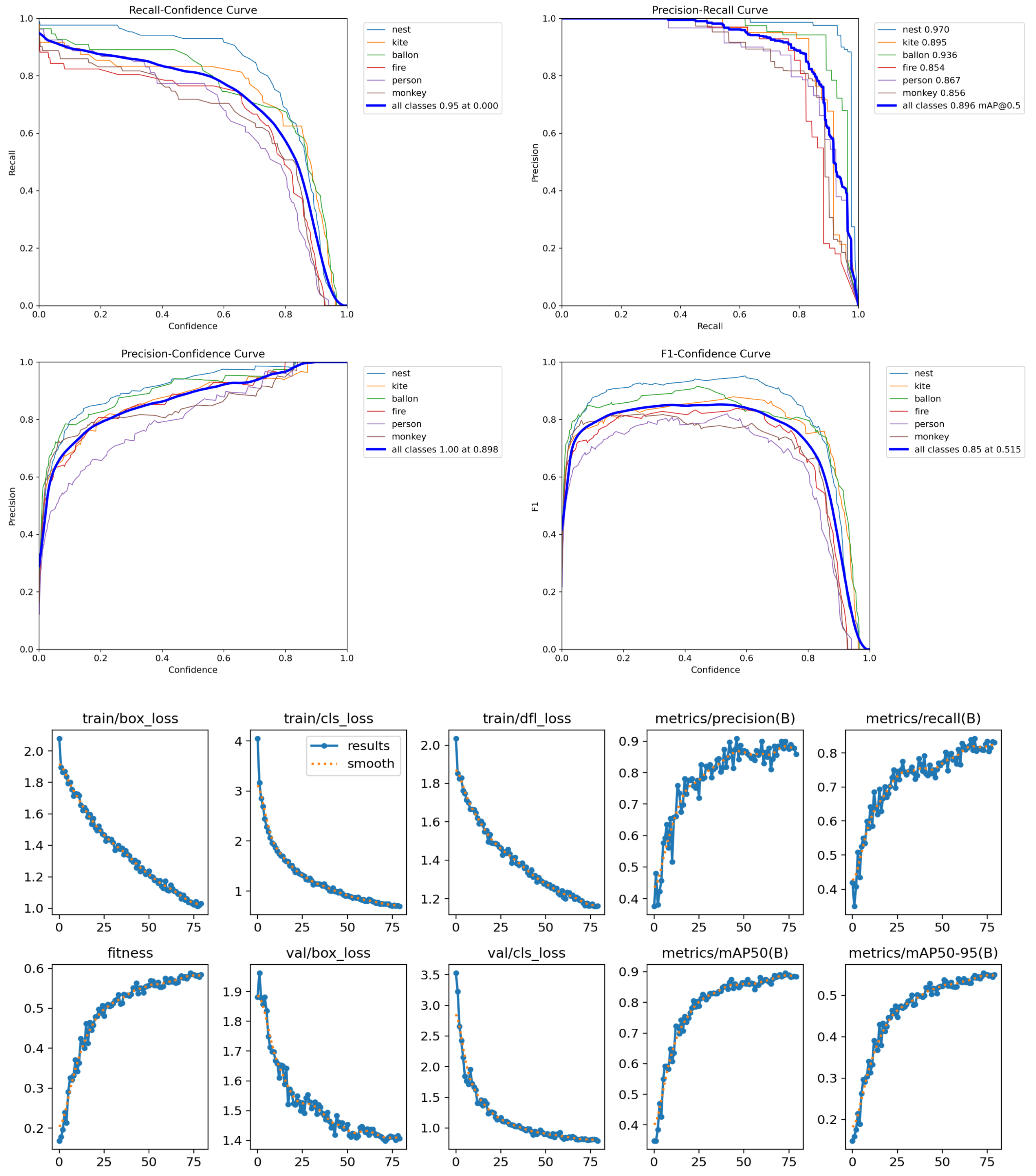

Experimental results on the proposed FOTL_Drone dataset demonstrate the effectiveness of the YOLOv8_BiFPN model, which achieves an average precision of 90.2% and an mAP@.50 of 0.896, outperforming other models.
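The weighted bidirectional cross-scale connection mentioned in the first contribution originates from BiFPN in EfficientDet, where each fusion node combines resized input features with learnable non-negative weights using fast normalized fusion, O = Σ_i w_i · I_i / (ε + Σ_j w_j). The NumPy sketch below shows only this fusion rule on toy feature maps; the exact connections and weights used in YOLOv8_BiFPN are described in Section 3. Here the weights are plain numbers (in a trained network they would be learned parameters), the convolution that follows each fusion in BiFPN is omitted, and the function names are hypothetical.

```python
import numpy as np


def fast_normalized_fusion(features, weights, eps=1e-4):
    """Weighted fusion of same-shape feature maps, BiFPN style.

    O = sum_i (w_i / (eps + sum_j w_j)) * I_i, with each w_i clipped at 0 (ReLU).
    """
    w = np.maximum(np.asarray(weights, dtype=np.float64), 0.0)  # ReLU keeps weights non-negative
    w = w / (eps + w.sum())  # normalize so the weights sum to just under 1
    fused = np.zeros(features[0].shape, dtype=np.float64)
    for w_i, feat in zip(w, features):
        fused += w_i * feat
    return fused


def upsample2x(feat):
    """Nearest-neighbour 2x upsampling over the last two (H, W) axes."""
    return np.repeat(np.repeat(feat, 2, axis=-2), 2, axis=-1)


# Hypothetical top-down step: fuse P4 with upsampled P5 (shapes are C x H x W).
p5 = np.ones((8, 10, 10))
p4 = np.full((8, 20, 20), 3.0)
p4_td = fast_normalized_fusion([p4, upsample2x(p5)], weights=[1.0, 1.0])
print(p4_td.shape, round(float(p4_td.mean()), 3))
```

Compared with a softmax over the weights, this normalization avoids exponentials, which is the efficiency argument EfficientDet gives for it; with equal weights the node simply averages its inputs.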

The paper is structured as follows. Section 2 provides detailed information about the proposed FOTL_Drone dataset. Our method is described in Section 3, while Section 4 presents the experimental results. Finally, Section 5 concludes the paper.

2. The Proposed Dataset

Drone inspection has recently emerged as the primary means of detecting foreign objects on transmission lines. In this section, we present a dataset of foreign objects on transmission lines from a drone perspective (FOTL_Drone). The dataset comprises 1495 annotated images covering six types of foreign objects. The images are primarily sourced from the following:

Image search: Google, Bing, Baidu, Sogou, etc.

Screenshots from video sites: YouTube, Bilibili, etc.

Drone inspections: some images are captured during drone-view inspections of transmission lines.

Class labels are generated with LabelImg, a visual image annotation tool, and annotations are saved as XML files following the PASCAL VOC format. Six categories are included: nest, kite, balloon, fire, person, and monkey. The images are split into training and testing sets at an 8:2 ratio, giving 1196 training images and 299 testing images.
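Because the annotations follow the PASCAL VOC XML layout, they can be read with the Python standard library. The sketch below parses one annotation and performs a reproducible 8:2 split, which yields exactly 1196 and 299 images for 1495 samples. The sample XML is a trimmed, hypothetical file (LabelImg also writes fields such as `path`, `pose`, and `difficult`), and the seeded random shuffle is an assumption: the text states only the 8:2 ratio, not how images were assigned to each set.

```python
import random
import xml.etree.ElementTree as ET


def parse_voc(xml_text):
    """Return (filename, objects) from one PASCAL VOC XML annotation.

    Each object is (class_name, xmin, ymin, xmax, ymax) in pixel coordinates.
    """
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename")
    objects = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        coords = [float(box.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")]
        objects.append((obj.findtext("name"), *coords))
    return filename, objects


def split_train_test(items, seed=0):
    """Shuffle reproducibly, then split 8:2 into (train, test)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 8 // 10  # integer arithmetic avoids float rounding
    return items[:n_train], items[n_train:]


# Hypothetical, trimmed annotation in PASCAL VOC layout.
SAMPLE = """<annotation>
  <filename>example_0001.jpg</filename>
  <size><width>1280</width><height>720</height><depth>3</depth></size>
  <object>
    <name>nest</name>
    <bndbox><xmin>400</xmin><ymin>120</ymin><xmax>520</xmax><ymax>210</ymax></bndbox>
  </object>
</annotation>"""

print(parse_voc(SAMPLE))
train, test = split_train_test(range(1495))
print(len(train), len(test))
```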

Figure 2 presents the analysis of the proposed FOTL_Drone dataset.

Figure 2a sheds light on four key aspects: the number of training samples per category, which is balanced across categories except for bird nests; the distribution of bounding box sizes, which indicates diverse box dimensions; the positions of bounding box center points within the images; and the distribution of target height-to-width ratios.
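The quantities summarized in Figure 2a can be derived directly from the box annotations. The sketch below assumes objects parsed as (class_name, xmin, ymin, xmax, ymax) tuples in pixels; it counts instances per class and normalizes each box's center and size by the image dimensions, as YOLO-format labels do, along with the height-to-width ratio. Summing the per-image counters gives dataset-level class totals. It is illustrative only: the function name is hypothetical and the plotting behind Figure 2 is not reproduced.

```python
from collections import Counter


def box_statistics(objects, img_w, img_h):
    """Per-class counts plus normalized box geometry for one image.

    objects: list of (class_name, xmin, ymin, xmax, ymax) in pixels.
    Returns (Counter of class names, list of per-box dicts).
    """
    counts = Counter(name for name, *_ in objects)
    boxes = []
    for name, x1, y1, x2, y2 in objects:
        w, h = x2 - x1, y2 - y1
        boxes.append({
            "class": name,
            "cx": (x1 + x2) / 2 / img_w,  # center x, normalized to [0, 1]
            "cy": (y1 + y2) / 2 / img_h,  # center y, normalized to [0, 1]
            "w": w / img_w,               # width relative to image width
            "h": h / img_h,               # height relative to image height
            "h_over_w": h / w,            # height-to-width ratio in pixels
        })
    return counts, boxes


counts, boxes = box_statistics(
    [("nest", 400, 120, 520, 210), ("kite", 100, 50, 140, 130)], 1280, 720
)
print(counts)
print(boxes[0])
```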

Figure 2b depicts the label correlation analysis, in which the color intensity of each cell indicates the correlation between labels: darker cells represent stronger associations and lighter cells weaker ones.

Figure 3 illustrates the six classes of foreign objects on transmission lines.

Moreover, the FOTL_Drone dataset differs from other datasets in the following respects:

Many existing datasets cover only a small number of foreign object categories, for example, two or three. In contrast, FOTL_Drone expands the range of potential foreign objects on transmission lines to six classes.

Most existing datasets cover a limited range of scenarios. The images in FOTL_Drone are taken from a drone perspective, and the variable shooting positions of the drone add scene diversity.

Existing datasets include only static foreign objects. In contrast, our dataset includes not only static foreign objects such as kites and balloons but also moving objects such as power workers and monkeys.