1. Introduction

With the rapid development of unmanned aerial vehicle (UAV) technology and infrared thermal imaging technology, UAVs with infrared thermal imaging cameras are now lightweight and miniaturized. Object detection technology based on infrared images from UAVs has shown better application potential in various fields, with advantages such as a low input cost, greater flexibility, and outstanding performance. Currently, target detection technology based on aerial images is being applied in the fields of agriculture [

1,

2], power transmission [

3,

4], rescue [

5,

6], security [

7,

8], transportation [

9,

10], and others. Among the object detection methods used to obtain aerial images, methods using visible and infrared image fusion have rich feature information and a relatively high target detection accuracy, but their data acquisition and processing costs are relatively high; a target detection model also has more parameters than other models, which affects its future applications. Object detection methods based on visible aerial images are affected by lighting and cannot detect objects properly under low-light conditions, whereas infrared aerial image target detection is not affected by complex environments and is not limited by light conditions. Therefore, infrared aerial target detection methods are widely used in rescue [

11], firefighting [

12], security [

13], transportation [

14,

15], agriculture [

16], and defense [

17]. A schematic diagram of these applications is shown in

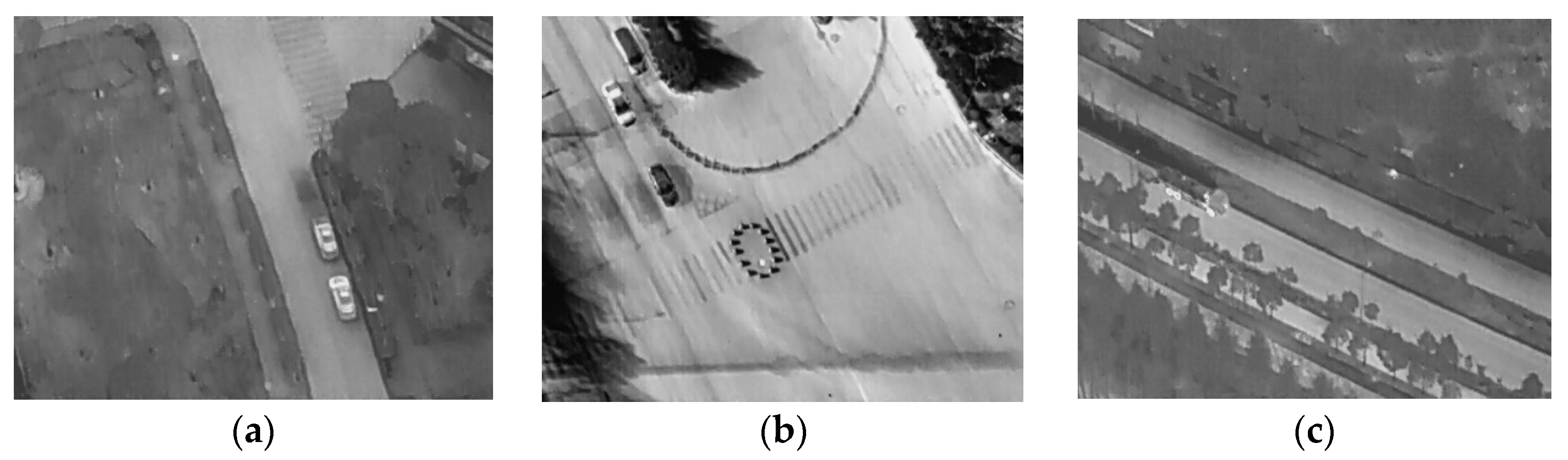

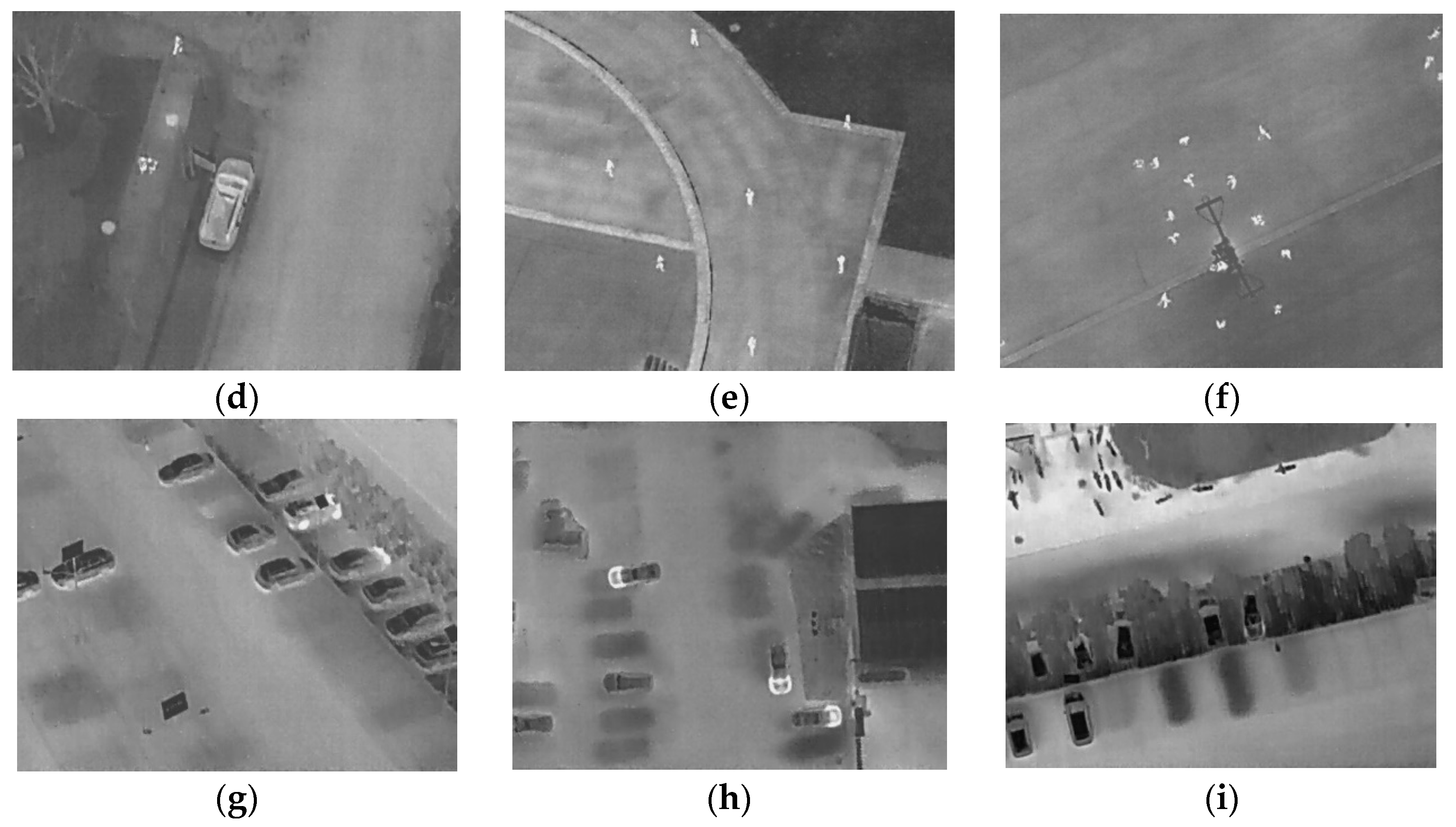

Figure 1.

Figure 1 shows that researchers in different fields have carried out a number of studies applying object detection to infrared aerial images, widening the scope of its use. For example, Sumanta Das et al. [18] proposed a method for crop disease detection and soil quality detection based on UAV infrared images; this method takes advantage of the flexibility and small size of UAVs and combines them with the anti-interference properties of infrared images to measure the temperature characteristics of crops and soil. Using wheat as an example, they illustrated the advantages of infrared aerial image target detection in crop monitoring. Zhanchuan Cai et al. [19] proposed a wildlife observation method based on UAV infrared images. Building on an existing deep learning framework, they used UAV infrared images to build the ISOD wildlife detection dataset and proposed a channel enhancement (CE) module to enhance image features, thereby improving wildlife detection accuracy. Ziyan Liu et al. [20] proposed a road vehicle detection method based on UAV infrared images; they constructed a lightweight feature fusion network that fuses target features from infrared images to detect road vehicles with high accuracy. A. Polukhin et al. [21] proposed a YOLOv5-based infrared target detection method for rescue operations; this method reduces network information redundancy and processes UAV infrared images to detect specified targets. Xiwen Chen et al. [22] proposed a fire detection method based on UAV infrared images; the method used abundant UAV infrared image data to detect wildland fires through multimodal feature extraction, simplifying the work of firefighting departments. Because UAV infrared target detection offers flexible flight, relatively low cost, and independence from lighting, it has great application potential in traffic monitoring. Infrared UAV object detection is more flexible than traditional methods and can monitor objects in the blind spots of fixed traffic surveillance cameras. Although infrared object detection has been preliminarily applied to traffic surveillance, problems remain. Because a UAV flies higher than ordinary traffic surveillance cameras are mounted, the distance between the target and the UAV is large, and targets occupy only a small area of the infrared image. In addition, infrared images have lower contrast, no color, and less texture information than visible images. Therefore, the accuracy of small object detection algorithms on infrared aerial images is low, and these algorithms need to be improved to achieve the expected performance in practical applications.

To improve the accuracy of small object detection in infrared images for traffic surveillance, researchers have drawn on small target detection methods for UAV infrared images developed in other fields. For example, Victor J. Hansen et al. [23] proposed a small target detection method for search and rescue. They constructed a deep learning small target detection network that processes UAV infrared images to find small targets within a large field of view under poor weather conditions, improving the efficiency of rescue missions. Shuming Hu et al. [24] proposed a small object detection method based on UAV infrared images. They constructed a new lightweight model based on YOLOv7-tiny and verified its effectiveness by detecting vehicles in a traffic scene; however, the algorithm's attention was relatively weakly focused, and objects were not detected clearly. Jaekyung Kim et al. [25] proposed a small object detection method based on infrared remote sensing images. The detection network was optimized on the YOLOv5 framework to detect pedestrians and vehicles, but the final detection accuracy was below 70% for both pedestrians and vehicles. Yasmin M. Kassim et al. [26] proposed a small bird detection method based on UAV infrared images, using Mask R-CNN and transfer learning. Although the method could detect small birds, it could only determine whether a bird was present, not classify the birds. Yan Zhang et al. [27] proposed a pedestrian detection method based on UAV images. They used visible and infrared images to establish the VTUAV-det dataset and constructed a QFDet model to detect pedestrians, but the method detects only pedestrians, which limits its future application and extension.

Although the researchers mentioned above have carried out much work on small target detection in infrared UAV aerial images, the number of detected categories was quite low. For example, only two categories, pedestrians and vehicles, were detected in the study by Jaekyung Kim et al. [25], and the detection model designed by Yan Zhang et al. [27] handles only pedestrians, so neither can meet the requirements of real detection tasks. In the study by Shuming Hu et al. [24], the targets were relatively large, occupying more pixels with more obvious feature information, which does not match real small target detection scenarios. Therefore, to solve these problems and make detection algorithms more suitable for real traffic monitoring tasks, this study focused on the low contrast, lack of color, and limited texture information of infrared UAV aerial images and proposed a new algorithm for detecting small road objects in such images. To improve small object detection accuracy, the feature-enhanced attention and dual-GELAN network (FEADG-net) is designed: a Swin Transformer feature-extracting backbone (STFE-backbone) extracts small object features after low-frequency enhancement, and a dual-GELAN neck (DGneck) performs multidimensional feature fusion. Finally, the loss of the detection algorithm is computed with an InnerIoU whose parameters are adjusted automatically. This study promotes the further application of UAV infrared images in the traffic field and improves the robustness of existing aerial infrared target detection methods for small road objects. It is also informative for other object detection applications based on aerial images.

In this study, we make some new contributions related to network structure and loss function in order to address the problem of small road target detection in the infrared remote sensing images of UAVs; our contributions are as follows:

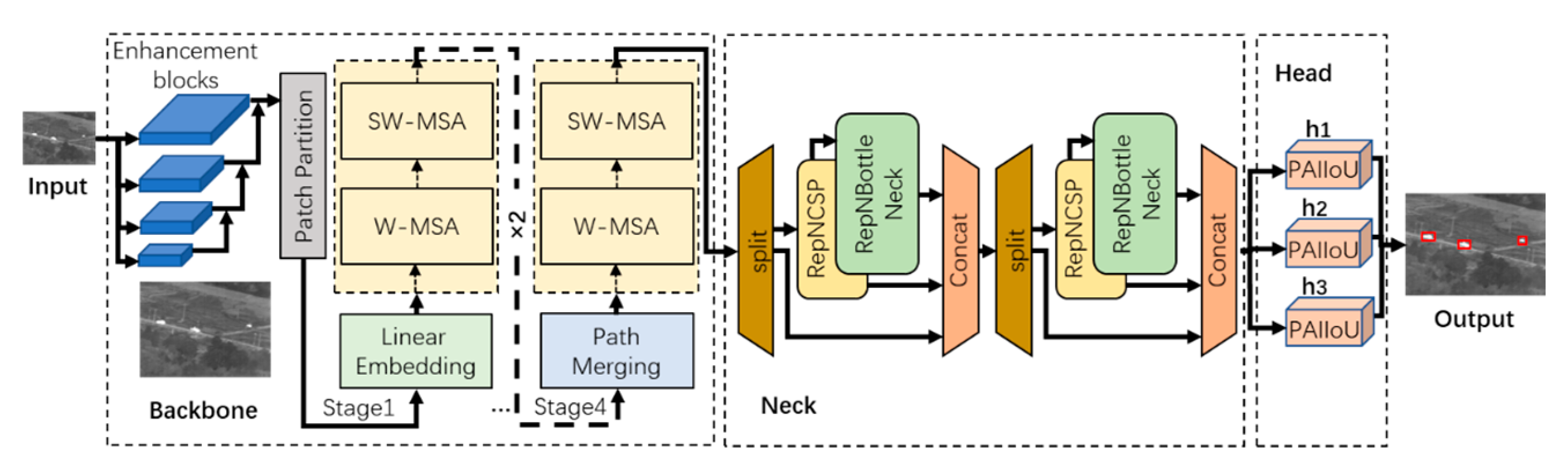

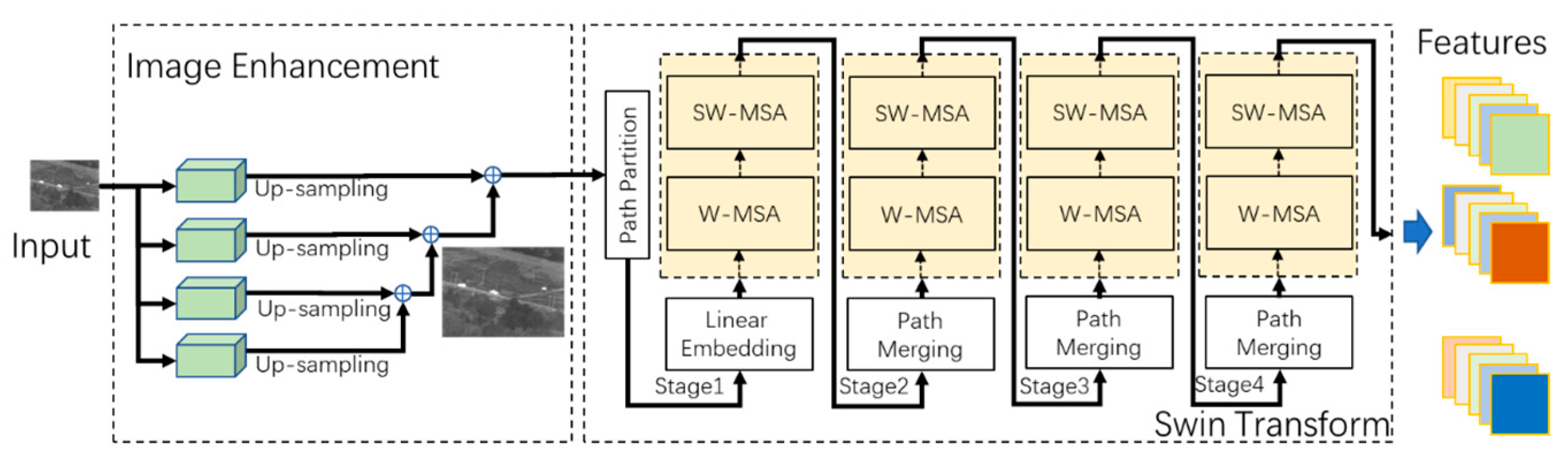

(1) A backbone network that combines target feature enhancement with an attention mechanism is proposed. To effectively extract target features from UAV infrared remote sensing images, this study constructs a feature extraction network with an image enhancement function; it enhances the low-frequency feature information of infrared images and extracts target edges, textures, and other features using the Swin Transformer attention mechanism.

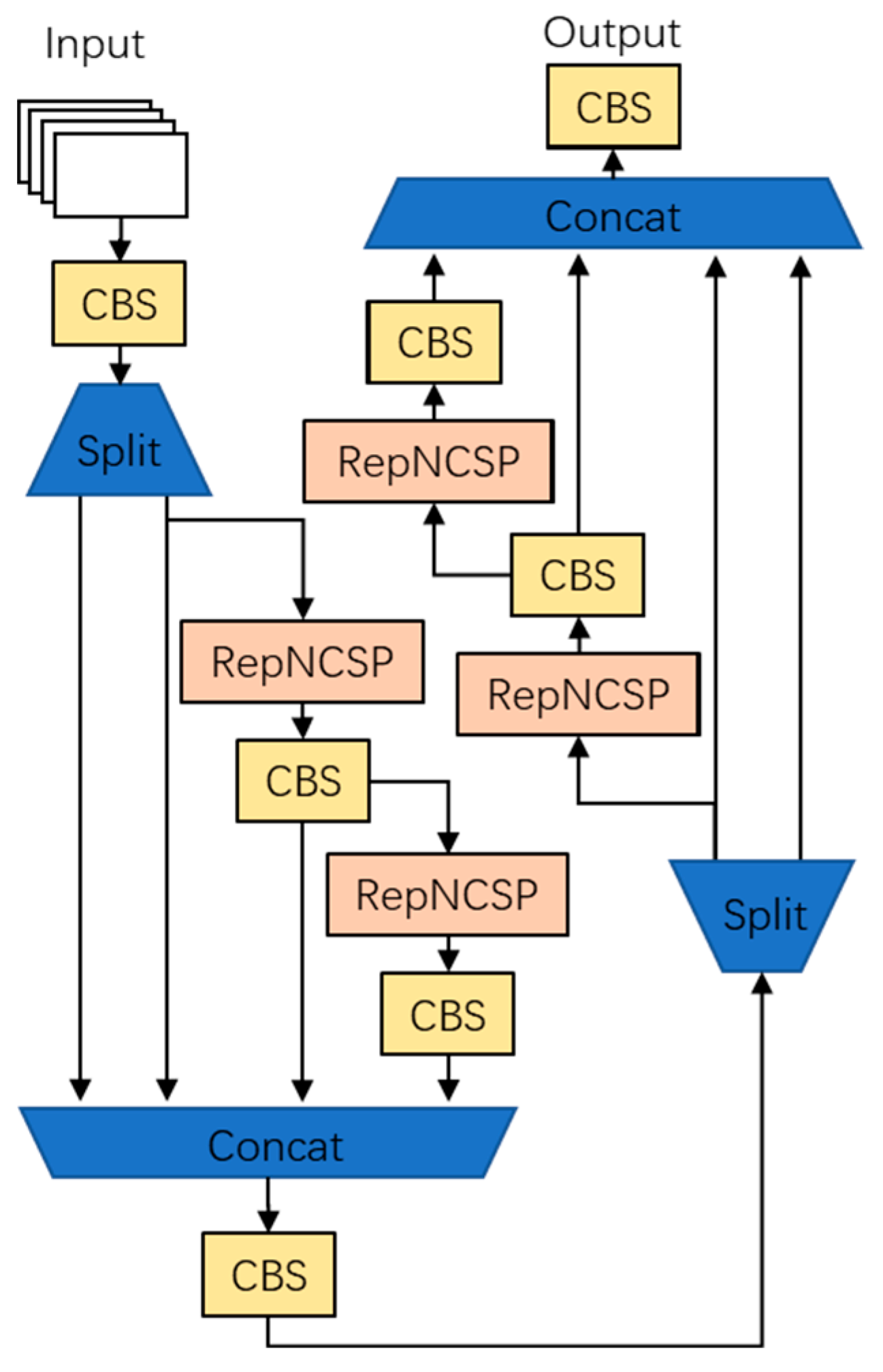

(2) A new feature fusion network is proposed. To avoid vanishing or weakening gradients in the deep layers of the network, this study constructs a DGneck based on the GELAN structure of YOLOv9 [28] to better fuse the different features of small targets in UAV infrared remote sensing images.

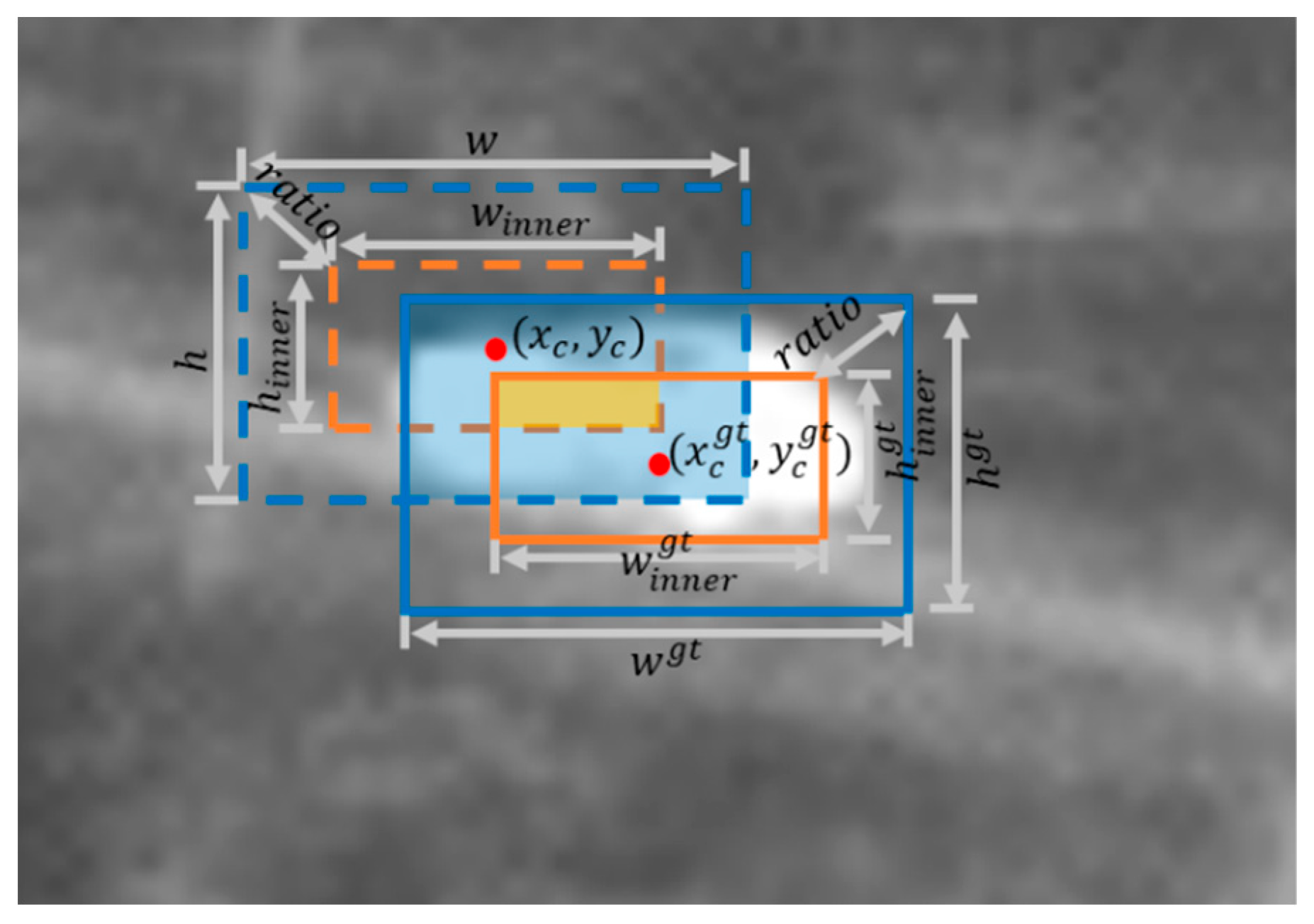

(3) A loss function with automatically adjusted parameters is proposed based on InnerIoU [29]. To remove the need to tune the parameters of the existing InnerIoU calculation manually, an InnerIoU calculation with automatically adjusted parameters is proposed, and the loss function is constructed from this new InnerIoU to improve the robustness of the detection model.

(4) The number of detected categories is raised to eight, and the detection accuracy for six of these categories reached at least 90%. Few published studies have detected eight types of targets in UAV infrared images; this study analyzed eight types of targets, six of which were detected with more than 95% accuracy.
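Contribution (3) builds on InnerIoU [29]. For reference, the sketch below computes the standard Inner-IoU, which measures the IoU between auxiliary boxes obtained by scaling the predicted and ground-truth boxes about their centers by a fixed `ratio`; that fixed, manually chosen `ratio` is the parameter the proposed loss adjusts automatically. The automatic adjustment rule is not described in this section, so it is not implemented here. The function names and the (x_center, y_center, width, height) box layout are illustrative assumptions, and the loss 1 - Inner-IoU is the basic form from the Inner-IoU formulation, not necessarily the authors' final loss.

```python
from typing import Tuple

# Assumed box layout: (x_center, y_center, width, height).
Box = Tuple[float, float, float, float]


def _scaled_corners(box: Box, ratio: float) -> Tuple[float, float, float, float]:
    """Corners (x1, y1, x2, y2) of the auxiliary box scaled about the box center."""
    xc, yc, w, h = box
    half_w, half_h = w * ratio / 2, h * ratio / 2
    return xc - half_w, yc - half_h, xc + half_w, yc + half_h


def inner_iou(pred: Box, gt: Box, ratio: float) -> float:
    """IoU of the two auxiliary boxes; ratio < 1 shrinks them, ratio > 1 enlarges them."""
    px1, py1, px2, py2 = _scaled_corners(pred, ratio)
    gx1, gy1, gx2, gy2 = _scaled_corners(gt, ratio)
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    # Each auxiliary box has area (w * ratio) * (h * ratio).
    union = (pred[2] * pred[3] + gt[2] * gt[3]) * ratio ** 2 - inter
    return inter / union if union > 0 else 0.0


def inner_iou_loss(pred: Box, gt: Box, ratio: float) -> float:
    """Loss of the form 1 - Inner-IoU with a fixed, manually chosen ratio."""
    return 1.0 - inner_iou(pred, gt, ratio)


# Same pair of boxes, two fixed ratios: shrinking the auxiliary boxes removes the overlap.
pred, gt = (0.0, 0.0, 2.0, 2.0), (1.0, 0.0, 2.0, 2.0)
print(inner_iou(pred, gt, 1.0))  # ordinary IoU of the original boxes (one third)
print(inner_iou(pred, gt, 0.5))
```

With `ratio = 1.0` the result equals the ordinary IoU; with `ratio = 0.5` the two auxiliary boxes only touch, so the Inner-IoU drops to zero. This sensitivity to `ratio` is why a manually fixed value is hard to choose for all samples.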

3. Experimental Results

To verify the ability of FEADG-net to detect small targets in complex scenarios, this study applied FEADG-net to small target detection on the HIT-UAV and IRTS-AG datasets; its detection accuracy exceeded 85%. The experimental hardware platform used an Intel(R) Core(TM) i5-13400 CPU, 32 GB of memory, and an NVIDIA GeForce RTX 3060 GPU, running Microsoft Windows 11 Professional Edition. To verify the effectiveness of FEADG-net relative to other algorithms, comparative experiments were carried out to compare its detection accuracy with that of YOLOv3 [36], YOLOv5 [37], YOLOv8 [38], YOLOv9 [28], and RTDETR [39] on the same datasets. Ablation experiments were also carried out, replacing or removing each of the three newly designed modules (the FEST-backbone, DGneck, and the new loss function) to verify their effectiveness.

3.1. Relevant Metrics

To evaluate the performance of deep learning road target detection algorithms on infrared images, this section briefly describes the metrics used in the experiments, namely precision, AP, mAP, and mAP50, which are standard in machine learning and pattern recognition.

3.1.1. Precision

We assume that the samples to be predicted consist of positive and negative samples. According to the prediction results, they fall into four types: (I) TP (true positive), the number of positive samples correctly predicted as positive; (II) FP (false positive), the number of negative samples predicted as positive; (III) TN (true negative), the number of negative samples correctly predicted as negative; and (IV) FN (false negative), the number of positive samples predicted as negative. Based on these concepts, precision $P$ is defined as the ratio of the number of correctly predicted positive samples to the number of all samples predicted as positive:

$$
P = \frac{TP}{TP + FP}
$$

where $P$ is the precision, $TP$ is the number of positive samples correctly predicted, and $FP$ is the number of negative samples incorrectly predicted as positive.
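The precision formula can be checked with a few lines of Python; the function name and the counts in the example are hypothetical, chosen only to illustrate the definition.

```python
def precision(tp: int, fp: int) -> float:
    """P = TP / (TP + FP); returns 0.0 when nothing was predicted as positive."""
    predicted_positive = tp + fp
    if predicted_positive == 0:
        return 0.0
    return tp / predicted_positive


# Hypothetical counts for one category: 45 correct detections and 5 false alarms.
print(precision(45, 5))  # 0.9
```

Returning 0.0 when there are no positive predictions is a convention chosen for this sketch; evaluation toolkits handle that edge case in different ways.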

3.1.2. Average Precision

Assume that a category in the dataset contains $n$ samples, of which $m$ are positive examples. For each positive sample, the maximum precision $P_i^{\max}$ is calculated; the mean of these $m$ values of $P_i^{\max}$ gives the average precision $AP$:

$$
AP = \frac{1}{m} \sum_{i=1}^{m} P_i^{\max}
$$

where $AP$ is the average precision, $m$ is the number of positive samples in the category, and $P_i^{\max}$ is the maximum precision corresponding to the $i$th positive sample.
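The AP definition can be sketched as follows, assuming the common reading that $P_i^{\max}$ is the highest precision reached at any recall level at or above the recall of the $i$th positive (interpolated precision). Detections are taken as already sorted by descending confidence and marked as true or false positives; positives that are never detected contribute zero. The function name and the example inputs are hypothetical.

```python
from typing import Sequence


def average_precision(is_tp: Sequence[bool], m: int) -> float:
    """AP = (1/m) * sum of P_i^max over the m ground-truth positives.

    is_tp: detections of one category sorted by descending confidence;
           True if the detection matched a ground-truth object.
    m: number of ground-truth positives in the category.
    """
    if m == 0:
        return 0.0
    # Precision after each detection in the ranked list.
    precisions = []
    tp_so_far = 0
    for rank, hit in enumerate(is_tp, start=1):
        tp_so_far += int(hit)
        precisions.append(tp_so_far / rank)
    # Interpolate: precision at rank k becomes the maximum precision at any rank >= k,
    # i.e., at any recall level at or above the recall reached at rank k.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # The i-th true positive raises recall to i/m; its P_i^max is the interpolated value there.
    per_positive = [p for p, hit in zip(precisions, is_tp) if hit]
    # Undetected positives add 0 to the sum but still count in the denominator m.
    return sum(per_positive) / m


# Hypothetical ranking: 5 detections, 3 of them correct, 4 ground-truth objects.
print(average_precision([True, False, True, True, False], m=4))  # 0.625
```

In the example, the three true positives have interpolated precisions 1.0, 0.75, and 0.75, and the fourth (missed) object contributes 0, giving 2.5 / 4 = 0.625.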

3.1.3. mAP

There is generally more than one category in a dataset or a particular object detection task. The mean average precision $mAP$ is obtained by averaging the $AP$ values of all categories in the dataset:

$$
mAP = \frac{1}{K} \sum_{k=1}^{K} AP_k
$$

where $K$ is the number of categories in the dataset, and $AP_k$ is the average precision of the $k$th category.
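The mAP formula is a plain mean over categories, as this short sketch shows; the function name and the per-category AP values are hypothetical and do not come from the experiments.

```python
from typing import Mapping


def mean_average_precision(ap_per_class: Mapping[str, float]) -> float:
    """mAP = (1 / K) * sum of AP_k over the K categories."""
    if not ap_per_class:
        raise ValueError("at least one category is required")
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical per-category AP values, for illustration only.
print(mean_average_precision({"person": 0.75, "car": 1.0, "bicycle": 0.5}))  # 0.75
```

Because every category has equal weight, a single category with few samples and low AP pulls mAP down as much as a large category would.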

3.1.4. AP50 and mAP50

In image classification, AP measures the precision of category prediction, but object detection also has a bounding-box (anchor) regression objective, whose precision is generally evaluated in terms of intersection over union (IoU). AP50 is the average precision of a given category computed with an IoU threshold of 0.5, i.e., a prediction is counted as correct only when its IoU with a ground-truth box is above 0.5. Averaging the single-category AP50 values over all categories of the dataset gives the mAP50 of the detection model on that dataset.
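The IoU threshold enters AP50 through the step that decides which detections are true positives. The sketch below computes the IoU of two axis-aligned boxes and greedily matches confidence-sorted detections to ground truth at a threshold of 0.5, producing the TP/FP flags used by the AP calculation in Section 3.1.2. The (x1, y1, x2, y2) layout, the function names, and the greedy matching rule (each ground truth matched at most once, best unmatched overlap wins) are assumptions for illustration; evaluation toolkits differ in such details, including whether the 0.5 boundary is inclusive.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed layout: (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_at_threshold(dets: Sequence[Box], gts: Sequence[Box], thr: float = 0.5) -> List[bool]:
    """Flag each detection (sorted by descending confidence) as TP or FP.

    A detection is a TP if it reaches IoU >= thr with a ground truth that has not
    already been matched; among such candidates, the highest IoU is chosen.
    """
    used = [False] * len(gts)
    flags = []
    for d in dets:
        best_j, best_iou = -1, thr
        for j, g in enumerate(gts):
            if used[j]:
                continue
            v = iou(d, g)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            used[best_j] = True
        flags.append(best_j >= 0)
    return flags


# One ground truth and two overlapping detections: the second one becomes a duplicate (FP).
gts = [(0.0, 0.0, 10.0, 10.0)]
dets = [(0.0, 0.0, 10.0, 10.0), (1.0, 1.0, 11.0, 11.0)]
print(match_at_threshold(dets, gts))  # [True, False]
```

For small targets, a shift of only a few pixels can push IoU below 0.5, which is one reason AP50 on tiny infrared objects is sensitive to localization accuracy.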

3.2. Comparative Experiments

To verify the effectiveness of the proposed FEADG-net algorithm in different scenarios and with different sample sizes, its performance was compared with that of popular object detection algorithms, namely YOLOv3, YOLOv5, YOLOv8, YOLOv9, and RTDETR, on the HIT-UAV and IRTS-AG datasets, to assess its small target detection ability.

3.2.1. Comparative Experiments Using the HIT-UAV Dataset

To comprehensively verify the target detection ability of the proposed small object detection algorithm, we trained and tested FEADG-net and the other algorithms on the HIT-UAV dataset and obtained the corresponding training and detection results.

(1) Comparison of detection model training

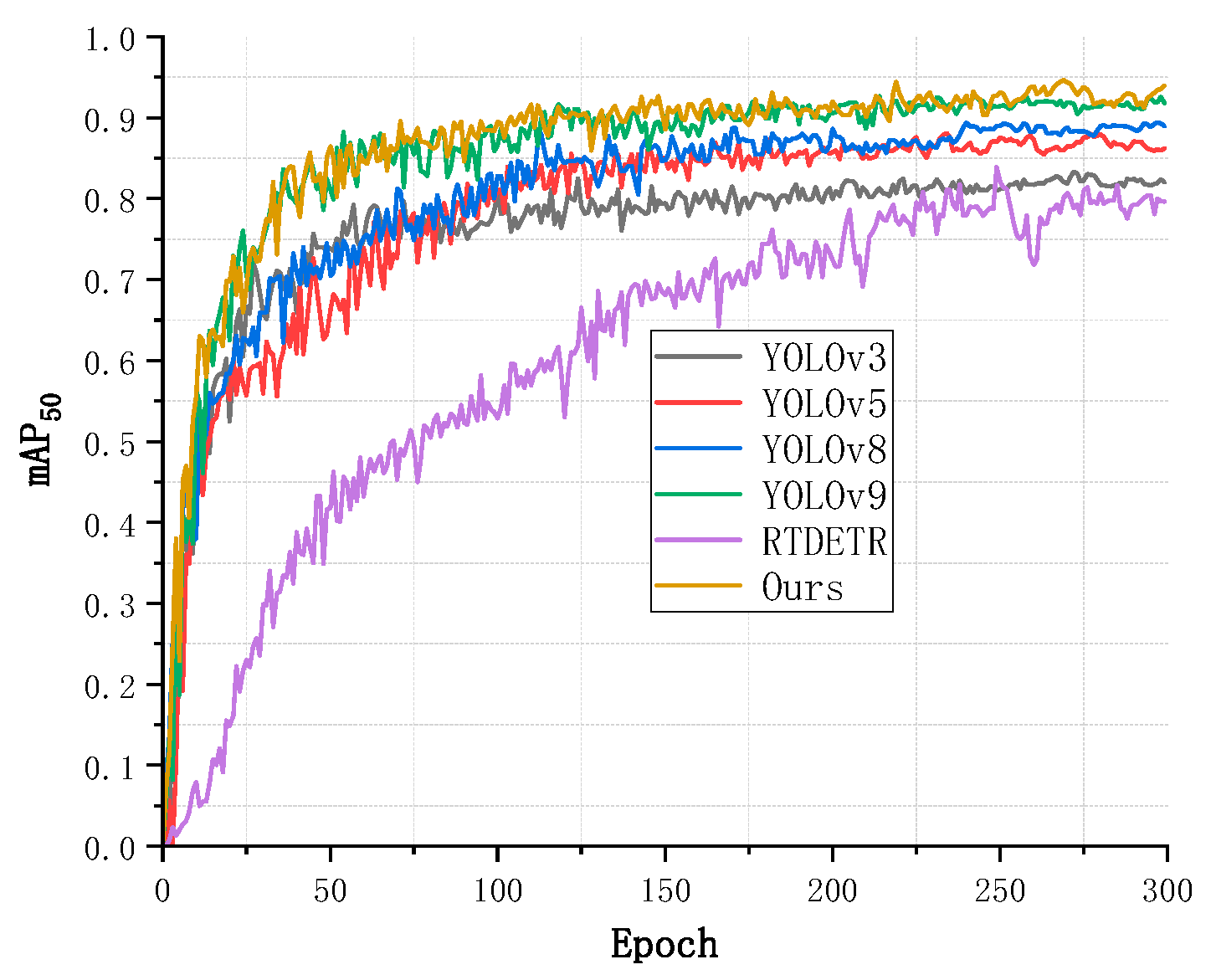

To observe the efficiency of the training process on a small-sample task, FEADG-net, YOLOv3, YOLOv5, YOLOv8, and YOLOv9 were trained for 300 epochs on the HIT-UAV dataset, which contains a small total number of samples. The training accuracy curves of the different algorithms on the HIT-UAV dataset are shown in Figure 11.

Figure 11 shows that the detection accuracy of the proposed FEADG-net was clearly higher than that of the other methods and remained robust, although it fluctuated considerably during training. The accuracy curves also show that the training accuracy of the other methods was relatively low and that their training performance on the small-sample dataset was not as good as that of FEADG-net.

(2) Comparative experiment

The performances of FEADG-net, YOLOv3, YOLOv5, YOLOv8, YOLOv9, and RTDETR were compared on the HIT-UAV dataset. Because the “don’t care” category was excluded from the HIT-UAV dataset, the overall mAP50 of each algorithm was obtained from the AP50 values of the remaining categories used in the experiment: people, cars, bicycles, and other vehicles. The results of the comparative experiment are shown in Table 1.

Table 1 shows that the proposed FEADG-net algorithm had the highest mAP50, 93.8%, and YOLOv3 had the lowest, 82.8%. Among the four categories in the HIT-UAV dataset, cars had the highest detection accuracy and other vehicles the lowest. The accuracy for other vehicles was low because this category has fewer samples and greater feature variation and is easily affected by environmental interference. The model-size and real-time parameters show that our algorithm has fewer parameters than YOLOv9 and RTDETR while still meeting real-time requirements.

(3) Comparative experiment on different hardware configurations

To verify the performance of our algorithm on different hardware configurations, we performed object detection experiments on two platforms using the HIT-UAV dataset. The hardware configurations of the experimental platforms and the real-time parameters of YOLOv9 and our algorithm are shown in Table 2.

As Table 2 shows, our algorithm achieved a higher frame rate (frames per second, FPS) on an NVIDIA RTX 3080 than on an NVIDIA RTX 3060, indicating that it ran faster and had better real-time performance on the RTX 3080. This suggests that our algorithm achieves better real-time performance on hardware with greater computational capability.

(4) Visualization of target detection results

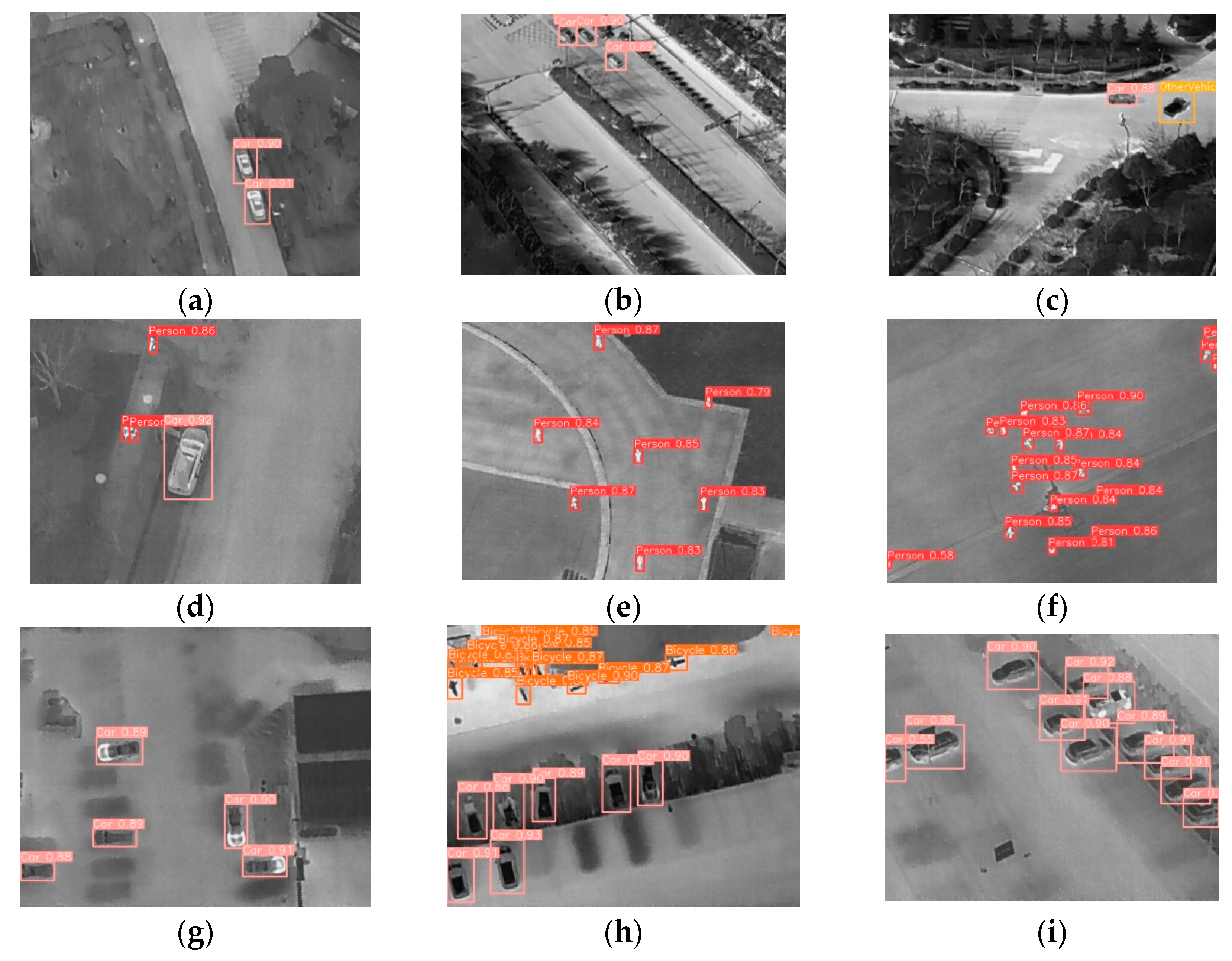

To verify the practical performance of FEADG-net for small target detection in infrared remote sensing images, the trained FEADG-net model was used to predict targets in the test split of the HIT-UAV dataset. Some of the small target predictions are shown in Figure 12.

Figure 12 shows that our FEADG-net model accurately detected different targets in the HIT-UAV dataset and that its actual prediction performance was relatively good.

3.2.2. Comparative Experiments Using the IRTS-AG Dataset

We trained and evaluated FEADG-net and the other algorithms on the IRTS-AG dataset and obtained the corresponding experimental results.

(1) Comparison of detection model training

To observe the training performance of different algorithms on a dataset with a large number of samples, FEADG-net, YOLOv3, YOLOv5, YOLOv8, YOLOv9, and RTDETR were trained on the larger IRTS-AG dataset, and their results were compared. Figure 13 gives the training results of the different algorithms on IRTS-AG.

Figure 13 shows that the proposed FEADG-net algorithm trained better on the IRTS-AG dataset than the other algorithms.

(2) Comparative experiment

To verify the performance of the algorithm on a dataset with more samples, comparative experiments were carried out on the IRTS-AG dataset, which has smaller targets and more samples. The AP50 values for the eight types of targets and the overall mAP50 of each algorithm were obtained to verify the effectiveness of FEADG-net. The results of the comparative experiments are shown in Table 3.

Table 3 shows that, compared with the other target detection methods, FEADG-net achieved a higher mAP50 on the IRTS-AG dataset, with relatively high AP50 values for most of the eight categories and lower AP50 values for only a few categories. The accuracy improvement of our approach was most obvious for category 7; the other categories also improved, but less markedly. Because category 7 is one of the categories with the fewest instances in IRTS-AG, this suggests that our method detects categories with few samples better than the other algorithms.

The comparison of model size and real-time parameters in Table 3 shows that our detection model was smaller than YOLOv9 and RTDETR, yet achieved higher detection accuracy and met real-time requirements.

(3) Visualization of target detection results

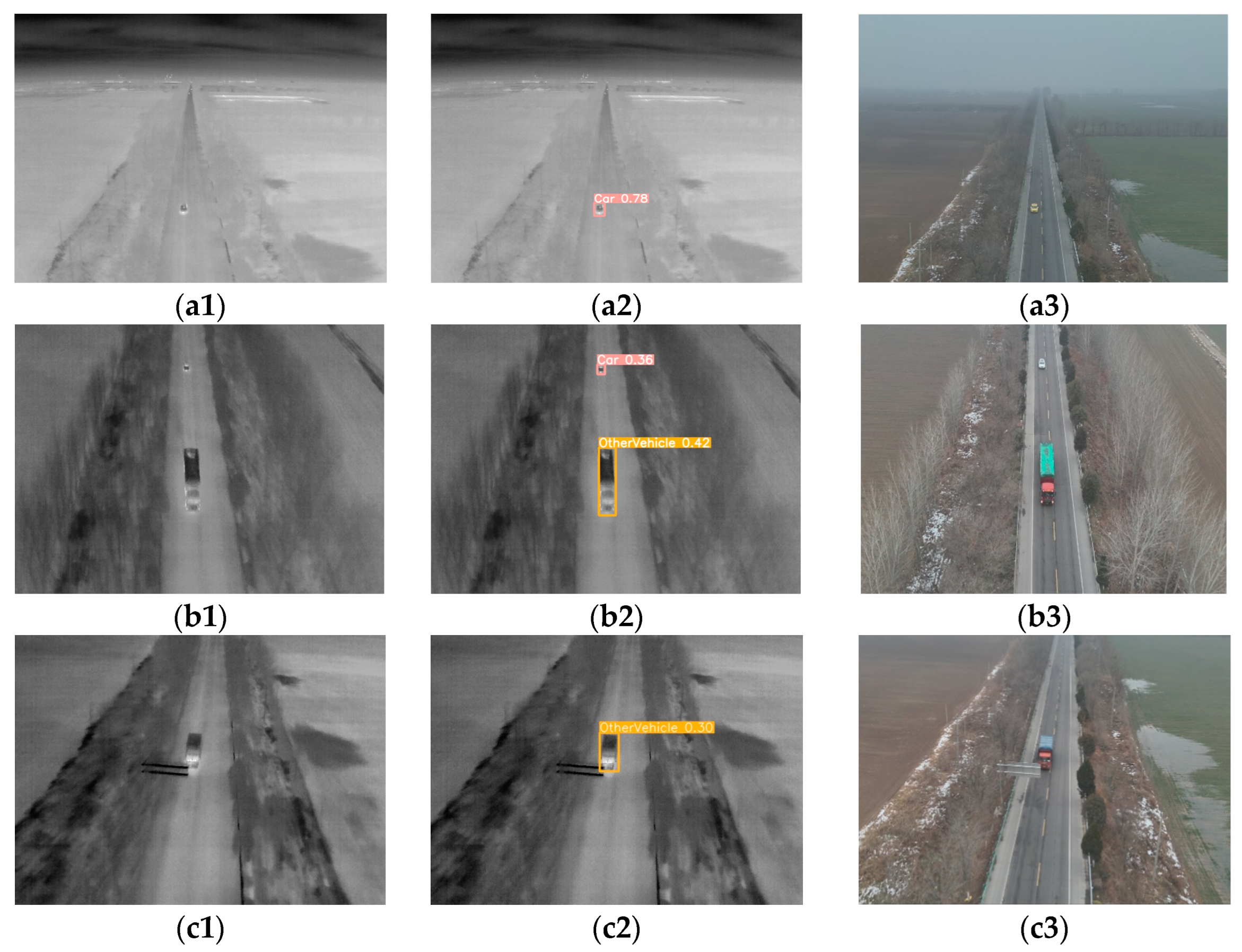

To observe the actual small road target detection performance of FEADG-net, the trained FEADG-net weights were used to predict small road objects in the test split of the IRTS-AG dataset, and the predictions were visualized, as shown in Figure 14.

Figure 14 shows that FEADG-net detected small road vehicle targets in the IRTS-AG dataset and distinguished between different types of small road targets in infrared images.

3.3. Ablation Experiments

To verify the effectiveness of the three new modules in the UAV infrared small target detection algorithm FEADG-net, namely FEST, DGneck, and the new loss function, ablation experiments were carried out by replacing or removing the corresponding modules. The results of the ablation experiments on the HIT-UAV dataset are shown in Table 4.

Table 4 shows that each of the three new modules in FEADG-net improved detection accuracy to a different degree: the new loss function contributed the most and DGneck the least. All of them had a positive effect, and the three modules together improved detection accuracy effectively.

3.4. Experiments in Actual Traffic Monitoring Scenarios

To verify the performance of our algorithm in real traffic scenarios, we detected small targets in our own UAV traffic surveillance data using FEADG-net weights trained on the HIT-UAV dataset. The data were captured with a DJI M3T on a foggy winter day; because of the high atmospheric humidity, the quality of the infrared images was relatively poor. Some of the detection results are shown in Figure 15.

Figure 15 shows that FEADG-net detected different types of small objects in real UAV traffic surveillance scenarios and could overcome environmental interference to some extent. The processing time was 11.6 ms per image for an input of shape (1, 3, 512, 640).
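As a rough worked conversion, assuming the 11.6 ms covers one complete inference pass and that the first dimension of the shape (1, 3, 512, 640) is the batch size (the usual batch, channel, height, width layout, so one three-channel 512 × 640 image per pass), the measured latency corresponds to a throughput of about

$$
\text{FPS} \approx \frac{1000\ \text{ms/s}}{11.6\ \text{ms/frame}} \approx 86.2\ \text{frames/s}
$$

The section does not state whether pre- and post-processing (for example, non-maximum suppression) are included in the 11.6 ms, so this figure is an estimate.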

4. Discussion

This section discusses the reasons behind the results and the mutual relationships between the datasets, the detection methods, and the comparative and ablation experiments.

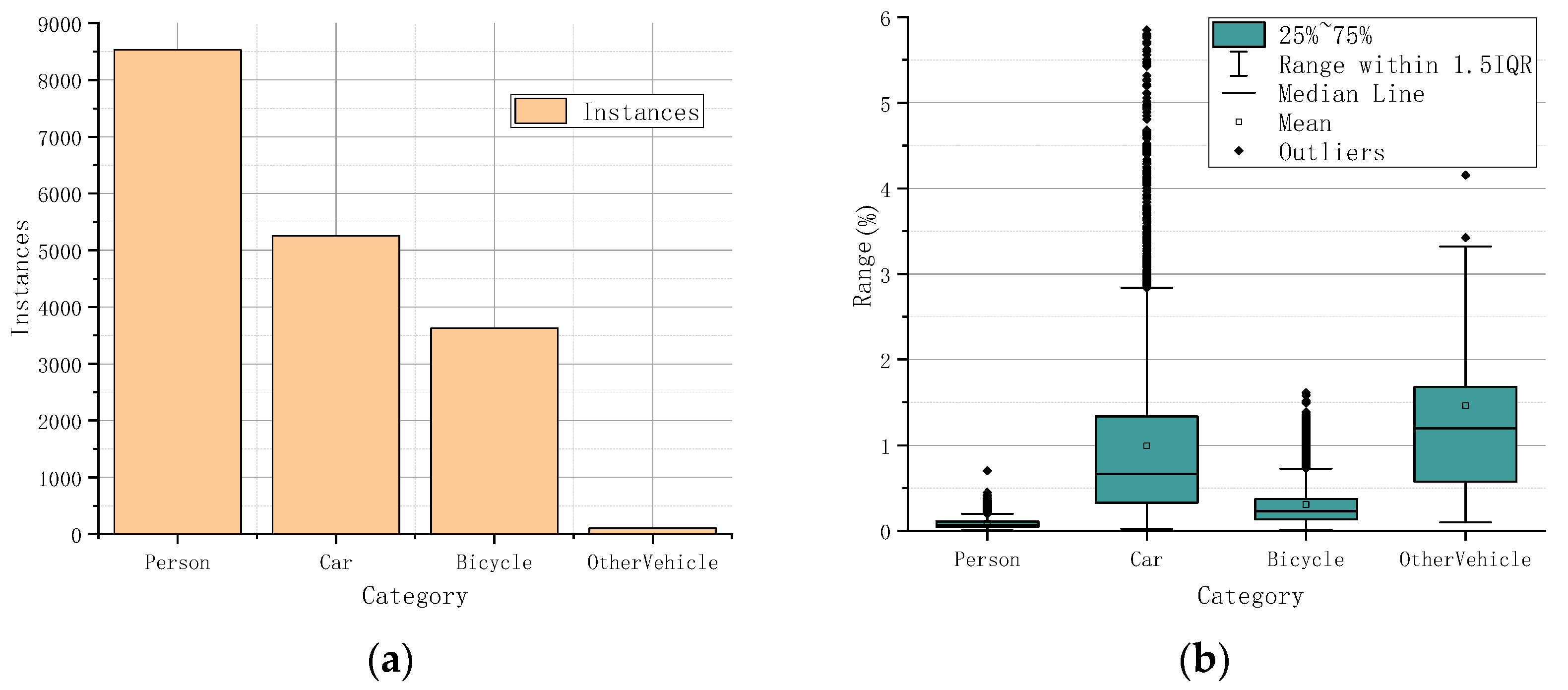

(1) Because the seventh and eighth categories in IRTS-AG have fewer instances than the other six categories, their detection accuracy was lower, which reduced the mAP50 of the algorithm. Therefore, to obtain better infrared small target detection accuracy, the numbers of samples per category in the training dataset should not differ significantly, so that a higher mAP50 can be obtained.

(2) In the IRTS-AG dataset, the difference between the object grey value and the background grey value is larger, which benefits target detection, whereas this difference is smaller in the HIT-UAV dataset. As a result, the AP50 of most categories in IRTS-AG (all except the last two) was higher than the AP50 of every category in HIT-UAV.

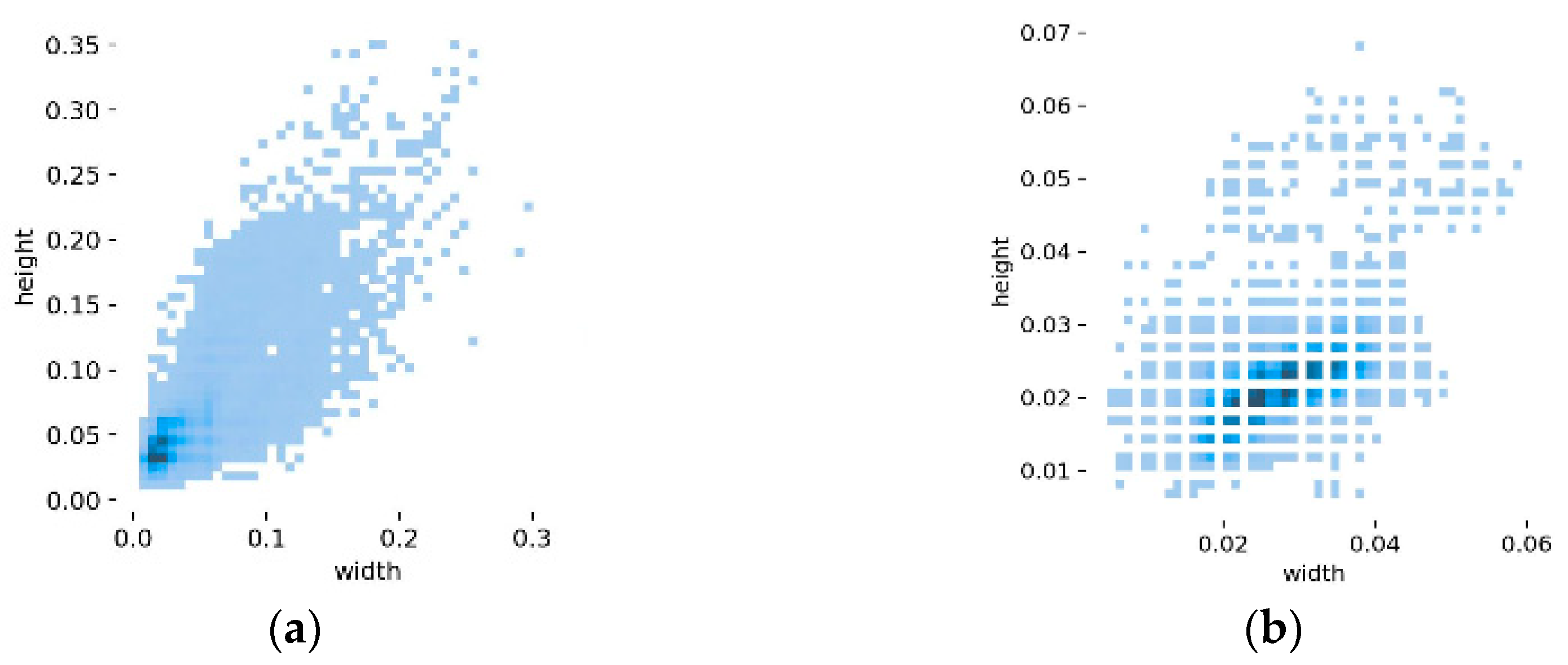

(3) The object instance size distribution is given in Figure 16, where the horizontal axis is the ratio of the instance width to the image width, the vertical axis is the ratio of the instance height to the image height, and each small blue square represents one target instance. Figure 16 shows that, in the IRTS-AG dataset, certain instance sizes along the horizontal and vertical axes do not occur. This is because IRTS-AG consists of 87 video sequences and many of its images are consecutive frames from the same video, so its instance sizes are less random than those of the HIT-UAV dataset.

(4) Figures 11 and 13 show that dataset size affected the model training process to some degree. When the model was trained on a dataset with fewer sample images, its mAP50 curve fluctuated more; when the dataset had more sample images, the curve fluctuated less.

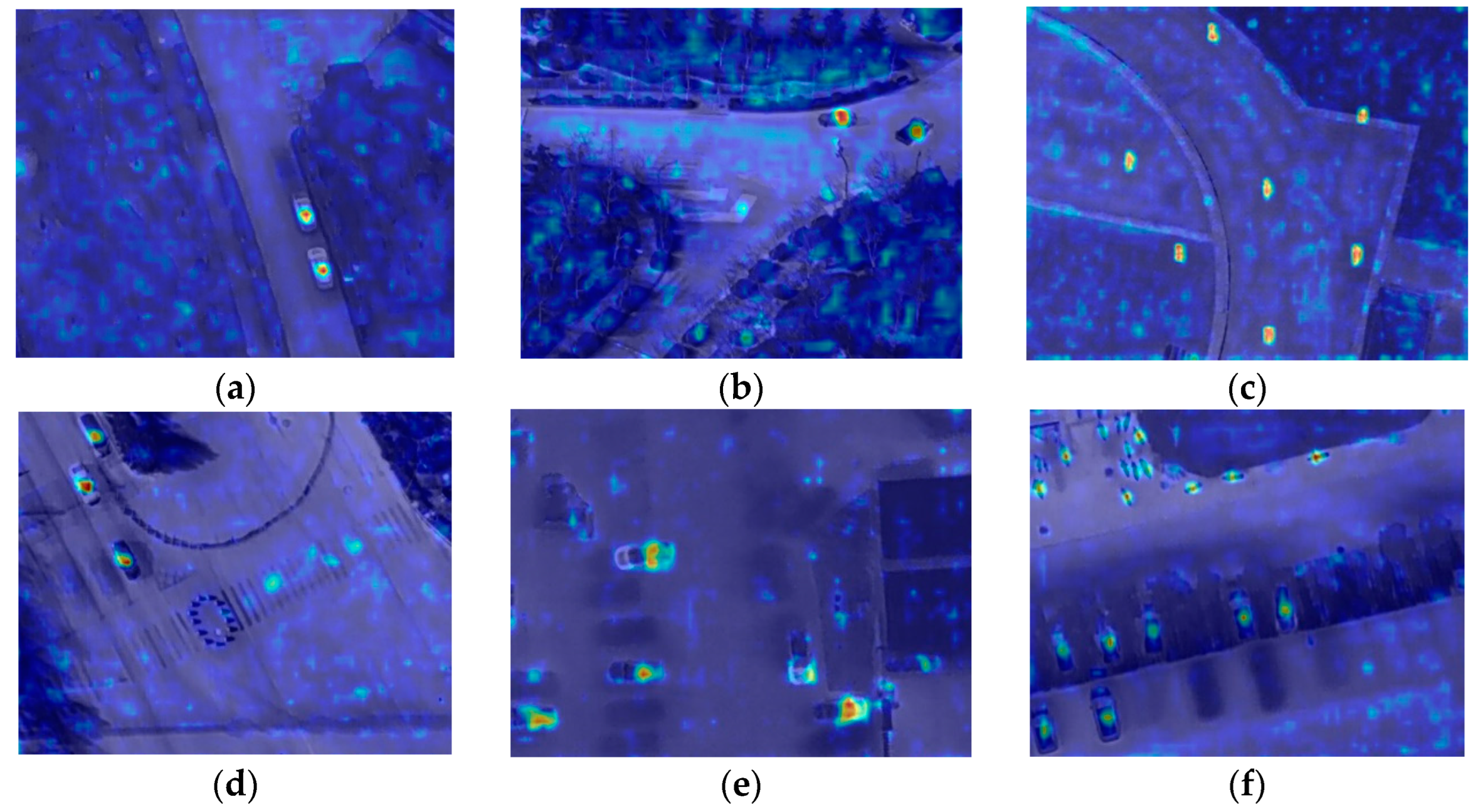

(5) To understand how the FEADG-net model detects targets in different datasets, a class activation map was used to visualize the gradient information of the object recognition algorithm, showing where FEADG-net responds when detecting small road targets. The class activation map for the HIT-UAV dataset is shown in Figure 17.

In the class activation map, cooler colors (blue) correspond to smaller gradient values and a lower probability of detecting a target, and warmer colors (yellow) correspond to larger gradient values and a higher probability, as shown in Figure 17. The areas corresponding to targets in the UAV infrared images have large gradient values, whereas the background areas have small ones, indicating that FEADG-net concentrates on the different targets rather than the background in the HIT-UAV data.
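Item (1) recommends keeping per-category sample counts comparable. A simple check before training is to count instances per category and flag categories far below the largest one. The sketch below does this; the function name, the 0.5 threshold, and the example labels are hypothetical and are not taken from IRTS-AG or HIT-UAV.

```python
from collections import Counter
from typing import Dict, Iterable


def underrepresented_classes(labels: Iterable[str], min_ratio: float = 0.5) -> Dict[str, float]:
    """Map each class whose instance count is below min_ratio times the largest
    class count to its count ratio (count / largest count)."""
    counts = Counter(labels)
    if not counts:
        return {}
    largest = max(counts.values())
    return {cls: n / largest for cls, n in counts.items() if n / largest < min_ratio}


# Hypothetical instance labels, for illustration only.
labels = ["car"] * 8 + ["person"] * 6 + ["bicycle"] * 2
print(underrepresented_classes(labels))  # {'bicycle': 0.25}
```

Flagged categories are candidates for collecting more samples or for augmentation; the threshold itself is a tuning choice, not a value from this study.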
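The axes in Figure 16, described in item (3), are per-instance width and height normalized by the image size. A minimal sketch of that computation from pixel boxes follows. The function name and the (x1, y1, x2, y2) pixel layout are assumptions; YOLO-format label files already store normalized widths and heights directly.

```python
from typing import List, Sequence, Tuple


def normalized_sizes(
    boxes: Sequence[Tuple[float, float, float, float]], img_w: int, img_h: int
) -> List[Tuple[float, float]]:
    """Return (instance width / image width, instance height / image height) per box."""
    return [((x2 - x1) / img_w, (y2 - y1) / img_h) for x1, y1, x2, y2 in boxes]


# A hypothetical 32 x 16 pixel vehicle in a 640 x 512 frame.
print(normalized_sizes([(100, 100, 132, 116)], img_w=640, img_h=512))  # [(0.05, 0.03125)]
```

Plotting these pairs as a scatter of points reproduces the kind of distribution shown in Figure 16; gaps in the scatter reveal sizes that the dataset never contains.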
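Item (5) does not name the class activation method. Grad-CAM is a common way to visualize gradient information like this, so the sketch below assumes it. It weights each of the K feature maps of a chosen layer by the spatial average of the gradient of the target score with respect to that map, sums the weighted maps, keeps only positive values, and scales the result to [0, 1], which can then be rendered from cool (0) to warm (1) colors. Extracting activations and gradients from FEADG-net is not shown; the plain nested lists stand in for those tensors, and the function name is hypothetical.

```python
from typing import List

Map = List[List[float]]  # one H x W feature map


def grad_cam(activations: List[Map], gradients: List[Map]) -> Map:
    """Grad-CAM: sum_k alpha_k * A_k, where alpha_k is the spatial mean of
    d(score)/dA_k, followed by ReLU and scaling by the maximum value."""
    h, w = len(activations[0]), len(activations[0][0])
    # alpha_k: global-average-pooled gradient of channel k.
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    cam = [[0.0] * w for _ in range(h)]
    for alpha, act in zip(alphas, activations):
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * act[i][j]
    # ReLU keeps only locations that increase the target score.
    cam = [[max(v, 0.0) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# One 2 x 2 channel with uniform positive gradients: the map follows the activations.
heat = grad_cam([[[0.0, 1.0], [2.0, 3.0]]], [[[1.0, 1.0], [1.0, 1.0]]])
print(heat)  # strongest response at the bottom-right cell
```

In the paper's terms, a target region that appears yellow corresponds to cells near 1 in such a map, and background that appears blue corresponds to cells near 0.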

5. Conclusions

With the rapid development of UAV infrared image processing technology, small road target detection based on UAV infrared remote sensing images has become a hot research topic. To address the low contrast, poor edge and texture information, and poor exploitation of target features in UAV infrared remote sensing images of complex scenes, this study designed FEADG-net, based on the network structure and feature processing advantages of YOLOv9, to detect small targets in these images. FEADG-net consists of three parts: a backbone, a neck, and a head. The backbone combines a feature-enhancement network with the Swin Transformer, which effectively extracts the feature information of small targets. The neck, DGneck, draws on the GELAN structure of YOLOv9 to prevent overly weak gradients in the deep layers of the network and to ensure effective fusion of different target features. An InnerIoU calculation with automatically adjusted parameters was proposed to improve the robustness of the head's loss function and the ability of FEADG-net to detect small road targets. Finally, comparative and ablation experiments were carried out on the HIT-UAV and IRTS-AG datasets. The comparative experiments analyzed the detection accuracy of FEADG-net against YOLOv3, YOLOv5, YOLOv8, YOLOv9, and RTDETR to validate its effectiveness, and the ablation experiments verified the usefulness of FEST, DGneck, and the new loss function. The experimental results showed that the small target detection accuracy of FEADG-net on UAV infrared remote sensing images exceeded 90%, higher than that of previous methods, while meeting real-time requirements.

Although the approach can detect eight categories of small infrared road objects, including very small targets, this is still not enough to meet the need to detect traffic targets in finer-grained categories. In future work, the number of categories will be increased, and FEADG-net will be optimized for the practical needs of traffic management departments. Currently, the FEADG-net model can be used for road target detection in some traffic scenarios, and it also has reference value for search and rescue, wildlife protection, and defense.