Detection Probability and Bias in Machine-Learning-Based Unoccupied Aerial System Non-Breeding Waterfowl Surveys

Abstract

1. Introduction

2. Methods

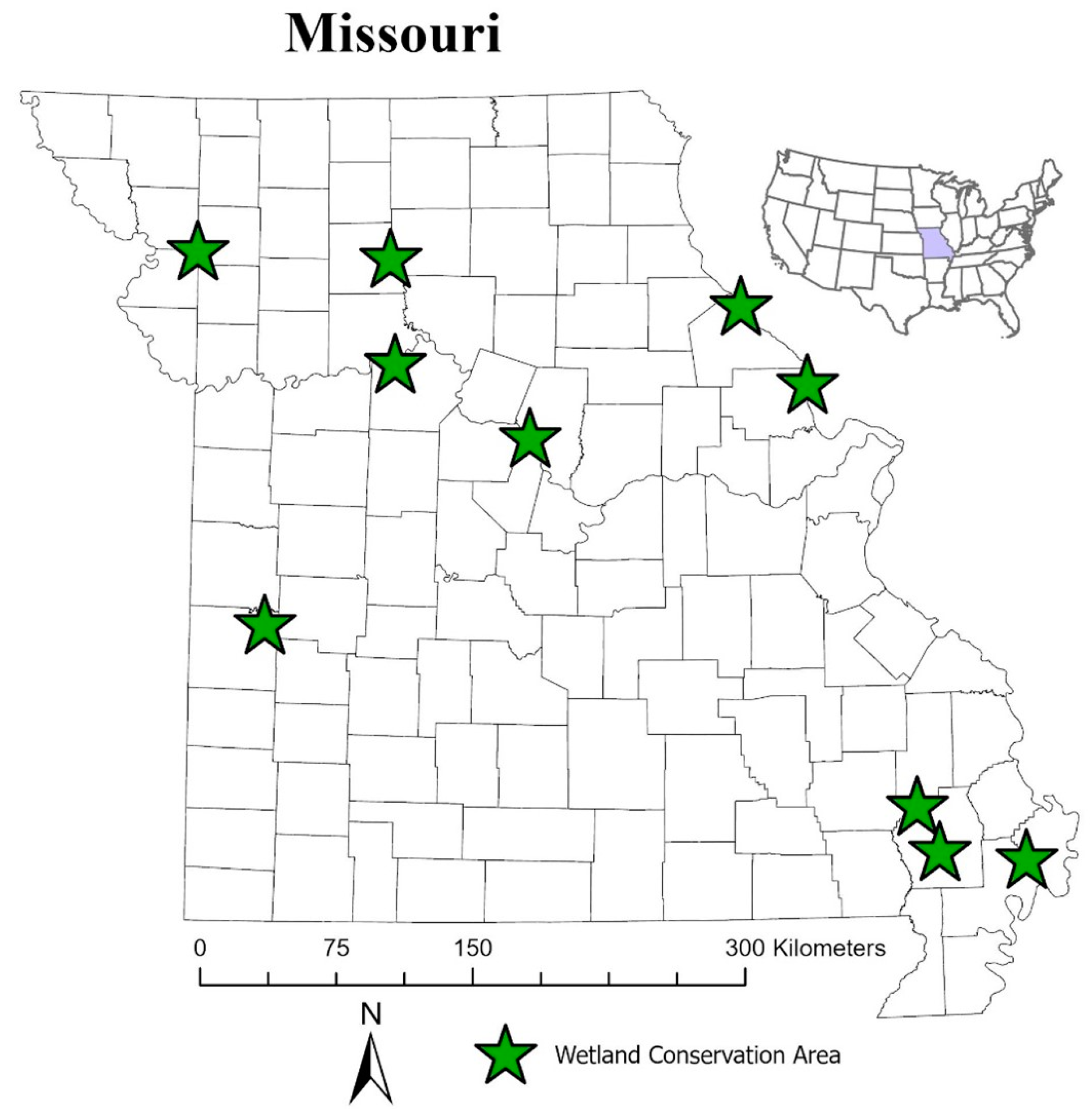

2.1. Study Area

2.2. Availability Bias

2.3. Perception Bias

2.4. Correcting Image Counts

3. Results

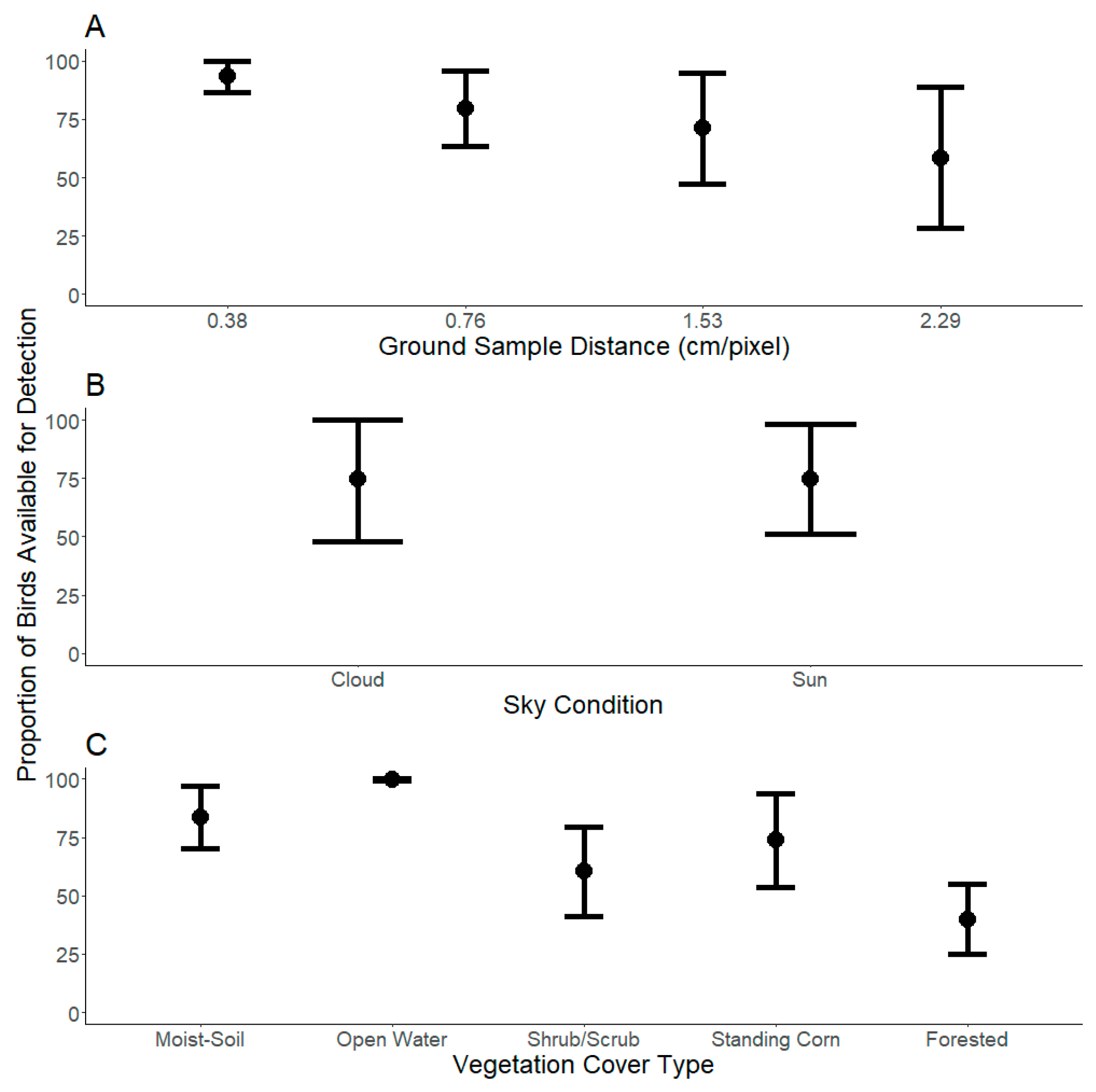

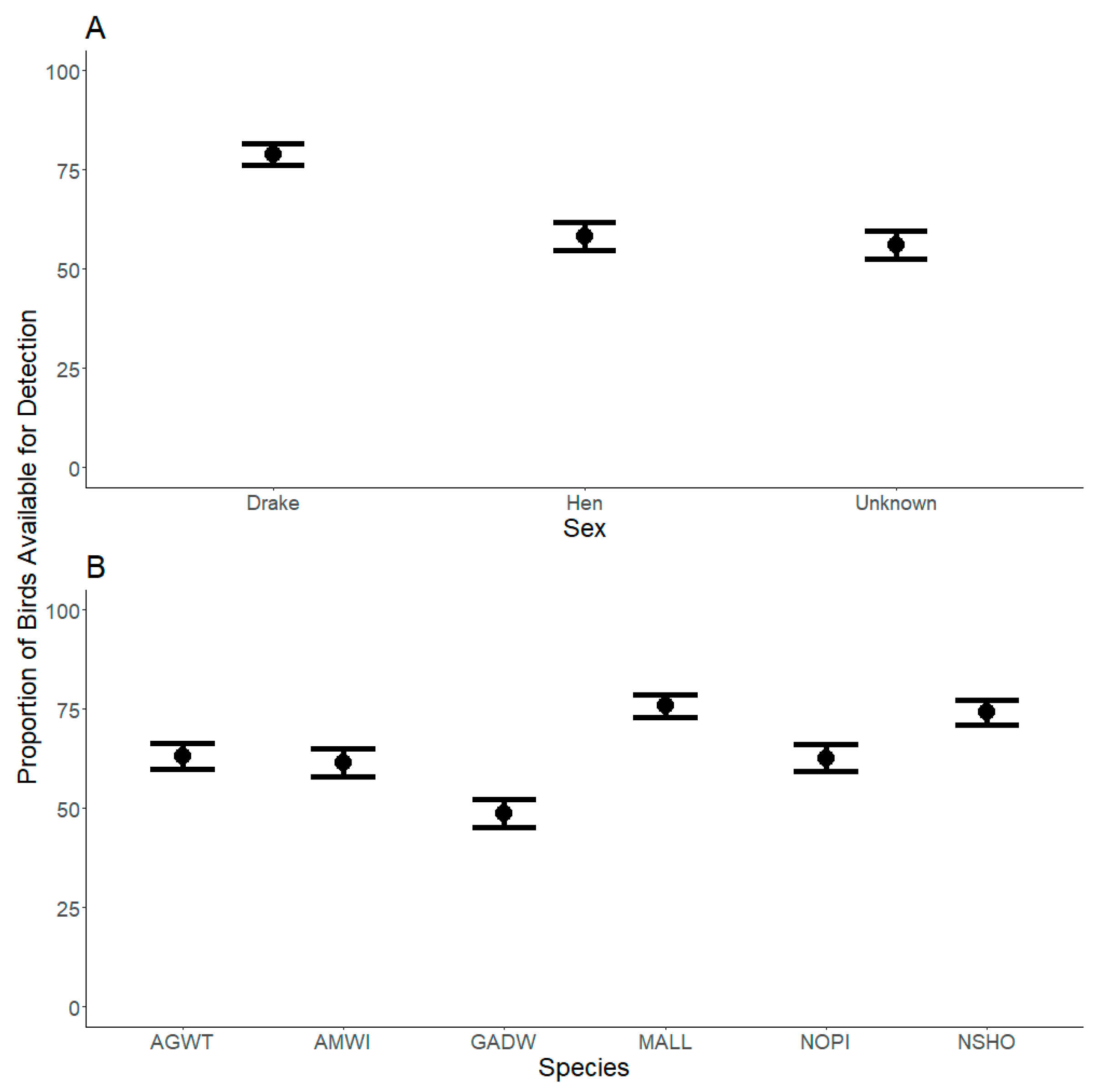

3.1. Availability Bias

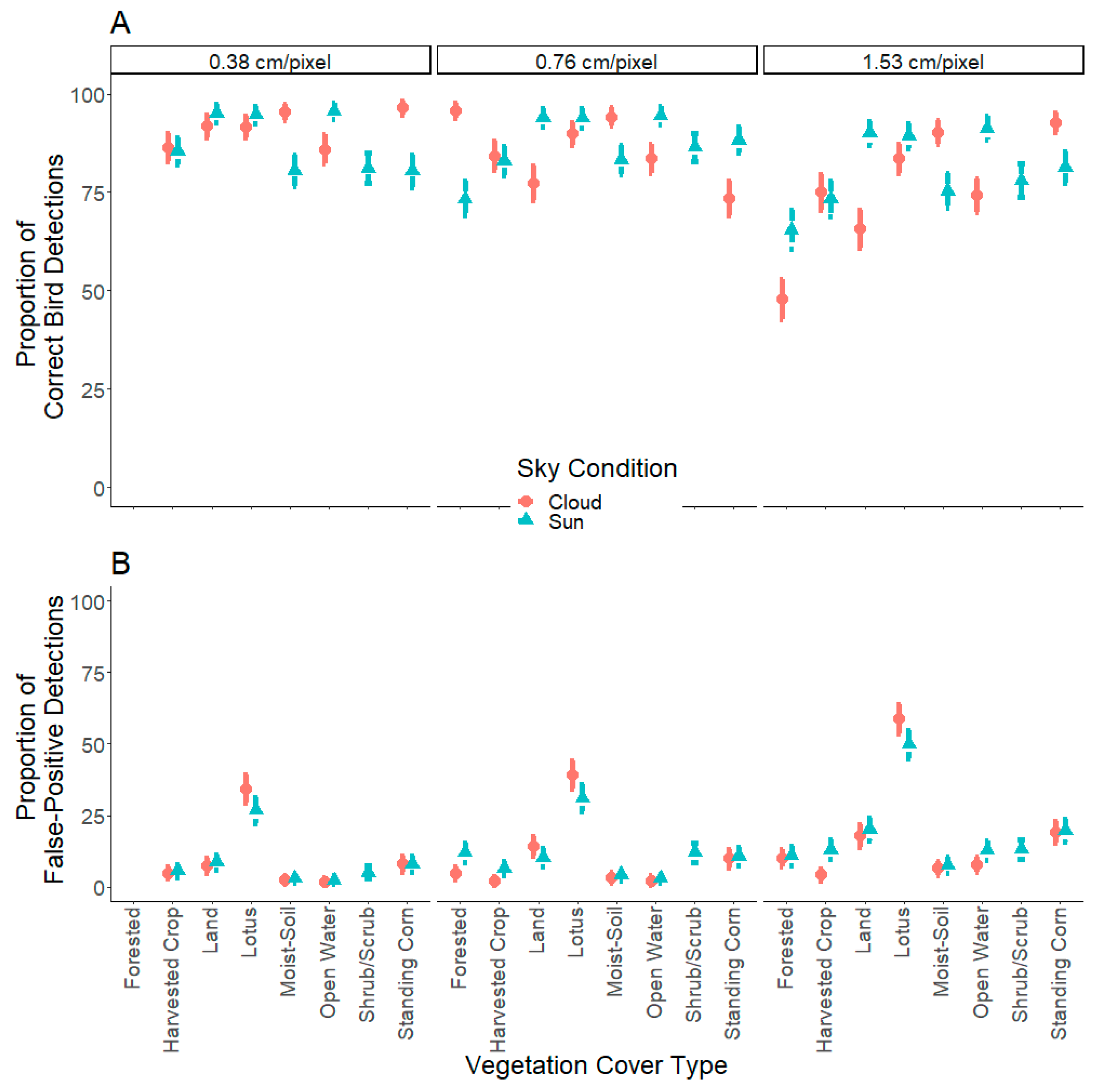

3.2. Perception Bias

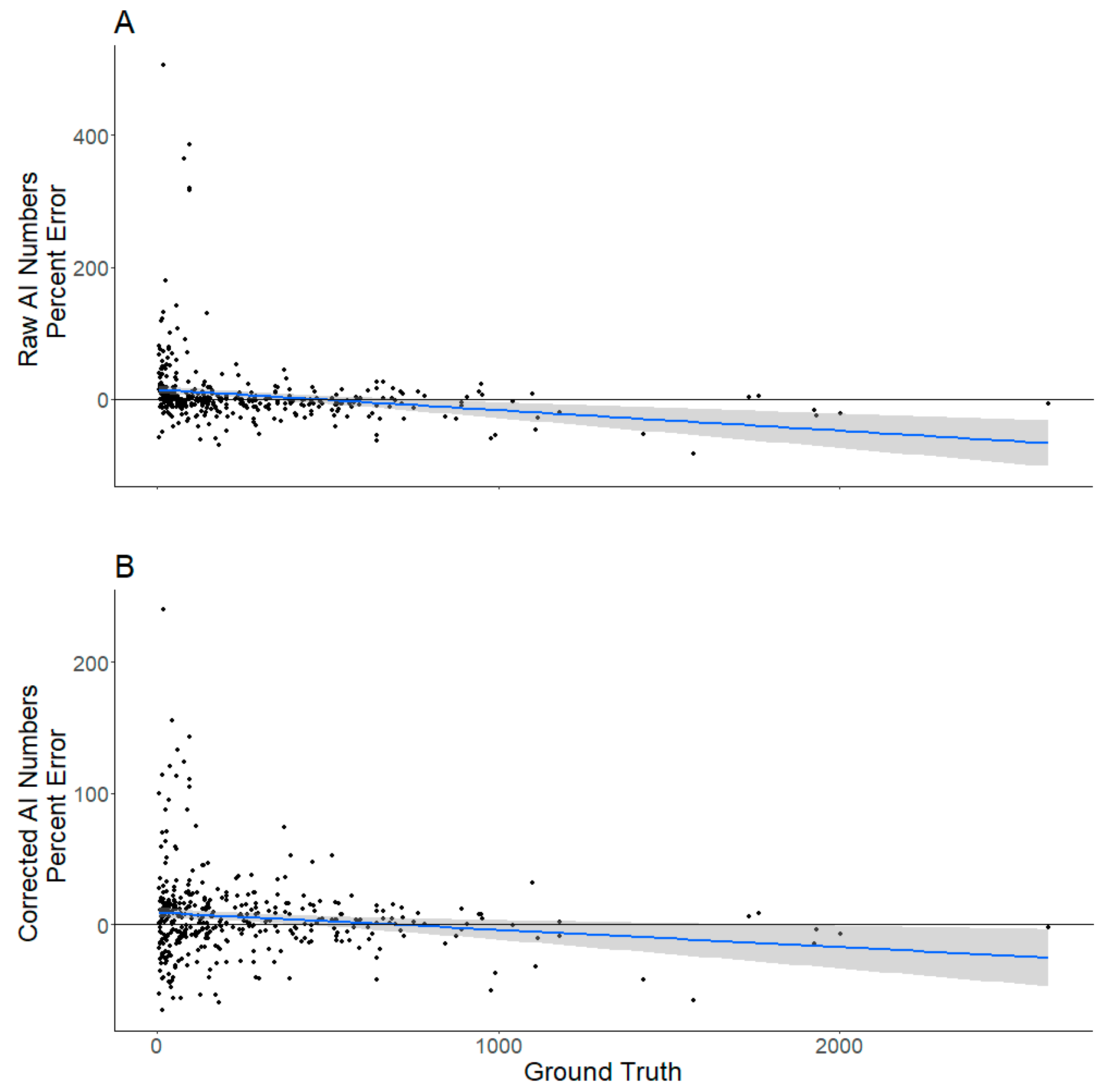

3.3. Correcting Image Counts

4. Discussion

4.1. Availability Bias

4.2. Perception Bias

4.3. Correcting Image Counts

4.4. Management Implications

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dependent Variable | Model | Covariates | WAIC | ΔWAIC |
|---|---|---|---|---|
| Overall Waterfowl Availability | Interaction Model | Vegetation Cover Type × Sky Condition × GSD | 337.0 | 0.0 |
| Overall Waterfowl Availability | Additive Model | Vegetation Cover Type + Sky Condition + GSD | 450.4 | 113.4 |
| Overall Waterfowl Availability | Null Model | None | 1937.9 | 1600.9 |
| Species and Sex Identification Availability | Interaction Model | Species × Sex × Vegetation Cover Type × Sky Condition × GSD | 1502.2 | 0.0 |
| Species and Sex Identification Availability | Additive Model | Species + Sex + Vegetation Cover Type + Sky Condition + GSD | 1668.1 | 165.9 |
| Species and Sex Identification Availability | Null Model | Species + Sex | 4380.4 | 2878.1 |

| Dependent Variable | Model | Covariates | WAIC | ΔWAIC |
|---|---|---|---|---|
| Probability of Correct Waterfowl Detections | Interaction Model | Vegetation Cover Type × Sky Condition × GSD | 4804.2 | 0.0 |
| Probability of Correct Waterfowl Detections | Additive Model | Vegetation Cover Type + Sky Condition + GSD | 6343.9 | 1539.7 |
| Probability of Correct Waterfowl Detections | Null Model | None | 7833.6 | 3029.4 |
| Probability of False Positive Waterfowl Detections | Interaction Model | Vegetation Cover Type × Sky Condition × GSD | 2939.3 | 0.0 |
| Probability of False Positive Waterfowl Detections | Additive Model | Vegetation Cover Type + Sky Condition + GSD | 3765.0 | 825.7 |
| Probability of False Positive Waterfowl Detections | Null Model | None | 7215.4 | 4276.1 |
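The ΔWAIC column in the model-selection tables above appears to follow the usual convention: each model's WAIC minus the lowest WAIC among the candidate models for the same dependent variable. The following is a minimal Python sketch of that arithmetic, not the authors' analysis code. The WAIC values are copied from the tables; the dictionary layout and the `delta_waic` helper are illustrative assumptions.

```python
# WAIC values copied from the model-selection tables above.
# The nesting (dependent variable -> model -> WAIC) is an illustrative layout.
waic = {
    "Overall Waterfowl Availability": {
        "Interaction": 337.0, "Additive": 450.4, "Null": 1937.9,
    },
    "Species and Sex Identification Availability": {
        "Interaction": 1502.2, "Additive": 1668.1, "Null": 4380.4,
    },
    "Probability of Correct Waterfowl Detections": {
        "Interaction": 4804.2, "Additive": 6343.9, "Null": 7833.6,
    },
    "Probability of False Positive Waterfowl Detections": {
        "Interaction": 2939.3, "Additive": 3765.0, "Null": 7215.4,
    },
}


def delta_waic(models):
    """Return each model's WAIC minus the smallest WAIC in the candidate set."""
    best = min(models.values())
    return {name: round(value - best, 1) for name, value in models.items()}


# Hypothetical helper usage: compute deltas per dependent variable.
deltas = {dv: delta_waic(models) for dv, models in waic.items()}

for dv, d in deltas.items():
    # The model with a delta of 0.0 is the best-supported one in its set.
    top = min(d, key=d.get)
    print(f"{dv}: top model = {top}, deltas = {d}")
```

Recomputing from the rounded table values reproduces the reported ΔWAIC entries, with one exception: the null model for species and sex identification availability comes out 0.1 higher than the tabulated 2878.1. A plausible explanation is that the published differences were taken from unrounded WAIC values. In every candidate set the interaction model has the lowest WAIC.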

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Viegut, R.; Webb, E.; Raedeke, A.; Tang, Z.; Zhang, Y.; Zhai, Z.; Liu, Z.; Wang, S.; Zheng, J.; Shang, Y. Detection Probability and Bias in Machine-Learning-Based Unoccupied Aerial System Non-Breeding Waterfowl Surveys. Drones 2024, 8, 54. https://doi.org/10.3390/drones8020054
