An AI-Based Deep Learning with K-Mean Approach for Enhancing Altitude Estimation Accuracy in Unmanned Aerial Vehicles

Abstract

1. Introduction

2. Materials and Research Methodology

2.1. Materials and Methods

- Flight Control Unit (FCU): Pixhawk (an internationally developed open-hardware project) with a 32-bit ARM Cortex-M4 core with Floating Point Unit (FPU) (168 MHz, 256 KB RAM, 2 MB Flash). Sensors: MPU6000 primary accelerometer and gyroscope (InvenSense [now part of TDK Corporation], a U.S.-based company, San Jose, CA, USA), ST Micro 16-bit gyroscope, ST Micro 14-bit accelerometer/compass, MEAS barometer

- Interfaces: 5× UART serial ports, Spektrum DSM/DSM2/DSM-X Satellite input, Futaba S.BUS input, PPM sum signal, RSSI input, I2C, SPI, 2× CAN, USB

- Dimensions: 38 g weight, 50 mm width, 15.5 mm height, 81.5 mm length

- Processor: STM32F412

- GNSS Receiver: Ublox M9N

- Supported GNSS Bands: GPS/QZSS L1 C/A, GLONASS L1OF, BeiDou B1I, Galileo E1B/C, SBAS L1 C/A

- Navigation Update Rate: Up to 25 Hz (RTK)

- Position Accuracy: Up to 1.5 m

- Dimensions: 60 × 60 × 16 mm, 33 g weight

- Motors: 4× XRotor Pro 50A, 380 KV

- Propellers: 15” Carbon Fiber, 12 mm hole size

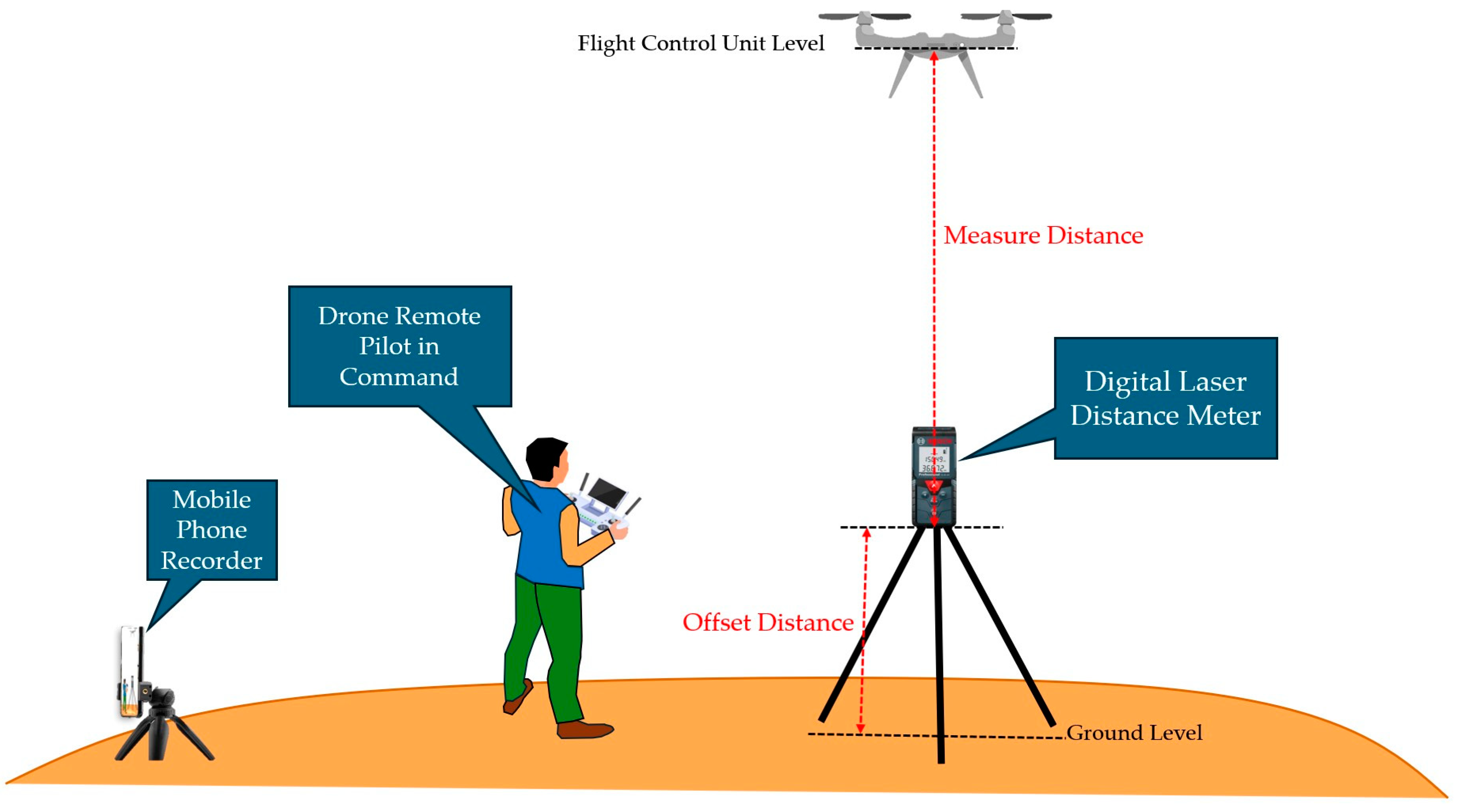

- Digital Laser Distance Meter (Figure 3)

- Measurement Range: 120 m

- Accuracy: ±3 mm

- Laser Class: Class II

2.1.1. Preparation of Experimental Altitude Ranges for Comprehensive UAV Performance Analysis

- Cluster Assignment Equation:

- Centroid Update Equation:

- Objective Function:
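The three items above name the standard steps of k-means clustering. As a minimal sketch, assuming the usual Lloyd iteration with squared Euclidean distance on one-dimensional altitude values, the steps could look as follows. The function names, the starting centroids, and the sample altitudes are hypothetical and are not taken from the study.

```python
def assign_clusters(points, centroids):
    """Cluster assignment: each point goes to its nearest centroid."""
    return [min(range(len(centroids)), key=lambda j: (x - centroids[j]) ** 2)
            for x in points]


def update_centroids(points, labels, centroids):
    """Centroid update: each centroid becomes the mean of its members."""
    new_centroids = []
    for j, old in enumerate(centroids):
        members = [x for x, lab in zip(points, labels) if lab == j]
        # Keep the previous centroid if a cluster ends up empty.
        new_centroids.append(sum(members) / len(members) if members else old)
    return new_centroids


def objective(points, labels, centroids):
    """Objective: within-cluster sum of squared distances."""
    return sum((x - centroids[lab]) ** 2 for x, lab in zip(points, labels))


def kmeans(points, centroids, max_iter=100):
    """Alternate assignment and update until the labels stop changing."""
    labels = assign_clusters(points, centroids)
    for _ in range(max_iter):
        centroids = update_centroids(points, labels, centroids)
        new_labels = assign_clusters(points, centroids)
        if new_labels == labels:
            break
        labels = new_labels
    return labels, centroids


# Hypothetical altitude readings in metres (illustrative only).
altitudes = [1.0, 1.2, 0.8, 10.0, 10.5, 9.5, 20.0, 19.0, 21.0]
labels, centroids = kmeans(altitudes, centroids=[0.0, 12.0, 25.0])
wcss = objective(altitudes, labels, centroids)
```

The same `objective` value, evaluated for several candidate numbers of clusters, is the quantity an elbow plot would show.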

2.1.2. Preparation for Testing the Digital Laser Distance Meter

2.2. Deep Learning Regression-Based Models

- 1. Layer-Specific Equations:

- First Hidden Layer: $Z_1 = W_1 X + B_1$, where $W_1$ and $B_1$ represent the weights and biases of the first hidden layer and $X$ is the input feature vector.

- Second Hidden Layer: $Z_2 = W_2 \tanh(Z_1) + B_2$, where $W_2$ and $B_2$ are the weights and biases of the second hidden layer, and tanh is applied as the activation function for the output of the first layer.

- 2. Output Layer Equations:

- 3. Activation Functions:
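The layer structure listed above can be illustrated with a small forward pass. This is a sketch only: it assumes the configuration stated elsewhere in the paper (six inputs, two hidden layers of 100 tanh nodes, one ReLU output), while the weight initialisation, the helper names, and the sample input are assumptions made for illustration.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (initialisation is an assumption)."""
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    B = [0.0] * n_out
    return W, B


def affine(W, B, x):
    """Compute W x + B for a single input vector x."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(W, B)]


def forward(params, x):
    """Forward pass: tanh hidden layers followed by a single ReLU output."""
    (W1, B1), (W2, B2), (W3, B3) = params
    h1 = [math.tanh(z) for z in affine(W1, B1, x)]
    h2 = [math.tanh(z) for z in affine(W2, B2, h1)]
    z3 = affine(W3, B3, h2)[0]
    return max(0.0, z3)  # ReLU keeps the predicted altitude non-negative


rng = random.Random(0)
params = [init_layer(6, 100, rng), init_layer(100, 100, rng), init_layer(100, 1, rng)]

# Hypothetical normalised feature vector with six entries.
x = [0.5, 0.4, 0.6, 0.5, 0.45, 0.55]
y_hat = forward(params, x)
```

With untrained random weights the output has no physical meaning; the sketch only shows how the two tanh layers and the ReLU output compose.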

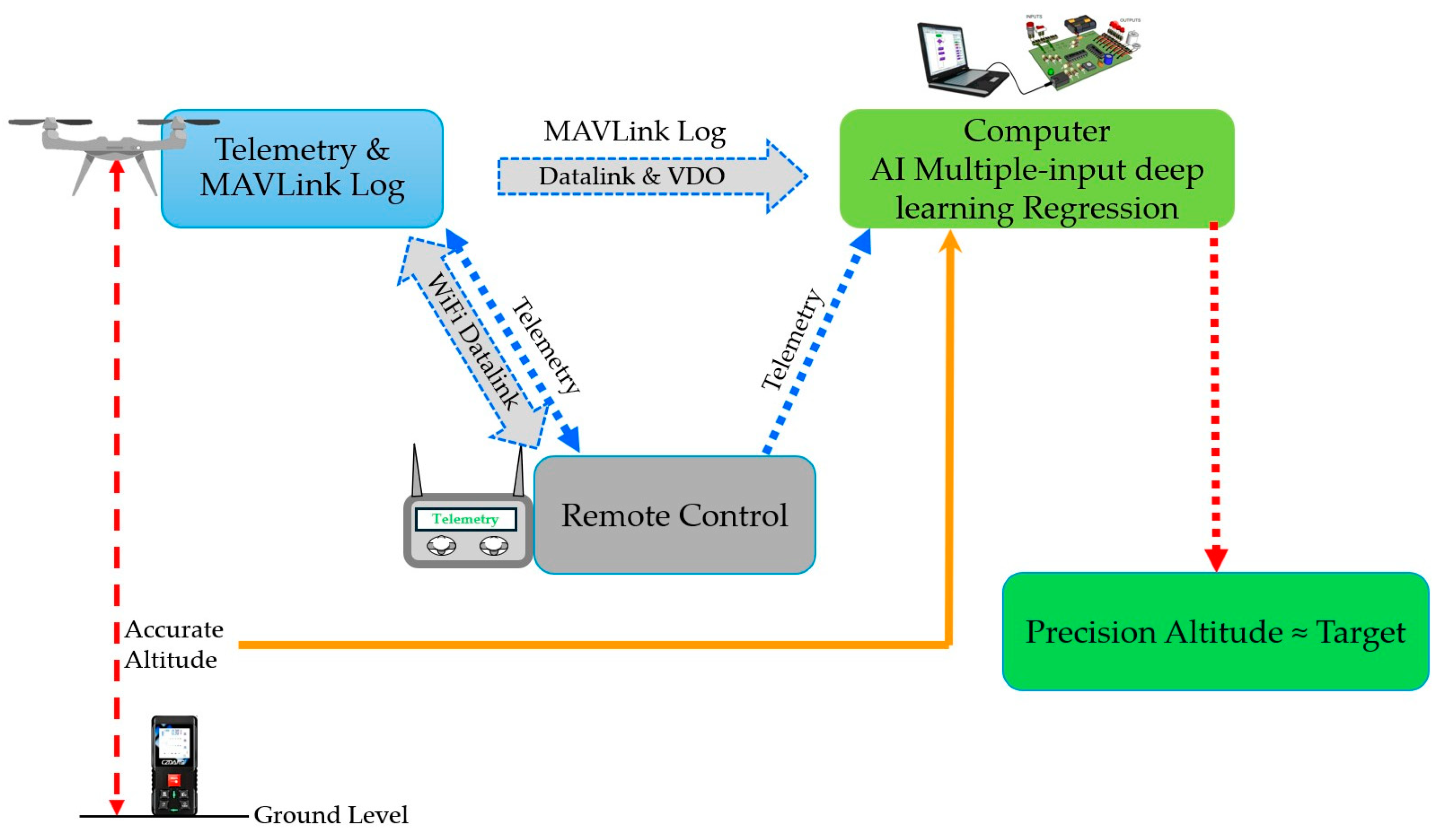

3. Experimental Setup and Data Analysis

3.1. Experimental Design, Instrumentation Configuration

3.1.1. Area Setup

3.1.2. UAVs Setup

3.2. Data Analysis

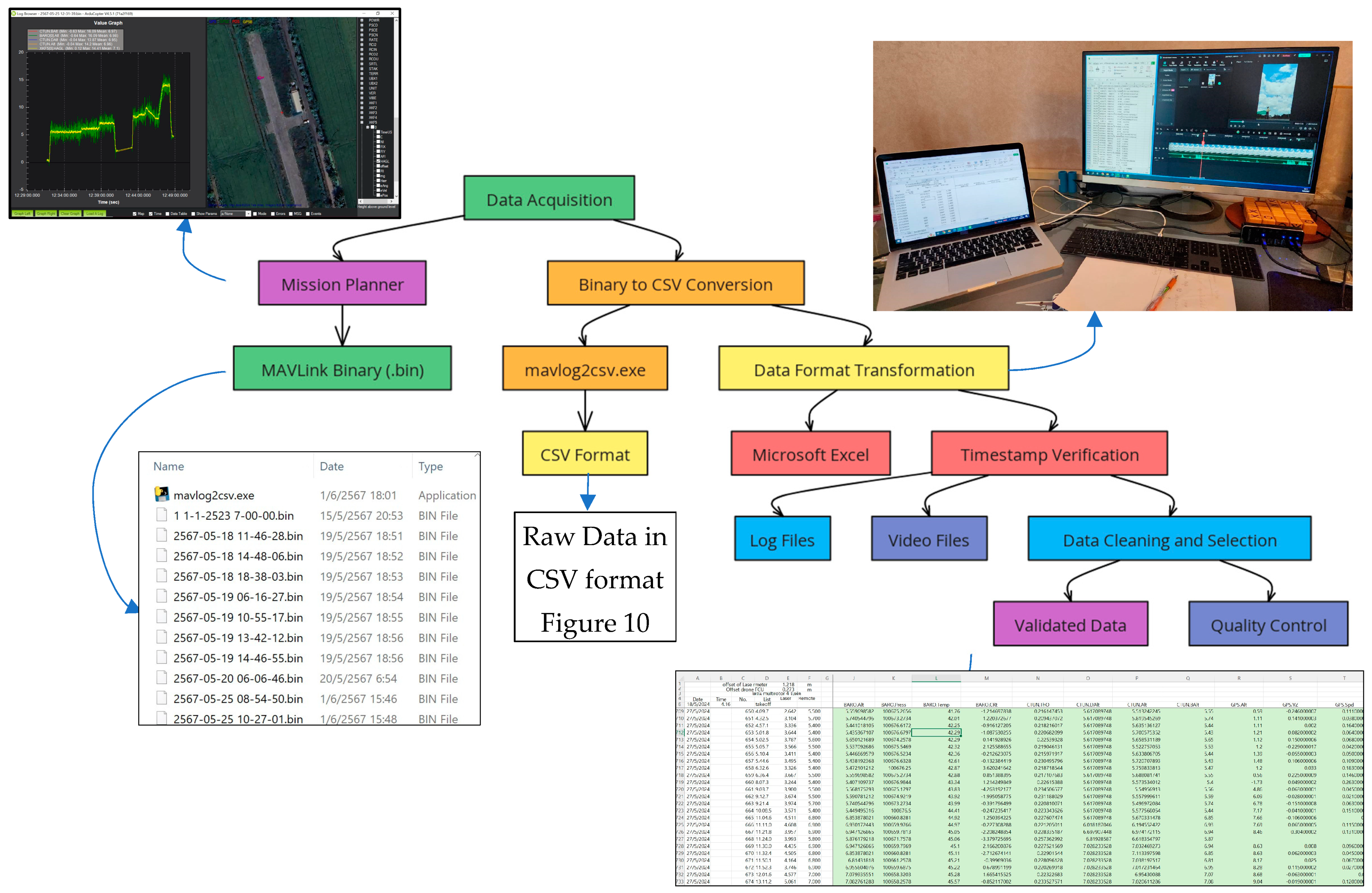

3.2.1. Data Acquisition

3.2.2. Data Cleaning and Feature Selection

- Remote Control Altitude

- Description: Altitude displayed on the UAV’s remote control, calculated by the onboard Flight Control Unit (FCU) using data from barometric sensors, IMU, and GPS.

- Relevance: Provides the operator with a real-time, integrated altitude estimate, essential for manual adjustments, especially in low-visibility or GPS-limited scenarios.

- Example: During flight, the remote control combines data from the barometer, IMU, and GPS, enabling altitude monitoring and adjustments to maintain the UAV’s height above ground. For instance, if the UAV ascends beyond the desired altitude, the operator can adjust controls to descend back to the set level.

- BARO.Alt (Barometric Altitude)

- Description: Altitude based on barometric pressure readings, which decrease predictably with increasing altitude.

- Relevance: Crucial for altitude stability, especially in variable weather conditions, by providing a consistent atmospheric pressure reference.

- Example: As the UAV ascends, barometric readings decrease, indicating an increase in altitude. Conversely, if the UAV descends, barometric readings increase, helping to confirm the reduction in altitude. This trend stabilizes altitude estimation even in windy conditions.

- CTUN.DAlt (Desired Altitude for Control)

- Description: The target altitude set by the operator or flight controller.

- Relevance: Serves as a benchmark for altitude correction, enabling the control system to adjust the UAV’s position if deviations from the target occur.

- Example: If programmed to hold 10 m, the UAV corrects any deviation from this set altitude. For instance, if a gust of wind pushes it below 10 m, it climbs back to the desired altitude.

- CTUN.Alt (Reference Altitude for Adjustment)

- Description: A dynamically updated altitude base level for comparison with the desired altitude.

- Relevance: Acts as a feedback loop, helping correct altitude changes in response to environmental factors.

- Example: During ascent, this reference helps stabilize the climb by correcting for altitude shifts caused by wind. Likewise, during descent, it provides a stable reference point to ensure controlled lowering, avoiding sudden drops or altitude fluctuations.

- CTUN.BAlt (Barometer-Based Control Altitude)

- Description: Altitude calculated purely from barometric readings, which the control system uses to ensure stability.

- Relevance: Ensures accurate altitude holding, especially at high altitudes or in conditions where GPS signals are unreliable.

- Example: During high-altitude operations, the UAV relies on this reading to maintain stability when GPS accuracy is compromised. If the UAV descends unexpectedly, barometric control altitude helps stabilize the descent until the target altitude is regained.

- XKF5.HAGL (Height Above Ground Level from EKF3)

- Description: Height above ground level, estimated by the Extended Kalman Filter (EKF3), considering immediate terrain conditions.

- Relevance: Vital for terrain navigation, especially in areas with varied topography, by maintaining a safe distance above the ground.

- Example: When flying over hilly terrain, this measurement ensures consistent altitude above ground, preventing possible collisions with obstacles. For instance, if the UAV ascends over rising ground, HAGL helps it maintain safe clearance, while during descent over descending terrain, it ensures the UAV keeps the necessary distance to avoid collisions.

- Target: Reference altitude from digital laser distance meter
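As an illustration of how the six features and the laser-based target listed above might be assembled from log records, the sketch below drops incomplete rows and scales each feature column. The keys `RC.Alt` and `Laser.Alt`, the sample values, and the choice of min-max scaling are assumptions; the paper states only that the data were cleaned and normalized.

```python
# Six input features and the target; "RC.Alt" and "Laser.Alt" are hypothetical keys.
FEATURES = ["RC.Alt", "BARO.Alt", "CTUN.DAlt", "CTUN.Alt", "CTUN.BAlt", "XKF5.HAGL"]
TARGET = "Laser.Alt"


def clean_rows(rows):
    """Keep only rows in which every feature and the target are present."""
    needed = FEATURES + [TARGET]
    return [r for r in rows if all(r.get(k) is not None for k in needed)]


def split_features_target(rows):
    """Return the feature matrix X and the target vector y."""
    X = [[float(r[k]) for k in FEATURES] for r in rows]
    y = [float(r[TARGET]) for r in rows]
    return X, y


def min_max_normalize(X):
    """Scale each column to [0, 1]; constant columns map to 0."""
    cols = list(zip(*X))
    lows = [min(c) for c in cols]
    highs = [max(c) for c in cols]
    return [[(v - lo) / (hi - lo) if hi > lo else 0.0
             for v, lo, hi in zip(row, lows, highs)] for row in X]


# Hypothetical log records in metres (illustrative only).
rows = [
    {"RC.Alt": 10.2, "BARO.Alt": 10.5, "CTUN.DAlt": 10.0, "CTUN.Alt": 10.1,
     "CTUN.BAlt": 10.4, "XKF5.HAGL": 9.9, "Laser.Alt": 10.0},
    {"RC.Alt": 20.3, "BARO.Alt": None, "CTUN.DAlt": 20.0, "CTUN.Alt": 20.2,
     "CTUN.BAlt": 20.6, "XKF5.HAGL": 19.8, "Laser.Alt": 20.0},  # dropped: missing value
    {"RC.Alt": 30.1, "BARO.Alt": 30.7, "CTUN.DAlt": 30.0, "CTUN.Alt": 30.2,
     "CTUN.BAlt": 30.5, "XKF5.HAGL": 29.7, "Laser.Alt": 30.0},
]
X, y = split_features_target(clean_rows(rows))
X_norm = min_max_normalize(X)
```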

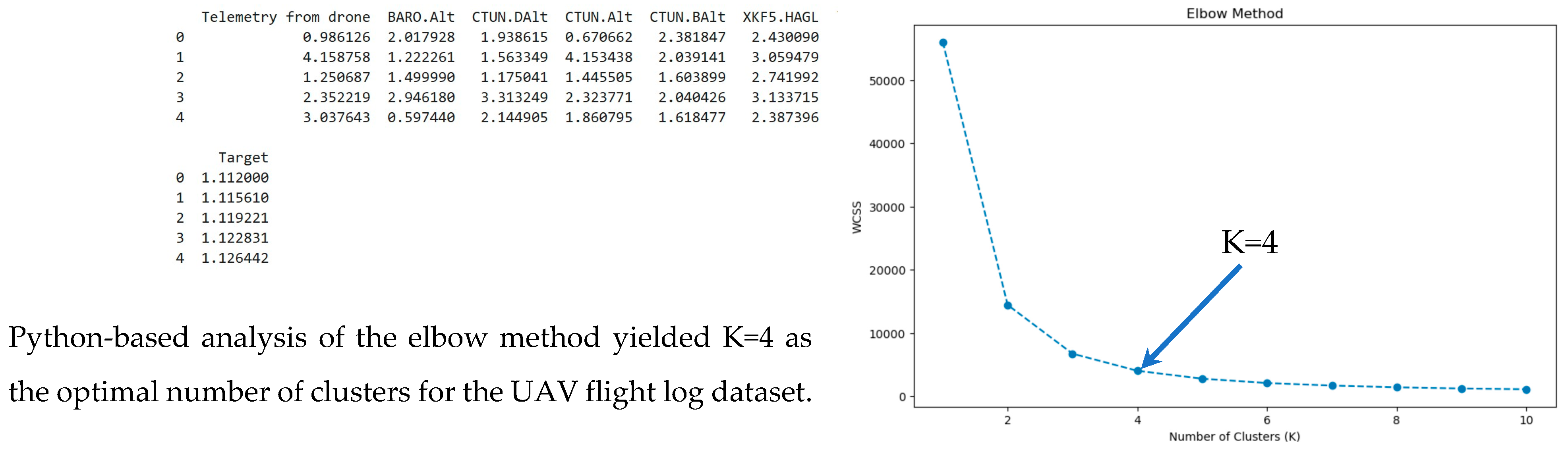

3.2.3. Elbow and K-Mean Method

3.3. Preparation of Training and Testing Datasets

3.4. DL-KMA

- Data Preprocessing and Normalization:

- Altitude Range Clustering:

- Model Architecture:

- Input Layer: Accepting six selected features derived from UAV flight logs.

- Hidden Layers: Two hidden layers, each containing 100 nodes, with hyperbolic tangent (tanh) activation functions.

- Output Layer: A single neuron with ReLU activation for altitude prediction.

- Training Process:

- Loss Function: Mean Squared Error (MSE) was used as the primary loss function.

- Regularization: L1 regularization was applied to prevent overfitting.

- Optimization: An iterative gradient descent algorithm was used to fine-tune the weight matrices (W) and bias vectors (B).

- Early Stopping: Implemented to prevent overfitting and optimize training time.

- Altitude Compensation Mechanism:

- Experimental Validation:

- Processor: 2.5 GHz Intel Core i5 (Ivy Bridge)

- Memory: DDR3 RAM (upgradeable)

- Storage: Traditional hard drive with option for SSD

- Display: 13.3-inch standard display

- Ports: USB 3.0, Thunderbolt

- Optical Drive: DVD SuperDrive

4. Results and Discussion

4.1. Cluster-Specific Performance

4.2. Model Performance Metrics

4.3. Comparative Analysis

4.4. Data Collection Limitations and Challenges

4.5. Environmental Impact Analysis on Model Accuracy

- Area A (Muak Lek, Saraburi): elevation approximately 430 m

- Area B (Bangkok): elevation approximately 3 m

- Varying wind conditions at different altitudes

- Atmospheric pressure differences due to elevation disparities

- Diverse lighting conditions throughout the day (08:30–18:00)

- Critical midday period (11:40–14:50) with intense sunlight

- Different elevations and atmospheric pressures

- Various times of day

- Changing wind conditions

- Diverse lighting conditions
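To give a sense of scale for the pressure difference between the two test areas, the sketch below applies the standard-atmosphere barometric formula to the stated elevations (about 430 m and 3 m). It assumes International Standard Atmosphere conditions (sea-level pressure 101,325 Pa, 288.15 K, lapse rate 6.5 K/km); actual conditions on the test days would differ, and the function names are illustrative.

```python
P0 = 101325.0   # sea-level standard pressure, Pa
T0 = 288.15     # sea-level standard temperature, K
LAPSE = 0.0065  # temperature lapse rate, K/m
EXPONENT = 5.25588  # g*M / (R*LAPSE) for the standard atmosphere


def pressure_at(altitude_m):
    """Standard-atmosphere pressure (Pa) at a given altitude (m)."""
    return P0 * (1.0 - LAPSE * altitude_m / T0) ** EXPONENT


def altitude_from(pressure_pa, reference_pa=P0):
    """Invert the formula: altitude (m) from a pressure reading (Pa)."""
    return (T0 / LAPSE) * (1.0 - (pressure_pa / reference_pa) ** (1.0 / EXPONENT))


p_area_a = pressure_at(430.0)  # Area A, approximately 430 m elevation
p_area_b = pressure_at(3.0)    # Area B, approximately 3 m elevation
difference_hpa = (p_area_b - p_area_a) / 100.0
```

Under these assumptions the two sites differ by roughly 50 hPa in ambient pressure.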

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Cluster | Dataset Size (Before Normalization) | Total Response Time (ms) | Response Time per Input (ms) |
|---|---|---|---|
| K = 1 | 1998 rows | 84,000 | 42.04 |
| K = 2 | 1978 rows | 84,000 | 42.76 |
| K = 3 | 1994 rows | 85,000 | 42.62 |
| K = 4 | 2030 rows | 89,000 | 43.84 |

| Comparison Aspects | Proposed System (DL-KMA) | Traditional Hardware System (LiDAR) |
|---|---|---|
| Cost | USD (no additional hardware installation required; uses existing sensors in the FCU; cost-effective in terms of equipment) | Based on our analysis, three LiDAR models were identified that meet or closely align with the research specifications: (1) Benewake TF03-100 LiDAR, 399 USD; (2) DJI Zenmuse L1, 7700 USD; (3) Livox AVIA, 1599 USD |
| Computational Cost | Software-based processing; minimal computational overhead | Requires LiDAR data processing; high power consumption during operation |
| Response Time | Approximately 42.815 ms | LiDAR Sensors: |
| Error (Accuracy) | MSE = 0.011 (RMSE ≈ 0.105 m); MAE = 0.069 m (accuracy comparable to the digital laser distance meter [±3 mm]) | LiDAR: accuracy ±0.1 m; Barometric: ±0.5 to ±2 m; GPS: error margin 1–3 m |
| Limitations | Requires model training; depends on training data quality | Increased equipment weight; higher power consumption; not suitable for lightweight UAVs |
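The headline figures in the two tables can be cross-checked with simple arithmetic. The sketch below recomputes the mean per-input response time from the four cluster values and converts the reported MSE into a root-mean-square error. The variable names are illustrative, and the input numbers are copied from the tables.

```python
import math

# Per-input response times (ms) for K = 1..4, as reported in the response-time table.
per_input_ms = [42.04, 42.76, 42.62, 43.84]
mean_response_ms = sum(per_input_ms) / len(per_input_ms)

# Reported mean squared error; its square root is the RMSE in metres.
reported_mse = 0.011
rmse_m = math.sqrt(reported_mse)
```

The mean of the four per-input times reproduces the 42.815 ms quoted for DL-KMA, and the square root of the MSE gives the approximately 0.105 m error figure.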

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Piyakawanich, P.; Phasukkit, P. An AI-Based Deep Learning with K-Mean Approach for Enhancing Altitude Estimation Accuracy in Unmanned Aerial Vehicles. Drones 2024, 8, 718. https://doi.org/10.3390/drones8120718