Drone Insights: Unveiling Beach Usage through AI-Powered People Counting

Abstract

1. Introduction

2. Materials and Methods

2.1. Drone Flight Planning and Execution

2.2. Automated Data Extraction from Videos—Model Development

2.3. Model Predictions

2.4. Accounting for People under Beach Shelters

2.5. Data Visualization and Beach Usage Estimation

3. Results

3.1. Model Performance

3.2. Drone Surveys

3.3. Annualized Estimates

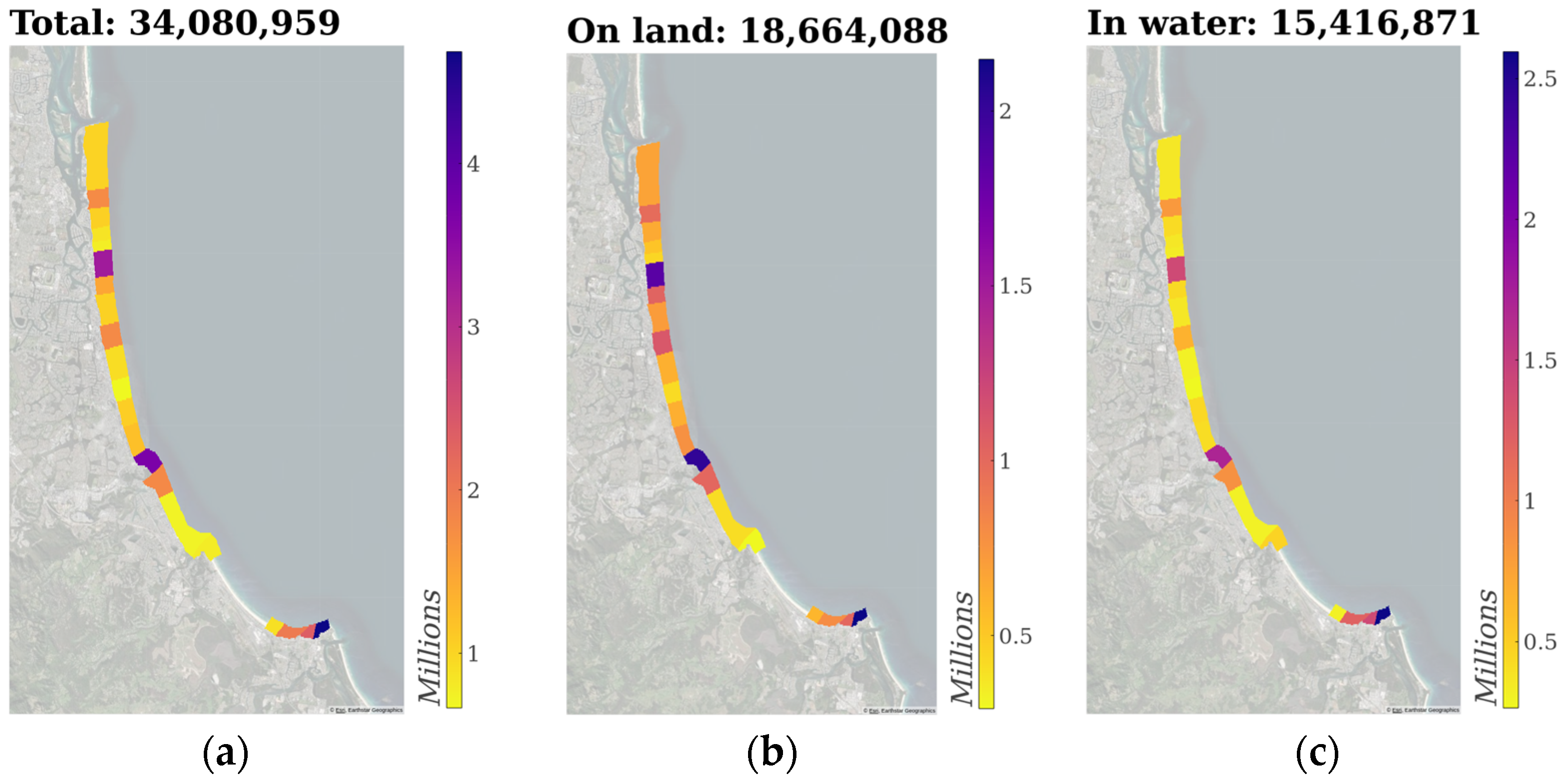

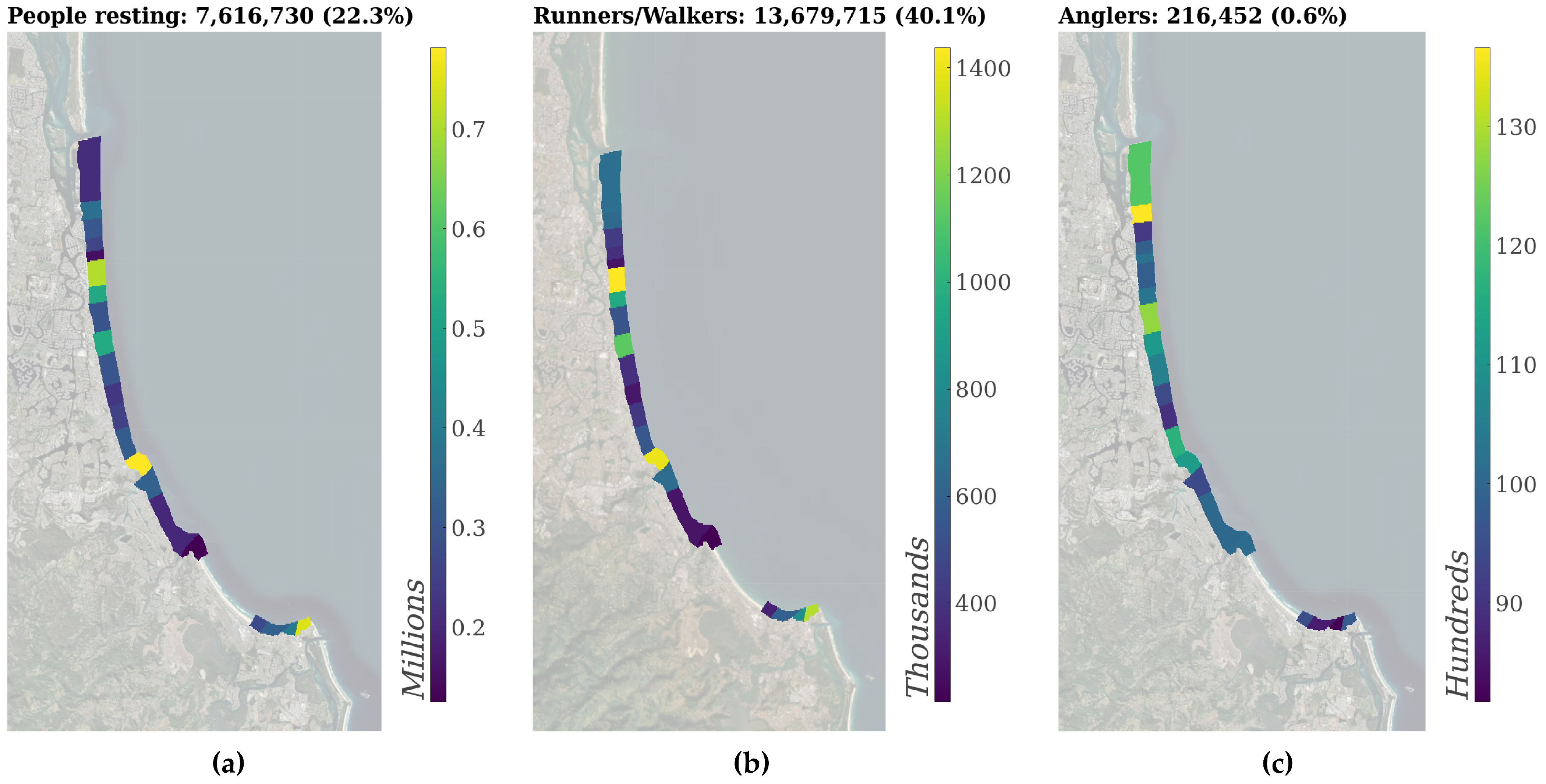

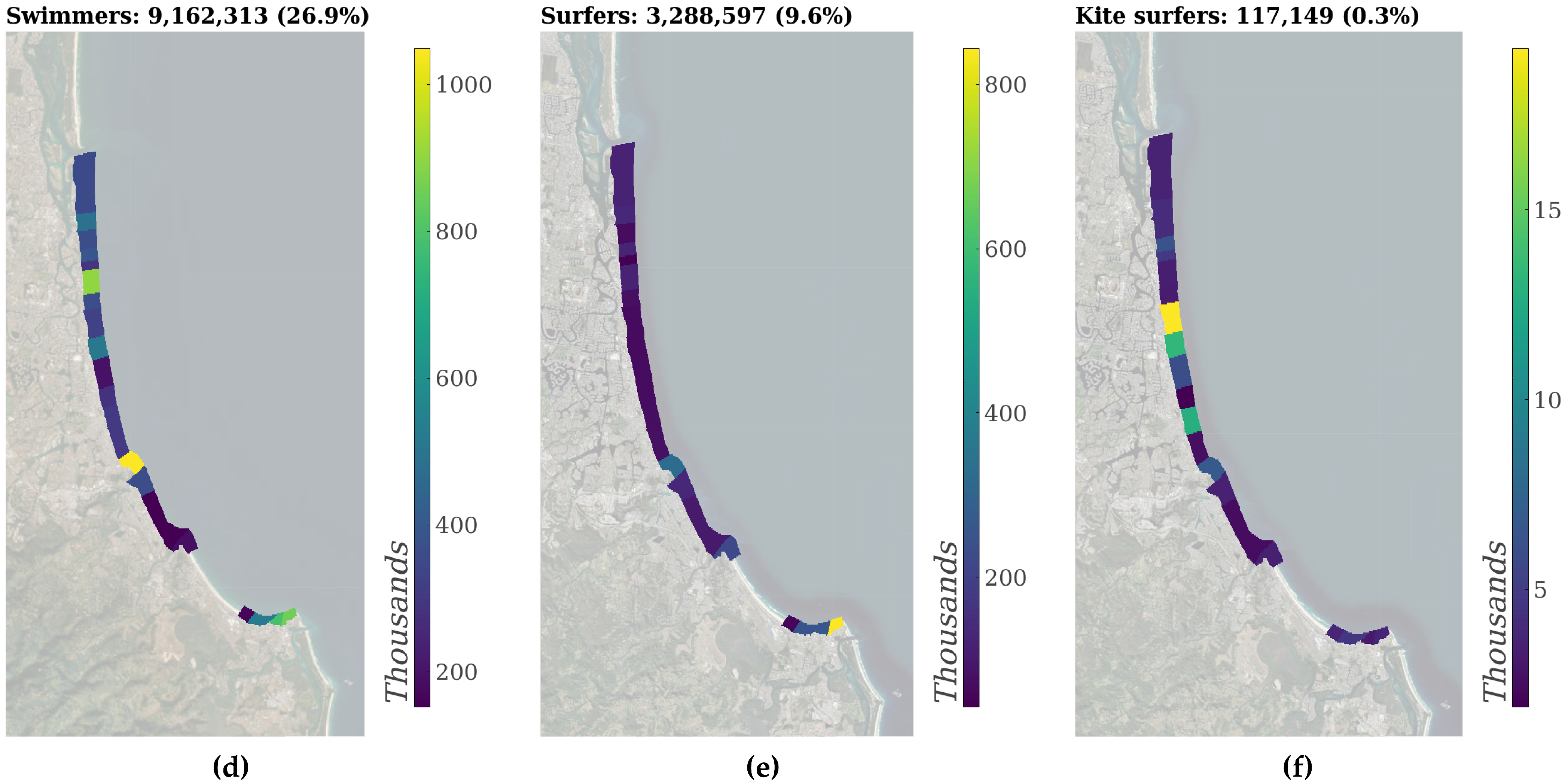

3.4. Importance of Explanatory Variables

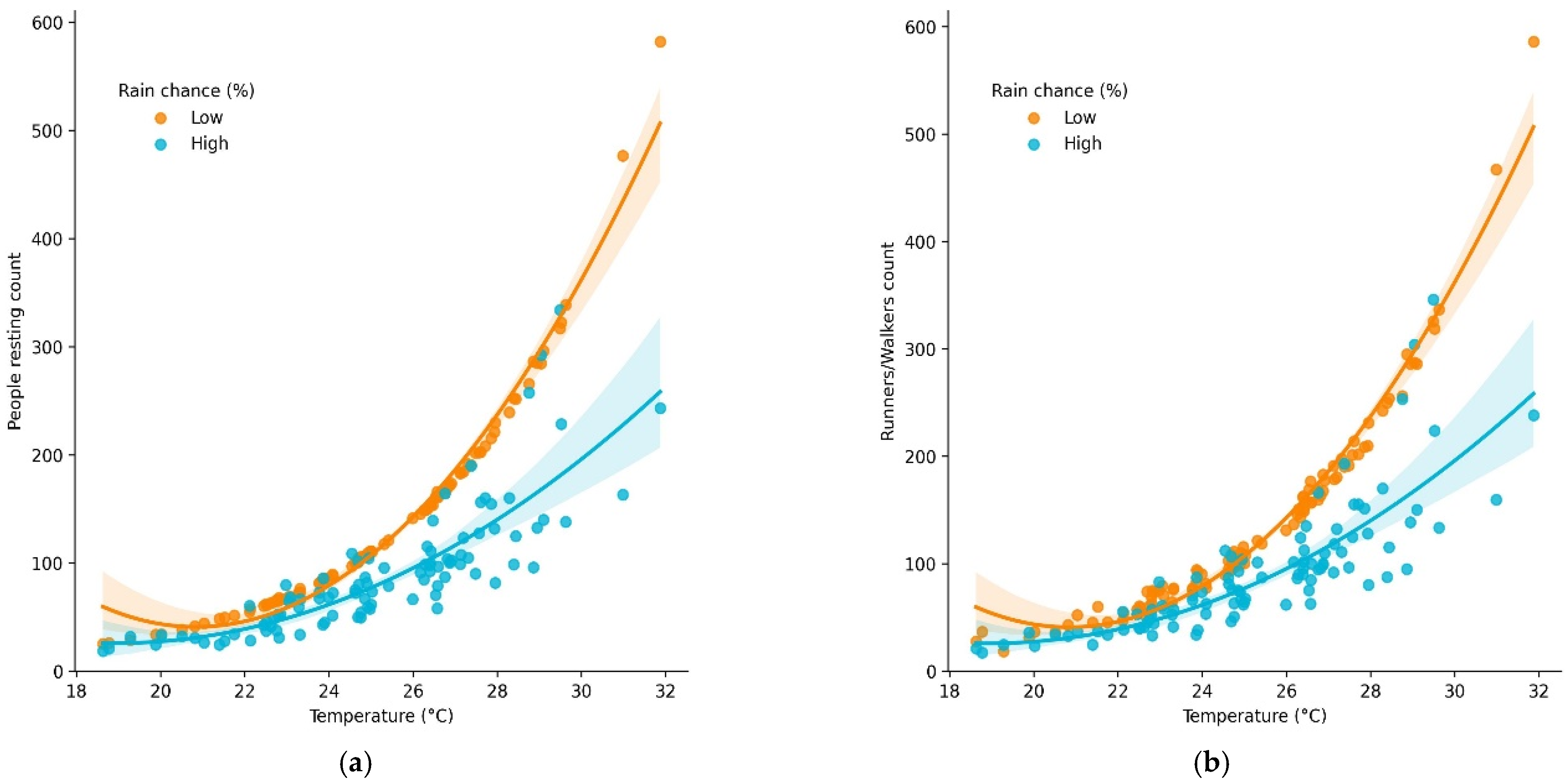

3.5. Effect of Distance to Lifeguard Towers

3.6. Comparison against Existing Land-Based Counts

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Class | Training | Evaluation | Testing | Total |
|---|---|---|---|---|---|
| Land–Water | People on land | 9275 | 1669 | 8471 | 19,415 |
| Land–Water | People in water | 6840 | 1077 | 5382 | 13,299 |
| Land–Water | Shelters | 1901 | 344 | 330 | 2575 |
| Land–Water | Total | 18,016 | 3090 | 14,183 | 35,289 |
| Usage | Anglers | 481 | 82 | 107 | 670 |
| Usage | Kite surfers | 208 | 36 | 43 | 287 |
| Usage | People resting | 3573 | 603 | 3045 | 7221 |
| Usage | Runners/Walkers | 5395 | 942 | 5319 | 11,656 |
| Usage | Surfers | 2468 | 402 | 682 | 3552 |
| Usage | Swimmers | 3871 | 752 | 4657 | 9280 |
| Usage | Shelters | 1791 | 370 | 414 | 2575 |
| Usage | Total | 17,787 | 3187 | 14,267 | 35,241 |

| Model | Class | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Land–Water | People on land | 0.94 | 0.95 | 0.95 |
| Land–Water | People in water | 0.90 | 0.92 | 0.91 |
| Land–Water | Shelters | 0.98 | 0.99 | 0.98 |
| Usage | Anglers | 0.85 | 0.85 | 0.85 |
| Usage | Kite surfers | 0.84 | 0.86 | 0.85 |
| Usage | People resting | 0.90 | 0.89 | 0.89 |
| Usage | Runners/Walkers | 0.91 | 0.93 | 0.92 |
| Usage | Surfers | 0.89 | 0.90 | 0.90 |
| Usage | Swimmers | 0.86 | 0.90 | 0.89 |
| Usage | Shelters | 0.96 | 0.98 | 0.97 |
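
The F1-score column can be checked against the precision and recall columns, since F1 is the harmonic mean of the two. The snippet below is a minimal sketch rather than the authors' evaluation code: the `f1_score` helper and the `reported` list are illustrative names, and the inputs are the rounded values from the table, so recomputed scores can differ slightly from the reported ones.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (model, class, precision, recall, reported F1), copied from the table.
# These are rounded to two decimals, so small mismatches are expected.
reported = [
    ("Land-Water", "People on land", 0.94, 0.95, 0.95),
    ("Land-Water", "People in water", 0.90, 0.92, 0.91),
    ("Land-Water", "Shelters", 0.98, 0.99, 0.98),
    ("Usage", "Anglers", 0.85, 0.85, 0.85),
    ("Usage", "Kite surfers", 0.84, 0.86, 0.85),
    ("Usage", "People resting", 0.90, 0.89, 0.89),
    ("Usage", "Runners/Walkers", 0.91, 0.93, 0.92),
    ("Usage", "Surfers", 0.89, 0.90, 0.90),
    ("Usage", "Swimmers", 0.86, 0.90, 0.89),
    ("Usage", "Shelters", 0.96, 0.98, 0.97),
]

# Absolute gap between the recomputed and the reported F1 for each row.
gaps = {
    (model, cls): abs(f1_score(p, r) - f1)
    for model, cls, p, r, f1 in reported
}

for (model, cls), gap in gaps.items():
    print(f"{model:10s} {cls:16s} gap = {gap:.3f}")
```

With these rounded inputs, every recomputed value lies within roughly 0.01 of the reported F1-score; the largest gap is for Swimmers in the usage model.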

| | Lifeguard Observed (2022) | Drone Survey Projection (2022–2023) |
|---|---|---|
| Total people count | 16,489,292 (unknown error) | 34,080,959 ± 3.7 million (SE) |
| Land to water ratio | 1.59 | 1.21 |
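
The two annual totals can be compared with simple arithmetic. The sketch below is not a calculation taken from the paper: the variable names are illustrative, the interval uses a normal approximation (an assumption), and "land to water ratio" is assumed to mean people on land divided by people in water. The two columns also cover different periods (2022 versus 2022–2023), so the comparison is indicative only.

```python
lifeguard_total = 16_489_292  # lifeguard-observed count (2022)
drone_total = 34_080_959      # drone survey projection (2022-2023)
drone_se = 3_700_000          # reported standard error of the projection

# How many times larger the drone projection is than the lifeguard count.
ratio = drone_total / lifeguard_total

# Assumption: approximate 95% interval from a normal approximation.
z = 1.96
low = drone_total - z * drone_se
high = drone_total + z * drone_se


def water_share(land_to_water: float) -> float:
    """Fraction of people in the water, assuming ratio = land / water."""
    return 1.0 / (1.0 + land_to_water)


print(f"drone / lifeguard = {ratio:.2f}")
print(f"approx. 95% interval: {low:,.0f} to {high:,.0f}")
print(f"share in water (lifeguard): {water_share(1.59):.1%}")
print(f"share in water (drone):     {water_share(1.21):.1%}")
```

Under these assumptions the drone projection is about twice the lifeguard total, and the lifeguard total falls below the lower end of the approximate interval.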

| Lifeguard Counting Program | Drone Surveys + AI Counting Program | | |
|---|---|---|---|
| Spatial coverage | Unknown | | |
| Temporal coverage | Low | | |
| Accuracy | Medium | | |
| Precision | High | | |
| Data volume | | | |
| Discrimination | | | |
| Granularity | | | |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Herrera, C.; Connolly, R.M.; Rasmussen, J.A.; McNamara, G.; Murray, T.P.; Lopez-Marcano, S.; Moore, M.; Campbell, M.D.; Alvarez, F. Drone Insights: Unveiling Beach Usage through AI-Powered People Counting. Drones 2024, 8, 579. https://doi.org/10.3390/drones8100579
