Prototype for Multi-UAV Monitoring–Control System Using WebRTC

Abstract

1. Introduction

2. Motivation

- Enhanced Data Collection Quality: Multiple UAVs improve the overall accuracy and comprehensiveness of the inspection.

- Reduced Operation Time: This is particularly beneficial for large-scale inspections, where a single UAV would take significantly longer to complete the task.

- Reliability: The redundancy provided by multiple UAVs ensures that if one UAV fails, others can continue the inspection, thereby increasing the overall reliability of the operation.

- Flexibility and Adaptability: Multiple UAVs can be programmed to follow complex trajectories and cover intricate structures more effectively than a single UAV. This adaptability is crucial for inspecting complex infrastructures such as bridges, wind turbines, and industrial buildings.

- How can a GCS efficiently collect and monitor real-time video streams and flight data from multiple UAVs?

- What are the key requirements for developing a WebRTC-based multi-UAV monitoring system, and how can it be successfully implemented?

Problem Statement

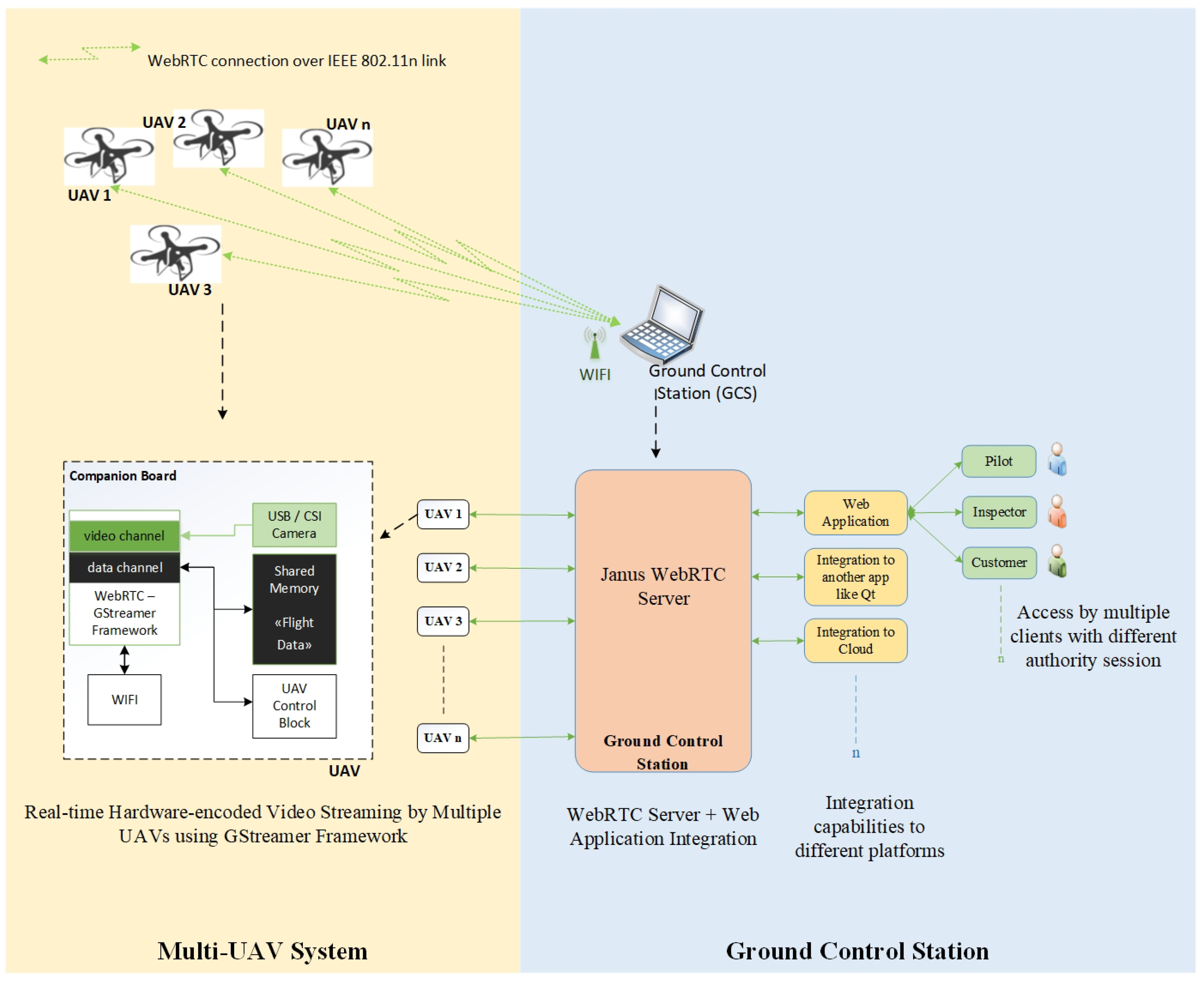

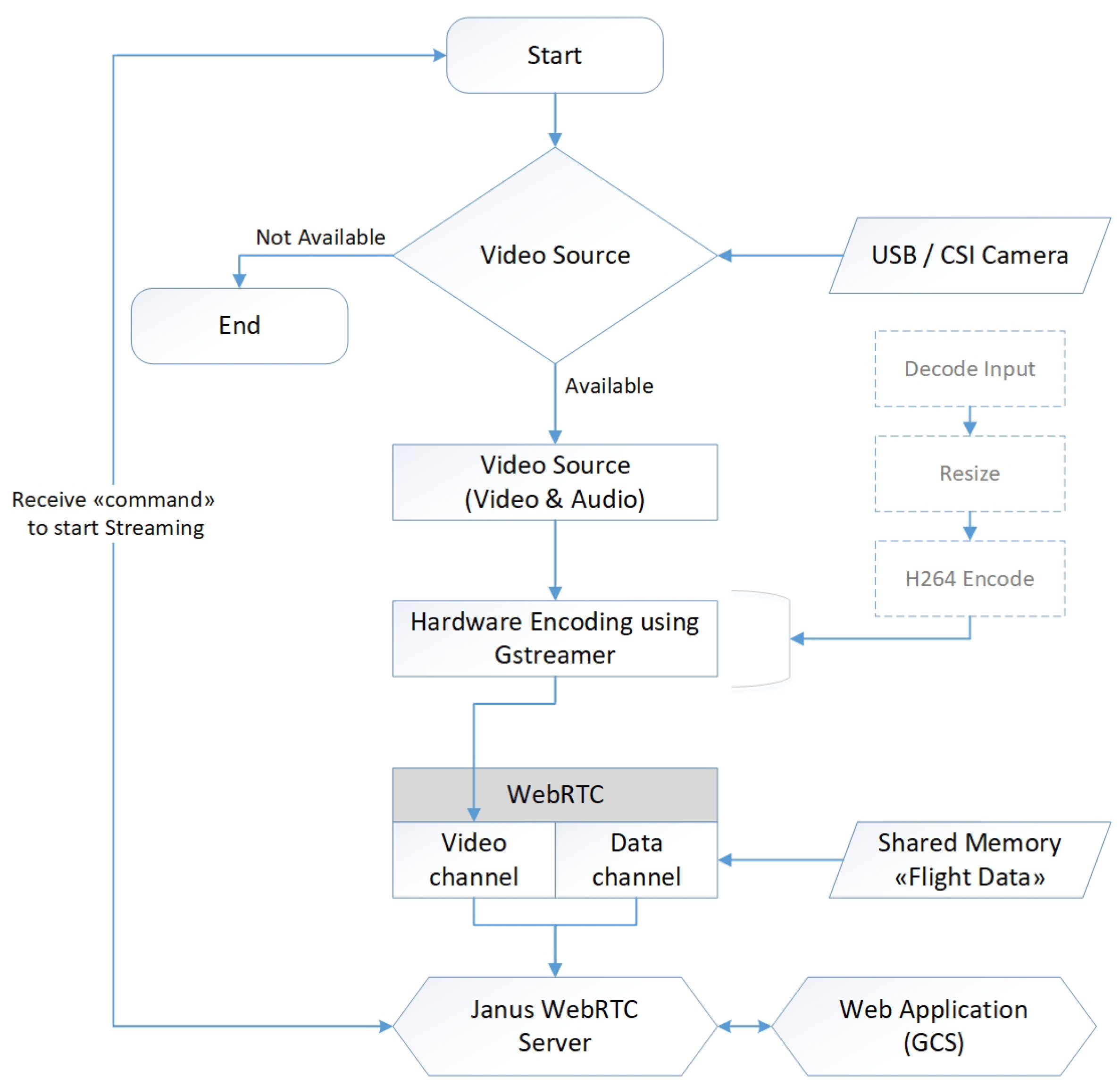

- The ability to send video feeds to the GCS from various sources, such as shared memory, USB Video Device Class (UVC), and Camera Serial Interface (CSI), with support for both hardware- and software-encoded video.

- Interoperability, meaning the provision of an adaptable solution that supports integration with native apps, cloud-based systems, and web apps.

- Support for multiple UAVs by utilizing a WebRTC media server to receive real-time video streams and flight data from multiple UAVs.

- Support for multiple client sessions, enabling clients with different authorizations—such as Pilots and Inspectors—to access the GCS. The proposed solution must be capable of relaying multiple WebRTC streams to different user sessions with high performance in real time.
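The first requirement, covering UVC and CSI sources with software and hardware encoding, can be sketched as a small helper that builds GStreamer pipeline descriptions. This is a minimal sketch and not the authors' implementation. The element names (`v4l2src`, `x264enc`, `nvarguscamerasrc`, `nvv4l2h264enc`, `rtph264pay`, `udpsink`) are standard GStreamer or NVIDIA Jetson plugin elements. The function name `build_pipeline`, the device path, the host, the port, and the default bitrate are assumptions chosen for illustration.

```python
def build_pipeline(source: str, host: str, port: int,
                   width: int = 1920, height: int = 1080,
                   bitrate_kbps: int = 8000) -> str:
    """Return a gst-launch-1.0 style pipeline description (illustrative only)."""
    if source == "uvc":
        # USB (UVC) camera with software H.264 encoding.
        # x264enc expects its bitrate in kbit/s.
        capture = (
            f"v4l2src device=/dev/video0 ! "
            f"video/x-raw,width={width},height={height} ! videoconvert ! "
            f"x264enc tune=zerolatency speed-preset=ultrafast bitrate={bitrate_kbps}"
        )
    elif source == "csi":
        # CSI camera on a Jetson board with hardware H.264 encoding.
        # nvv4l2h264enc expects its bitrate in bit/s.
        capture = (
            f"nvarguscamerasrc ! "
            f"video/x-raw(memory:NVMM),width={width},height={height} ! "
            f"nvv4l2h264enc bitrate={bitrate_kbps * 1000}"
        )
    else:
        raise ValueError(f"unknown source type: {source}")
    # Packetize as RTP/H.264 and send to the media server over UDP.
    return (f"{capture} ! h264parse ! rtph264pay config-interval=1 pt=96 ! "
            f"udpsink host={host} port={port}")


if __name__ == "__main__":
    # Hypothetical GCS address and port.
    print(build_pipeline("uvc", "192.168.1.10", 8004))
    print(build_pipeline("csi", "192.168.1.10", 8006))
```

Keeping the RTP payloading and transport stage identical for both sources means the receiving side does not need to know which camera or encoder produced a stream.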

3. Related Work

3.1. Literature Review of Related Solutions

3.2. Commercial WebRTC Solutions

- WOWZA [23]: Interactive broadcast, drone streaming, customer management.

- VIDIZMO [24]: IP camera streaming, drone streaming, over-the-top (OTT) broadcast, Virtual Reality (VR) streaming.

- UgCS [25]: Drone-based video streaming software for tablets and computers.

- nanoCOSMOS [26]: Cross-platform WebRTC-based video streaming.

- Liveswitch [27]: WebRTC-based video and audio streaming platform.

- Ant Media [28]: WebRTC-based streaming engine with cloud support.

3.3. Open-Source WebRTC Solutions

- Janus has the highest image quality score.

- Medooze and Mediasoup maintain latency below 50 ms. Janus shows latency similar to Mediasoup and Medooze up to 150 participants; beyond that point its latency rises to 268 ms, while the latency of Kurento exceeds one second. Because a multi-UAV deployment is expected to stay well below 150 concurrent streams, Janus remains suitable for multi-UAV applications.

3.4. Why the Janus WebRTC Server?

- Scalability: Janus can handle a large number of simultaneous connections, making it suitable for large-scale video streaming applications [32].

- Reliability: Janus is designed to be reliable, with a number of built-in fail-safes to ensure that video streams continue even in the event of network issues [30].

4. Concept and Methods

4.1. The Proposed Architecture

4.2. Utilized Technology

4.3. Implementation Flowchart

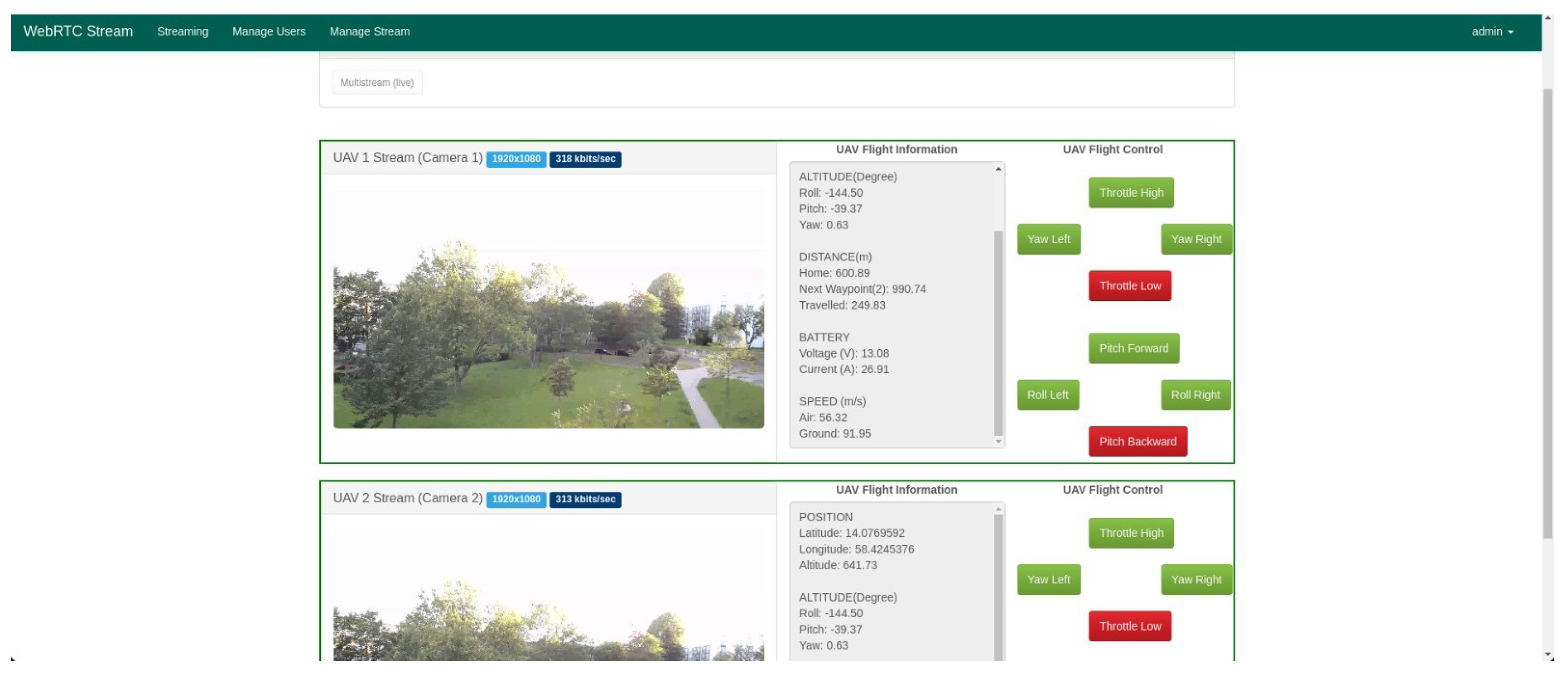

5. Results

5.1. Evaluation Criteria

- Video transmission latency must be less than 300 ms for each stream from multiple UAVs to support remote UAV piloting [38].

- The minimum resolution of the video stream should be Full High Definition (Full HD, 1920 × 1080).

- Flight data transmission must be handled through the WebRTC data channel.

- Support for incoming video streams and flight data transmission from multiple UAVs.

- Capability to relay video streams and flight data to multiple user connections with different authorization levels such as Pilot (Admin), Inspectors, and Customers.
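The measurable criteria above can be expressed as a simple acceptance check. The sketch below is illustrative only: the thresholds (300 ms, 1920 × 1080, data channel required) come from the list, while the `StreamReport` structure, its field names, and the sample values are hypothetical.

```python
from dataclasses import dataclass

MAX_LATENCY_MS = 300            # latency criterion from the list above
MIN_RESOLUTION = (1920, 1080)   # Full HD criterion from the list above


@dataclass
class StreamReport:
    """Hypothetical per-UAV measurement record."""
    uav_id: str
    latency_ms: float
    width: int
    height: int
    uses_data_channel: bool


def meets_criteria(report: StreamReport) -> bool:
    """True if one stream satisfies the latency, resolution, and data-channel criteria."""
    return (report.latency_ms < MAX_LATENCY_MS
            and report.width >= MIN_RESOLUTION[0]
            and report.height >= MIN_RESOLUTION[1]
            and report.uses_data_channel)


def evaluate(reports):
    """Map each UAV id to a pass/fail result."""
    return {r.uav_id: meets_criteria(r) for r in reports}


if __name__ == "__main__":
    # Sample values are made up for illustration.
    sample = [
        StreamReport("uav1", 140.0, 1920, 1080, True),
        StreamReport("uav2", 320.0, 1920, 1080, True),
    ]
    print(evaluate(sample))
```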

5.2. Evaluation Results

5.2.1. Test Results of Proposed System

- Hardware Setup:

- Device: NVIDIA Jetson Nano

- Connection: Wi-Fi IEEE 802.11n dongle

- Cameras: Logitech USB camera (Logitech C920 HD Pro)

- Video Sources: 1 camera source and 8 video test sources in GStreamer

- Resolution: Full HD: 1920 × 1080

- Codec: H.264

- Latency: approximately 140 ms.

- Hardware Setup:

- Devices: NVIDIA Jetson Nano and Raspberry Pi 4 Model B (8 GB RAM)

- Connection: Wi-Fi IEEE 802.11n dongle for both devices

- Cameras: 2 × Logitech USB cameras, one for each device

- Resolution: Full HD (1920 × 1080) for both streams

- Codec: H.264

- Latency: approximately 130 ms for each UAV.

- Hardware Setup:

- Device: NVIDIA Jetson Nano

- Connection: Wi-Fi IEEE 802.11n dongle

- Camera: CSI camera (Model: Waveshare IMX219-160 Camera Module)

- Resolution: Full HD (1920 × 1080)

- Codec: Hardware encoded H.264

- Latency: approximately 85 ms to 105 ms, depending on network conditions.

- Hardware Setup:

- Devices: 3 × NVIDIA Jetson Nano

- Connections: Device1 and Device2 = 5 GHz 802.11n, Device3 = 2.4 GHz

- Cameras: 2 × Logitech USB camera, 1 × CSI camera for hardware-encoded stream (Device1)

- Testing Variables: Different resolutions and bitrates to observe their impact on latency.

- Latency: The latency measurement results are presented in Table 3 below.

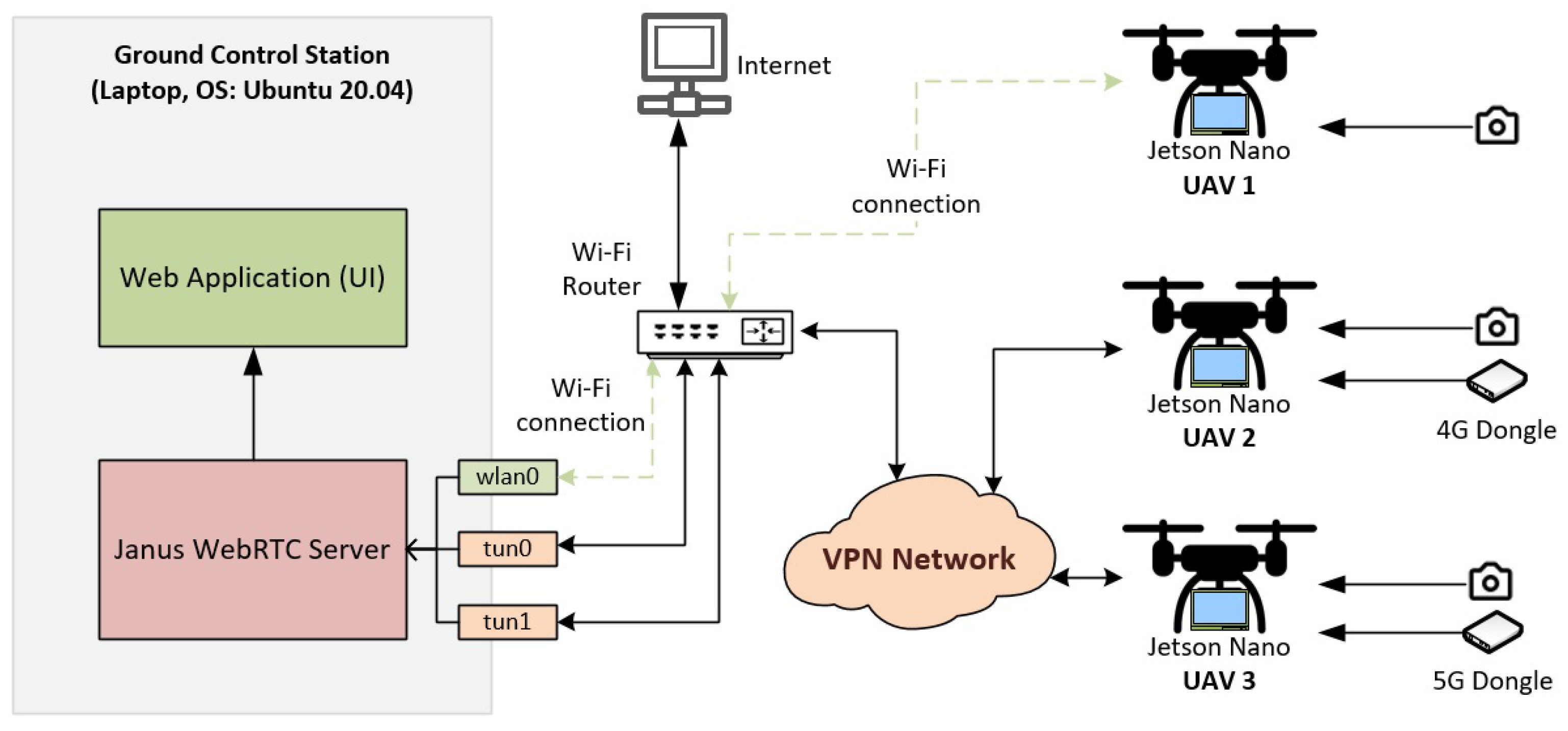

- Laptop running the Janus WebRTC server:

- CPU: Intel Core i7

- Memory: 16GB RAM

- Network: Wi-Fi (IEEE 802.11n)

- Operating System: Ubuntu 20.04

- Janus Server: The streaming plugin has been configured to handle multiple video streams while simultaneously managing flight data.

- Interface 1: Wi-Fi (wlan0)

- Interface 2: 4G (tun0)

- Interface 3: 5G (tun1)

- Wi-Fi Router:

- Model: TP-Link Archer C7

- Standard: IEEE 802.11n/ac (2.4 GHz and 5 GHz)

- Max Throughput: Up to 1.75 Gbps

- Configuration of the Devices:

  - Device 1: Wi-Fi connectivity (IEEE 802.11n)
    - Connected to the Wi-Fi network using an 802.11n USB dongle.

  - Device 2: 4G connectivity
    - 4G USB Dongle: A 4G USB dongle was used to enable cellular connectivity.
    - Model: Huawei E3372h-320
    - Network Compatibility: 4G Long-Term Evolution (LTE) "Cat4" with fallback to 3G/2G.
    - Supported Bands: Various global LTE bands supporting download speeds of up to 150 Mbps.
    - Connection Type: USB 2.0.
    - Driver Compatibility: Plug-and-play on many Linux distributions, including Ubuntu (drivers often available via usb-modeswitch).
    - Setup: Plug the 4G USB dongle into the USB port of the NVIDIA Jetson Nano or Raspberry Pi.
    - Configuration: The necessary drivers were installed, and the cellular interface on the UAVs was configured using NetworkManager [40]. Static IPs were assigned through a cloud-based Virtual Private Network (VPN) to ensure consistent routing between the UAVs and the Janus server.
      - Install the modem tools: `$ sudo apt-get install modemmanager usb-modeswitch`
      - Add the cellular connection: `$ nmcli con add type gsm ifname '*' con-name '4G-Connection' apn 'web.vodafone.de'`
      - Assign static IPs to UAVs: `$ sudo apt-get install openvpn`, then `$ sudo openvpn --config /opt/vpnconfig.ovpn`

  - Device 3: 5G connectivity
    - 5G Modem: A 5G modem was used for high-speed data transmission.
    - Model: Netgear Nighthawk M5 (MR5200)
    - Network Compatibility: 5G NR and 4G LTE.
    - Supported Bands: Provides comprehensive support for global 5G bands, enabling download speeds of up to 2 Gbps.
    - Connection Type: USB-C.
    - Driver Compatibility: May require additional configuration and firmware support, depending on the Linux distribution. The modem is often used in USB tethering mode and can be connected to the companion board (Jetson Nano) through a USB-C to USB-A adapter if necessary. If native driver support is unavailable, tethering mode exposes the modem as a network interface (usb0).
    - Configuration: Similar to the 4G setup, NetworkManager was used to establish the 5G connection. The modem settings were optimized for high bandwidth and low latency.

- Configuration at Janus Server (GCS):

- Janus Server Setup: Installed and configured Janus WebRTC server on the laptop running Ubuntu 20.04.

- Streaming Plugin: Configured janus.plugin.streaming.jcfg to receive multiple video streams from different UAVs.
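To illustrate what a multi-UAV streaming configuration might look like, the sketch below generates one RTP mountpoint stanza per UAV for `janus.plugin.streaming.jcfg`. The key names (`type`, `id`, `videoport`, `videopt`, `videortpmap`, `videofmtp`, `data`, `dataport`) follow the sample configuration shipped with Janus 0.x and should be checked against the installed version. The mountpoint names, port numbering scheme, and descriptions are assumptions, not the authors' actual configuration.

```python
def mountpoint_stanza(uav_index: int, base_port: int = 8004) -> str:
    """Render one RTP mountpoint for a UAV (hypothetical naming and ports)."""
    # Each UAV gets two consecutive UDP ports: video, then flight data.
    video_port = base_port + 2 * (uav_index - 1)
    lines = [
        f"uav{uav_index}: {{",
        '    type = "rtp"',
        f"    id = {uav_index}",
        f'    description = "UAV {uav_index} H.264 video and flight data"',
        "    audio = false",
        "    video = true",
        f"    videoport = {video_port}",
        "    videopt = 96",
        '    videortpmap = "H264/90000"',
        '    videofmtp = "profile-level-id=42e01f;packetization-mode=1"',
        "    data = true",
        f"    dataport = {video_port + 1}",
        "}",
    ]
    return "\n".join(lines)


def streaming_config(num_uavs: int) -> str:
    """Concatenate the stanzas for UAVs 1..num_uavs."""
    return "\n\n".join(mountpoint_stanza(i) for i in range(1, num_uavs + 1))


if __name__ == "__main__":
    print(streaming_config(3))
```

Generating the stanzas from one template keeps the payload type and port layout consistent across UAVs, which is where hand-edited configurations tend to drift.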

5.2.2. Janus WebRTC Server Performance

- CPU Usage Analysis: The CPU usage of the Janus server during video streaming can be monitored using the “top” command, which provides real-time system statistics. While handling multiple video streams from UAVs, the Janus WebRTC server process (running under root) consumed 23.9% CPU and 0.4% memory over a period of 17 min and 80 s.

- Memory Usage Analysis: Memory usage during video streaming can be tracked using the “free” command, which provides detailed information about system memory. In this test, the system had 5933.6 MiB of memory, with 774.3 MiB free, 2346.2 MiB used, and 2813.1 MiB buffered/cached. The swap space totaled 2048.0 MiB, all of which was free, resulting in 2986.4 MiB of available memory. Monitoring memory usage ensures sufficient resources are available and helps detect potential memory-related issues during the streaming process.

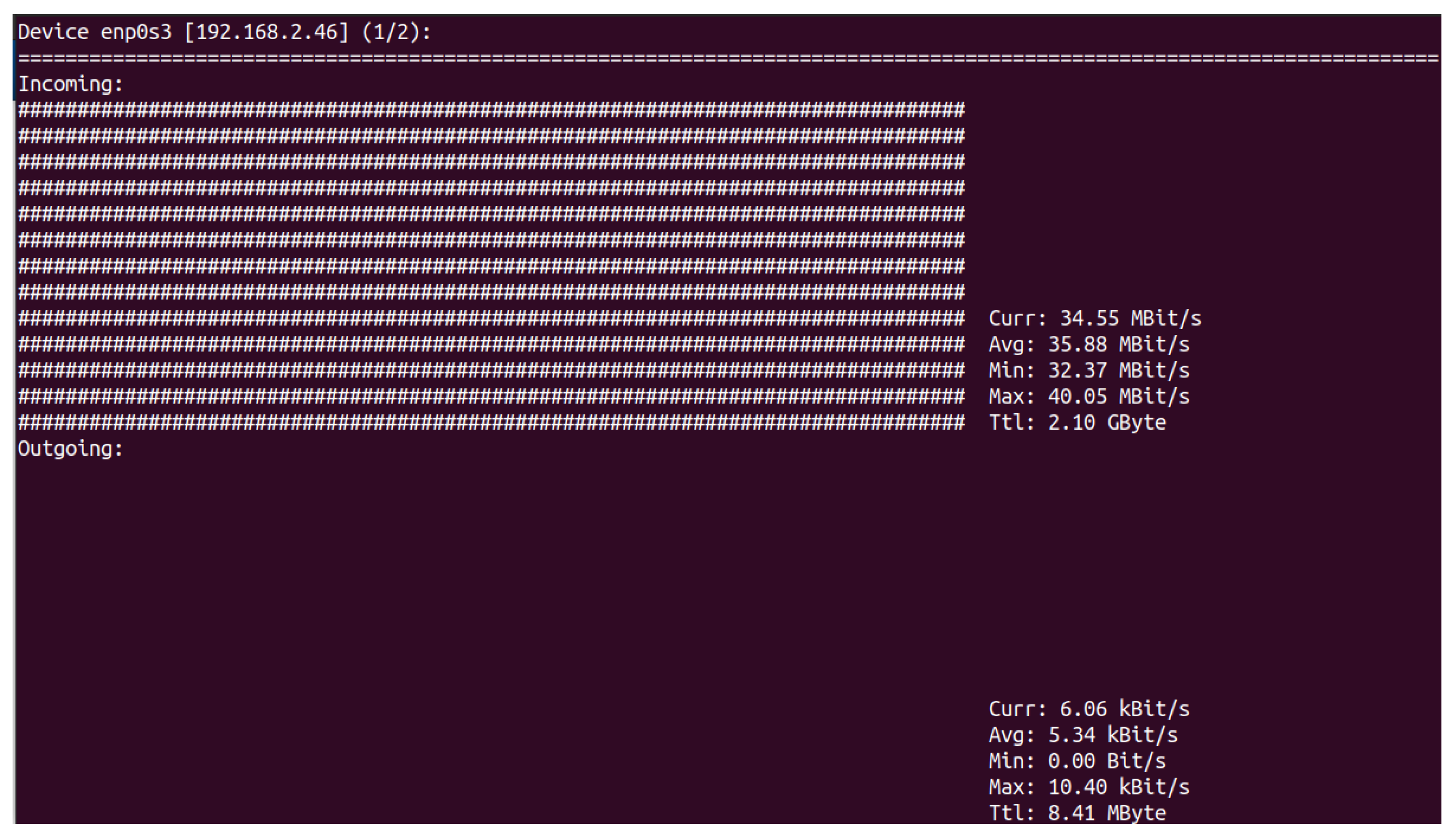

- Network Bandwidth Usage Analysis: To monitor network bandwidth usage during video streaming, tools like “iftop” or “nload” can be used. These tools provide real-time information about incoming and outgoing traffic. In Figure 10, the incoming network bandwidth had a current rate of 34.55 Mbit/s during multiple video streams from UAVs, with an average of 35.88 Mbit/s, a minimum of 32.37 Mbit/s, and a maximum of 40.05 Mbit/s, totaling 2.10 GBytes. Outgoing traffic had a current rate of 6.06 kbit/s, with an average of 5.34 kbit/s, a minimum of 0.00 bit/s, and a maximum of 10.40 kbit/s, totaling 8.41 MBytes.

- Logging and Debugging: Enabling logging and debugging in the Janus server allows for monitoring system performance and diagnosing issues during video streaming. Logs can be configured to output to a file or console, offering insights into the system’s behavior and helping to resolve potential problems.
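The reported figures can be cross-checked with simple arithmetic. The sketch below verifies that the free, used, and buffered/cached memory add up to the reported total, and estimates how long the bandwidth capture must have run to accumulate 2.10 GBytes at the average incoming rate. The function names are illustrative, and the duration estimate assumes decimal units (1 GByte = 10^9 bytes, 1 Mbit = 10^6 bits), which the monitoring tool may not use.

```python
def unaccounted_memory_mib(total, free, used, buff_cache):
    """Difference between the reported total and the sum of its parts (MiB)."""
    return total - (free + used + buff_cache)


def implied_duration_s(total_bytes, avg_bits_per_s):
    """Seconds needed to transfer total_bytes at the given average rate."""
    return total_bytes * 8 / avg_bits_per_s


if __name__ == "__main__":
    # Memory figures from the text, in MiB.
    gap = unaccounted_memory_mib(5933.6, 774.3, 2346.2, 2813.1)
    # Incoming traffic: 2.10 GBytes total at 35.88 Mbit/s average (decimal units assumed).
    duration = implied_duration_s(2.10e9, 35.88e6)
    print(f"memory gap: {abs(gap):.1f} MiB")
    print(f"implied capture time: {duration:.0f} s ({duration / 60:.1f} min)")
```

Under the decimal-unit assumption the capture corresponds to a little under eight minutes of traffic.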

5.2.3. Janus WebRTC Benchmark Results

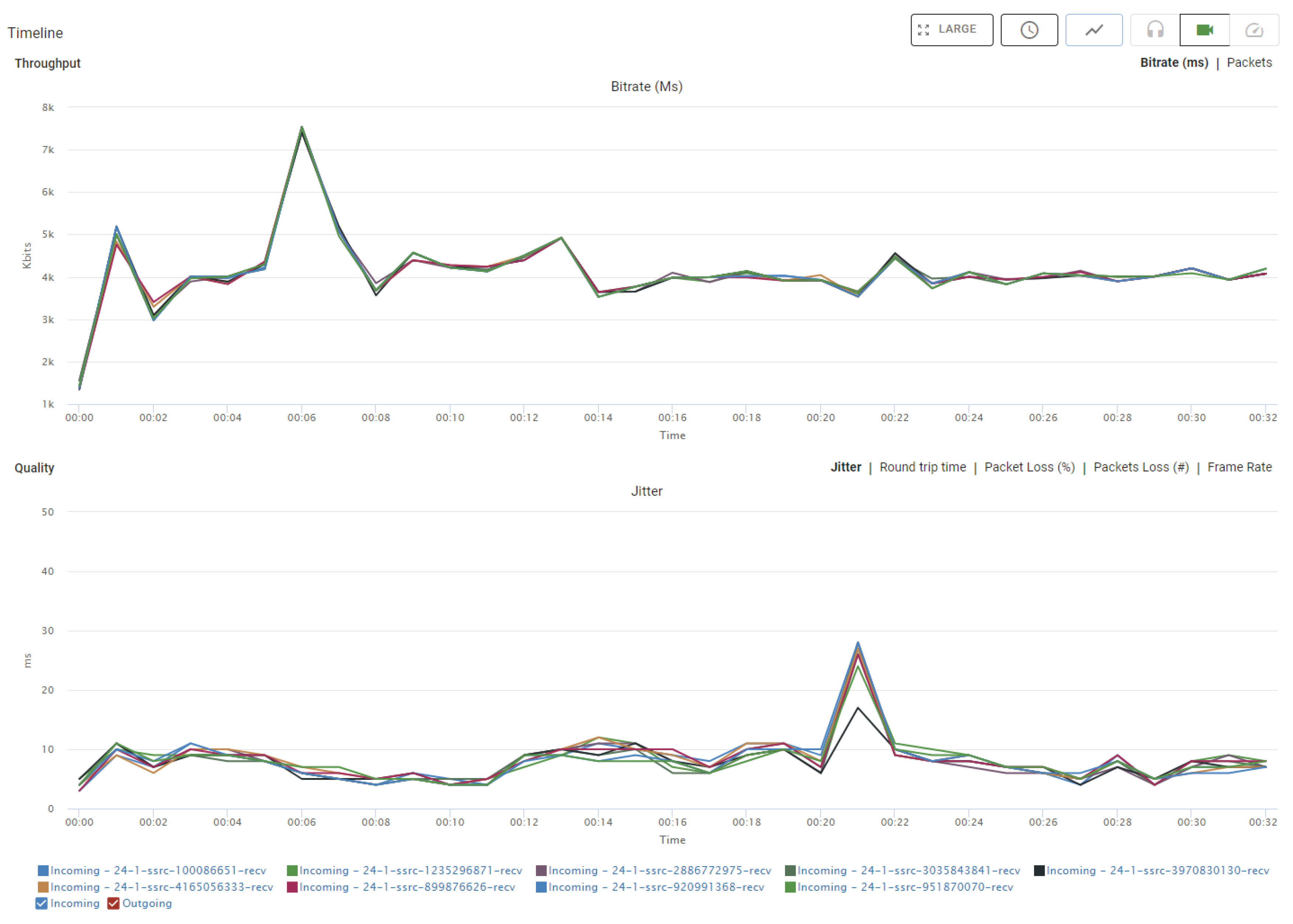

- Throughput: The upper section of the graph shows the bitrate of each stream in kilobits per second (kbit/s). At the 00:01 mark, a spike reaches approximately 7000 kbit/s, likely corresponding to the initial synchronization of the streams. The bitrate then fluctuates until around the 00:06 mark, after which it settles between 3000 and 5000 kbit/s for the remainder of the session. Despite some variability, the streams follow a consistent pattern, indicating stable data transmission rates.

- Quality: The lower section of the graph illustrates jitter, measured in milliseconds (ms), which reflects the variability in packet arrival times. At the 00:01 mark, there is a small peak in jitter (around 10 ms) that coincides with the bitrate peak, possibly indicating initial instability as the streams are established. Afterward, jitter remains low, fluctuating between 5 and 10 ms, with a notable peak to 25 ms at the 00:18 mark, possibly due to temporary network instability. Following this peak, jitter returns to stable levels for the rest of the session.
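For readers unfamiliar with the jitter metric, the sketch below implements the running interarrival jitter estimate defined in RFC 3550 (Section 6.4.1), which is the definition commonly used for RTP streams. It is an assumption that the benchmark tool reports jitter according to this definition. The function name and the sample timestamps are illustrative.

```python
def interarrival_jitter(send_times_ms, recv_times_ms):
    """Return the RFC 3550 running jitter estimate after each packet (ms)."""
    jitter = 0.0
    history = []
    for i in range(1, len(send_times_ms)):
        # D: change in transit time between consecutive packets.
        d = ((recv_times_ms[i] - recv_times_ms[i - 1])
             - (send_times_ms[i] - send_times_ms[i - 1]))
        # Exponential smoothing with gain 1/16, as specified by RFC 3550.
        jitter += (abs(d) - jitter) / 16.0
        history.append(jitter)
    return history


if __name__ == "__main__":
    send = [0, 20, 40, 60]
    # The third packet arrives 10 ms later than the steady pattern would predict.
    recv = [50, 70, 100, 120]
    print(interarrival_jitter(send, recv))
```

Because of the 1/16 smoothing gain, a single late packet moves the estimate only slightly, so a visible peak such as the one at 00:18 points to a sustained burst of delay variation rather than one delayed packet.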

5.3. Comparative Analysis

6. Discussion

- Improving network reliability and performance: The system's success depends heavily on the stability and speed of the network connection. The system should be tested under challenging network conditions to ensure high-quality video transmission.

- Enhancing user interface and control options: Improving the user interface and offering more advanced control features will enhance the system's usability. Furthermore, automating the connection process between the UAVs and the GCS will minimize the need for manual configuration.

- Adding additional features and capabilities: The system currently provides only real-time video and data streaming and control features. Expanding its capabilities with flight data analysis, mission status reporting, a map view of the current UAV locations, and advanced remote piloting would transform it into a complete GCS dashboard.

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| APOLI | Automated Power Line Inspection |
| AREIOM | Adaptive Research Multicopter Platform |
| CSV | Comma Separated Values |
| CSI | Camera Serial Interface |
| CPU | Central Processing Unit |
| GCS | Ground Control Station |
| GPS | Global Positioning System |
| GPU | Graphics Processing Unit |
| HD | High Definition |
| H.264 | MPEG-4 AVC (Advanced Video Coding) |
| H.265 | High-Efficiency Video Coding |
| IMU | Inertial Measurement Unit |
| LTE | Long-Term Evolution |
| MAVLink | Micro Air Vehicle Link |
| MCU | Multipoint Control Unit |
| N.A. | Not Available |
| OTT | Over-the-top |
| QoE | Quality of Experience |
| QoS | Quality of Service |
| RTC | Real-Time Communication |
| RTK-GPS | Real-time Kinematic Global Positioning System |
| RTP | Real-time Transport Protocol |
| SD | Standard Definition |
| SFU | Selective Forwarding Unit |
| SQL | Structured Query Language |
| TCP | Transmission Control Protocol |
| UAVs | Unmanned Aerial Vehicles |
| UVC | USB Video Device Class |
| V4L2 | Video for Linux 2 |
| VPN | Virtual Private Network |
| VR | Virtual Reality |
| WebRTC | Web Real-Time Communication |
| WoT | Web of Things |

References

1. Bacco, M.; Catena, M.; De Cola, T.; Gotta, A.; Tonellotto, N. Performance analysis of WebRTC-based video streaming over power constrained platforms. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, United Arab Emirates, 9–13 December 2018; pp. 1–7.

2. Chodorek, A.; Chodorek, R.R.; Sitek, P. UAV-based and WebRTC-based open universal framework to monitor urban and industrial areas. Sensors 2021, 21, 4061.

3. Lagkas, T.; Argyriou, V.; Bibi, S.; Sarigiannidis, P. UAV IoT framework views and challenges: Towards protecting drones as "Things". Sensors 2018, 18, 4015.

4. Tudevdagva, U.; Battseren, B.; Hardt, W.; Blokzyl, S.; Lippmann, M. Unmanned Aerial Vehicle-Based Fully Automated Inspection System for High Voltage Transmission Line. In Proceedings of the 12th International Forum on Strategic Technology IEEE Conference, IFOST2017, Ulsan, Republic of Korea, 31 May–2 June 2017; pp. 300–305.

5. Automated Power Line Inspection. Available online: https://www.tu-chemnitz.de/informatik/ce/projects/projects.php.en#apoli (accessed on 14 September 2024).

6. AREIOM: Adaptive Research Multicopter Platform. Available online: https://www.tu-chemnitz.de/informatik/ce/research/areiom-adm.php.en (accessed on 14 September 2024).

7. Haohan, H.; Hangfan, Z.; Junhai, L.; Kuanrong, L.; Biao, P. Automatic and intelligent line inspection using UAV based on beidou navigation system. In Proceedings of the 2019 6th International Conference on Information Science and Control Engineering (ICISCE), Shanghai, China, 20–22 December 2019; pp. 1004–1008.

8. Santos-González, I.; Rivero-García, A.; Molina-Gil, J.; Caballero-Gil, P. Implementation and Analysis of Real-Time Streaming Protocols. Sensors 2017, 17, 846.

9. Holland, J.; Begen, A.; Dawkins, S. Operational Considerations for Streaming Media; RFC 9317; RFC Editor: Marina del Rey, CA, USA, 2022.

10. WebRTC. Available online: https://webrtc.org/ (accessed on 14 September 2024).

11. Battseren, B. Software Architecture for Real-Time Image Analysis in Autonomous MAV Missions. Ph.D. Thesis, Chemnitz University of Technology, Chemnitz, Germany, 2024.

12. Gao, C.; Wang, X.; Chen, X.; Chen, B.M. A hierarchical multi-UAV cooperative framework for infrastructure inspection and reconstruction. Control Theory Technol. 2024, 22, 394–405.

13. Liao, Y.H.; Juang, J.G. Real-time UAV trash monitoring system. Appl. Sci. 2022, 12, 1838.

14. Chodorek, A.; Chodorek, R.R.; Yastrebov, A. The prototype monitoring system for pollution sensing and online visualization with the use of a UAV and a WebRTC-based platform. Sensors 2022, 22, 1578.

15. Sacoto-Martins, R.; Madeira, J.; Matos-Carvalho, J.P.; Azevedo, F.; Campos, L.M. Multi-purpose Low Latency Streaming Using Unmanned Aerial Vehicles. In Proceedings of the 2020 12th International Symposium on Communication Systems, Networks and Digital Signal Processing (CSNDSP), Porto, Portugal, 20–22 July 2020; pp. 1–6.

16. Gueye, K.; Degboe, B.M.; Samuel, O.; Ngartabé, K.T. Proposition of health care system driven by IoT and KMS for remote monitoring of patients in rural areas: Pediatric case. In Proceedings of the 2019 21st International Conference on Advanced Communication Technology (ICACT), PyeongChang, Republic of Korea, 17–20 February 2019; pp. 676–680.

17. Wu, C.; Tu, S.; Tu, S.; Wang, L.; Chen, W. Realization of Remote Monitoring and Navigation System for Multiple UAV Swarm Missions: Using 4G/WiFi-Mesh Communications and RTK GPS Positioning Technology. In Proceedings of the 2022 International Automatic Control Conference (CACS), Kaohsiung, Taiwan, 3–6 November 2022; pp. 1–6.

18. Gu, Q.; Michanowicz, D.R.; Jia, C. Developing a modular unmanned aerial vehicle (UAV) platform for air pollution profiling. Sensors 2018, 18, 4363.

19. Kobayashi, T.; Matsuoka, H.; Betsumiya, S. Flying communication server in case of a largescale disaster. In Proceedings of the 2016 IEEE 40th Annual Computer Software and Applications Conference (COMPSAC), Atlanta, GA, USA, 10–14 June 2016; Volume 2, pp. 571–576.

20. Janak, J.; Schulzrinne, H. Framework for rapid prototyping of distributed IoT applications powered by WebRTC. In Proceedings of the 2016 Principles, Systems and Applications of IP Telecommunications (IPTComm), Chicago, IL, USA, 19 October 2016; pp. 1–7.

21. GitHub - Jeffbass/Imagezmq: A Set of Python Classes that Transport OpenCV Images from one Computer to Another Using PyZMQ Messaging. Available online: https://github.com/jeffbass/imagezmq (accessed on 14 September 2024).

22. Image Transmission Protocol; MAVLink Developer Guide. Available online: https://mavlink.io/en/services/image_transmission.html (accessed on 14 September 2024).

23. WOWZA - The Embedded Video Platform for Solution Builders. Available online: https://www.wowza.com/ (accessed on 14 September 2024).

24. VIDIZMO - Low Latency Live Streaming. Available online: https://www.vidizmo.com/low-latency-live-video-streaming/ (accessed on 14 September 2024).

25. Application: Drone-Based Video Streaming with UgCS ENTERPRISE. Available online: https://www.ugcs.com/video-streaming-with-ugcs (accessed on 14 September 2024).

26. nanoStream Webcaster. Available online: https://www.nanocosmos.de/v6/webrtc (accessed on 14 September 2024).

27. Liveswitch SERVER. Available online: https://developer.liveswitch.io/liveswitch-server/index.html (accessed on 14 September 2024).

28. Ultra Low Latency WebRTC Live Streaming Media Server - Ant Media. Available online: https://antmedia.io/ (accessed on 14 September 2024).

29. André, E.; Le Breton, N.; Lemesle, A.; Roux, L.; Gouaillard, A. Comparative study of WebRTC open source SFUs for video conferencing. In Proceedings of the 2018 Principles, Systems and Applications of IP Telecommunications (IPTComm), Chicago, IL, USA, 16–18 October 2018; pp. 1–8.

30. Janus - General Purpose WebRTC Server. Available online: https://janus.conf.meetecho.com/docs/index.html (accessed on 14 September 2024).

31. Mediasoup. Available online: https://mediasoup.org/ (accessed on 14 September 2024).

32. Amirante, A.; Castaldi, T.; Miniero, L.; Romano, S.P. Performance analysis of the Janus WebRTC gateway. In AWeS '15: Proceedings of the 1st Workshop on All-Web Real-Time Systems, Bordeaux, France, 21 April 2015; pp. 1–7.

33. Amirante, A.; Castaldi, T.; Miniero, L.; Romano, S.P. Janus: A general purpose WebRTC gateway. In IPTComm '14: Proceedings of the Conference on Principles, Systems and Applications of IP Telecommunications, Chicago, IL, USA, 1–2 October 2014; pp. 1–8.

34. GStreamer: Open source multimedia framework. Available online: https://gstreamer.freedesktop.org/ (accessed on 14 September 2024).

35. IIT-RTC 2017 Qt WebRTC Tutorial (Qt Janus Client). 2017. Available online: https://www.slideshare.net/slideshow/iitrtc-2017-qt-webrtc-tutorial-qt-janus-client/86890694 (accessed on 14 September 2024).

36. Jansen, B.; Goodwin, T.; Gupta, V.; Kuipers, F.; Zussman, G. Performance evaluation of WebRTC-based video conferencing. ACM SIGMETRICS Perform. Eval. Rev. 2018, 45, 56–68.

37. Kostuch, A.; Gierłowski, K.; Wozniak, J. Performance analysis of multicast video streaming in IEEE 802.11 b/g/n testbed environment. In Proceedings of the Wireless and Mobile Networking: Second IFIP WG 6.8 Joint Conference, WMNC 2009, Gdańsk, Poland, 9–11 September 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 92–105.

38. Baltaci, A.; Cech, H.; Mohan, N.; Geyer, F.; Bajpai, V.; Ott, J.; Schupke, D. Analyzing real-time video delivery over cellular networks for remote piloting aerial vehicles. In Proceedings of the 22nd ACM Internet Measurement Conference, Nice, France, 25–27 October 2022; pp. 98–112.

39. Wireshark · Go Deep. Available online: https://www.wireshark.org/ (accessed on 14 September 2024).

40. NetworkManager - Linux network configuration tool suite. Available online: https://networkmanager.dev/ (accessed on 22 September 2024).

41. iftop: Display Bandwidth Usage on an Interface. Available online: https://pdw.ex-parrot.com/iftop/ (accessed on 22 September 2024).

42. vnStat - A Network Traffic Monitor for Linux and BSD. Available online: https://humdi.net/vnstat/ (accessed on 22 September 2024).

43. testRTC Guide. Available online: https://support.testrtc.com/hc/en-us/categories/8260858196239-testRTC-Guide (accessed on 14 September 2024).

44. Lee, Y.; Sim, J.; Kim, D.H.; You, D. A Comparison of Serialization Formats for Point Cloud Live Video Streaming over WebRTC. In Proceedings of the 2024 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 6–8 January 2024; pp. 1–3.

45. Welcome to RTCBot's Documentation! Available online: https://rtcbot.readthedocs.io/en/latest/ (accessed on 19 September 2024).

46. WebRTC Tutorial - Real-Time Data Transmitting with WebRTC. Available online: https://getstream.io/resources/projects/webrtc/basics/rtcdatachannel (accessed on 19 September 2024).

47. Green, M.; Mann, D.D.; Hossain, E. Measurement of latency during real-time wireless video transmission for remote supervision of autonomous agricultural machines. Comput. Electron. Agric. 2021, 190, 106475.

48. Diallo, B.; Ouamri, A.; Keche, M. A Hybrid Approach for WebRTC Video Streaming on Resource-Constrained Devices. Electronics 2023, 12, 3775.

| Author | Types of UAV | Application | Main Features | Comments |
|---|---|---|---|---|
| Y.-H. Liao and J.-G. Juang (2022) [13] | UAV (Quadcopter) | Real-time UAV trash monitoring | Based on UAV and IoT protocols | Video streaming and data collection using Kafka, ZeroMQ, and ImageZMQ |
| Chodorek et al. (2022) [14] | UAV (Quadcopter) | Monitoring system for pollution sensing and online visualization | WebRTC-based flying monitoring system | Video and data streaming, inspection with integrated sensors |
| Chodorek et al. (2021) [2] | UAV (Quadcopter) | IoT-based system for monitoring of urban and industrial areas | Based on UAV and IoT with WebRTC server | Video streaming, inspection with integrated sensors |
| Sacoto-Martins et al. (2020) [15] | UAV (N.A.) | Multipurpose low-latency streaming using UAV | Use of WebRTC-based Media-Gateway | Real-time video streaming, running on a cloud platform |
| Gueye et al. (2019) [16] | UAV (N.A.) | Remote monitoring and telemedicine for pediatric care in rural areas | Combination of Web of Things (WoT) and Kurento Media Server | Real-time voice, video, and message system using WebRTC |
| Wu et al. (2022) [17] | UAV (N.A.) | Remote monitoring and navigation system for multiple UAVs | System architecture design and simulation | Communication via Micro Air Vehicle Link (MAVLink) protocol |
| Gu et al. (2018) [18] | UAV (Hexacopter) | Real-time monitoring of multiple air pollutants | Modular design | Real-time data fusion and synchronization |
| Lagkas et al. (2018) [3] | UAV (N.A.) | Ground purpose IoT | Enabling UAVs as secure and private IoT devices | Usage of various security and privacy measures for UAVs |
| Bacco et al. (2018) [1] | UAV (N.A.) | Real-time video streaming from UAV to ground station | Power consumption and video quality analysis | WebRTC settings to extend battery life in power-constrained scenarios |
| Kobayashi et al. (2016) [19] | UAV (N.A.) | Communication and information sharing during large-scale disasters | Drone acts as a mobile communication server | Supports asynchronous text chat and real-time video communication between rescue teams |
| Janak et al. (2016) [20] | - | Development of distributed IoT applications | Web-based, virtual ports and a runtime environment for emulating IoT devices | Real-time data streaming from device to device |

| Video Codec | Data Rate (Mbps) | RTT (ms) | Frame Rate (FPS) | Packet Loss | Video Resolution (Pixels) | Color Depth | Frame Size (MB) |
|---|---|---|---|---|---|---|---|
| VP8 | 1.4397 | 83.6 | 47.4 | 2.51 | 854 × 483 | 8 Bit | 0.4125 |
| VP9 | 1.4225 | 84.2 | 45.5 | 2.44 | 892 × 502 | 8 Bit | 0.4478 |
| H.264 | 1.1542 | 77.6 | 36.1 | 2.43 | 1279 × 719 | 8 Bit | 0.9196 |
| H.265 | 8 to 20 | 100 | 30 | 2.00 | 3860 × 2160 | 8 Bit | 8.338 |

| Resolution | Bitrate (Mbps) | Latency Device1 (ms) | Latency Device2 (ms) | Latency Device3 (ms) |
|---|---|---|---|---|
| 1920 × 1080 (Full HD) | 8 | 85 | 120 | 160 |
| 1280 × 720 (HD) | 4 | 70 | 100 | 130 |
| 640 × 480 (SD) | 2 | 60 | 90 | 120 |
| 1920 × 1080 (Full HD) | 4 | 70 | 100 | 140 |
| 1280 × 720 (HD) | 2 | 60 | 90 | 120 |
| 640 × 480 (SD) | 1 | 50 | 80 | 110 |
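The effect of bitrate on latency in the per-device table above can be read off with a short calculation. The sketch below encodes the table values and computes, for each resolution, how many milliseconds each device saves when the bitrate is halved. The data structure and function names are illustrative; the numbers are the ones reported in the table.

```python
# (resolution, bitrate in Mbps) -> latency in ms for (Device1, Device2, Device3)
LATENCY_MS = {
    ("1920x1080", 8): (85, 120, 160),
    ("1280x720", 4): (70, 100, 130),
    ("640x480", 2): (60, 90, 120),
    ("1920x1080", 4): (70, 100, 140),
    ("1280x720", 2): (60, 90, 120),
    ("640x480", 1): (50, 80, 110),
}


def halving_savings(table):
    """Per-device latency reduction (ms) when the bitrate is halved."""
    savings = {}
    for (resolution, bitrate), latencies in table.items():
        if bitrate % 2 != 0:
            continue  # an odd bitrate has no halved counterpart in this table
        halved = table.get((resolution, bitrate // 2))
        if halved is not None:
            savings[resolution] = tuple(a - b for a, b in zip(latencies, halved))
    return savings


def worst_case_latency(table):
    """Highest latency over all rows and devices (ms)."""
    return max(max(latencies) for latencies in table.values())


if __name__ == "__main__":
    print(halving_savings(LATENCY_MS))
    print(worst_case_latency(LATENCY_MS))
```

Halving the bitrate saves 10 to 20 ms per device, and the worst reported value (160 ms) stays well below the 300 ms criterion.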

| Network Scenario | Video Resolution | Bitrate (Mbps) | Latency (ms) | Jitter (ms) | Packet Loss (%) | Network Bandwidth (Mbps) |
|---|---|---|---|---|---|---|
| Wi-Fi | Full HD | 8 | 120 | 10 | 1.5 | 40 |
| Wi-Fi | HD | 4 | 100 | 8 | 1.2 | 25 |
| Wi-Fi | SD | 2 | 90 | 5 | 1 | 15 |
| 4G LTE | Full HD | 8 | 90 | 12 | 1.8 | 35 |
| 4G LTE | HD | 4 | 70 | 10 | 1.5 | 20 |
| 4G LTE | SD | 2 | 60 | 8 | 1.3 | 12 |
| 5G | Full HD | 8 | 30 | 3 | 0.5 | 50 |
| 5G | HD | 4 | 25 | 2 | 0.3 | 40 |
| 5G | SD | 2 | 20 | 1 | 0.2 | 25 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kilic, F.; Hassan, M.; Hardt, W. Prototype for Multi-UAV Monitoring–Control System Using WebRTC. Drones 2024, 8, 551. https://doi.org/10.3390/drones8100551
