1. Introduction

The recent advancements in drone technology have led to their widespread adoption across various industries worldwide. Drones have favorable properties that make them highly suitable for complex mission environments, such as search and rescue operations in forests [1,2,3] and bridge inspections [4,5]. These environments often present challenges such as cluttered obstacles and weak communication and satellite signals. In search and rescue (SAR) tasks, drones must navigate around obstacles efficiently and reach their destinations quickly. In this study, we focus on the navigation problem of drones operating in unknown and complex environments, where they must avoid various obstacles and reach their destinations quickly and safely. To maximize the maneuverability of drones while ensuring flight safety, researchers typically divide the controller into two layers: a low-level angular rate and attitude layer, analogous to the human cerebellum, and a high-level velocity, position, and navigation layer, resembling the cerebrum [6,7,8]. Of these two layers, the low-level control problem has been extensively analyzed, and mature solutions have been established. However, issues persist in high-level control methods that hinder the effective use of the high maneuverability of drones.

Based on extensive research, several factors have been identified as reasons why the high maneuverability of drones is not fully exploited. A recent study identified the latency of perception sensors, such as monocular and stereo cameras, as one contributing factor [9]. It discussed the effect of perception latency on high-speed robotic platforms and proposed a general analysis method to evaluate the maximum tolerable latency when a robot operates at its ultimate velocity. It highlighted that simply reducing the longitudinal velocity does not necessarily guarantee the safety of the robot. However, this method can only adjust the latency tolerance, and the suggested solution is to use more advanced sensors, such as event cameras. In contrast, reducing the latency of software and algorithms is a more economical approach. Another study introduced a low-level multi-sensor fusion algorithm in which vision and three-dimensional (3D) lidar data were segmented, and the core control system received and fused the results to reduce latency [10].

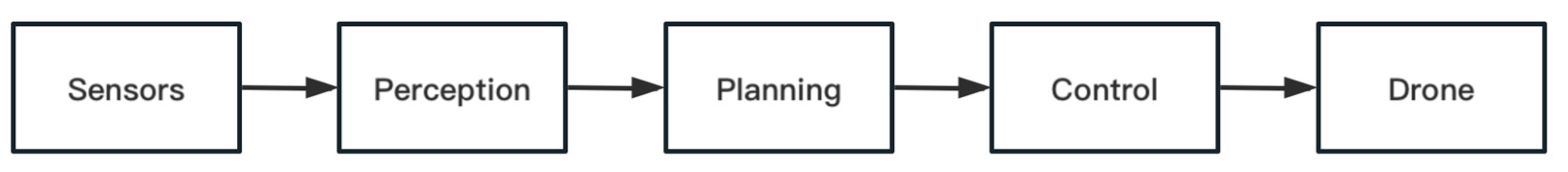

In addition to the factors mentioned above, coordination between modules after modularization is a significant challenge. Traditional navigation pipelines for drones in unknown environments are typically divided into perception, path planning, and control subtasks that solve the autonomous flight problem step by step [11,12,13,14,15,16]. A recent study proposed visual stereo simultaneous localization and mapping (SLAM) for drones, which accelerates the mapping process and provides an approach for maintaining a map with a uniform distribution [17]. The problem of high-quality 3D mapping has been addressed using only onboard, low-computation, and imperfect measurements [18,19,20]. Some researchers focused on path planning using length analysis, search-based, and Euclidean signed distance field methods [21,22,23]. The control subtask has a long history, and there are many well-known and effective algorithms, such as PID, H∞ control, sliding mode control, and model predictive control (MPC) [24]. These methods enable drones to accomplish the tasks mentioned above through a pipeline of cooperating components. This makes the entire system interpretable and facilitates tuning the parameters of each component. Although this design is attractive from an engineering perspective, its sequential nature introduces communication latency between components and wastes computing resources on unnecessary functions, which makes agile flight difficult to achieve.

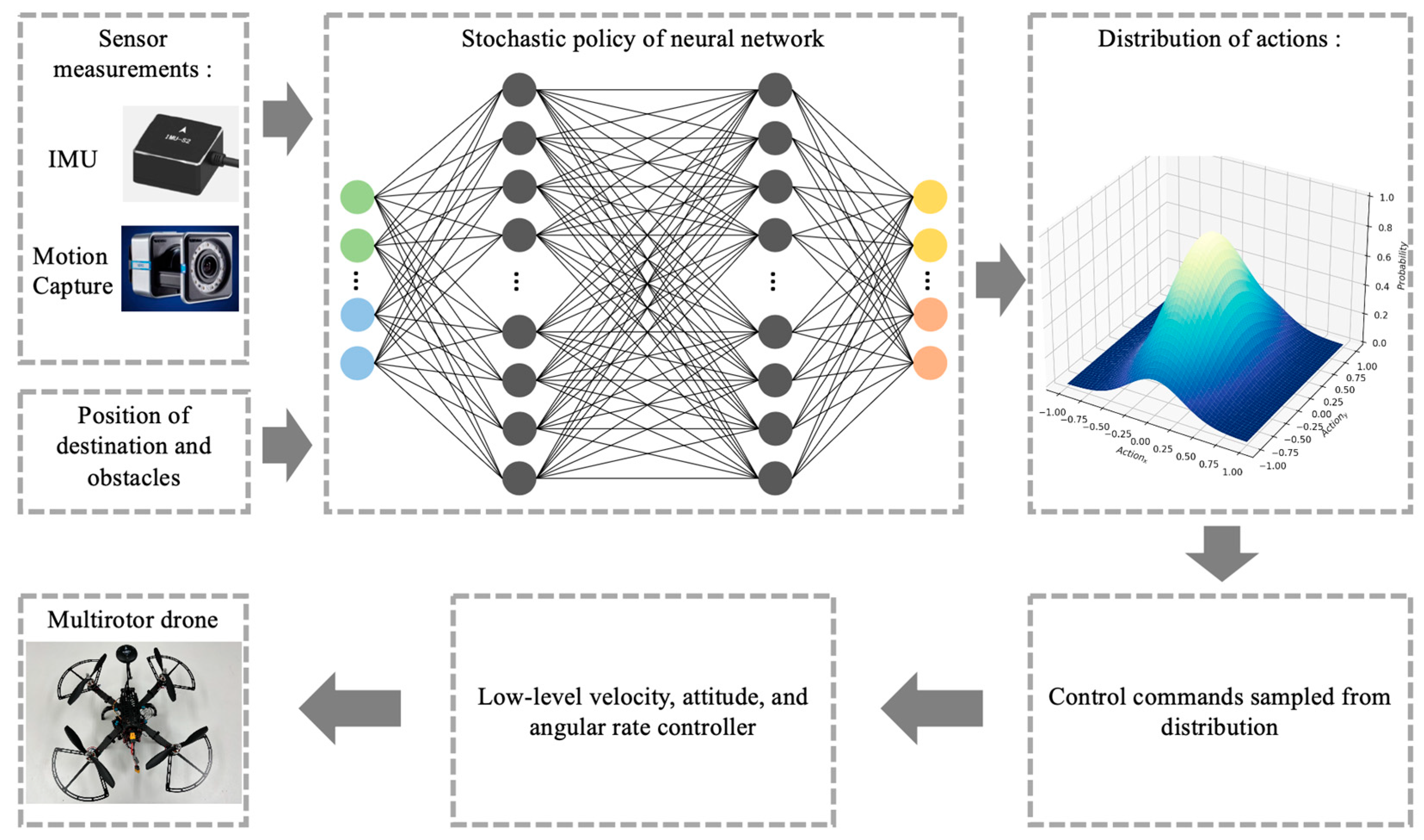

Thus, we propose a new guidance framework with a reinforcement learning (RL)-based direct policy exploration and optimization method to maximize the maneuverability of drones and increase their flight speed in complex environments. In this framework, the low-level angular rate, attitude, and velocity are controlled by a well-tuned cascade PID controller, while the high-level path planning, position controller, and trajectory tracking controller are merged into a single control policy. The policy is a fully connected three-layer neural network that takes sensor measurements as inputs and outputs the distribution of the target velocity commands. Finally, the actual control commands are sampled from this distribution and sent to the low-level controller, as shown in Figure 1. The main contributions of this study are summarized as follows:

A novel navigation framework is proposed to improve the flight speed of drones in complex environments. The framework integrates an RL-based policy to replace the path planning and trajectory tracking controllers of a conventional drone guidance pipeline. Experimental results in both simulated and real-world environments show that it can push the maneuverability of drones toward their physical limits.

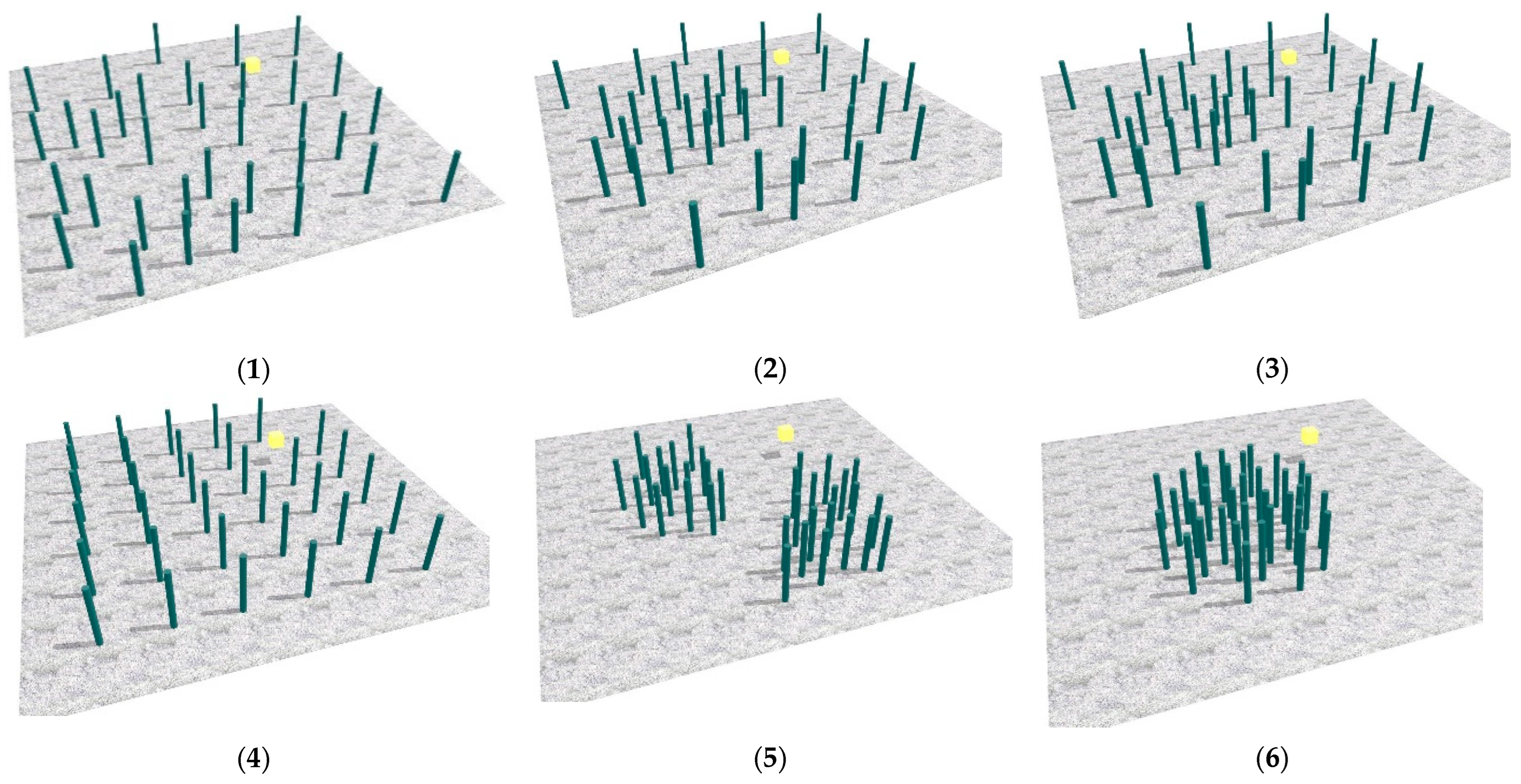

A novel direct policy exploration and optimization method is proposed that eliminates the need for the teacher–student structure commonly used in imitation learning and does not require reference trajectories. To train the policy, multiple environments with varying obstacle distribution characteristics were created (Figure 2). The well-trained policy is then applied directly to real-world drones.

From a practical perspective, we designed a hybrid reward function to facilitate policy exploration and self-improvement. It comprises multiple continuous and discrete terms that evaluate the distance between the drone and the destination, energy consumption, collision risk with obstacles, and other factors.

The remainder of this paper is structured as follows. Section 2 discusses the classical perception–planning–control pipeline and recent RL works. Section 3 describes the low-level model-based attitude controller and the high-level planning-tracking strategy in our approach, along with the designed reward function and the algorithm for direct policy exploration and optimization. Section 4 presents the experiments conducted to train and test the policy of the proposed structure in a simulator; we also apply the method directly to real-world drones and analyze the observed advantages and limitations. Finally, Section 5 discusses the conclusions, opportunities, and challenges for future work.

3. Method

As mentioned previously, we propose a new framework for guiding drones in complex environments. Section 3.1 introduces a model-based attitude controller. Section 3.2 presents the proposed method of direct policy exploration and optimization, which replaces the planning and trajectory-tracking modules of a classical pipeline. Section 3.3 describes the designed reward function, which provides an effective evaluation criterion for policy optimization.

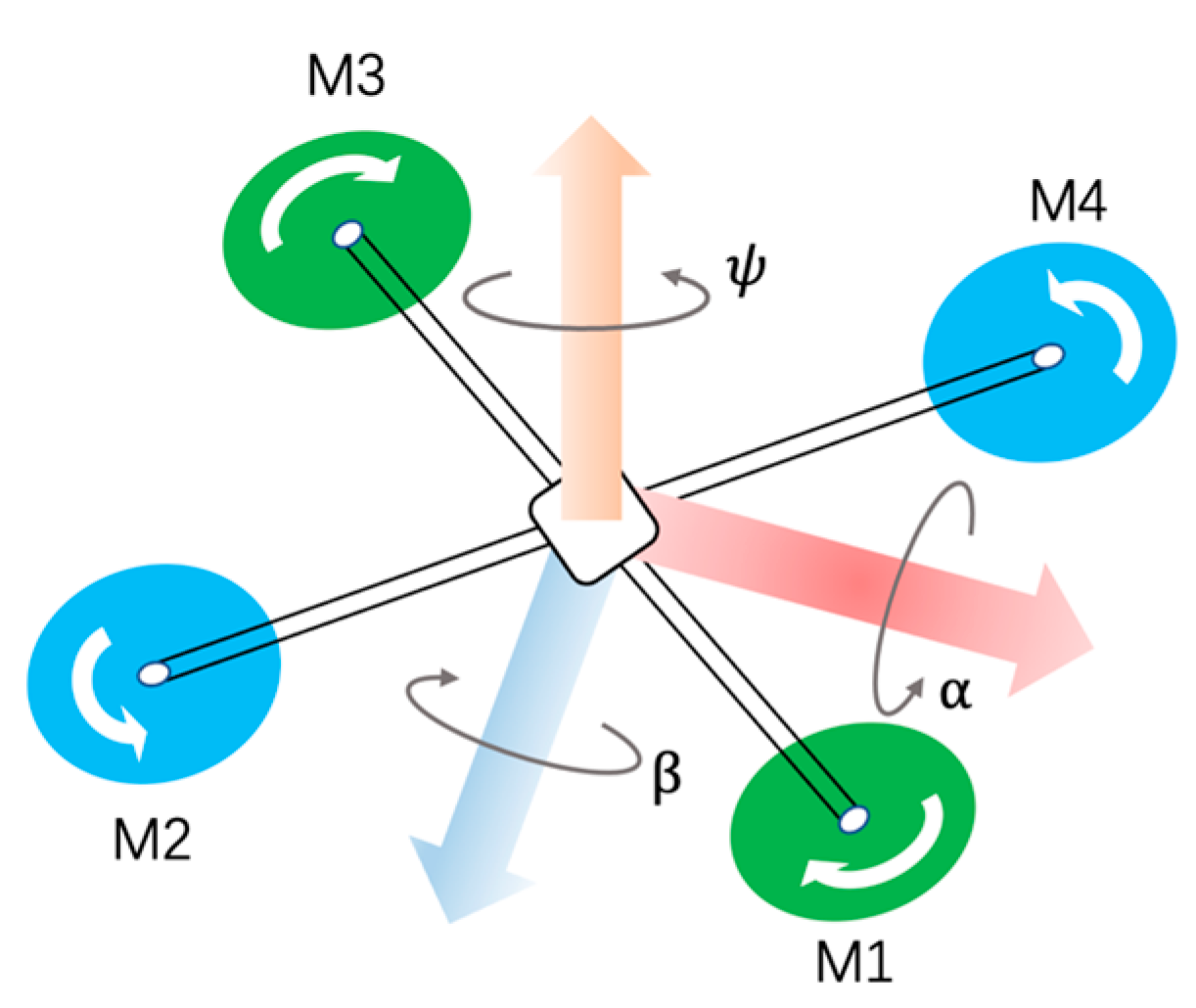

3.1. Low-Level Controller

Drones are typically agile and have six degrees of freedom: 3D position and 3D orientation, as shown in Figure 5. In our method, the low-level controller keeps the drone stable and agile and precisely executes velocity commands from the high-level RL controller.

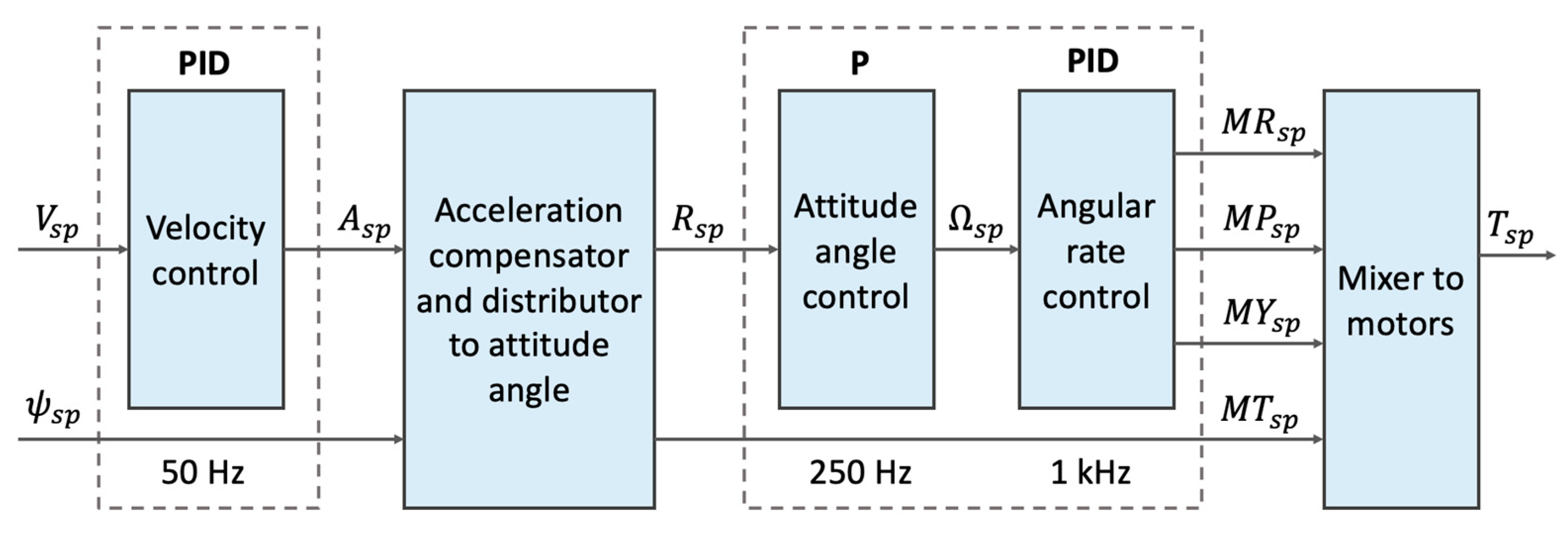

We propose a cascaded control architecture that combines P and PID controllers with different loop frequencies for the low-level control task. As shown in Figure 6, the velocity setpoint is input to the outer-loop velocity control layer, which outputs the expected acceleration to the compensator and distributor layer. This layer is not a controller but a simple force-decomposition function of the expected acceleration and the gravitational acceleration g, so it is not elaborated further here. The layer is necessary because motor thrust is limited, and our goal is to maximize the maneuverability of drones within the available power. The maximum horizontal acceleration and the maximum target attitude angle must therefore be restricted to a reasonable range to avoid crashes caused by insufficient power.
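The compensator and distributor layer is described only as a simple decomposition of forces. The following minimal sketch shows one common way such a layer can turn a desired acceleration into roll, pitch, and thrust setpoints while enforcing the horizontal-acceleration and tilt limits mentioned above. It assumes a z-up world frame, ZYX Euler angles, and thrust along the body z-axis; the function name `distribute_acceleration` and all limit values are hypothetical and not taken from the paper.

```python
import math


def distribute_acceleration(ax, ay, az, yaw, g=9.81,
                            a_xy_max=8.0, tilt_max=math.radians(35.0)):
    """Convert a desired world-frame acceleration into attitude and thrust setpoints.

    Hypothetical sketch: z-up world frame, ZYX Euler angles, collective thrust
    along the body z-axis. Returns (roll, pitch, specific_thrust) in rad and m/s^2.
    """
    # Limit the horizontal acceleration so the required tilt stays within the power budget.
    a_xy = math.hypot(ax, ay)
    if a_xy > a_xy_max:
        scale = a_xy_max / a_xy
        ax, ay = ax * scale, ay * scale

    # Specific thrust vector in the world frame: desired acceleration plus gravity compensation.
    fx, fy, fz = ax, ay, max(az + g, 1e-3)  # keep a small positive vertical component

    # Express the horizontal part in the yaw-aligned frame.
    c, s = math.cos(yaw), math.sin(yaw)
    fx_h = c * fx + s * fy
    fy_h = -s * fx + c * fy

    # Solve T * R_z(yaw) R_y(pitch) R_x(roll) e_z = f for pitch, roll, and T.
    pitch = math.atan2(fx_h, fz)
    roll = math.atan2(-fy_h, math.hypot(fx_h, fz))
    thrust = math.sqrt(fx * fx + fy * fy + fz * fz)

    # Restrict the target attitude angles.
    pitch = max(-tilt_max, min(tilt_max, pitch))
    roll = max(-tilt_max, min(tilt_max, roll))
    return roll, pitch, thrust
```

Clamping roll and pitch independently is a simplification; a flight stack might instead limit the total tilt angle.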

The attitude angle setpoint is then input to the cascaded inner-loop attitude angle and angular rate controllers, which output the motor thrust commands in the roll, pitch, and yaw directions. These three setpoints, together with the throttle setpoint, are decomposed into four rotor speed setpoints according to Equation (10).
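Equation (10) is not reproduced in this section. As an illustration only, a quad-X mixer can be written as a small matrix that maps the throttle, roll, pitch, and yaw setpoints to four normalized motor commands. The motor order and sign convention in `MIX` are assumptions that must be matched to the actual airframe, and the real mapping to rotor speeds may include scaling not shown here.

```python
# Hypothetical quad-X mixing matrix: rows are motors, columns are
# (throttle, roll, pitch, yaw). Signs are an assumed convention; diagonal
# motor pairs share the yaw sign because they spin in the same direction.
MIX = [
    (1.0, -1.0, -1.0, +1.0),  # front-right
    (1.0, +1.0, +1.0, +1.0),  # rear-left
    (1.0, +1.0, -1.0, -1.0),  # front-left
    (1.0, -1.0, +1.0, -1.0),  # rear-right
]


def mix(throttle, roll, pitch, yaw, lo=0.0, hi=1.0):
    """Map throttle and roll/pitch/yaw commands to four normalized motor commands."""
    u = (throttle, roll, pitch, yaw)
    outputs = []
    for row in MIX:
        value = sum(w * x for w, x in zip(row, u))
        outputs.append(min(hi, max(lo, value)))  # saturate to the actuator range
    return outputs
```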

Notably, each controller in the cascade runs at a different loop frequency. The inner-loop attitude angle and angular rate controllers operate at 250 Hz and 1 kHz, respectively, because the angular rate responds faster than the attitude angle. In contrast, the outer-loop velocity controller operates at 50 Hz, because the velocity is controlled indirectly by changing the attitude angle and therefore responds more slowly.
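A minimal sketch of how these three rates can share one clock, assuming (hypothetically) that the slower loops are triggered on integer divisions of a 1 kHz base tick; the function `run_cascade` only counts loop executions and does not model the controllers themselves.

```python
def run_cascade(duration_s=1.0, base_hz=1000, attitude_hz=250, velocity_hz=50):
    """Count how often each loop of a multi-rate cascade runs on a shared base clock."""
    att_div = base_hz // attitude_hz   # 4 ticks per attitude update
    vel_div = base_hz // velocity_hz   # 20 ticks per velocity update
    counts = {"rate": 0, "attitude": 0, "velocity": 0}
    for tick in range(int(duration_s * base_hz)):
        if tick % vel_div == 0:
            counts["velocity"] += 1    # outer loop: velocity -> acceleration setpoint
        if tick % att_div == 0:
            counts["attitude"] += 1    # attitude angle -> angular-rate setpoint
        counts["rate"] += 1            # innermost loop: angular rate -> motor commands
    return counts
```

Running it for one second gives 1000 angular-rate, 250 attitude, and 50 velocity updates, matching the frequencies stated above.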

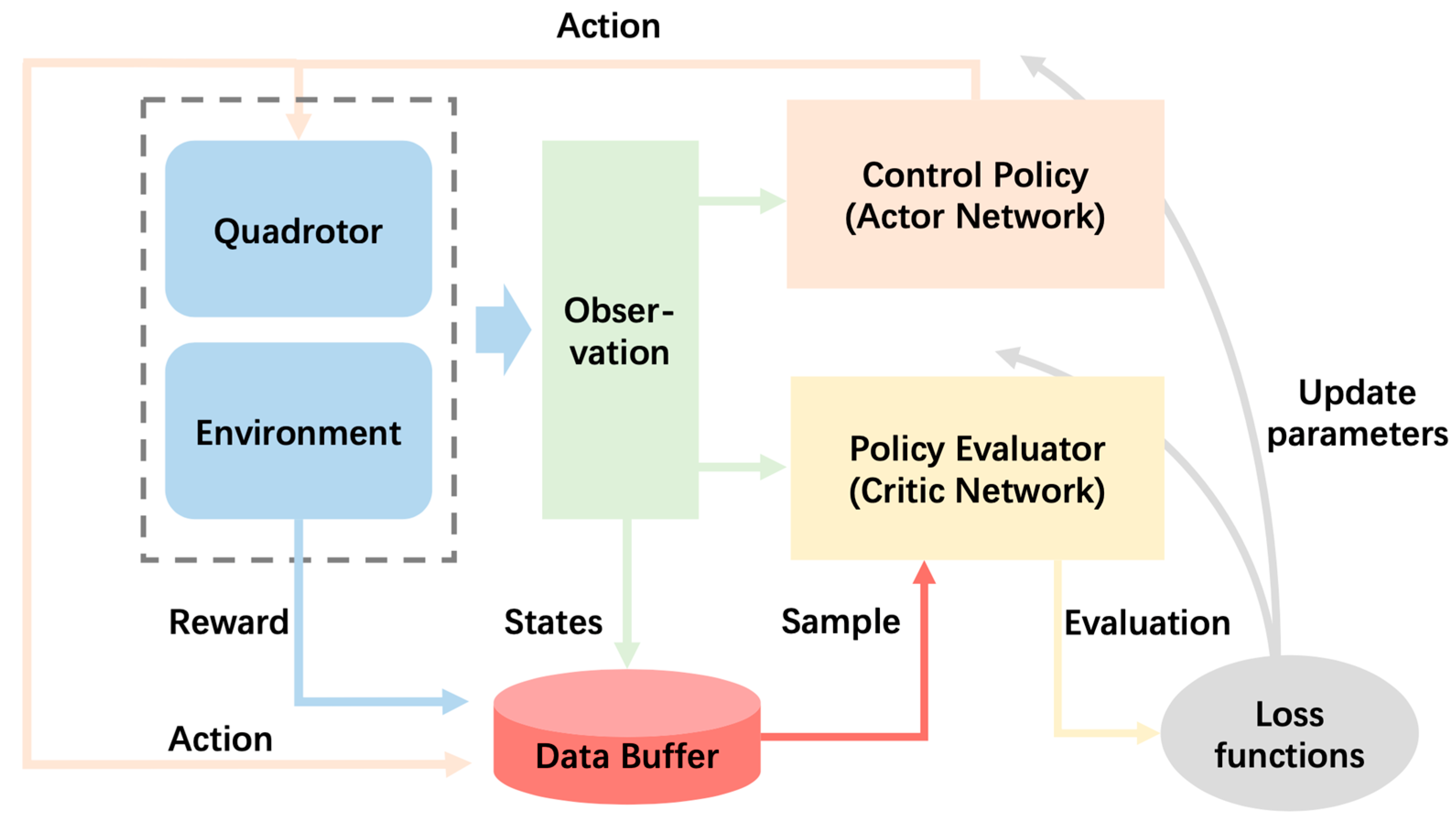

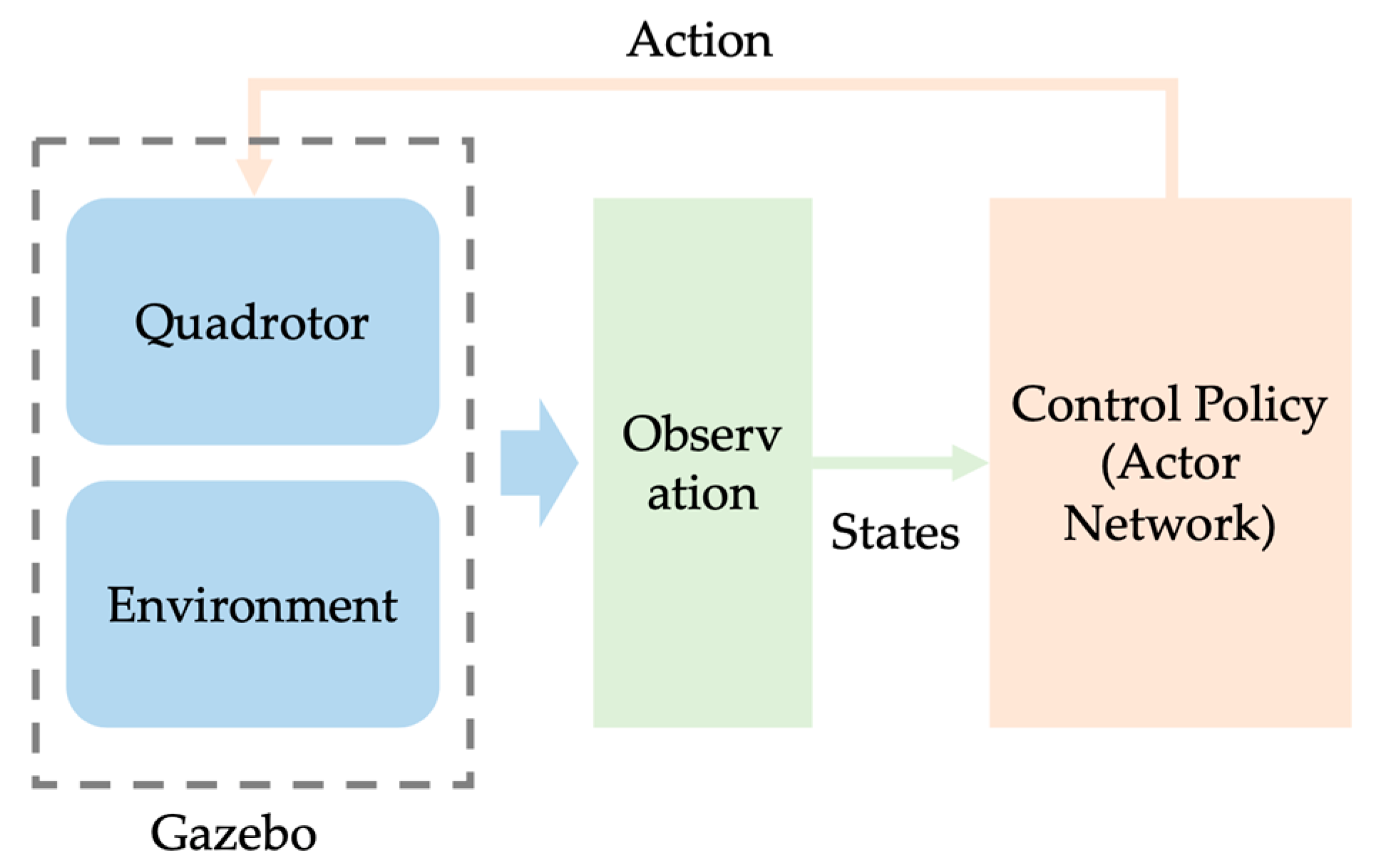

3.2. High-Level Policy

As mentioned previously, we aim to fundamentally address the high latency of perception-to-planning pipelines for drones flying in complex environments and thereby maximize their agility. We used the proximal policy optimization (PPO) algorithm to process, in parallel, the information on the environment and drone states gathered from both onboard and external sensors. The algorithm generates control commands that are fed directly to the low-level controller in an end-to-end scheme. The policy training framework is illustrated in Figure 7, which shows a subset of the state variables of the drone and its environment obtained through a combination of onboard and external sensors.

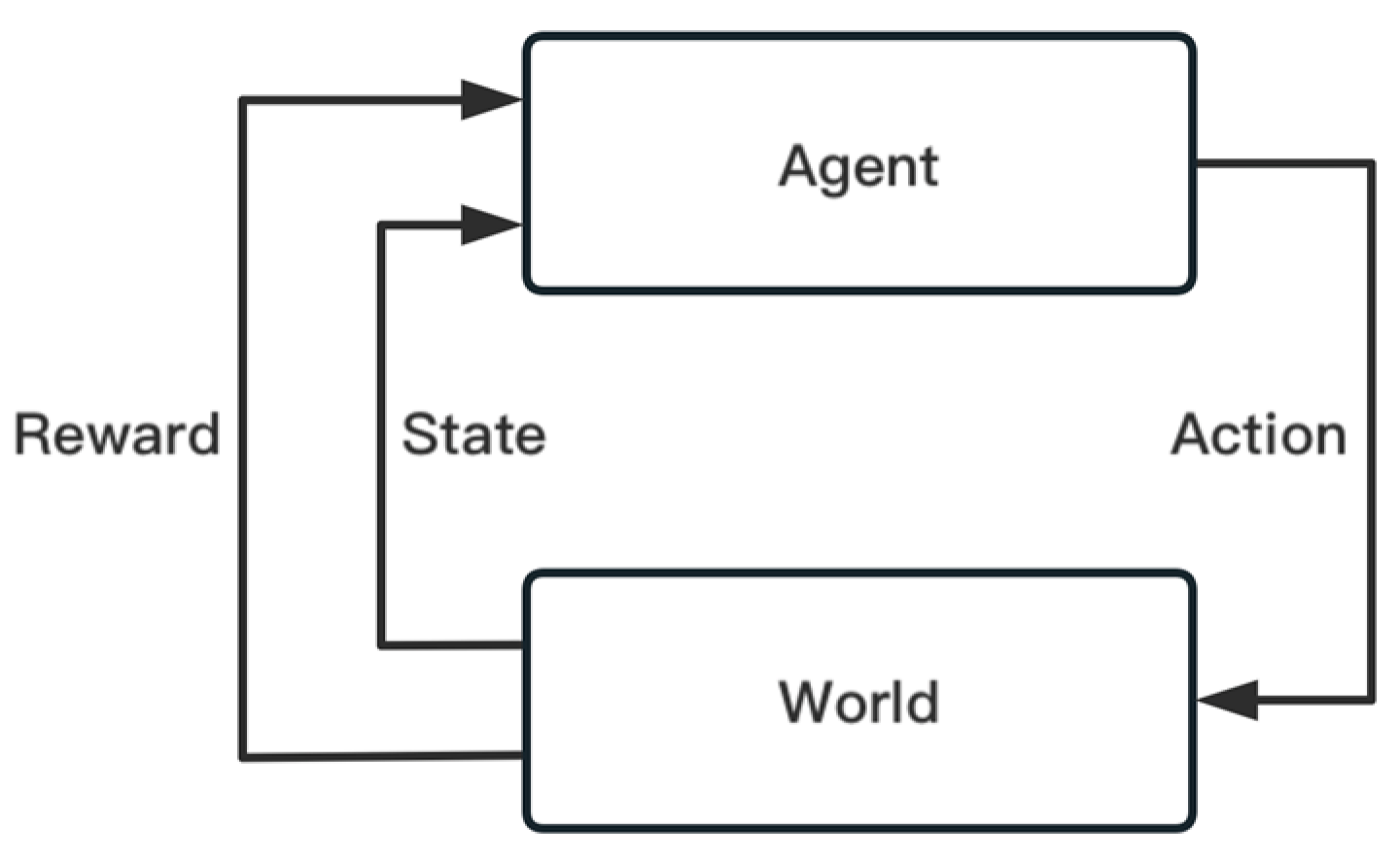

The framework consists of two neural networks. The first is the actor network with parameters θ, which is optimized to approximate the optimal control policy; based on the current observation, it provides a control command (referred to as an action in RL) directly to the low-level controller. At the beginning of training, actions are chosen randomly; after each action takes effect, the drone transitions to a new state, and the environment returns a reward that indicates the value of the action. We refer to each complete navigation task as an episode and to the sequence of states, actions, and rewards obtained during it as a trajectory τ. After several episodes, all trajectory data are stored in a data buffer $\mathcal{D}$, from which they are periodically sampled to calculate the value of each state and action according to Equation (1).
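Equation (1) is not reproduced in this section. Assuming it is the standard discounted rewards-to-go with a discount factor γ (called `gamma` here; the default value is hypothetical), the per-step targets of a trajectory can be computed in a single backward pass:

```python
def rewards_to_go(rewards, gamma=0.99):
    """Discounted return from each step to the end of the episode (assumed form of Equation (1))."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running  # R_t = r_t + gamma * R_{t+1}
        returns[t] = running
    return returns
```

For example, three unit rewards with `gamma=0.5` give `[1.75, 1.5, 1.0]`.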

The value function V(s) in Equation (2) gives the value of a state s at a given training iteration and is obtained by sampling and computing from the data buffer. The action–value function Q(s, a) in Equation (3) represents the value of taking action a in that state and is approximated by a critic network. The advantage of action a in state s can then be obtained as:

$$A(s, a) = Q(s, a) - V(s) \quad (11)$$
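In practice, Q(s_t, a_t) is often replaced by the sampled discounted return, so Equation (11) becomes the return minus the critic's value estimate. The sketch below assumes that one-sample estimate; the optional `normalize` flag is a common stabilization trick and an assumption, not a detail reported in the text.

```python
def advantages(returns, values, normalize=False):
    """Estimate A(s_t, a_t) as the sampled return minus the critic's value estimate V(s_t)."""
    adv = [g - v for g, v in zip(returns, values)]
    if normalize and len(adv) > 1:
        mean = sum(adv) / len(adv)
        std = (sum((a - mean) ** 2 for a in adv) / len(adv)) ** 0.5
        adv = [(a - mean) / (std + 1e-8) for a in adv]  # zero mean, unit variance
    return adv
```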

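For the actor and critic networks, we used two different loss functions to calculate the parameter gradients and optimized them with stochastic gradient methods. The actor network parameters are updated by maximizing the PPO clip objective:

$$\theta_{k+1} = \arg\max_{\theta} \frac{1}{|\mathcal{D}_k| T} \sum_{\tau \in \mathcal{D}_k} \sum_{t=0}^{T-1} \min\left( r_t(\theta)\, A^{\pi_{\theta_k}}(s_t, a_t),\ \operatorname{clip}\left(r_t(\theta), 1-\epsilon, 1+\epsilon\right) A^{\pi_{\theta_k}}(s_t, a_t) \right), \qquad r_t(\theta) = \frac{\pi_{\theta}(a_t \mid s_t)}{\pi_{\theta_k}(a_t \mid s_t)} \quad (12)$$

The set of trajectories collected by applying the control policy $\pi_{\theta_k}$ in the training environment is denoted as $\mathcal{D}_k$, and T is the number of time steps in a trajectory randomly sampled from $\mathcal{D}_k$. Equation (12) contains two surrogate terms. In the first, the probability ratio $r_t(\theta)$ sets the step length of the parameter update along the policy gradient, but on its own it can lead to an unexpectedly large policy update [32]. To ensure stable and convergent policy optimization, policy updates must be constrained within a reasonable range. PPO therefore introduces a clip function with a hyperparameter ε that limits the step length of each policy update:

$$\operatorname{clip}(r, 1-\epsilon, 1+\epsilon) = \min\left(\max\left(r, 1-\epsilon\right), 1+\epsilon\right) \quad (13)$$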
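A minimal sketch of the clipped surrogate in Equation (12), averaged over a batch of steps. It assumes the log probabilities of each taken action under the new and old policies are already available; the function name and the default ε = 0.2 are hypothetical. With a neural network, one would minimize the negative of this value using an automatic-differentiation library.

```python
import math


def ppo_clip_objective(logp_new, logp_old, adv, eps=0.2):
    """Average clipped surrogate objective (to be maximized) over a batch of steps."""
    total = 0.0
    for lp_new, lp_old, a in zip(logp_new, logp_old, adv):
        ratio = math.exp(lp_new - lp_old)                 # pi_theta / pi_theta_k
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)   # clip(r, 1 - eps, 1 + eps)
        total += min(ratio * a, clipped * a)              # pessimistic of the two surrogates
    return total / len(adv)
```

Taking the minimum means that a large ratio cannot increase the objective beyond the clipped value, which is what bounds the size of each policy update.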

In contrast to the actor network, the critic network is updated by gradient descent, and its objective function is the mean-squared error:

$$\phi_{k+1} = \arg\min_{\phi} \frac{1}{|\mathcal{D}_k| T} \sum_{\tau \in \mathcal{D}_k} \sum_{t=0}^{T-1} \left( V_{\phi}(s_t) - \hat{R}_t \right)^2 \quad (14)$$

Here, $\hat{R}_t$ is the discounted accumulated reward from the current state to the end of the episode, calculated according to Equation (1) on a trajectory τ sampled from the dataset $\mathcal{D}_k$, and $V_{\phi}(s_t)$ is the value of the current state predicted by the critic network with the current parameters φ.
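The critic update is a least-squares regression of the value estimate onto the rewards-to-go. To keep the sketch dependency-free, it uses a hypothetical linear critic V(s) = w · s + b instead of the paper's neural network; the learning rate and epoch count are illustrative.

```python
def critic_mse(values, returns):
    """Mean-squared error between critic predictions V(s_t) and rewards-to-go R_t."""
    return sum((v - r) ** 2 for v, r in zip(values, returns)) / len(values)


def fit_linear_critic(states, returns, lr=0.1, epochs=200):
    """Full-batch gradient descent on the MSE objective for a linear critic."""
    dim, n = len(states[0]), len(states)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        preds = [sum(wi * si for wi, si in zip(w, s)) + b for s in states]
        errs = [p - r for p, r in zip(preds, returns)]
        # d(MSE)/dw_i = (2/n) * sum(err * s_i); d(MSE)/db = (2/n) * sum(err)
        for i in range(dim):
            w[i] -= lr * 2.0 / n * sum(e * s[i] for e, s in zip(errs, states))
        b -= lr * 2.0 / n * sum(errs)
    return w, b
```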

We then obtain the learning algorithm, summarized in Algorithm 1, by optimizing the actor and critic networks synchronously and stepwise.

3.3. Environment Setting and Reward Function

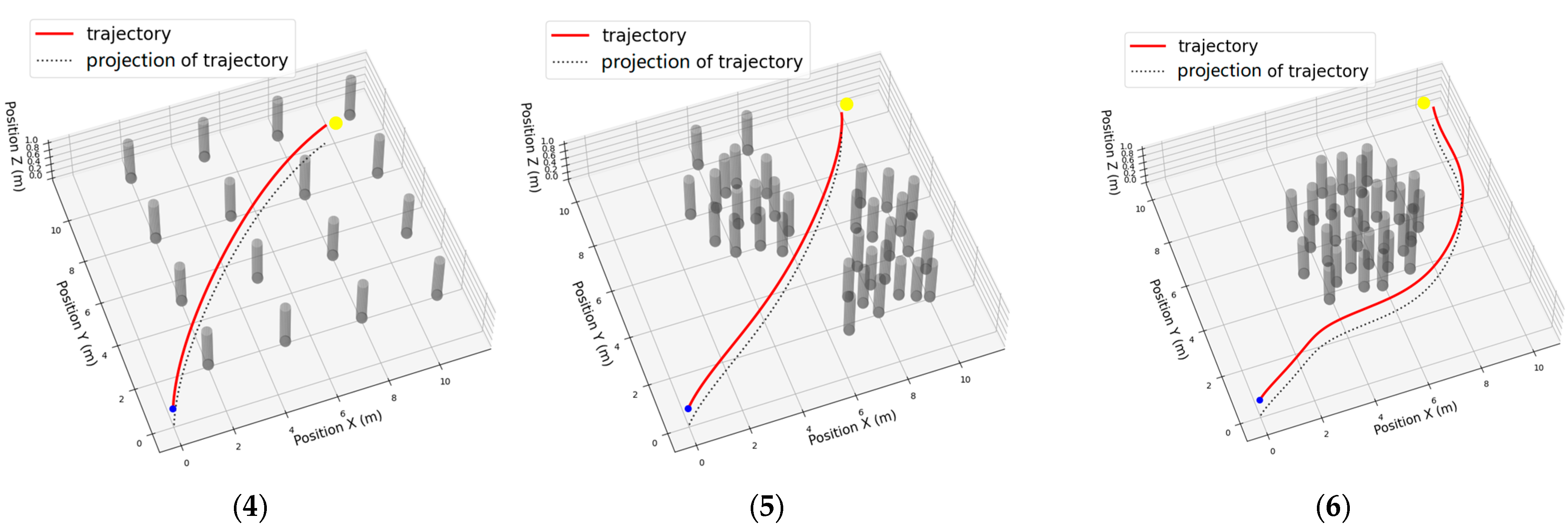

As shown in Figure 2, the designed environments contain varying numbers of cylindrical obstacles. The goal of this study is to enable drones to traverse cluttered environments safely and quickly. Without mechanical protection, a drone may crash after colliding with an obstacle, and its delicate components, such as propellers and motors, are easily damaged.

The reward function is the criterion for measuring the quality of a single action step, and both the value function and the action–value function are built on it. Therefore, the reward function must drive the control policy to avoid obstacles and fly to the destination as quickly as possible. Because our learning algorithm uses a clip function and the magnitude of a single policy update is constrained by the clipping hyperparameter, the reward function should be as continuous and smooth as possible to ensure stable convergence and avoid local optima. In accordance with these requirements, we designed a composite reward function with five components $r_i$ and their weights $w_i$:

$$r = \sum_{i=1}^{5} w_i r_i$$

The first term is a continuous reward based on the distance between the drone and the destination: it is positive when the drone approaches the goal and negative otherwise. The second term represents the energy consumed by the current action. Because the control input is the flight velocity v of the drone and its kinetic energy is $\frac{1}{2}mv^2$, the constant factor $\frac{1}{2}m$ is moved into the weight for conciseness, leaving a term proportional to $v^2$. This term discourages unnecessary actions, favors the shortest path, and suppresses action noise during a mission. The third term (Equation (16)) is a soft constraint on the flight speed. In practice, a drone's capability is limited by the performance of its power system and its maneuverability, and sustained high loads may lead to serious accidents such as motor failure. This term therefore gives a negative reward when the drone exceeds the speed limit, relieving the burden on the power system.

The fourth and fifth terms address sparse rewards in specific scenarios. When the drone collides with an obstacle, a sign function provides a large negative reward as a punishment, whereas reaching the destination provides an extra positive reward. In addition, these terms can be extended to reward points of interest or to penalize additional dangerous areas in the task.
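The five terms described above can be sketched as a single function. This is a hypothetical illustration, not the authors' implementation: the weights, the speed limit `v_max`, the crash penalty and goal bonus, the use of the change in distance as the progress term, and the split of the sparse terms into a terminal term plus an optional extra term are all assumptions.

```python
import math


def composite_reward(prev_dist, dist, velocity, collided, arrived,
                     weights=(1.0, 0.05, 0.5, 1.0, 1.0),
                     v_max=8.0, crash_penalty=-100.0, goal_bonus=100.0,
                     extra=0.0):
    """Hypothetical five-term reward; all numeric values are placeholders."""
    w1, w2, w3, w4, w5 = weights
    # 1) Progress: positive when the distance to the goal shrinks, negative otherwise.
    r_progress = prev_dist - dist
    # 2) Energy: proportional to kinetic energy, with m/2 folded into w2.
    speed_sq = sum(v * v for v in velocity)
    r_energy = -speed_sq
    # 3) Soft speed limit: penalize only the excess above v_max.
    r_speed = -max(0.0, math.sqrt(speed_sq) - v_max)
    # 4) Sparse terminal term: crash penalty or arrival bonus.
    r_terminal = crash_penalty if collided else (goal_bonus if arrived else 0.0)
    # 5) Optional task-specific shaping (e.g., points of interest or dangerous areas).
    r_extra = extra
    return (w1 * r_progress + w2 * r_energy + w3 * r_speed
            + w4 * r_terminal + w5 * r_extra)
```

The dense terms (1 to 3) vary continuously with the state, in line with the smoothness requirement above, while the sparse terms only fire at terminal events.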

4. Experiment

This section is divided into four parts. Section 4.1 describes the configuration of the simulator environment and the method and parameters used for policy training. Section 4.2 presents the training results, where the average reward demonstrates the convergence of the algorithm in all designed environments. Sections 4.3 and 4.4 describe the performance of the trained controller in a simulator and in the real world, respectively. The results indicate that the proposed algorithm performs well in different environments, with both its average and maximum flight speeds exceeding those of the PID algorithm.

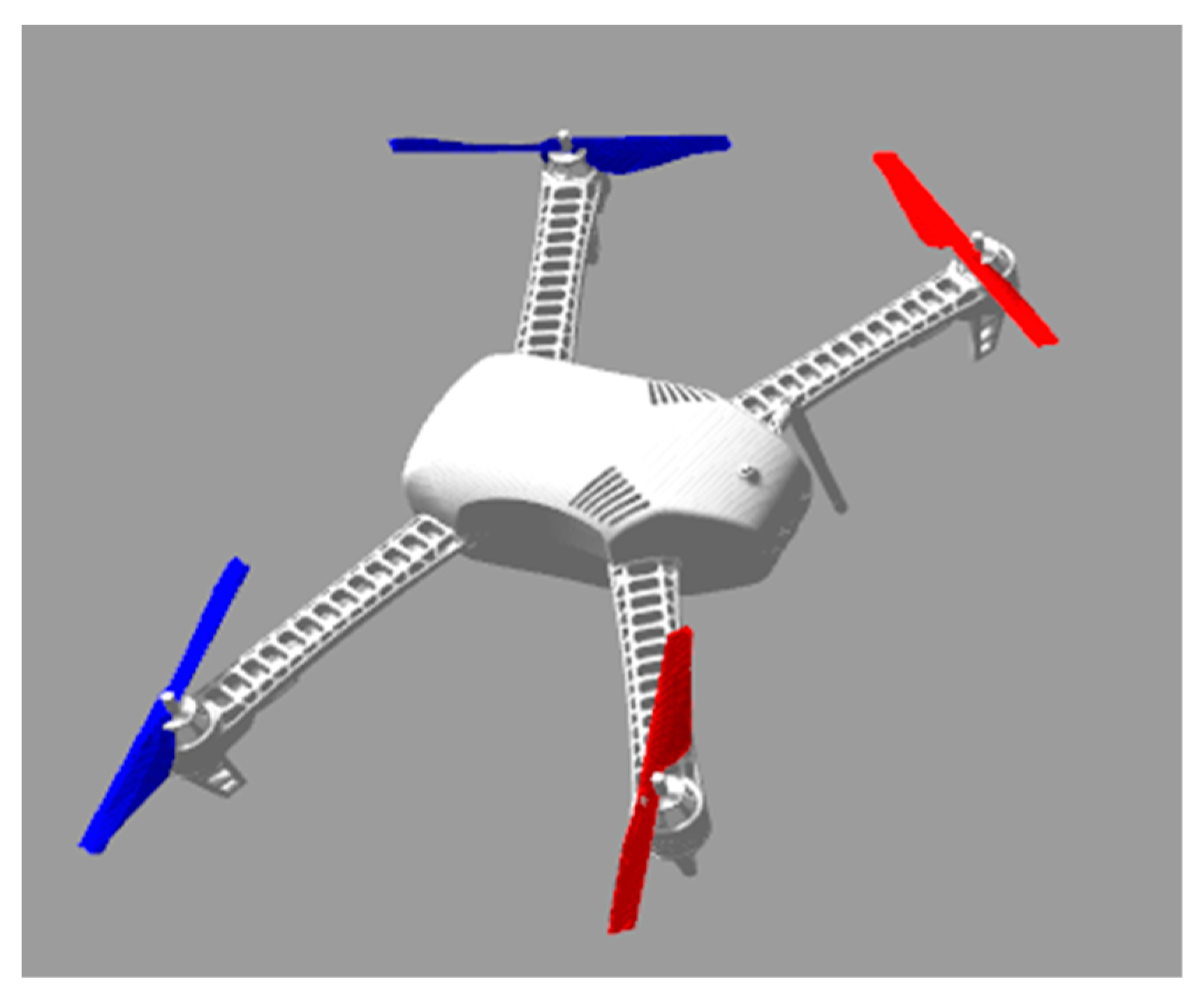

4.1. Policy Training Frame in Simulator

As described in Algorithm 1, the RL training process involves interacting with the environment based on the current policy, accumulating experience data, calculating the advantage function and the policy gradient, and updating the parameters of the actor and critic networks, with this process repeated iteratively. To improve the efficiency of collecting experience data and ensure the safety of the interaction process, we used the Gazebo simulator to simulate the state of a drone in the real world. We used the drone model (Figure 8) from [38], which was designed for simulation in the Robot Operating System (ROS) and Gazebo; its frame parameters are listed in Table 1.

Algorithm 1. Clipped Advantage Policy Optimization Algorithm.

1: Initialize the actor and critic networks with random parameters $\theta_0$ and $\phi_0$.

2: Initialize the dataset $\mathcal{D}$ to store trajectories τ.

3: for k = 0, 1, 2, …, (max episode) do

4: Initialize the environment and drone states and obtain the initial observation.

5: for t = 0, 1, 2, …, (max step per episode) do

6: Get the action $a_t$ and its log probability according to the observation $s_t$ and the policy $\pi_{\theta_k}$.

7: Apply $a_t$, and get the new state $s_{t+1}$ and reward $r_t$ from the environment.

8: Record the transition tuple in the trajectory τ.

9: if crashed, or arrived at the destination, or reached the max timestep then

10: Reset the environment and drone states.

11: Store the trajectory τ in $\mathcal{D}_k$.

12: end for

13: Calculate the rewards-to-go using Equation (1) for every trajectory.

14: Calculate the advantage estimates according to Equation (11) based on the current value function.

15: Update the actor network using Equation (12).

16: Update the critic network using Equation (14).

17: end for
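Steps 4 to 12 of Algorithm 1 amount to rolling out the current policy until enough experience has been gathered. The sketch below organizes that loop around a hypothetical one-dimensional stand-in for the Gazebo task (`ToyLineEnv`) and a random Gaussian policy; the environment, the reward, and the batch sizes are illustrative assumptions only.

```python
import random


class ToyLineEnv:
    """Hypothetical 1-D stand-in for the simulator: fly from x = 0 to x >= goal."""

    def __init__(self, goal=5.0):
        self.goal = goal
        self.x = 0.0

    def reset(self):
        self.x = 0.0
        return self.x

    def step(self, velocity, dt=0.1):
        prev = self.goal - self.x
        self.x += velocity * dt
        dist = self.goal - self.x
        crashed = self.x < -1.0          # leaving the corridor counts as a crash
        arrived = dist <= 0.0
        reward = prev - dist             # progress term only
        return self.x, reward, crashed or arrived


def collect_batch(env, policy, timesteps_per_batch=200, max_steps=50):
    """Roll out the policy until enough steps are collected (steps 4-12 of Algorithm 1)."""
    dataset, steps = [], 0
    while steps < timesteps_per_batch:
        obs, trajectory = env.reset(), []
        for _ in range(max_steps):
            action = policy(obs)
            next_obs, reward, done = env.step(action)
            trajectory.append((obs, action, reward))
            obs = next_obs
            steps += 1
            if done:                     # crash or arrival ends the episode early
                break
        dataset.append(trajectory)       # store the finished trajectory
    return dataset


rng = random.Random(0)
batch = collect_batch(ToyLineEnv(), lambda x: rng.gauss(1.0, 0.5))
```

The collected batch would then feed the rewards-to-go, advantage, and network-update steps (13 to 16).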

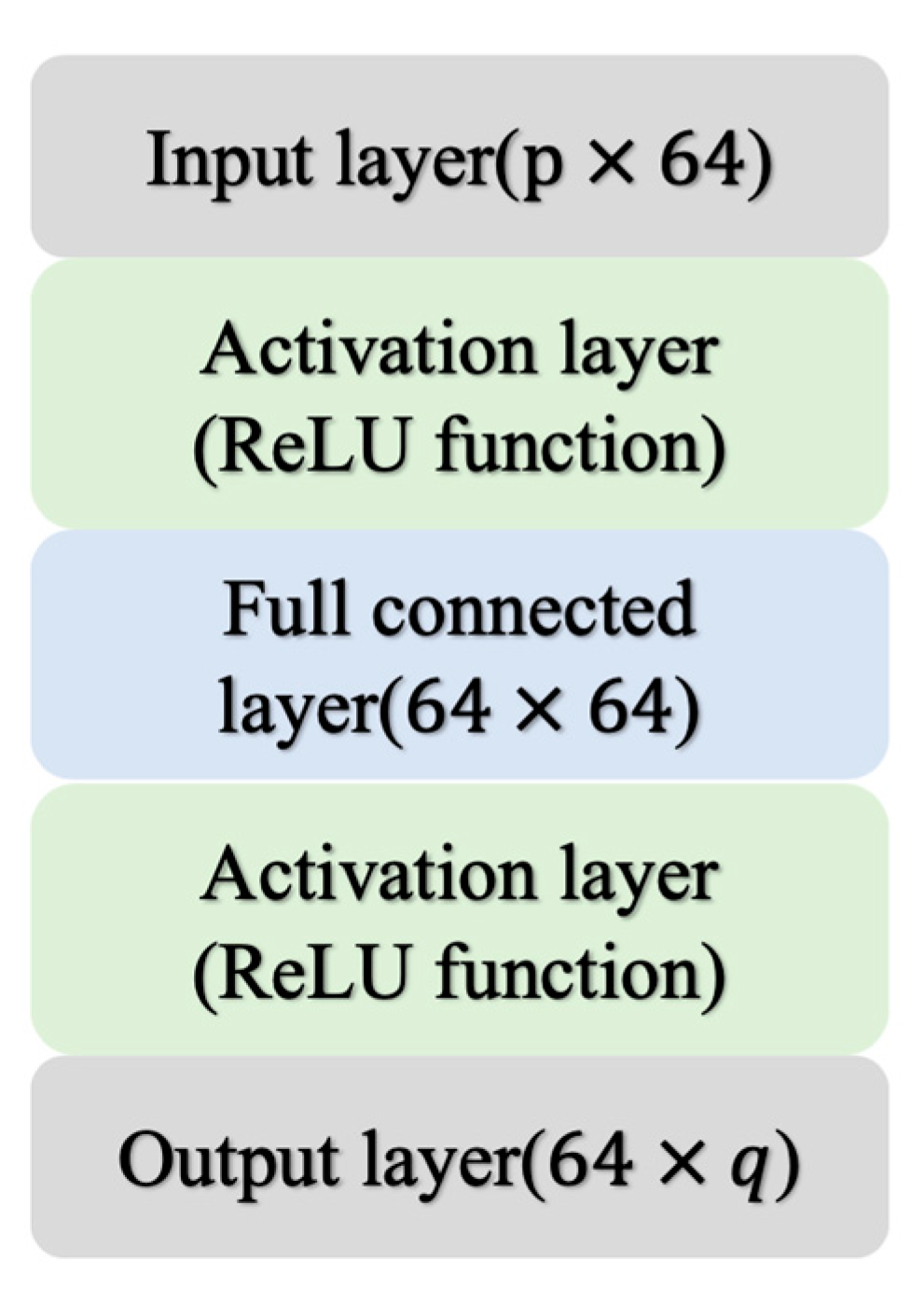

We used two structurally identical neural networks as the actor and critic networks; they have the same three-layer structure and, apart from the output layer, the same number of parameters, as shown in Figure 9. In both networks, a ReLU activation follows the input layer and the fully connected hidden layer. The networks differ only in their output layers: both input layers have the same dimension as the system state, whereas the output layer has q = 1 for the critic network and q = 2 for the actor network.
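A dependency-free sketch of the two networks follows. The hidden width of 64, the state dimension of 8, the He-style initialization, and the reading of the actor's two outputs as the mean of a 2-D velocity command are all hypothetical choices for illustration.

```python
import random


def init_mlp(in_dim, hidden, out_dim, seed=0):
    """Random weights for a three-layer fully connected network."""
    rng = random.Random(seed)
    sizes = [in_dim, hidden, hidden, out_dim]
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = (2.0 / n_in) ** 0.5                  # He-style scaling for ReLU layers
        W = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        b = [0.0] * n_out
        layers.append((W, b))
    return layers


def forward(layers, x):
    """Apply ReLU after every layer except the last, which stays linear."""
    for i, (W, b) in enumerate(layers):
        x = [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


STATE_DIM = 8                          # hypothetical; equals the system-state dimension
actor = init_mlp(STATE_DIM, 64, 2)     # q = 2: mean of the velocity command
critic = init_mlp(STATE_DIM, 64, 1)    # q = 1: state-value estimate
```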

4.2. Policy Training Process

We then used the hyperparameters in Table 2 to train the control policy in all designed environments. In the experiments, we found that some hyperparameters significantly influenced the training results. For example, an overly small “Max step per episode” leaves the algorithm insufficient time to find a complete optimal trajectory, so larger scenarios require more steps. A larger “Timesteps per batch” improves data utilization efficiency but also increases data collection time. Because our policy optimization algorithm is on-policy, data collected before a policy update become invalid after it; we therefore chose a value that balances efficiency against data collection time. As in gradient descent algorithms, the learning rate determines the step size of parameter updates: an excessively small learning rate can lead to slow convergence or becoming stuck in local optima, whereas an excessively large one can cause oscillations or even divergence. Finally, the “Covariance matrix element value” controls the shape of the action distribution. A larger value increases the policy's exploration of the environment, making it less likely to become stuck in local optima, but it may also slow convergence.
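The role of the “Covariance matrix element value” can be illustrated with a diagonal Gaussian policy head whose covariance is that value times the identity, an assumption consistent with the description above but not confirmed by it. The sketch provides the log probability needed for the PPO ratio, sampling for training, a deterministic mode that returns the mean (as in the test setup of Section 4.3), and the entropy, which grows with the covariance value. All names are hypothetical.

```python
import math
import random


def gaussian_log_prob(action, mean, cov_value):
    """Log-density of N(mean, cov_value * I) evaluated at `action`."""
    k = len(mean)
    sq = sum((a - m) ** 2 for a, m in zip(action, mean))
    return -0.5 * (sq / cov_value + k * math.log(2.0 * math.pi * cov_value))


def sample_action(mean, cov_value, rng, deterministic=False):
    """Sample a velocity command for training, or return the mean for testing."""
    if deterministic:
        return list(mean)
    std = math.sqrt(cov_value)
    return [rng.gauss(m, std) for m in mean]


def entropy(k, cov_value):
    """Differential entropy of N(mean, cov_value * I) in k dimensions."""
    return 0.5 * k * (1.0 + math.log(2.0 * math.pi * cov_value))
```

A larger `cov_value` raises the entropy and spreads the sampled commands, which is the exploration effect described in the paragraph above.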

The Gazebo simulator, ROS, and the reinforcement learning algorithm were deployed together on a desktop computer running Ubuntu 18.04. The main hardware configuration included an Intel Core i9-11900K CPU, an NVIDIA GeForce RTX 3090 GPU, 16 GB of RAM, and a 512 GB hard drive.

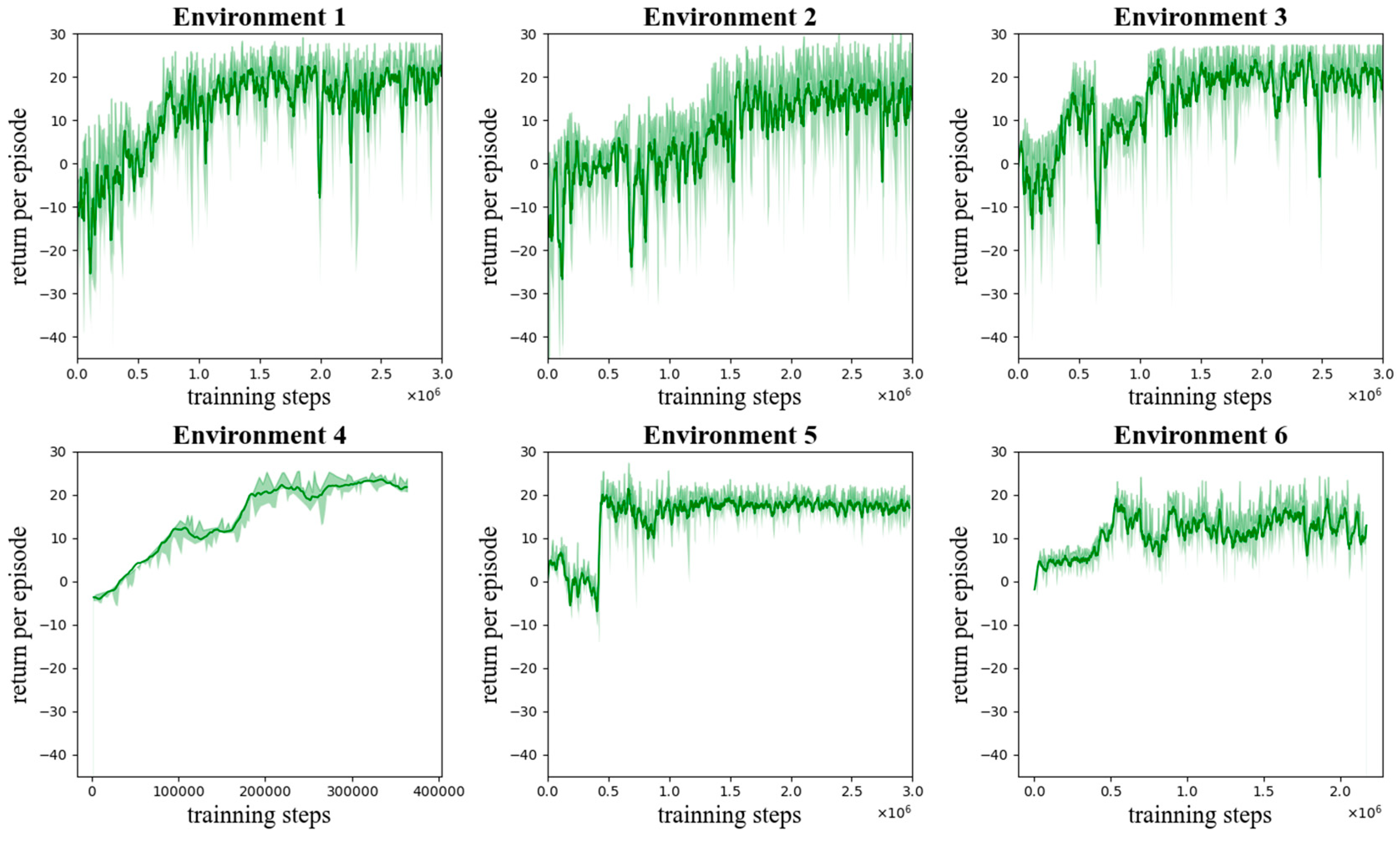

Figure 10 shows the total return of each episode during training and its average over 10 episodes. The y-axis represents the accumulated reward obtained by the policy within an episode, where a higher reward indicates better performance. Notably, different environments have different upper bounds. As shown in the figure, the proposed algorithm gradually improves the accumulated reward in all environments and finally converges.
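The 10-episode average in Figure 10 can be computed as a running mean of the episode returns. The sketch assumes a trailing window (averaging over fewer episodes at the start of training), which is an assumption about how the curve was produced.

```python
def moving_average(returns, window=10):
    """Trailing mean over the last `window` episode returns."""
    out, running = [], 0.0
    for i, r in enumerate(returns):
        running += r
        if i >= window:
            running -= returns[i - window]   # drop the episode leaving the window
        out.append(running / min(i + 1, window))
    return out
```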

It is worth noting that the training process exhibits different characteristics in environments with different obstacle distribution styles. In environments with more scattered obstacle distributions, such as env 1−3, the training process showed higher randomness during convergence, and sudden drops in return may occur over several cycles even as the average return increases. This is because, while the algorithm searches for the optimal policy, exploring a certain direction in a cluttered environment can lead the drone into randomly placed obstacles. The returns obtained during these explorations are relatively low owing to the collision penalty in the reward function. After several cycles of optimization, the algorithm finds a good policy-gradient direction again, and the average return resumes its upward trend.

In env3, where the obstacles are distributed in an obvious (lined) pattern, the exploration–optimization process shows a continuous upward trend. The behavior of the algorithm in env5 is notable: in the initial exploration process, the return hovers around a low value and even decreases slightly, but after the policy discovers the gap between the two designed obstacle clusters, the average return rises to near-optimal levels within a few episodes. Similarly, in env6, after exploring the obstacle cluster, the policy is quickly optimized to a good level.

In summary, the proposed algorithm adapts well to different environments: it finds an effective policy-gradient direction and improves the return in environments with cluttered, uniform, and concentrated obstacle distributions.

4.3. Flight Test in Designed Environments

During the training process of the control policy, because exploration and exploitation must be balanced, actions are sampled from the policy network, and this does not guarantee that the optimal action is always sampled. Therefore, during testing, we designed a simplified structure, as shown in

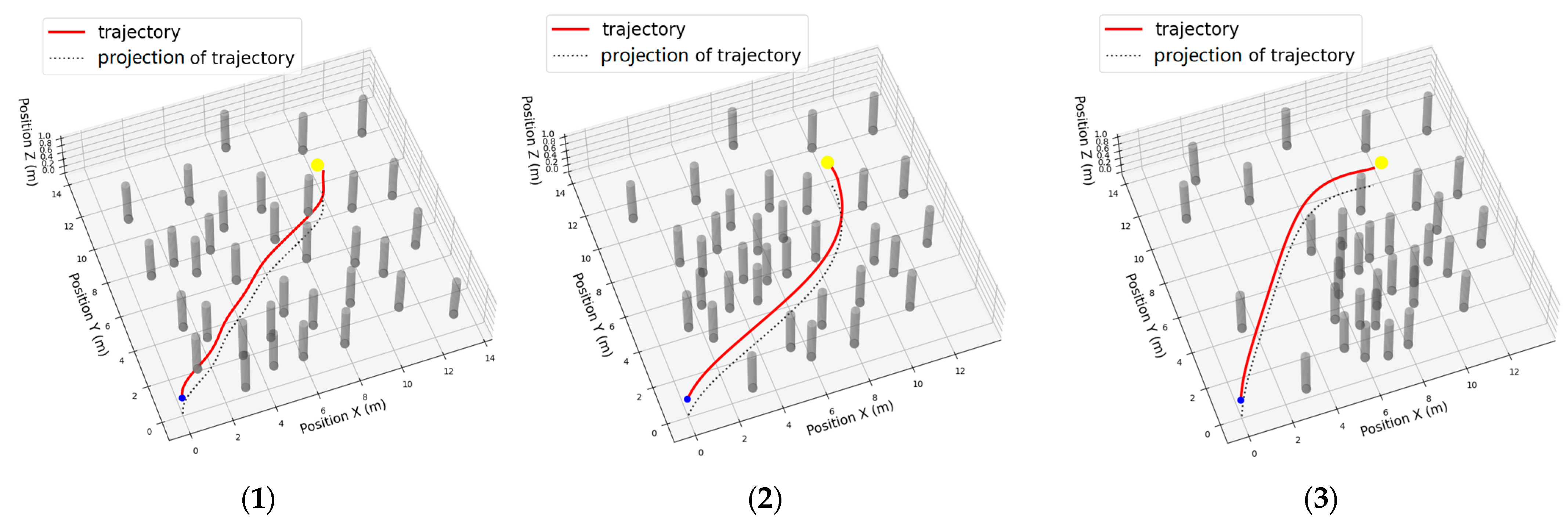

Figure 11, by removing components such as the critic network, loss function, optimizer, and data buffer and retaining only the policy network. Moreover, the control command received by the drone is selected directly based on the output of the policy network, which represents the optimal action. We tested the well-trained policy in all the designed environments in the Gazebo simulator.

Figure 12 shows the flight trajectories and their projections onto the X–Y plane.

Our algorithm performs well in these scenes and chooses different paths to avoid collisions with obstacles and reach the destination as quickly as possible. In environments with randomly distributed obstacles (env 0), neatly arranged obstacles (env 3), and two obstacle clusters (env 4), it selects paths close to a straight line, avoiding the obstacles while minimizing the path length. In an environment with a manually designed optimal path, it chooses the shortest smooth curve to minimize energy and time consumption, which indicates that the energy term in our reward function works as intended. In env 5, with one obstacle cluster, it first moves toward the destination and then finds the shortest path around the obstacles.

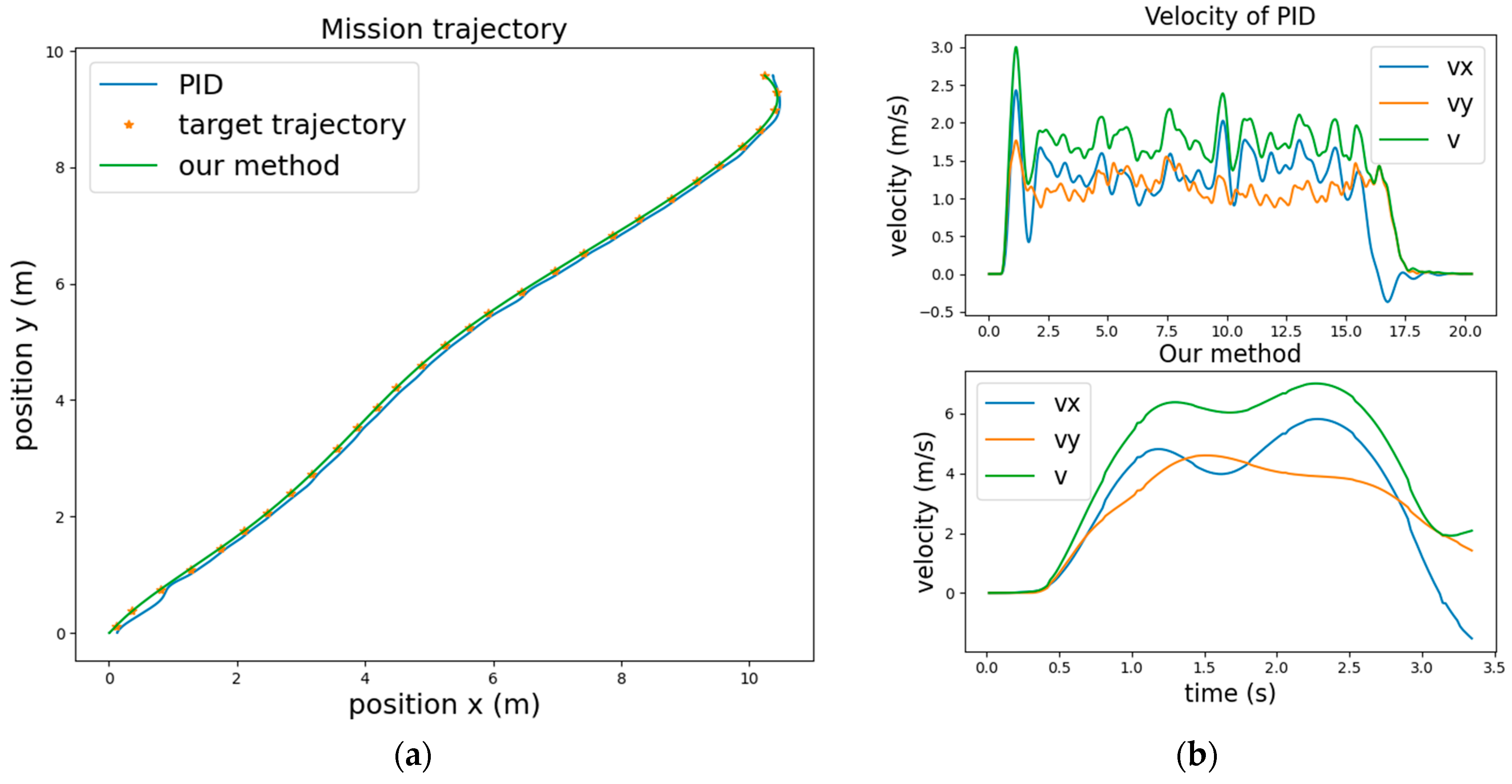

We compared the performance of the proposed method with that of a PID controller, as shown in Figure 13. The proposed method reaches a maximum speed of 7 m/s, whereas the PID controller reaches only 3 m/s and maintains it for a short duration. The proposed method completes the task in 3.4 s, whereas the PID algorithm requires 18 s. This demonstrates that the proposed method has a significant advantage in mission efficiency in complex environments.
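Expressed as ratios, the reported simulation results correspond to roughly a fivefold reduction in task completion time and more than a doubling of peak speed:

$$\frac{t_{\mathrm{PID}}}{t_{\mathrm{RL}}} = \frac{18\ \mathrm{s}}{3.4\ \mathrm{s}} \approx 5.3, \qquad \frac{v_{\max,\mathrm{RL}}}{v_{\max,\mathrm{PID}}} = \frac{7\ \mathrm{m/s}}{3\ \mathrm{m/s}} \approx 2.3$$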

4.4. Real-World Test

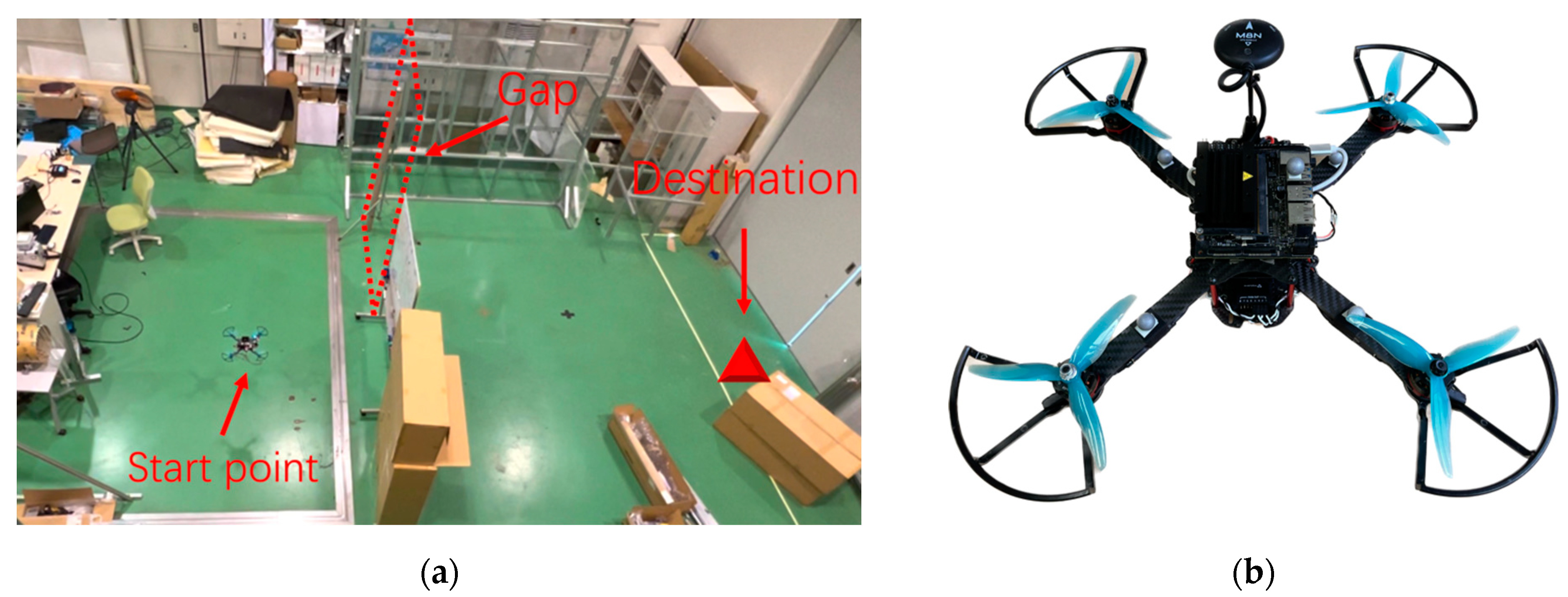

To validate the performance of the proposed algorithm in the real world, we designed a task of navigating through the gap between two walls, as shown in Figure 14a. A wall with a gap lies between the starting point and the destination; the drone must pass through this gap, reach its destination, and hover. The testing methodology is essentially the same as that shown in Figure 11, the only difference being that the simulated environment and drone in Gazebo are replaced with a real drone (Figure 14b). The low-level controller runs on Pixhawk hardware, whereas our high-level control policy runs on a Jetson Nano. The data nodes of the motion capture system run in ROS and communicate through network interfaces.

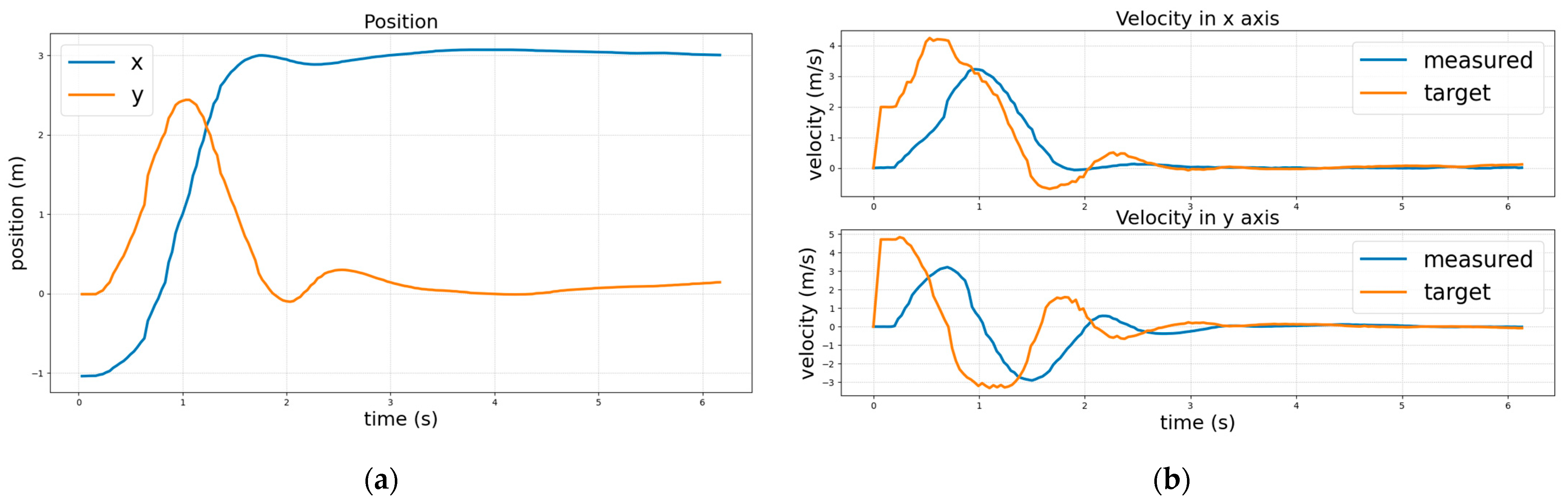

Figure 15a,b show the position and velocity, respectively. For safety, we implemented a backup system based on traditional methods, which can be switched back and forth with the RL method. The control commands generated by the policy begin to take effect 0.2 s after the start of the mission, as shown in Figure 15b. Because of the limited site area and safety considerations, the quadcopter must hover after reaching the destination. Therefore, the maximum total speed is only about 4.39 m/s, whereas in simulation it can reach 10 m/s. This experiment demonstrates that our method can still control the drone to navigate through complex environments in the real world, despite the changes in drone hardware and low-level controllers.

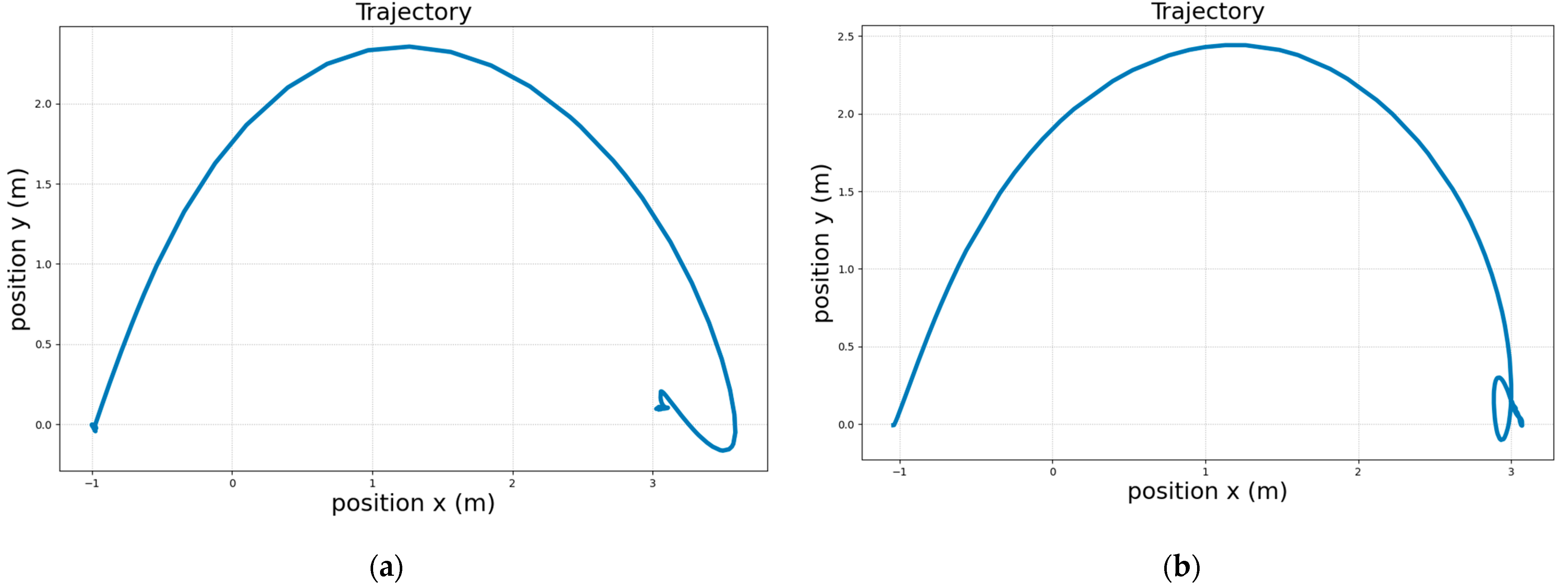

Furthermore, we observed an overshoot that was not present in the simulation tests, as shown in Figure 16a. We attribute it to two causes. First, the low-level controller of the real-world drone performed worse than that in the simulator. Second, the policy must wait for all node data to be updated before it can start the computation; ROS operates only in a soft real-time manner, and asynchrony between data streams can delay the control commands. To mitigate the overshoot, we adjusted the speed controller parameters and increased the target-tracking speed, which partially improved the situation, as shown in Figure 16b. The test video and code are available at https://www.youtube.com/watch?v=hRQc1lmJNkY (accessed on 8 July 2023) and https://github.com/pilotliuhx/Drone-navigation-RL.git (accessed on 8 July 2023).
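The waiting behavior described above can be made explicit with a small gate that allows a policy step only when every input stream has delivered a message newer than the previous step and no message is older than a freshness limit. This is a hypothetical illustration of where the delay comes from, written without the ROS API; the class name, topic names, and the 50 ms limit are assumptions.

```python
class SyncGate:
    """Allow a policy step only when every input has fresh, unused data."""

    def __init__(self, topics, max_age=0.05):
        self.latest = {name: None for name in topics}   # topic -> (stamp, msg)
        self.last_step = float("-inf")
        self.max_age = max_age                           # seconds

    def update(self, topic, stamp, msg):
        self.latest[topic] = (stamp, msg)

    def ready(self, now):
        for entry in self.latest.values():
            if entry is None:
                return False                             # stream not yet received
            stamp, _ = entry
            if stamp <= self.last_step or now - stamp > self.max_age:
                return False                             # reused or stale data
        return True

    def consume(self, now):
        """Mark a policy step at time `now` and return the synchronized inputs."""
        self.last_step = now
        return {name: msg for name, (_, msg) in self.latest.items()}
```

Every call to `ready` that returns `False` postpones the next command, so the slowest input stream effectively sets the control rate.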

5. Conclusions

This paper proposes a novel end-to-end drone control method based on deep reinforcement learning. It takes the drone's state, environmental information, and task objectives directly as inputs and outputs the desired velocity to a low-level controller. Compared with the traditional model-based pipeline and existing teacher–student learning frameworks, our approach faced two main difficulties. The first was how to guide policy optimization; we designed a hybrid reward function that evaluates the policy by considering task progress, energy cost, collisions with obstacles, and arrival at the destination. The second was how to preserve the effectiveness achieved in simulation in the real world; we used the Gazebo simulator to provide realistic physics and a model-based low-level angular rate, attitude angle, and velocity controller to provide consistent performance in velocity command tracking.

To evaluate the performance of the proposed method, obstacle environments with diverse characteristics were designed in a simulator, and the control policy was trained from scratch. The trained control policy was then extracted and tested in both the Gazebo simulator and a real-world environment. The results show that the proposed method successfully completed flying tasks in complex environments in both simulated and real-world experiments.

The proposed method was shown to perform well in complex environments with static obstacles. However, certain challenges remain. First, training efficiency is low: with our current desktop configuration, a single training session takes approximately 7 h. In future work, we aim to improve training efficiency by using parallel training. Second, applying the trained policy directly to real-world drones resulted in an overshoot. This discrepancy between simulation and reality suggests that further adjustments or enhancements may be required to improve control performance in real-world scenarios.

In addition, there is a potential issue when dealing with moving obstacles. The navigation algorithm must consider the presence of dynamic obstacles and adjust its trajectories accordingly. Further investigation is required to ensure the safe and efficient navigation of drones in the presence of moving obstacles. These challenges provide valuable insights for future research and development, and addressing them will further enhance the performance and applicability of the proposed method.