QEHLR: A Q-Learning Empowered Highly Dynamic and Latency-Aware Routing Algorithm for Flying Ad-Hoc Networks

Abstract

1. Introduction

1.1. Contribution of This Study

- (1)

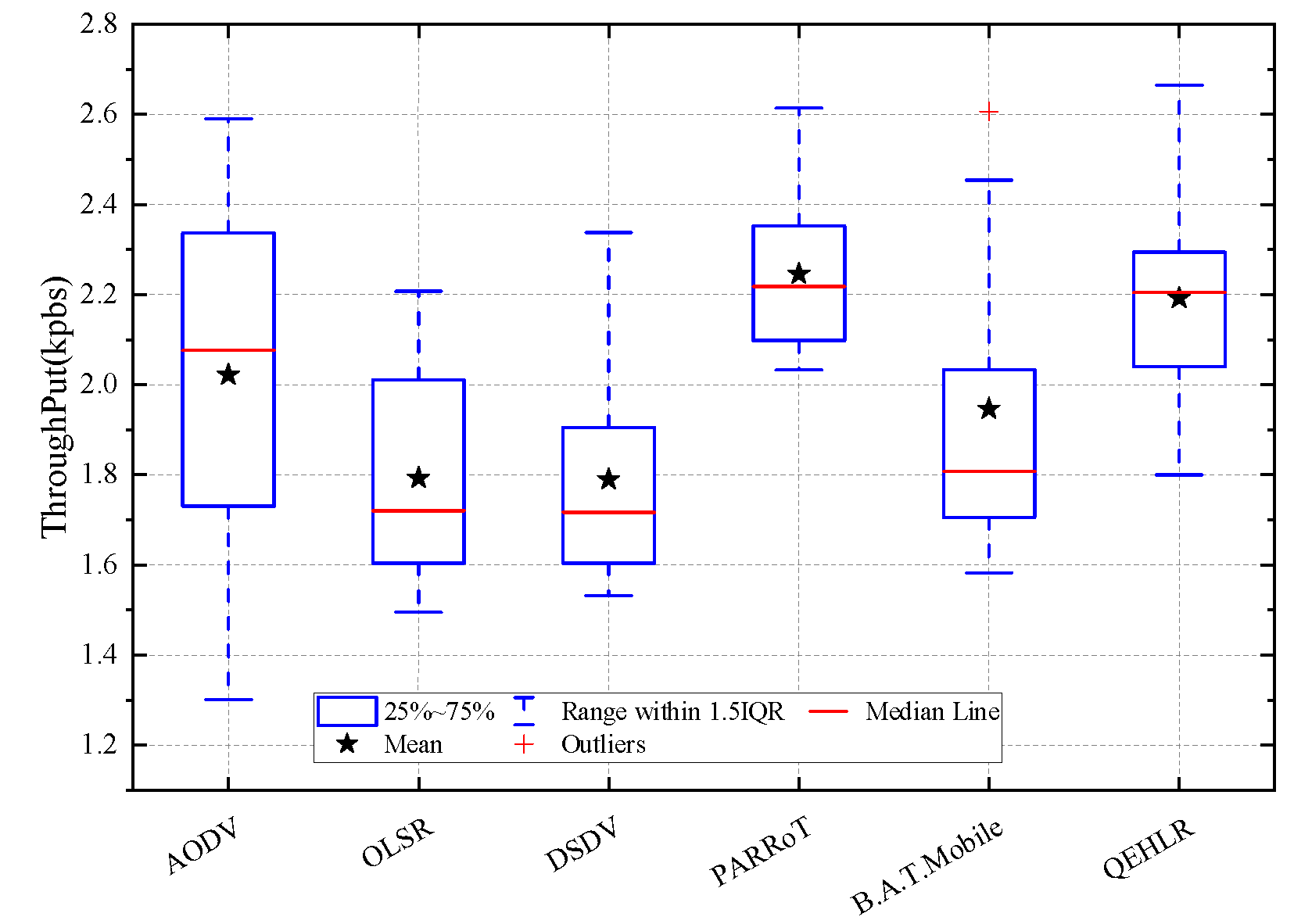

- A Q-learning empowered highly dynamic and latency-aware routing algorithm for ad-hoc networks (QEHLR) is proposed, which combines Q-learning with end-to-end delay improvement to address the issue of ineffective packet routing in highly dynamic FANETs using traditional routing algorithms. The method of Q-learning is used to learn the link status in the network, and effective routing is selected through Q-value to avoid connection loss. The remaining time of the link, or the path lifespan, is included in the routing criteria to maintain the routing table. QEHLR can delete estimated failed links according to the network status, reducing packet loss caused by failed routing selection. Routing experiments designed in this paper show a significant improvement in the packet transmission rate of the improved algorithm.

- (2)

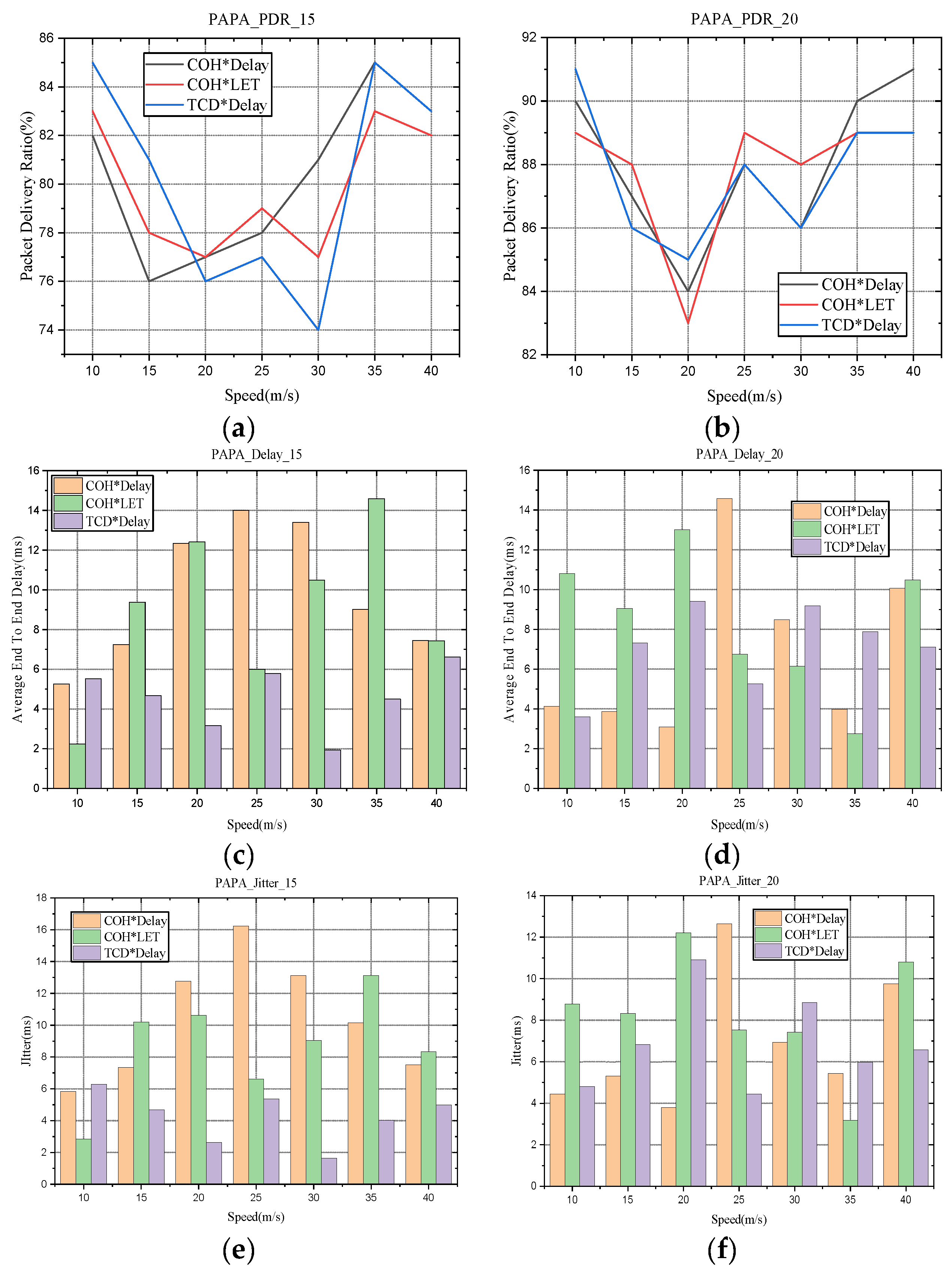

- A routing method based on topology change degree improvement is proposed to address the problem, where routing protocols cannot adapt to various mobile model task scenarios in FANET with high dynamic topology due to the diversification of task scenarios and the variability of tasks. The calculation factor for network topology change degree is introduced on the basis of the QEHLR protocol. The experimental results show that the improved routing algorithm can achieve a higher packet transmission rate and a lower delay.
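The Q-value-based route selection and failed-link deletion described in contribution (1) can be sketched as follows. This is a minimal illustration under stated assumptions, not the QEHLR implementation: it uses the textbook Q-learning update, and the class `QRouter`, the constants `ALPHA` and `GAMMA`, the reward values, and the per-neighbor lifetime estimate are hypothetical names introduced here for illustration.

```python
from dataclasses import dataclass, field

ALPHA = 0.5  # learning rate (assumed value, not taken from the paper)
GAMMA = 0.8  # discount factor (assumed value, not taken from the paper)


@dataclass
class QRouter:
    """Hypothetical per-node routing state."""
    # q[(destination, neighbor)] -> learned value of forwarding via neighbor
    q: dict = field(default_factory=dict)
    # lifetime[neighbor] -> estimated remaining link time in seconds
    lifetime: dict = field(default_factory=dict)

    def update(self, dest, neighbor, reward, neighbor_best_q):
        """Textbook Q-learning update for one (destination, neighbor) entry."""
        old = self.q.get((dest, neighbor), 0.0)
        target = reward + GAMMA * neighbor_best_q
        self.q[(dest, neighbor)] = (1 - ALPHA) * old + ALPHA * target

    def prune(self):
        """Delete neighbors whose estimated remaining link time has run out."""
        dead = {n for n, t in self.lifetime.items() if t <= 0.0}
        for n in dead:
            del self.lifetime[n]
        self.q = {k: v for k, v in self.q.items() if k[1] not in dead}

    def next_hop(self, dest):
        """Pick the surviving neighbor with the highest Q-value, if any."""
        self.prune()
        candidates = {n: v for (d, n), v in self.q.items() if d == dest}
        return max(candidates, key=candidates.get) if candidates else None
```

In this sketch a neighbor with a high Q-value is still skipped once its link is estimated to have expired, which mirrors the idea of removing estimated failed links before a routing decision is made.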

1.2. Organization of This Article

2. Related Works

3. Modeling Analysis

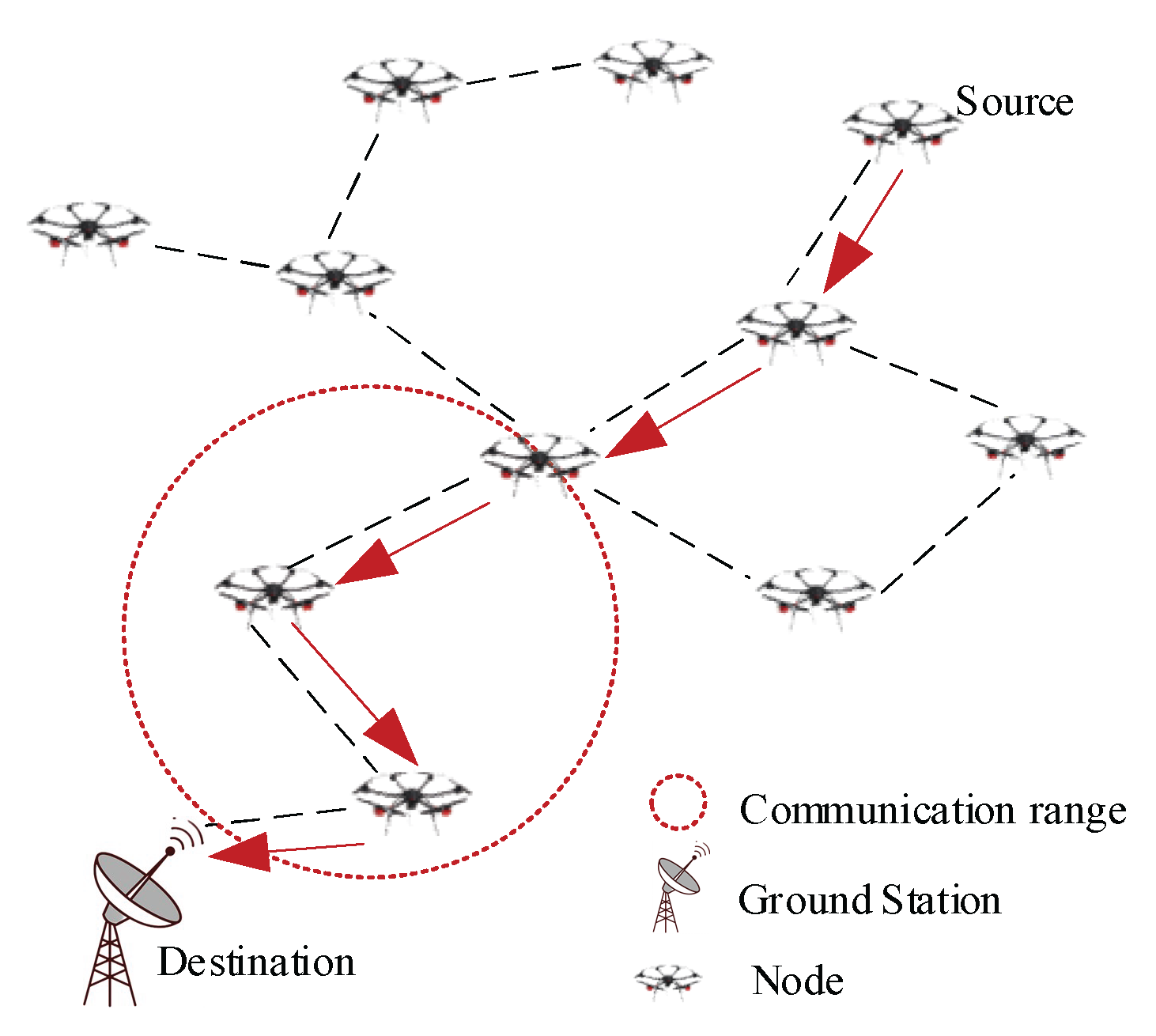

3.1. FANETs Communication Structure

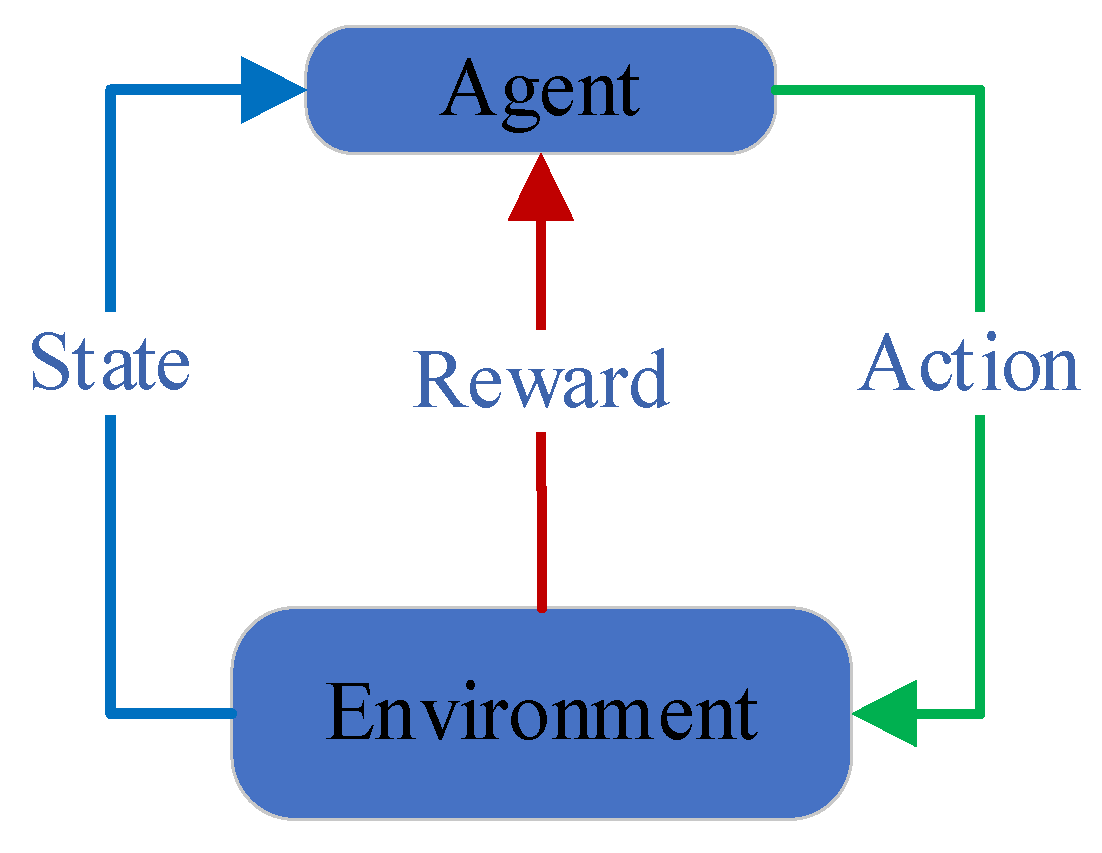

3.2. Reinforcement Learning

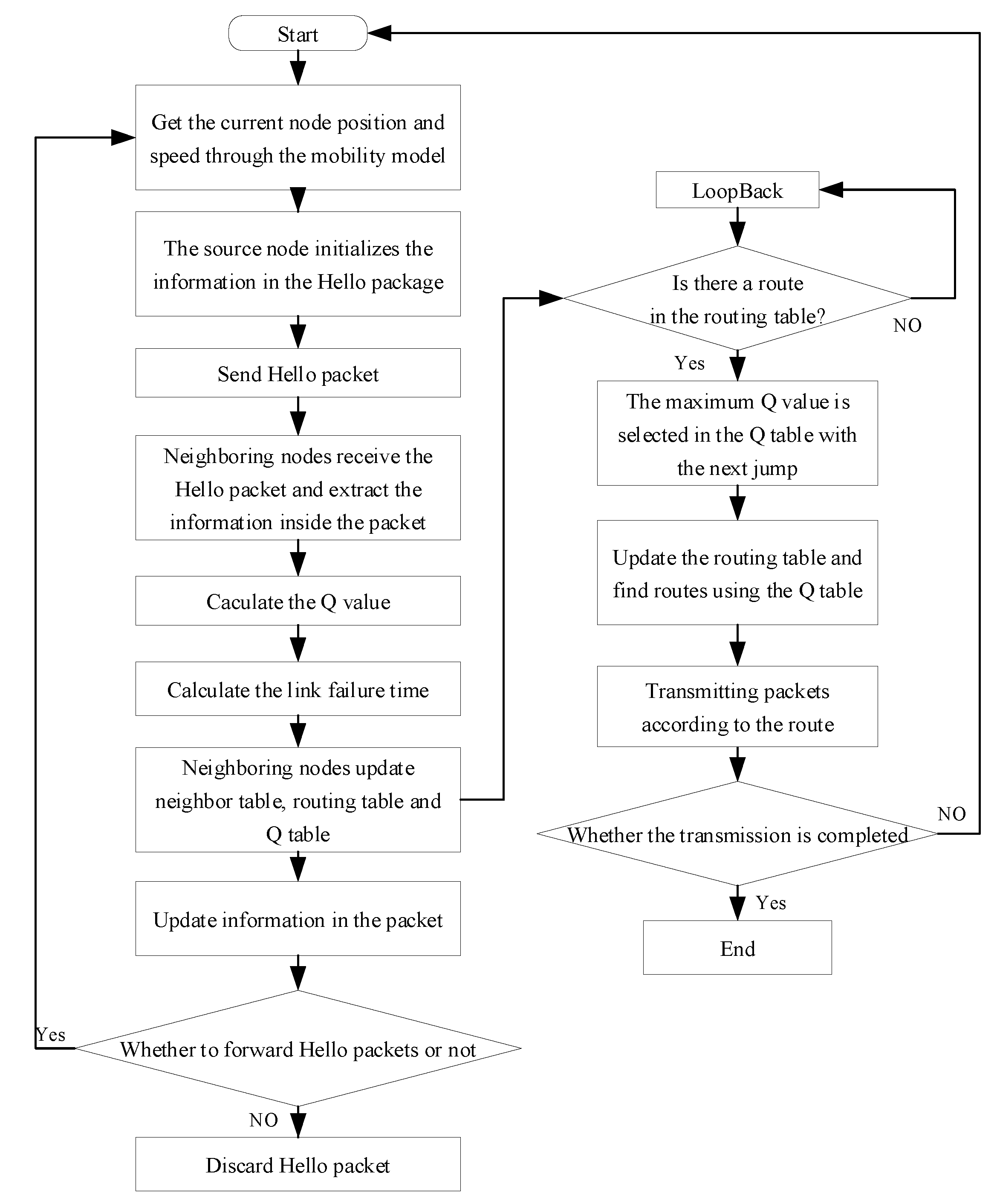

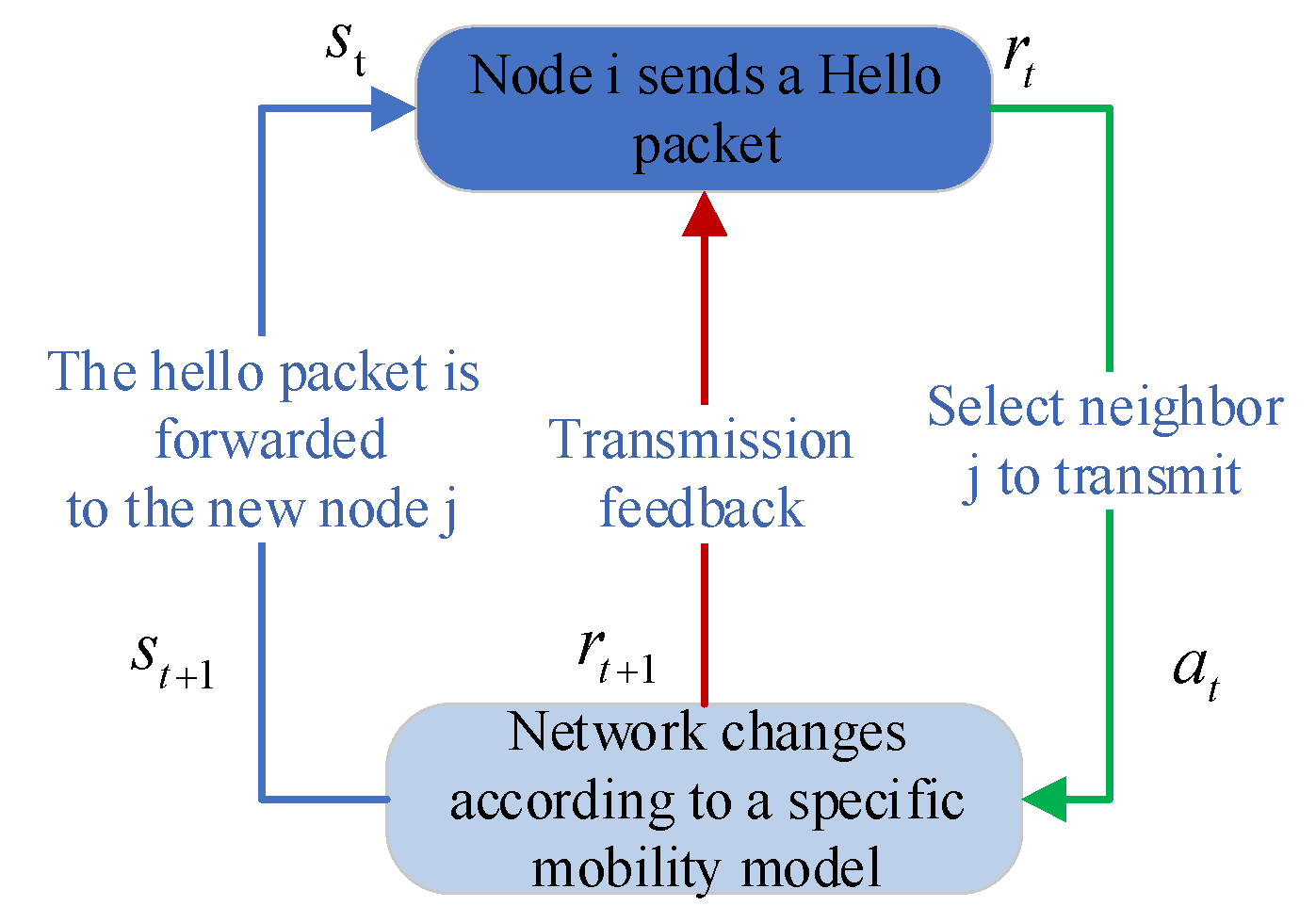

4. QEHLR Routing Protocol

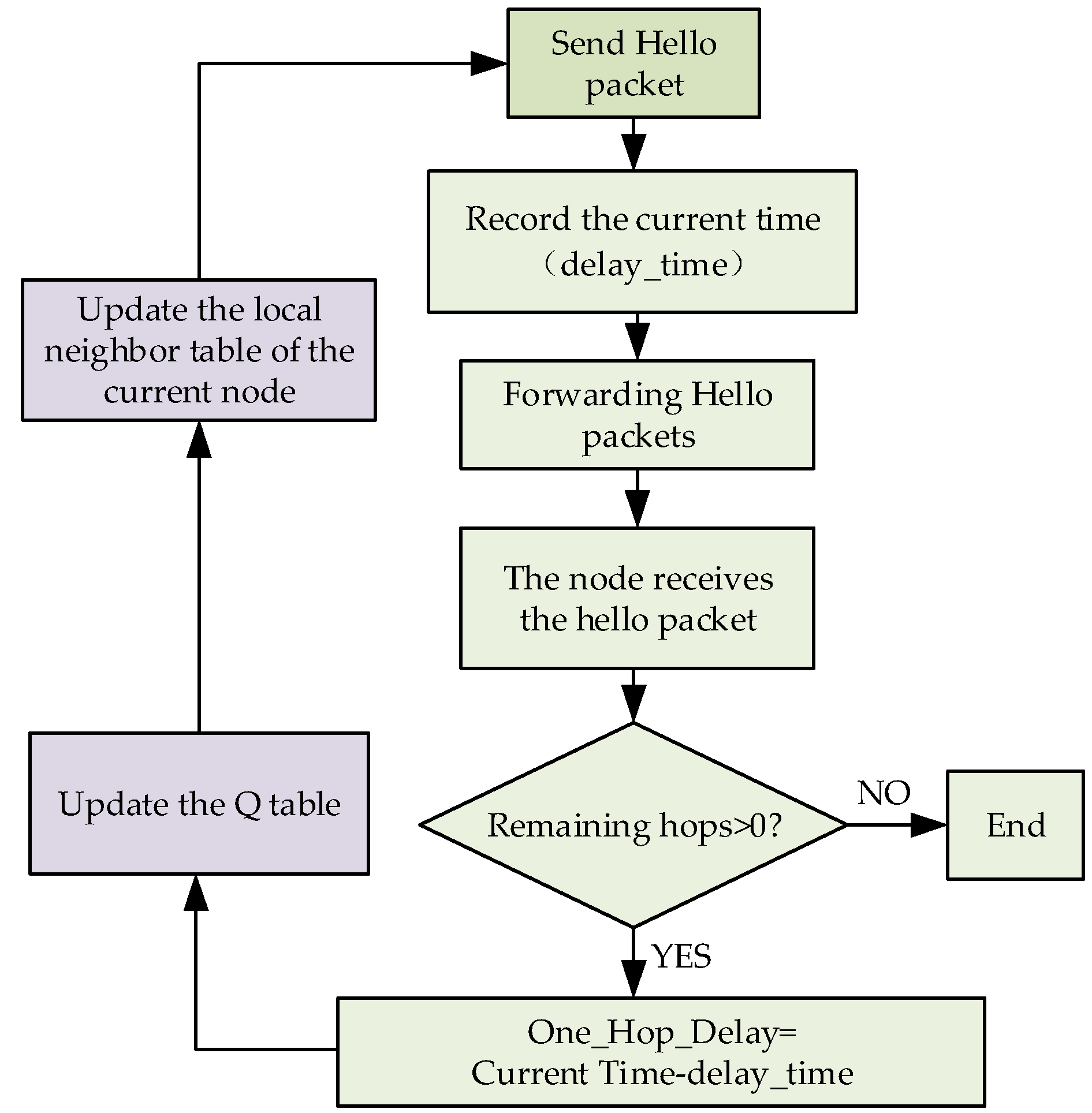

4.1. Routing Establishment

4.2. Routing Maintenance

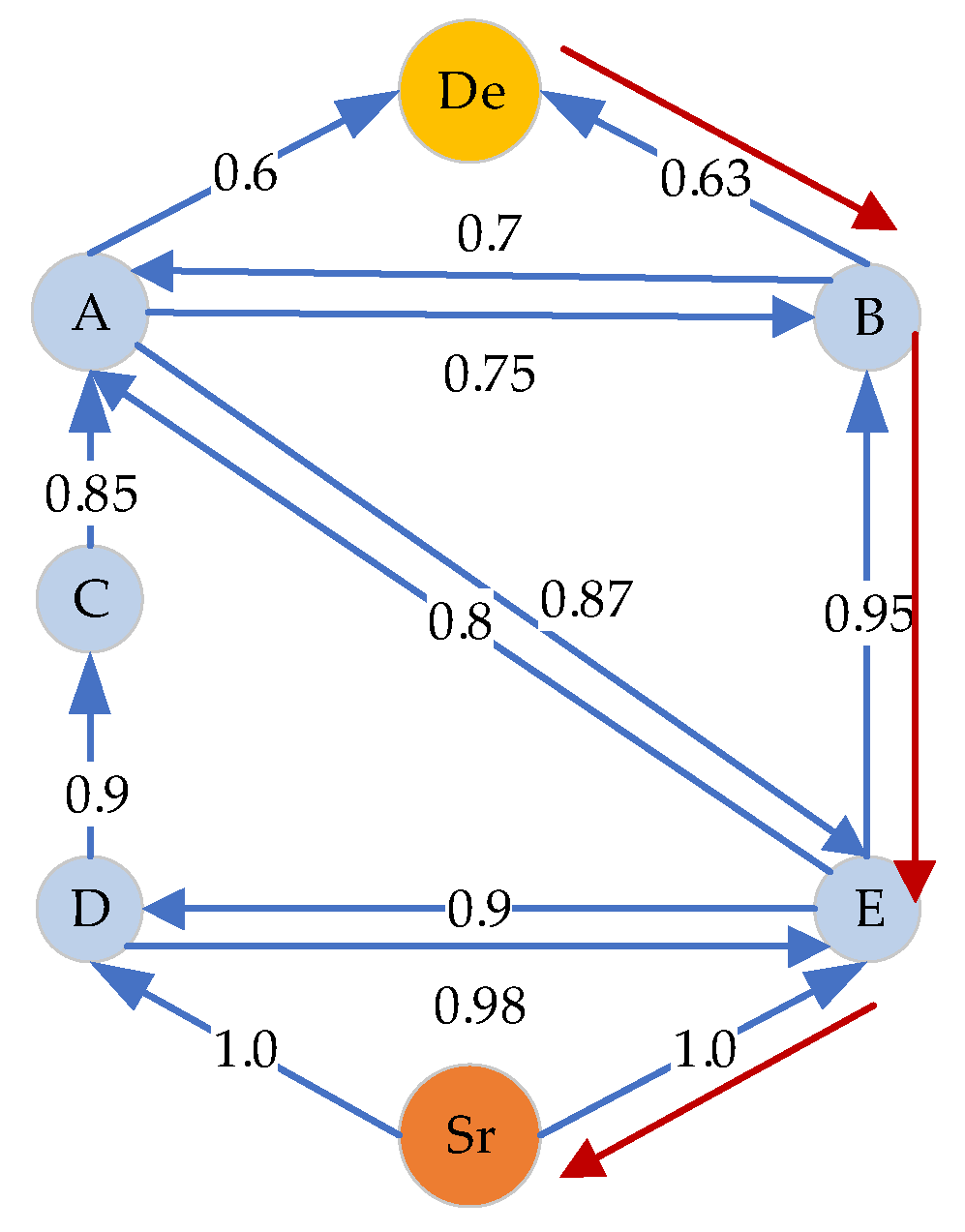

4.3. Routing Decision

5. Simulation Results

5.1. Evaluation Indicators

5.2. Simulation Environment

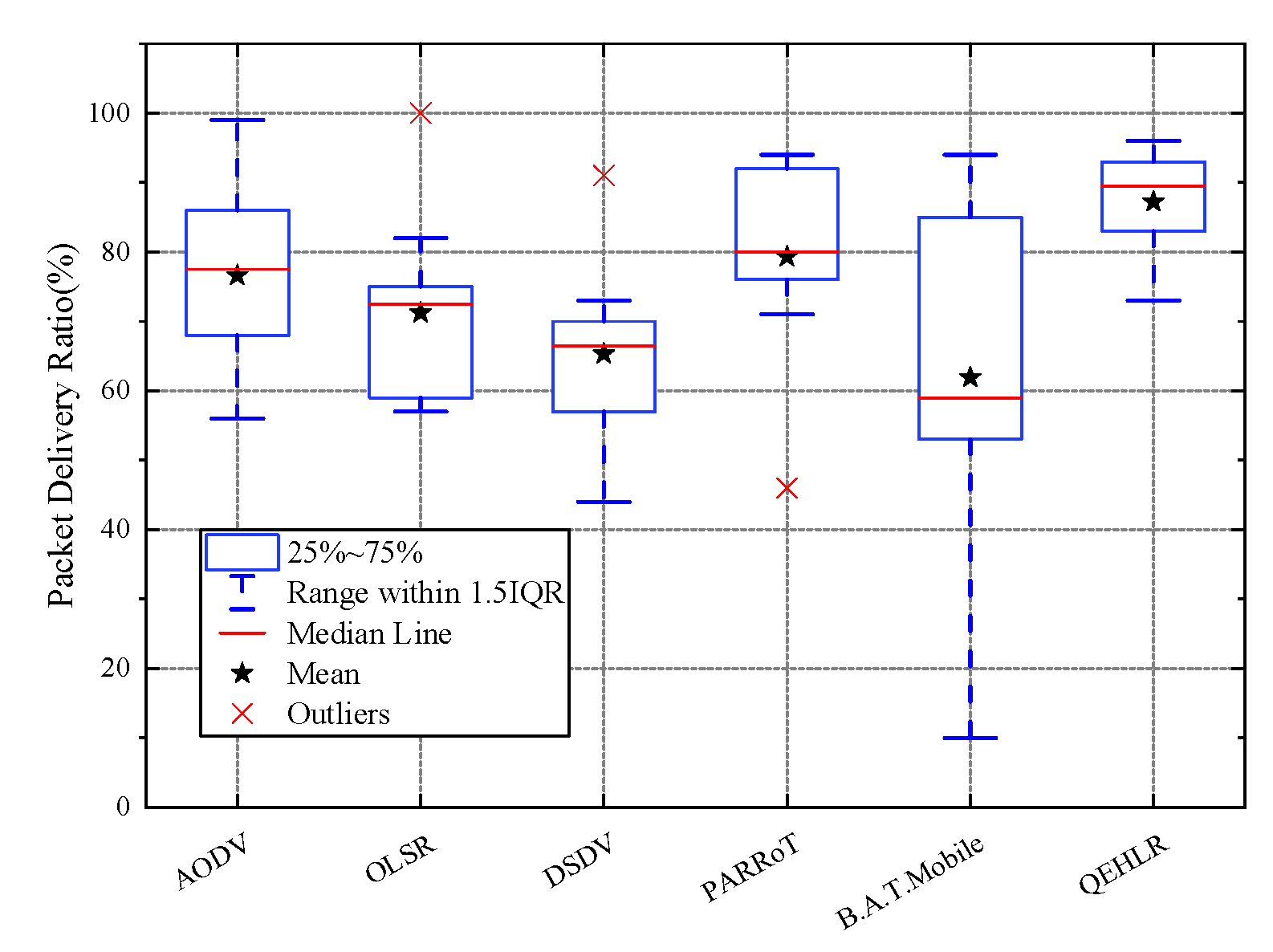

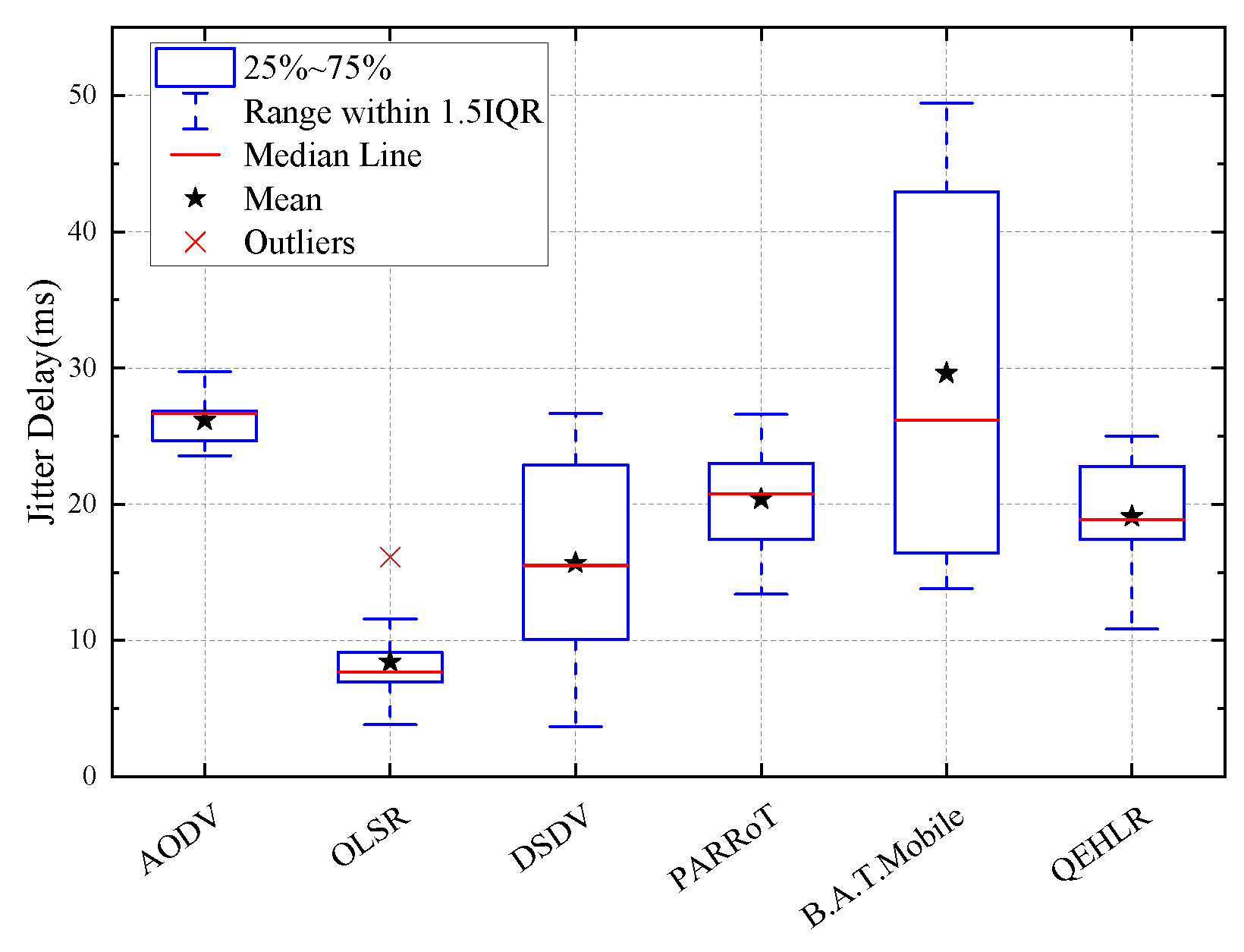

5.3. Simulation Result and Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bekmezci, I.; Sahingoz, O.K.; Temel, S. Flying Ad-Hoc Networks (FANETs): A survey. Ad Hoc Netw. 2013, 11, 1254–1270.

- Hong, J.; Zhang, D. TARCS: A Topology Change Aware-Based Routing Protocol Choosing Scheme of FANETs. Electronics 2019, 8, 274.

- Ullah, S.; Mohammadani, K.H.; Khan, M.A.; Ren, Z.; Alkanhel, R.; Muthanna, A.; Tariq, U. Position-Monitoring-Based Hybrid Routing Protocol for 3D UAV-Based Networks. Drones 2022, 6, 327.

- Shumeye Lakew, D.; Sa’ad, U.; Dao, N.N.; Na, W.; Cho, S. Routing in Flying Ad Hoc Networks: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2020, 22, 1071–1120.

- Nawaz, H.; Ali, H.M.; Laghari, A.A. UAV Communication Networks Issues: A Review. Arch. Comput. Methods Eng. 2020, 28, 1349–1369.

- Sang, Q.; Wu, H.; Xing, L.; Xie, P. Review and Comparison of Emerging Routing Protocols in Flying Ad Hoc Networks. Symmetry 2020, 12, 971.

- Alzahrani, B.; Oubbati, O.S.; Barnawi, A.; Atiquzzaman, M.; Alghazzawi, D. UAV assistance paradigm: State-of-the-art in applications and challenges. J. Netw. Comput. Appl. 2020, 166, 102706.

- Nazib, R.A.; Moh, S. Routing Protocols for Unmanned Aerial Vehicle-Aided Vehicular Ad Hoc Networks: A Survey. IEEE Access 2020, 8, 77535–77560.

- Johnson, D.B.; Maltz, D.A. Dynamic Source Routing in Ad Hoc Wireless Networks. Mob. Comput. 1996, 353, 153–181.

- Murthy, S.; Garcia-Luna-Aceves, J.J. An efficient routing protocol for wireless networks. Mob. Netw. Appl. 1996, 1, 183–197.

- Perkins, C.E.; Bhagwat, P. Highly dynamic destination-sequenced distance-vector routing (DSDV) for mobile computers. Comput. Commun. Rev. 1994, 24, 234–244.

- Clausen, T.; Jacquet, P. Optimized link state routing protocol (OLSR). RFC 2003, 3626, 1–75.

- Alshabtat, A.I.; Dong, L. Low latency routing algorithm for unmanned aerial vehicles ad-hoc networks. Int. J. Electr. Eng. 2011, 5, 989–995.

- Haas, Z. The Zone Routing Protocol (ZRP) for Ad Hoc Networks. Available online: https://www.ietf.org/proceedings/55/I-D/draft-ietf-manet-zone-zrp-04.txt (accessed on 10 April 2023).

- Park, V.; Corson, S. Temporally-Ordered Routing Algorithm (TORA). Available online: http://www.ietf.org/proceedings/52/I-D/draftietf-manet-tora-spec-04.txt (accessed on 10 April 2023).

- Rovira-Sugranes, A.; Razi, A.; Afghah, F.; Chakareski, J. A review of AI-enabled routing protocols for UAV networks: Trends, challenges, and future outlook. Ad Hoc Netw. 2022, 130, 102790.

- Liu, J.; Wang, Q.; He, C.; Jaffrès-Runser, K.; Xu, Y.; Li, Z.; Xu, Y. QMR: Q-learning based Multi-objective optimization Routing protocol for Flying Ad Hoc Networks. Comput. Commun. 2020, 150, 304–316.

- Li, R.; Li, F.; Li, X.; Wang, Y. QGrid: Q-learning based routing protocol for vehicular ad hoc networks. In Proceedings of the 2014 IEEE 33rd International Performance Computing and Communications Conference (IPCCC), Austin, TX, USA, 5–7 December 2014; pp. 1–8.

- Serhani, A.; Naja, N.; Jamali, A. QLAR: A Q-learning based adaptive routing for MANETs. In Proceedings of the 2016 IEEE/ACS 13th International Conference of Computer Systems and Applications (AICCSA), Agadir, Morocco, 29 November–2 December 2016; pp. 1–7.

- Jung, W.S.; Yim, J.; Ko, Y.B. QGeo: Q-Learning-Based Geographic Ad Hoc Routing Protocol for Unmanned Robotic Networks. IEEE Commun. Lett. 2017, 21, 2258–2261.

- da Costa, L.A.L.F.; Kunst, R.; Pignaton de Freitas, E. Q-FANET: Improved Q-learning based routing protocol for FANETs. Comput. Netw. 2021, 198, 108379.

- Yang, Q.; Jang, S.J.; Yoo, S.J. Q-Learning-Based Fuzzy Logic for Multi-objective Routing Algorithm in Flying Ad Hoc Networks. Wirel. Pers. Commun. 2020, 113, 115–138.

- He, C.; Liu, S.; Han, S. A Fuzzy Logic Reinforcement Learning-Based Routing Algorithm For Flying Ad Hoc Networks. In Proceedings of the 2020 International Conference on Computing Networking and Communications (ICNC), Big Island, HI, USA, 17–20 February 2020; pp. 987–991.

- Sliwa, B.; Schuler, C.; Patchou, M.; Wietfeld, C. PARRoT: Predictive Ad-hoc Routing Fueled by Reinforcement Learning and Trajectory Knowledge. In Proceedings of the 2021 IEEE 93rd Vehicular Technology Conference (VTC2021-Spring), Helsinki, Finland, 25–28 April 2021; pp. 1–7.

- Sliwa, B.; Behnke, D.; Ide, C.; Wietfeld, C. B.A.T.Mobile: Leveraging Mobility Control Knowledge for Efficient Routing in Mobile Robotic Networks. In Proceedings of the 2016 IEEE Globecom Workshops (GC Wkshps), Washington, DC, USA, 4–8 December 2016; pp. 1–6.

- Neumann, A.; Aichele, C.; Lindner, M. Better Approach to Mobile Ad Hoc Networking (B.A.T.M.A.N.). Available online: https://datatracker.ietf.org/doc/pdf/draft-wunderlich-openmesh-manet-routing-00.pdf (accessed on 10 April 2023).

- Rovira-Sugranes, A.; Afghah, F.; Qu, J.; Razi, A. Fully-Echoed Q-Routing With Simulated Annealing Inference for Flying Adhoc Networks. IEEE Trans. Netw. Sci. Eng. 2021, 8, 2223–2234.

- Liu, J.; Wang, Q.; Xu, Y. AR-GAIL: Adaptive routing protocol for FANETs using generative adversarial imitation learning. Comput. Netw. 2022, 218, 109382.

- Sutton, R.S.; Barto, A.G. Reinforcement learning: An introduction. Mach. Learn. 1992, 8, 225–227.

- Johnson, R.; Jasik, H. Antenna Engineering Handbook, 2nd ed.; McGraw-Hill: New York, NY, USA, 1984; pp. 1–12.

- Hong, J.; Zhang, D. Topology Change Degree: A Mobility Metric Describing Topology Changes in MANETs and Distinguishing Different Mobility Patterns. Ad Hoc Sens. Wirel. Netw. 2019, 44, 153–171.

- Taha, M. An efficient software defined network controller based routing adaptation for enhancing QoE of multimedia streaming service. Multimed. Tools Appl. 2023.

- Camp, T.; Boleng, J.; Davies, V. A survey of mobility models for ad hoc network research. Wirel. Commun. Mob. Comput. 2002, 2, 483–502.

- Broch, J.; Maltz, D.A.; Johnson, D.B.; Hu, Y.C.; Jetcheva, J. A performance comparison of multi-hop wireless ad hoc network routing protocols. In Proceedings of the 4th Annual ACM/IEEE International Conference on Mobile Computing and Networking, Dallas, TX, USA, 25–30 October 1998; pp. 85–97.

- Ye, Z.; Zhou, Q. Performance Evaluation Indicators of Space Dynamic Networks under Broadcast Mechanism. Space Sci. Technol. 2021, 2021, 9826517.

- Meng, Q.; Huang, M.; Xu, Y.; Liu, N.; Xiang, X. Decentralized Distributed Deep Learning with Low-Bandwidth Consumption for Smart Constellations. Space Sci. Technol. 2021, 2021, 9879246.

| Characteristics | MANETs | VANETs | FANETs |
|---|---|---|---|
| Mobility | Low (2D) | Low (2D) | FW-Med (3D), RW-High (3D) |
| Speed | Low (6 km/h) | Med-High (20–100 km/h) | FW-High (100 km/h), RW-Med (50 km/h) |
| Mobility Model | Random | Manhattan | FW-Paparazzi, RW-RWP |
| Topology Variation | Low | Med | FW-Med, RW-High |

| Classification | Mobility Model | UAV Classification | Mission Scenario |
|---|---|---|---|
| Randomization | Random Waypoint | RW | Environmental Sensing / Traffic / City Monitoring |
| Time Dependence | Gauss Markov | RW | Environmental Sensing / Search / Detection and Rescue |
| Path Planning | Paparazzi | RW/FW | Agricultural Management / Transportation / Urban Monitoring |

| Protocol | Contributions | Limitations |
|---|---|---|
| QMR [17] | Dynamic adjustment of the Q-factor improves packet delivery rate and reduces latency | Alternatives for routing decisions are designed based on speed alone, which is not sufficient |
| Q-FANET [21] | QMR-based improvements that provide lower latency and jitter | Does not verify protocol performance at different speeds |
| QLFLMOR [22] | Maintains a lower hop count to reduce energy consumption and extend network survival time | Poor performance with a low number of nodes |
| FLRLR [23] | Reduces average hop count and improves link connectivity | Does not verify the number of hops versus packet delivery rate as the number of nodes increases |
| PARRoT [24] | Enables robust data delivery | Large computational complexity |
| Fully-echoed Q-routing [27] | Introduces a new full-echo Q-routing protocol that avoids connection loss | Does not consider node mobility in the protocol |
| AR-GAIL [28] | A deep reinforcement learning routing protocol that introduces generative adversarial imitation learning to reduce latency and improve packet delivery rate | Routing failure exists at low flight density |

| Packet Architecture (Total Bytes 48) | | | |
|---|---|---|---|
| 1 | 2 | 3 | 4 |
| Originator IP Address | | | |
| Destination IP Address | | | |
| Position Vector3D | | | |
| Predict Vector3D | | | |
| Q value | | | |
| Observed Delay | | | |
| MF (Mobility Factor) | | | |
| Sequence Number | TTL | | |
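One way to read the 48-byte packet layout above is as a fixed binary header. The sketch below packs and unpacks such a header with Python's `struct` module. The field widths are assumptions chosen so that the total comes to 48 bytes (IPv4 addresses, 32-bit floats for both vectors and for the Q value, delay, and MF, and a 16-bit sequence number followed by a 16-bit TTL); the source table does not state them, and the function names are hypothetical.

```python
import socket
import struct

# Assumed layout, network byte order, no padding:
# 4s 4s   originator / destination IPv4 address
# 3f 3f   position and predicted position (x, y, z) as 32-bit floats
# f f f   Q value, observed delay, mobility factor (MF)
# H H     sequence number and TTL, 16 bits each (assumed split)
HEADER = struct.Struct("!4s4s3f3ffffHH")


def pack_header(src_ip, dst_ip, position, predicted, q_value, delay, mf, seq, ttl):
    """Serialize the header fields into 48 bytes."""
    return HEADER.pack(
        socket.inet_aton(src_ip), socket.inet_aton(dst_ip),
        *position, *predicted, q_value, delay, mf, seq, ttl,
    )


def unpack_header(data):
    """Parse 48 bytes back into a dictionary of header fields."""
    f = HEADER.unpack(data)
    return {
        "originator": socket.inet_ntoa(f[0]),
        "destination": socket.inet_ntoa(f[1]),
        "position": f[2:5],
        "predicted": f[5:8],
        "q_value": f[8],
        "observed_delay": f[9],
        "mf": f[10],
        "sequence": f[11],
        "ttl": f[12],
    }
```

With these assumed widths, `HEADER.size` evaluates to 48, matching the total stated in the table.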

| Gateway Node | | | | | | | |
|---|---|---|---|---|---|---|---|
| Destination Node | Sr | A | B | C | D | E | |
| Sr | NULL | | | | | | |
| A | NULL | | | | | | |
| B | NULL | | | | | | |
| C | NULL | | | | | | |
| D | NULL | | | | | | |
| E | NULL | | | | | | |

| Parameters | Setting |
|---|---|
| Simulator | NS-3.33 |
| Initialization time | 5 s |
| MAC | 802.11g / 2.4 GHz |
| Delay model | Constant speed propagation delay model |
| Transmitting power | 20 dBm |
| Receiver sensitivity | −85 dBm |
| Transmission gain | 1 dB |
| Reception gain | 1 dB |
| Energy level | 1 |
| Channel loss model | Friis |
| Mobility model | Random waypoint |
| Simulation area | 500 m × 500 m × 500 m |
| Number of nodes | 25 |
| Speed of nodes | 13.9 m/s |
| Traffic | UDP |
| Rate control | Ideal Wi-Fi manager |
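To give a feel for what the radio settings above imply, the free-space (Friis) link budget can be inverted to get an idealized maximum range. The sketch below does this in Python. It assumes a carrier of exactly 2.4 GHz, no system loss, and that a link works whenever the received power is at or above the listed receiver sensitivity; the function name is hypothetical, and a full NS-3 PHY model applies further conditions, so the result is only a rough upper bound.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def friis_max_range_m(tx_dbm, rx_sens_dbm, tx_gain_db, rx_gain_db, freq_hz):
    """Distance at which Friis received power equals the receiver sensitivity."""
    wavelength = SPEED_OF_LIGHT / freq_hz
    # Link budget in dB that free-space path loss is allowed to consume.
    budget_db = tx_dbm + tx_gain_db + rx_gain_db - rx_sens_dbm
    # Friis path loss: 20 * log10(4 * pi * d / wavelength) = budget_db
    return wavelength / (4 * math.pi) * 10 ** (budget_db / 20)


# Values from the simulation table; 2.4e9 Hz is an assumed exact carrier.
range_m = friis_max_range_m(20.0, -85.0, 1.0, 1.0, 2.4e9)
print(f"idealized free-space range: {range_m:.0f} m")
```

Under these assumptions the range comes out at roughly 2.2 km.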

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xue, Q.; Yang, Y.; Yang, J.; Tan, X.; Sun, J.; Li, G.; Chen, Y. QEHLR: A Q-Learning Empowered Highly Dynamic and Latency-Aware Routing Algorithm for Flying Ad-Hoc Networks. Drones 2023, 7, 459. https://doi.org/10.3390/drones7070459
