Omni-Directional Capture for Multi-Drone Based on 3D-Voronoi Tessellation

Abstract

1. Introduction

2. Collaborative Capture Strategy Based on 3D-Voronoi Tessellation

2.1. 3D-Voronoi Tessellation

2.2. Omni-Directional Minimum Area (ODMA) Capture Strategy

2.3. Variable Step Wolf Pack Algorithm (WPA)

2.4. Improved Artificial Potential Field

3. 3D-Voronoi Capture Strategy with ODMV

3.1. Minimum Volume Capture Strategy

3.2. Omni-Directional Minimum Volume (ODMV)

3.3. Convergence Proof

3.3.1. Convergence Proof of 3D-Voronoi Process

3.3.2. Convergence Proof of Minimum Volume Strategy

Algorithm 1. The pseudocode of the ODMV algorithm.

4. Simulations Analysis and Verification

4.1. Formation Capture under Non-Obstacle Environment

4.2. Formation Capture under Dynamic Target Environment

4.3. Formation Capture under Obstacle Environment

4.4. Formation Capture under Multiple Targets

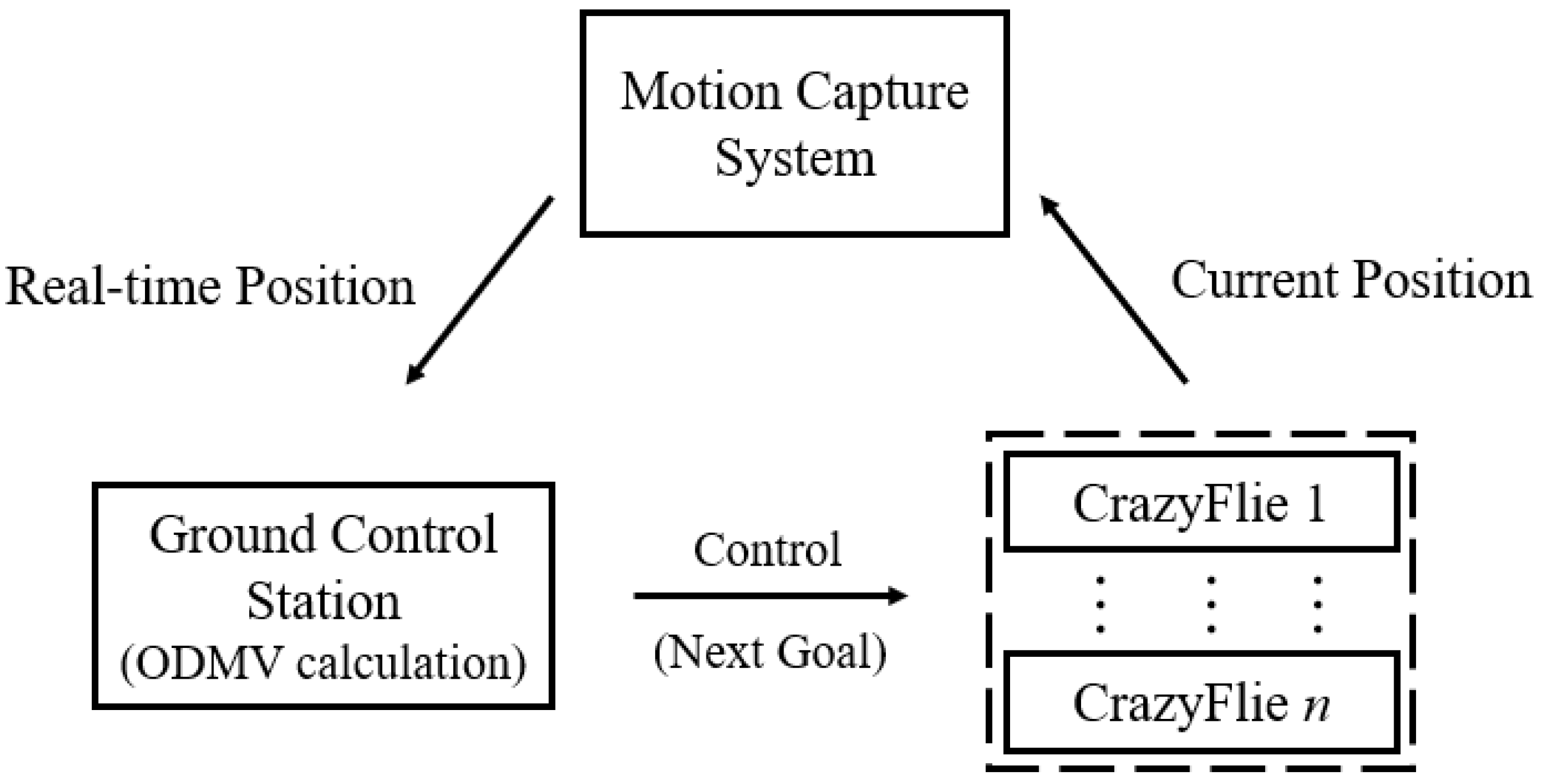

5. Crazyflies Capture Experiment

5.1. Obstacle-Free Environment

5.2. Multiple Obstacles Environment

5.3. Dynamic Target Environment

6. Summary and Outlook

1. To generate the capture directions of a drone formation in a 3D environment, this paper proposes a capture strategy that combines 3D-Voronoi tessellation with a minimum volume strategy. The 3D-Voronoi tessellation ensures collision avoidance between drones, which reduces the computational cost of the formation. The minimum volume strategy gives each drone in the formation a capture direction for completing the final capture. The minimum volume capture strategy based on 3D-Voronoi tessellation therefore offers a new way for a multi-drone formation to capture a target in a 3D environment. In addition, we demonstrate guaranteed capture and omni-directional capture angles in a 3D environment.

2. We address the problem of unequal capture angles between the drones and the target, which reduces the swinging of the drones. This paper proposes the ODMV capture strategy, which allows drones to enter the capture formation at better capture angles. In other words, the drones are distributed more evenly around the target 3D-Voronoi cell, giving a better capture effect. Additionally, the ODMV strategy minimizes the volume of the target 3D-Voronoi cell, which effectively prevents the target from escaping again. The developed algorithm can both form and maintain a capture: it captures the target and keeps it within a polyhedron formed by multiple pursuers.

3. Building on the above contributions, the wolf pack algorithm (WPA) is introduced as the movement strategy of the drones to verify the ODMV strategy. The head wolf following mechanism is removed, so each drone moves in the direction provided by ODMV instead of toward the head wolf drone; this helps the drones avoid the local minima of the traditional WPA. We also replace the fixed step size with a variable one to improve the convergence accuracy of the WPA.

4. The 3D-Voronoi ODMV strategy was successfully tested on physical drones. Using four Crazyflies together with a motion capture system, experiments were carried out in complex environments, including environments with obstacles and with dynamic targets (Supplementary Materials).
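The first contribution rests on two geometric facts: every drone owns the region of space that is closer to it than to any other generator, and the target's own cell is the volume the formation tries to shrink. The following minimal Python sketch shows both under stated assumptions. It estimates the target cell volume by Monte Carlo sampling inside an axis-aligned bounding box, which is a swapped-in technique (an exact implementation would construct the polyhedral cells), and the helper names `in_cell`, `clip_to_cell`, and `target_cell_volume` are hypothetical, not taken from the paper. `clip_to_cell` backtracks a proposed waypoint until it stays inside the drone's own cell, one simple way to use the tessellation for collision avoidance.

```python
import random
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two 3D points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def in_cell(x: Point, i: int, sites: Sequence[Point]) -> bool:
    """True if x lies in the 3D-Voronoi cell of sites[i] (ties count as inside)."""
    d_i = sq_dist(x, sites[i])
    return all(d_i <= sq_dist(x, s) for j, s in enumerate(sites) if j != i)


def clip_to_cell(i: int, proposed: Point, sites: Sequence[Point],
                 max_halvings: int = 30) -> Point:
    """Backtrack the move sites[i] -> proposed until the waypoint stays in cell i.

    Voronoi cells are convex and contain their own generator, so shrinking the
    step ends inside the cell (at worst at the current position).
    """
    start = sites[i]
    fraction = 1.0
    for _ in range(max_halvings):
        x = tuple(s + fraction * (p - s) for s, p in zip(start, proposed))
        if in_cell(x, i, sites):
            return x
        fraction *= 0.5
    return tuple(start)


def target_cell_volume(target: Point, drones: Sequence[Point], lo: Point,
                       hi: Point, n: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the target cell volume, clipped to the box [lo, hi]."""
    rng = random.Random(seed)
    sites = [target, *drones]  # generator 0 is the target
    box_volume = 1.0
    for a, b in zip(lo, hi):
        box_volume *= b - a
    hits = 0
    for _ in range(n):
        x = tuple(rng.uniform(a, b) for a, b in zip(lo, hi))
        if in_cell(x, 0, sites):
            hits += 1
    return box_volume * hits / n
```

For example, with the target at the origin and six drones at distance 2 on the coordinate axes, the target cell clipped to the box [-3, 3]^3 is the cube [-1, 1]^3, so `target_cell_volume` should return a value close to 8. Keeping each drone inside its own cell only separates the drones' centres; a real system would also need a safety margin for the vehicle size.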
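Assuming the minimum volume strategy generalizes the 2D minimum-area policy, in which each pursuer heads for the centroid of the boundary it shares with the target's cell, a drone's 3D capture direction is the vector from its position to the centroid of the face it shares with the target 3D-Voronoi cell. The sketch below estimates that face's area and centroid by sampling its bisector plane. The function names, the bounding box, and the fallback of heading straight for the target when no face is shared are assumptions for illustration, not the paper's implementation.

```python
import math
import random
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a: Vec) -> Vec:
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def shared_face(target: Vec, drones: Sequence[Vec], i: int, lo: Vec, hi: Vec,
                n: int = 100_000, seed: int = 0) -> Tuple[float, Vec]:
    """Monte Carlo area and centroid of the face shared by the target cell and drone i's cell.

    Assumes the target and drone i both lie inside the box [lo, hi].
    """
    p = drones[i]
    normal = unit(sub(p, target))
    # Orthonormal basis (u, w) of the bisector plane between the target and drone i.
    helper = (1.0, 0.0, 0.0) if abs(normal[0]) < 0.9 else (0.0, 1.0, 0.0)
    proj = dot(helper, normal)
    u = unit(sub(helper, (proj * normal[0], proj * normal[1], proj * normal[2])))
    w = cross(normal, u)
    mid = tuple((a + b) / 2 for a, b in zip(target, p))
    # mid lies in the box, so a square of half-side equal to the box diagonal,
    # centred at mid, covers the whole section of the box by the plane.
    r = math.dist(lo, hi)
    rng = random.Random(seed)
    hits: List[Vec] = []
    for _ in range(n):
        a, b = rng.uniform(-r, r), rng.uniform(-r, r)
        x = tuple(m + a * uk + b * wk for m, uk, wk in zip(mid, u, w))
        if not all(lo_k <= x_k <= hi_k for lo_k, x_k, hi_k in zip(lo, x, hi)):
            continue
        d_t = sum((x_k - t_k) ** 2 for x_k, t_k in zip(x, target))
        # x is equidistant from the target and drone i; it is on the shared
        # face only if no other drone is strictly closer.
        if all(d_t <= sum((x_k - o_k) ** 2 for x_k, o_k in zip(x, o))
               for j, o in enumerate(drones) if j != i):
            hits.append(x)
    if not hits:
        return 0.0, mid
    area = (2 * r) ** 2 * len(hits) / n
    centroid = tuple(sum(h[k] for h in hits) / len(hits) for k in range(3))
    return area, centroid


def min_volume_direction(target: Vec, drones: Sequence[Vec], i: int,
                         lo: Vec, hi: Vec, n: int = 100_000, seed: int = 0) -> Vec:
    """Unit direction from drone i toward the centroid of its shared face.

    Hypothetical fallback: a drone that shares no face heads for the target.
    """
    area, c = shared_face(target, drones, i, lo, hi, n, seed)
    goal = c if area > 0.0 else target
    return unit(sub(goal, drones[i]))
```

In the six-drone example (target at the origin, drones at distance 2 on the axes), the face shared with the drone at (2, 0, 0) is the square x = 1, |y| ≤ 1, |z| ≤ 1, so the estimate should give an area near 4, a centroid near (1, 0, 0), and a direction pointing back toward the target.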
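The capture-angle measure behind the second contribution is not given in this part of the article, so the following Python snippet uses two hypothetical diagnostics to make "omni-directional" concrete: the length of the mean bearing vector from the target to the drones (0 when the bearings cancel out, 1 when every drone approaches from the same side) and the smallest angle between any two bearings. These are illustrative checks, not the ODMV criterion itself.

```python
import math
from itertools import combinations
from typing import List, Sequence


def bearings(target: Sequence[float], drones: Sequence[Sequence[float]]) -> List[List[float]]:
    """Unit vectors pointing from the target to each drone."""
    out = []
    for d in drones:
        v = [a - b for a, b in zip(d, target)]
        norm = math.sqrt(sum(c * c for c in v))
        out.append([c / norm for c in v])
    return out


def resultant_length(target, drones) -> float:
    """Length of the mean bearing: 0 when bearings cancel, 1 when all drones share one bearing."""
    b = bearings(target, drones)
    mean = [sum(col) / len(b) for col in zip(*b)]
    return math.sqrt(sum(c * c for c in mean))


def min_separation_deg(target, drones) -> float:
    """Smallest angle, in degrees, between any two drones as seen from the target."""
    smallest = math.pi
    for u, v in combinations(bearings(target, drones), 2):
        cos_uv = max(-1.0, min(1.0, sum(x * y for x, y in zip(u, v))))
        smallest = min(smallest, math.acos(cos_uv))
    return math.degrees(smallest)
```

For four drones at the vertices of a regular tetrahedron centred on the target, the resultant length is 0 and the minimum separation is arccos(-1/3) ≈ 109.47°, the most even spread that four bearings can have.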
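The third contribution replaces the WPA head-wolf attraction with the ODMV direction and the fixed step with a variable one. The minimal sketch below illustrates why a variable step improves convergence accuracy, assuming a step proportional to the remaining distance and capped at `s_max`; the schedule and the names `variable_step`, `move`, `final_distance`, `k`, and `s_max` are hypothetical, since the paper's exact rule is not given here. For simplicity the direction points straight at a goal point, standing in for the ODMV direction.

```python
import math
from typing import List, Optional, Sequence


def variable_step(dist: float, s_max: float, k: float) -> float:
    """Hypothetical schedule: the step shrinks with the remaining distance, capped at s_max."""
    return min(s_max, k * dist)


def move(pos: Sequence[float], direction: Sequence[float], step: float) -> List[float]:
    """Advance pos by `step` along `direction` (normalised here; a zero direction means stay)."""
    norm = math.sqrt(sum(c * c for c in direction))
    if norm == 0.0:
        return list(pos)
    return [p + step * c / norm for p, c in zip(pos, direction)]


def final_distance(start: Sequence[float], goal: Sequence[float], iters: int,
                   s_max: float, k: Optional[float] = None) -> float:
    """Move one drone toward `goal` for `iters` steps; k=None means a fixed step of s_max."""
    pos = list(start)
    for _ in range(iters):
        direction = [g - p for g, p in zip(goal, pos)]  # stand-in for the ODMV direction
        dist = math.sqrt(sum(c * c for c in direction))
        step = s_max if k is None else variable_step(dist, s_max, k)
        pos = move(pos, direction, step)
    return math.dist(pos, goal)
```

Starting 10 units from the goal with `s_max = 0.3`, the fixed step overshoots and ends up oscillating 0.1 to 0.2 units from the goal, whereas the variable step with `k = 0.5` halves the remaining distance at every iteration once it drops below 0.6 units and never overshoots.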

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Symbol | Description |
|---|---|
| V | 3D-Voronoi cell |
| q | Independent point |
| p | Generators |
|  | Target 3D-Voronoi cell |
|  | The area of the target Voronoi cell in 2D |
|  | The motion direction of drones in the minimum area strategy |
|  | The centroid of the adjacent line |
|  | Robot position |
| l | The length of the adjacent line in 2D |
|  | The volume of the target 3D-Voronoi cell |
|  | The area of the adjacent surface in 3D |
|  | Target position |
|  | The number of drones |
| L | The distance between any two drones |
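The abbreviation list pairs the volume of the target 3D-Voronoi cell with the area and centroid of an adjacent surface, which is exactly what the gradient of that volume needs. The derivation below is a sketch under assumptions, not the paper's own proof. It uses its own symbols (e for the target position, p_i for drone i, V for the target cell volume, F_i for the face shared with drone i, S_i for its area, c_i for its centroid) because the original symbols were lost, and it assumes the minimum volume strategy moves each drone down the gradient of V, in the way 2D minimum-area pursuit strategies move each pursuer toward the centroid of the shared edge.

```latex
% Assumed notation: e = target position, p_i = drone i, V = target cell volume,
% F_i = face shared with drone i, S_i = area of F_i, c_i = centroid of F_i.
\begin{aligned}
&F_i \subset \{\, x : (p_i - e)^\top x = \tfrac{1}{2}\left(\|p_i\|^2 - \|e\|^2\right) \}
  &&\text{(bisector plane)}\\
&(p_i - e)^\top \mathrm{d}x = (p_i - x)^\top \delta p_i
  &&\text{(perturb } p_i \text{ by } \delta p_i\text{)}\\
&n_i = \frac{p_i - e}{\|p_i - e\|}, \qquad
  n_i^\top \mathrm{d}x = \frac{(p_i - x)^\top \delta p_i}{\|p_i - e\|}
  &&\text{(outward normal displacement)}\\
&\mathrm{d}V = \int_{F_i} n_i^\top \mathrm{d}x \,\mathrm{d}A
  = \frac{S_i}{\|p_i - e\|}\,(p_i - c_i)^\top \delta p_i\\
&\nabla_{p_i} V = \frac{S_i}{\|p_i - e\|}\,(p_i - c_i),
  \qquad -\nabla_{p_i} V \propto c_i - p_i
\end{aligned}
```

Only the face F_i moves when p_i moves (the other faces are bisectors between the target and other drones, and any bounding walls are fixed), so the steepest-descent direction for the target volume points from the drone to the centroid of its shared face. Replacing the area S_i with the adjacent-line length l gives the 2D minimum-area direction toward the midpoint of the shared edge.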

References

- Salim, C.; Makhoul, A.; Couturier, R. Energy-efficient secured data reduction technique using image difference function in wireless video sensor networks. Multimed. Tools Appl. 2019, 79, 1801–1819.

- Kehagias, A. Generalized Cops and Robbers: A Multi-player Pursuit Game on Graphs. Dyn. Games Appl. 2019, 9, 1076–1099.

- Li, C.M.; Guo, P.P.; Wu, P.D.; Liu, X.L. Extraction of Terrain Feature Lines from Elevation Contours Using a Directed Adjacent Relation Tree. ISPRS Int. J. Geo-Inf. 2018, 7, 163–177.

- Fang, X.; Wang, C.; Xie, L.H. Cooperative Pursuit With Multi-Pursuer and One Faster Free-Moving Evader. IEEE Trans. Cybern. 2022, 52, 1405–1414.

- Du, W.B.; Guo, T.; Chen, J.; Li, B.Y.; Zhu, G.X. Cooperative pursuit of unauthorized UAVs in urban airspace via Multi-agent reinforcement learning. Transp. Res. Part C-Emerg. Technol. 2021, 128, 103–112.

- Fan, Z.L.; Yang, H.Y.; Han, Y.L. Reinforcement learning based target capture control for multi-agent systems. J. Aviat. 2023, 3, 1–10.

- Salmon, J.L.; Willey, L.C.; Casbeer, D. Single Pursuer and Two Cooperative Evaders in the Border Defense Differential Game. J. Aerosp. Inf. Syst. 2020, 17, 229–239.

- Koyuncu, E.; Inalhan, G. Exploiting Delayed and Imperfect Information for Generating Approximate UAV Target Interception Strategy. J. Intell. Robot. Syst. 2013, 61, 313–329.

- Kokolakis, N.M.T.; Vamvoudakis, K.G. Bounded rational Dubins vehicle coordination for target tracking using reinforcement learning. Automatica 2023, 149, 110732.

- Sun, Z.; Sun, H.; Li, P. Cooperative strategy for pursuit-evasion problem in the presence of static and dynamic obstacles. Ocean Eng. 2023, 279, 114476.

- Khrenov, M.; Rivera-Ortiz, P.; Diaz-Mercado, Y. Geometric Feasibility for Defense Manifold Maintenance in Planar Reach-Avoid Games Against a Fast Evader. IFAC-PapersOnLine 2021, 54, 807–813.

- Li, W.H.; Qin, K.Y.; Shao, J.L.; Lin, B. Dynamic Target Enclosing Control Scheme for Multi-Agent Systems via a Signed Graph-Based Approach. IEEE/CAA J. Autom. Sin. 2023, 10, 560–562.

- Shao, J.L.; Shi, L.; Cheng, Y.H.; Li, T. Asynchronous Tracking Control of Leader–Follower Multiagent Systems With Input Uncertainties Over Switching Signed Digraphs. IEEE Trans. Cybern. 2022, 52, 6379–6390.

- Liang, X.; Wang, H.; Luo, H. Collaborative pursuit-evasion strategy of UAV/UGV heterogeneous system in complex three-dimensional polygonal environment. Complexity 2020, 2020, 7498740.

- Santos, R.F.; Ramachandran, R.K.; Vieira, M. Parallel multi-speed Pursuit-Evasion Game algorithms. Robot. Auton. Syst. 2023, 163, 104382.

- Li, X.; Qi, G.Y.; Zhang, L.M. Time-varying formation dynamics modeling and constrained trajectory optimization of multi-quadrotor UAVs. Nonlinear Dyn. 2021, 106, 3265–3284.

- Tian, B.; Li, P.; Lu, H.; Zong, Q.; He, L. Distributed pursuit of an evader with collision and obstacle avoidance. IEEE Trans. Cybern. 2021, 15, 13512–13520.

- Shah, K.; Schwager, M. Grape: Geometric risk-aware pursuit-evasion. Robot. Auton. Syst. 2019, 121, 103246.

- Sun, W.; Tsiotras, P. Sequential pursuit of multiple targets under external disturbances via Zermelo–Voronoi diagrams. Automatica 2017, 81, 253–260.

- Casini, M.; Garulli, A. On the advantage of centralized strategies in the three-pursuer single-evader game. Syst. Control Lett. 2022, 160, 105122.

- Pan, S.; Huang, H.; Ding, J.; Zhang, W.; Tomlin, C.J. Pursuit, evasion and defense in the plane. In Proceedings of the 2012 American Control Conference (ACC), Montreal, QC, Canada, 27–29 June 2012; pp. 4167–4173.

- Huang, H.; Zhou, Z.; Zhang, W.; Ding, J.; Stipanovic, D.M.; Tomlin, C.J. Safe-Reachable Area Cooperative Pursuit. Available online: ftp://ftp.math.ucla.edu/pub/camreport/cam12-55.pdf (accessed on 1 May 2023).

- Zhou, Z.; Zhang, W.; Ding, J.; Huang, H.; Stipanovic, D.M.; Tomlin, C.J. Cooperative pursuit with Voronoi partitions. Automatica 2016, 72, 67–72.

- Wang, C.; Shi, W.; Zhang, P. Evader cooperative capture by multiple pursuers with area-minimization policy. In Proceedings of the 15th International Conference on Control and Automation (ICCA), Edinburgh, UK, 16–19 July 2019; pp. 875–880.

- Wang, Y.; He, G.; Ma, Y. Research on multi-robots self-organizing cooperative pursuit algorithm based on Voronoi graph. In Proceedings of the 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020; pp. 3840–3844.

- Pierson, A.; Wang, Z.; Schwager, M. Intercepting rogue robots: An algorithm for capturing multiple evaders with multiple pursuers. IEEE Robot. Autom. Lett. 2016, 2, 530–537.

- Wu, H.S.; Zhang, F.M.; Wu, L.S. A New Swarm Intelligence Algorithm: Wolf Pack Algorithm. Syst. Eng. Electron. Technol. 2013, 35, 2430–2438.

- Cao, K.; Zhang, J.H.; Chen, Y.Q. Voronoi Coverage based on Improved Artificial Potential Field. In Proceedings of the 2022 International Conference on Mechanical and Electronics Engineering (ICMEE), Xi’an, China, 21–23 October 2022; pp. 2430–2438.

| Criteria/Ref. | [15] | [16] | [17] | [18] | [19] | [20] | [21] | [22] | [23] | [24] | Our Work |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Captures | M | M | M | M | M | M | M | M | M | M | M |
| Targets | S | S | S | S | S | S | S | S | M | M | M |
| 2D/3D | 2D | 2D | 2D | 2D | 2D | 2D | 2D | 2D | 2D | 2D & 3D | 3D |
| Collision avoidance | Yes | No | No | No | No | Yes | Yes | Yes | No | No | Yes |
| Constraint on capture angle | No | No | No | No | No | No | No | No | No | No | Yes |
| Capture guaranteed | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Experiments | No | Yes | No | No | No | Yes | No | No | No | Yes | Yes |

M: multiple; S: single.

| Strategies (Section)/Indicators | Distance between Drones and Target (m) | Capture Level |
|---|---|---|
| Variable step WPA formation capture (Section 4.1) | 2.799 | 12.50% |
| Minimum volume formation capture (Section 4.1) | 0.788 | 88.42% |
| ODMV formation capture (Section 4.1) | 0.302 | 99.26% |
| Formation capture under dynamic target (Section 4.2) | 0.201 | 99.05% |
| Formation capture under obstacle environment (Section 4.3) | 0.205 | 98.63% |
| Formation capture under multiple targets (Section 4.4) | 0.310 | 98.26% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cao, K.; Chen, Y.-Q.; Gao, S.; Yan, K.; Zhang, J.; An, D. Omni-Directional Capture for Multi-Drone Based on 3D-Voronoi Tessellation. Drones 2023, 7, 458. https://doi.org/10.3390/drones7070458

Cao K, Chen Y-Q, Gao S, Yan K, Zhang J, An D. Omni-Directional Capture for Multi-Drone Based on 3D-Voronoi Tessellation. Drones. 2023; 7(7):458. https://doi.org/10.3390/drones7070458

Chicago/Turabian Style: Cao, Kai, Yang-Quan Chen, Song Gao, Kun Yan, Jiahao Zhang, and Di An. 2023. "Omni-Directional Capture for Multi-Drone Based on 3D-Voronoi Tessellation" Drones 7, no. 7: 458. https://doi.org/10.3390/drones7070458

APA Style: Cao, K., Chen, Y.-Q., Gao, S., Yan, K., Zhang, J., & An, D. (2023). Omni-Directional Capture for Multi-Drone Based on 3D-Voronoi Tessellation. Drones, 7(7), 458. https://doi.org/10.3390/drones7070458