Construction of an Orthophoto-Draped 3D Model and Classification of Intertidal Habitats Using UAV Imagery in the Galapagos Archipelago

Abstract

1. Introduction

2. Materials and Methods

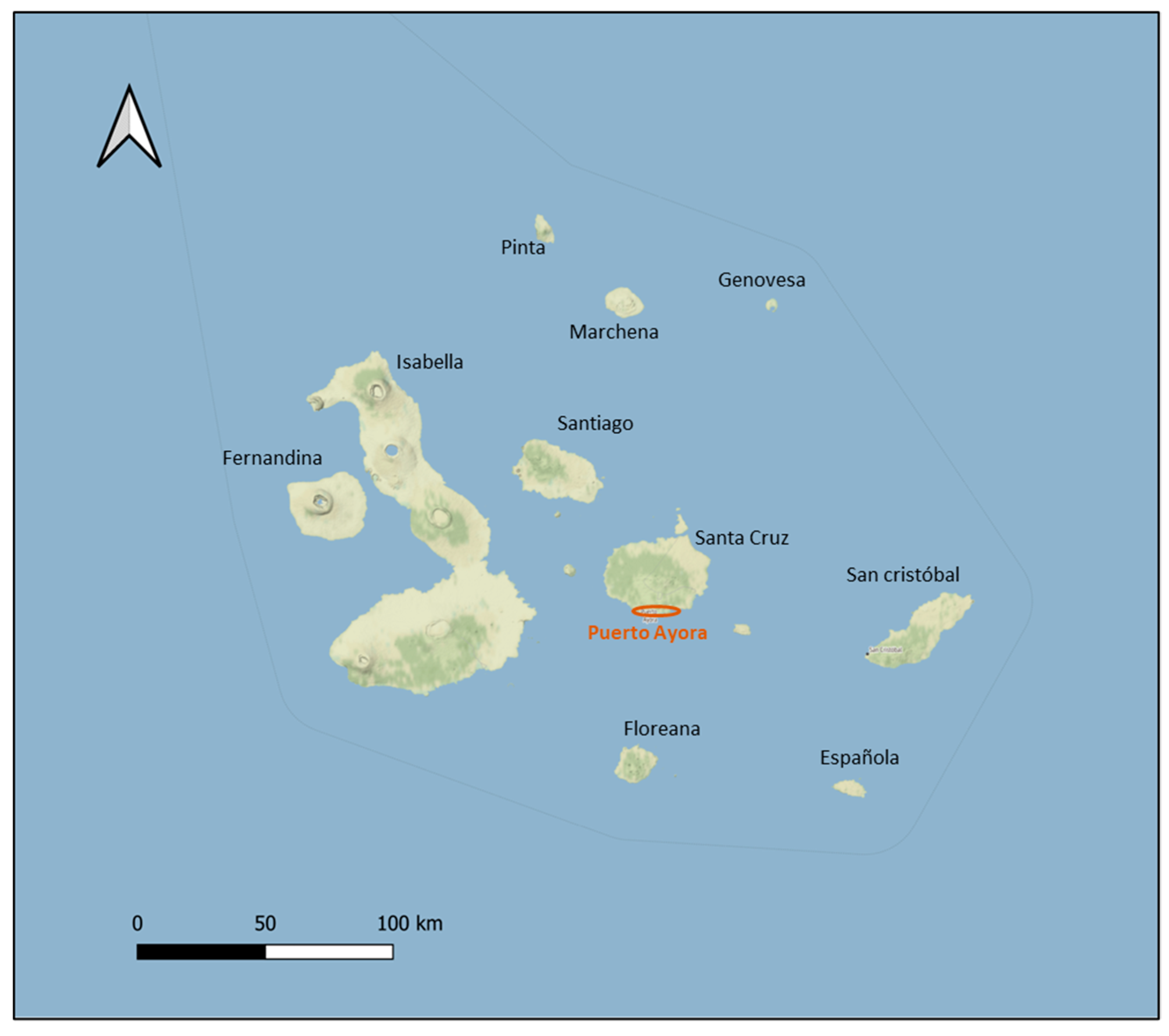

2.1. Study Area

2.2. GNSS Measurements for Georeferencing

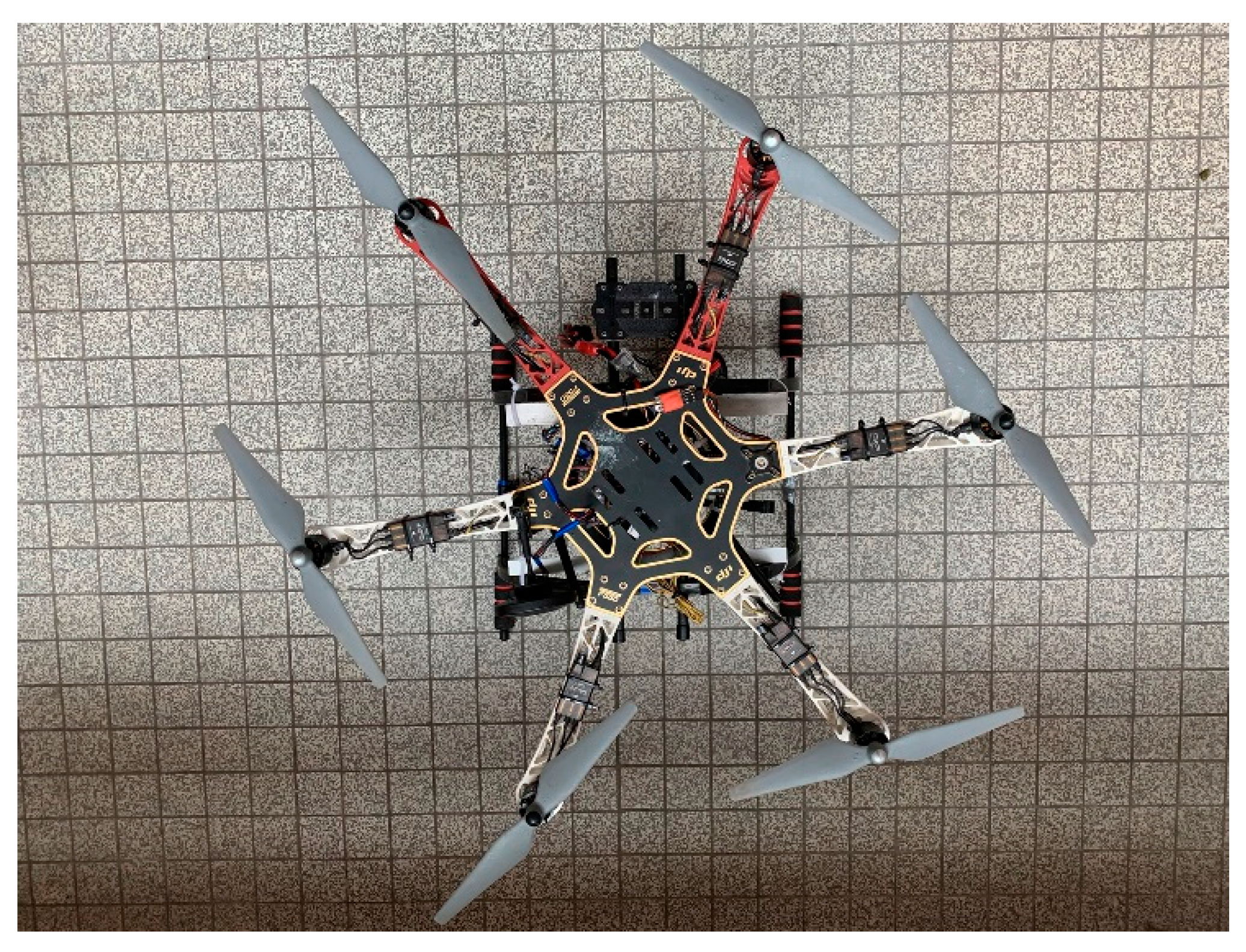

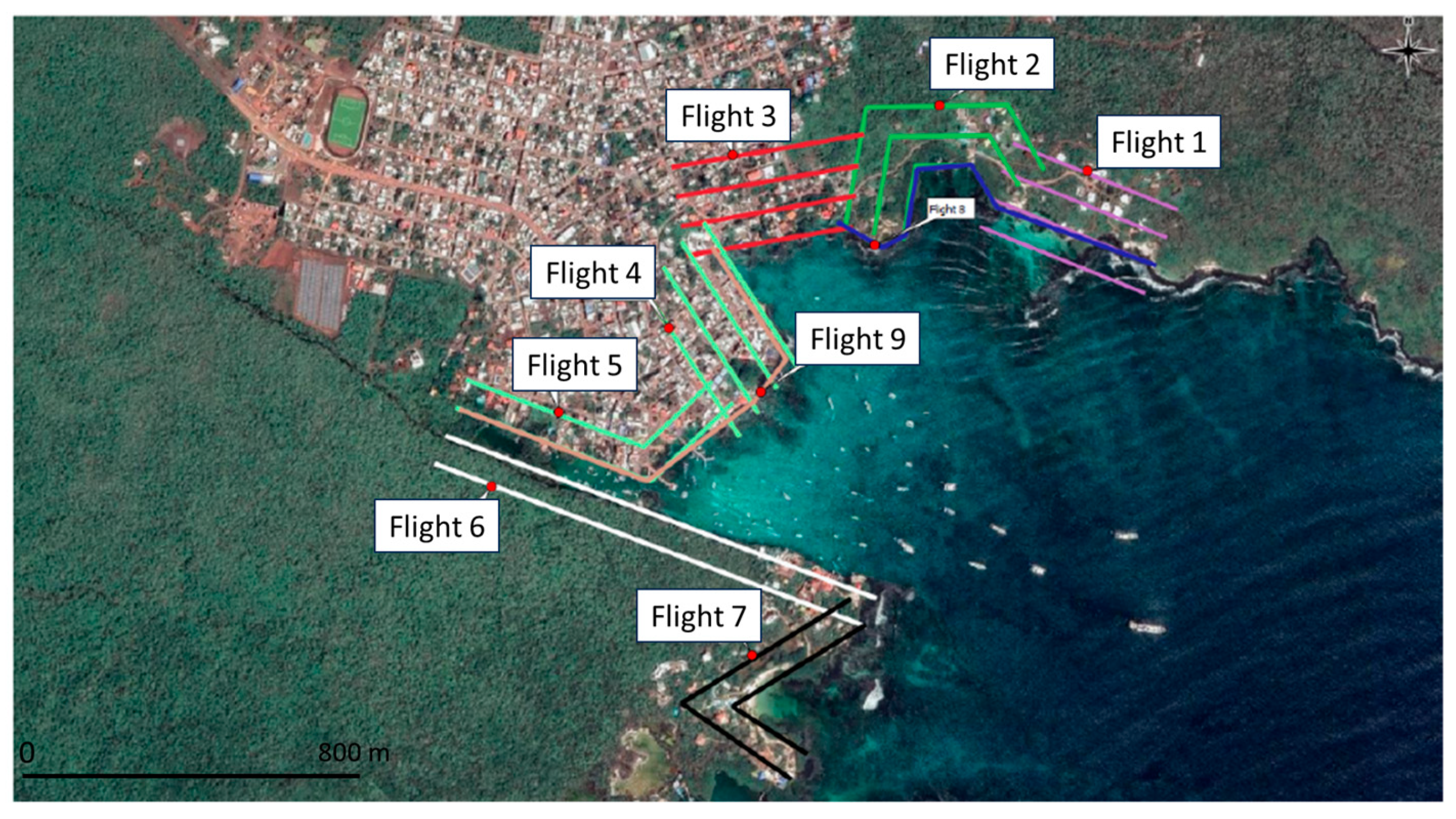

2.3. Imagery Collection with Uncrewed Aerial Vehicle

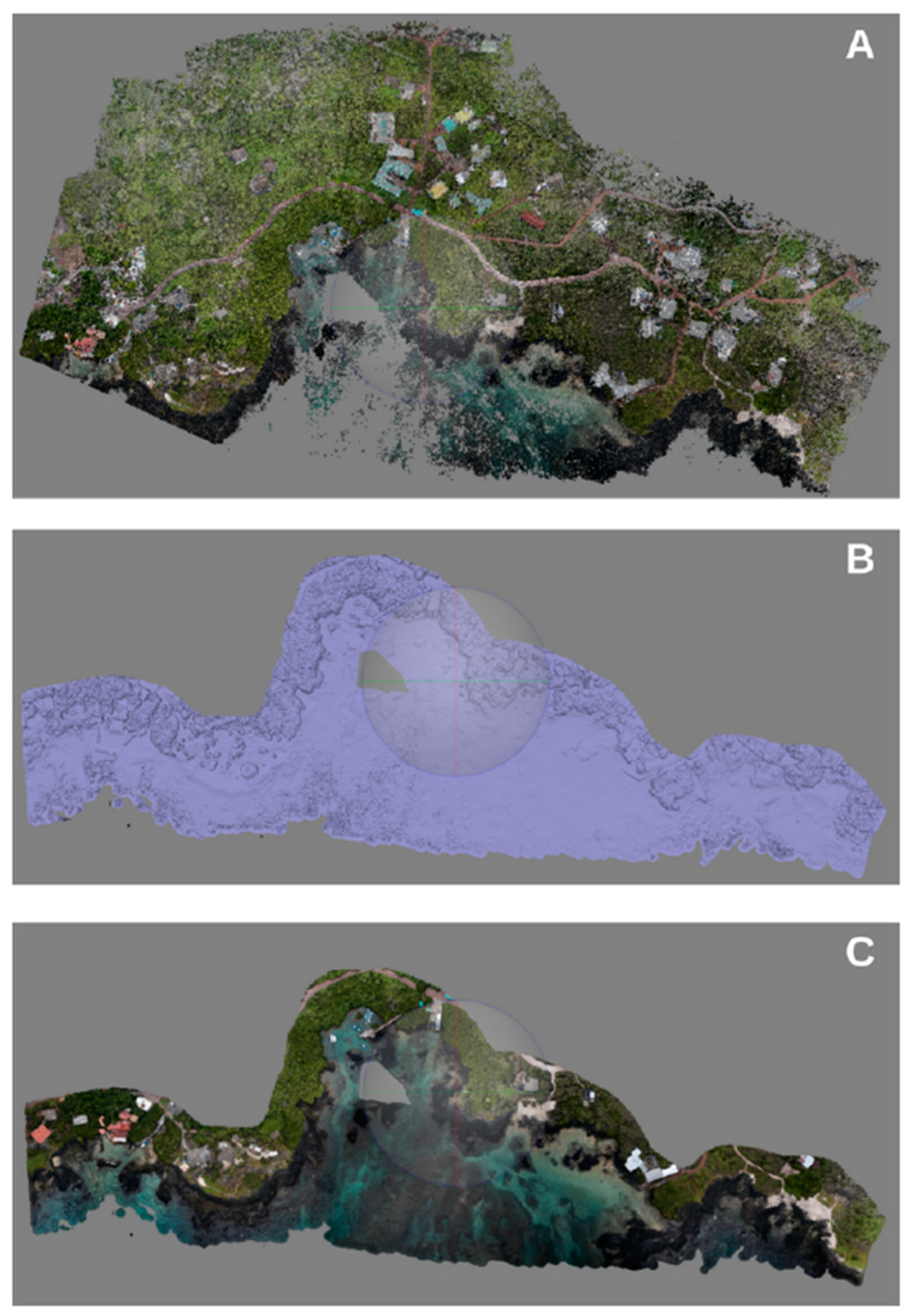

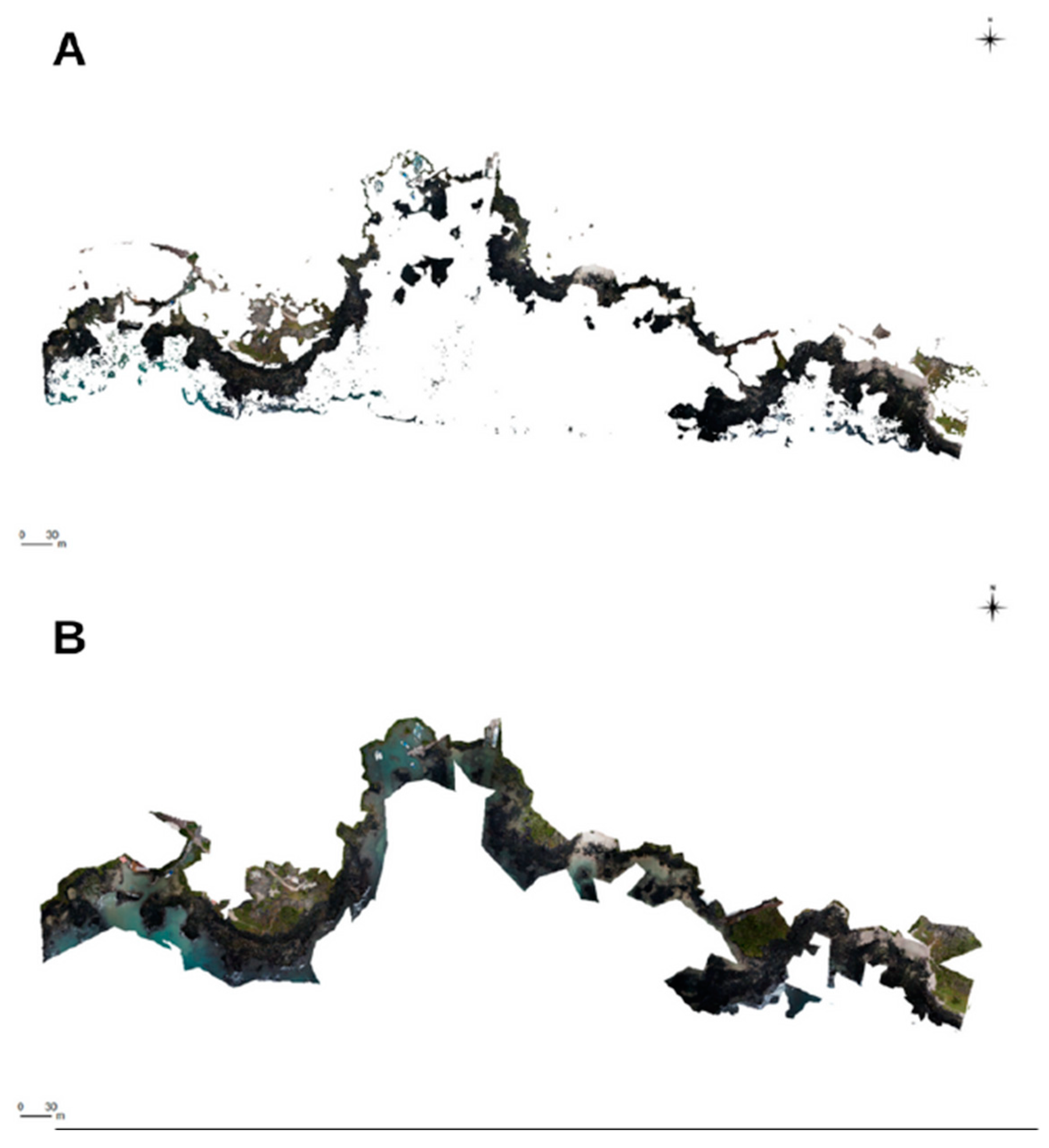

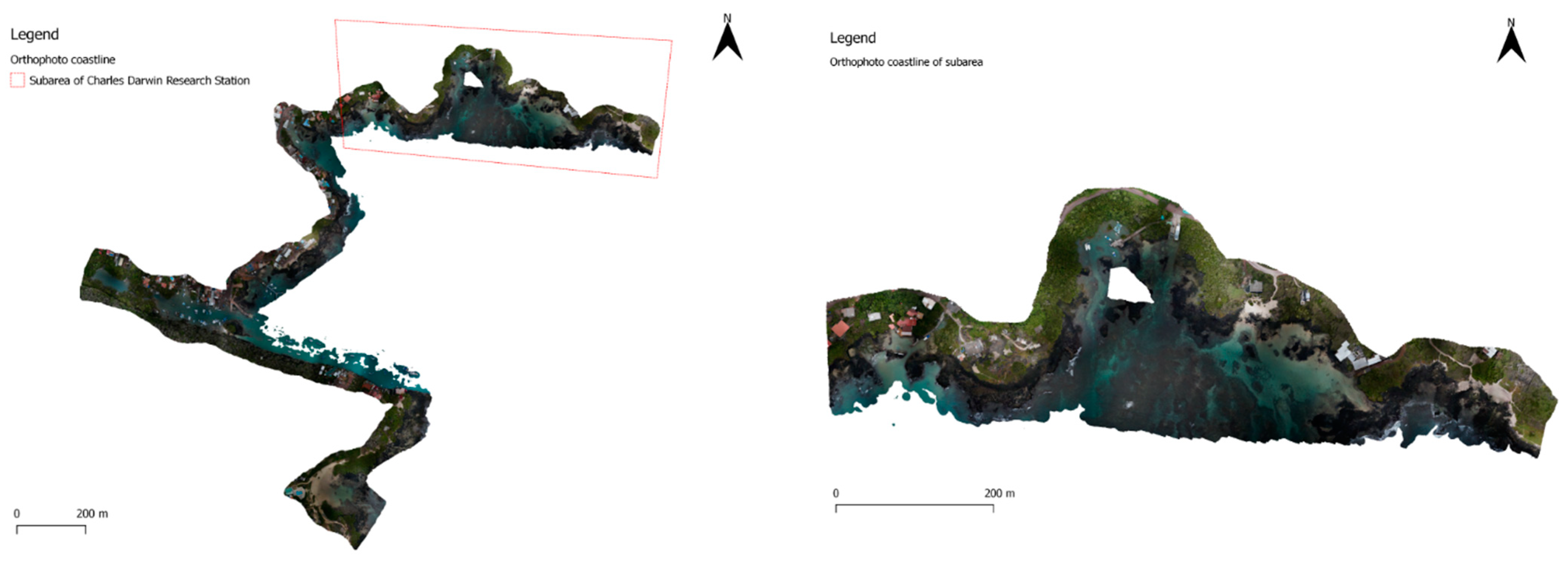

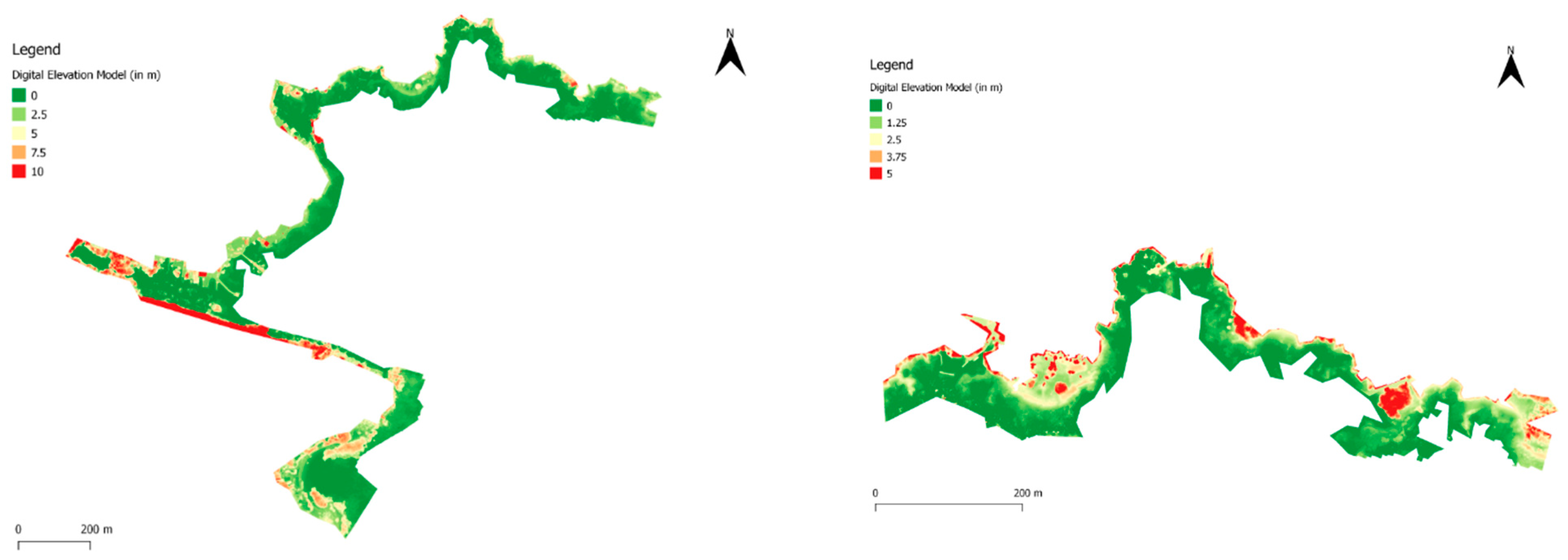

2.4. 3D Modeling of the Coastal Region

2.5. Automatization of the Habitat Classification

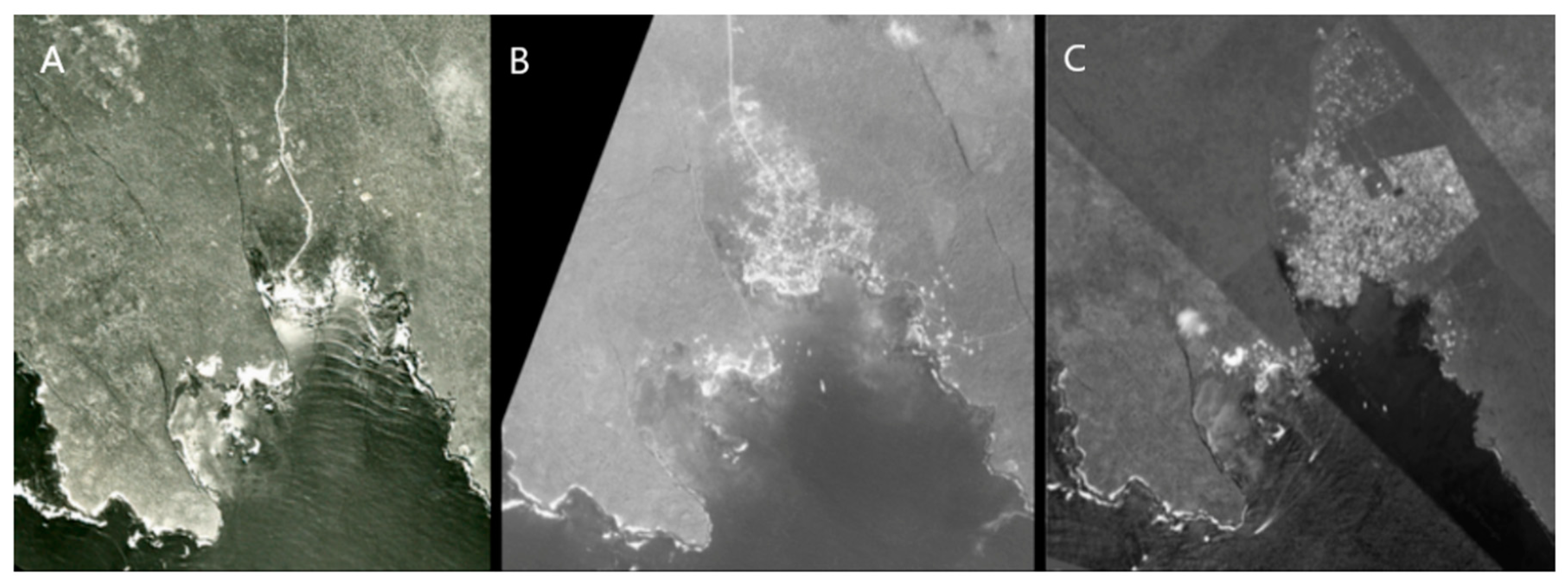

2.5.1. Preprocessing of the Orthophoto

2.5.2. Classification Procedure

2.6. Validation

3. Results

3.1. 3D Modeling of the Coastal Region

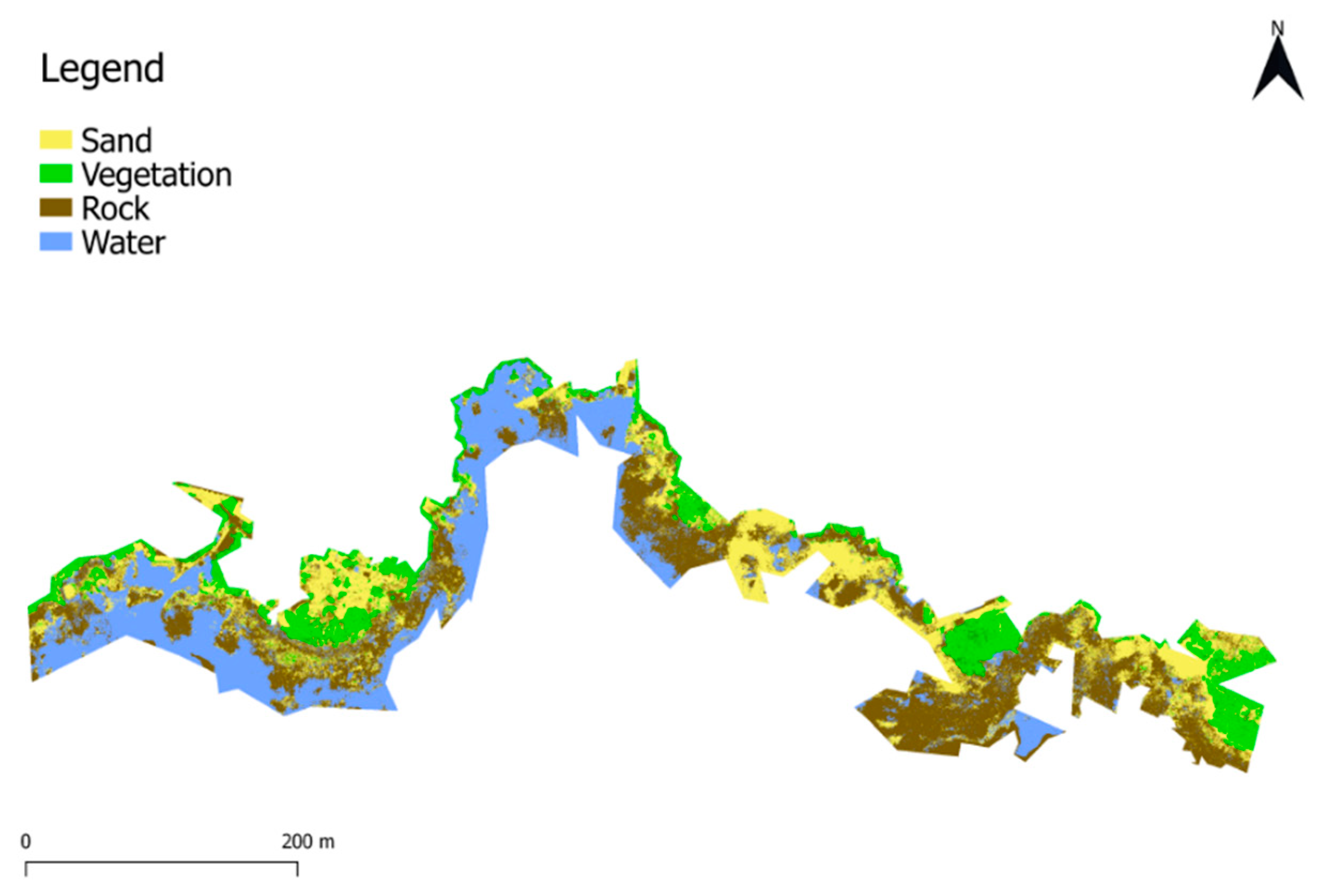

3.2. Automatization of the Habitat Classification

3.3. Validation

4. Discussion

4.1. GNSS Measurements for Georeferencing

4.2. Data Collection with Uncrewed Aerial Vehicle

4.3. 3D Modeling of the Coastal Region

4.4. Automatization of the Habitat Classification

4.5. Validation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Permits

Appendix A

| Device | Horizontal Accuracy | Vertical Accuracy |
|---|---|---|
| Trimble 8 (RTK) | 8 mm + 1 ppm RMS | 15 mm + 1 ppm RMS |
| Trimble 8 (PPK) | 8 mm + 1 ppm RMS | 15 mm + 1 ppm RMS |
| Trimble 10 (RTK) | 8 mm + 1 ppm RMS | 15 mm + 1 ppm RMS |
| Trimble 10 (Static and Fast Static) | 3 mm + 0.5 ppm RMS | 5 mm + 0.5 ppm RMS |
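The accuracy specifications above combine a fixed term with a term proportional to the baseline length (1 ppm equals 1 mm per km of baseline). The sketch below evaluates such a specification; the helper name and the 5 km baseline are hypothetical and only illustrate how the two terms add up.

```python
def spec_accuracy_m(fixed_mm: float, ppm: float, baseline_km: float) -> float:
    """Expected RMS error (m) for a spec written as 'fixed mm + ppm'.

    1 ppm of a 1 km baseline is 1 mm, so the distance-dependent part in
    millimetres equals ppm * baseline_km.
    """
    return (fixed_mm + ppm * baseline_km) / 1000.0


# (fixed mm, ppm) pairs for (horizontal, vertical), copied from the table.
SPECS = {
    "Trimble 8 RTK/PPK": ((8.0, 1.0), (15.0, 1.0)),
    "Trimble 10 RTK": ((8.0, 1.0), (15.0, 1.0)),
    "Trimble 10 static/fast static": ((3.0, 0.5), (5.0, 0.5)),
}

if __name__ == "__main__":
    baseline_km = 5.0  # hypothetical baseline length, not taken from the study
    for device, (horizontal, vertical) in SPECS.items():
        h = spec_accuracy_m(*horizontal, baseline_km)
        v = spec_accuracy_m(*vertical, baseline_km)
        print(f"{device}: horizontal {h * 1000:.1f} mm, vertical {v * 1000:.1f} mm")
```

At this hypothetical 5 km baseline, the RTK specification gives 13 mm horizontally and 20 mm vertically, while the static mode gives 5.5 mm and 7.5 mm.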

| Label | x (m) | y (m) | z (m) |
|---|---|---|---|
| gcp31 | 799,051.572 | 9,917,348.444 | 12.495 |
| gcp33 | 799,167.133 | 9,917,438.823 | 13.911 |
| gcp34 | 799,286.202 | 9,917,562.073 | 7.574 |
| gcp35 | 799,321.482 | 9,917,795.994 | 10.908 |
| gcp39 | 799,872.905 | 9,917,850.448 | 5.525 |
| gcp40 | 799,607.303 | 9,917,900.804 | 7.143 |
| gcp41 | 799,511.031 | 9,917,762.844 | 3.326 |
| gcp42 | 798,964.022 | 9,917,263.031 | 13.240 |
| gcp43 | 799,369.041 | 9,916,998.423 | 7.375 |
| gcp45 | 799,213.977 | 9,917,625.279 | 10.077 |
| gcp46 | 799,611.387 | 9,917,781.724 | 3.148 |
| gcp50 | 799,404.836 | 9,916,934.678 | 16.418 |
| gcp51 | 799,042.448 | 9,916,630.257 | 5.030 |
| gcp52 | 799,280.290 | 9,917,471.070 | 11.390 |
| gcp53 | 799,190.859 | 9,917,608.680 | 17.326 |
| x1 | 799,104.596 | 9,917,340.420 | 3.181 |
| x2 | 799,178.385 | 9,916,663.176 | 7.001 |
| x3 | 798,992.051 | 9,917,221.791 | 11.980 |
| x4 | 799,086.871 | 9,917,326.735 | 2.211 |
| x5 | 799,197.161 | 9,917,333.538 | 2.588 |
| x6 | 799,061.097 | 9,917,179.476 | 2.971 |
| x7 | 798,706.702 | 9,917,273.955 | 2.032 |
| x8 | 798,589.225 | 9,917,522.339 | 7.357 |
| x9 | 799,359.393 | 9,916,832.773 | 1.910 |
| x10 | 799,114.913 | 9,916,440.213 | 2.908 |
| x11 | 800,049.947 | 9,917,852.916 | 6.058 |
| x101 | 800,213.769 | 9,917,682.033 | 1.917 |
| x102 | 800,215.203 | 9,917,848.795 | 6.686 |
| x103 | 799,805.455 | 9,918,081.176 | 5.227 |
| x104 | 799,341.435 | 9,917,942.760 | 6.648 |
| x105 | 799,091.362 | 9,917,880.390 | 8.327 |
| x106 | 799,008.321 | 9,917,614.719 | 7.487 |

| Specification | Value |
|---|---|
| Total Weight | 2583 g |
| Diameter | 550 mm |
| Batteries | 2 × (4 Ah, 16.8 V) |
| Flight Controller | Pixhawk with GPS (u-blox NEO-M8N) |

| Component | Weight |
|---|---|
| Hexacopter DJI 550 | 1673 g |
| 2 Batteries | 2 × 283 g |
| Camera with Lens | 344 g |

| Camera Specification | Value |
|---|---|
| Sensor Type | CMOS |
| Sensor Size | 15.6 mm × 23.4 mm |
| Camera Resolution | 16 MP |
| Shutter Speed | 30 s to 1/4000 s |
| Focal Length | 20 mm |
| Weight | 276 g |

| Date | High Tide | Low Tide |
|---|---|---|
| 9 August 2017 a.m. | 3:37 a.m. GALT/2.03 m | 9:43 a.m. GALT/0.34 m |
| 9 August 2017 p.m. | 3:46 p.m. GALT/1.99 m | 9:57 p.m. GALT/0.22 m |
| 10 August 2017 a.m. | 4:11 a.m. GALT/2.06 m | 10:20 a.m. GALT/0.31 m |
| 10 August 2017 p.m. | 4:23 p.m. GALT/1.99 m | 10:33 p.m. GALT/0.22 m |

| Flight Number | Date | Take Off | Touchdown | Tide |
|---|---|---|---|---|
| Flight 1 | 9 August 2017 | 1:23:44 p.m. GALT | 1:29:40 p.m. GALT | Rising |
| Flight 2 | 9 August 2017 | 1:59:34 p.m. GALT | 2:07:28 p.m. GALT | Rising |
| Flight 3 | 9 August 2017 | 3:03:46 p.m. GALT | 3:09:30 p.m. GALT | Rising |
| Flight 4 | 9 August 2017 | 3:36:10 p.m. GALT | 3:42:42 p.m. GALT | Rising/High |
| Flight 5 | 10 August 2017 | 9:39:32 a.m. GALT | 9:44:44 a.m. GALT | Falling |
| Flight 6 | 10 August 2017 | 10:32:00 a.m. GALT | 10:39:12 a.m. GALT | Low/Rising |
| Flight 7 | 10 August 2017 | 10:50:46 a.m. GALT | 10:59:30 a.m. GALT | Low/Rising |
| Flight 8 | 10 August 2017 | 11:43:20 a.m. GALT | 11:49:44 a.m. GALT | Low/Rising |
| Flight 9 | 10 August 2017 | 11:58:02 a.m. GALT | 12:03:28 p.m. GALT | Low/Rising |
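The Tide column of the flight table can be checked against the tide table: a take-off after a low tide and before the next high tide falls on a rising tide, and vice versa. The sketch below uses only the tabulated times; the function name is hypothetical, and the "Low" and "High" qualifiers in the table (proximity to an extreme) are not modelled.

```python
from datetime import datetime

# Tide extremes from the tide table (local time, GALT), as (time, kind).
TIDES = [
    (datetime(2017, 8, 9, 3, 37), "high"),
    (datetime(2017, 8, 9, 9, 43), "low"),
    (datetime(2017, 8, 9, 15, 46), "high"),
    (datetime(2017, 8, 9, 21, 57), "low"),
    (datetime(2017, 8, 10, 4, 11), "high"),
    (datetime(2017, 8, 10, 10, 20), "low"),
    (datetime(2017, 8, 10, 16, 23), "high"),
    (datetime(2017, 8, 10, 22, 33), "low"),
]


def tide_phase(t: datetime) -> str:
    """Return 'rising' or 'falling' by bracketing t between two tide extremes."""
    for (t0, kind0), (t1, _) in zip(TIDES, TIDES[1:]):
        if t0 <= t < t1:
            # After a low tide the water rises; after a high tide it falls.
            return "rising" if kind0 == "low" else "falling"
    raise ValueError("time outside the tabulated tide window")


if __name__ == "__main__":
    takeoffs = {
        "Flight 1": datetime(2017, 8, 9, 13, 23, 44),
        "Flight 4": datetime(2017, 8, 9, 15, 36, 10),
        "Flight 5": datetime(2017, 8, 10, 9, 39, 32),
        "Flight 6": datetime(2017, 8, 10, 10, 32, 0),
    }
    for name, t in takeoffs.items():
        print(name, tide_phase(t))
```

Flight 5 is the only one that starts between a high tide (4:11 a.m.) and the following low tide (10:20 a.m.), which is why it is the only falling-tide flight.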

| Flight Plan Parameter | Value |
|---|---|
| Altitude | 120 m |
| Ground Sampling Distance | 2.86 cm |
| Ground Width | 140.4 m |
| Ground Length | 96.6 m |
| Distance between Flight Lines | 55 m |
| Overlap between Flight Lines | 33 m, 60% |
| Distance between Image-Centers | 10 m |
| Overlap within Flight Lines | 8 m, 80% |
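The ground width and ground sampling distance (GSD) follow from the pinhole camera model: footprint = altitude × sensor dimension / focal length, and GSD = footprint / number of pixels along that dimension. The sketch below assumes that the 20 mm lens value in the camera table is the focal length and that the 16 MP image is 4912 px wide; the pixel count is not given in the source.

```python
ALTITUDE_M = 120.0       # flight altitude from the flight-plan table
FOCAL_LENGTH_MM = 20.0   # 20 mm lens value from the camera table
SENSOR_WIDTH_MM = 23.4   # long side of the 15.6 mm x 23.4 mm sensor
IMAGE_WIDTH_PX = 4912    # ASSUMPTION: long side of a 16 MP, 3:2 image


def ground_extent_m(sensor_mm: float) -> float:
    """Ground distance covered by one sensor side (nadir view, flat terrain)."""
    return ALTITUDE_M * sensor_mm / FOCAL_LENGTH_MM


def ground_sampling_distance_cm() -> float:
    """Ground footprint of one pixel along the image width, in centimetres."""
    return ground_extent_m(SENSOR_WIDTH_MM) / IMAGE_WIDTH_PX * 100.0


if __name__ == "__main__":
    print(f"ground width: {ground_extent_m(SENSOR_WIDTH_MM):.1f} m")
    print(f"GSD: {ground_sampling_distance_cm():.2f} cm")
```

Under these assumptions, the sketch reproduces the tabulated 140.4 m ground width and the 2.86 cm GSD.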

| Label | x Error (m) | y Error (m) | z Error (m) | Total Error (m) |
|---|---|---|---|---|
| gcp31 | −0.0297 | 0.0333 | 0.0111 | 0.0460 |
| gcp33 | 0.0093 | 0.0028 | −0.0011 | 0.0098 |
| gcp34 | 0.0030 | 0.0043 | −0.0030 | 0.0060 |
| gcp35 | −0.0128 | 0.0035 | −0.0011 | 0.0133 |
| gcp39 | −0.0005 | −0.0013 | −0.0027 | 0.0031 |
| gcp40 | −0.0296 | 0.0116 | 0.0029 | 0.0320 |
| gcp41 | −0.0065 | 0.0029 | 0.0012 | 0.0072 |
| gcp42 | −0.0038 | −0.0074 | 0.0054 | 0.0099 |
| gcp43 | −0.0099 | 0.0034 | −0.0067 | 0.0125 |
| gcp45 | −0.0139 | 0.0183 | 0.0082 | 0.0244 |
| gcp46 | 0.0204 | −0.0117 | −0.0017 | 0.0236 |
| gcp50 | 0.0294 | −0.0412 | −0.0049 | 0.0509 |
| gcp51 | 0.0089 | 0.0003 | 0.0063 | 0.0109 |
| gcp52 | −0.0102 | −0.0017 | 0.0030 | 0.0108 |
| gcp53 | 0.0064 | −0.0049 | −0.0076 | 0.0110 |
| x1 | 0.0258 | −0.0275 | 0.0147 | 0.0405 |
| x2 | −0.0123 | −0.0188 | −0.0101 | 0.0247 |
| x3 | 0.0069 | 0.0216 | −0.0095 | 0.0246 |
| x4 | 0.0210 | −0.0119 | −0.0230 | 0.0334 |
| x5 | −0.0282 | 0.0013 | −0.0025 | 0.0284 |
| x6 | −0.0009 | −0.0176 | 0.0038 | 0.0180 |
| x7 | 0.0005 | −0.0009 | −0.0004 | 0.0011 |
| x8 | −0.0036 | 0.0090 | −0.0083 | 0.0127 |
| x9 | −0.0144 | 0.0582 | 0.0164 | 0.0622 |
| x11 | 0.0450 | −0.0116 | 0.0009 | 0.0465 |
| x101 | −0.0139 | −0.0235 | −0.0022 | 0.0274 |
| x102 | −0.0218 | 0.0342 | −0.0019 | 0.0406 |
| x103 | −0.0046 | −0.0048 | 0.0006 | 0.0067 |
| x104 | 0.0047 | 0.0038 | −0.0014 | 0.0061 |
| x105 | 0.0009 | −0.0021 | 0.0021 | 0.0031 |
| x106 | 0.0054 | −0.0123 | −0.0012 | 0.0135 |
| Total | 0.0170 | 0.0189 | 0.0075 | 0.0265 |
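The Total Error column is the Euclidean norm of the three component errors, and the Total row is the root mean square (RMS) over the 31 points listed (x10 appears in the coordinate table but has no error row). The sketch below reproduces both from the tabulated values. The sign of gcp35's x error is garbled in the source; it does not matter here because every error is squared.

```python
import math

# (label, x error, y error, z error) in metres, copied from the error table.
# gcp35's x error is entered as -0.0128; its sign does not affect the results.
ERRORS = [
    ("gcp31", -0.0297, 0.0333, 0.0111),
    ("gcp33", 0.0093, 0.0028, -0.0011),
    ("gcp34", 0.0030, 0.0043, -0.0030),
    ("gcp35", -0.0128, 0.0035, -0.0011),
    ("gcp39", -0.0005, -0.0013, -0.0027),
    ("gcp40", -0.0296, 0.0116, 0.0029),
    ("gcp41", -0.0065, 0.0029, 0.0012),
    ("gcp42", -0.0038, -0.0074, 0.0054),
    ("gcp43", -0.0099, 0.0034, -0.0067),
    ("gcp45", -0.0139, 0.0183, 0.0082),
    ("gcp46", 0.0204, -0.0117, -0.0017),
    ("gcp50", 0.0294, -0.0412, -0.0049),
    ("gcp51", 0.0089, 0.0003, 0.0063),
    ("gcp52", -0.0102, -0.0017, 0.0030),
    ("gcp53", 0.0064, -0.0049, -0.0076),
    ("x1", 0.0258, -0.0275, 0.0147),
    ("x2", -0.0123, -0.0188, -0.0101),
    ("x3", 0.0069, 0.0216, -0.0095),
    ("x4", 0.0210, -0.0119, -0.0230),
    ("x5", -0.0282, 0.0013, -0.0025),
    ("x6", -0.0009, -0.0176, 0.0038),
    ("x7", 0.0005, -0.0009, -0.0004),
    ("x8", -0.0036, 0.0090, -0.0083),
    ("x9", -0.0144, 0.0582, 0.0164),
    ("x11", 0.0450, -0.0116, 0.0009),
    ("x101", -0.0139, -0.0235, -0.0022),
    ("x102", -0.0218, 0.0342, -0.0019),
    ("x103", -0.0046, -0.0048, 0.0006),
    ("x104", 0.0047, 0.0038, -0.0014),
    ("x105", 0.0009, -0.0021, 0.0021),
    ("x106", 0.0054, -0.0123, -0.0012),
]


def total_error(dx: float, dy: float, dz: float) -> float:
    """3D error of a single point: Euclidean norm of its x, y, z errors."""
    return math.sqrt(dx * dx + dy * dy + dz * dz)


def rms(values) -> float:
    """Root mean square of an iterable of errors."""
    values = list(values)
    return math.sqrt(sum(v * v for v in values) / len(values))


rms_x = rms(e[1] for e in ERRORS)
rms_y = rms(e[2] for e in ERRORS)
rms_z = rms(e[3] for e in ERRORS)
# RMS of the per-point 3D errors, equal to sqrt(rms_x**2 + rms_y**2 + rms_z**2)
rms_total = rms(total_error(*e[1:]) for e in ERRORS)

if __name__ == "__main__":
    for label, dx, dy, dz in ERRORS[:3]:
        print(f"{label}: total error {total_error(dx, dy, dz):.4f} m")
    print(f"RMS x {rms_x:.4f}, y {rms_y:.4f}, z {rms_z:.4f}, total {rms_total:.4f} m")
```

The computed RMS values are about 0.0170, 0.0189 and 0.0075 m for x, y and z, with 0.0265 m in total, which matches the Total row.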

| Processing Step / Parameter | Setting |
|---|---|
| Align Photos | |
| Accuracy | Medium |
| Pair selection | Disabled |
| Keypoint limit | 0 (no limit) |
| Tiepoint limit | 10,000 |
| Constrain features by mask | Yes |
| Build dense cloud | |
| Quality | Medium |
| Depth filtering | Mild (retains fine detail) |
| Build mesh | |
| Surface type | Arbitrary |
| Source data | Dense cloud |
| Polygon count | Medium for subarea; low for full area |
| Interpolation | Enabled |
| Point classes | All |
| Add texture | |
| Mapping mode | Generic |
| Texture size | 20,000 × 20,000 px |
| Create orthophoto | |
| Pixel size | 2.79 cm × 2.79 cm |

| Segment Mean Shift Parameter | Value |
|---|---|
| Spectral Detail | 18 |
| Spatial Detail | 18 |
| Minimum Segment Size in Pixels | 3 |

| General Parameters for ARGA | Value |
|---|---|
| Minimum number of pixels | 60 |
| Maximum number of pixels | 400 |
| Class 1: sand | |
| Maximum spectral distance | 10 |
| Number of ROIs with ARGA | 10 |
| Number of ROIs with MDP | 0 |
| Class 2: vegetation | |
| Maximum spectral distance | 20 (to capture the variable reflectance of vegetation) |
| Number of ROIs with ARGA | 10 |
| Number of ROIs with MDP | 0 |
| Class 3: rock | |
| Maximum spectral distance | 10 |
| Number of ROIs with ARGA | 10 |
| Number of ROIs with MDP | 5 |
| Class 4: water | |
| Maximum spectral distance | 20 (to capture rough, wave-affected water) |
| Number of ROIs with ARGA | 10 |
| Number of ROIs with MDP | 5 |

| General Parameters | Value |
|---|---|
| Maximum spectral distance | 10 |
| Minimum number of pixels | 60 |
| Maximum number of pixels | 400 |
| Number of ROIs | 40 |
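The ARGA settings (maximum spectral distance, minimum and maximum number of pixels per ROI) describe a seeded region-growing step for building training ROIs. The NumPy sketch below is a generic region grower and not the implementation used in the study. It assumes that spectral distance is the Euclidean distance between a neighbour's band values and the seed pixel's, that growth uses 4-connectivity, and that regions below the minimum size are discarded. The function names are hypothetical.

```python
from collections import deque

import numpy as np


def grow_region(image, seed, max_distance, max_pixels):
    """Grow a region from `seed` (row, col) over 4-connected neighbours.

    image: array of shape (rows, cols, bands). A neighbour joins the region
    when the Euclidean distance between its band values and the seed's is
    <= max_distance. Growth stops once the region holds max_pixels pixels.
    Returns a boolean mask of the region.
    """
    rows, cols, _ = image.shape
    seed_value = image[seed].astype(float)
    mask = np.zeros((rows, cols), dtype=bool)
    mask[seed] = True
    queue = deque([seed])
    count = 1
    while queue and count < max_pixels:
        r, c = queue.popleft()
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and not mask[nr, nc]:
                distance = np.linalg.norm(image[nr, nc].astype(float) - seed_value)
                if distance <= max_distance:
                    mask[nr, nc] = True
                    queue.append((nr, nc))
                    count += 1
                    if count >= max_pixels:
                        break
    return mask


def make_roi(image, seed, max_distance=10, min_pixels=60, max_pixels=400):
    """Return the grown mask, or None if it has fewer than min_pixels pixels."""
    mask = grow_region(image, seed, max_distance, max_pixels)
    return mask if mask.sum() >= min_pixels else None
```

The defaults mirror the general parameters in the table (10, 60 and 400). A larger `max_distance`, as used for vegetation and water, lets a region absorb more spectrally diverse pixels before it stops growing.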

| Processing Step | Processing Time |
|---|---|
| Align Photos | |
| All | 19 h 36 min |
| Build Dense Cloud | |
| All | 52 min |
| Build Mesh | |
| Subarea | 12 h 35 min |
| Full study area | 7 h 35 min |
| Eastern part | 4 h 43 min |
| Western part | 3 h 50 min |
| Add Texture | |
| Subarea | 15 min |
| Full study area | 9 min |
| Eastern part | 23 min |
| Western part | 10 min |

| Class | Number of Pixels | Percentage (%) | Area (m²) |
|---|---|---|---|
| Sand | 21,712,072 | 25.63 | 16,895 |
| Vegetation | 12,603,259 | 14.87 | 9807 |
| Rock | 26,339,652 | 31.09 | 20,496 |
| Water | 24,073,987 | 28.41 | 18,733 |
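The Percentage and Area columns follow from the pixel counts. The percentage is the class pixels divided by all classified pixels, and the area is the pixel count multiplied by the area of one pixel. The sketch below uses the 2.79 cm orthophoto pixel size from the processing table.

```python
PIXEL_SIZE_M = 0.0279  # orthophoto pixel size (2.79 cm x 2.79 cm)

# Pixel counts per class, copied from the classification summary table.
PIXELS = {
    "Sand": 21_712_072,
    "Vegetation": 12_603_259,
    "Rock": 26_339_652,
    "Water": 24_073_987,
}

total_pixels = sum(PIXELS.values())
pixel_area_m2 = PIXEL_SIZE_M ** 2

# class -> (percentage of classified pixels, area in square metres)
summary = {
    name: (100.0 * count / total_pixels, count * pixel_area_m2)
    for name, count in PIXELS.items()
}

if __name__ == "__main__":
    for name, (pct, area) in summary.items():
        print(f"{name:<10} {pct:6.2f} %  {area:9.0f} m2")
```

The percentages match the table exactly. The computed areas are about 0.04% larger than the tabulated ones, which is consistent with the pixel size having been rounded to 2.79 cm.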


| Classified (rows) / Reference (columns) | Sand | Vegetation | Rock | Water | Total |
|---|---|---|---|---|---|
| Sand | 34,307 | 130 | 7628 | 92 | 42,157 |
| Vegetation | 0 | 8058 | 118 | 0 | 8176 |
| Rock | 0 | 0 | 65,790 | 7514 | 73,304 |
| Water | 0 | 0 | 36,370 | 67,146 | 103,516 |
| Total | 34,307 | 8188 | 109,906 | 74,752 | 227,153 |

| Class | Producer’s Accuracy (%) | User’s Accuracy (%) | Kappa Hat |
|---|---|---|---|
| Sand | 100.00 | 81.38 | 0.78 |
| Vegetation | 98.41 | 98.56 | 0.99 |
| Rock | 59.86 | 89.75 | 0.80 |
| Water | 89.83 | 64.87 | 0.48 |
| Overall accuracy (%) | 77.17 | | |
| Kappa hat | 0.66 | | |
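The accuracy figures follow from the confusion matrix in the standard way. Dividing the diagonal by the column totals reproduces the producer's accuracies, which indicates that rows hold the classification and columns the reference data. In the NumPy sketch below, the per-class kappa hat is computed as the conditional kappa for the classified (row) categories. This is an assumption, chosen because it reproduces the tabulated values.

```python
import numpy as np

CLASSES = ["Sand", "Vegetation", "Rock", "Water"]

# Confusion matrix from the validation table (totals omitted).
# Rows: classified pixels; columns: reference pixels.
CM = np.array(
    [
        [34_307, 130, 7_628, 92],
        [0, 8_058, 118, 0],
        [0, 0, 65_790, 7_514],
        [0, 0, 36_370, 67_146],
    ],
    dtype=float,
)

n = CM.sum()
diag = np.diag(CM)
row_totals = CM.sum(axis=1)  # classified totals per class
col_totals = CM.sum(axis=0)  # reference totals per class

producers = 100.0 * diag / col_totals  # correct / reference (omission view)
users = 100.0 * diag / row_totals      # correct / classified (commission view)
overall = 100.0 * diag.sum() / n

# Cohen's kappa: observed agreement corrected for chance agreement.
p_observed = diag.sum() / n
p_chance = (row_totals * col_totals).sum() / n**2
kappa = (p_observed - p_chance) / (1.0 - p_chance)

# Conditional kappa per class, computed on the classified (row) categories.
products = row_totals * col_totals
cond_kappa = (n * diag - products) / (n * row_totals - products)

if __name__ == "__main__":
    for name, pa, ua, k in zip(CLASSES, producers, users, cond_kappa):
        print(f"{name:<10} PA {pa:6.2f}  UA {ua:6.2f}  kappa {k:.2f}")
    print(f"overall accuracy {overall:.2f} %, kappa {kappa:.2f}")
```

The sketch reproduces the overall accuracy of 77.17%, the overall kappa hat of 0.66 and every per-class value in the table to two decimals.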

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


De Cock, A.; Vandeputte, R.; Bruneel, S.; De Cock, L.; Liu, X.; Bermúdez, R.; Vanhaeren, N.; De Wit, B.; Ochoa, D.; De Maeyer, P.; et al. Construction of an Orthophoto-Draped 3D Model and Classification of Intertidal Habitats Using UAV Imagery in the Galapagos Archipelago. Drones 2023, 7, 416. https://doi.org/10.3390/drones7070416

De Cock A, Vandeputte R, Bruneel S, De Cock L, Liu X, Bermúdez R, Vanhaeren N, De Wit B, Ochoa D, De Maeyer P, et al. Construction of an Orthophoto-Draped 3D Model and Classification of Intertidal Habitats Using UAV Imagery in the Galapagos Archipelago. Drones. 2023; 7(7):416. https://doi.org/10.3390/drones7070416

Chicago/Turabian Style: De Cock, Andrée, Ruth Vandeputte, Stijn Bruneel, Laure De Cock, Xingzhen Liu, Rafael Bermúdez, Nina Vanhaeren, Bart De Wit, Daniel Ochoa, Philippe De Maeyer, et al. 2023. "Construction of an Orthophoto-Draped 3D Model and Classification of Intertidal Habitats Using UAV Imagery in the Galapagos Archipelago" Drones 7, no. 7: 416. https://doi.org/10.3390/drones7070416

APA Style: De Cock, A., Vandeputte, R., Bruneel, S., De Cock, L., Liu, X., Bermúdez, R., Vanhaeren, N., De Wit, B., Ochoa, D., De Maeyer, P., Gautama, S., & Goethals, P. L. M. (2023). Construction of an Orthophoto-Draped 3D Model and Classification of Intertidal Habitats Using UAV Imagery in the Galapagos Archipelago. Drones, 7(7), 416. https://doi.org/10.3390/drones7070416