Abstract

Autonomous landing is one of the key technologies for unmanned aerial vehicles (UAVs) and can improve task flexibility in various fields. In this paper, a vision-based autonomous landing strategy is proposed for a quadrotor micro-UAV based on a novel camera view angle conversion method, fast landing marker detection, and an autonomous guidance approach. The view of the micro-UAV's front-facing camera is first redirected by a new strategy to obtain a top-down view. In this way, the landing marker can be captured by the onboard camera of the micro-UAV and then detected by the YOLOv5 algorithm in real time. The central coordinates of the landing marker are estimated and used to generate the guidance commands for the flight controller. These guidance commands are then sent by the ground station to perform the landing task of the UAV. Finally, flight experiments using a DJI Tello UAV are conducted both outdoors and indoors. The original UAV platform is modified using the proposed camera view angle conversion strategy so that a top-down view can be achieved for performing the landing mission. The experimental results show that the proposed landing marker detection algorithm and landing guidance strategy can complete the autonomous landing task of the micro-UAV efficiently.

1. Introduction

Micro unmanned aerial vehicles (UAVs) are small, low-cost, and highly flexible. The quadrotor micro-UAV is one such type, and its capability for vertical take-off and landing further improves its maneuverability [1]. In both military and civilian fields, micro-UAVs play an important role in applications such as military reconnaissance, geographic mapping, aerial videography, fire monitoring, agricultural plant protection, collective rescue, and power inspection [2,3,4]. For the quadrotor micro-UAV, landing is one of the key technologies for improving the autonomous mission execution capability of the UAV. During the landing phase, capturing the landing position independently without human intervention and determining an autonomous landing strategy are challenging problems for achieving accurate fixed-point landing of the quadrotor micro-UAV. Hence, intelligent perception and an autonomous guidance strategy are required.

Generally, it is difficult for a traditional GPS-based guidance strategy to meet the demand for high-precision fixed-point landing of the UAV, since GPS signals usually have large positioning errors and are susceptible to interference in constrained environments [5]. In recent years, with the development of computer vision and image processing technology, vision-based guidance has been developed for the autonomous landing of UAVs. Increasing attention has been devoted to vision-based guidance owing to its many outstanding characteristics, such as independence from external infrastructure, strong anti-interference ability, high accuracy, and low cost. Vision-based autonomous landing methods usually consider two aspects: landing marker detection and the landing guidance strategy.

With respect to landing marker detection, edge detection algorithms and deep-learning detection algorithms are widely used. In [6,7], the Sobel and Canny edge detection algorithms are used to detect landing markers. Good edge extraction results can be achieved since the landing marker is a cooperative target that is commonly composed of shapes such as "H", "T", rectangles, circles, and triangles [8,9]. However, when the key edge features of the landing marker are lost, traditional edge detection cannot make full use of the rich image information, which reduces detection accuracy. To overcome this problem, deep-learning methods are used for landing marker detection. They fall mainly into two categories: two-stage algorithms and one-stage algorithms. The fast region-based convolutional network is representative of two-stage algorithms, in which object detection is divided into two parts: a dedicated module first generates region proposals, finds foreground regions, and adjusts the bounding boxes, after which the proposals are classified. Regarding one-stage algorithms, the Single Shot MultiBox Detector (SSD) and You Only Look Once (YOLO) algorithms are commonly used; they directly classify objects and regress the bounding boxes from anchors. Generally, two-stage algorithms achieve high detection accuracy but can be slow, whereas one-stage algorithms usually have lower accuracy but higher detection speed, which is an advantage for missions that require real-time detection. In [10], a guidance sign detection method named LightDenseYOLO is proposed; compared with the traditional Hough transform, both speed and accuracy are effectively improved. In [11], the YOLO algorithm is modified to improve the recognition and positioning accuracy of the ArUco marker. In [12], a QR code is used as the landing marker and a convolutional neural network is used to identify it; in the experiments, the QR code is identified and its location information is accurately obtained. In [13], colors are added to the landing marker, and a detection algorithm based on color information is proposed; the landing marker detection is demonstrated on a large UAV platform.

As mentioned above, good results have been achieved for vision-based UAV landing in simulation environments or on large UAV platforms, but challenges remain in deploying deep-learning methods on micro-UAVs in real applications. Considering the limited payload and computational capability of the micro-UAV, a balance must be struck between the accuracy and speed of the marker detection algorithm.

With respect to the autonomous guidance strategy of the UAV, many studies have been conducted. In [14], an adaptive autonomous UAV landing strategy is proposed and landing experiments are carried out in the Gazebo simulation environment. In [15], a guidance strategy based on the fusion of visual and inertial information is proposed for UAV landing on a ship; the relative motion information between the UAV and the landing position is sent to the control system to compute the control commands that guide the UAV to land. In [16], a low-complexity position-based visual servoing (PBVS) controller is designed for the vision-based autonomous landing of a quadcopter UAV, and the feasibility of the method is demonstrated by numerical simulations and experiments. In [17], image-based visual servoing (IBVS) is used for the autonomous landing of the UAV; to improve the accuracy of speed estimation, a Kalman filter is used to fuse GPS data and image data. In simulation and flight experiments, the UAV completed tracking and landing on a moving vehicle with an average error of 0.2 m. In [18], a fuzzy control method is proposed to control the vertical, longitudinal, lateral, and directional velocities of the UAV, and the attitude of the UAV is estimated by the vision detection algorithm. In [19], an autonomous landing method for a micro-UAV based on a monocular camera is proposed, in which a closed-loop PID controller is used to accomplish the tracking and landing of the UAV. As environments and missions for autonomous UAV landing become more complex, landing on moving and tilted targets has received extensive attention. In [20], an autonomous landing method based on visual navigation is proposed for landing a UAV on a moving unmanned surface vehicle (USV). The landing process is divided into horizontal tracking and vertical landing, and a PID controller is used to design the UAV autonomous landing guidance law. In [21], an autonomous landing method is proposed for the UAV–USV system: the landing marker is recognized first, and the attitude angle of the USV is then estimated for the design of the landing strategy. This landing strategy considers the synchronous motion of the UAV and USV and can improve the landing accuracy under wave action. In [22], an autonomous landing method is proposed for landing the UAV on a moving vehicle, where the velocity commands are computed directly in image space. In [23], a discrete-time nonlinear model predictive controller is designed to optimize the trajectory and time horizon so as to land the UAV on a tilted platform. In visual servoing, both PBVS and IBVS require depth information for coordinate transformation. However, micro-UAVs usually carry a monocular camera rather than a depth camera because of their limited size. As a result, depth information is difficult to obtain, and the above methods are not well suited to micro-UAVs. In addition, some guidance strategies are difficult to deploy on the micro-UAV because of its limited computational capability. Different guidance strategies are usually adopted for different application scenarios and experimental platforms [24]. It is therefore necessary to find an autonomous and easily deployed guidance solution for micro-UAV landing that accounts for the limited onboard sensing and computational resources.

In this paper, a vision-based autonomous landing guidance strategy is proposed for a quadrotor micro-UAV based on camera view angle conversion and a fast landing marker detection approach. The main contributions of the proposed strategy are as follows:

- (1) A vision-based guidance strategy is proposed. This strategy requires only pixel-level coordinates, without depth information from the image, to guide the micro-UAV to land autonomously. The designed autonomous landing strategy has low complexity and is suitable for deployment on the micro-UAV;

- (2) In the landing guidance design, the pixel area obtained from the YOLOv5 detection result, instead of the exact depth distance, is used to guide the micro-UAV toward the landing site, so that complex depth estimation is avoided;

- (3) The view angle of the onboard front-view camera is changed by a lens. In this way, the onboard camera obtains a top-down view in which the landing marker can be captured. This makes autonomous landing possible for a micro-UAV that has only a front-view camera.

The rest of the paper is organized as follows. Section 2 introduces the architecture of the autonomous landing guidance system. Section 3 introduces the landing marker detection and coordinate estimation methods. Section 4 introduces the view angle conversion achieved by modifying the DJI Tello UAV and the design of the guidance strategy. Section 5 analyzes the results of the autonomous landing experiments. Section 6 presents the conclusions.

2. The Framework of the Proposed Vision-Based Autonomous Landing Strategy

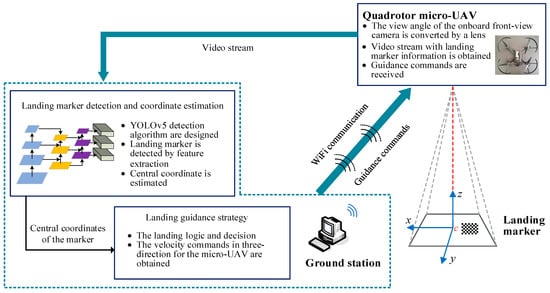

The proposed UAV autonomous landing guidance system mainly includes a quadrotor micro-UAV platform, the landing marker, and the ground station. The structure diagram of the micro-UAV autonomous landing system is shown in Figure 1.

Figure 1.

Structure diagram of the proposed autonomous landing guidance strategy for the micro-UAV.

The quadrotor micro-UAV platform is a DJI Tello UAV, which has an onboard front-view camera. To capture images of the landing marker with the onboard camera, a lens is added to the UAV to change the view angle of the original camera, so that a downward view containing the landing marker can be obtained. The landing marker serves as a sign that guides the micro-UAV to the designated landing site. Once the landing marker is in view, the video stream containing it is sent to the ground station over a WiFi link between the micro-UAV and the ground-station computer. The ground station is responsible for image processing and for landing marker detection with the YOLOv5 algorithm. Meanwhile, the central coordinates of the landing marker are estimated from the detected object. The coordinates are then sent to the guidance-strategy module, which generates guidance commands consisting of velocities in three directions according to the designed guidance law. Finally, the guidance commands are sent to the micro-UAV to guide it gradually toward the center of the landing marker based on the proposed landing guidance logic. The detailed detection method and guidance strategy are presented in the following sections.

3. Landing Marker Detection and Coordinate Estimation

In this section, the landing marker dataset is first introduced, followed by the design of the marker detection algorithm. Meanwhile, the coordinate estimation method is introduced for the landing guidance strategy.

3.1. Landing Marker Dataset

An effective landing marker should be easy to identify in order to ensure landing accuracy. In this paper, a checkerboard calibration board composed of black and white squares is used as the landing marker, as shown in Figure 2. Even if the camera view is constrained when the micro-UAV approaches the landing marker, or the image is incomplete because the landing marker is partly occluded by other objects, the landing marker can still be captured and accurately detected through its internal features. For checkerboard marker detection, traditional edge detection uses the gray level and color of the image to extract object edges, but it is difficult to obtain effective detection results when key feature points are missing. Therefore, a deep convolutional neural network is preferred for stable and accurate marker detection, given the marker occlusion problem, lighting changes, and the variation in marker size as the micro-UAV gradually approaches the landing site. In this paper, the YOLOv5 algorithm is used to detect the landing marker in real time; it was selected after comparison with several object detection algorithms.

Figure 2.

Images of several scenarios included in the dataset used for network training.

For network model training, the landing marker dataset is one of the key factors in ensuring detection efficiency and accuracy. In real applications, the micro-UAV may encounter various complex environments during flight. Hence, images of the landing marker in various scenarios are collected to build the dataset for neural-network training. The considered scenarios include lighting variation, occlusion, the landing marker at the edge of the camera frame, small targets, and other factors, as shown in Figure 2. The resulting landing marker dataset contains 1500 images in total.

3.2. Landing Marker Detection and Coordinate Estimation by YOLOv5 Algorithm

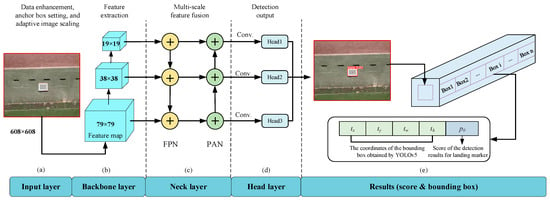

The network structure of the YOLOv5 algorithm used in this paper is shown in Figure 3. It consists of four parts: the input layer, the backbone layer, the neck layer, and the head layer.

Figure 3.

The landing marker detection diagram based on the YOLOv5 network. (a) Input layer. (b) Backbone layer. (c) Neck layer. (d) Head layer. (e) The coordinates of the bounding box obtained by YOLOv5 and the score of the detection results for landing marker.

In the input layer, data augmentation, adaptive anchor box calculation, and adaptive image scaling are performed. Mosaic data augmentation is adopted for image preprocessing and is especially effective for small objects; this is one of the reasons why YOLOv5 is selected in this paper. Adaptive anchor box calculation sets the initial anchor box sizes, which are used for bounding box regression in the prediction phase. Adaptive image scaling normalizes the image size by converting each input image to the same size before it is sent to the network. Figure 3b shows the YOLOv5 backbone layer, which extracts features from the input images through downsampling. The neck layer is mainly used for multiscale fusion of the features extracted by the backbone, as shown in Figure 3c. In YOLOv5, a feature pyramid network (FPN) plus path aggregation network (PAN) structure is used. The FPN performs multiscale context feature fusion on the two effective features obtained from the backbone feature extraction layer to improve the feature expression ability of the network. At the same time, YOLOv5 uses the cross-stage partial 2 (CSP2) structure designed in CSPNet to replace the original CSP structure, strengthening feature fusion. The detection results are predicted in the head layer, as shown in Figure 3d. The head layer mainly includes the generalized intersection over union (GIoU) loss function of the bounding box and nonmaximum suppression (NMS). The GIoU loss function is used for bounding box regression so that the bounding box is located more accurately. NMS removes redundant detection results and retains the bounding box with the highest confidence for output.
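
To make the two head-layer operations concrete, the following is a minimal pure-Python sketch of the GIoU loss and greedy NMS as they are generally defined. It is not the YOLOv5 implementation, which works on batched tensors; the `(x1, y1, x2, y2)` box layout, the function names, and the `iou_thr=0.45` threshold are assumptions chosen for illustration.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed x2 > x1 and y2 > y1


def iou_and_giou(a: Box, b: Box) -> Tuple[float, float]:
    """Return (IoU, GIoU) for two axis-aligned boxes with positive area."""
    # Intersection rectangle (clamped to zero when the boxes do not overlap)
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    iou = inter / union
    # Smallest box C enclosing both boxes
    cx1, cy1 = min(a[0], b[0]), min(a[1], b[1])
    cx2, cy2 = max(a[2], b[2]), max(a[3], b[3])
    area_c = (cx2 - cx1) * (cy2 - cy1)
    # GIoU penalizes the part of C not covered by the union
    giou = iou - (area_c - union) / area_c
    return iou, giou


def giou_loss(pred: Box, target: Box) -> float:
    """Bounding-box regression loss: 1 - GIoU (0 for a perfect match)."""
    return 1.0 - iou_and_giou(pred, target)[1]


def nms(boxes: List[Box], scores: List[float], iou_thr: float = 0.45) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it above iou_thr."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou_and_giou(boxes[best], boxes[i])[0] <= iou_thr]
    return keep
```

For example, with `boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]` and `scores = [0.9, 0.8, 0.7]`, the second box overlaps the first with IoU 0.81 and is suppressed, so `nms(boxes, scores)` returns `[0, 2]`. For two disjoint unit squares one unit apart, IoU is 0 while GIoU is −1/3, so GIoU still distinguishes how far apart non-overlapping boxes are.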
Figure 3e shows the five parameters of the YOLOv5 detection output that are used by the subsequent guidance strategy: the central coordinates (tx, ty) of the predicted bounding box, the width and height (tw, th) of the bounding box, and the confidence p0 of the detection result. The predicted central coordinates (tx, ty) are offsets relative to the upper-left corner of the grid cell. The central coordinates (bx, by) of the bounding box can then be calculated by

$$b_x = \sigma(t_x) + c_x \tag{1}$$

$$b_y = \sigma(t_y) + c_y \tag{2}$$

where (cx, cy) are the coordinates of the upper-left corner of the grid cell, σ(·) is the sigmoid function, and (tx, ty) are the predicted offsets.
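
Equations (1) and (2) can be sketched in Python as follows. The function names and the optional `stride` parameter are ours: with `stride=1.0` the result is in grid-cell units, and multiplying by the grid stride (an assumption about how the result is later scaled) gives pixel coordinates. Note that the released Ultralytics YOLOv5 code decodes the center with a scaled variant, (2σ(t) − 0.5) + c, so the exact decoding depends on the version used.

```python
import math
from typing import Tuple


def sigmoid(z: float) -> float:
    """Logistic sigmoid, squashing the raw offset into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def decode_center(
    tx: float, ty: float, cx: int, cy: int, stride: float = 1.0
) -> Tuple[float, float]:
    """Equations (1)-(2): b = sigma(t) + c, optionally scaled by a grid stride.

    Because sigma(t) lies in (0, 1), the decoded center always stays inside
    the grid cell whose upper-left corner is (cx, cy).
    """
    bx = sigmoid(tx) + cx
    by = sigmoid(ty) + cy
    return bx * stride, by * stride
```

For instance, zero offsets in cell (3, 5) decode to the cell center (3.5, 5.5), or (112.0, 176.0) pixels with a hypothetical stride of 32.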

Based on the central coordinates of the bounding box, the central-pixel coordinate (x, y) of the landing marker in the image can be obtained by

where (w, h) is the image size.
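
Equations (3) and (4) are not reproduced in this text. Under the assumption that the bounding box is expressed in coordinates normalized to [0, 1] by the image size (w, h), as in the normalized YOLO label format, the central-pixel coordinates and the pixel area S used later by the guidance law could be computed as sketched below; the function name and the normalized-input assumption are ours.

```python
from typing import Tuple


def marker_pixel_center_and_area(
    bx_n: float, by_n: float, bw_n: float, bh_n: float, w: int, h: int
) -> Tuple[float, float, float]:
    """Map a normalized box (center x/y, width, height in [0, 1]) to pixel units.

    Returns (x, y, S): the marker center in pixels and the box area in square pixels.
    Assumes normalization by the image width w and height h.
    """
    x = bx_n * w
    y = by_n * h
    area = (bw_n * w) * (bh_n * h)
    return x, y, area
```

For a 1280 × 720 frame, a box centered in the image with normalized width 0.1 and height 0.2 gives the center (640.0, 360.0) and an area of 128 × 144 = 18,432 square pixels.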

Both detection accuracy and detection speed should be considered when selecting the network. Precision (P) is the proportion of correctly detected landing markers among all detected landing markers. Recall (R) is the proportion of correctly detected landing markers among all landing markers in the dataset. These indices are defined in Equations (5) and (6):

$$P = \frac{TP}{TP + FP} \tag{5}$$

$$R = \frac{TP}{TP + FN} \tag{6}$$

where TP is the number of true positive samples, FP is the number of false positive samples, and FN is the number of false negative samples.
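
A direct transcription of Equations (5) and (6) is shown below as a small sketch; the function name and the zero-denominator guards are our additions. True negatives do not enter either metric, which is why only TP, FP, and FN are needed.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); Recall = TP / (TP + FN).

    Returns 0.0 for a metric whose denominator is zero (our convention).
    """
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall
```

For example, 95 correct detections, 5 false alarms, and 10 missed markers give P = 0.95 and R ≈ 0.905.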

The average precision (AP) is used to measure the accuracy of the algorithm in detecting landing markers. The AP is the area under the precision–recall (PR) curve, which plots precision on the vertical axis against recall on the horizontal axis. A larger AP indicates better performance in detecting landing markers. The evaluation index is given in Equation (7):

$$AP = \int_0^1 P(R)\,dR \tag{7}$$
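
Equation (7) is usually evaluated numerically from ranked detections. The sketch below uses all-point interpolation (precision made monotonically non-increasing in recall, then rectangle areas summed), in the style of the PASCAL VOC evaluation; this particular interpolation scheme is our assumption, since the text does not state which one was used. Each detection is given as a hypothetical `(confidence, is_true_positive)` pair, with matching to ground truth assumed to be done beforehand.

```python
from typing import List, Tuple


def average_precision(detections: List[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP: area under the PR curve (Equation (7)).

    detections: (confidence, is_true_positive) for every predicted box.
    num_gt: number of ground-truth landing markers.
    """
    if num_gt == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Sentinels at recall 0 and 1
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Precision envelope: make precision non-increasing as recall grows
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; steps where recall does not change contribute zero width
    ap = 0.0
    for i in range(1, len(mrec)):
        ap += (mrec[i] - mrec[i - 1]) * mpre[i]
    return ap
```

With two markers and ranked detections TP, FP, TP, the envelope gives 0.5 × 1 + 0.5 × 2/3 = 5/6.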

Defining FPS as the number of frames processed per second, the evaluation index of speed is obtained by

$$FPS = \frac{N_F}{T} \tag{8}$$

where NF is the number of frames and T is the total time required to detect all frames.
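
Equation (8) can be measured by timing the detector over a sequence of frames. The sketch below uses `time.perf_counter`; `detect` stands for any per-frame detection callable and is a placeholder, not a specific YOLOv5 API.

```python
import time
from typing import Any, Callable, Iterable


def measure_fps(detect: Callable[[Any], Any], frames: Iterable[Any]) -> float:
    """FPS = N_F / T: frames processed divided by total detection time in seconds."""
    n_frames = 0
    start = time.perf_counter()
    for frame in frames:
        detect(frame)  # result is discarded; only the elapsed time matters here
        n_frames += 1
    total_time = time.perf_counter() - start
    return n_frames / total_time if total_time > 0 else float("inf")
```

In practice, a few warm-up frames are often excluded from the timing, because the first GPU inferences tend to be slower than steady-state ones.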

The two indices in Equations (7) and (8) are used to evaluate the performance of the network in the experiment and serve as the basis for network selection.

4. Autonomous Landing Guidance Strategy

In this section, the guidance strategy of the autonomous landing scheme for the micro-UAV is first designed. Then, the conversion of the view angle of the UAV camera is described in detail. On this basis, the changes in the pixel coordinates and pixel area of the landing marker in the image when the micro-UAV moves in different directions are analyzed. Finally, the landing guidance law is designed to generate commands, consisting of velocities in three directions, for completing the micro-UAV landing mission.

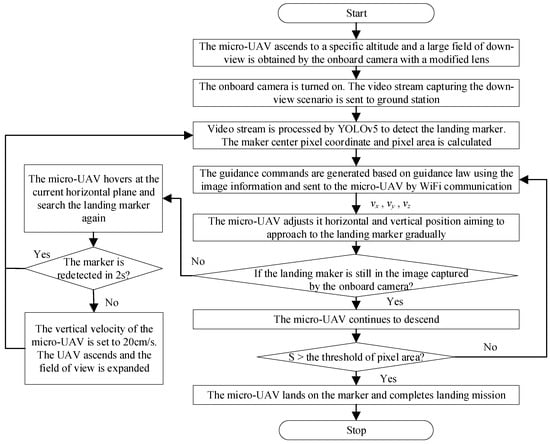

4.1. Landing Guidance Strategy

The proposed autonomous landing guidance strategy for the micro-UAV is designed as shown in Figure 4. The main steps are described as follows.

Figure 4.

The flow chart of the autonomous landing guidance strategy for the micro-UAV.

Step 1. The view of the front-facing onboard camera is converted by a lens so that a downward view can be obtained. The micro-UAV takes off and climbs to an altitude of 6 m to enlarge the field of view of the onboard camera. Before the autonomous landing strategy is executed, it is assumed that the micro-UAV has moved into the landing area and that the landing marker is within the view of the micro-UAV's camera.

Step 2. At the predefined altitude, the onboard camera is turned on. Images of the ground are captured, and the video stream is transmitted back to the ground-station computer over WiFi.

Step 3. At the ground station, the video stream is processed by YOLOv5 in real time to detect the landing marker. Simultaneously, the central-pixel coordinates of the landing marker are obtained and passed to the guidance strategy.

Step 4. The central-pixel coordinates are provided to the guidance law module, and guidance commands consisting of velocities in three directions are generated at the ground station. The commands are then sent to the micro-UAV over WiFi.

Step 5. According to the guidance commands, the micro-UAV adjusts its horizontal and vertical position to gradually approach the landing marker.

Step 6. During the descent, if the marker is lost (that is, it cannot be captured by the onboard camera), the micro-UAV hovers in place without horizontal movement for 2 s in an attempt to recapture the marker. If the marker still cannot be captured within 2 s, the vertical ascent velocity of the micro-UAV is set to 20 cm/s. As the micro-UAV climbs, the field of view of the camera expands, so the landing marker can be captured again. Once the landing marker is found again, the micro-UAV stops ascending and holds its current position. Then, Steps 3–5 are executed.

Step 7. As the micro-UAV descends, the pixel area of the landing marker in the image keeps growing. When the pixel area exceeds a preset threshold, the video stream transmission and the landing guidance strategy are stopped, and the micro-UAV lands on the landing marker.
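
The marker-loss and termination logic of Steps 5–7 can be summarized as a small per-frame decision function. This is a sketch under stated assumptions: the mode names, the `LandingState` class, and the positive-up sign of the vertical command are ours, and `area_threshold` stands for the preset pixel-area threshold, whose value is not given here.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

HOVER_TIMEOUT_S = 2.0      # Step 6: hover this long before climbing
ASCENT_SPEED_CM_S = 20.0   # Step 6: climb rate while searching (positive = up, assumed)


@dataclass
class LandingState:
    last_seen: float = 0.0  # time (s) at which the marker was last detected


def guidance_step(
    state: LandingState,
    detection: Optional[Tuple[float, float, float]],
    now: float,
    area_threshold: float,
) -> Tuple[str, Optional[float]]:
    """Return (mode, vz_cm_s) for one frame.

    detection is (x, y, S) in pixels, or None when the marker is not detected.
    vz_cm_s is None in "track" mode, meaning the PID guidance law sets all velocities.
    """
    if detection is not None:
        state.last_seen = now
        _, _, area = detection
        if area > area_threshold:
            return "land", 0.0          # Step 7: stop guidance and land
        return "track", None            # Steps 3-5: follow the guidance law
    if now - state.last_seen <= HOVER_TIMEOUT_S:
        return "hover", 0.0             # Step 6: hold position, try to reacquire
    return "ascend", ASCENT_SPEED_CM_S  # Step 6: climb to widen the field of view
```

Keeping the decision logic free of I/O makes it easy to test offline; the ground-station loop would call it once per processed frame and forward the resulting command.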

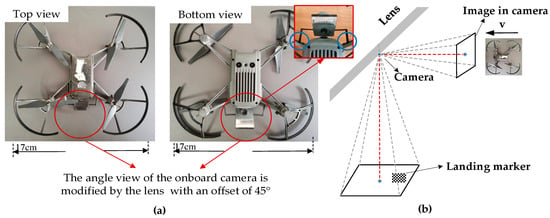

4.2. Conversion of Camera View Angle

The micro-UAV platform used in this paper is the DJI Tello UAV, shown in Figure 5a. However, the original onboard camera of the DJI Tello UAV can only capture the front view. To achieve the vision-based landing mission, a lens with a 45° offset is installed, through which the scene below the UAV is projected into the lens. After the light is redirected by the lens, the camera captures images through it, so the UAV obtains a downward view. The added lens on the DJI Tello UAV is marked by the red circle in Figure 5a. The principle by which the lens changes the field of view is shown in Figure 5b.

Figure 5.

(a) The micro-UAV and the onboard camera with a lens installed. (b) The changing principle of the view field by the modified lens.

In the vision-based landing strategy, the pixel coordinates and pixel area of the landing marker in the image are used to guide the micro-UAV to land autonomously. Therefore, the relationship between the movement of the landing marker in the image and the motion of the micro-UAV must first be established, so that the flight direction of the micro-UAV can be determined. From several experimental tests, the relationship is obtained as follows. When the micro-UAV moves forward, the landing marker moves upward in the image, rather than downward as it would for a conventionally mounted downward-facing camera, because of the view angle introduced by the lens. When the micro-UAV moves to the left, the landing marker moves to the right in the image. When the micro-UAV descends, the pixel area of the landing marker in the image gradually grows as the micro-UAV approaches the landing site. This relationship is summarized in Table 1.
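
The relationship in Table 1 can be inverted to decide which way the micro-UAV should move to bring the marker toward the image center. The sketch below assumes an image frame with x to the right and y downward (the usual OpenCV convention), extends the table by symmetry to backward and rightward motion, and uses a hypothetical dead band in pixels; none of these details are specified in the text.

```python
from typing import Tuple


def horizontal_direction(
    x: float, y: float, xd: float, yd: float, deadband: float = 10.0
) -> Tuple[str, str]:
    """Return (lateral, longitudinal) motion that moves the marker toward (xd, yd).

    Table 1 (with the lens): forward -> marker moves up in the image;
    left -> marker moves right. The opposite motions are assumed symmetric.
    """
    dx, dy = x - xd, y - yd
    lateral = "hold"
    if dx > deadband:
        lateral = "right"        # moving right shifts the marker left, toward the center
    elif dx < -deadband:
        lateral = "left"         # moving left shifts the marker right
    longitudinal = "hold"
    if dy > deadband:
        longitudinal = "forward"   # marker below center: moving forward shifts it up
    elif dy < -deadband:
        longitudinal = "backward"  # marker above center: moving backward shifts it down
    return lateral, longitudinal
```

Because of the lens, a marker appearing below the image center calls for forward flight, the opposite of the usual intuition for a downward-facing camera.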

Table 1.

The relationship between the moving direction of the micro-UAV and that of the pixel of the landing marker in the image.

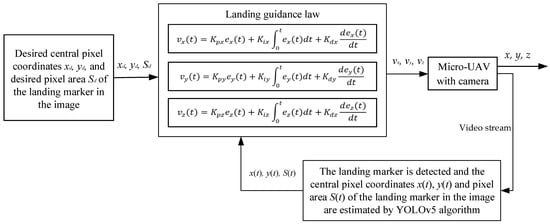

4.3. Guidance-Law Design

Based on the central coordinates of the landing marker estimated by the algorithm described in Section 3, the guidance law is designed to generate landing commands for the micro-UAV. In this paper, velocity commands in three directions are used to guide the micro-UAV toward the landing marker. The guidance-law diagram is shown in Figure 6. As the micro-UAV descends, the onboard camera continuously captures images of the landing marker. These images are sent back to the ground station, where the landing marker is detected by the YOLOv5 algorithm. Then, the central coordinates x(t) and y(t) and the pixel area S(t) of the landing marker in the image are calculated and sent to the guidance-law module. These are compared with the desired pixel coordinates xd and yd and the desired pixel area Sd, and the resulting visual errors are fed to the guidance law to compute the velocity commands vx, vy, and vz in the three directions. Finally, the commands are sent to the micro-UAV to complete the landing task.

Figure 6.

Schematic diagram of the proposed landing guidance law for the micro-UAV.

As shown in Figure 6, the central coordinates x(t) and y(t) and the pixel area S(t) of the landing marker at the current moment are estimated by the real-time YOLOv5 detection algorithm. On this basis, the landing guidance law is designed, comprising horizontal guidance and vertical guidance.

Regarding the horizontal guidance, the guidance law is designed as shown in Equations (9) and (10) based on the relationship between the moving direction of the micro-UAV and that of the pixel of the landing marker in the image as depicted in Table 1.

In this paper, the camera and the UAV are treated as a single body because the micro-UAV is small and the camera is fixed to it. The center of the image can then be regarded as the horizontal center of the micro-UAV. The goal of vision-based landing is to adjust the position of the micro-UAV so that the center of the landing marker coincides with the center of the image, i.e., (xd, yd). Hence, the error between the desired central coordinates (xd, yd) and the current central pixel coordinates (x(t), y(t)) of the detected landing marker at a given time can be used to generate the horizontal-velocity commands that guide the horizontal movement of the micro-UAV.
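
Because Equations (9) and (10) are not reproduced in this text, the sketch below only illustrates the idea under an assumed sign convention, e = desired − current, with the desired point taken as the image center. The function name and the optional normalization by half the image size are ours; normalizing keeps the errors in [−1, 1], so the same PID gains would apply at any stream resolution.

```python
from typing import Tuple


def horizontal_errors(
    x: float, y: float, w: int, h: int, normalize: bool = False
) -> Tuple[float, float]:
    """Visual errors between the image center (xd, yd) and the marker center (x, y).

    Assumed convention: e = desired - current, in pixels (or in [-1, 1] if normalize=True).
    """
    xd, yd = w / 2.0, h / 2.0
    ex, ey = xd - x, yd - y
    if normalize:
        ex, ey = ex / xd, ey / yd
    return ex, ey
```

For a marker detected at (800, 500) in a 1280 × 720 frame, the pixel errors are (−160, −140), or about (−0.25, −0.39) after normalization.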

Regarding the vertical guidance, the pixel area S(t) is used to design the guidance law as shown in Equation (11).

The flight altitude of the micro-UAV cannot be acquired accurately because the onboard camera of the micro-UAV is a monocular camera without depth information. However, height-related information is necessary for landing guidance. Hence, in this paper, a new method is proposed that estimates the height error between the landing marker and the micro-UAV from the pixel area. The main idea is that the pixel area of the landing marker in the image grows gradually as the micro-UAV approaches the landing marker. The pixel area S(t) of the landing marker can therefore be used as an indirect indicator of the height of the micro-UAV relative to the landing marker. In the proposed guidance strategy, the pixel area of the landing marker at the end of the landing guidance is set as Sd, a threshold that serves as the reference for stopping the video transmission and the landing guidance strategy. That is, if the pixel area of the landing marker exceeds this threshold at a certain time, the micro-UAV lands on the marker and completes the landing mission. The error between the desired pixel area and the current pixel area of the detected landing marker, calculated by Equation (11), is also used to adjust the altitude of the micro-UAV as it approaches the landing marker.
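
A short pinhole-camera argument supports using the pixel area as a height proxy: for a planar marker of area A viewed head-on from height H by a camera with focal length f (in pixels), the imaged area is roughly S ≈ f²A/H², so S grows as the inverse square of height. This idealization ignores lens distortion, including any introduced by the added 45° lens. Under that assumption, and with e_z = Sd − S(t) assumed as the form of Equation (11), a minimal sketch is:

```python
import math


def vertical_error(area: float, desired_area: float) -> float:
    """Assumed form of Equation (11): e_z = S_d - S(t); positive while the UAV is still too high."""
    return desired_area - area


def relative_height(area: float, reference_area: float) -> float:
    """Pinhole estimate of H / H_ref from pixel areas, using S proportional to 1 / H**2."""
    return math.sqrt(reference_area / area)
```

For example, if the marker's pixel area quadruples relative to a reference frame, `relative_height` returns 0.5, i.e., the UAV is at roughly half the reference height. The footprints reported in Section 5.1 (8.50 m wide at 6 m, 2.90 m wide at 2 m) scale almost linearly with height, which is consistent with this model.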

After the visual errors ex(t), ey(t), and ez(t) in the three directions are calculated, a PID guidance law is designed to generate the velocity commands in the three directions. Under this guidance law, the micro-UAV gradually reduces the visual errors and completes the landing task. The autonomous guidance law consists of two parts: horizontal guidance and vertical guidance. In the horizontal direction, the visual errors ex(t) and ey(t) are fed to the PID guidance law to generate the velocities in the x and y directions, as shown in Equations (12) and (13). The visual error ez(t) is fed to the PID guidance law to generate the velocity in the z direction, as shown in Equation (14).

$$v_x(t) = K_{px} e_x(t) + K_{ix} \int_0^t e_x(\tau)\,d\tau + K_{dx} \frac{d e_x(t)}{dt} \tag{12}$$

$$v_y(t) = K_{py} e_y(t) + K_{iy} \int_0^t e_y(\tau)\,d\tau + K_{dy} \frac{d e_y(t)}{dt} \tag{13}$$

$$v_z(t) = K_{pz} e_z(t) + K_{iz} \int_0^t e_z(\tau)\,d\tau + K_{dz} \frac{d e_z(t)}{dt} \tag{14}$$

where vx(t), vy(t), and vz(t) are the velocity commands in the x, y, and z directions; ex(t), ey(t), and ez(t) are the visual errors in the x, y, and z directions; and Kpx, Kpy, Kpz, Kix, Kiy, Kiz, Kdx, Kdy, and Kdz are the gains of the PID guidance law.
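
A discrete-time version of Equations (12)–(14) that could run once per processed frame is sketched below. The rectangular integration, the backward-difference derivative, and the optional output limit are our assumptions; the gains are placeholders, not tuned values from the experiments. One controller instance would be used per axis.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    limit: float = float("inf")  # hypothetical symmetric saturation of the velocity command
    _integral: float = field(default=0.0, init=False, repr=False)
    _prev_error: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, error: float, dt: float) -> float:
        """One PID step: Kp*e + Ki*sum(e*dt) + Kd*(e - e_prev)/dt, clipped to +/- limit."""
        self._integral += error * dt
        # No derivative term on the first call, when there is no previous error
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        out = self.kp * error + self.ki * self._integral + self.kd * derivative
        return max(-self.limit, min(self.limit, out))


# One controller per axis (gains are illustrative placeholders)
pid_x = PID(kp=0.1, ki=0.0, kd=0.02, limit=50.0)
pid_y = PID(kp=0.1, ki=0.0, kd=0.02, limit=50.0)
pid_z = PID(kp=0.005, ki=0.0, kd=0.0, limit=30.0)
```

Clamping the integral as well (anti-windup) is a common refinement when the output saturates; it is omitted here for brevity.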

The above guidance law is used to calculate the velocity commands in three directions at the ground station, and the commands are then sent to the micro-UAV to reduce its position error relative to the landing marker. In this way, the micro-UAV gradually descends and finally lands on the landing marker.

5. Experiment Results Analysis

To verify the proposed method, four experiments are conducted: landing marker detection, an indoor autonomous landing experiment with the micro-UAV, an outdoor autonomous landing experiment, and landing of the micro-UAV on a moving target. The first experiment evaluates the landing marker detection performance for the subsequent autonomous landing mission. The second experiment guides the micro-UAV to land on a marker in an indoor laboratory, where a motion capture system is used to monitor the trajectory of the UAV during landing. The third experiment is conducted outdoors to realize the autonomous landing task of the micro-UAV in the real world. The fourth experiment verifies that the micro-UAV can land on a moving target using the proposed autonomous landing strategy. In this section, the experimental platform is first introduced, and the experimental process is then presented in detail together with an analysis of the results. Finally, our experimental results are compared with those of other vision-based autonomous UAV landing methods.

5.1. The Experimental Platform

In the experiments, the quadrotor micro-UAV platform is a DJI Tello UAV. The micro-UAV is equipped with four motors, a battery, a WiFi module, a camera, and other components. The camera provides video at a resolution of 1280 × 720 pixels. Detailed parameters are shown in Table 2. The ground-station computer is equipped with an i5-7300HQ CPU and an NVIDIA RTX3050 GPU. The computers used for the motion capture system in the indoor laboratory are equipped with an i7-11700F CPU.

Table 2.

Parameters of the experimental platform.

The size of the landing marker is set to 225 mm × 175 mm. With this marker, the maximum altitude at which the vision system can detect it is 6 m. Detecting the landing marker from a higher altitude requires a larger marker, which in turn allows the landing mission to start from a higher altitude. At a flight altitude of 6 m in the outdoor experiment, the ground area covered by the micro-UAV's camera is 8.50 m × 6.50 m. At a flight altitude of 2 m in the indoor experiment, the covered area is 2.90 m × 2.20 m.
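
The reported footprints allow a quick worked check. If a ground footprint of width F is seen from altitude H, the implied full field-of-view angle is 2·atan(F / 2H); assuming, in addition, that each footprint dimension spans the corresponding full axis of the 1280 × 720 image, a marker of size m covers roughly m / F × (image pixels) along that axis. Both the function names and the full-axis assumption are ours, and because the reported footprint aspect ratio (about 1.3) differs from 16:9, the per-axis pixel estimates are only indicative.

```python
import math


def implied_fov_deg(footprint_m: float, altitude_m: float) -> float:
    """Full field-of-view angle (degrees) implied by a ground footprint at a given altitude."""
    return math.degrees(2.0 * math.atan(footprint_m / (2.0 * altitude_m)))


def marker_pixels(marker_m: float, footprint_m: float, image_px: int) -> float:
    """Approximate marker extent in pixels, assuming the footprint spans the full image axis."""
    return marker_m / footprint_m * image_px


# Reported footprints: 8.50 m wide at 6 m (outdoor), 2.90 m wide at 2 m (indoor)
fov_outdoor = implied_fov_deg(8.50, 6.0)
fov_indoor = implied_fov_deg(2.90, 2.0)
# 225 mm x 175 mm marker seen from 6 m
marker_w_px = marker_pixels(0.225, 8.50, 1280)
marker_h_px = marker_pixels(0.175, 6.50, 720)
```

Both footprints imply nearly the same horizontal angle, about 70.6° at 6 m and 71.9° at 2 m, as expected for a fixed optical path, and the marker spans only about 34 × 19 pixels at 6 m, which is in line with the need for a larger marker when starting from higher altitudes.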

5.2. The Landing Marker Detection

In this paper, the landing marker is detected with the YOLOv5 algorithm. To evaluate its detection accuracy and speed, other network-based detection methods are also tested for comparison. Detection accuracy is one of the most important indicators for an object detection algorithm, because the landing marker must be recognized first and this is the basis for the subsequent autonomous landing mission. Higher detection accuracy means that the algorithm detects the landing marker more reliably. Detection speed is equally critical for real-time micro-UAV applications. In vision-based flight applications, the real-time requirement is generally considered met when the algorithm finishes processing the current frame before the next frame reaches the ground-station computer. The detection speed should therefore be no lower than the camera frame rate (30 FPS in this paper), so FPS ≥ 30 is the minimum standard for the micro-UAV to process images in time during the autonomous landing mission. The commonly used single-stage object detectors SSD and YOLO are chosen for comparison. Network training and testing use the same self-built landing marker dataset, and identical hardware is used for all methods. The average precision, defined in Equation (7), measures the accuracy of each algorithm, and the FPS, defined in Equation (8), measures its speed. The test results are shown in Table 3.
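Equation (7) is not reproduced in this section. As a point of reference, the Python sketch below computes the widely used all-point interpolated average precision (PASCAL VOC style) from scored detections. Its interpolation details may differ from Eq. (7). It assumes each detection has already been labeled as a true or false positive, for example by an IoU ≥ 0.5 match against a ground-truth marker. The function name and inputs are hypothetical.

```python
from typing import List, Tuple

def average_precision(detections: List[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP (PASCAL VOC style).

    detections: (confidence, is_true_positive) pairs; matching detections to
    ground-truth markers (e.g. IoU >= 0.5) is assumed to be done already.
    num_gt: number of ground-truth markers in the test set.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    # Rank detections from most to least confident.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Pad the curve so it starts at recall 0 and ends at recall 1.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the envelope: sum rectangles wherever recall increases.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))

print(average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2))
```

In the toy call, one false positive ranked between two true positives lowers AP to about 0.833. A perfect ranking of both markers would give 1.0.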

Table 3.

Test results of different detecting methods.

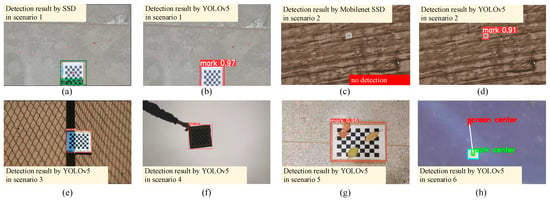

As shown in Table 3, the SSD algorithm has the highest accuracy, at 99.7%, but its detection speed does not meet the real-time requirement. Mobilenet SSD, in which the original VGG backbone is replaced by MobilenetV2, effectively improves the detection speed of SSD. However, its accuracy drops noticeably and it fails to identify small targets at high altitude, as shown in Figure 7c. YOLOv4 achieves a detection accuracy of 99.1% at an average of 30 FPS, and YOLOv4-tiny achieves 98.9% at an average of 34 FPS. YOLOv5 achieves a detection accuracy of 99.5% at 40 FPS, outperforming both YOLOv4 and YOLOv4-tiny in accuracy and speed. In addition, it accurately recognizes the landing marker in a variety of complex scenarios. YOLOv5 therefore achieves good detection results while meeting the real-time requirement.
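The real-time criterion (detector FPS ≥ camera FPS) can equivalently be read as a per-frame time budget. The small Python sketch below applies it to the average speeds stated in the text. The SSD and Mobilenet SSD speeds appear only in Table 3 and are left out. The helper names are illustrative.

```python
CAMERA_FPS = 30.0  # camera frame rate stated in the text

# Average detection speeds stated in the text (FPS).
measured_fps = {"YOLOv4": 30.0, "YOLOv4-tiny": 34.0, "YOLOv5": 40.0}

def frame_budget_ms(fps: float) -> float:
    """Time available (or used) per frame at a given rate, in milliseconds."""
    return 1000.0 / fps

def meets_real_time(detector_fps: float, camera_fps: float = CAMERA_FPS) -> bool:
    # Each frame must be processed before the next one arrives.
    return detector_fps >= camera_fps

for name, fps in measured_fps.items():
    slack = frame_budget_ms(CAMERA_FPS) - frame_budget_ms(fps)
    print(f"{name}: {frame_budget_ms(fps):.1f} ms/frame, "
          f"slack {slack:.1f} ms, real-time={meets_real_time(fps)}")
```

All three detectors pass the FPS ≥ 30 test, but YOLOv4 does so with essentially no margin. YOLOv5 uses about 25 ms of the 33.3 ms frame interval, which leaves roughly 8 ms per frame for other processing.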

Figure 7.

Landing marker detection results. (a) The detection result of SSD when the marker is at the edge of the camera’s frame. (b) The detection result of YOLOv5 when the marker is at the edge of the camera’s frame. (c) Detection result for small target by Mobilenet SSD. (d) Detection result for small target by YOLOv5. (e) YOLOv5: Detection result under strong light. (f) YOLOv5: Detection result in a cloudy environment. (g) YOLOv5: Detection result of large area occlusion. (h) YOLOv5: Real-time detection result of the landing marker in the DJI Tello UAV video stream.

Figure 7 shows detection results for landing marker images in different scenarios using different methods. In Figure 7a, SSD performs poorly when the marker is at the edge of the camera’s frame. In the same scenario in Figure 7b, YOLOv5 still detects the landing marker accurately, with a higher confidence score than SSD. Figure 7c shows the result of Mobilenet SSD when the landing marker appears small in the image because the micro-UAV is at high altitude. The algorithm fails to detect the small target correctly. By contrast, in Figure 7d, YOLOv5 detects the landing marker accurately even though it is small in the image. Figure 7e,f show the detection results of YOLOv5 under strong light and in a cloudy environment, which show that the algorithm adapts to changes in light intensity. In Figure 7g, a large part of the marker is occluded by an object, yet YOLOv5 still detects it from the remaining visible image features. In Figure 7h, YOLOv5 performs real-time detection on the video from the DJI Tello UAV, showing that it achieves high detection accuracy and speed in the actual UAV system. Based on the above analysis, YOLOv5 accurately detects the landing marker in various scenarios, which makes it suitable for vision-based autonomous landing of the micro-UAV.

5.3. The Indoor Experiment of the Autonomous Landing of the Micro-UAV

Before a real flight test, WiFi communication between the micro-UAV and the ground station is established. The landing marker is placed in an open area. When the micro-UAV enters the autonomous landing area, its onboard camera, fitted with the modified lens, captures the ground, and the video stream is sent to the ground-station computer. There, a pretrained YOLOv5 model detects the landing marker in the incoming video frames in real time. Once the landing marker is recognized, its central-pixel coordinate is estimated and passed to the PID guidance law. The guidance law computes the UAV guidance commands, which are finally sent to the micro-UAV to perform the autonomous landing task. Meanwhile, the motion capture system installed around the walls of the laboratory tracks the micro-UAV, so that its flight data during the indoor experiment can be saved for further analysis.
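The step from a detection to guidance inputs can be sketched as follows. This assumes the detector returns one bounding box per frame as pixel corners (x1, y1, x2, y2), and that the modified lens looks straight down, so the offset of the marker center from the image center approximates the UAV's horizontal displacement from the marker. The default frame size is taken from Section 5.1, although the streamed resolution may differ. The dataclass and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass
class MarkerObservation:
    cx: float    # marker center, x (pixels)
    cy: float    # marker center, y (pixels)
    area: float  # bounding-box area (pixels^2), later compared with a landing threshold
    ex: float    # x offset of marker center from image center (pixels)
    ey: float    # y offset of marker center from image center (pixels)

def observe_marker(box_xyxy: Tuple[float, float, float, float],
                   frame_w: int = 1280, frame_h: int = 720) -> MarkerObservation:
    """Turn one detection box (x1, y1, x2, y2) into guidance inputs."""
    x1, y1, x2, y2 = box_xyxy
    cx, cy = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    area = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    return MarkerObservation(cx, cy, area, cx - frame_w / 2.0, cy - frame_h / 2.0)

obs = observe_marker((600, 300, 700, 380))
print(obs)
```

The pixel offsets (ex, ey) serve as the error signals for the guidance law, and the area indicates how close the UAV is to the marker.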

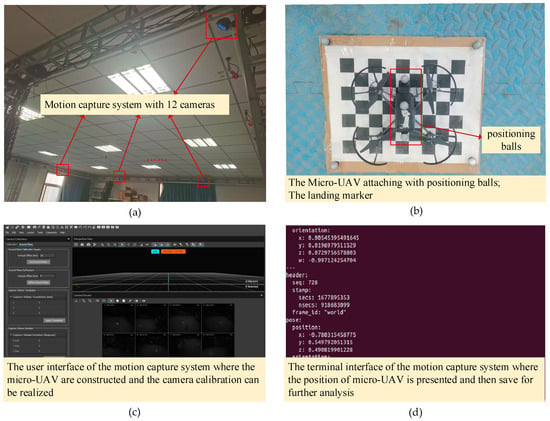

Figure 8 shows the hardware and software used in the indoor experiment. Figure 8a shows the motion capture system, which consists of 12 high-definition cameras. Figure 8b shows the micro-UAV and landing marker used in the experiment. Three positioning balls fixed on the micro-UAV allow the motion capture system to compute its position. Figure 8c shows the user interface of the motion capture positioning system, which handles camera calibration, rigid-body creation, and micro-UAV monitoring. Figure 8d shows the terminal interface of the motion capture system, where the position of the micro-UAV is displayed and then saved for further analysis.

Figure 8.

Equipment diagram for indoor experiment. (a) The motion capture positioning system. (b) The micro-UAV and landing marker with positioning balls. (c) The user interface of the motion capture system. (d) The terminal interface of the motion capture system.

In the indoor experiment, the desired altitude of the micro-UAV is set to 1.8 m, the horizontal velocity is limited to [−8 cm/s, +8 cm/s], and the maximum descent velocity is set to 8 cm/s. The gains of the PID guidance law are listed in Table 4, and the landing guidance strategy samples and updates the commands at 10 Hz.
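A discrete PID law with output saturation, updated at 10 Hz (dt = 0.1 s) and clipped to the ±8 cm/s horizontal range above, might look like the following Python sketch. It is not the authors' implementation. The gains in the usage lines are hypothetical placeholders, since the Table 4 values are not reproduced here. The simple conditional-integration anti-windup and the sign convention between pixel error and velocity are assumptions that depend on the camera mounting.

```python
from typing import Optional

class SaturatedPID:
    """Discrete PID on a pixel error, with output clipped to [-limit, +limit] (cm/s)."""

    def __init__(self, kp: float, ki: float, kd: float, limit: float, dt: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.limit, self.dt = limit, dt
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def update(self, error: float) -> float:
        # Backward-difference derivative; zero on the first sample.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate_integral = self.integral + error * self.dt
        u = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        clipped = max(-self.limit, min(self.limit, u))
        # Anti-windup: keep the new integral only when the output is not saturated.
        if clipped == u:
            self.integral = candidate_integral
        return clipped

# Hypothetical gains; 10 Hz update and +/-8 cm/s limit as in the indoor experiment.
pid_x = SaturatedPID(kp=0.05, ki=0.0, kd=0.001, limit=8.0, dt=0.1)
for err_px in (200.0, 120.0, 60.0, 20.0):
    print(f"error {err_px:6.1f} px -> vx {pid_x.update(err_px):5.2f} cm/s")
```

With these placeholder gains, a large initial offset saturates at 8 cm/s, and the command shrinks as the marker moves toward the image center. The same class could drive the descent channel with its own limit.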

Table 4.

Parameter settings for the landing guidance law.

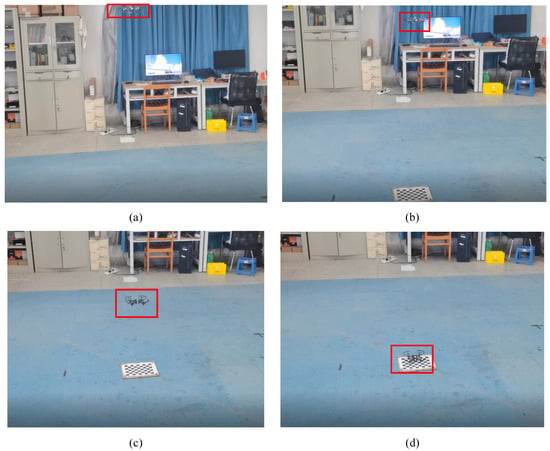

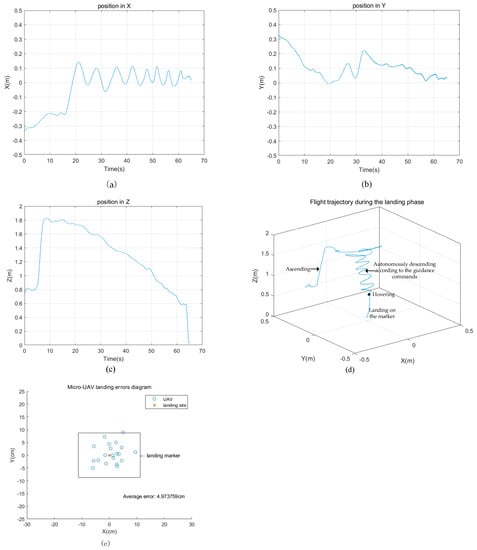

Figure 9 shows four representative stages of the indoor autonomous landing experiment. In Figure 9a, the micro-UAV ascends to the desired altitude of 1.8 m and starts capturing images of the ground. In Figure 9b, it descends autonomously according to the commands generated by the guidance strategy. In Figure 9c, the pixel area of the landing marker exceeds the threshold, so the micro-UAV hovers at an altitude of 0.6 m and stops the video transmission and the autonomous guidance strategy. In Figure 9d, the micro-UAV lands on the marker, completing the landing task. The motion capture positioning system also records the flight trajectory of the micro-UAV during the indoor experiment. The position curves in the x, y, and z directions and the three-dimensional flight trajectory of the autonomous landing are shown in Figure 10.
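The stage sequence in Figure 9 can be viewed as a small supervisor around the guidance law. Under a pinhole camera the marker's pixel area grows roughly as 1/h², so the area threshold acts as an altitude proxy that needs no range sensor. The Python sketch below encodes the transitions described above. The phase names, the function, and the threshold value are hypothetical, and the sketch assumes an altitude estimate is available for the ascent check.

```python
from enum import Enum, auto
from typing import Optional

class Phase(Enum):
    ASCEND = auto()      # climb to the desired altitude and start the video stream
    GUIDE = auto()       # YOLOv5 detections drive the guidance law
    FINAL_LAND = auto()  # stop video and guidance, hover briefly, then land

def next_phase(phase: Phase, altitude_m: float, target_alt_m: float,
               marker_area_px: Optional[float], area_threshold_px: float) -> Phase:
    """One transition step of a hypothetical landing supervisor."""
    if phase is Phase.ASCEND and altitude_m >= target_alt_m:
        return Phase.GUIDE
    # marker_area_px is None when no marker is detected in the current frame.
    if (phase is Phase.GUIDE and marker_area_px is not None
            and marker_area_px > area_threshold_px):
        return Phase.FINAL_LAND
    return phase

AREA_THRESHOLD_PX = 50_000.0  # hypothetical value, not taken from the paper
phase = Phase.ASCEND
for alt, area in [(1.0, None), (1.8, 4_000.0), (1.2, 20_000.0), (0.6, 60_000.0)]:
    phase = next_phase(phase, alt, 1.8, area, AREA_THRESHOLD_PX)
    print(alt, area, phase.name)
```

Keeping the transition logic separate from the PID law makes the stop condition easy to tune and to test without flying.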

Figure 9.

Images of the indoor experiment, in which the red rectangle marks the DJI Tello UAV. (a) The UAV ascends to the desired altitude and starts capturing images of the ground. (b) The UAV autonomously descends by the guidance strategy. (c) The UAV stops the video transmission and autonomous guidance strategy. (d) The UAV lands on the marker.

Figure 10.

Flight trajectory of the micro-UAV during the indoor experiment. (a–c) Results of the position in x, y, z direction. (d) The three-dimensional trajectory of the micro-UAV. (e) Micro-UAV landing position diagram in the static target landing experiments.

Figure 10a shows the position of the micro-UAV in the x direction. The micro-UAV reaches the landing marker at around 20 s and then adjusts within a range of [−0.1 m, 0.1 m]. Figure 10b shows the position in the y direction, where the micro-UAV adjusts its position and finally reaches the landing marker. Figure 10c shows the position in the z direction. Because of the limited height of the laboratory, the maximum altitude is set to 1.8 m. At around 8 s, the micro-UAV reaches the desired altitude and then descends autonomously according to the guidance commands to approach the landing marker. At around 61 s, it descends to 0.6 m. Under the predesigned guidance strategy, the micro-UAV stops the autonomous landing guidance once the pixel area of the landing marker exceeds the threshold. It therefore hovers for a few seconds at 0.6 m and then lands on the landing marker. The entire autonomous landing of the micro-UAV takes 50 s. Figure 10d shows the 3D flight trajectory of the micro-UAV during the indoor autonomous landing experiment. Figure 10e shows the distribution of the UAV landing positions relative to the target position in the static target landing experiments. The UAV took off from different directions in 20 landing trials, and all landings were completed. As the diagram shows, the average error between the UAV landing position and the target position is 5 cm.
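The section does not spell out how the average landing error is computed. One natural reading is the mean horizontal Euclidean distance between each touchdown point and the target, as in the Python sketch below. The function names are illustrative, and the sample points are synthetic inputs chosen to make the arithmetic easy to check. They are not the experimental data.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def landing_errors(landings: Sequence[Point], target: Point) -> List[float]:
    """Horizontal distance (m) from each touchdown point to the target."""
    tx, ty = target
    return [math.hypot(x - tx, y - ty) for x, y in landings]

def mean_landing_error(landings: Sequence[Point], target: Point) -> float:
    errors = landing_errors(landings, target)
    if not errors:
        raise ValueError("need at least one landing")
    return sum(errors) / len(errors)

# Synthetic touchdown points (m), for illustration only.
demo = [(0.03, 0.04), (-0.05, 0.0), (0.0, -0.05)]
print(f"mean error {100 * mean_landing_error(demo, (0.0, 0.0)):.1f} cm, "
      f"max error {100 * max(landing_errors(demo, (0.0, 0.0))):.1f} cm")
```

Reporting the maximum alongside the mean helps show whether any single trial landed near the marker's edge.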

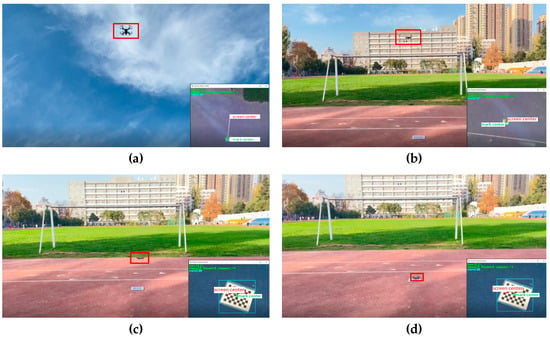

5.4. The Outdoor Experiment of the Autonomous Landing of the Micro-UAV

The autonomous landing experiment is also carried out outdoors. The configuration of the guidance experiment is the same as in the indoor experiment, except that no motion capture system is used. In the outdoor experiment, the desired altitude of the micro-UAV is set to 6 m, the horizontal velocity is limited to [−8 cm/s, +8 cm/s], and the maximum descent velocity is set to 16 cm/s. The flight is filmed with a mobile phone, and the detection images are recorded by the ground station. In Figure 11, the large image shows the flight scene of the micro-UAV, and the small image at the bottom right corner shows the real-time YOLOv5 detection results. The detection images show the relative position of the micro-UAV and the landing marker: the red point is the position of the micro-UAV, and the green point is the position of the landing marker. The velocity of the micro-UAV in three directions, the detection score of the landing marker, and the FPS are also displayed in these images.

Figure 11.

Images of the outdoor experiment, in which the red rectangle marks the DJI Tello UAV. (a) The UAV ascends to the desired altitude and starts capturing images of the ground. (b) The UAV autonomously descends by the guidance strategy. (c) The UAV stops the video transmission and autonomous guidance strategy. (d) The UAV lands on the marker.

Figure 11 shows the four main stages of the outdoor autonomous landing flight experiment. In the first stage (Figure 11a), the micro-UAV ascends to 6 m to enlarge the field of view of the onboard camera. The camera is then turned on to capture ground images, and the video is transmitted back to the ground station. In Figure 11b, the central coordinates and pixel area of the landing marker are obtained by YOLOv5 and sent to the guidance law, which calculates the velocity commands in three directions. The micro-UAV receives the commands and autonomously adjusts its position to approach the landing marker. In Figure 11c, the pixel area of the landing marker in the image exceeds the set threshold, so the micro-UAV stops the video transmission and the autonomous landing guidance strategy. In Figure 11d, after receiving the final landing instruction, the micro-UAV turns off its rotors to complete the landing. The outdoor autonomous landing of the micro-UAV takes about 80 s. Overall, the experimental results show that the proposed guidance strategy completes the outdoor autonomous landing mission.
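The velocity limits give a quick lower bound on how long the guided descent can take, which helps put the reported durations in context. The Python arithmetic below uses the indoor numbers from Section 5.3 (1.8 m to 0.6 m at up to 8 cm/s, with the descent running from about 8 s to about 61 s) and the outdoor limits above. The outdoor hand-off altitude is not stated, so 0.6 m is assumed there.

```python
def min_descent_time_s(start_alt_m: float, stop_alt_m: float, max_descent_cm_s: float) -> float:
    """Shortest possible guided descent if the UAV always descends at the limit."""
    return (start_alt_m - stop_alt_m) / (max_descent_cm_s / 100.0)

indoor_min = min_descent_time_s(1.8, 0.6, 8.0)     # indoor limits from Section 5.3
outdoor_min = min_descent_time_s(6.0, 0.6, 16.0)   # assumes a 0.6 m hand-off outdoors too
indoor_avg_cm_s = (1.8 - 0.6) / (61.0 - 8.0) * 100.0  # observed 8 s -> 61 s indoor descent

print(f"indoor lower bound {indoor_min:.1f} s, outdoor lower bound {outdoor_min:.2f} s")
print(f"indoor average descent rate {indoor_avg_cm_s:.2f} cm/s")
```

The indoor descent could in principle take 15 s, but it took about 53 s, an average of roughly 2.3 cm/s. The commanded descent rate therefore stays well below its 8 cm/s limit for much of the approach. The same reasoning gives an outdoor lower bound of about 34 s against the reported 80 s, which also includes the ascent and the final landing.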

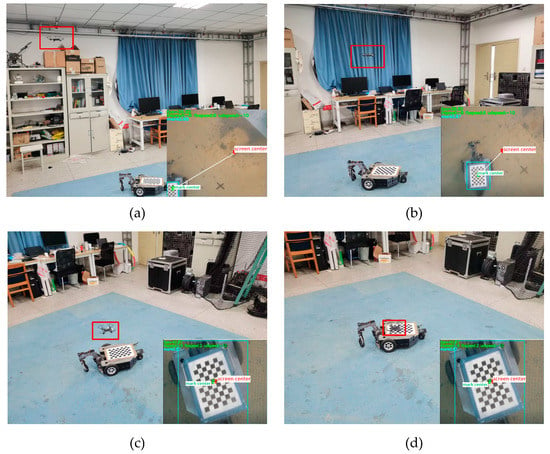

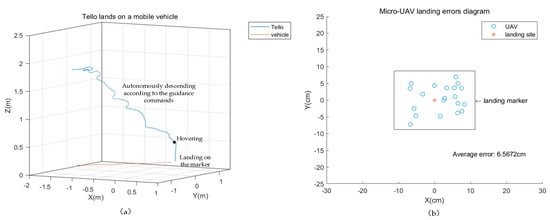

5.5. The Experiment of the Micro-UAV Landing on the Moving Target

To verify that the proposed autonomous landing strategy is suitable for mobile scenarios, an experiment in which the micro-UAV lands on a moving platform is performed. A vehicle carrying the landing marker moves forward, and the micro-UAV tracks the vehicle and lands on the marker. The starting altitude of the micro-UAV is set to 2 m, the horizontal velocity is limited to [−8 cm/s, +8 cm/s], and the maximum descent velocity is set to 10 cm/s. Figure 12 shows four images of the micro-UAV landing autonomously on the moving vehicle. Figure 13a shows the three-dimensional trajectory of the micro-UAV landing on the moving vehicle. The entire autonomous landing of the micro-UAV takes 40 s. Figure 13b shows the micro-UAV landing positions relative to the target position. The micro-UAV took off from different directions in 20 landing trials, and all landing missions were completed. As Figure 13b shows, the average error between the UAV landing position and the target position is 6.6 cm.

Figure 12.

Images of the experiment in which the micro-UAV lands on the moving platform. (a) The UAV ascends to the desired altitude and starts capturing images of the ground, and the vehicle starts moving. (b) The UAV autonomously descends by the guidance strategy. (c) The UAV stops the video transmission and autonomous guidance strategy. (d) The UAV lands on the moving target.

Figure 13.

Results of the micro-UAV landing on the moving platform. (a) The three-dimensional trajectory of the micro-UAV for landing on the moving platform. (b) Micro-UAV landing position diagram in the moving target landing experiments.

To compare the performance of the proposed autonomous landing strategy with that of existing vision-based autonomous landing methods for UAVs, the results are summarized in Table 5, and the main differences between the methods are analyzed.

Table 5.

Comparative table of the different landing strategies.

6. Conclusions

In this paper, an autonomous landing guidance strategy is proposed for the micro-UAV based on camera view angle conversion, landing marker detection, and an autonomous guidance law. The front-view camera of the micro-UAV is modified with a lens to obtain a downward view, so that the camera can capture images of the ground containing the landing marker. The central coordinates and pixel area of the landing marker are obtained by YOLOv5 detection and used to generate guidance commands. Based on the relative motion between the micro-UAV and the landing marker in the images captured by the modified onboard camera, the autonomous guidance law is designed to generate velocity commands in three directions. Finally, indoor and outdoor flight experiments are conducted with the DJI Tello micro-UAV to verify the proposed autonomous landing strategy. The results show that the proposed strategy guides the micro-UAV to complete the landing mission autonomously. However, converting the view angle with the modified lens obscures the forward view, which is important for other flight missions. To solve this problem, future work will consider switching between the forward and downward views by rotating the lens according to the requirements of different aerial missions.

Author Contributions

Conceptualization, L.M., Q.L., Y.Z. and B.W.; Data curation, Q.L., N.F. and W.S.; Formal analysis, Y.Z., N.F. and X.X.; Methodology, L.M. and Q.L.; Project administration, B.W., L.M. and Y.Z.; Resources, Y.Z. and B.W.; Validation, Q.L. and W.S.; Writing—review & editing, L.M., Q.L. and X.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No. 61833013, No. 62103326, No. 62003266, and No. 61903297), Young Talent Fund of University Association for Science and Technology in Shaanxi (No. 20210114), the Industry-University-Research Innovation Foundation for the Chinese Ministry of Education (No. 2021ZYA07002), China Postdoctoral Science Foundation (No. 2022MD723834), and the Natural Sciences and Engineering Research Council of Canada.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kumar, V.; Michael, N. Opportunities and challenges with autonomous micro aerial vehicles. Int. J. Robot. Res. 2012, 31, 1279–1291.

- Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F. Fault-tolerant cooperative navigation of networked UAV swarms for forest fire monitoring. Aerosp. Sci. Technol. 2022, 123, 107494.

- Su, J.; Zhu, X.; Li, S.; Chen, W. AI meets UAVs: A survey on AI empowered UAV perception systems for precision agriculture. Neurocomputing 2023, 518, 242–270.

- Faiyaz Ahmed, M.D.; Mohanta, J.C.; Sanyal, A. Inspection and identification of transmission line insulator breakdown based on deep learning using aerial images. Electr. Power Syst. Res. 2022, 211, 108199.

- Jung, Y.; Lee, D.; Bang, H. Close-range vision navigation and guidance for rotary UAV autonomous landing. In Proceedings of the 2015 IEEE International Conference on Automation Science and Engineering (CASE), Gothenburg, Sweden, 24–28 August 2015; pp. 342–347.

- Yuan, B.; Ma, W.; Wang, F. High Speed Safe Autonomous Landing Marker Tracking of Fixed Wing Drone Based on Deep Learning. IEEE Access 2022, 10, 80415–80436.

- Lim, J.; Lee, T.; Pyo, S.; Lee, J.; Kim, J.; Lee, J. Hemispherical InfraRed (IR) Marker for Reliable Detection for Autonomous Landing on a Moving Ground Vehicle From Various Altitude Angles. IEEE/ASME Trans. Mechatron. 2022, 27, 485–492.

- Zhao, L.; Li, D.; Zhao, C.; Jiang, F. Some achievements on detection methods of UAV autonomous landing markers. Acta Aeronaut. Astronaut. Sin. 2022, 43, 25882-025882.

- Xu, S.; Lin, F.; Lu, Y. Pose estimation method for autonomous landing of quadrotor unmanned aerial vehicle. In Proceedings of the 2022 IEEE 6th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 4–6 March 2022; pp. 1246–1250.

- Nguyen, P.H.; Arsalan, M.; Koo, J.H.; Naqvi, R.A.; Truong, N.Q.; Park, K.R. LightDenseYOLO: A Fast and Accurate Marker Tracker for Autonomous UAV Landing by Visible Light Camera Sensor on Drone. Sensors 2018, 18, 1703.

- Li, B.; Wang, B.; Tan, X.; Wu, J.; Wei, L. Corner location and recognition of single Aruco marker under occlusion based on YOLO algorithm. J. Electron. Imaging 2021, 30, 033012.

- Janousek, J.; Marcon, P.; Klouda, J.; Pokorny, J.; Raichl, P.; Siruckova, A. Deep Neural Network for Precision Landing and Variable Flight Planning of Autonomous UAV. In Proceedings of the 2021 Photonics & Electromagnetics Research Symposium (PIERS), Hangzhou, China, 21–25 November 2021; pp. 2243–2247.

- Feng, K.; Li, W.; Ge, S.; Pan, F. Packages delivery based on marker detection for UAVs. In Proceedings of the 2020 Chinese Control and Decision Conference (CCDC), Hefei, China, 22–24 August 2020; pp. 2094–2099.

- Wang, J.; McKiver, D.; Pandit, S.; Abdelzaher, A.F.; Washington, J.; Chen, W. Precision UAV Landing Control Based on Visual Detection. In Proceedings of the 2020 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), Shenzhen, China, 6–8 August 2020; pp. 205–208.

- Meng, Y.; Wang, W.; Han, H.; Ban, J. A visual/inertial integrated landing guidance method for UAV landing on the ship. Aerosp. Sci. Technol. 2019, 85, 474–480.

- Lin, J.; Wang, Y.; Miao, Z.; Zhong, H.; Fierro, R. Low-Complexity Control for Vision-Based Landing of Quadrotor UAV on Unknown Moving Platform. IEEE Trans. Ind. Inform. 2022, 18, 5348–5358.

- Cho, G.; Choi, J.; Bae, G.; Oh, H. Autonomous ship deck landing of a quadrotor UAV using feed-forward image-based visual servoing. Aerosp. Sci. Technol. 2022, 130, 107869.

- Olivares-Mendez, M.A.; Kannan, S.; Voos, H. Vision based fuzzy control autonomous landing with UAVs: From V-REP to real experiments. In Proceedings of the 2015 23rd Mediterranean Conference on Control and Automation (MED), Torremolinos, Spain, 16–19 June 2015; pp. 14–21.

- Cheng, H.; Chen, Y.; Li, X.; Wong, W. Autonomous takeoff, tracking and landing of a UAV on a moving UGV using onboard monocular vision. In Proceedings of the 32nd Chinese Control Conference, Xi’an, China, 26–28 July 2013; pp. 5895–5901.

- Zhang, H.; Hu, B.; Xu, Z.; Cai, Z.; Liu, B.; Wang, X.; Geng, T.; Zhong, S.; Zhao, J. Visual Navigation and Landing Control of an Unmanned Aerial Vehicle on a Moving Autonomous Surface Vehicle via Adaptive Learning. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 5345–5355.

- Li, W.; Ge, Y.; Guan, Z.; Ye, G. Synchronized Motion-Based UAV-USV Cooperative Autonomous Landing. J. Mar. Sci. Eng. 2022, 10, 1214.

- Keipour, A.; Pereira, G.A.S.; Bonatti, R.; Garg, R.; Rastogi, P.; Dubey, G.; Scherer, S. Visual Servoing Approach to Autonomous UAV Landing on a Moving Vehicle. Sensors 2022, 22, 6549.

- Vlantis, P.; Marantos, P.; Bechlioulis, C.P.; Kyriakopoulos, K.J. Quadrotor landing on an inclined platform of a moving ground vehicle. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2202–2207.

- Zeng, Z.; Zheng, H.; Zhu, Y.; Luo, Z. A Research on Control System of Multi-rotor UAV Self-precision Landing. J. Guangdong Univ. Technol. 2020, 37, 87–94.

- Wubben, J.; Fabra, F.; Calafate, C.T.; Krzeszowski, T.; Marquez-Barja, J.M.; Cano, J.-C.; Manzoni, P. Accurate Landing of Unmanned Aerial Vehicles Using Ground Pattern Recognition. Electronics 2019, 8, 1532.

- Araar, O.; Aouf, N.; Vitanov, I. Vision Based Autonomous Landing of Multirotor UAV on Moving Platform. J. Intell. Robot. Syst. 2017, 85, 369–384.

- Chen, C.; Chen, S.; Hu, G.; Chen, B.; Chen, P.; Su, K. An auto-landing strategy based on pan-tilt based visual servoing for unmanned aerial vehicle in GNSS-denied environments. Aerosp. Sci. Technol. 2021, 116, 106891.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).