Evaluation of Human Behaviour Detection and Interaction with Information Projection for Drone-Based Night-Time Security

Abstract

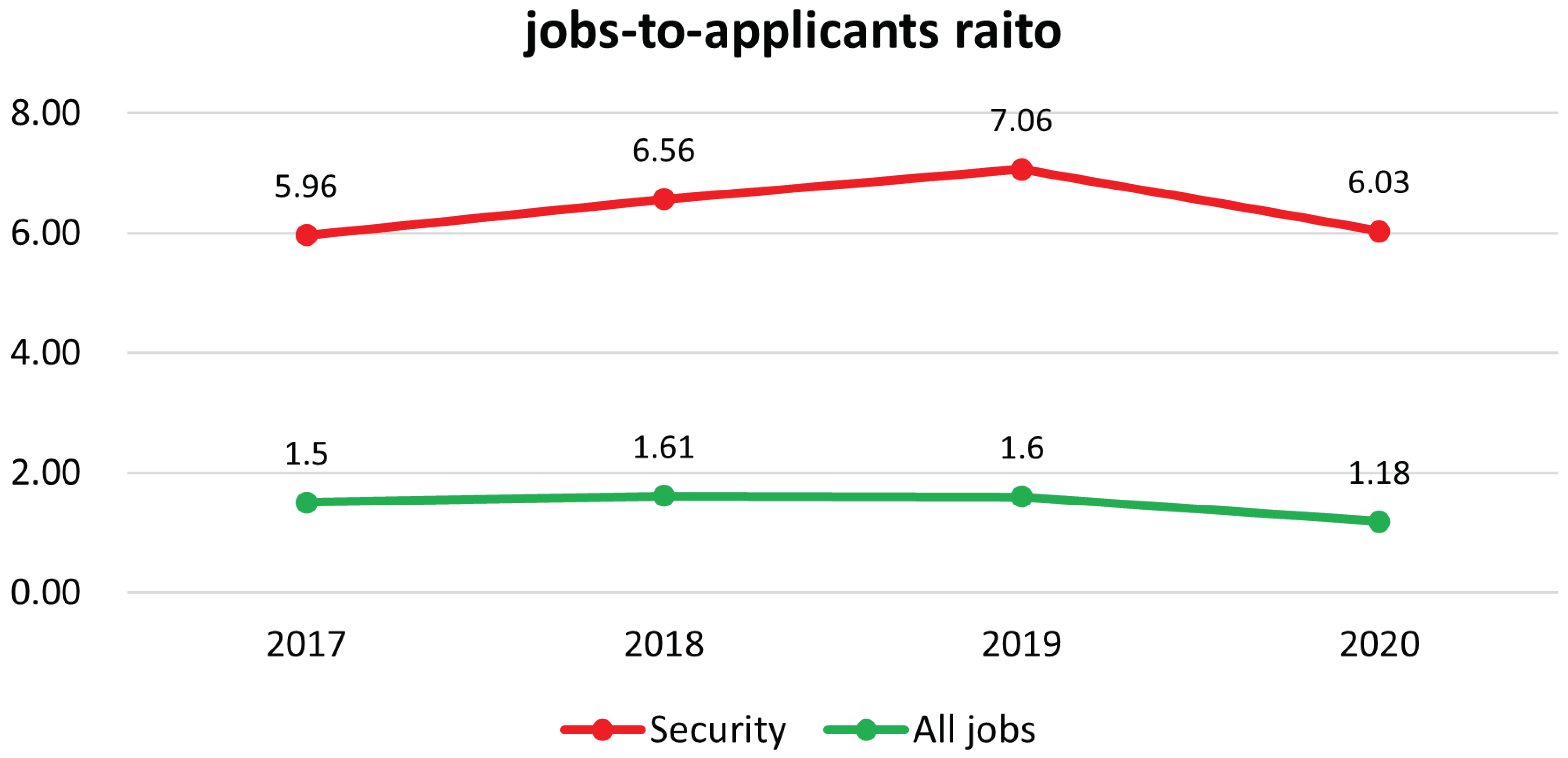

1. Introduction

2. Related Works

2.1. Creating Datasets for Drone-Based Person Detection

2.2. Person Recognition Using Aerial Video

2.3. Aerial Human Violence Detection Using UAV

2.4. Investigation of Comfortable Drone Human Approach Strategies

3. Night Security Drone Aerial Ubiquitous Display

3.1. Aerial Ubiquitous Display Overview

3.2. Hardware Configurations

3.3. Software Configuration

3.3.1. ArduPilot

3.3.2. Human Behaviour Detection Models

3.3.3. DroneKit

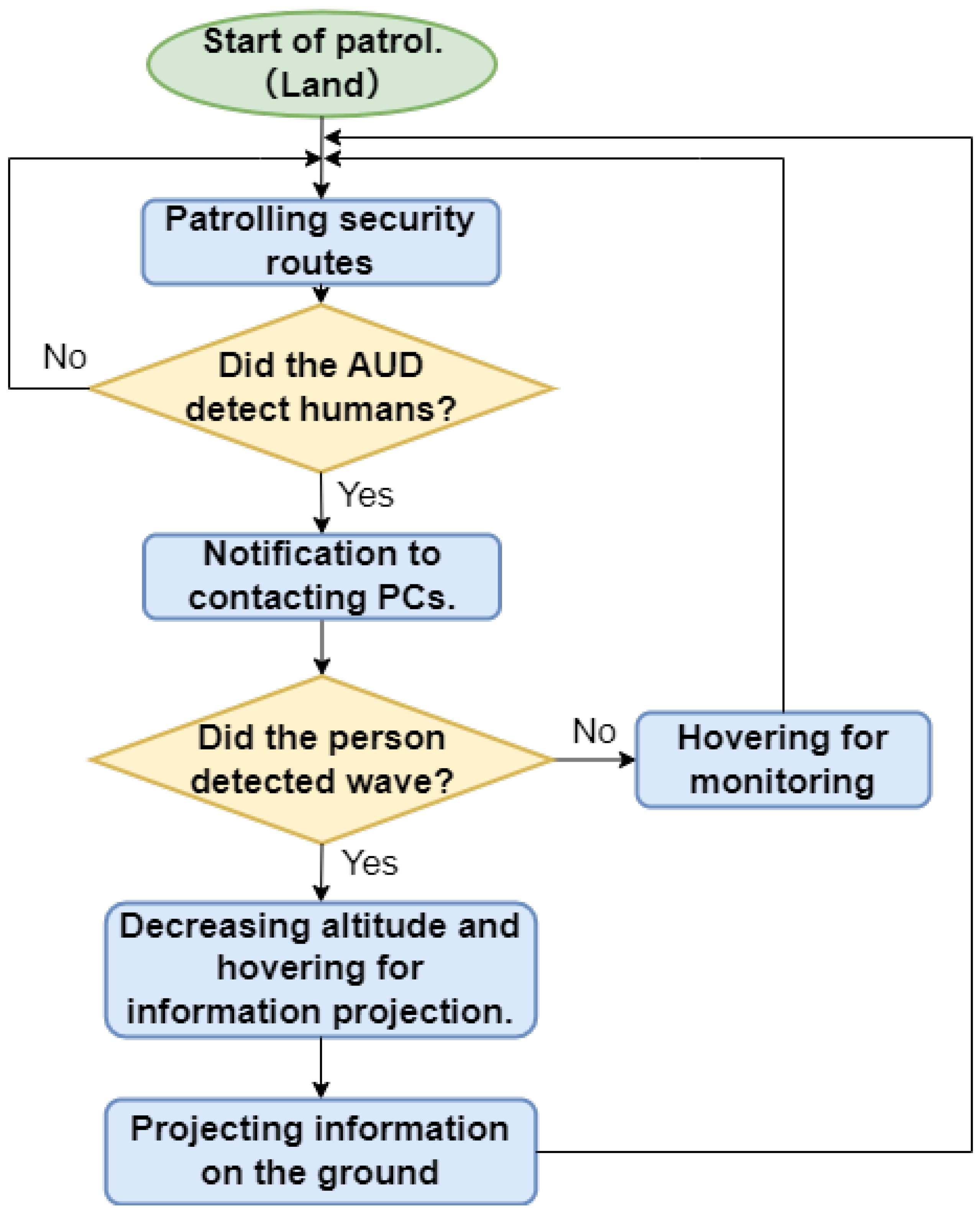

4. Nighttime Security System with Aerial Ubiquitous Display

4.1. System Overview

- Patrol flights on security routes.

- Real-time human behavior detection and alert function at the time of detection.

- Hovering for monitoring when detecting human presence.

- Decreasing altitude and hovering for information projection when detecting hand-waving behavior.

- Projecting information using a projector.
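The five functions listed above can be read as a small mode-switching loop. The following is a minimal sketch of that reading, not the authors' implementation: the mode names, the `next_mode` function, and the transition rules (waving takes priority over mere presence, projection continues while the person stays in view, and the drone returns to patrol once nobody is detected) are all assumptions made for illustration.

```python
from enum import Enum, auto


class Mode(Enum):
    PATROL = auto()   # flying the security route
    MONITOR = auto()  # hovering to watch a detected person
    PROJECT = auto()  # descended and hovering to project information


def next_mode(mode: Mode, person_detected: bool, waving_detected: bool) -> Mode:
    """Pick the next mode from the latest detection result.

    Hypothetical rule set: the transition conditions are assumptions,
    not taken from the paper.
    """
    # A waving detection implies that a person is in view.
    present = person_detected or waving_detected
    if not present:
        return Mode.PATROL
    # Assumed: once projecting, keep projecting while the person remains.
    if waving_detected or mode is Mode.PROJECT:
        return Mode.PROJECT
    return Mode.MONITOR


# Example: a person appears, waves, stops waving, then leaves the frame.
mode = Mode.PATROL
history = []
for person, waving in [(True, False), (True, True), (True, False), (False, False)]:
    mode = next_mode(mode, person, waving)
    history.append(mode.name)
```

Keeping the decision in one pure function makes the assumed rules easy to test separately from any flight-control or detection code.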

4.2. Functions in the System

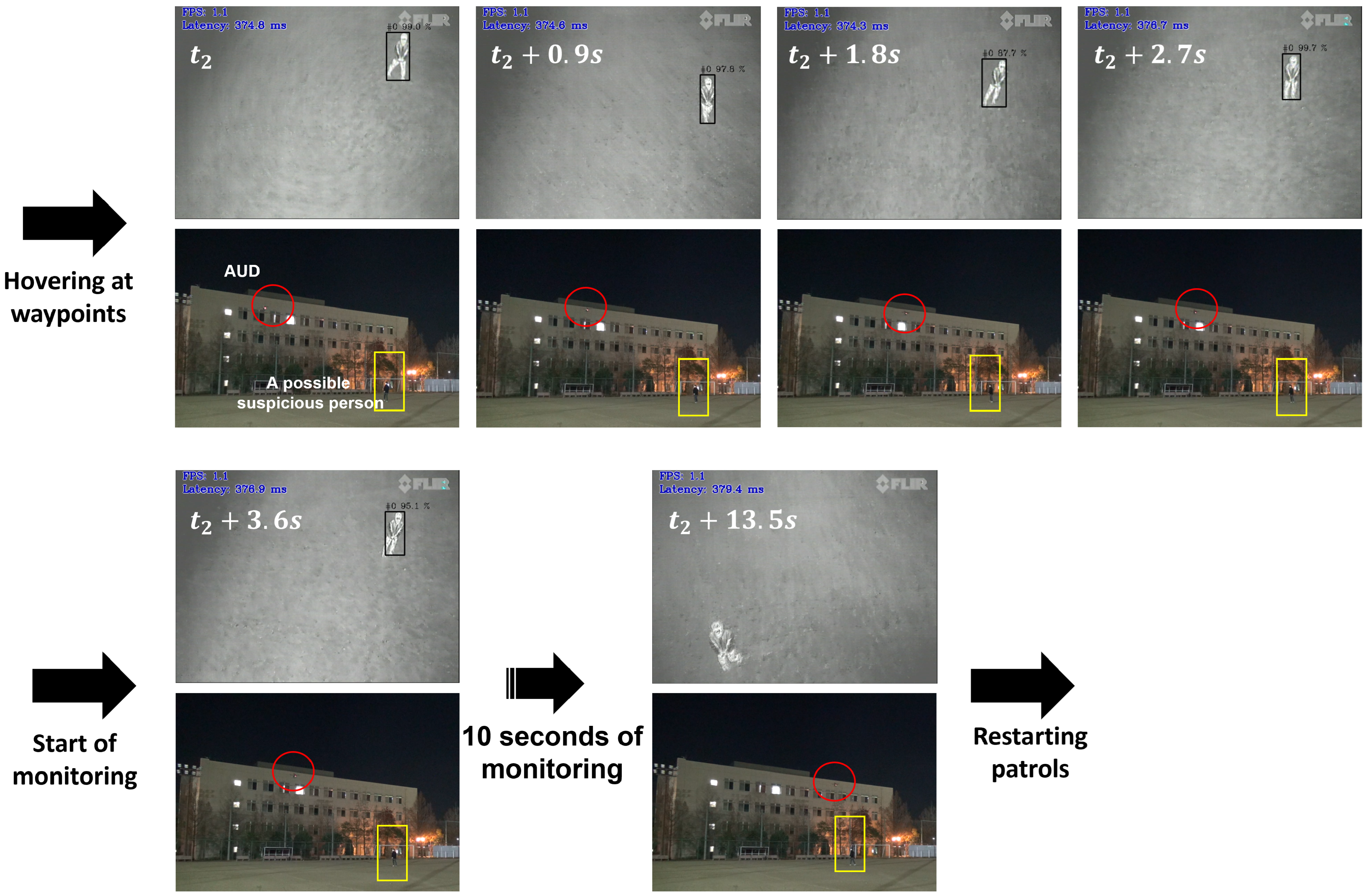

4.2.1. Patrol Flights on Security Routes

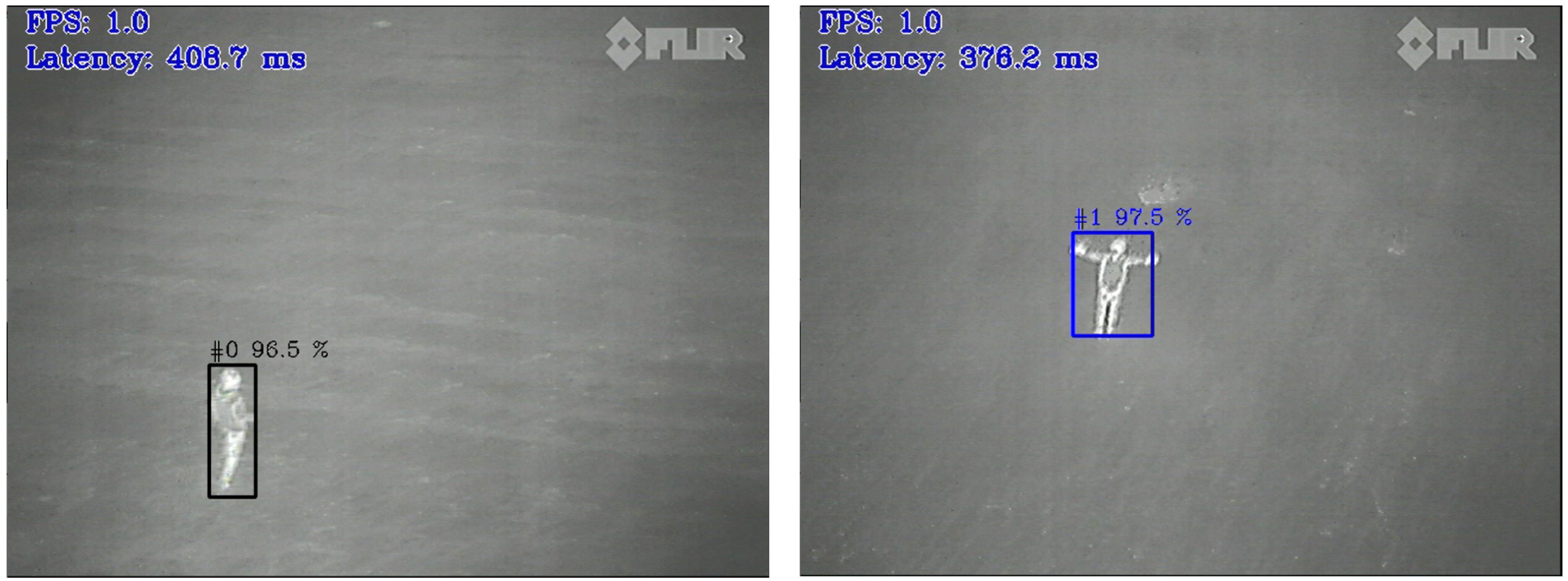

4.2.2. Real-Time Human Behavior Detection and Alert Function at the Time of Detection

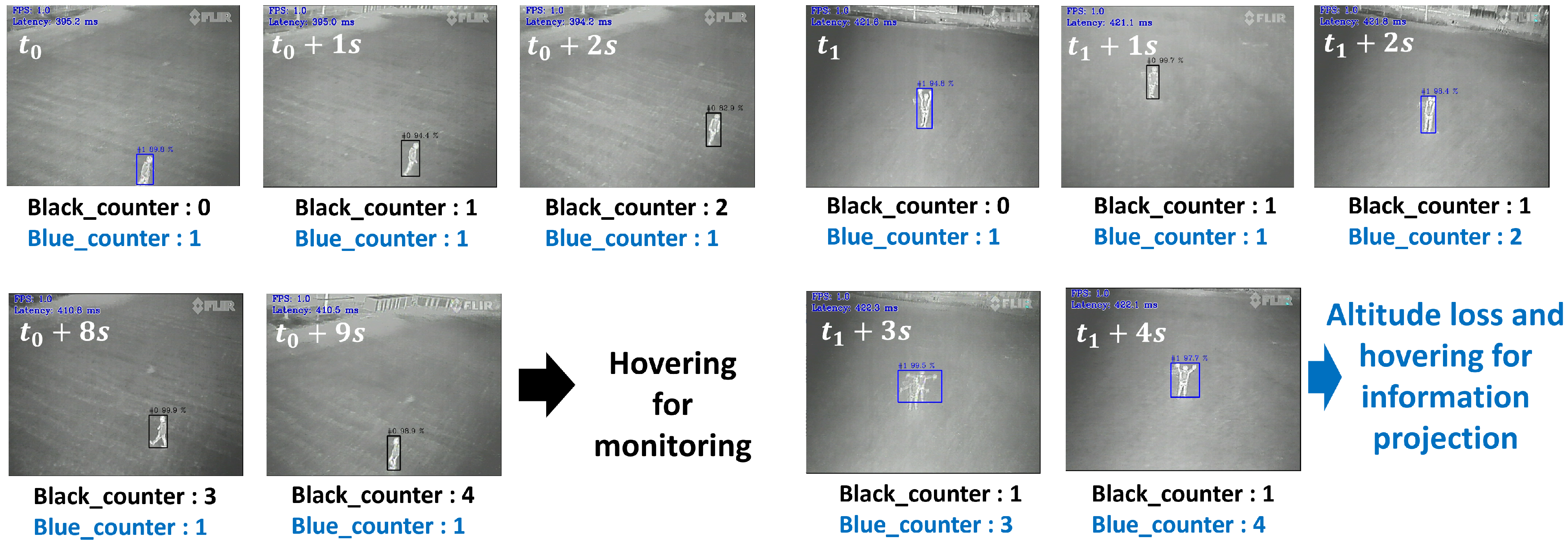

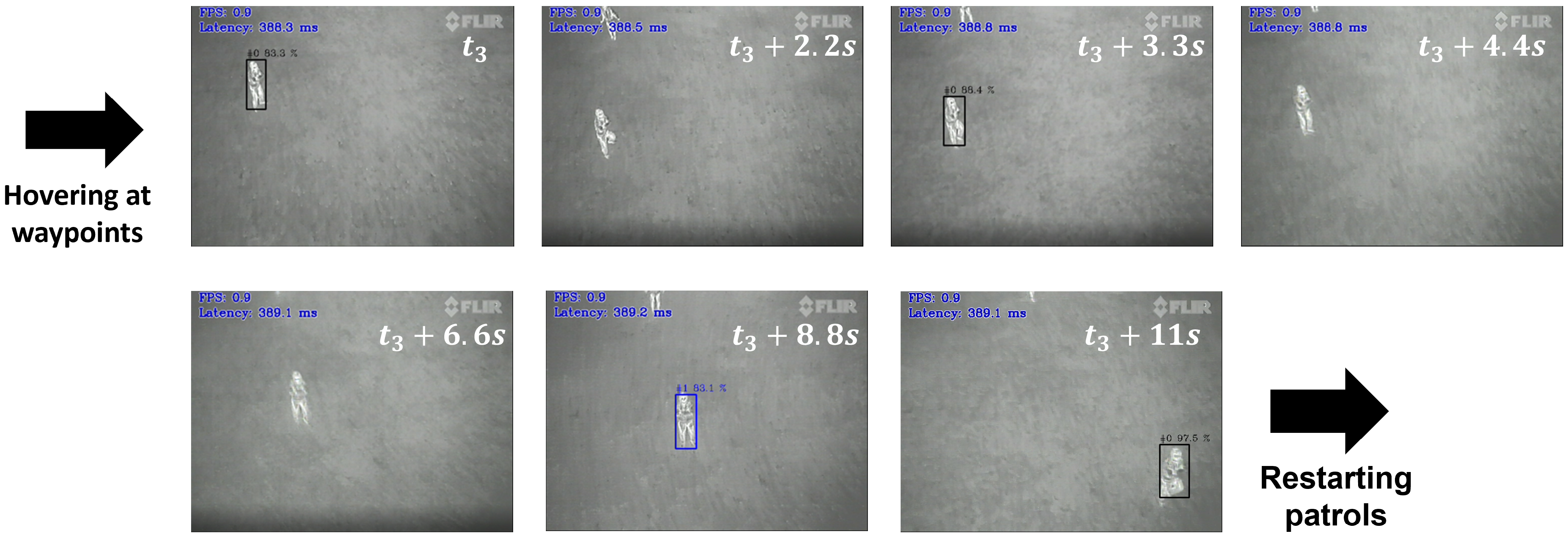

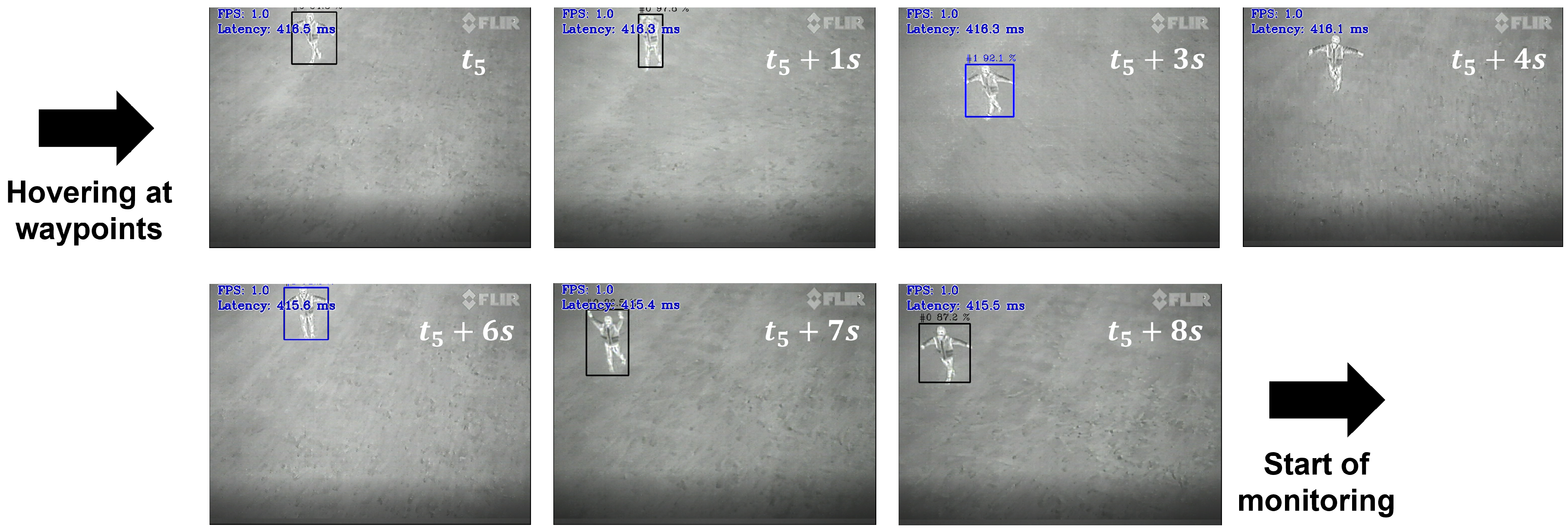

4.2.3. Hovering for Monitoring When Detecting Human Presence

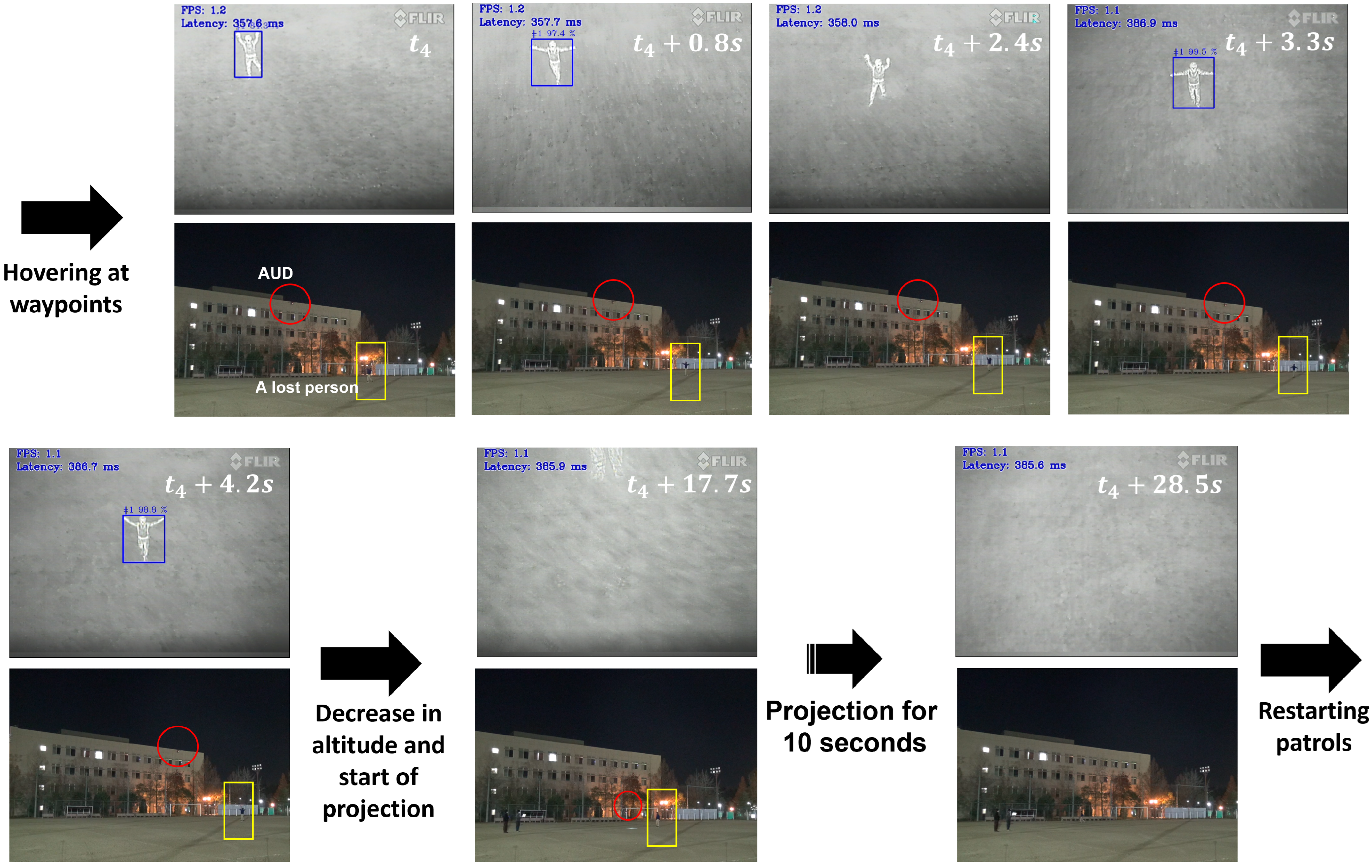

4.2.4. Decreasing Altitude and Hovering for Information Projection When Detecting Hand-Waving Behavior

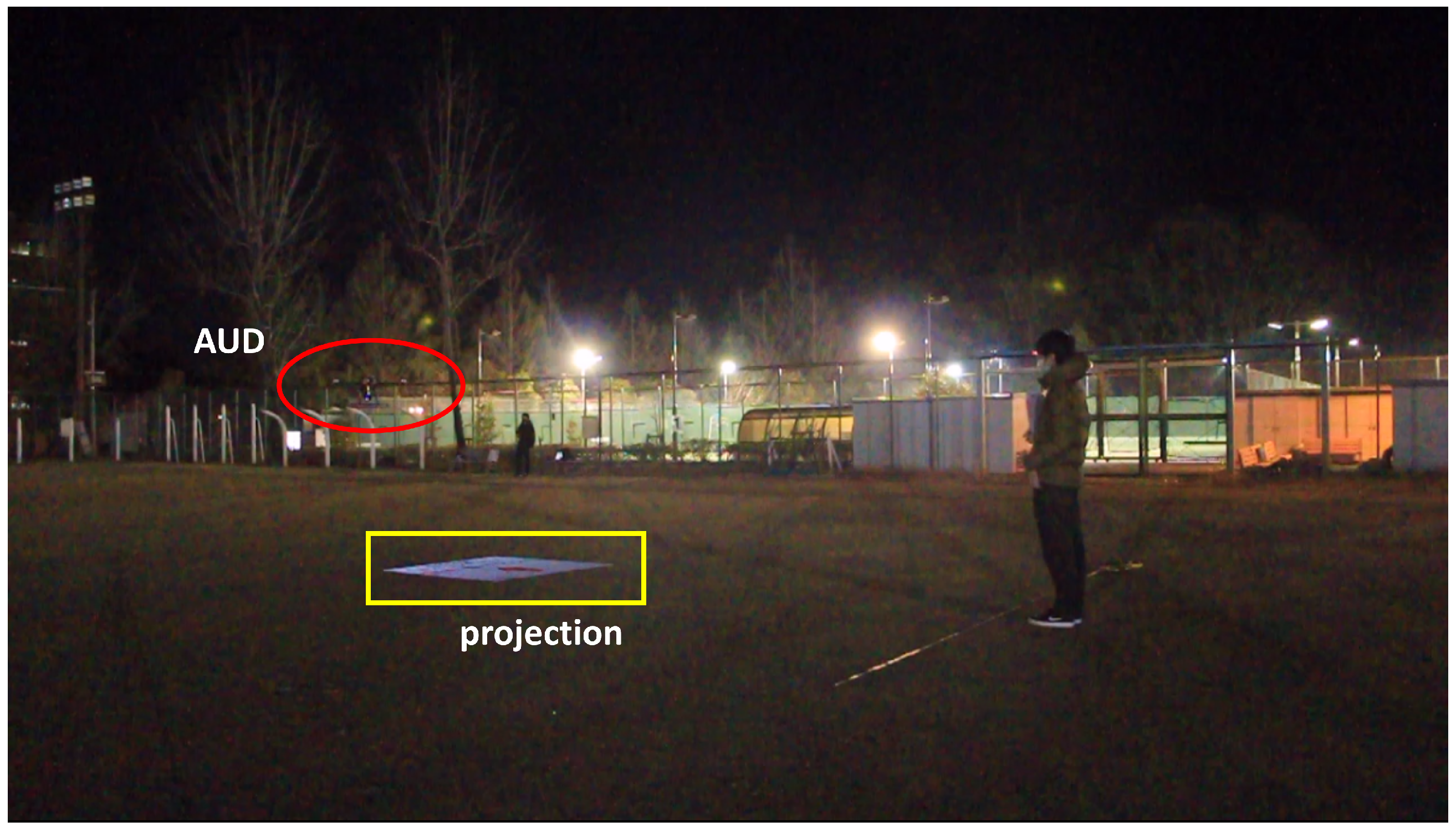

4.2.5. Projecting Information Using a Projector

5. Experiment

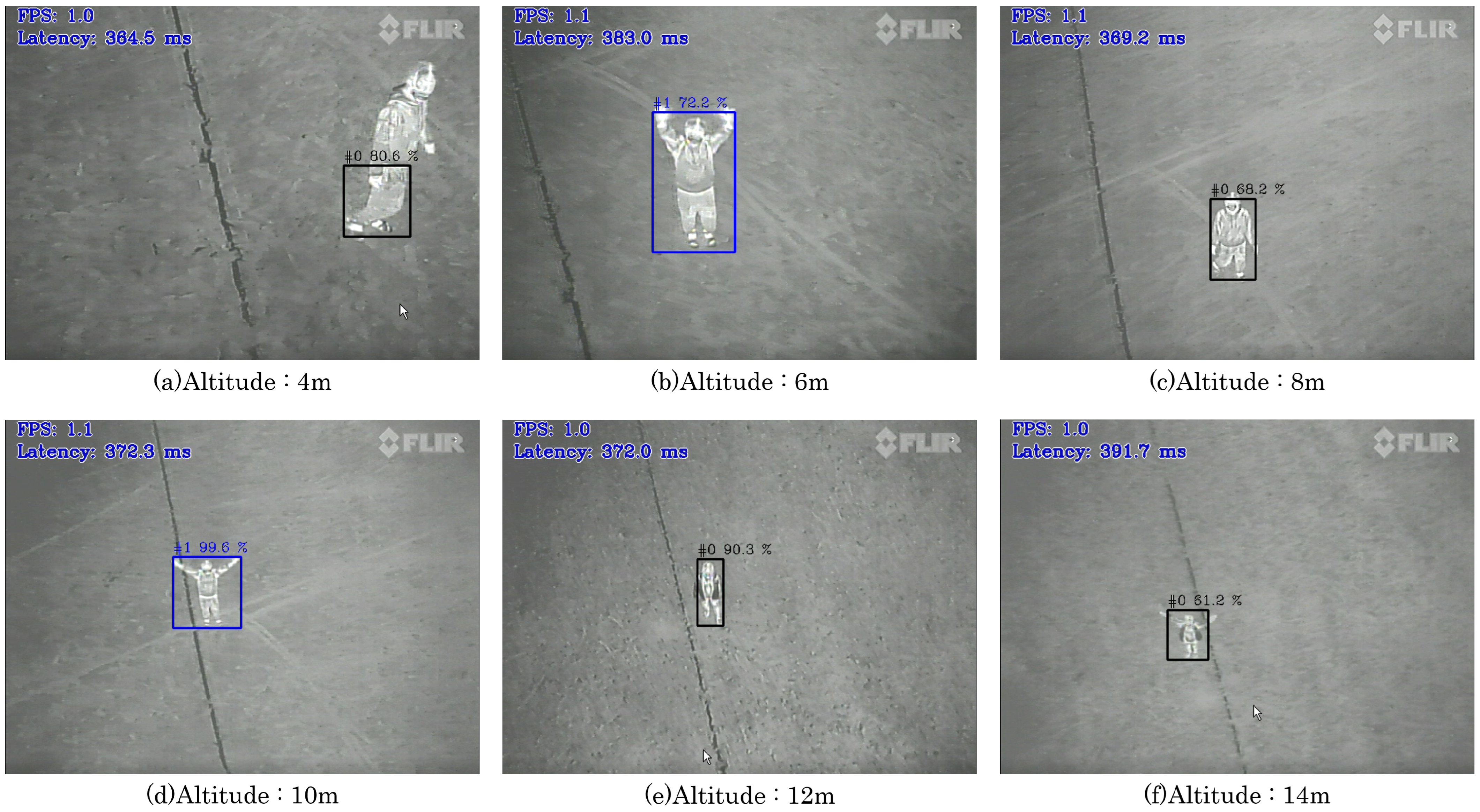

5.1. Experimental Details

5.1.1. Person Monitoring Scenario

5.1.2. Information Projection Scenario for Waving Person

5.1.3. Mixed Scenario of Monitoring and Information Projection

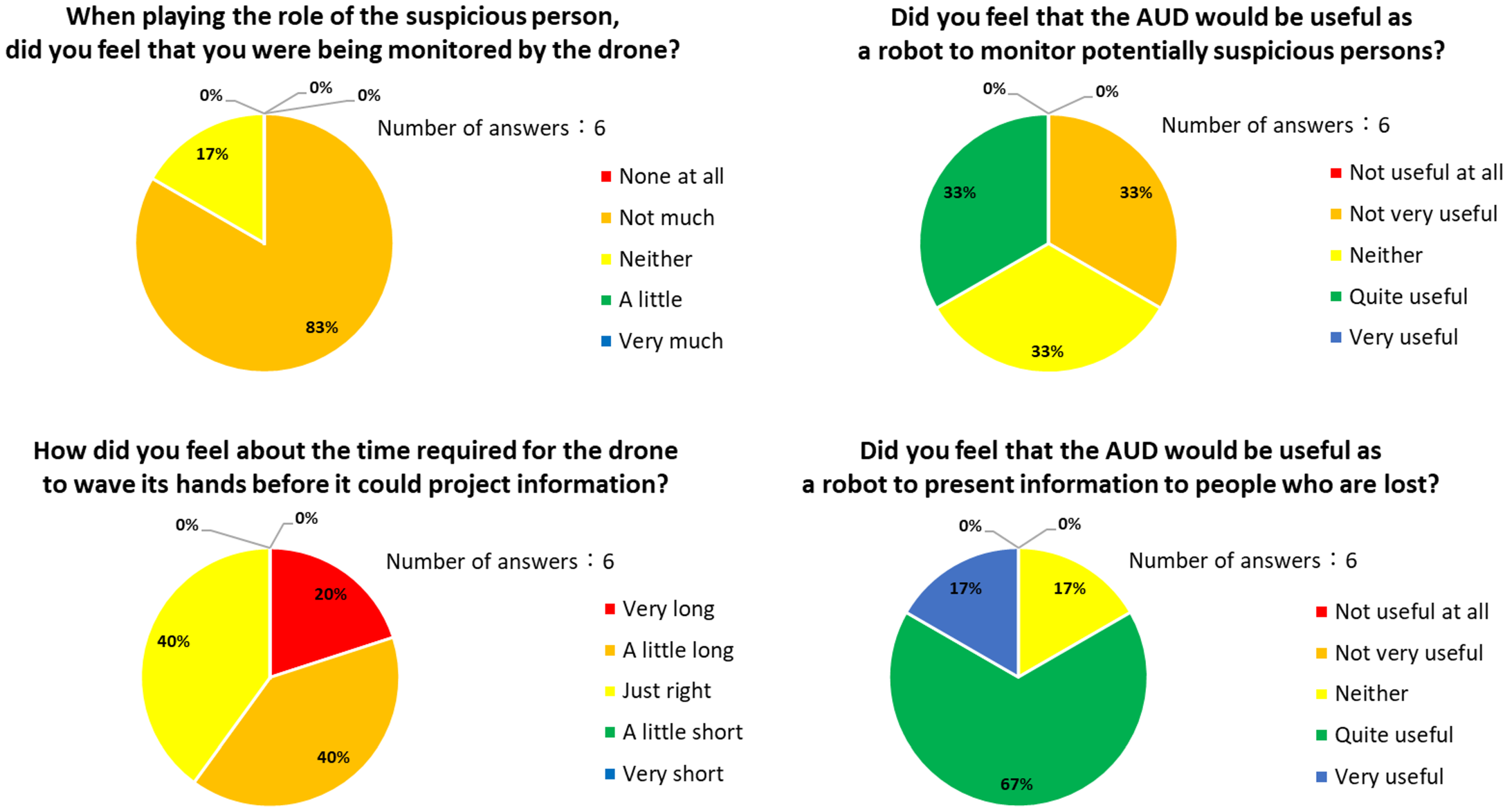

5.2. Evaluation Method

- Evaluation of the accuracy of decisions in action detection.

- Evaluation of monitoring and information projection through questionnaires.

6. Results

7. Discussion

7.1. Discussion of Monitoring for Possible Suspicious Persons

7.2. Discussion of Information Projection to a Waving Person

7.3. Discussion of All Scenarios

8. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Item | Specification |
|---|---|
| Size (W, D, H) | 750, 750, 372 mm |
| Weight | 3650 g |
| The weight of a load (cameras, projectors, etc.) | 650 g |
| Payload (possible loadings other than main frame and battery) | 1500 g |
| Flight time | 20 min |
| Battery capacity | 8000 mAh |
| Voltage | 22.2 V |

| Altitude (m) | TP | FP | FN | TN | Precision | Recall | F-Measure | Sound Level (dB) |
|---|---|---|---|---|---|---|---|---|
| 4 | 0 | 6 | 11 | 19 | 0 | 0 | 0 | 73.1 |
| 6 | 20 | 9 | 3 | 4 | 0.69 | 0.87 | 0.770 | 72.2 |
| 8 | 24 | 8 | 4 | 6 | 0.75 | 0.86 | 0.801 | 70.6 |
| 10 | 39 | 8 | 1 | 5 | 0.83 | 0.98 | 0.899 | 68.2 |
| 12 | 30 | 7 | 3 | 3 | 0.81 | 0.91 | 0.857 | 66.3 |
| 14 | 22 | 8 | 7 | 2 | 0.73 | 0.76 | 0.745 | 66.4 |
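The precision, recall, and F-measure columns follow from the TP, FP, and FN counts by the standard definitions. The sketch below recomputes them from the counts in the table; the function name `detection_metrics` and the choice to return 0 when a denominator is zero (as in the 4 m row) are assumptions for illustration. Because it works from unrounded values, the third decimal of the F-measure can differ slightly from the table, which appears to have been computed from the rounded precision and recall.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (precision, recall, F-measure) from confusion counts.

    A zero denominator yields 0.0 (an assumed convention).
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f_measure = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f_measure


# (TP, FP, FN) per altitude in metres, copied from the table above.
counts = {
    4: (0, 6, 11),
    6: (20, 9, 3),
    8: (24, 8, 4),
    10: (39, 8, 1),
    12: (30, 7, 3),
    14: (22, 8, 7),
}

metrics = {alt: detection_metrics(*c) for alt, c in counts.items()}

# Altitude with the highest F-measure among the tested values.
best_altitude = max(metrics, key=lambda alt: metrics[alt][2])

for alt, (p, r, f) in metrics.items():
    print(f"{alt:>2} m  precision={p:.2f}  recall={r:.2f}  F={f:.3f}")
```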

| No. | Question Content |
|---|---|
| I | When playing the role of the suspicious person, did you feel that you were being monitored by the drone? |
| II | Did you feel that the AUD would be useful as a robot to monitor potentially suspicious persons? |
| III | How did you feel about the length of time you had to wave your hand before the drone could project information? (Answered only by subjects whose waving behavior was successfully detected while playing the role of a lost person.) |
| IV | Did you feel that the AUD would be useful as a robot to present information to people who are lost? |

| No. | Options |
|---|---|
| I | 1. None at all 2. Not much 3. Neither 4. A little 5. Very much |
| II | 1. Not useful at all 2. Not very useful 3. Neither 4. Quite useful 5. Very useful |
| III | 1. Very long 2. A little long 3. Just right 4. A little short 5. Very short |
| IV | 1. Not useful at all 2. Not very useful 3. Neither 4. Quite useful 5. Very useful |

| Scenario | Determining Potential Suspicious Persons: Success | Determining Potential Suspicious Persons: Failure | Determining Potential Suspicious Persons: Success Rate | Determining Who Lost Their Way: Success | Determining Who Lost Their Way: Failure | Determining Who Lost Their Way: Success Rate |
|---|---|---|---|---|---|---|
| Monitoring scenario 1 | 2 | 1 | 66.7% (2/3) | - | - | - |
| Monitoring scenario 2 | 2 | 2 | 50.0% (2/4) | - | - | - |
| Projection scenario 1 | - | - | - | 3 | 0 | 100% (3/3) |
| Projection scenario 2 | - | - | - | 3 | 1 | 75.0% (3/4) |
| Mixed scenario 1 | 2 | 0 | 100% (2/2) | 2 | 0 | 100% (2/2) |
| Mixed scenario 2 | 1 | 1 | 50.0% (1/2) | 1 | 1 | 50.0% (1/2) |

| Decision | Success | Failure | Success Rate |
|---|---|---|---|
| Determining potential suspicious persons | 7 | 4 | 63.6% (7/11) |
| Determining who lost their way | 9 | 2 | 81.8% (9/11) |
| Sum of decisions on possible suspicious persons and lost persons | 16 | 6 | 72.7% (16/22) |
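The totals in this summary can be reproduced by summing the per-scenario success and failure counts. The sketch below does that arithmetic; the dictionary layout and the names `per_scenario`, `totals`, and `success_rate` are illustrative assumptions, while the counts themselves are copied from the per-scenario table.

```python
# (success, failure) counts per scenario, copied from the per-scenario table.
# A missing key means that decision type was not part of the scenario.
per_scenario = {
    "Monitoring scenario 1": {"suspicious": (2, 1)},
    "Monitoring scenario 2": {"suspicious": (2, 2)},
    "Projection scenario 1": {"lost": (3, 0)},
    "Projection scenario 2": {"lost": (3, 1)},
    "Mixed scenario 1": {"suspicious": (2, 0), "lost": (2, 0)},
    "Mixed scenario 2": {"suspicious": (1, 1), "lost": (1, 1)},
}


def success_rate(success: int, failure: int) -> float:
    """Success rate in percent, rounded to one decimal place."""
    return round(100 * success / (success + failure), 1)


# Sum successes and failures for each decision type across all scenarios.
totals = {"suspicious": [0, 0], "lost": [0, 0]}
for decisions in per_scenario.values():
    for kind, (success, failure) in decisions.items():
        totals[kind][0] += success
        totals[kind][1] += failure

# Combined count over both decision types.
overall = [
    totals["suspicious"][0] + totals["lost"][0],
    totals["suspicious"][1] + totals["lost"][1],
]

rates = {
    "suspicious": success_rate(*totals["suspicious"]),
    "lost": success_rate(*totals["lost"]),
    "overall": success_rate(*overall),
}
```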


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kakiuchi, R.; Tran, D.T.; Lee, J.-H. Evaluation of Human Behaviour Detection and Interaction with Information Projection for Drone-Based Night-Time Security. Drones 2023, 7, 307. https://doi.org/10.3390/drones7050307
