Repeated UAV Observations and Digital Modeling for Surface Change Detection in Ring Structure Crater Margin in Plateau

Abstract

1. Introduction

2. Research Method

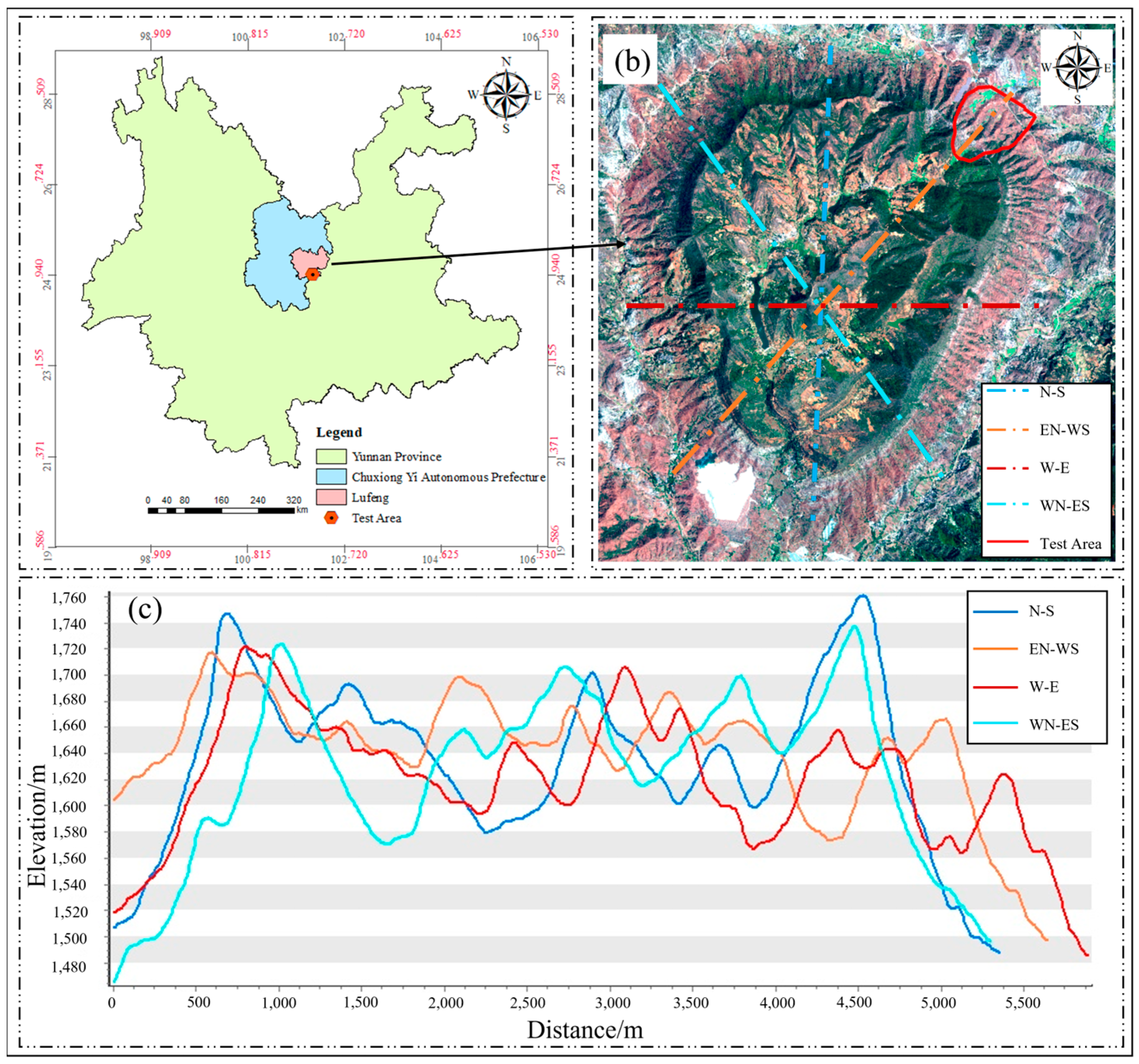

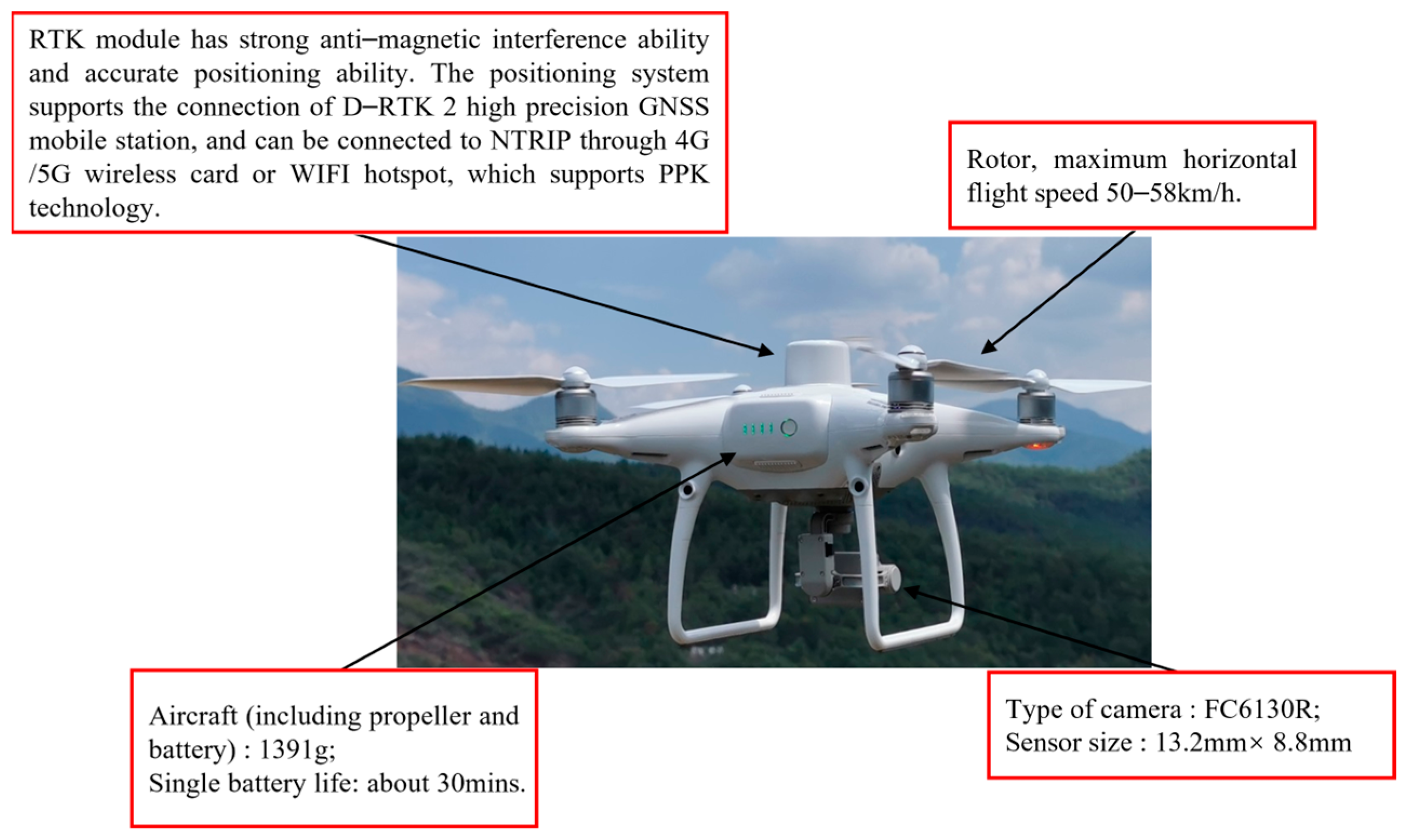

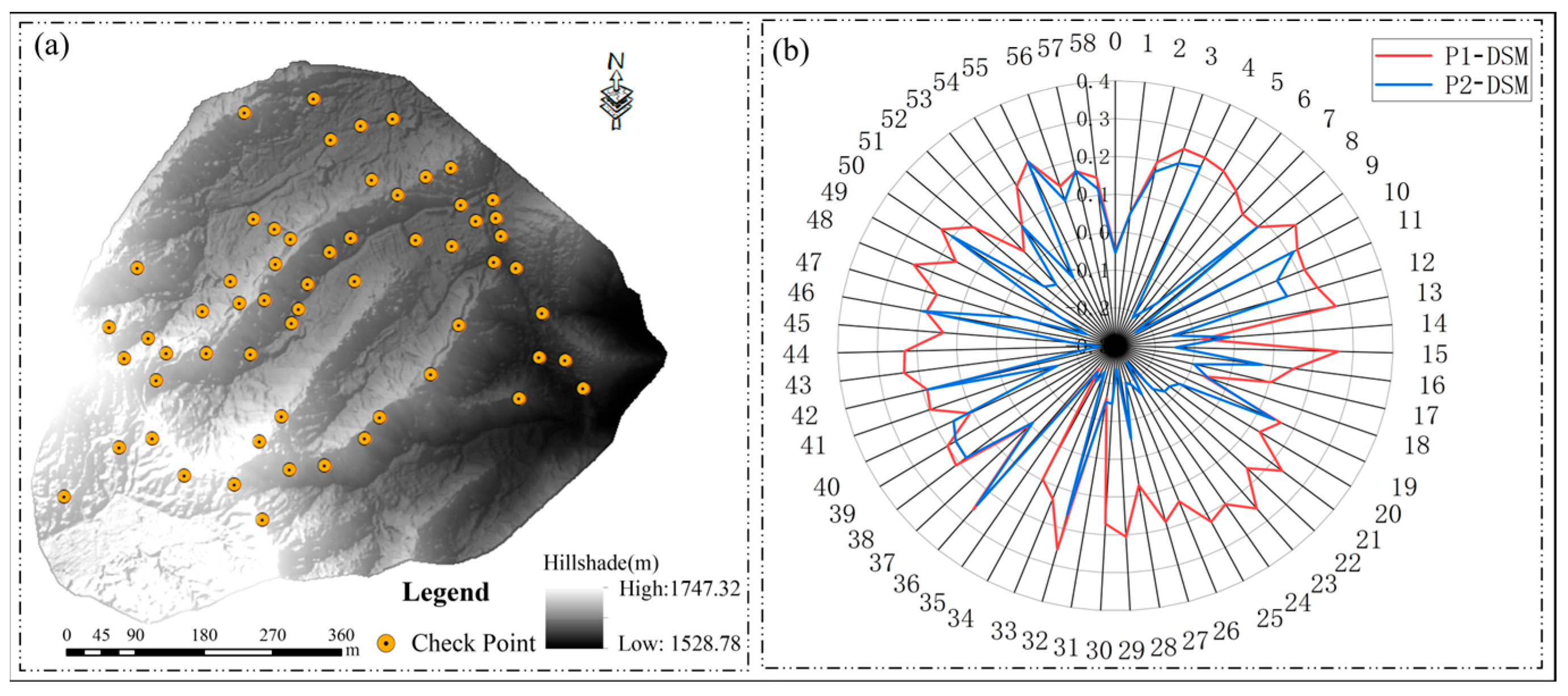

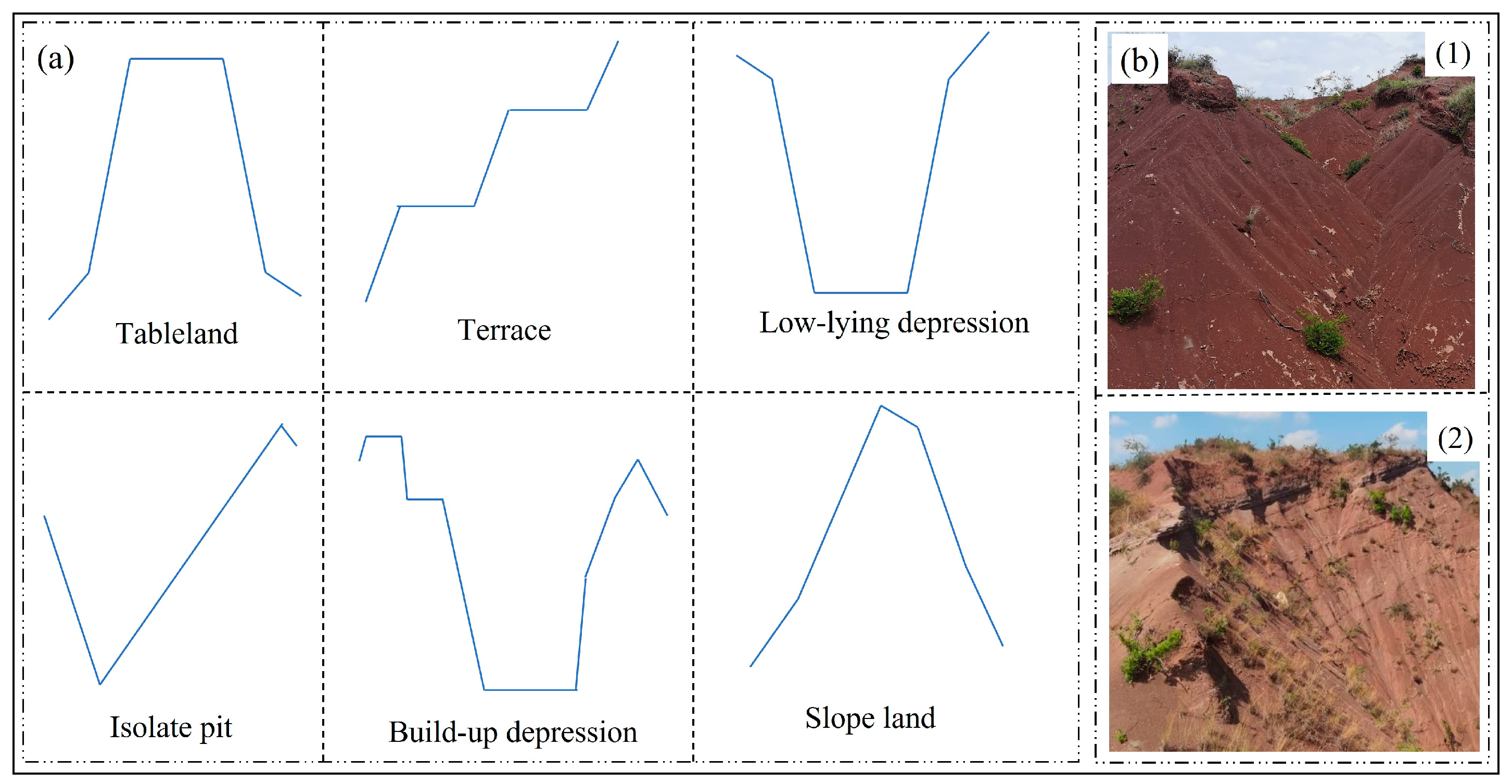

2.1. Test Area and Data Collection

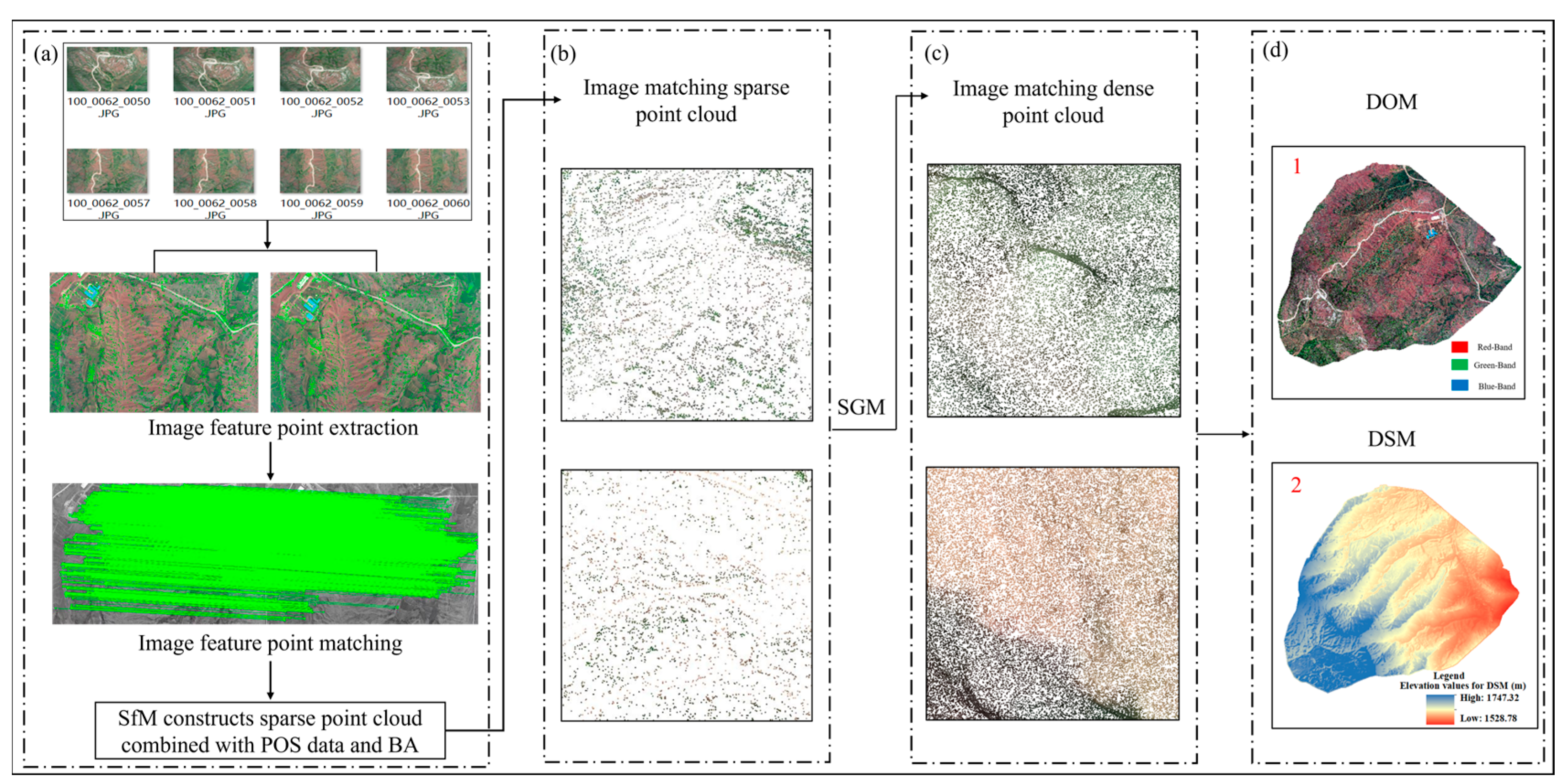

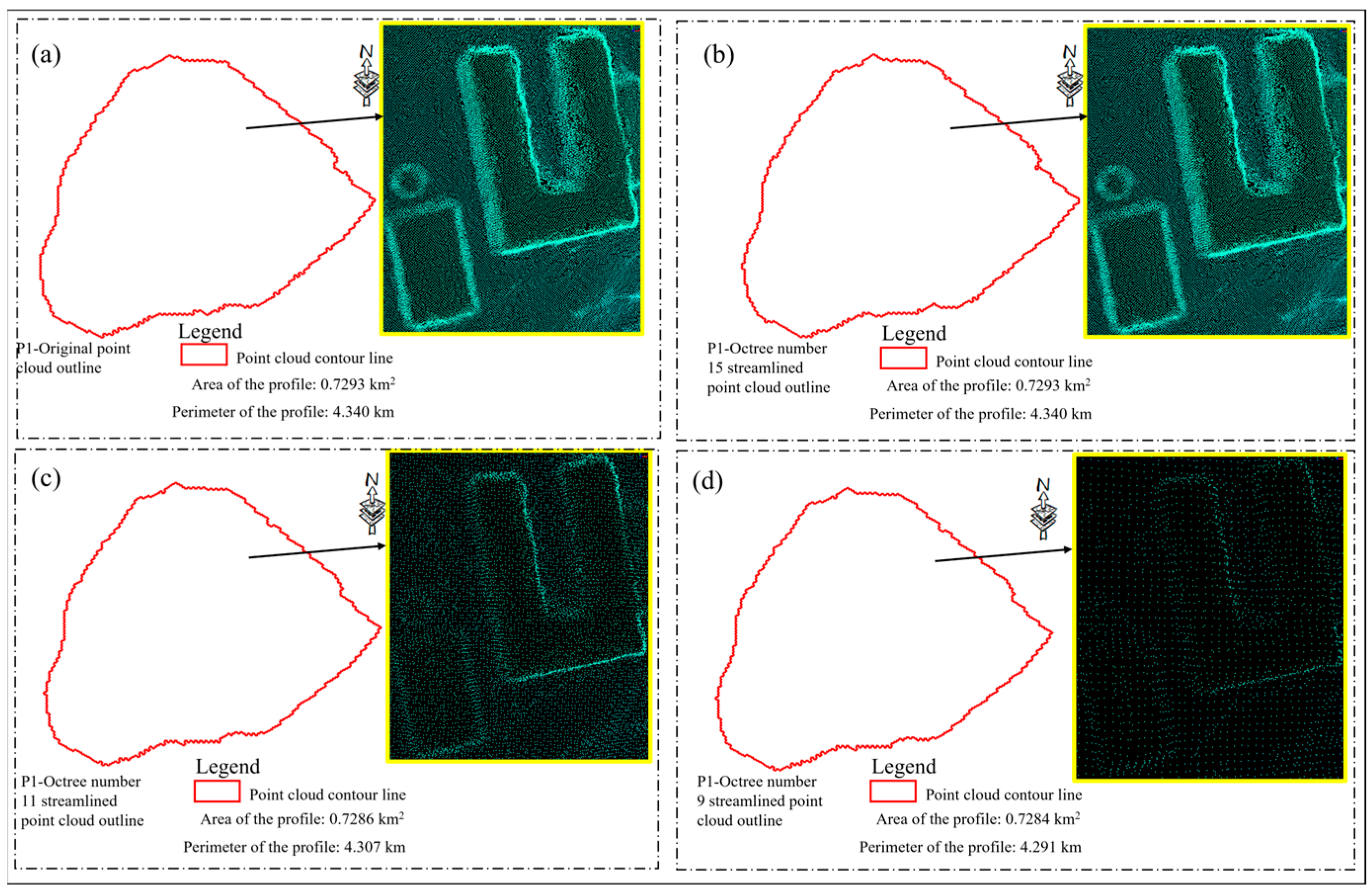

2.2. UAV Digital Model Building Techniques

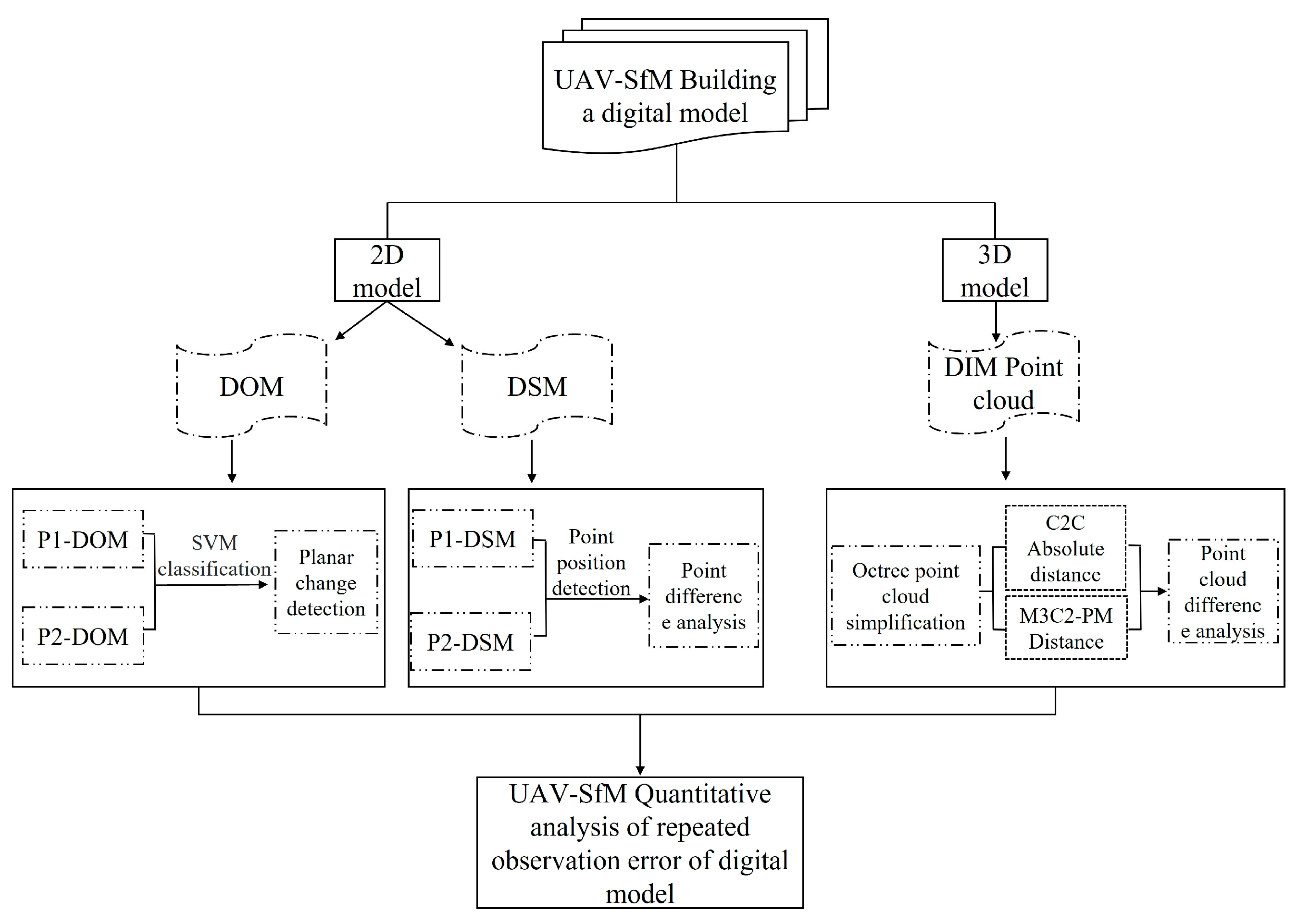

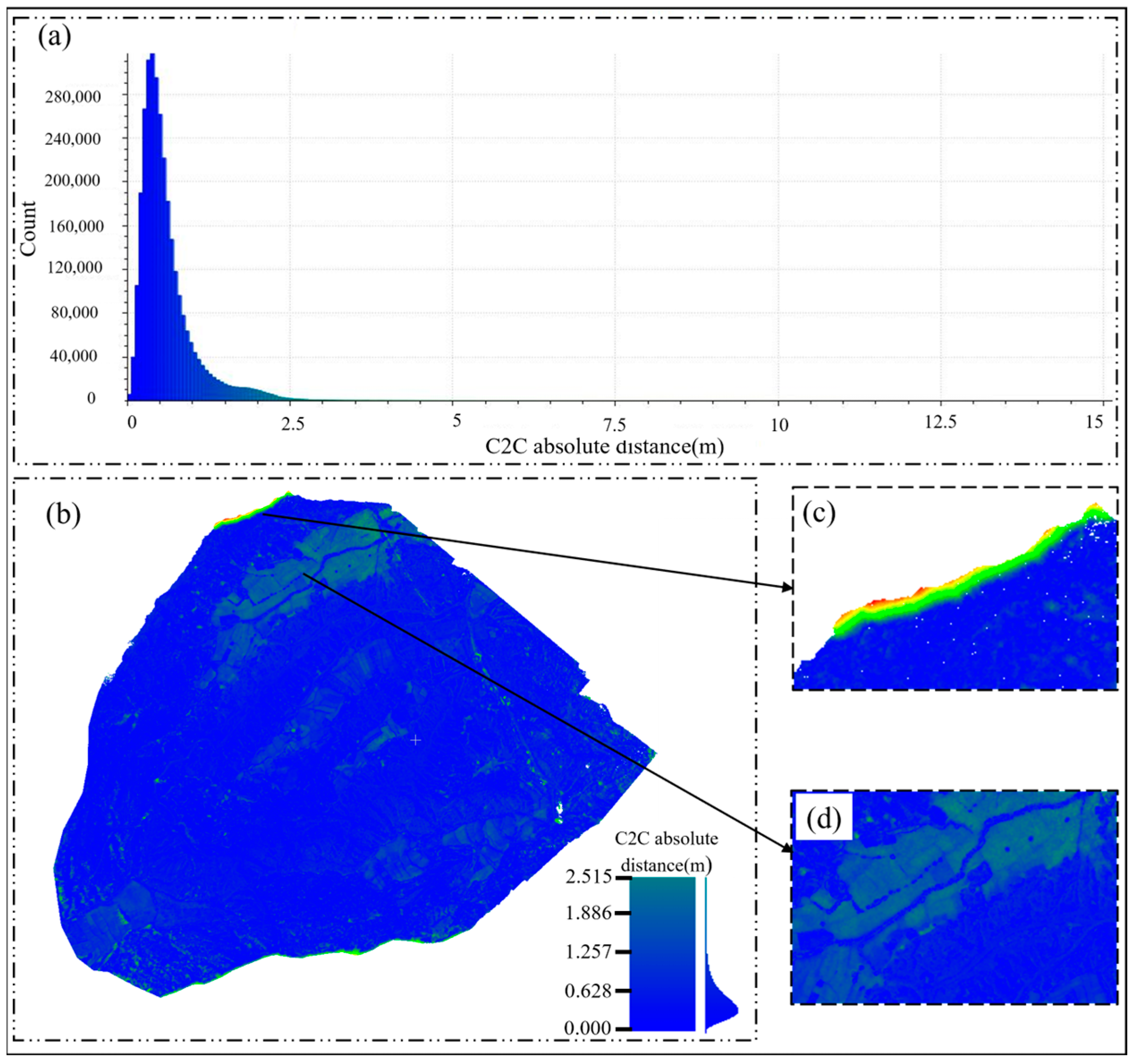

2.3. UAV Technical Framework for Quantification of Repeated Observation Errors

3. Test Results and Analysis

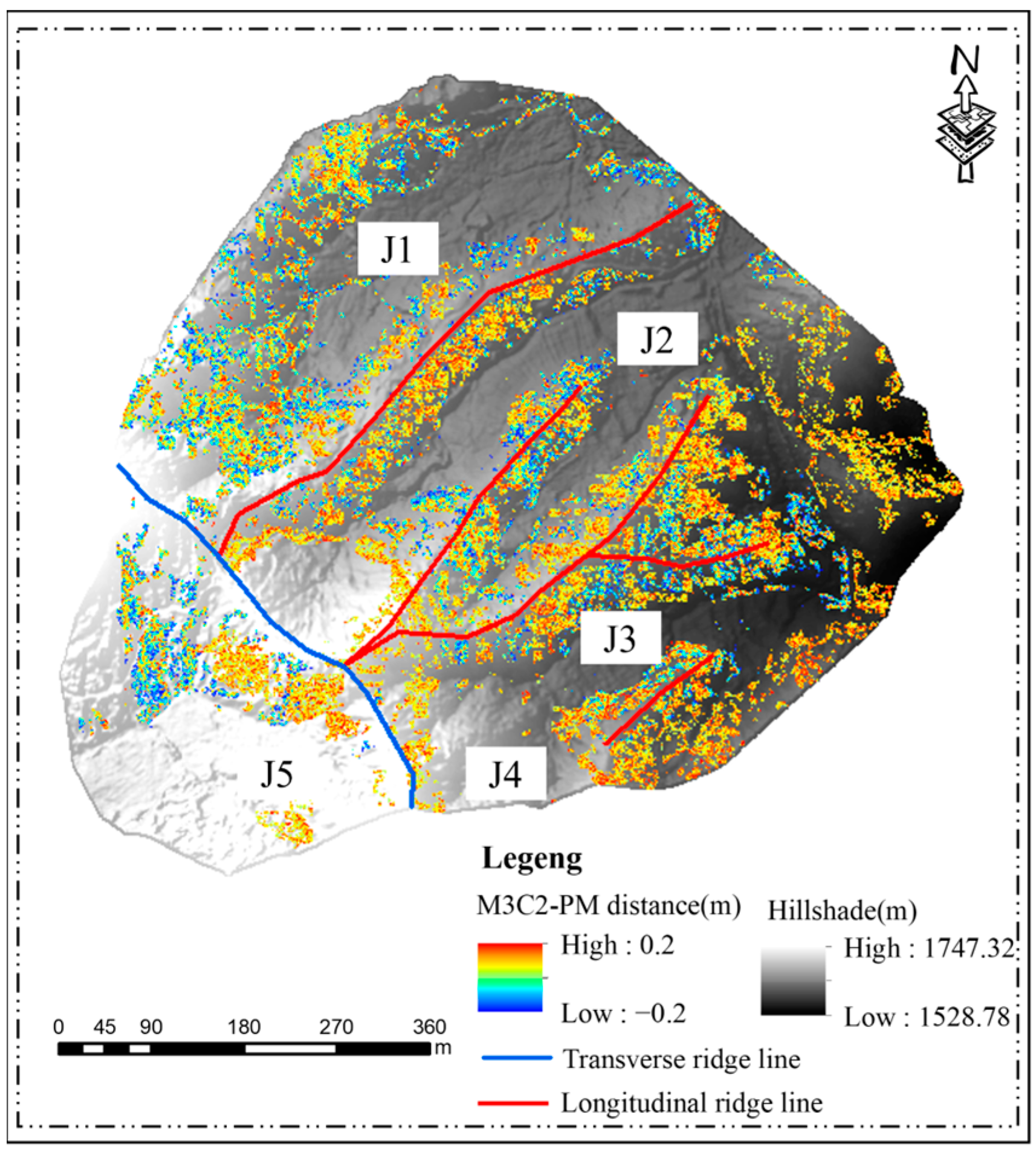

3.1. Two Phases of DSM Point Surface Change Detection Results and Analysis

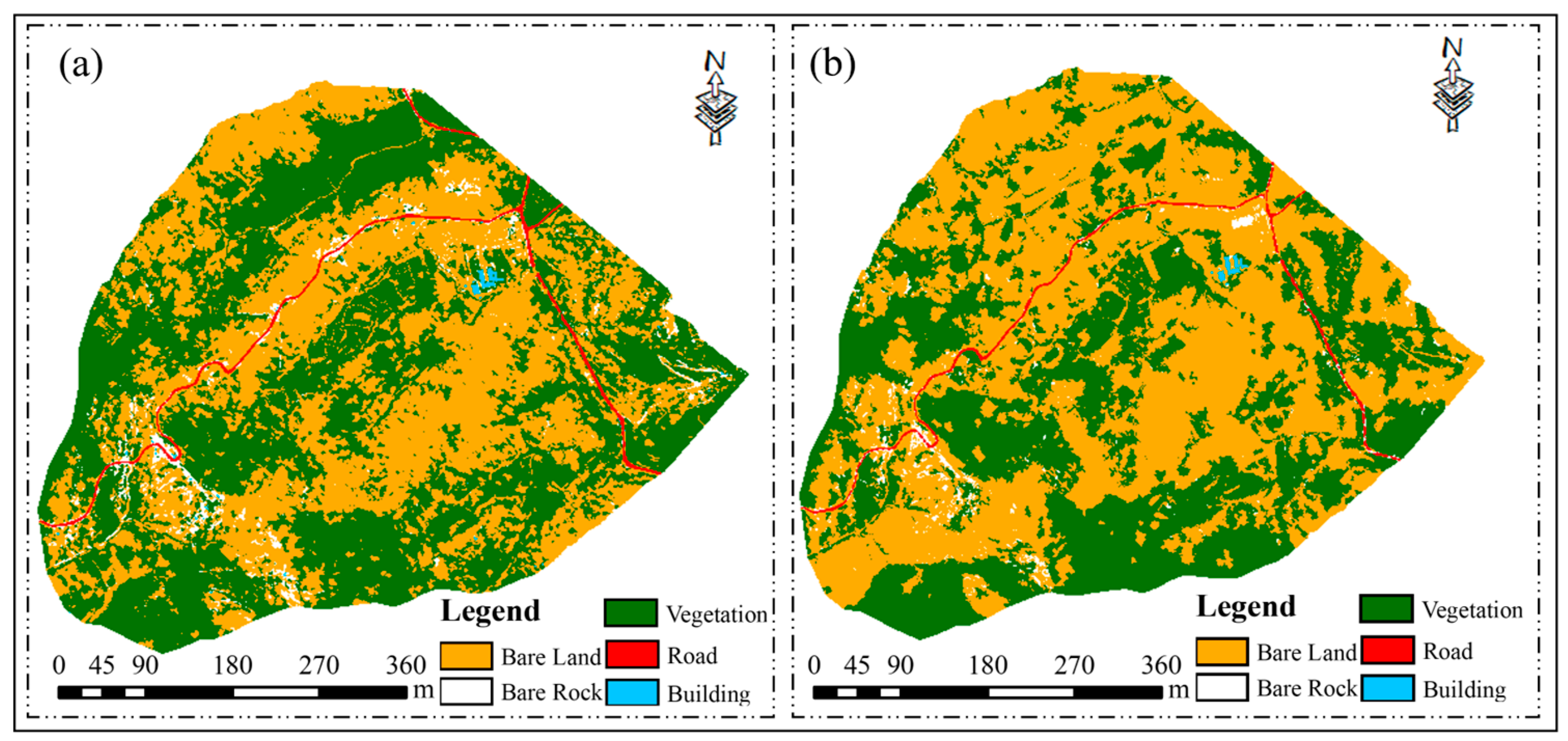
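Section 3.1 compares DSMs from the two observation phases. One common way to do such a comparison is a DSM of difference (DoD). The later DSM is subtracted from the earlier one cell by cell, differences within a level of detection (LoD) are discarded, and the raised and lowered areas and volumes are summed. The sketch below shows that technique under stated assumptions and is not the authors' procedure. It assumes both DSMs are already co-registered on the same grid. The function name, the LoD value and the grid values are all hypothetical; in practice the LoD would come from the repeat-observation error analysis.

```python
from typing import Dict, List, Optional

# A DSM as rows of elevations (m); None marks a no-data cell.
Grid = List[List[Optional[float]]]


def dsm_difference(dsm_t1: Grid, dsm_t2: Grid, lod: float, cell_size: float) -> Dict[str, float]:
    """Hypothetical DoD summary: t2 minus t1, ignoring |dz| <= lod."""
    if len(dsm_t1) != len(dsm_t2) or any(len(a) != len(b) for a, b in zip(dsm_t1, dsm_t2)):
        raise ValueError("both DSMs must share the same grid")
    cell_area = cell_size * cell_size
    stats = {"raised_area": 0.0, "lowered_area": 0.0, "volume_gain": 0.0, "volume_loss": 0.0}
    for row1, row2 in zip(dsm_t1, dsm_t2):
        for z1, z2 in zip(row1, row2):
            if z1 is None or z2 is None:
                continue  # no data in at least one phase
            dz = z2 - z1
            if abs(dz) <= lod:
                continue  # inside the noise band: treated as no change
            if dz > 0:
                stats["raised_area"] += cell_area
                stats["volume_gain"] += dz * cell_area
            else:
                stats["lowered_area"] += cell_area
                stats["volume_loss"] += -dz * cell_area
    return stats


if __name__ == "__main__":
    phase1 = [[10.0, 10.0], [10.0, None]]
    phase2 = [[10.02, 10.5], [9.7, 10.0]]
    print(dsm_difference(phase1, phase2, lod=0.05, cell_size=0.5))
```

In this toy grid, the 0.02 m change falls below the assumed 0.05 m LoD and is ignored. The no-data cell is skipped. Only the +0.5 m and −0.3 m cells count as change.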

3.2. Two Phases of DOM Surface Change Detection Results and Analysis

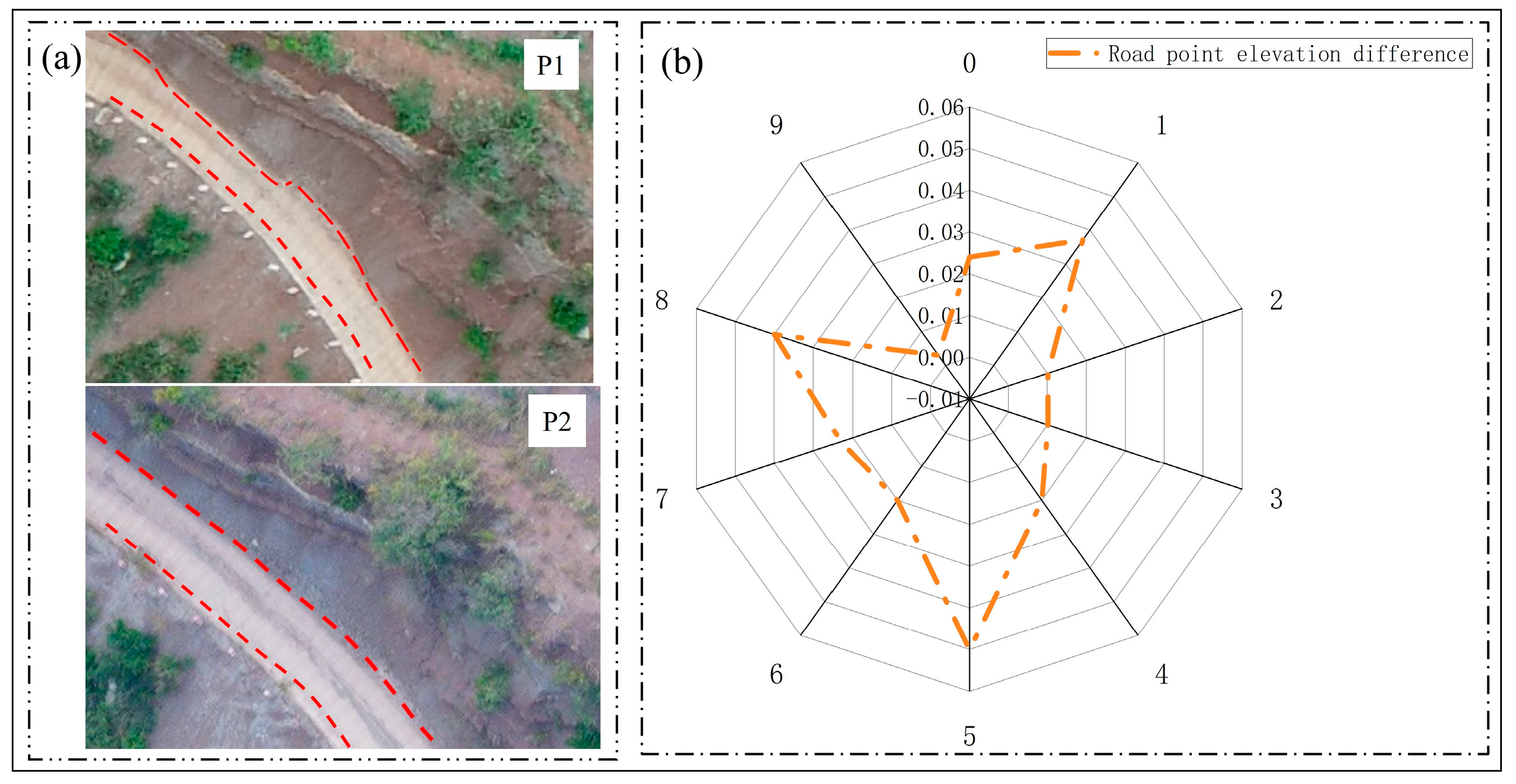

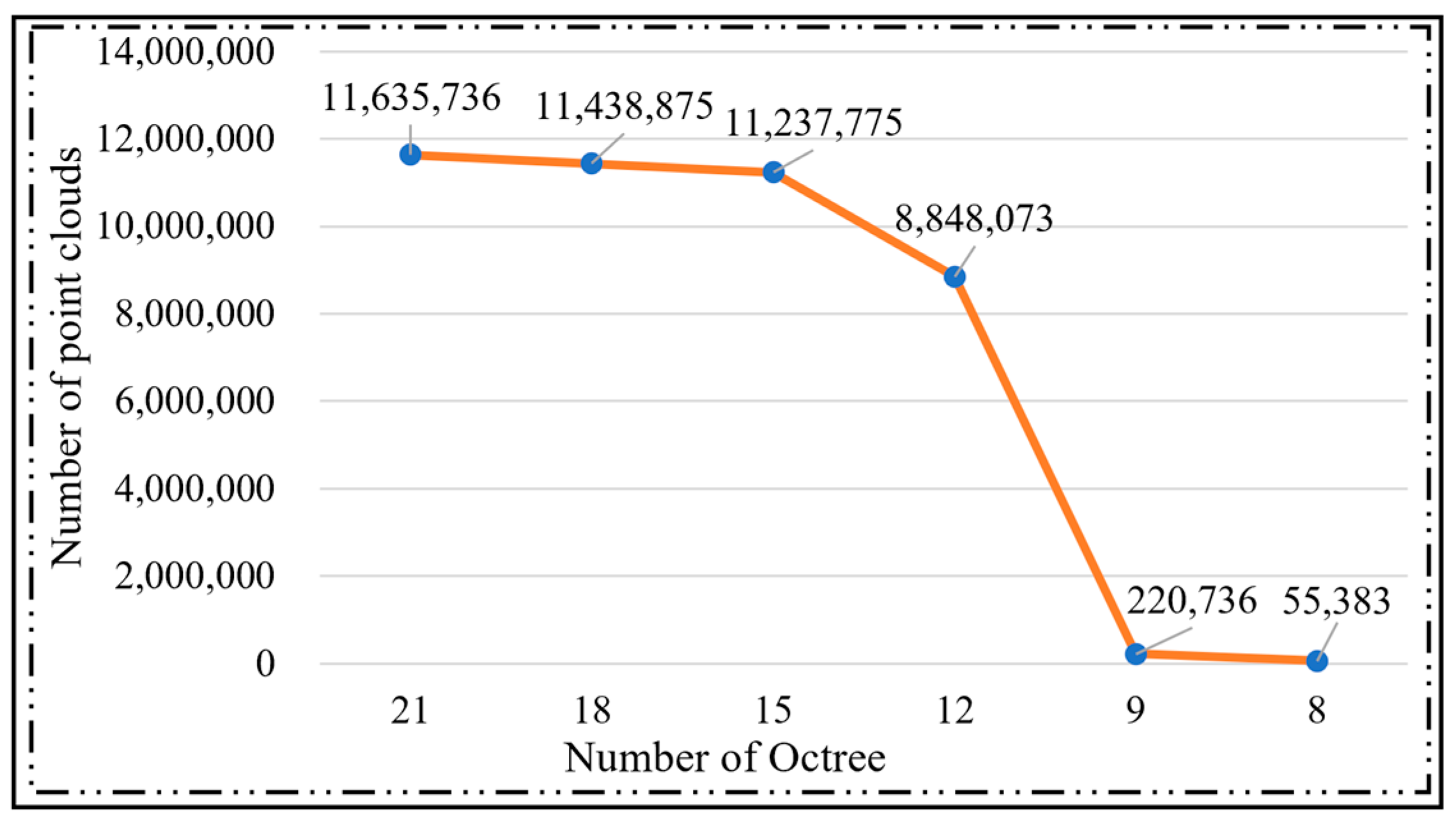

3.3. Two Phases of DIM Point Cloud Bulk Surface Change Detection Results and Analysis

4. Results and Discussion

4.1. Results

4.2. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| UAV Platform Parameter | Value | Camera Parameter | Value |
|---|---|---|---|
| Type | DJI Phantom 4 RTK | Type | FC6310R |
| Maximum area for a single flight | 0.7 km² | Sensor size | 13.2 mm × 8.8 mm |
| Hover time | 60 min | Image size | 5472 × 3648 pixels |
| Highest working altitude | 1850 m | Pixel size | 2.41 μm |
| Maximum flight speed | 12 m/s | Focal length | 8.8 mm |
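The camera parameters above determine the ground sampling distance (GSD) for a given flying height H through similar triangles: GSD = pixel size × H / focal length. They are also internally consistent, since 5472 pixels × 2.41 μm ≈ 13.19 mm matches the 13.2 mm sensor width. The flying height used in the survey is not stated here, so the 100 m below is an assumption for illustration only. The function names are hypothetical.

```python
from typing import Tuple


def ground_sample_distance_cm(pixel_size_um: float, focal_length_mm: float, height_m: float) -> float:
    """GSD (cm/pixel) of a nadir frame over flat terrain."""
    pixel_size_m = pixel_size_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    # Similar triangles: pixel pitch / focal length = ground pixel / flying height.
    return pixel_size_m * height_m / focal_length_m * 100.0


def image_footprint_m(width_px: int, height_px: int, gsd_cm: float) -> Tuple[float, float]:
    """Ground coverage (m) of a single image at the given GSD."""
    return width_px * gsd_cm / 100.0, height_px * gsd_cm / 100.0


if __name__ == "__main__":
    # FC6310R values from the table; the 100 m flying height is an assumption.
    gsd = ground_sample_distance_cm(pixel_size_um=2.41, focal_length_mm=8.8, height_m=100.0)
    width, height = image_footprint_m(5472, 3648, gsd)
    print(f"GSD = {gsd:.2f} cm/pixel, footprint = {width:.1f} m x {height:.1f} m")
```

At the assumed 100 m height, this gives about 2.74 cm per pixel and a single-image footprint of roughly 150 m × 100 m. GSD scales linearly with H.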

| Point Cloud | Octree Level | Number of Points | Max Positive Distance (mm) | Max Negative Distance (mm) | Mean Positive Deviation (mm) | Mean Negative Deviation (mm) | Standard Deviation (mm) |
|---|---|---|---|---|---|---|---|
| P1 | 14 | 11,429,372 | 6.7108 | −4.2862 | 0.0211 | −0.0267 | 0.0349 |
| P1 | 13 | 11,236,415 | 8.2580 | −6.4234 | 0.0322 | −0.0359 | 0.0483 |
| P1 | 12 | 8,848,073 | 12.1168 | −7.5916 | 0.0254 | −0.0302 | 0.0679 |
| P1 | 11 | 3,152,781 | 6.7203 | −11.2484 | 0.0386 | −0.0394 | 0.0797 |
| P1 | 10 | 858,742 | 29.2274 | −9.4400 | 0.0906 | −0.0852 | 0.1684 |
| P1 | 9 | 220,736 | 5.9734 | −10.6810 | 0.1970 | −0.1927 | 0.2792 |
| P2 | 14 | 11,346,632 | 0.2214 | −0.2111 | 0.0213 | −0.0226 | 0.0344 |
| P2 | 13 | 11,183,022 | 0.1617 | −0.1653 | 0.0240 | −0.0289 | 0.0372 |
| P2 | 12 | 8,117,006 | 3.1165 | −1.3441 | 0.0295 | −0.0332 | 0.0433 |
| P2 | 11 | 2,855,882 | 0.4111 | −4.4794 | 0.0431 | −0.0465 | 0.0660 |
| P2 | 10 | 856,353 | 1.0998 | −6.3910 | 0.1018 | −0.1052 | 0.1429 |
| P2 | 9 | 224,668 | 7.5991 | −9.5990 | 3.3294 | −2.3203 | 0.4573 |
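The table above lists, for each octree level, how many points survive simplification. The count falls from about 11.4 million at level 14 to about 0.22 million at level 9. The table also lists deviation statistics that generally grow as the octree gets coarser. The sketch below reproduces that kind of workflow on a toy cloud. The extract does not state the authors' exact tooling, so several choices here are assumptions. Octree-level subsampling keeps the point nearest each cell centre. The signed distance is a hypothetical planimetric nearest-neighbour z offset, standing in for whatever cloud-to-cloud or cloud-to-mesh distance was actually used. All function names are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def octree_subsample(points: Sequence[Point], level: int) -> List[Point]:
    """Keep one point (nearest the cell centre) per occupied octree cell at `level`."""
    xs, ys, zs = zip(*points)
    origin = (min(xs), min(ys), min(zs))
    # Cubic root cell enclosing the cloud; each level halves the cell edge.
    edge = max(max(xs) - origin[0], max(ys) - origin[1], max(zs) - origin[2]) or 1.0
    cell = edge / 2 ** level
    last = 2 ** level - 1
    best: Dict[Tuple[int, ...], Tuple[float, Point]] = {}
    for p in points:
        # Clamp so points on the far boundary fall into the last cell.
        idx = tuple(min(int((p[i] - origin[i]) / cell), last) for i in range(3))
        centre = [origin[i] + (idx[i] + 0.5) * cell for i in range(3)]
        d2 = sum((p[i] - centre[i]) ** 2 for i in range(3))
        if idx not in best or d2 < best[idx][0]:
            best[idx] = (d2, p)
    return [p for _, p in best.values()]


def vertical_offsets(original: Sequence[Point], simplified: Sequence[Point]) -> List[float]:
    """Hypothetical signed distance: z offset from the planimetrically nearest kept point.

    Brute force O(n*m), suitable only for small illustrative clouds.
    """
    offsets = []
    for x, y, z in original:
        q = min(simplified, key=lambda s: (s[0] - x) ** 2 + (s[1] - y) ** 2)
        offsets.append(z - q[2])
    return offsets


def deviation_stats(distances: Sequence[float]) -> Dict[str, float]:
    """Statistics mirroring the table's columns."""
    pos = [d for d in distances if d > 0]
    neg = [d for d in distances if d < 0]
    mean = sum(distances) / len(distances)
    return {
        "max_positive": max(pos, default=0.0),
        "max_negative": min(neg, default=0.0),
        "mean_positive": sum(pos) / len(pos) if pos else 0.0,
        "mean_negative": sum(neg) / len(neg) if neg else 0.0,
        # Population standard deviation of all signed distances.
        "std": math.sqrt(sum((d - mean) ** 2 for d in distances) / len(distances)),
    }


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (0.1, 0.1, 0.1), (1.0, 1.0, 1.0), (0.9, 0.9, 0.9)]
    for level in (1, 0):
        kept = octree_subsample(cloud, level)
        print(level, len(kept), deviation_stats(vertical_offsets(cloud, kept)))
```

Lowering the level enlarges the cells, so fewer points survive and the offsets between the original and simplified clouds grow. That is the same trade-off the table shows between levels 14 and 9.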

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, W.; Gan, S.; Yuan, X.; Gao, S.; Bi, R.; Chen, C.; He, W.; Hu, L. Repeated UAV Observations and Digital Modeling for Surface Change Detection in Ring Structure Crater Margin in Plateau. Drones 2023, 7, 298. https://doi.org/10.3390/drones7050298


