1. Introduction

In recent years, the rapid development of drone technology has made people’s daily lives more convenient. However, intrusive and unregulated UAV flights are increasing and pose a serious threat to public safety [1]. UAV targets are characterized by low flight altitudes, slow speeds, and small sizes [2], which makes their detection by traditional radar and radio methods difficult and costly [3,4]. Sound-based UAV detection is heavily disturbed by ambient noise, so its detection performance is poor [5]. Beyond these methods, deep learning methods based on two-dimensional image data are gradually being applied to UAV detection and have achieved good results [6,7]. Therefore, studying a new image-based object detection method for UAVs is a promising way to address the lack of suitable detection methods.

With the development of deep learning and advances in graphics computing hardware, image-based target detection has become a hotspot in the field of target detection [8]. These methods are increasingly used in engineering practice for facial recognition, pedestrian detection, and autonomous driving. Representative algorithms include the two-stage detectors R-CNN and Faster R-CNN [9] and the one-stage SSD (single-shot multi-box detector) and YOLO (you only look once) series [10]. The former are called two-stage algorithms because candidate target regions are generated in a separate stage before classification and localization. The latter are called one-stage algorithms because feature extraction, classification, and localization are carried out in a single pass. As the fourth version of the YOLO series, YOLOv4 is the first to use CSPDarkNet-53 as the backbone network to extract image features [11], PANet (path aggregation network) as the feature fusion structure, and SPP (spatial pyramid pooling) to enhance feature extraction [12], which greatly improves the accuracy and inference speed of one-stage detection.
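As a rough illustration of the SPP module mentioned above, the sketch below applies stride-1 max pooling with several kernel sizes to one feature map and concatenates the results with the input along the channel axis, so the spatial size is preserved while the channel count grows. The kernel sizes (5, 9, 13) follow the common YOLOv4 configuration; the NumPy implementation, the (C, H, W) layout, and the function names are our own assumptions for illustration, not code from this paper.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def max_pool_same(x, k):
    """Stride-1 k x k max pooling of a (C, H, W) map; padding k // 2 keeps H and W (k odd)."""
    p = k // 2
    padded = np.pad(x, ((0, 0), (p, p), (p, p)), mode="constant", constant_values=-np.inf)
    windows = sliding_window_view(padded, (k, k), axis=(1, 2))  # shape (C, H, W, k, k)
    return windows.max(axis=(-2, -1))


def spp(x, kernels=(5, 9, 13)):
    """Concatenate the input with its max-pooled versions along the channel axis."""
    return np.concatenate([x] + [max_pool_same(x, k) for k in kernels], axis=0)


x = np.random.rand(512, 13, 13).astype(np.float32)
print(spp(x).shape)  # (2048, 13, 13)
```

The order of concatenation is an arbitrary choice here; in YOLOv4 the concatenated map is then passed to further convolutions that mix the pooled channels.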

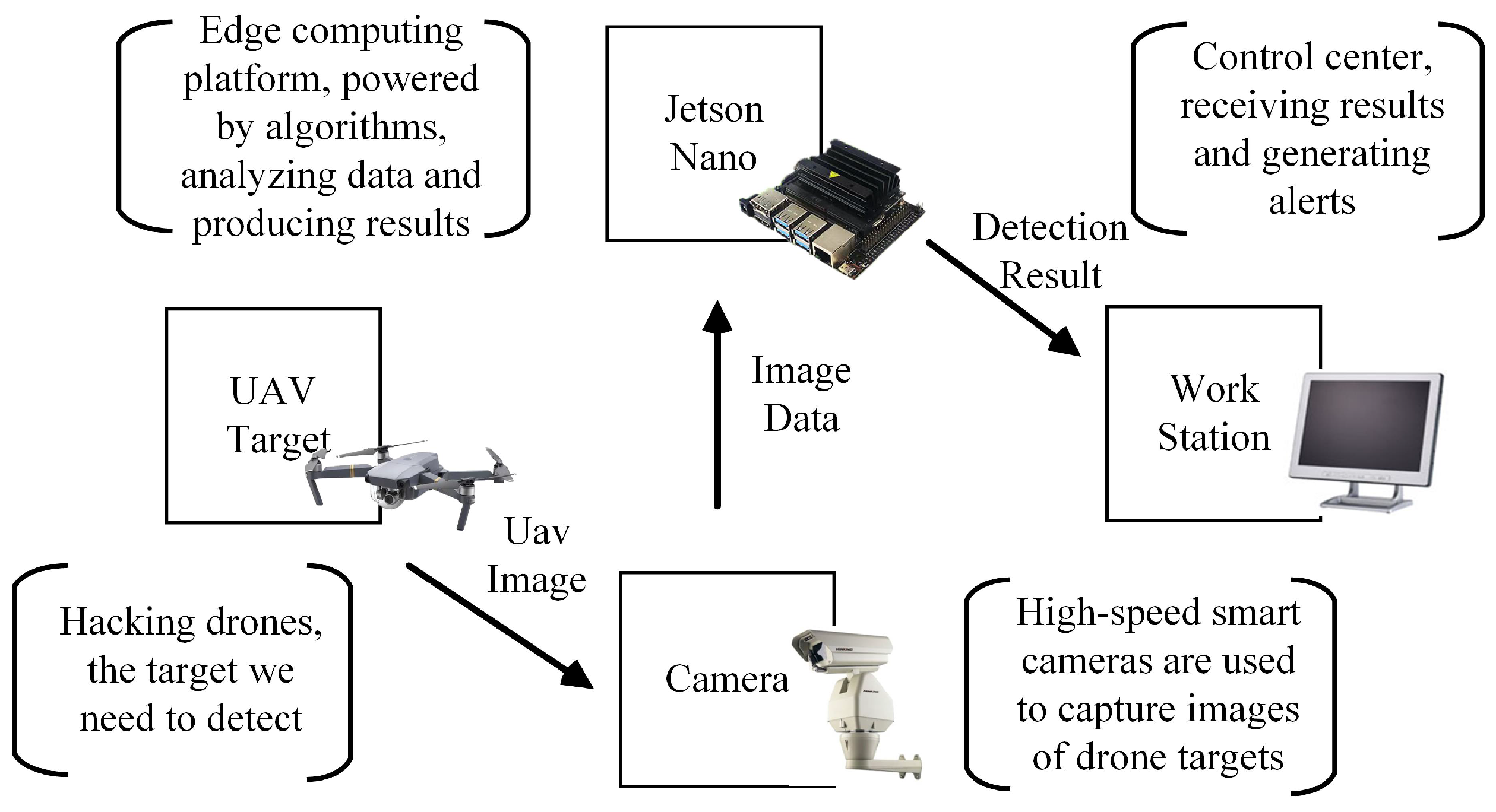

At present, object detection applications mostly follow one of two modes: cloud computing or edge computing. In cloud computing, the data collected at the edge are sent to a central processor for computation and decision-making. This mode suffers from high latency, network instability, and limited bandwidth, making it unsuitable for UAV detection tasks, which require fast responses and have high error costs. Edge computing, in contrast, addresses these problems well. Edge computing is a technology that provides cloud services and IT environment services for developers and service providers at the edge of the network [13]. By integrating detection algorithms, edge computing platforms can partially replace the data processing functions of cloud devices and servers and store or detect the target data directly. This edge-side processing not only reduces data transmission and communication time between devices but also saves network bandwidth and energy. Moreover, it frees target detection from dependence on large servers and GPU devices and lets users flexibly deploy edge computing devices to meet the needs of various detection tasks.

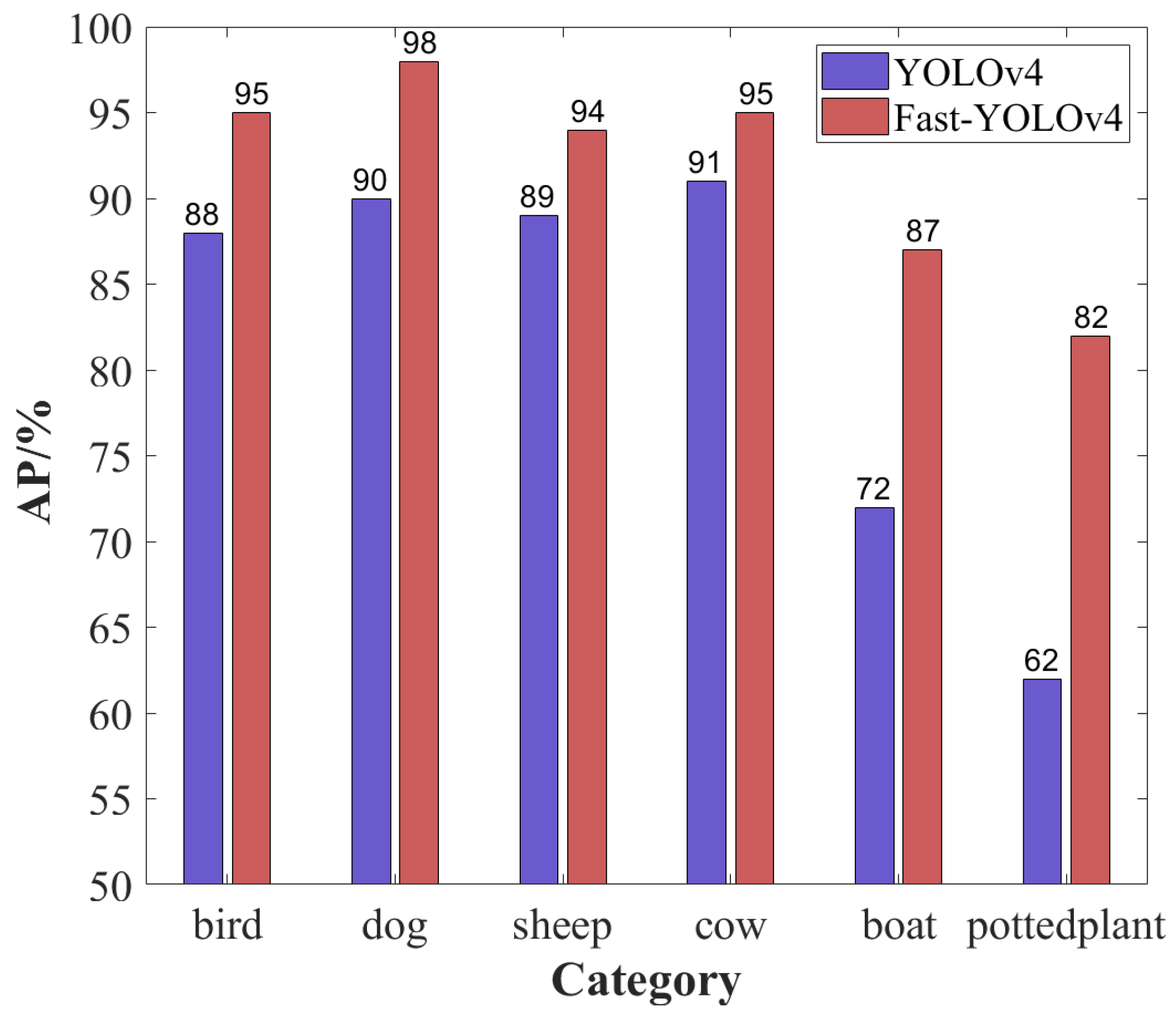

In target detection, accuracy and speed are the two most important metrics of a model’s performance, and many researchers have improved detection algorithms along these two dimensions. For accuracy, the feature pyramid network and various box-filtering algorithms have enhanced the ability of models to detect multiscale targets. For speed, various lightweight networks have been proposed that greatly reduce model parameters and accelerate detection, so that detection algorithms can be deployed on edge computing platforms. To address slow detection and poor multiscale detection, we propose Fast-YOLOv4, a UAV target detection algorithm based on edge computing that combines the lightweight network MobileNetV3, the improved Multiscale-PANet, and the soft-merge algorithm. To summarize, the innovations of the algorithm are as follows:

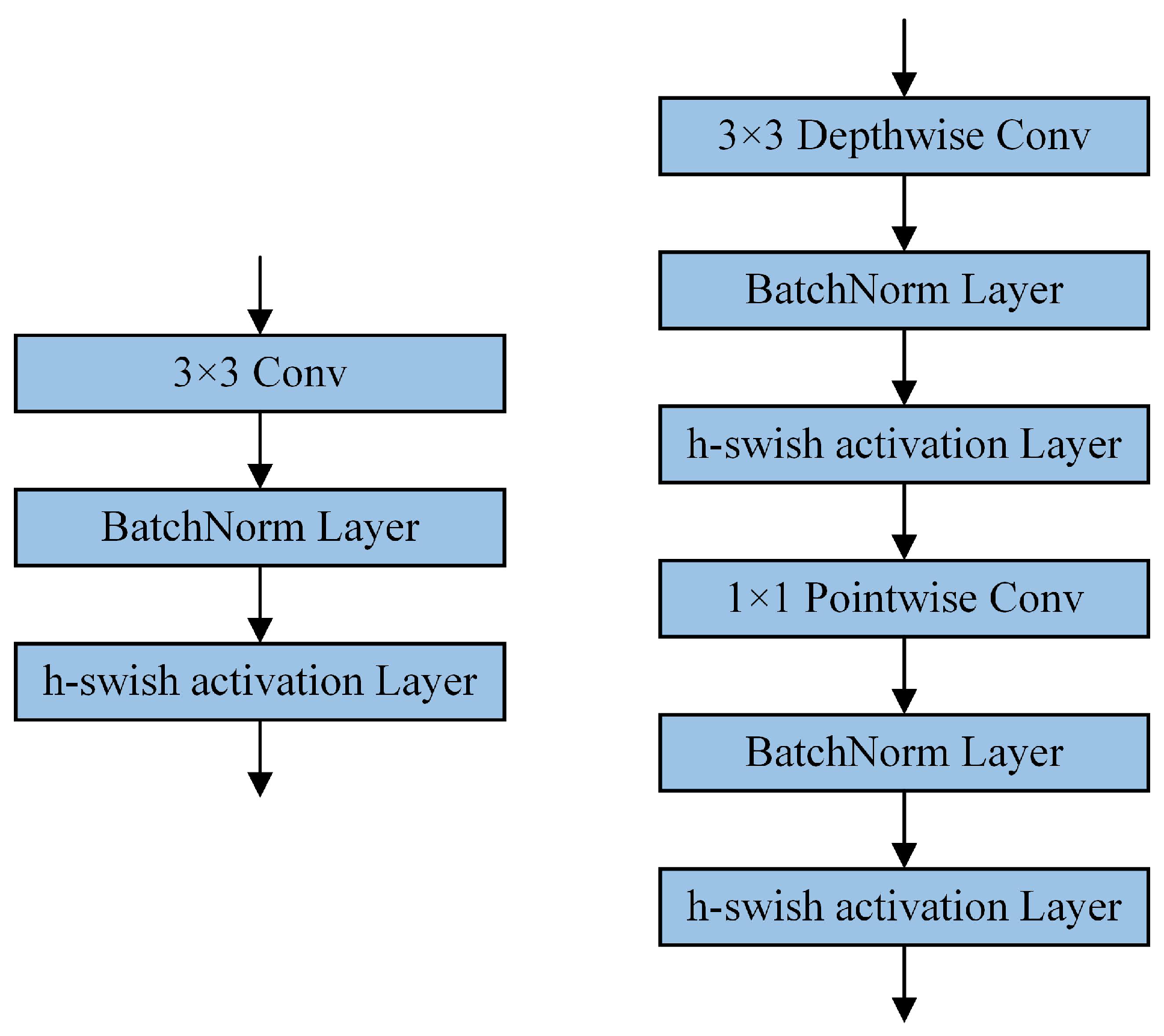

Using the lightweight network MobileNetV3 to improve the backbone of YOLOv4, introducing depth-wise separable convolutions and inverted residual structures. These improvements greatly reduce the number of model parameters and improve detection speed.

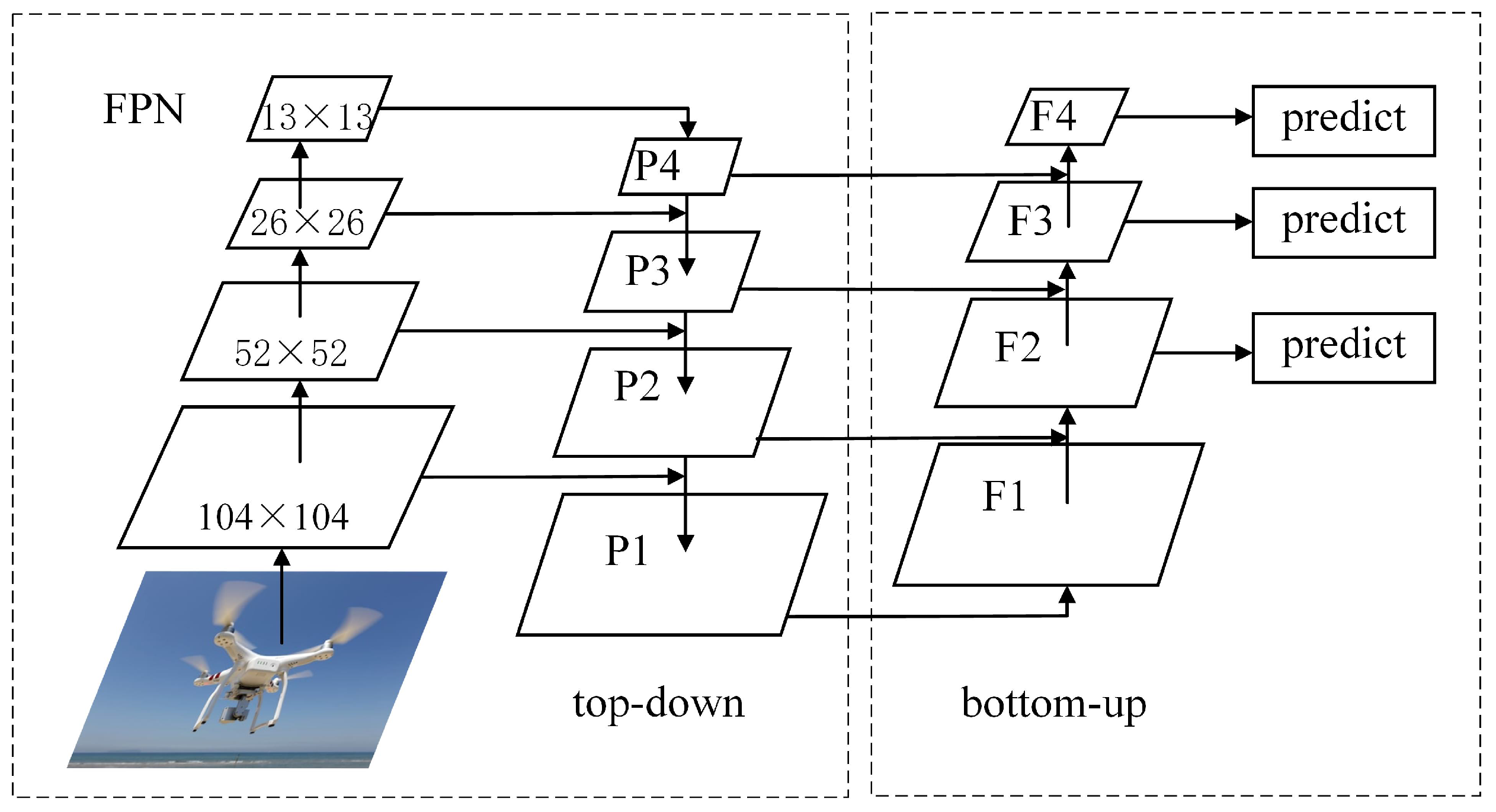

Adding a fourth feature fusion scale to PANet, the neck structure of YOLOv4, and strengthening the fusion of high-level image features with low-level location features, improving the classification and localization accuracy for multiscale targets.

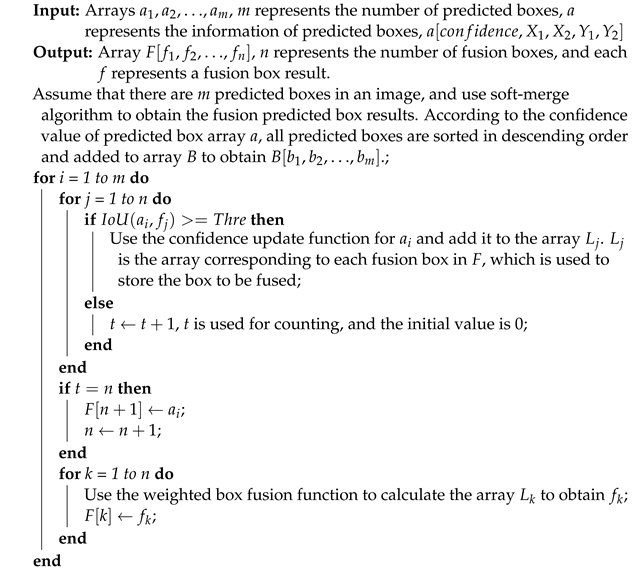

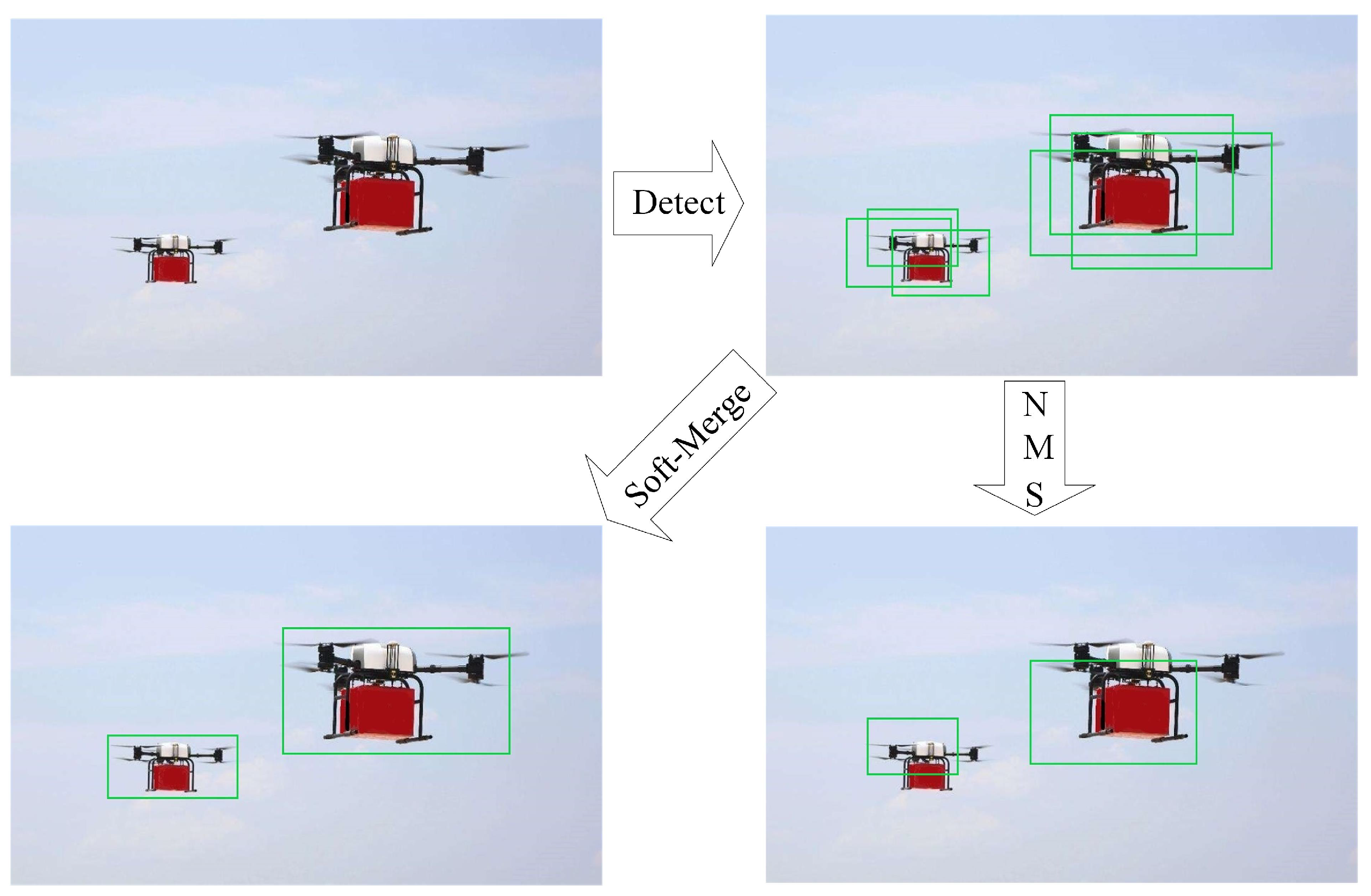

Replacing NMS with an improved soft-merge algorithm, a predicted-box filtering algorithm that fuses the information of overlapping predicted boxes to obtain better predicted boxes. This reduces missed and false detections and improves recognition.

Deploying the fast target detection algorithm on the embedded edge platform NVIDIA Jetson Nano to reduce response time and energy consumption and to achieve real-time, accurate detection of UAV targets in multiple scenes.

Through these improvements, Fast-YOLOv4 achieves high-precision, fast detection of UAV targets on an edge computing platform, providing a solution for research on UAV target detection.
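To see why depth-wise separable convolution reduces the parameter count, compare a standard k × k convolution, which needs k·k·C_in·C_out weights, with a depth-wise k × k convolution (one filter per input channel) followed by a 1 × 1 point-wise convolution, which needs k·k·C_in + C_in·C_out weights. The sketch below does this arithmetic; the layer sizes in the example are illustrative and are not taken from the Fast-YOLOv4 architecture, and biases are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    # one k x k x c_in filter for each of the c_out output channels
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# illustrative layer: 3 x 3 kernel, 256 input channels, 512 output channels
std = standard_conv_params(3, 256, 512)
dws = depthwise_separable_params(3, 256, 512)
print(std, dws, round(dws / std, 3))  # 1179648 133376 0.113
```

The ratio equals 1/C_out + 1/k², so for 3 × 3 kernels the separable version needs roughly one-ninth of the weights of a standard convolution.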

2. Related Work

In this section, we review work related to four components of our model, namely multiscale detection, box-filtering algorithms, lightweight networks, and edge computing for UAVs, together with their related methods and applications. Multiscale detection has long been a major problem in target detection. From the early image pyramid and feature pyramid network methods to the now widely used Res2Net and PANet [12,14,15], multiscale detection methods have gradually matured. For example, to cope with the large differences in the scales of drone targets, Zeng et al. proposed a UAV detection network combining Res2Net and a mixed feature pyramid structure, which achieved an mAP of more than 93% on a self-built dataset [16]. However, the model is somewhat bloated and difficult to deploy on edge platforms. For mask detection, Zhu et al. introduced a path aggregation network into the input feature layers of YOLOv4-Tiny, which improved the mAP of mask detection by 4.3% [17]. However, that paper does not propose innovative improvements to the multiscale structure itself, which is a limitation.
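The idea behind path aggregation can be sketched in a few lines: a top-down pass (as in a feature pyramid network) carries deep semantic features to shallower levels, and a bottom-up pass carries shallow localization detail back to deeper levels. The toy version below uses four levels to mirror the four-scale idea discussed in this paper, but it is a simplified sketch under our own assumptions: all levels share one channel count, fusion is element-wise addition rather than the concatenation and convolution blocks used in YOLOv4, and down-sampling is 2 × 2 max pooling instead of a stride-2 convolution. It is not the authors' Multiscale-PANet.

```python
import numpy as np


def upsample2x(x):
    # nearest-neighbour up-sampling of a (C, H, W) map by a factor of 2
    return x.repeat(2, axis=1).repeat(2, axis=2)


def downsample2x(x):
    # 2 x 2 max pooling with stride 2 (stand-in for a stride-2 convolution)
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def pan_fuse(features):
    """features: (C, H, W) maps ordered from shallowest (largest) to deepest (smallest)."""
    # top-down path: add up-sampled deeper features to each shallower level
    top_down = [features[-1]]
    for f in reversed(features[:-1]):
        top_down.insert(0, f + upsample2x(top_down[0]))
    # bottom-up path: add down-sampled shallower outputs to each deeper level
    outputs = [top_down[0]]
    for f in top_down[1:]:
        outputs.append(f + downsample2x(outputs[-1]))
    return outputs


# four levels, e.g. strides 4, 8, 16 and 32 of a 416 x 416 input (an assumed size)
feats = [np.ones((4, s, s)) for s in (104, 52, 26, 13)]
outs = pan_fuse(feats)
print([o.shape for o in outs])
```

Because every output level receives contributions from every input level, even the deepest output carries information from the shallowest map, which is the property the extra fusion scale is meant to strengthen for small targets.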

Another way to improve model accuracy is to improve the box-filtering algorithm. Because traditional NMS performs poorly on occluded targets, many researchers have tried to improve it. To address poor detection of occluded targets, Zhang et al. introduced position information into NMS to adjust the final score of each box and proposed a distance-based intersection-over-union (IoU) loss function. The method was tested on classical datasets and improved accuracy by 2% [18]. Ning et al. proposed I-SSD (Inception-SSD) with an improved non-maximum suppression method that takes weighted averages of the coordinates of the predicted boxes, improving the model’s filtering results [19]. The proposed I-SSD algorithm achieves 78.6% mAP on the Pascal VOC 2007 test set. Roman et al. also proposed a weighted box fusion method that fuses the predicted boxes of different detection models into an ensemble result. The method significantly improves the quality of the fused predicted rectangles for an ensemble and achieved the best results in dataset challenge competitions [20].
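To make the contrast between suppression and fusion concrete, the sketch below implements greedy NMS, which keeps only the highest-scoring box in each overlapping group, next to a simplified score-weighted box fusion, which averages the coordinates of each group using the confidence scores as weights. The fusion step is a simplified, single-model illustration of the weighted-averaging idea (each box is matched against the top box of a cluster, and the fused score is the mean score); it is neither the exact weighted box fusion algorithm of [20] nor the soft-merge algorithm proposed in this paper. Boxes are assumed to be (x1, y1, x2, y2) tuples, and the IoU threshold of 0.5 is an arbitrary choice.

```python
def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, thr=0.5):
    """Greedy NMS: keep a box only if its IoU with every higher-scoring kept box is below thr."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) < thr for j in keep):
            keep.append(i)
    return [(boxes[i], scores[i]) for i in keep]


def weighted_fusion(boxes, scores, thr=0.5):
    """Simplified fusion: cluster boxes around high-scoring seeds, then score-weight their coordinates."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    clusters = []  # each cluster is a list of box indices; the first index is its seed
    for i in order:
        for cluster in clusters:
            if iou(boxes[i], boxes[cluster[0]]) >= thr:
                cluster.append(i)
                break
        else:  # no existing cluster matched: start a new one
            clusters.append([i])
    fused = []
    for cluster in clusters:
        total = sum(scores[i] for i in cluster)
        box = tuple(sum(scores[i] * boxes[i][k] for i in cluster) / total for k in range(4))
        fused.append((box, total / len(cluster)))  # fused score = mean confidence
    return fused


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.6, 0.8]
print(nms(boxes, scores))
print(weighted_fusion(boxes, scores))
```

For the two heavily overlapping boxes scored 0.9 and 0.6, NMS simply discards the second box, whereas fusion returns a box whose corners are moved 40% of the way toward it, so the lower-scoring prediction still contributes to the final location.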

Beyond the detection accuracy of multiscale targets, improving the speed of detection models and deploying them on edge platforms are also active research topics. To address these problems, most researchers use lightweight networks to improve target detection models. Zhou et al. proposed a lightweight YOLOv4 ship detection algorithm combined with MobileNetV2 to solve the problem that the large model could not run on a micro-platform, and achieved high-precision, rapid detection of ship targets [21]. Liu et al. proposed a pruned-YOLOv4 model to overcome low detection speed and poor detection of small targets. The model achieves 90.5% mAP and increases processing speed by 60.4%, enabling real-time detection of drones [22]. However, the accuracy of the model is somewhat inadequate. These papers show that lightweight networks are very effective at improving target detection, suggesting further ways to apply them in target detection.

Because object detection combined with edge computing has broad application prospects, many researchers have tried to deploy object detection algorithms on embedded platforms. Daniel et al. achieved accurate detection of UAV targets by deploying YOLOv3 on the NVIDIA Jetson TX2 edge platform, with an average accuracy of 88.9% on a self-built dataset [23]. To solve the problem of dynamic obstacle avoidance in safe drone navigation, Adrian et al. proposed a novel method for onboard drone detection and localization using depth maps. This method is integrated into a small quadrotor and reduces the maximum obstacle avoidance error distance to 10% [24]. To expand the range of mission scenarios in which UAVs can be used and to coordinate the flight formations of UAV fleets, Roberto et al. proposed a YOLO object detection system integrated into an original processing architecture. This approach achieves a high level of accuracy and is robust against challenging conditions in terms of illumination, background, and target-range variability [25].

Building on the ideas presented in these papers, the proposed Fast-YOLOv4 algorithm improves detection accuracy through a multiscale structure and a predicted-box filtering algorithm, and improves detection speed through a lightweight network. In the following experiments, the proposed algorithm shows higher accuracy and faster speed than other algorithms on the edge computing platform and achieves highly accurate and fast detection of multiscale UAV targets.

5. Dataset and Experimental Preparation

5.1. Dataset Information

The datasets used in the experiment are the PASCAL VOC 07+12 dataset and a UAV dataset. The algorithm is first trained on the VOC 07+12 dataset and then trained and tested on the UAV dataset via transfer learning. The PASCAL VOC 07+12 dataset is a public dataset from the 2007 and 2012 PASCAL VOC Challenges. It contains 20 classes of targets with a total of 21,504 images, of which 16,551 are training set images and 4952 are test set images for target detection. The UAV dataset is constructed by combining a public dataset [30] with images of UAV targets that we captured ourselves. It includes large, medium, and small UAV targets, with 3698 images and 3719 targets. To split the dataset, 20% of the images are first randomly selected as the test set; of the remaining 80%, 90% are used as the training set and the rest as the validation set.
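The split described above can be sketched as follows. The shuffling seed and the use of rounding to turn fractions into image counts are assumptions, since the exact procedure is not specified; with 3698 images this rounding gives 740 test, 2662 training, and 296 validation images, that is, roughly 72%/8%/20% of the whole dataset.

```python
import random


def split_dataset(items, test_frac=0.2, train_frac_of_rest=0.9, seed=0):
    """Randomly split items into (train, val, test) lists."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    items = list(items)
    rng.shuffle(items)
    n_test = round(len(items) * test_frac)  # 20% of all images -> test set
    test, rest = items[:n_test], items[n_test:]
    n_train = round(len(rest) * train_frac_of_rest)  # 90% of the remaining 80% -> training set
    train, val = rest[:n_train], rest[n_train:]  # the rest -> validation set
    return train, val, test


train, val, test = split_dataset(range(3698))
print(len(train), len(val), len(test))
```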

Table 2 shows detailed information on the UAV dataset.

5.2. Experimental Environment and Hyperparameters

The experimental environment consists of a workstation and the edge computing platform NVIDIA Jetson Nano. The workstation is used to train and validate the algorithm, and the Jetson Nano is used to test its final performance. The workstation has an Intel(R) Core(TM) i9-10900F CPU @ 2.80 GHz and 64 GB of memory and runs the Windows 10 operating system. Its GPU is an NVIDIA Quadro P4000 with 8 GB of video memory. The NVIDIA Jetson Nano has a 128-core Maxwell GPU, a quad-core ARM Cortex-A57 CPU at 1.43 GHz, 4 GB of LPDDR4 memory, and 472 GFLOPS of processing power. The deep learning framework used in the experiment is PyTorch 1.8.1, and the GPU acceleration library is CUDA 10.2.

Hyperparameters are parameters that are set before model training; they affect both the training time and the training results.

Table 3 shows the hyperparameter information of model training.

5.3. Evaluation Metrics

The evaluation metrics of the experiment include the detection accuracy metrics AP (average precision) and mAP (mean average precision), IoU (intersection over union), detection speed in FPS (frames per second), the number of model parameters, and the model volume size.

The mAP comprehensively evaluates the localization and classification performance of the model on multi-class, multi-target tasks. Calculating the mAP requires calculating the AP for each class in the recognition task and then taking their average. The formula is as follows:

$$\mathrm{mAP} = \frac{1}{C}\sum_{i=1}^{C} AP_i \qquad (5)$$

In Formula (5), $C$ represents the total number of classes, and $AP_i$ represents the AP value of class $i$.

Calculating AP requires knowing the values of P (precision) and R (recall). The formulas for these three metrics are as follows:

$$P = \frac{TP}{TP + FP} \qquad (6)$$

$$R = \frac{TP}{TP + FN} \qquad (7)$$

$$AP = \int_{0}^{1} P(R)\,\mathrm{d}R \qquad (8)$$

In Formulas (6)–(8), TP (true positive) means that the input is a positive sample and the predicted result is also a positive sample; FP (false positive) means that the input is a negative sample and the predicted result is a positive sample; FN (false negative) means that the input is a positive sample and the predicted result is a negative sample; and TN (true negative) means that the input is a negative sample and the predicted result is a negative sample.
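The AP and mAP calculations described above can be sketched as follows. The sketch takes the detections of one class sorted by descending confidence, each already marked as a TP or FP (matching detections to ground truth, usually with an IoU threshold, is assumed to have happened beforehand), accumulates P and R at every rank, and approximates the area under the P(R) curve with the all-point interpolation used in the PASCAL VOC evaluation since 2010. Which interpolation this paper uses is not stated, so this choice is an assumption, as are the function names.

```python
def average_precision(is_tp, num_gt):
    """AP for one class from detections sorted by descending confidence.

    is_tp[k] is True if the k-th detection matched a ground-truth box (TP) and False
    otherwise (FP); num_gt is the number of ground-truth objects of the class (TP + FN).
    """
    tp = fp = 0
    precision, recall = [], []
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        precision.append(tp / (tp + fp))  # P = TP / (TP + FP)
        recall.append(tp / num_gt)  # R = TP / (TP + FN)
    # add sentinels, then make precision non-increasing from right to left
    p = [0.0] + precision + [0.0]
    r = [0.0] + recall + [1.0]
    for k in range(len(p) - 2, -1, -1):
        p[k] = max(p[k], p[k + 1])
    # area under the interpolated P(R) curve, summed over the steps where recall changes
    return sum((r[k + 1] - r[k]) * p[k + 1] for k in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    # Formula (5): mAP is the mean of the per-class AP values
    return sum(ap_per_class) / len(ap_per_class)


ap = average_precision([True, False, True], num_gt=2)
print(round(ap, 4))  # 0.8333
```

In this example, recall reaches 0.5 at precision 1.0 and then 1.0 at precision 2/3, so the interpolated area is 0.5 × 1 + 0.5 × 2/3 = 5/6.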

The IoU metric is the ratio of the intersection to the union of two bounding boxes. In essence, it converts the accuracy of object localization into a comparison of the overlapping area between the detection results and the ground truth. By calculating detection accuracy under different IoU thresholds, we can comprehensively measure the accuracy of the model. Assuming that the regions covered by the two bounding boxes are $A$ and $B$, the IoU metric can be written as:

$$IoU = \frac{|A \cap B|}{|A \cup B|}$$
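For axis-aligned boxes given by their corner coordinates, the IoU formula reduces to a few lines of arithmetic. The (x1, y1, x2, y2) box format and the function name below are illustrative assumptions.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2) with x1 < x2 and y1 < y2."""
    # intersection rectangle (zero width or height if the boxes do not overlap)
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    # union area = area(A) + area(B) - intersection area
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


print(box_iou((0, 0, 10, 10), (5, 5, 15, 15)))  # 25 / 175, about 0.143
```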

The FPS metric is the number of images a model detects per second, that is, the reciprocal of the average time the model takes to detect one image. The larger the FPS, the faster the model detects, so it measures the detection speed of the model. The number of model parameters and the model volume size are both metrics of model complexity; both directly reflect the size of the model.
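The sketch below shows one common way to measure FPS, by timing repeated calls of an inference function after a few warm-up calls, and how the parameter count relates to model volume. The `infer` callable stands in for a real detector, and the size estimate assumes weights stored as 32-bit floats with no file overhead; both are assumptions for illustration, not the measurement procedure used in this paper.

```python
import math
import time


def measure_fps(infer, frames, warmup=5):
    """Images processed per second; warm-up calls are excluded from timing."""
    for frame in frames[:warmup]:
        infer(frame)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed  # FPS = images / seconds = 1 / (mean seconds per image)


def count_parameters(weight_shapes):
    """Total number of learnable weights, given the shape of every weight tensor."""
    return sum(math.prod(shape) for shape in weight_shapes)


def model_size_mb(num_params, bytes_per_param=4):
    """Approximate model volume in MB (10**6 bytes), assuming float32 weights."""
    return num_params * bytes_per_param / 1e6


# hypothetical layer: a 3 x 3 convolution from 3 to 16 channels plus its 16 biases
n = count_parameters([(16, 3, 3, 3), (16,)])
print(n, model_size_mb(n))  # 448 0.001792
```

On a device such as the Jetson Nano, the warm-up calls matter because the first inferences typically include one-off initialization costs that would otherwise lower the measured FPS.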

7. Conclusions

To address the problems in detecting multiscale UAV targets, we present Fast-YOLOv4, a novel real-time target detection algorithm based on edge computing. The proposed algorithm is based on YOLOv4 and uses the lightweight network MobileNetV3 to improve the backbone, which reduces structural complexity and model parameters and speeds up detection. Our approach then incorporates Multiscale-PANet to enhance the extraction and use of shallow features, strengthen the detection of small-scale targets, and improve the model’s accuracy. To reduce missed and false detections of multiscale targets, the proposed approach adopts an improved weighted boxes fusion algorithm, called soft-merge, which performs a weighted fusion of the predicted boxes in place of the traditional NMS. Finally, Fast-YOLOv4 achieves the best results in fast UAV target detection on the edge computing platform Jetson Nano. To summarize, Fast-YOLOv4 provides a practical and feasible method for real-time UAV target detection based on edge computing, which is worthy of further research.