Abstract

The intensity and frequency of bushfires have increased significantly in recent years, destroying property and living species. Unmanned aerial vehicle (UAV) technology is becoming increasingly popular in bushfire management systems because of its fundamental characteristics, such as manoeuvrability, autonomy, ease of deployment, and low cost. UAVs with remote-sensing capabilities are used with artificial intelligence, machine learning, and deep-learning algorithms to detect fire regions, make predictions, make decisions, and optimize fire-monitoring tasks. Moreover, UAVs equipped with various advanced sensors, including LiDAR, visual, infrared (IR), and monocular cameras, have been used to monitor bushfires due to their potential to provide new approaches and research opportunities. This review focuses on the use of UAVs in bushfire management for fire detection, fire prediction, autonomous navigation, obstacle avoidance, and search and rescue to improve the accuracy of fire prediction and minimize the impacts of fires on people and nature. The objective of this paper is to provide valuable information on various UAV-based bushfire management systems and machine-learning approaches to predict and effectively respond to bushfires in inaccessible areas using intelligent autonomous UAVs. This paper aims to assemble information about the use of UAVs in bushfire management and to examine the benefits and limitations of existing UAV techniques for bushfire handling. We conclude that, despite the potential benefits of UAVs for bushfire management, there are shortcomings in accuracy, and solutions need to be optimized for effective bushfire management.

1. Introduction

Forests are essential global resources that provide a wide range of social, economic, and environmental benefits. They offer significant ecological support to all species, provide vital ecosystem services, and protect cultural and social aspects. However, the intensity and frequency of bushfires have been increasing significantly in recent years, and vast forest areas are destroyed every year because of human activities [1] as well as natural causes. Bushfires affect almost three hundred thousand people worldwide, burning millions of hectares of land and costing billions of dollars [2] every year, and controlling widespread bushfires remains a challenge for humanity.

Research in 2012 estimated that 15 civilians died due to a bushfire, close to the average of 13 people per year [3] thus far. Australian Parliament media reported that 75 people died, including 13 Victorian firefighters, 1 casual firefighter, and 3 South Australian firefighters [4]. Forest Fire Management Victoria recorded that 47 people in Victoria and 28 people in South Australia died. Experts estimate that, in the coming years, the spread of forest fires will dramatically increase due to climate change [5]. Effective control of bushfires is considered one of the essential roles in protecting and preserving natural resources [6,7].

Several traditional methods and tools, such as satellite images, wireless sensor networks (WSNs), smoke detectors, watchtowers, human monitors, and remotely piloted vehicles, have been used to monitor and detect bushfires [8]. For instance, a long-running project at the Australian CSIRO [9] has produced a computational system named Spark for bushfire spread prediction. Users can design their own fire propagation models by building on Spark’s computational fire propagation solver, manually entering information into a tool that uses an algorithm to predict the fire behaviour. However, traditional methods and other models are deemed inefficient and have various practical problems, such as low reliability, high cost, and inadequate capability; moreover, they rely on manual human decision-making and input.

Recent technological advancements can overcome the limitations of traditional fire detection methods and contribute significantly to the early detection and suppression of bushfires, to the inspection of affected areas, and to reducing impacts such as human death, economic losses, and environmental damage. As a result, UAVs have been proposed as an appropriate technology to handle bushfires due to their vast capabilities [10]. In addition, UAVs can access high-risk zones without human interaction, confirm fire, and provide accurate real-time updates for strategic and tactical planning, with no risk to human lives [11]. UAVs with vision-based remote-sensing techniques have become one of the most popular tools for effective bushfire monitoring and detection [12], and even for fighting fires, at low cost and with higher accuracy.

In recent years, worldwide attention and research related to UAV forest fire applications have increased considerably because of their ability to capture clear images from low altitudes and challenging locations, such as areas covered by smoke, impaired vision, polluted air, and hazardous conditions. UAV-based forest fire management systems can cover functions, such as detecting fires in progress and predicting the future expansion and direction of a fire based on real-time data [13].

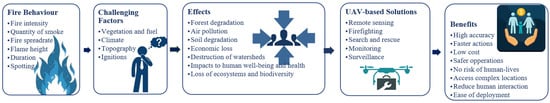

Figure 1 illustrates the challenges and effects of bushfires and suggestions for UAV-based solutions for overcoming bushfire-related impacts. This paper reviews existing literature on bushfire management and examines future uses for prediction in fire monitoring. We primarily aim to collect information about UAVs used in bushfire management systems and to evaluate their benefits and limitations for various applications in UAV-based bushfire management, such as bushfire detection and prediction. A further objective of this paper is to highlight the potential limitations of Intelligent Autonomous UAVs as fire detection technology and to highlight the need for them to navigate and predict fire behaviour in uncertain conditions.

Figure 1.

Solutions to reduce impacts of bushfires and the challenges they pose.

Furthermore, we note that UAV-based bushfire management can contribute to increased accuracy and positively impact social, economic, and environmental factors. Recognition techniques are used in fire detection and monitoring, a field where significant advances have been made over the past few decades. Prediction methods, in contrast, require data to be fed into complex mathematical models, and such data are difficult to gather in real time and in unfamiliar conditions. There are other obstacles as well, such as hardware failures, adverse weather, communication breakdowns, and regulation issues.

In recent years, machine-learning and remote-sensing technologies have enabled forest fires to be monitored and detected more efficiently. Research has shown the possibility of using UAVs to identify and even extinguish forest fires; however, this technology still needs further development. Further, using UAVs in combination with other remote-sensing techniques will require additional investigation.

Our main objective was to conduct a thorough bibliographic review of the use of UAVs in bushfire management and describe the advantages and limitations of using UAVs to manage fire in a variety of applications, including fire detection, fire prediction, autonomous navigation, obstacle avoidance, and search and rescue. The paper reviews the existing literature on bushfire management approaches and the potential uses of UAVs. This paper also explores how UAV-based bushfire management can improve prediction accuracy and boost the social, economic, and environmental benefits of better management.

By identifying limitations of using UAVs in bushfire management, this paper seeks to motivate and accelerate further research and development in this crucial field. This paper is motivated by the lack of a survey focusing comprehensively on these issues. Table 1 lists most of the shortcomings of existing surveys on UAV applications in bushfire management that this paper will cover. In particular, this paper makes the following contributions:

Table 1.

Comparison of existing surveys on UAV-based tasks in bushfire management applications with the findings of this paper.

- Review of the existing literature on the understanding of bushfire management and investigating the role of UAVs in bushfire management applications and their benefits and limitations.

- Specifying and exploring different technologies adapted to UAVs to increase performance accuracy.

- Studying and analysing UAV-based applications on bushfire management, research trends, and the challenges facing UAVs in each application domain.

- Identifying and examining UAV technical and environmental challenges across different application domains.

The rest of this paper is organised as follows: Section 2 outlines the methodology for the paper. Section 3 reviews the existing surveys related to bushfire management systems. Section 4 discusses the literature related to bushfire applications and the key technologies used in such scenarios. The last section reviews the challenges and development issues related to this research area before concluding the paper.

2. Methodology

This paper summarizes the existing research and presents the UAV-based approaches, the sensors utilized by UAVs, and the machine-learning approaches used in bushfire management tasks, together with their associated advantages and downsides. The following questions are investigated through a comprehensive literature review:

- How are UAV technologies utilized in bushfire management?

- What benefits and limitations does the use of UAVs bring to bushfire management?

- How efficiently is UAV technology used to manage bushfires?

Accordingly, the literature review assessed many research studies from journals, conferences, and other electronic databases to integrate and synthesize the information. A variety of keywords were used to locate relevant research papers, including wildfire, forest fire, bushfire, sensors, swarms, autonomous UAVs, machine learning, prediction, detection, obstacle detection and avoidance, search and rescue, and navigation, in databases such as Elsevier, IEEE, Scopus, MDPI, Google Scholar, Springer, and Science Direct.

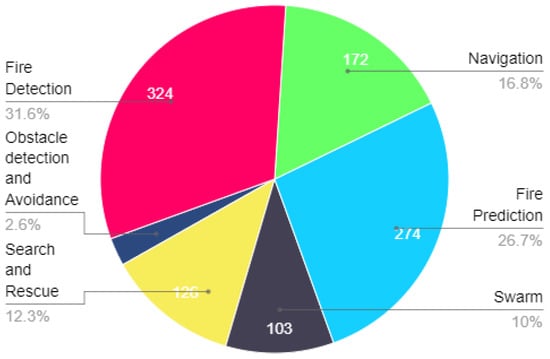

A total of 1026 papers were identified by our search (Figure 2), of which we selected the most relevant for further evaluation and excluded the others. More studies have addressed fire detection than any other UAV-based bushfire application. Comparatively few studies were found on obstacle detection and avoidance in UAV-based bushfire management. The structure of the reviewed works is illustrated in Figure 3.

Figure 2.

Statistical analysis of experiments on different types of UAV-based operations in bushfire management systems.

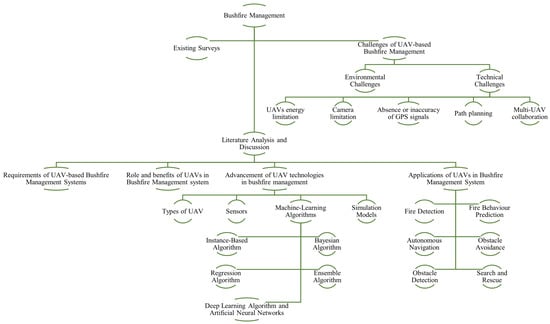

Figure 3.

Structure of the reviewed work.

3. Related Work

A preliminary literature review shows that UAVs are being widely researched for understanding and modelling prediction systems in disaster management. UAVs with various types of technology are being used or tested to monitor and detect bushfires. A few literature surveys have been conducted to summarize the research work performed around the world regarding UAV-based bushfire management systems.

Hossain et al. [1] discussed and summarized the research conducted worldwide until 2019 in the domain of UAV-based bushfire monitoring and detection. The authors initially covered some of the research work performed since 1961 at various universities and research centres, such as the University of South Florida, University of Alaska Fairbanks, Concordia University, United States Forest Service (USFS), and NASA Ames Research Center (NASA-ARC). The authors also described the operations and infrastructure, such as sensors, aircraft type, and communication, of UAVs used as forest fire monitoring systems.

They noted several positive points of using UAVs while highlighting the constraints of using such technology. Similarly, Yuan et al. [18] followed the same concept as Hossain et al. by conducting a literature survey of the applications of UAVs for bushfire management. The relevant research undertaken during this period is also systematically arranged following the approach employed by Hossain et al. The authors discussed UAVs with vision-based systems, focusing on the drawbacks of image capture failure and on improving the quality of the acquired data.

Some researchers proposed an outline for robotic technology-based UAVs for forest firefighting. Roldán-Gómez et al. [14] listed and discussed the different types of robots in the context of firefighting missions. Their main focus is the comprehensive application of drone swarms in firefighting. In addition, they address some of the problems of current operations, the challenges that have to be overcome, and the current limitations in the autonomy and communications of UAVs.

On the other hand, Akhloufi et al. [10] reviewed the previous works related to UAVs, specifically for wildland fires. The authors considered onboard sensor instruments, fire perception algorithms, and coordination strategies based on an application-specific outline. In addition, they presented recent frameworks that combine aerial vehicles and unmanned ground vehicles (UGVs) for a more efficient wildland firefighting strategy at a larger scale. However, the authors noted limitations in autonomy, reliability, and fault tolerance, and discussed the future scope of deep learning in developing autonomous operational systems with or without human intervention.

4. Literature Analysis and Discussion

4.1. Requirements of UAV-Based Bushfire Management Systems

Fire incidents are becoming more dangerous, more complicated, and more extensive in scale [8]. These incidents create an ever-growing workload for first responders. A possible technical solution is using unmanned firefighting equipment, which would prevent further damage and protect the firefighters. The use of UAVs in this context is increasingly considered promising [19]. UAVs can be applied for several purposes within firefighting practices, such as risk assessment, detection, and extinguishment.

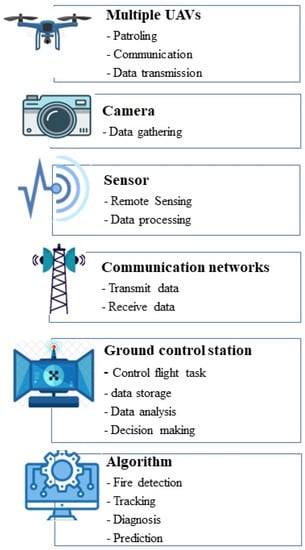

Each UAV observes the terrain with onboard sensors during the fire detection process to identify fire automatically. Generally, the flight plan is strictly bounded due to the limitations of UAV capabilities, such as duration, range, altitude, and sensor resolution [20]. Multiple UAVs can work simultaneously along their predetermined paths, depending on the size and characteristics of the surveillance region, to detect a target. Once the fire has been confirmed, the ground station and decision support system should deliver estimations of the fire features so that firefighters can effectively guide their efforts. For these types of operations to be effective, different types of UAVs equipped with sensors must work together with a single ground control centre. Figure 4 shows the components of UAVs involved in detection and monitoring tasks.

Figure 4.

Components of a UAV-based bushfire management system.

In addition, all UAVs engaged in bushfire missions should function both at night and during the day, even in the most challenging weather conditions, to ensure a successful detection process. These aircraft should be equipped with a GPS receiver and an inertial measurement unit (IMU) for automatic flying along paths and automatic geolocalization of their positions. Furthermore, they must be able to communicate effectively with each other so they can solve their tasks as efficiently as possible. Consequently, every UAV should be equipped with all sensors necessary to detect fires and with onboard communication devices that enable the UAV to receive commands from a ground control centre, relay information back to it, and exchange information with other UAVs.

4.2. Role and Benefits of UAVs in Bushfire Management Systems

Effective time management is crucial in bushfire situations. UAVs are gaining popularity due to their flexibility and low cost in comparison with traditional methods of disaster management. UAVs are primarily used to gather situational awareness in fire incidents, which can be used to direct the efforts of firefighters in locating and controlling hot spots. For that purpose, UAVs have been used for initial detection and rescue operations.

These missions may be performed using UAVs capable of flying rapidly to a location, mapping the area affected by the fire, and sharing the information with all relevant authorities within a few minutes [21]. During search and rescue operations, UAVs provide rescuers with critical information about the route that must be followed, which reduces the time required to locate victims and intervene [22].

Additionally, a UAV can carry a variety of sensors, including a thermal imaging camera with multiple colour options. Together, all these sensors give a better picture of the fire’s spread and speed, which allows authorities to develop a better plan for fire relief. Further, because operators can control UAVs remotely, the risk for humans is reduced and life-threatening situations are alleviated [10]. Hence, UAVs have had a significant impact on providing information for decision making.

Drones are generally less expensive than manned aircraft and satellite imagery, and they make it easier to collect high-quality geospatial data after a disaster. Many countries and regions, such as Australia, the United States, Canada, and Europe, have been using UAVs as an integral part of firefighting in the modern era. In the USA, a changing climate has led to longer bushfire seasons, which has increased the cost of firefighting [23]. Thus, the use of UAVs in firefighting has increased in the USA [24].

For instance, the Los Angeles Fire Department used UAVs in 2017 to tackle fires [25]. According to the Department of Interior statistics, federal firefighters used UAVs for 340 bushfires in Oregon and used them in 12 states total in the same period [26]. Similarly, in Canada, the Alberta government hired Elevated Robotic Services, which deploys UAVs to mines to help firefighters locate the fire [27]. Further, researchers at the University of British Columbia used UAVs in December 2017 to inspect the wreckage caused by the bushfires that ravaged the province [28].

Songsheng Li, a computer engineering researcher at Guangdong College of Business and Technology in Zhaoqing, China, is developing an early warning system for bushfires that uses UAVs to assess forest fire risks, gather environmental data, and patrol forests [29]. Furthermore, China’s Aviation Industry Corporation (AVIC) developed an amphibious aircraft, the AG600, designed to be used in forest firefighting and maritime rescue operations [30]. Bushfire activity in the past few years has been significantly higher in Australia than in other countries in the region. More than 85% of Australia’s firefighting air services are owned or leased by the Australian government, with the remainder sourced from other countries. Therefore, the country has now set its sights on expanding its aerial firefighting.

4.3. Advancement of UAV Technologies in Bushfire Management

This section addresses the most relevant technologies adopted in UAVs in bushfire management. UAV structure, working methodology, navigation, and flying features have seen tremendous progress in recent decades. Several factors have contributed to the performance of UAVs, including their geometric structure; the mechanisms used for flying, sensing, path planning, and intelligent behaviour; and the adoption of UAVs [31].

4.3.1. Types of UAV

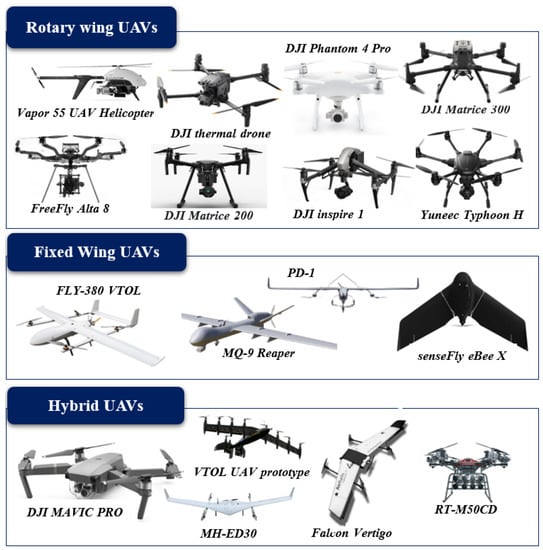

UAVs can be categorized into fixed-wing, rotary-wing, and hybrid fixed/rotary-wing types (Figure 5) based on their designs, autonomy, size, weight, flying mechanisms, and power source [32,33]. A fixed-wing UAV can be flown at high altitudes and can rapidly survey a wide area, while a rotary-wing UAV can hover at low altitudes and collect high-resolution data [11,34]. A fixed-wing UAV, also known as a horizontal takeoff/landing (HTOL) UAV, is used for initial recognition within a coalition of UAVs sharing an airspace because of its superior flight and computation capabilities. Rotary-wing UAVs, also known as vertical takeoff/landing (VTOL) UAVs, are also called observer UAVs because they contribute to the sensing and recording capabilities of the coalition.

Figure 5.

Different categories of frequently used UAV models in bushfire management.

The structures of fixed-wing UAVs are generally simpler than those of rotary-wing UAVs, so they require less complicated maintenance and repair processes and can operate for longer periods and at higher speeds [35]. Furthermore, they can carry larger payloads over longer distances while using less power, as they can glide naturally without consuming power. In contrast, rotary-wing UAVs are capable of vertical takeoffs and landings, while fixed-wing UAVs require a runway or launcher [35]. A hybrid drone is a UAV that combines the advantages of fixed-wing and rotary-wing UAVs [36].

All three types of UAVs, fixed-wing [37,38], rotary-wing [39,40], and hybrid [36], are effectively used in different bushfire management operations. The latest UAVs used in bushfire management and their specifications are given in Table 2.

Table 2.

Specifications of UAVs in bushfire management systems.

Both fixed-wing and rotary-wing drones, equipped with either optical or thermal cameras, have been used for the early detection of forest fires [36]. UAVs can be deployed in different numbers and scenarios based on the frequency and severity of bushfires in a region. For instance, the European Union funded a project called COMET, which explored unmanned systems for monitoring forest fires [41]. The researchers developed their system based on three UAVs, two fixed-wing and one rotary-wing, which worked together in a variety of prescribed fire experiments to test the system's detection and monitoring capabilities.

By comparison, many authors have suggested rotary-wing UAVs for the detection task. For instance, the authors of [42] used a six-rotor UAV (DJI S900) with a Sony A7 camera for their bushfire detection model. Another study [43] used the Crazyflie 2.0, an open-source nano aerial vehicle that weighs only 27 g and is equipped with a mini ArduCAM camera with a resolution of 320 × 240. The authors showed that the open-source quadrotor navigated autonomously toward the fire with the help of its IMU and imitated its behaviour in the real environment. To achieve accurate image matching, Bilgilioglu et al. [44] flew a DJI Phantom 4 UAV with 80% forward overlap and 60% side lap. These flights captured images using a 1/2.3″ CMOS sensor to ensure the coverage ratio.
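The overlap figures quoted above translate directly into the spacing between exposures and between flight lines. The following is a minimal sketch of that arithmetic, not the flight plan used in [44]: the function name `photo_spacing` and the ground footprint values are hypothetical and chosen only for illustration.

```python
def photo_spacing(footprint_along_m, footprint_across_m,
                  forward_overlap=0.80, side_overlap=0.60):
    """Return (distance between exposures, distance between flight lines) in metres.

    Each new image may advance only by the part of the footprint
    that is NOT shared with the previous image or neighbouring line.
    """
    if not (0.0 <= forward_overlap < 1.0 and 0.0 <= side_overlap < 1.0):
        raise ValueError("overlap fractions must be in [0, 1)")
    along = footprint_along_m * (1.0 - forward_overlap)
    across = footprint_across_m * (1.0 - side_overlap)
    return along, across


# Hypothetical ground footprint of one image: 60 m along track, 80 m across track.
along, across = photo_spacing(60.0, 80.0)
print(f"trigger every {along:.1f} m, flight lines {across:.1f} m apart")
```

Higher overlap improves image matching but shortens both spacings, so the same area needs more images and more flight lines.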

4.3.2. Sensors

Advances in airborne bushfire detection will depend on developing new or more powerful sensors and on making existing sensors smaller, more power-efficient, cheaper, and better performing, which will enable their use on a broader range of platforms. Various sensors, including LiDAR, visual, IR, and monocular, have proven helpful in detection operations [45,46]. However, how sensors are used is quite diverse, depending on their purpose and nature.

For instance, thermal cameras are useless in daylight during hot summer days, fixed cameras are ineffective in dark conditions (such as clouds, evening, and night), and infrared sensors cannot detect smoke [47]. A sensor can be classified as active or passive based on its principal functionality. A passive sensor only reads energy that is emitted by the object or scenery under observation or that originates from another source, such as sunlight reflected by the object. In contrast, active sensors radiate light or emit waves that are detected when they bounce back.

A camera must do a great deal of image processing to obtain useful information from the chunks of raw data it obtains from the sensor. Thermal or IR cameras, optical or visual cameras, and spectrometers are commonly used as passive sensors in sensing applications [45,48,49]. Apart from the additional algorithm required for calculating the range and other parameters of the bushfire and obstacles, extracting points of interest is a separate process that requires additional processing power [50].

Sensors that work in visible light are optical or visual cameras, such as monocular and stereo cameras [51]. They capture images of the surrounding environment and objects to provide valuable information. The advantages of cameras are their small size, weight, low power consumption, flexibility, and ease of mounting. Contrary to this, the disadvantages of using such sensors include their high sensitivity to weather conditions, lack of picture clarity, sensitivity to lighting levels, and the background’s colour contrast. When any of these factors are involved in the process, the quality of the captured image drops dramatically.

Thermal or infrared (IR) cameras, which sense radiation at longer wavelengths than visible light, are used in low-light conditions [51]. Thermal cameras output distorted, blurry, and low-resolution images compared to RGB cameras; nevertheless, their data can be analysed by generating artificial control points and using them to determine inclination and orientation [52]. Their performance can also be improved by combining them with a visual camera during the night or in poor lighting conditions.

There are various types of active sensors, such as LiDARs [53], sonars [54], and radars [55]. A sensor of this type has a fast response, is robust to weather and lighting conditions, can scan a more extensive area, requires little processing power, and can return accurate data about obstacles, such as their distance and angle. Light detection and ranging (LiDAR) sensors use two mechanisms: one emits laser pulses to illuminate surfaces, while the other measures the time it takes for the pulses to bounce back to determine the distance. Data collection using LiDAR is fast and extremely accurate. LiDAR can detect small objects because of the short wavelength it uses and can reconstruct images of the surrounding environment in monochrome and colour. However, transparent objects, such as clear glass, cannot be detected reliably by LiDAR.

Ultrasonic or sonar sensors emit sound waves and listen for their reflections to calculate the distance between an object and the sensor [45,56]. In contrast to LiDARs, sonar sensors are not affected by an object’s transparency. For example, LiDARs cannot discern clear glass, while sonar sensors are not impacted by transparency or colour. However, objects that reflect sound away from the receiver or whose materials absorb sound will degrade the performance of the sonar sensor. A radio detection and ranging (radar) sensor transmits a radio signal, which is reflected back to the radar when it encounters an object. The radar calculates the distance to the object based on the time it takes for the signal to bounce back. Unlike other sensors, radars can function in any weather condition, regardless of lighting conditions or cloud cover.

Radars also provide wide coverage, making them well suited to outdoor use. Despite this, radars cannot provide accurate dimensions of objects due to their low output resolution, which makes only detection possible [57]. Table 3 summarises the types of sensors and cameras most commonly used in UAV-based forest fire detection systems, together with their positive and negative features.
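The active sensors described above (LiDAR, sonar, and radar) all rely on the same time-of-flight principle: the one-way distance is half the round-trip time multiplied by the propagation speed of the wave. The sketch below illustrates this under stated assumptions; the function name `range_from_echo`, the sample echo times, and the speed of sound (dry air at roughly 20 °C) are illustrative choices, not values from the reviewed studies.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum value; a close approximation in air
SPEED_OF_SOUND_M_S = 343.0          # assumption: dry air at about 20 degrees C


def range_from_echo(round_trip_s, wave_speed_m_s):
    """One-way distance to a reflector from the round-trip time of an echo."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The wave travels to the object and back, hence the division by two.
    return wave_speed_m_s * round_trip_s / 2.0


# Hypothetical echoes: a 200 ns laser or radio return and a 20 ms ultrasonic return.
lidar_or_radar_range_m = range_from_echo(200e-9, SPEED_OF_LIGHT_M_S)
sonar_range_m = range_from_echo(0.02, SPEED_OF_SOUND_M_S)
print(f"LiDAR/radar: {lidar_or_radar_range_m:.2f} m, sonar: {sonar_range_m:.2f} m")
```

Because light and radio waves travel roughly a million times faster than sound, LiDAR and radar need far finer timing resolution than sonar to resolve the same distance.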

Table 3.

Different types of sensors/cameras used in firefighting UAVs and their positive/negative features.

4.3.3. Machine-Learning Algorithms

Machine learning (ML) uses data and algorithms to learn automatically without human assistance and to adjust actions to improve decision-making accuracy. ML is a vital component of artificial intelligence (AI) and can be used to make classifications and predictions related to a given problem. ML algorithms learn directly from data and develop their internal model without external influences.

Furthermore, ML approaches have been widely used in many disaster management systems, notably in bushfire management. In particular, ML approaches have been used in bushfire applications, such as fire detection [18,58,59,60,61,62,63,64], fire mapping [65,66,67], fire weather and climate change prediction [68], fire occurrence, fire behaviour prediction [69], fire effects [70,71], and fire management [62,72,73]. Many studies have been conducted in recent decades on UAVs and machine-learning algorithms in bushfire management.

Generally, ML algorithms fall into the categories of supervised, unsupervised, and semi-supervised learning. Supervised ML uses labelled data to learn the parameters of a function that maps inputs to outputs, so that it can classify data or predict outcomes accurately. Unsupervised learning uses algorithms to analyse and cluster unlabelled datasets to discover hidden patterns or data groupings without the need for human intervention. The third category, semi-supervised learning, falls between supervised and unsupervised learning. It uses a small quantity of labelled data and a large quantity of unlabelled data for prediction. The following items present the most commonly used ML methods from these learning paradigms, grouped by similarity.

Instance-Based Algorithm: Instance-based learning models address decision problems using examples of training data considered important or necessary to the model [74]. Such methods establish a database of sample data and make predictions by comparing new data to the database using a similarity measure and selecting the best match [74]. Several bushfire management operations employ instance-based algorithms (Table 4). An example is the k-nearest neighbour (KNN) algorithm, a simple but very effective instance-based algorithm that uses the Euclidean distance to calculate the similarity of data points to one another [17].
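As an illustration of the KNN idea described above, the following minimal sketch classifies a query point by majority vote among its k nearest training samples under the Euclidean distance. It is not taken from any of the reviewed studies; the function `knn_predict`, the three colour features, and the sample values are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training samples.

    train: list of (feature_vector, label) pairs, i.e. the stored "database".
    """
    if k < 1 or k > len(train):
        raise ValueError("k must be between 1 and the number of training samples")
    # Sort the stored samples by Euclidean distance to the query and keep k.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical image-patch features: mean (red, green, blue), scaled to 0..1.
samples = [
    ((0.90, 0.45, 0.10), "fire"),
    ((0.85, 0.30, 0.05), "fire"),
    ((0.95, 0.60, 0.20), "fire"),
    ((0.20, 0.55, 0.15), "vegetation"),
    ((0.25, 0.60, 0.20), "vegetation"),
    ((0.60, 0.60, 0.62), "smoke"),
    ((0.70, 0.70, 0.72), "smoke"),
]

print(knn_predict(samples, (0.88, 0.40, 0.12)))
```

No model is fitted in advance: all work happens at prediction time, which is why such methods are called instance-based.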

Table 4.

Instance-based algorithms used in different bushfire management studies.

Another popular instance-based algorithm type is the support vector machine (SVM). An SVM determines the boundary separating the classes in an n-dimensional space, where n is the number of dimensions of the data. The optimal hyperplane is the decision boundary that maximizes the margin, that is, the distance to the nearest points of each class. For classification and regression problems, recently developed DL algorithms have proved more efficient than SVMs [17]. However, for limited training samples, SVMs may offer better performance. A Self-Organizing Map (SOM) is an instance-based algorithm for detecting features or reducing dimensionality. Rather than error-correction learning, SOM uses competitive learning and preserves the input space’s topological properties using a neighbourhood function. It performs topologically ordered mappings to produce two-dimensional representations of training samples from an input space.
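For a linear SVM, the decision boundary is the hyperplane w·x + b = 0, and the margin between the two classes has width 2/‖w‖. The sketch below only evaluates a given hyperplane; it does not train an SVM. The weight vector, bias, and sample points are hypothetical and hand-picked so that the nearest points lie exactly on the margin.

```python
import math


def decision_value(w, b, x):
    """Signed score w.x + b; its sign gives the side of the hyperplane."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(w, b, x):
    """Return +1 or -1 depending on the side of the hyperplane."""
    return 1 if decision_value(w, b, x) >= 0 else -1


def margin_width(w):
    """Distance between the two margin hyperplanes w.x + b = +1 and w.x + b = -1."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))


# Hypothetical, hand-picked hyperplane (not the result of training).
w, b = (1.0, 1.0), -3.0

print(classify(w, b, (2.0, 2.0)), classify(w, b, (1.0, 1.0)))
print(round(margin_width(w), 4))
```

Training an SVM amounts to choosing w and b so that this margin is as wide as possible while the training points stay on the correct side.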

Bayesian Algorithm: Bayesian algorithms use Bayes’ Theorem explicitly to solve problems such as classification and regression. The Bayesian network (BN), also known as the Bayes Net/Belief Network, is a popular Bayesian algorithm in many applied domains (Table 5). It specifies probabilistic relationships between variables in an intuitive graphical language, along with tools for calculating the probability resulting from those relationships [17]. Another form of BN is Naive Bayes (NB), which assumes that every pair of features is conditionally independent given the class and scores each class by multiplying the class prior by the conditional probability of each input variable. The fast and straightforward implementation of NB makes it a good solution for problems in which conditional independence can be presumed. However, the prediction accuracy can be low when this assumption is violated [17].
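The Naive Bayes scoring rule can be sketched in a few lines for categorical features. This is a minimal illustration with add-one (Laplace) smoothing, which is an assumption added here to avoid zero probabilities; the function names, the weather features, and the tiny dataset are hypothetical and not drawn from the reviewed studies.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    """Count class frequencies and per-class feature-value frequencies."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (feature index, class) -> value counts
    vocab = defaultdict(set)             # feature index -> values seen in training
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(i, label)][value] += 1
            vocab[i].add(value)
    return class_counts, value_counts, vocab


def predict_naive_bayes(model, row):
    """Return the class with the highest log prior plus summed log likelihoods."""
    class_counts, value_counts, vocab = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        score = math.log(count / total)  # log prior
        for i, value in enumerate(row):
            # Add-one smoothing (assumption) keeps unseen values from zeroing the score.
            numerator = value_counts[(i, label)][value] + 1
            denominator = count + len(vocab[i])
            score += math.log(numerator / denominator)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical observations: (temperature, humidity, wind) -> fire risk.
rows = [
    ("hot", "dry", "windy"), ("hot", "dry", "calm"),
    ("hot", "humid", "windy"), ("mild", "dry", "windy"),
    ("mild", "humid", "calm"), ("cool", "humid", "calm"),
    ("cool", "dry", "calm"), ("mild", "humid", "windy"),
]
labels = ["high", "high", "high", "high", "low", "low", "low", "low"]

model = train_naive_bayes(rows, labels)
print(predict_naive_bayes(model, ("hot", "dry", "windy")))
```

Summing logarithms instead of multiplying probabilities is a common design choice that avoids numerical underflow when many features are involved.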

Table 5.

Bayesian algorithms used in different bushfire management studies.

Ensemble Algorithm: The ensemble method combines the predictions of several independently trained weaker models to make an overall prediction. The results of these methods are usually more accurate than those of a single model. The random forest (RF) is an ensemble model consisting of many decision trees that are individually trained. In an RF model, each component decision tree casts a vote for a class, and the class that receives the most votes is chosen as the final classification. An RF can also perform regression, in which case the final output is determined by averaging the individual tree outputs. By minimizing the correlation between trees and reducing the model variance, this algorithm achieves a high degree of performance but at the expense of increased bias and a loss of interpretability [17] (Table 6).
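The two aggregation rules of a random forest can be sketched as below. Only the final combination step is shown; the trees themselves are assumed to have been trained elsewhere, and the function names and sample values are hypothetical.

```python
from collections import Counter
from statistics import mean

def forest_classify(tree_votes):
    """Return the class that received the most votes across the trees."""
    return Counter(tree_votes).most_common(1)[0][0]

def forest_regress(tree_outputs):
    """Return the average of the individual tree outputs."""
    return mean(tree_outputs)

# Hypothetical outputs of five classification trees and three regression trees.
votes = ["fire", "no_fire", "fire", "fire", "no_fire"]
label = forest_classify(votes)
spread_rate = forest_regress([1.2, 0.8, 1.0])
```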

Table 6.

Ensemble algorithms used in different bushfire management studies.

Deep-Learning Algorithm and Artificial Neural Networks: Artificial neural networks have evolved into deep-learning algorithms in the modern era. These methods design more extensive neural networks and handle large datasets of labelled analogue data, such as images, text, audio, and video. Artificial neural networks (ANNs) are built from units that multiply each input by an associated weight and sum the results linearly. The final weighted sum is converted into the output signal using a nonlinear activation function. A deep neural network (DNN) uses many hidden layers. DNNs include convolutional neural networks (CNNs), widely used in image analysis, and recurrent neural networks (RNNs), which are useful for modelling dynamic temporal phenomena.
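A single artificial neuron and a fully connected layer can be sketched as follows. The sigmoid activation and the bias term are assumptions made for illustration; the text above does not prescribe a particular activation function.

```python
import math

def sigmoid(z):
    """Nonlinear activation that squashes any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias=0.0):
    """Weighted sum of the inputs followed by a nonlinear activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def dense_layer(inputs, weight_rows, biases):
    """One layer: every neuron sees all inputs and has its own weights."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Two inputs feeding a hidden layer of two neurons (hypothetical weights).
hidden = dense_layer([1.0, 2.0], [[0.5, -0.25], [0.1, 0.3]], [0.0, -0.2])
```

Stacking several such layers, each taking the previous layer's outputs as inputs, gives the many hidden layers that characterize a DNN.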

Many different types of hyperparameters can be used when designing DNNs, including the connectivity between nodes, the number of layers, and the types of activation functions [17]. Regardless of its architecture, an ANN is typically trained by feeding input data through the network layers and activation functions to create an output. In the supervised setting, an error measure that compares the result with labelled training data is used to gauge model performance. Table 7 summarizes bushfire management studies based on deep learning and artificial neural networks.

Table 7.

Artificial neural networks and deep-learning algorithms used in different bushfire management studies.

Regression Algorithm: Regression is a supervised learning approach in machine learning that identifies relationships between data points (predictors) and labels. A regression model uses a measure of error to iteratively refine its predictions according to the relationship between variables. In machine learning, regression algorithms are used to predict future values from input or historical data. A label is defined as the target variable to be predicted, and regression helps determine how the label is related to the data points. Table 8 summarizes bushfire management studies based on regression algorithms.
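As a minimal example of regression, the sketch below fits a straight line by ordinary least squares, i.e., it chooses the slope and intercept that minimize the squared error between predictions and labels. The function names and the data are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(x, slope, intercept):
    """Predict the label for a new data point."""
    return slope * x + intercept

# Hypothetical historical data following y = 2x + 1.
slope, intercept = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
forecast = predict(4.0, slope, intercept)
```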

Table 8.

Regression algorithms used in different bushfire management studies.

4.3.4. Simulation Models

A bushfire model predicts and helps explain bushfire behaviour through numerical simulation. Such a simulation system can improve bushfire suppression in terms of safety, risk reduction, and damage reduction. The objective of such a system is to recreate fire behaviour, such as the speed at which a fire spreads, the direction in which it spreads, and the amount of heat it generates [86]. In addition to estimating fire effects, fire simulation attempts to measure fuel consumption, tree mortality, smoke production, and the ecological impacts of fire [87]. Additionally, a simulation system can provide bushfire managers with predictions of fire propagation, thereby increasing the effectiveness of suppression efforts and potentially reducing costs.

Several fire-spreading simulators have been developed in the past (Table 9). Spark is one of the toolkits for processing, simulating, and analysing bushfires from start to finish. Spark’s GPU-based computational fire propagation solver enables users to design custom fire propagation models by including input, processing, and visualization components tailored to bushfire modelling [88]. Meteorological forecasts can be read directly into Spark models to provide weather information [89]. The Spark platform easily incorporates environmental data, such as land slope, vegetation, and un-burnable areas, into the fire spread rate calculation.

Table 9.

Simulation models and their specifications used in different studies.

However, over an extended simulation period, changes in the environmental components can introduce a large amount of uncertainty into the results. WFDS (Wildland-Urban Interface Fire Dynamics Simulator) is based on the FDS (Fire Dynamics Simulator) developed at the U.S. National Institute of Standards and Technology (NIST) [90]. This fully three-dimensional, physics-based, semi-coupled fire-atmosphere model uses an approximation to the fluid dynamics, combustion, and thermal degradation of solid fuels [91]. In WFDS, data assimilation is limited to the nudging of weather data [90].

Another popular simulator is the Fire Area Simulator (FARSITE) [98,99], which simulates fire spread and behaviour in diverse terrain, fuel, and weather conditions. This simulator uses the fire behaviour prediction system BEHAVE, which was developed by Rothermel [86]. The FARSITE model has been selected as the best fire growth prediction model by many federal land management agencies [87] due to its flexibility and free availability. However, FARSITE requires fuel layers that are expensive and difficult to construct.

Most existing vegetation layers and databases do not quantify fuel information at the level of detail and resolution needed for running the FARSITE model, so most fire and land managers need additional fuel maps to run it. Due to inexperience with modelling and mapping vegetation and fuels under fire conditions, some efforts to create FARSITE layers from existing maps have failed [87]. In addition, Prometheus is the Canadian wildland fire growth simulation model based on the Fire Weather Index and Fire Behaviour Prediction subsystems of the Fire Danger Rating System [100]. The model computes fire behaviour and spread depending on conditions, such as fuel, topography, and weather. It is user-friendly software; users can modify fuel and weather data as ASCII files [87].

The Phoenix [101] fire characterization model was developed in Australia to help manage bushfire risk. This simulation tool directly relates the impacts of various management strategies to changes in fire characteristics across the landscape and the nature of the impact on various values and assets in the landscape [87]. In contrast to many standard fire behaviour models, Phoenix can adapt to changes in fire conditions, fuel, weather, and topographic conditions as the fire moves and grows.

Apart from these, many other fire simulation tools are available for bushfire prediction, such as FIRE! [102], EXTENDED SWARM [103], FireMaster [104], FireStation [105], EMBYR [105], WildFire Analyst [106], FSim [107], NEXUS [108], FlamMap [109], BehavePlus [110], FOFEM [111], and FIRETEC [94]. Simulators mainly concentrate on mathematical principles, inputs and outputs, and programming languages for defining the parameters. Modelling bushfires primarily intends to develop procedures that can be incorporated into calculation tools for forest fire management and research.

It is important to identify, before an event occurs, where a bushfire is likely to start, how it may progress, and the damage it could cause, particularly when multiple ignitions occur simultaneously. In addition to the development of different bushfire management systems, computer science and numerical weather prediction have played a vital role in the evolution of bushfire spread models. Advancements in modelling, numerical methods, and remote sensing have led to more powerful, versatile, and sophisticated coupled systems that facilitate the management of bushfires.

4.4. Applications of UAVs in Bushfire Management Systems

This section reviews different strategies for using UAVs in bushfire management systems. As with any bushfire management system, UAVs should perceive their surroundings through sensors while flying over the affected area, since the accuracy of detection operations depends on the quality and intensity of the data provided by a camera. Most researchers have used infrared and visual cameras for monitoring operations; however, the intelligent combined use of multiple environmental sensors is yet to be seen.

Moreover, the system should utilise appropriate decision-making technology to increase prediction accuracy. Based on the literature studied, fire behaviour prediction, pre-mission path planning, and adaptation to environments are highly important aspects. Appropriate algorithms and, in some cases, combinations of algorithms could be utilised to achieve more efficient responses to uncertain conditions.

4.4.1. Fire Detection

One of the primary objectives of a bushfire monitoring system is fire detection. The presence of fire can be assessed onboard or at the ground station using information gathered by UAVs while patrolling over high-risk zones. In such a scenario, the sensor data are processed to detect fires and to extract fire-related measures, which are then passed on to subsystems for action.

Early fire detection enables the authorities to respond quickly and with as much force as possible when fighting forest fires. Traditional methods, such as smoke detectors and thermal sensors, do not provide exact information about where the fire is, how large it is, and how fast it is spreading. Onboard UAV sensors can overcome these limitations: they are flexible, have a wide field of view, detect fires early, locate them precisely, and measure their size, propagation direction, and rate of spread.

Bushfire detection may be difficult if the flames are hidden under a heavy forest canopy or smoke, as the flames may only become visible once they reach the forest crown. To overcome this limitation, many fire factors, such as heat, light, smoke, motion, and chemical by-products, have been used in detection algorithms and computer vision techniques [8]. Furthermore, high-sensitivity infrared cameras or sensors are required for effective flame detection during nighttime operations and when dealing with natural phenomena, such as fog, steam, and clouds [1]. Early detection systems should consider multiple fire factors in both day and night operations to detect bushfires reliably.

UAVs equipped with sensors have been widely used for bushfire detection over the last few decades using computer vision techniques.

Researchers have applied image processing techniques with features, such as motion, colour, and coordinates, to detect smoke produced by the fire [40,112,113,114] and to onboard visual and infrared sensor data for fire detection [18]. For instance, the BOSQUE system relies on infrared cameras and automatic image processing techniques for fire detection, including the rejection of false alarms [115]. Table 10 summarizes the applications of UAVs in bushfire detection operations.

Table 10.

Applications of UAVs in bushfire detection.

Ref. [114] used conventional cameras on towers to provide a continuous sequence of video frames of an alerting area. The author suggested an image processing technique with a thresholding process for fire detection that distinguished fire from non-fire in selected regions of interest (ROIs) to save computational costs. Pastor et al. [118] and Dios et al. [119] used statistical data fusion techniques to merge individually processed data from each visual and infrared camera of a rotary-wing UAV.

In another study, Israeli Aircraft Industries developed a UAV equipped with both forward-looking infrared (FLIR) and visible spectrum cameras. Data from both sensors were processed to produce information, such as fire characteristics (GLOBAL FIRE MONITORING CENTER, 2001). Furthermore, a fire segmentation algorithm for forest fire detection and monitoring using a small UAV was developed based on Vipin et al. [12], consisting of a rule-based colour model [120]. The effectiveness of fire detection and monitoring was then assessed using the DJI F550.
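The combination of a colour rule with thresholding inside a region of interest can be illustrated with a small sketch. The rule below (a bright red channel that dominates green and blue) and its threshold value are generic assumptions chosen for illustration; they are not the specific rules used in [12], [114], or [120].

```python
def is_fire_pixel(r, g, b, red_threshold=180):
    """Illustrative colour rule: bright red that dominates green and blue."""
    return r > red_threshold and r >= g > b

def fire_fraction(image, roi):
    """Fraction of fire-coloured pixels inside a region of interest.

    `image` is a list of rows of (r, g, b) tuples; `roi` is
    (top, left, bottom, right) with exclusive bottom/right bounds.
    """
    top, left, bottom, right = roi
    pixels = [image[y][x] for y in range(top, bottom) for x in range(left, right)]
    hits = sum(1 for (r, g, b) in pixels if is_fire_pixel(r, g, b))
    return hits / len(pixels)

# Tiny hypothetical 2 x 2 frame: two flame-like pixels, two background pixels.
frame = [
    [(255, 120, 30), (30, 90, 40)],
    [(250, 200, 50), (100, 100, 100)],
]
fraction = fire_fraction(frame, (0, 0, 2, 2))
```

Restricting the test to an ROI means only a subset of pixels is examined, which is the source of the computational saving mentioned above.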

Severe fire conditions can cause hardware failure in a UAV. Therefore, multiple UAVs working in collaboration can provide more reliable continuous monitoring. A simulation was proposed for fire monitoring tasks in which multiple UAVs provide a complete view of fire propagation [121]. The information gathered from several UAVs from different points of view was used to estimate the evolution of the fire. Another study addressed a bushfire front monitoring task with up to nine UAVs and incorporated wind [122]. Moreover, two different UAV models, a multi-copter and a fixed-wing model, were analysed. In addition, the researchers analysed both the advantages and disadvantages of MSTA and VDN algorithms in terms of the task complexity and scalability with the number of UAVs.

Similarly, an experiment with Qball X-4 quadrotors explored a group of six UAVs patrolling around the fire perimeter to provide information with minimum latency [123]. The authors started by forming a leader–follower model for detecting fire, and the model was later expanded by adding fault-tolerant cooperative control (FTCC). Furthermore, an effective path planning algorithm for UAVs tasked to monitor a forest fire was implemented to evaluate the time evolution of a bushfire [124]. Studies using multiple UAVs have addressed the effectiveness of the mission, when and which UAV should be taken down for refuelling, the coordination of UAV paths to cover the most critical areas, and how to measure the performance of the entire fleet of UAVs.

4.4.2. Fire Behaviour Prediction

Predicting fire propagation and using autonomous intelligence is essential for developing quick, effective, and advanced firefighting strategies. Therefore, the evolution of the fire front and other properties of the fire are very important, such as the fire front location, the spread rate, the flame height, the angle at which the flames incline, and the size of the burning area. By analysing the gathered information, an appropriate machine-learning algorithm can be developed to predict fire behaviour, navigate in the optimal path, and avoid obstacles while flying over the fire zone.

Fire-behaviour prediction is an essential task of fire-monitoring operations to provide continuous information about the fire to firefighters and to help develop effective firefighting strategies. This task involves predicting the propagation, estimating the rate of fire spread, fire intensity, post-fire damage evaluation, and fire descriptions, such as the fire front location, flame height, and so on. Many fire prediction methodologies have been developed with the aid of visual and infrared cameras and machine-learning algorithms in recent decades.

Sherstjuk et al. [20] used a combination of the multi-UAV-based automatic monitoring system and remote-sensing techniques that estimate necessary fire parameters and predict fire spreading. The authors reported achieving, under different terrain and weather conditions, 96% accuracy in predicting fire behaviour. A grid-based probability model [121] estimated the propagation direction using the probability of fire spreading to the surrounding grids. In addition, many other intelligent algorithms have also been investigated [65,66,125,126], including random forest, SVM, ANN, DNN, and MDP.
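The idea behind a grid-based probability model can be sketched as a simple stochastic update in which each burning cell may ignite its neighbours. This is a generic illustration rather than the model of [121]: the four-neighbour connectivity, the single uniform spread probability `p_spread`, and the function name are assumptions.

```python
import random

def spread_step(burning, rows, cols, p_spread, rng):
    """Advance the fire by one time step on a rows x cols grid.

    `burning` is a set of (row, col) cells on fire. Each burning cell
    ignites each of its four neighbours independently with probability
    `p_spread`. Burning cells stay burning in this simplified sketch.
    """
    new_burning = set(burning)
    for (r, c) in burning:
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            in_grid = 0 <= nr < rows and 0 <= nc < cols
            if in_grid and rng.random() < p_spread:
                new_burning.add((nr, nc))
    return new_burning

# Seeded generator so that a run can be repeated exactly.
rng = random.Random(0)
state = {(2, 2)}
for _ in range(3):
    state = spread_step(state, 5, 5, 0.4, rng)
```

A more realistic model would make the spread probability depend on direction, for example larger downwind or upslope, which is how such models estimate the dominant propagation direction.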

These approaches are widely applied in bushfire behaviour prediction due to their high performance in different surroundings. Toujani et al. [127] proposed an approach based on the Markov process for burned area prediction in northern Tunisia. Spatiotemporal factors influencing fire behaviour are classified using a self-organising map (SOM). Liang et al. [128] estimated the scale of a bushfire using the size of the burned area and the fire’s duration. The prediction models were built using a back propagation neural network (BPNN), a recurrent neural network (RNN), and long short-term memory (LSTM). They found that LSTM achieved the highest accuracy among these methods. Table 11 shows the ML methods and the different types of sensors and cameras used in UAV-based bushfire prediction applications.

Table 11.

Applications of UAVs in bushfire prediction.

4.4.3. Autonomous Navigation

UAVs have been proposed as an adequate technology for bushfire management because of their capabilities and attributes. However, UAVs must be fully aware of their state, location, direction, navigation speed, starting point, and target location to complete the mission [129]. Sometimes, due to internal and external constraints, it is not easy for UAVs to proceed with the task. In some disaster relief situations, it may not be possible to obtain a map of the target area in advance. Building maps during the flight would be essential for efficient management under such circumstances.

In the literature on navigation problems, the Markov decision process (MDP) and the partially observable Markov decision process (POMDP) have been recommended for making navigation decisions under uncertain conditions [72,73,121,130]. Similarly, Vanegas and Gonzalez [131] addressed the problem of navigating onboard autonomous UAVs under uncertainty in cluttered and GPS-denied environments. The authors compared and integrated two online POMDP solvers, partially observable Monte Carlo planning (POMCP) [132] and an adaptive belief tree (ABT), to form a modular system architecture along with a motion control module and perception module.
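Planning under partial observability rests on maintaining a belief, i.e., a probability distribution over the states the UAV might be in. The sketch below shows one discrete belief update of the kind that underlies POMDP planning; it is not an implementation of POMCP or ABT, and the two-state example and its probabilities are hypothetical.

```python
def belief_update(belief, transition, obs_likelihood):
    """One discrete Bayes-filter step over a finite set of states.

    belief[i]          prior probability of being in state i
    transition[i][j]   probability of moving from state i to state j
    obs_likelihood[j]  probability of the received observation in state j
    """
    n = len(belief)
    # Prediction: propagate the belief through the transition model.
    predicted = [sum(belief[i] * transition[i][j] for i in range(n))
                 for j in range(n)]
    # Correction: weight by the observation likelihood, then normalize.
    unnormalized = [predicted[j] * obs_likelihood[j] for j in range(n)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]

# Two hypothetical states ("corridor clear", "corridor blocked").
prior = [0.5, 0.5]
stay_put = [[1.0, 0.0], [0.0, 1.0]]
posterior = belief_update(prior, stay_put, [0.9, 0.1])
```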

Obstacle detection and avoidance are essential characteristics of any autonomous navigation system operating under uncertain conditions, since they detect nearby obstacles and provide the necessary information about them (such as the distances between the UAV and obstacles), which helps reduce the risks of collision and operation errors.

If an obstacle approaches the UAV, the UAV should be able to avoid it or change direction under the instructions given by the obstacle avoidance module. In addition, the UAV navigation process needs to find an optimal obstacle-free path between the starting position and the target position based on the shortest flying time, minimum cost of work, and the shortest flying route.
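Finding a shortest obstacle-free route can be illustrated on a small occupancy grid. The sketch below uses breadth-first search, which returns a path with the fewest moves when every move has the same cost; the grid layout, the four-connected motion model, and the function name are assumptions, and practical planners typically use weighted costs and algorithms such as A*.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid.

    Cells equal to 1 are obstacles; cells equal to 0 are free.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to reconstruct the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            nr, nc = nxt
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and nxt not in parents):
                parents[nxt] = cell
                queue.append(nxt)
    return None

# Hypothetical map: a wall of obstacles forces a detour around the bottom.
area = [
    [0, 1, 0],
    [0, 1, 0],
    [0, 0, 0],
]
route = shortest_path(area, (0, 0), (0, 2))
```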

UAVs with consumer-based digital cameras and fire-detection algorithms are used for inspection purposes. The study [43] used monocular cameras for collecting videos, and simultaneous localization and mapping (SLAM) systems were used for navigation. The simulation results showed that both methods allowed the aircraft to track bushfires accurately, scale with different numbers of aircraft, and generalize to different bushfire shapes [133]. A novel algorithm was implemented with block-based texture features, colour features, and artificial neural networks (ANN) to detect smoke and flame from a single image [1]. The proposed algorithm for existing UAV-based fire monitoring systems can provide rapid, reliable, and continuous detection under any situation.

Despite these positive results, many issues related to UAV-based forest fire monitoring systems, including their architecture, sensors, suitable platforms, and remote sensing, still need to be further investigated. In particular, localized environmental and terrain conditions combined with natural disturbances, such as wind, atmospheric pressure, and temperature, cause losses in UAV sensory information, which affects tasks such as fire factor prediction and navigation near the fire. A proper mechanism and path-planning strategy must be developed to improve the prediction accuracy and resolve the above-mentioned issues.

4.4.4. Obstacle Detection

UAVs have become integral to a wide range of public activities today due to their intelligence and autonomy. Intelligent collision avoidance systems make UAVs safer by preventing accidents with other objects and reducing risk. The first stage of any collision avoidance system is detecting obstacles with high accuracy and efficiency. While flying over high-risk zones, UAVs should be able to sense their surroundings and the environment to locate obstacles. Therefore, UAVs require sensitive sensors, and lightweight sensors may be the best choice given the size, weight, and power constraints of UAVs. Additionally, the authors of [45] suggested that UAVs equipped with more than one sensor can be more effective in detecting threats than those equipped with only one sensor.

Various factors may influence obstacle detection, including size, direction, and wind speed. Several approaches were presented for obstacle detection in vision-based navigation. Various approaches [134,135,136] have used a 3D model, while other approaches [134,137,138] calculated the distance of the obstacles. Methods based on optical flow [139,140,141] or perspective cues [142] can also estimate the presence of an obstacle without considering a 3D model.

However, optical flow approaches cannot deal with frontal movement because of the aperture problem. Frontal obstacles would therefore only provide a movement component normal to the perceived edges, not actual frontal movement information. SIFT descriptors and multi-scale oriented patches (MOPS) are combined in [143] to provide 3D information about objects by extracting their edges and corners. The presented approach, nonetheless, requires a long computational time.

4.4.5. Obstacle Avoidance

Collision avoidance systems are vital for both non-autonomous and autonomous vehicles since they can detect, provide crucial information about obstacles, and reduce collision risks. A number of factors are involved in collisions, including operator negligence, equipment failure, and bad weather conditions. Intelligent collision avoidance methods can significantly reduce the risk of collisions between planes and save lives in this way. A UAV collision avoidance system ensures that no collision occurs with stationary or moving obstacles.

The obstacle avoidance operation involves finding obstacles and calculating the distances between the UAV and any nearby obstacles. For that purpose, the collision avoidance system can detect obstacle characteristics (such as the velocity, size, and position), calculate the risk of collision if the object is approaching, and make collision avoidance decisions as a result of the calculations [45].
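One simple way to turn an obstacle's position and velocity into a collision risk is to compute the closest point of approach. The sketch below assumes that the relative velocity stays constant over the look-ahead horizon; the function names, the safety radius, and the horizon are illustrative assumptions rather than a method taken from [45].

```python
import math

def closest_approach(rel_pos, rel_vel):
    """Time and distance of closest approach under constant relative velocity.

    rel_pos and rel_vel are the obstacle's position and velocity
    relative to the UAV, given as equal-length coordinate tuples.
    """
    speed_sq = sum(v * v for v in rel_vel)
    if speed_sq == 0.0:
        t = 0.0  # no relative motion: the separation never changes
    else:
        # Minimize |rel_pos + rel_vel * t| over t >= 0.
        t = max(0.0, -sum(p * v for p, v in zip(rel_pos, rel_vel)) / speed_sq)
    miss = math.sqrt(sum((p + v * t) ** 2 for p, v in zip(rel_pos, rel_vel)))
    return t, miss

def collision_risk(rel_pos, rel_vel, safety_radius, horizon):
    """True if the obstacle enters the safety radius within the horizon."""
    t, miss = closest_approach(rel_pos, rel_vel)
    return miss < safety_radius and t <= horizon

# Obstacle 10 m ahead, closing head-on at 2 m/s.
t_cpa, d_cpa = closest_approach((10.0, 0.0), (-2.0, 0.0))
```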

Visual sensors are used to obtain visual information for obstacle avoidance based on optical flow-based and SLAM-based methods. Based on optical flow, Gosiewski et al. [144] used image processing techniques to avoid obstacles by obtaining the depth of the image and formulating local information flow. Yasin et al. [45] reviewed the strategies and mechanisms in UAVs for obstacle detection and collision avoidance.

They discussed the different types of sensors for collision avoidance in the context of UAVs, including passive sensors (camera and infrared) and active sensors (RADAR, LiDAR, and SONAR). The survey categorised collision avoidance techniques into geometric, force-field, optimisation-based, and sense-and-avoid methods and explained different scenarios and technical aspects.

Al-Kaff et al. [134] presented a bio-inspired approach to detect the change of obstacle size during flight and to mimic human eyes for obstacle detection and avoidance in UAVs. The system concluded with vision-based navigation, and an obstacle-detection algorithm was performed based on the input images captured from the front camera. This could identify the obstacle by comparing the sequential images and finding the nearest obstacles. However, the vision-based method in some specific operations needs to acquire an accurate distance. In contrast, the SLAM algorithm in UAVs can navigate and avoid obstacles with environmental information [145].

4.4.6. Search and Rescue

A search and rescue (SAR) operation’s primary objectives are to save lives, protect civilians, and manage disasters. Using onboard cameras and navigation sensors, UAVs could help disaster responders identify potential victims in large disaster areas scouted from the air. The time required for SAR missions can be drastically reduced by using UAVs, resulting in significant financial savings and the ability to save lives [146]. Several fundamental factors must be considered when designing a UAV-based search and rescue system, including the restrictions on energy consumption, the hazards of the environment, the data quality, and communication between UAVs [147,148]. Furthermore, the authors in [148] analysed how these parameters affect search performance and studied different search algorithms.

Recent research has shown that UAVs can be highly useful for SAR operations [146,147,149]. As part of a search and rescue mission, a UAV may be deployed in heavy snow and woods at night and during the day to identify victims. The use of unmanned aerial vehicles with electro-optical sensors, real-time processing modules, and advanced communication systems can improve the ability of government authorities and rescue agencies to identify and locate wounded and missing people during disasters and afterwards [146]. Moreover, this multipurpose UAV can carry emergency kits or life-support devices that can be dropped on victims in an emergency [147].

An innovative UAV-routing framework that maximizes mobility and transmission power for emergency message delivery and gathering is introduced in [150]. The authors of [151] presented a way to obtain information about a disaster-affected region. This method takes photos in real time, marks their positions and altitudes, and sends those images and flight characteristics to a ground control station, creating a three-dimensional danger map based solely on local information. Researchers showed how UAVs equipped with vision cameras [152] and ML techniques [153,154] can assist in avalanche SAR operations. According to [155], data from onboard sensors provide colour and depth information for detecting a human body. Furthermore, the authors presented a computational model that rotates the point of view around the target and is size-invariant. Table 12 outlines other UAV-based applications in bushfire management.

Table 12.

Other applications of UAVs in bushfire management.

5. Challenges of UAV-Based Bushfire Management

The application of UAVs to bushfire operations has received considerable attention due to their significant impact on remote sensing. However, some challenges prevent firefighters from adopting UAV applications and affect the accuracy and performance of operations with UAVs. This section highlights the challenges of UAVs in bushfire operations, which may be used to improve processes in the future.

5.1. Technical Challenges

New challenges arise in the design of the systems, the performance of networks, the optimization of communication channels, remote sensing, and the exploration of energy constraints in light of the technical requirements of aerial applications. For example, UAVs operating cooperatively on disaster rescue must meet tight timing synchronization requirements, whereas considerable communication delays may be tolerated in disaster area scanning applications [147]. In some disaster areas, transmission rates are low, while in others, high rates are required for applications, such as identifying trapped people [147]. We examine the existing technical challenges associated with UAV-based bushfire management in this section.

UAV energy limitations: One of the greatest challenges is battery power consumption since UAVs depend on their onboard battery to power their operations. The power consumption is particularly an issue for smaller-sized UAVs that cannot carry larger batteries due to their payload capacity limitations [158]. In some instances, UAVs must be operated over disaster-stricken regions for extended periods. This is not always possible due to limited onboard power sources for UAV hovering, data processing, wireless communications, and image analysis. Researchers are investigating ways to extend UAV power supplies while on a mission to avoid disruptions. For example, energy harvesting using far-field wireless power transfer from dedicated power sources has been proposed [159,160]. Ubiquitous solutions for implementing in situ and contactless power supply for commodity UAVs, particularly small-sized ones, are yet to be available in the market.

Camera limitation: There is a need to address the limitations of current lightweight digital cameras based on their radiometric and geometrical properties. UAV digital cameras are designed for the general market rather than for remote-sensing applications. In addition, the camera’s detectors may become saturated in areas with high contrasts, such as when the affected area includes a dark forest and a snow-covered field. Additionally, many cameras suffer from vignetting, where the central part of the image appears brighter than the edges [161]. Several techniques can be used to improve the quality of the image, such as using micro four-thirds cameras with fixed interchangeable lenses instead of retractable lenses, which results in significantly enhanced calibrations. Removing blurry, under- or overexposed, and saturated images can also make a large difference in the processing stage [162].

Absence or inaccuracy of GPS signals: In addition to allowing autonomous UAVs to navigate in indoor environments without GPS signals, another challenge is to enable them to make a safe landing [163]. A UAV uses GPS sensors to pinpoint its location before taking off so that it can plan a flight path to its destination [164]. A GPS may not function properly due to obstructions or inadequate satellite signals, and the accuracy of such a system may be decreased for low-cost UAVs. It is challenging to fly in settings without GPS and to only rely on onboard sensors for localization. This is particularly true when obstacles must be avoided in the airspace or when the target’s location is unclear, so that the UAV has to fly and explore until it reaches the target.

Multi-UAV collaboration: Enhancing efficiency requires collaboration and coordination among UAVs. Moreover, UAVs have been integrated with cooperative wireless networks to provide communication facilities between them in managing bushfires. Tight timing synchronization between multiple UAVs is required when they work together on bushfire monitoring, whereas extensive communication delays can be tolerated when the application scans the disaster area. There is a limit to the payload capacity of mini-UAVs due to their small size [147]. To effectively manage disasters, UAVs must cooperate within the team and with the ground network, such as a cloud, using the many available sensing options, such as radars, infrared cameras, thermal cameras, and image sensors [165].

Path planning: For UAVs to autonomously cover the area of interest in dynamically changing situations, intelligent trajectory planning and optimization are necessary [166]. While trajectories need to be optimized to address the needs of the situation (e.g., fire spot locations), they must also be energy efficient [167,168]. These competing objectives call for multi-criteria optimization, which becomes a multi-agent optimization problem when applied to multi-UAV scenarios.

This would lead to more complexity in the optimization model, and convergence time could be an issue in real-time systems that run on UAV onboard computers. In addition, dynamic changes may occur during bushfire monitoring, such as UAVs joining or disconnecting, the removal of physical obstacles, and the emergence of dynamic threats. In these situations, the UAV’s previous path must be changed and new directions must be calculated dynamically [169]. Therefore, high-performance dynamic path-planning techniques are needed for efficient monitoring.
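A minimal sketch of the two ideas above, multi-criteria cost and dynamic replanning, is given below for a single UAV on a grid. It is not the method of [166–169]: the weighted-sum cost, the grid model, the `energy` and `priority` maps, and the weights `w_energy` and `w_priority` are all assumptions chosen for illustration. The search itself is a standard Dijkstra shortest-path search, and replanning is done simply by calling it again from the current cell with the updated obstacle set.

```python
import heapq


def plan_path(rows, cols, start, goal, blocked, energy=None, priority=None,
              w_energy=1.0, w_priority=1.0):
    """Dijkstra search on a 4-connected grid with a weighted-sum step cost.

    energy[cell]   : hypothetical energy needed to enter a cell (default 1.0, >= 0)
    priority[cell] : hypothetical monitoring priority in [0, 1] (default 0.0)
    Returns the list of cells from start to goal, or None if unreachable.
    """
    energy = energy or {}
    priority = priority or {}

    def step_cost(cell):
        # High-priority cells (e.g. near fire spots) are cheaper to visit,
        # energy-hungry cells are more expensive; the cost stays non-negative.
        return (w_energy * energy.get(cell, 1.0)
                + w_priority * (1.0 - priority.get(cell, 0.0)))

    frontier = [(0.0, start)]
    best = {start: 0.0}
    parent = {start: None}
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        if cost > best[cell]:
            continue  # stale queue entry, a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nxt[0] < rows and 0 <= nxt[1] < cols
            if not inside or nxt in blocked:
                continue
            new_cost = cost + step_cost(nxt)
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                parent[nxt] = cell
                heapq.heappush(frontier, (new_cost, nxt))
    return None


# Example: plan, then replan when the next cell on the path becomes blocked.
blocked = {(1, 1)}
path = plan_path(3, 3, (0, 0), (2, 2), blocked)
blocked.add(path[1])  # a new obstacle is detected ahead
new_path = plan_path(3, 3, path[0], (2, 2), blocked)
```

Replanning from scratch is the simplest option; for large maps or multi-UAV teams, the convergence-time concern raised above would likely call for incremental or distributed planners, which this sketch does not attempt.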

5.2. Environmental Challenges

Weather conditions challenge UAVs because they often cause deviations from predetermined paths. Conditions such as rain, snow, bush clouds, hurricanes, lightning, or air pollution become particularly difficult and critical when natural or human-made disasters occur. In such scenarios, UAVs risk failing to accomplish their missions due to adverse weather. There is considerable evidence [170] that power consumption is more sensitive to environmental factors, such as side winds, than to altitude or payload.

On a sunny day, clear and well-defined shadows can cause critical problems for the automated image-matching algorithms used in the triangulation process and in digital elevation model generation [162]. If the clouds are moving quickly, shaded areas can appear differently on different images acquired during the same mission, thus causing the aerial triangulation process to fail for some images and introducing errors into automatically generated digital elevation models. Automated colour-balancing algorithms can also produce a mosaic with poor visual quality when they are affected by patterns of light and shade across images [162].

Safe and controlled UAV landings under dynamic conditions, such as on a moving or oscillating platform, are also affected by such environmental disturbances. Different methodologies or algorithms should be implemented to minimise the effects of these uncertainties and disturbances. In [171], the authors proposed a triangular mesh generation method that considers the wind field and performs online adjustments to minimise the losses caused by the identified wind field and optimise coverage in urban areas. The authors of [166,172] proposed UAV collision-avoidance methods that deal with the instability caused by winds, sensor noise, and unknown obstacle acceleration.

The system’s accuracy depends on UAV capabilities such as obstacle detection and avoidance, as well as finding the optimal and shortest flight path under uncertain environmental conditions. The collision-avoidance approaches considered are robust, with low data overheads and low response times, which makes them a good choice in all kinds of environments for avoiding obstacles and ensuring the safety of the UAVs. Moreover, a more efficient path-planning algorithm needs to be integrated to ensure that the UAV reaches its destination after avoiding collisions without becoming stuck.

6. Conclusions

UAVs can be utilized in bushfire management systems to detect fire faster, provide imagery from difficult-to-reach locations, make operations safer, and save lives. However, research shows that modern technologies in bushfire management systems need to be more efficient, as is evident from large-scale bushfires attributed to poor management systems [173]. In this paper, we examined the efficiency of using UAVs for bushfire management, including fire detection, fire prediction, search and rescue, navigation, and other technologies used to maximize the accuracy of results.

Furthermore, this paper compiled relevant data on the use of UAVs in bushfire management and discussed their benefits and drawbacks. It showed that localized environmental and terrain impediments make it difficult for UAVs to predict fire factors accurately and to navigate. A review of a significant amount of recent research showed the difficulty of locating bushfires using environmental sensors and GPS data.

This paper highlighted the significance of the architecture, sensors, suitable platforms, and remote-sensing capabilities of UAV-based bushfire management. Infrared cameras mounted on UAVs most often form part of a multisensor payload, usually in combination with RGB and thermal sensors. This is convenient since operators can capture visual and thermal data simultaneously. Aggregating knowledge from different sources is more effective than relying on a single sensor.

Despite adverse weather conditions, UAVs must be capable of battling fires as they increase in size, frequency, and intensity. UAVs need high energy efficiency when dealing with large fires or long-duration operations. Moreover, when working with firefighters, the UAVs must be reliable and equipped with advanced thermal sensors, longer flight times, heat resistance, obstacle avoidance, and waterproofing to operate in all temperatures and conditions. Hence, there is an urgent need for further development in the aforementioned areas using robust and global optimization algorithms for bushfire management.

Furthermore, device vibration, poor camera quality, fast movement, and UAV rotation often disturb images and cause low-quality imaging. UAVs with machine-learning and deep-learning technology can address numerous operational and management challenges in bushfire management. In some cases, however, the sensors mounted on the UAVs are inadequate for sensing a large-scale affected region. UAVs may also fail to detect fire in smoky or cloudy conditions and may produce false or missed detections due to uncertainty, which reduces their acceptance by the firefighting industry and government authorities.

Moreover, UAV-based bushfire management still has several drawbacks, such as high initial costs, the loss of UAVs due to uncertainty or hardware failure, limited sensor capabilities, technical issues, the absence of established procedures for processing massive amounts of data, and the need for expert operators. Ultimately, robust UAV-based bushfire management can deliver significant social, economic, and environmental benefits as well as enhance decision-making and firefighting.

Author Contributions

Conceptualization, S.P., F.S. and J.H.; methodology, S.P.; validation, S.P., F.S. and J.H.; formal analysis, S.P.; writing—original draft preparation, S.P.; writing—editing, S.P.; reviewing the manuscript, S.P., F.S. and J.H.; visualization, S.P.; supervision, F.S. and J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ABT | Adaptive Belief Tree |
| AI | Artificial Intelligence |
| ANN | Artificial Neural Network |
| ARC | Ames Research Center |
| ASTER | Advanced Spaceborne Thermal Emission and Reflection Radiometer |
| AVIC | Aviation Industry Corporation |
| BPNN | Back-propagation Neural Network |
| CSIRO | Commonwealth Scientific and Industrial Research Organisation |
| CCD | Charge Coupled Device |
| CMOS | Complementary Metal-Oxide Semiconductor |
| CNN | Convolutional Neural Network |
| DBNet | Deep Belief Network |
| DCNN | Deep Convolutional Neural Network |
| DL | Deep Learning |
| DSS | Decision Support System |
| DT | Decision Tree |
| EMBYR | Ecological Model for Burning the Yellowstone Region |
| FARSITE | Fire Area Simulator |
| FDS | Fire Dynamics Simulator |
| FiRE | Fire Response Experiment |
| FLIR | Forward-Looking Infrared |
| FOFEM | First Order Fire Effects Model |
| FSim | Feature-Similarity |
| FTCC | Fault-Tolerant Cooperative Control |
| FuzCoC | Fuzzy Complementary Criterion |
| GPR | Gaussian Process Regression |
| GPS | Global Positioning System |
| IEEE | Institute of Electrical and Electronics Engineers |
| IMU | Inertial Measurement Unit |
| IR | Infrared |
| kNN | k-Nearest Neighbour |
| LIDAR | Light Detection and Ranging |
| LSTM | Long Short-Term Memory |
| MASTER | MODIS/ASTER |
| MDP | Markov Decision Process |
| ML | Machine Learning |
| MODIS | Moderate Resolution Imaging Spectroradiometer |
| MOPS | Multi-Scale Oriented Patches |
| NASA | National Aeronautics and Space Administration |
| NIST | National Institute of Standards and Technology |
| NN | Neural Network |
| POMCP | Partially Observable Monte Carlo Planning |
| POMDP | Partially Observable Markov Decision Process |
| Radar | Radio Detection and Ranging |
| RF | Random Forest |
| RAPT | RAre class Prediction in absence of True labels |
| RGB | Red Green Blue |
| RL | Reinforcement Learning |
| RNN | Recurrent Neural Network |
| ROI | Region of Interest |
| SAR | Search and Rescue |
| SIFT | Scale-Invariant Feature Transform |
| SLAM | Simultaneous Localization and Mapping |
| SLR | Single-Lens Reflex |
| SONAR | Sound Navigation and Ranging |
| SOM | Self-Organizing Map |
| SVR | Support Vector Regression |
| UAV | Unmanned Aerial Vehicle |
| UGV | Unmanned Ground Vehicle |
| USFS | United States Forest Service |
| WFDS | Wildland-Urban Fire Dynamics Simulator |
| WSN | Wireless Sensor Network |
| YOLO | You Only Look Once |

References

- Hossain, F.A.; Youmin, Z.; Yuan, C.; Su, C. Wildfire Flame and Smoke Detection Using Static Image Features and Artificial Neural Network. In Proceedings of the 2019 First International Conference on Industrial Artificial Intelligence (IAI), Shenyang, China, 23–27 July 2019; pp. 1–6.

- Ivić, M. Artificial Intelligence and Geospatial Analysis in Disaster Management. Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2019, XLII-3/W8, 161–166.

- Thomas, D.; Butry, D.; Gilbert, S.; Webb, D.; Fung, J. The Costs and Losses of Wildfires; National Institute of Standards and Technology Special Publication; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2017; Volume 1215.

- Richards, L.; Brew, N.; Smith, L. 2019–20 Australian bushfires—Frequently asked questions: A quick guide. Res. Ser. 2019, 2, 1–10.

- Wuebbles, D.J.; Fahey, D.W.; Hibbard, K.A.; Arnold, J.R.; DeAngelo, B.; Doherty, S.; Easterling, D.R.; Edmonds, J.; Edmonds, T.; Hall, T.; et al. Climate Science Special Report: Fourth National Climate Assessment (NCA4); U.S. Global Change Research Program: Washington, DC, USA, 2017; Volume 1, p. 470.

- Weiskittel, A.R.; Maguire, D.A. Response of Douglas-fir leaf area index and litterfall dynamics to Swiss needle cast in north coastal Oregon, USA. Ann. For. Sci. 2007, 64, 121–132.

- Kolarić, D.; Skala, K.; Dubravić, A.T. Integrated system for forest fire early detection and management. Period. Biol. 2008, 110, 205–211.

- Allison, R.S.; Johnston, J.M.; Craig, G.; Jennings, S. Airborne optical and thermal remote sensing for wildfire detection and monitoring. Sensors 2016, 16, 1310.

- CSIRO, Model Library. Available online: https://research.csiro.au/spark/resources/model-library (accessed on 10 June 2021).

- Akhloufi, M.A.; Castro, N.A.; Couturier, A.T. Unmanned aerial systems for wildland and forest fires: Sensing, perception, cooperation and assistance. arXiv 2020, arXiv:2004.13883.

- Afghah, F.; Razi, A.; Chakareski, J.; Ashdown, J. Wildfire monitoring in remote areas using autonomous unmanned aerial vehicles. In Proceedings of the IEEE INFOCOM 2019—IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Paris, France, 29 April–2 May 2019; pp. 835–840.

- Vipin, V.T. Image processing based forest fire detection. Int. J. Emerg. Technol. Adv. Eng. 2012, 2, 87–95.

- Zhang, Y.; Jiang, J. Bibliographical review on reconfigurable fault-tolerant control systems. Annu. Rev. Control. 2008, 32, 229–252.

- Roldán-Gómez, J.J.; González-Gironda, E.; Barrientos, A. A Survey on Robotic Technologies for Forest Firefighting: Applying Drone Swarms to Improve Firefighters’ Efficiency and Safety. Appl. Sci. 2021, 11, 363.

- Arif, M.; Alghamdi, K.K.; Sahel, S.A.; Alosaimi, S.O.; Alsahaft, M.E.; Alharthi, M.A.; Arif, M. Role of machine learning algorithms in forest fire management: A literature review. J. Robot. Autom. 2021, 5, 212–226.

- Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A review on early forest fire detection systems using optical remote sensing. Sensors 2020, 20, 6442.

- Jain, P.; Coogan, S.C.P.; Subramanian, S.G.; Crowley, M.; Taylor, S.; Flannigan, M.D. A review of machine learning applications in wildfire science and management. Environ. Rev. 2020, 28, 478–505.

- Yuan, C.; Zhang, Y.; Liu, Z. A survey on technologies for automatic forest fire monitoring, detection, and fighting using unmanned aerial vehicles and remote sensing techniques. Can. J. For. Res. 2015, 45, 783–792.