Assessment of Indiana Unmanned Aerial System Crash Scene Mapping Program

Abstract

1. Introduction

1.1. Affordability

1.2. Public Safety Implementation

1.3. 2-Dimensional Measuring Techniques

1.4. 3-Dimensional Measuring Techniques

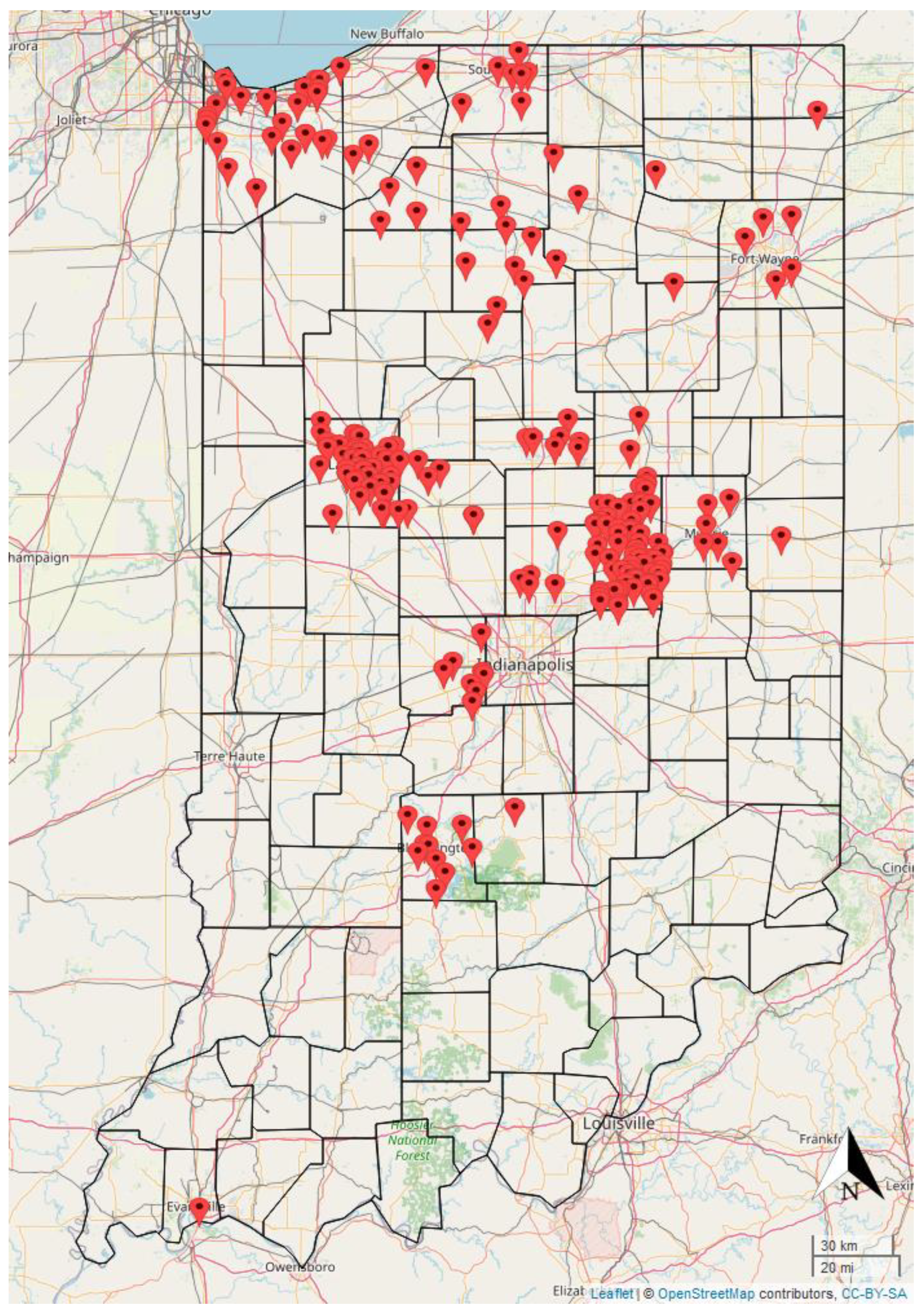

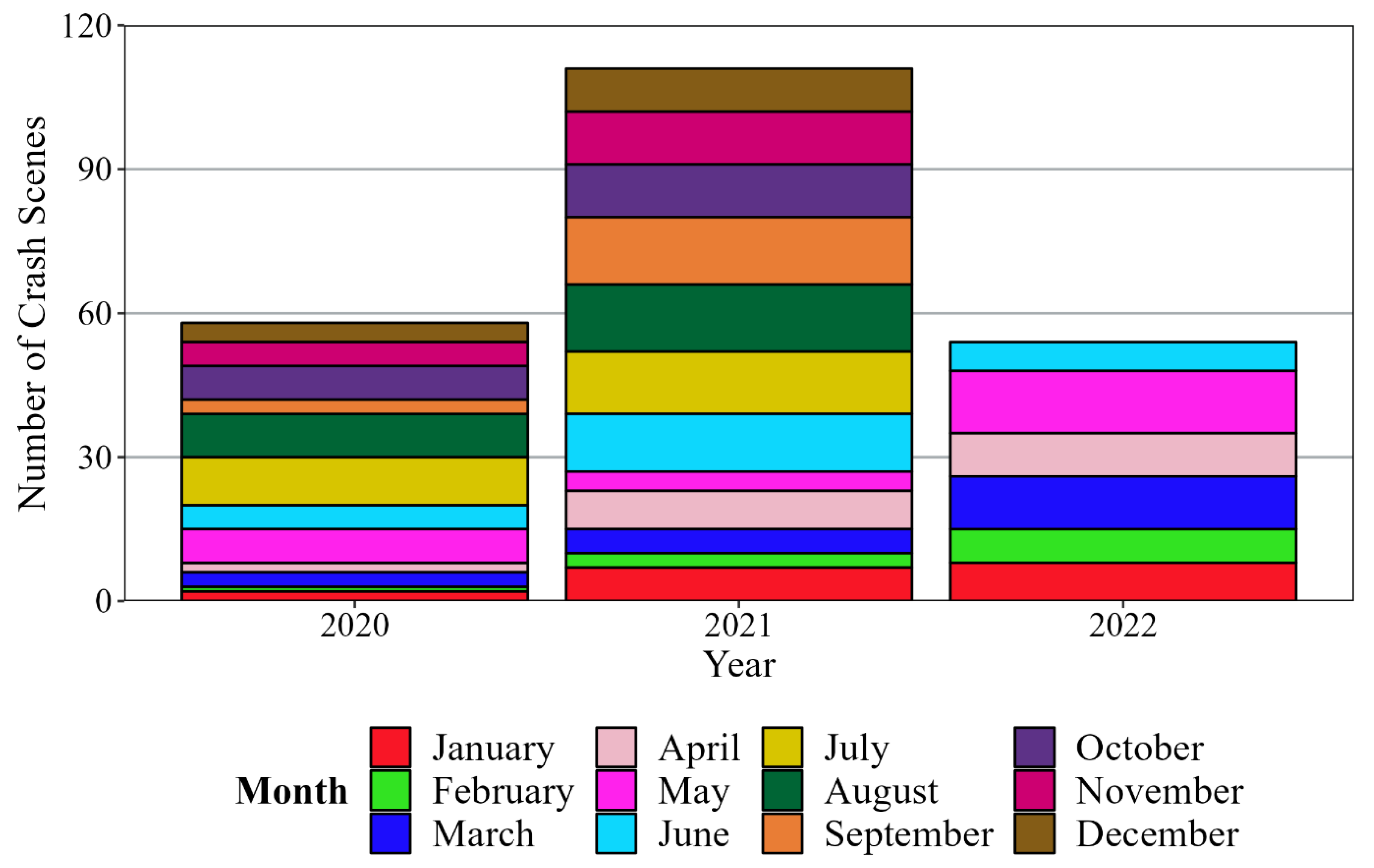

2. Statewide Deployment and Program Summary Statistics

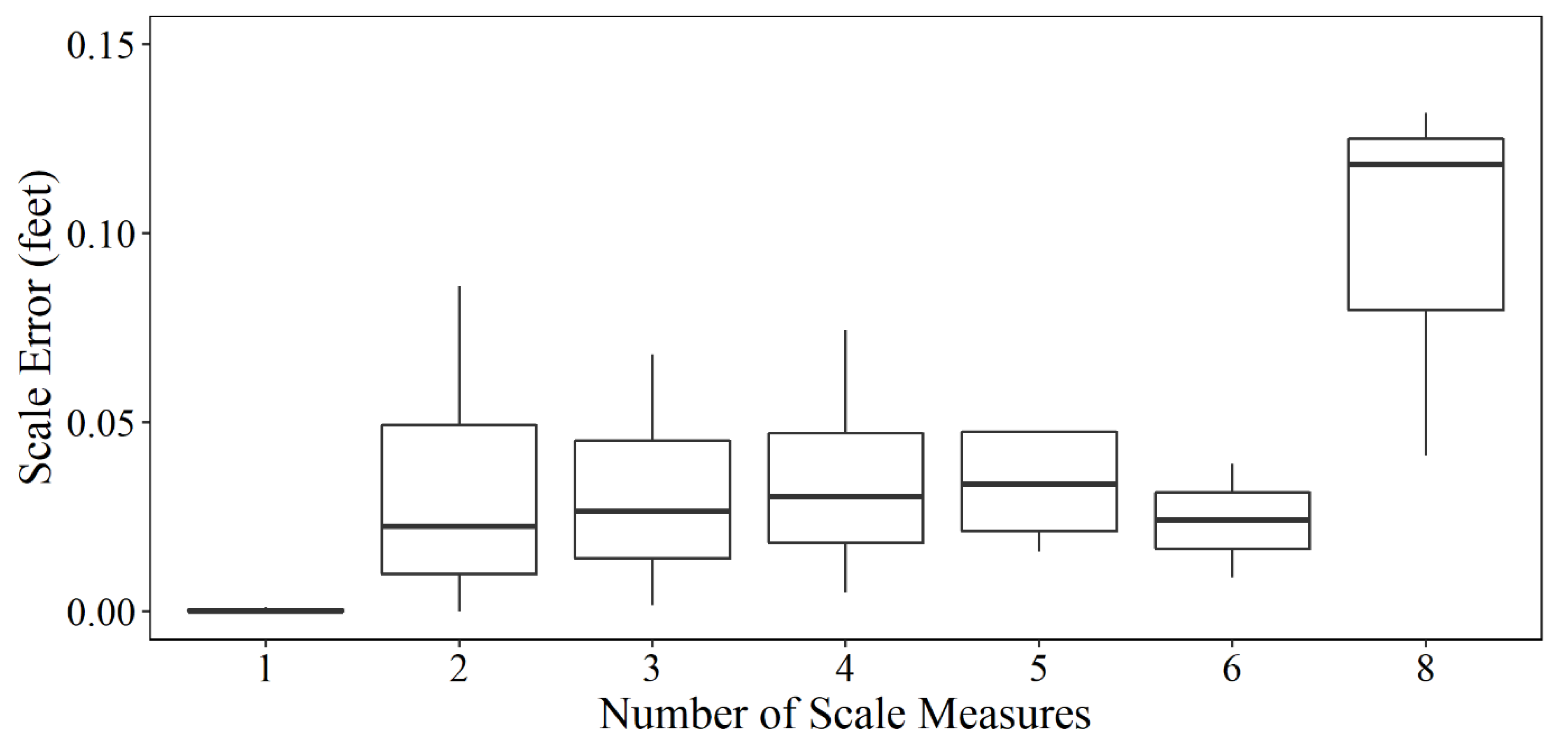

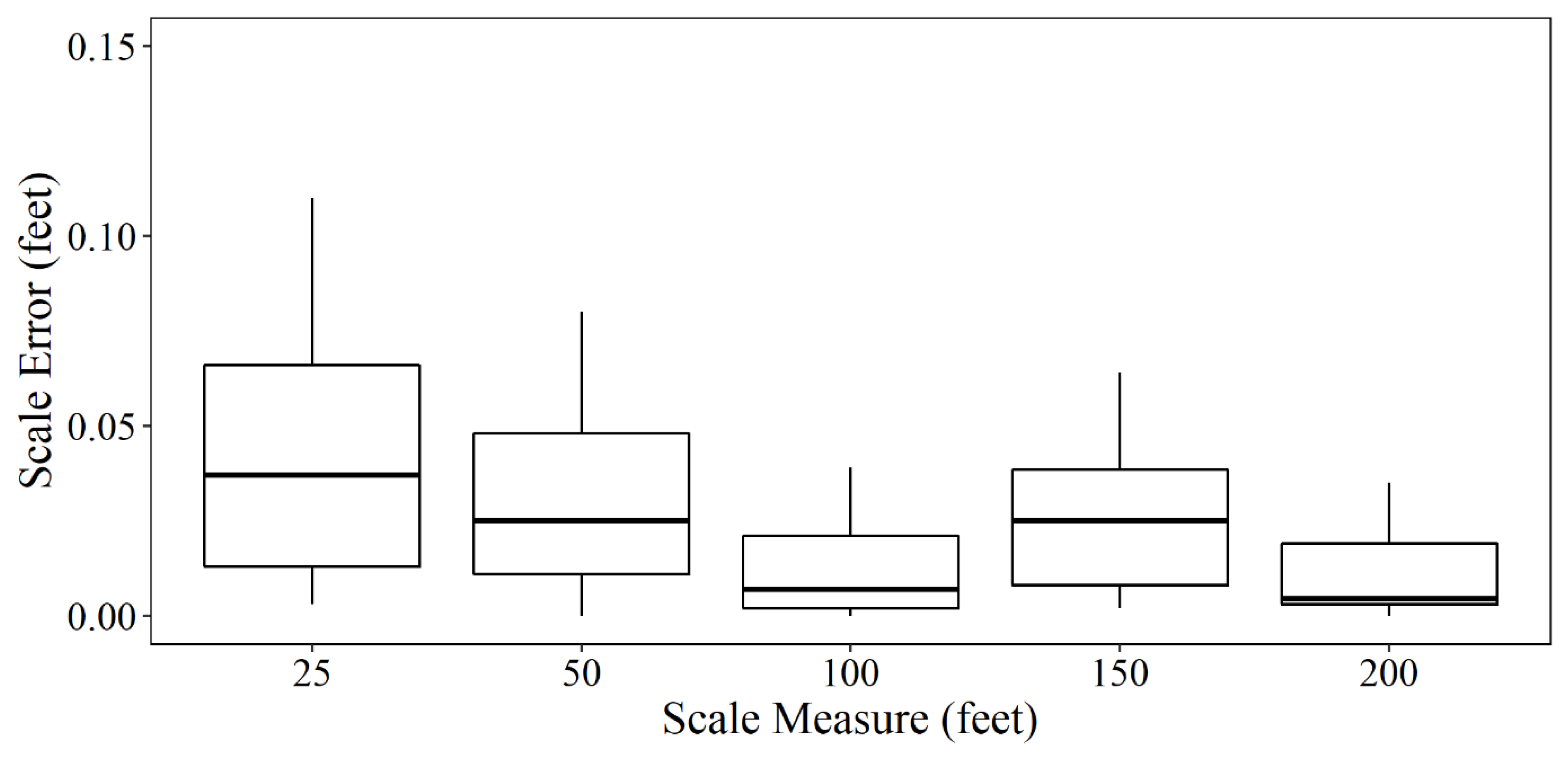

3. Spatial Accuracy of UAS-Based Photogrammetric Crash Scene Mapping

3.1. Scale Measurement Distances

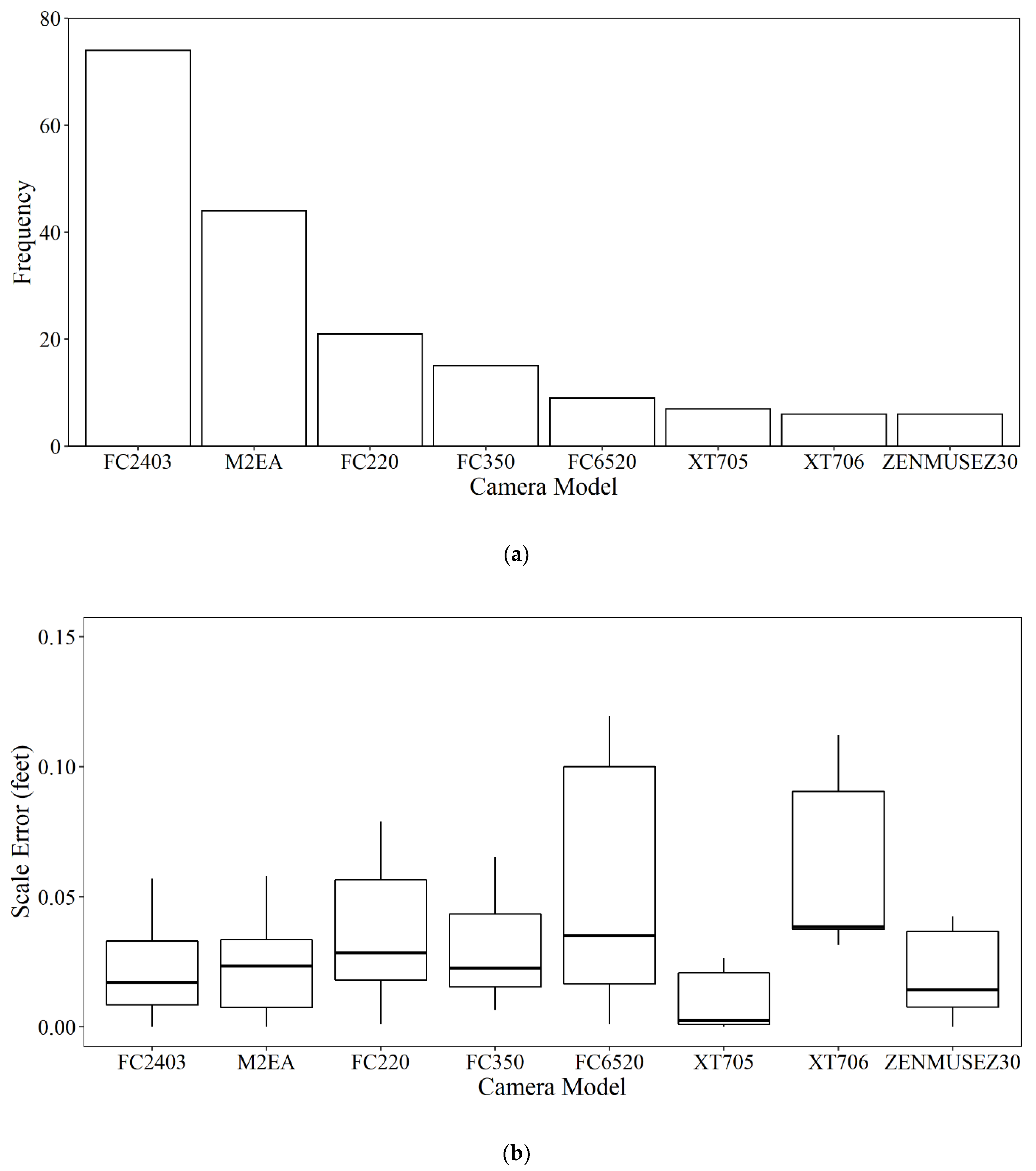

3.2. UAS Camera Models

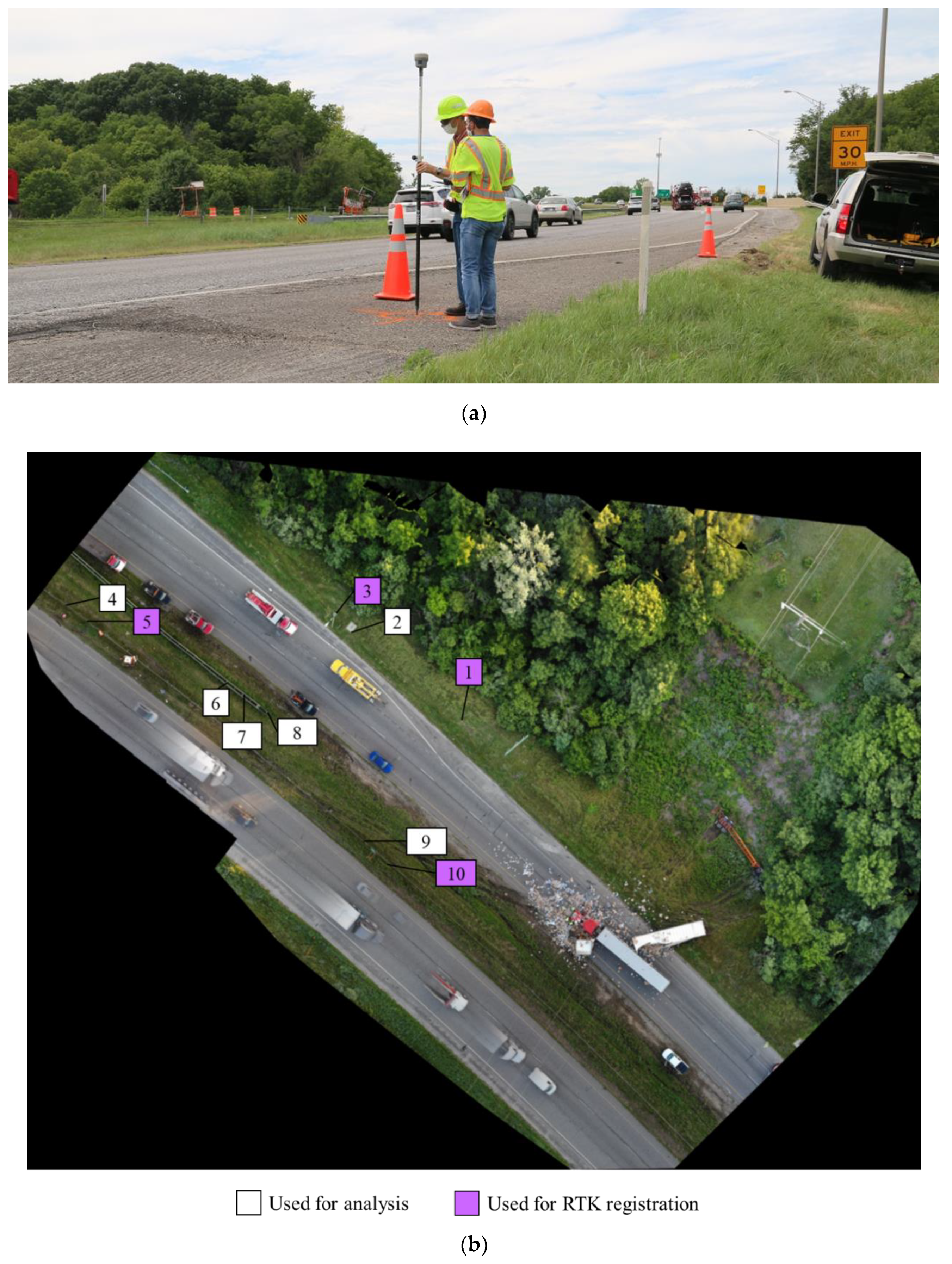

4. Case Study Comparison with Terrestrial Measurements

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

| Measured Segment | Segment Endpoints (from Figure 11) | Orthophoto (ft) | RTK (ft) | Error, Orthophoto − RTK (ft) |
|---|---|---|---|---|
| A | P2, P4 | 160.79 | 160.75 | 0.03 |
| B | P2, P6 | 82.30 | 82.38 | −0.08 |
| C | P2, P7 | 77.34 | 77.47 | −0.13 |
| D | P2, P8 | 73.17 | 73.31 | −0.14 |
| E | P2, P9 | 132.21 | 132.38 | −0.16 |
| F | P4, P6 | 94.05 | 94.11 | −0.06 |
| G | P4, P7 | 106.18 | 106.14 | 0.04 |
| H | P4, P8 | 124.99 | 124.84 | 0.15 |
| I | P4, P9 | 218.88 | 218.80 | 0.07 |
| J | P6, P7 | 12.45 | 12.34 | 0.11 |
| K | P6, P8 | 31.62 | 31.38 | 0.23 |
| L | P6, P9 | 129.85 | 129.70 | 0.16 |
| M | P7, P8 | 19.16 | 19.04 | 0.12 |
| N | P7, P9 | 117.64 | 117.60 | 0.04 |
| O | P8, P9 | 98.99 | 99.08 | −0.09 |
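The segment comparison above can be summarized with a few standard error statistics. The following is a minimal sketch rather than the authors' analysis: the function names `summarize` and `rounding_gaps` are hypothetical, and the choice of mean absolute error and root-mean-square error as summary measures is an assumption, since this section does not state which aggregate statistics the study reports. The values are transcribed directly from the table. Recomputing Orthophoto − RTK from the two rounded columns can differ from the listed error by up to 0.01 ft, presumably because the listed errors were computed before rounding (also an assumption).

```python
import math

# (segment, orthophoto_ft, rtk_ft, listed_error_ft), transcribed from the table above.
SEGMENTS = [
    ("A", 160.79, 160.75, 0.03),
    ("B", 82.30, 82.38, -0.08),
    ("C", 77.34, 77.47, -0.13),
    ("D", 73.17, 73.31, -0.14),
    ("E", 132.21, 132.38, -0.16),
    ("F", 94.05, 94.11, -0.06),
    ("G", 106.18, 106.14, 0.04),
    ("H", 124.99, 124.84, 0.15),
    ("I", 218.88, 218.80, 0.07),
    ("J", 12.45, 12.34, 0.11),
    ("K", 31.62, 31.38, 0.23),
    ("L", 129.85, 129.70, 0.16),
    ("M", 19.16, 19.04, 0.12),
    ("N", 117.64, 117.60, 0.04),
    ("O", 98.99, 99.08, -0.09),
]


def summarize(rows):
    """Aggregate the listed errors (hypothetical helper, not from the source)."""
    errors = [listed for _, _, _, listed in rows]
    n = len(errors)
    # Row with the largest absolute listed error.
    worst = max(rows, key=lambda row: abs(row[3]))
    return {
        "count": n,
        "mean_abs_error_ft": sum(abs(e) for e in errors) / n,
        "rmse_ft": math.sqrt(sum(e * e for e in errors) / n),
        "worst_segment": worst[0],
        "worst_error_ft": worst[3],
    }


def rounding_gaps(rows):
    """Gap between each listed error and (orthophoto - RTK) from the rounded columns."""
    return {seg: abs((ortho - rtk) - listed) for seg, ortho, rtk, listed in rows}


if __name__ == "__main__":
    for name, value in summarize(SEGMENTS).items():
        print(name, value)
    print("largest rounding gap (ft):", max(rounding_gaps(SEGMENTS).values()))
```

Working from the listed error column rather than the recomputed differences keeps the summary tied to the published values; `rounding_gaps` is only a consistency check on the transcription.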

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Desai, J.; Mathew, J.K.; Zhang, Y.; Hainje, R.; Horton, D.; Hasheminasab, S.M.; Habib, A.; Bullock, D.M. Assessment of Indiana Unmanned Aerial System Crash Scene Mapping Program. Drones 2022, 6, 259. https://doi.org/10.3390/drones6090259
