Quantifying Understory Vegetation Cover of Pinus massoniana Forest in Hilly Region of South China by Combined Near-Ground Active and Passive Remote Sensing

Abstract

1. Introduction

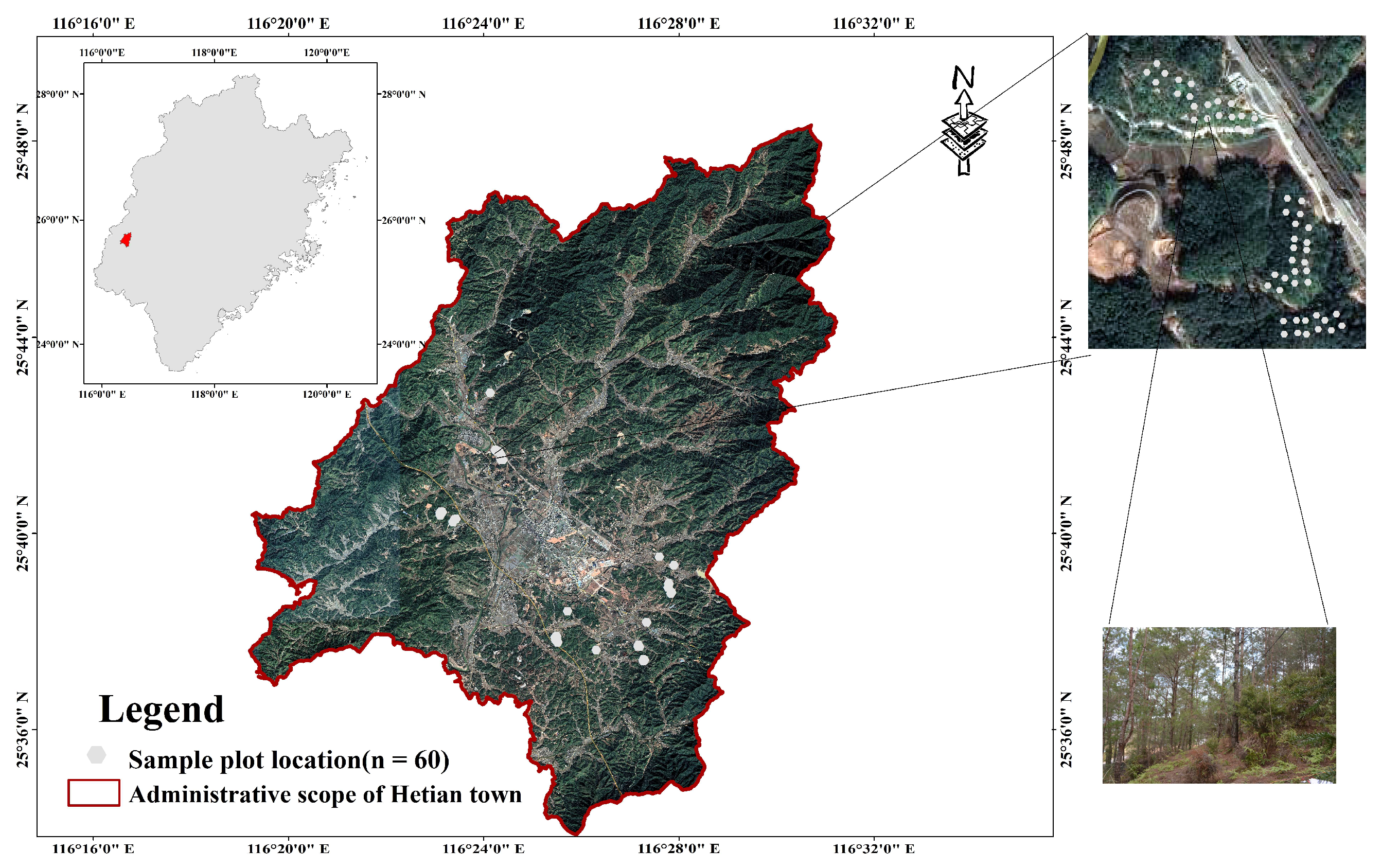

2. Study Area and Sample Site Overview

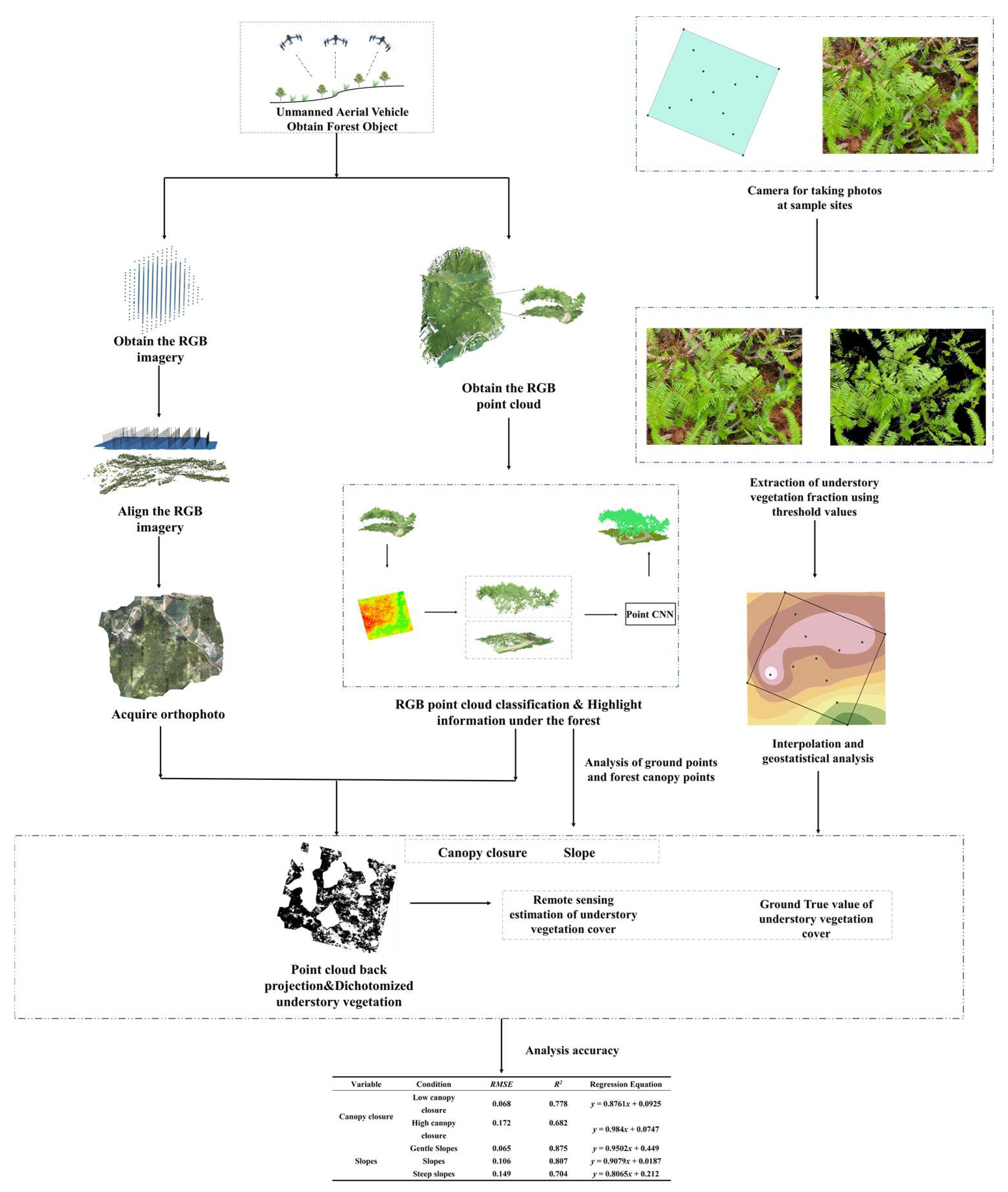

3. Data and Methodology

3.1. Data Acquisition

3.1.1. Field Measurements Acquisition

3.1.2. UAV Visible Light Remote Sensing Data Acquisition

3.1.3. UAV LiDAR Data Acquisition

3.2. Methodology

3.2.1. Data Pre-Processing

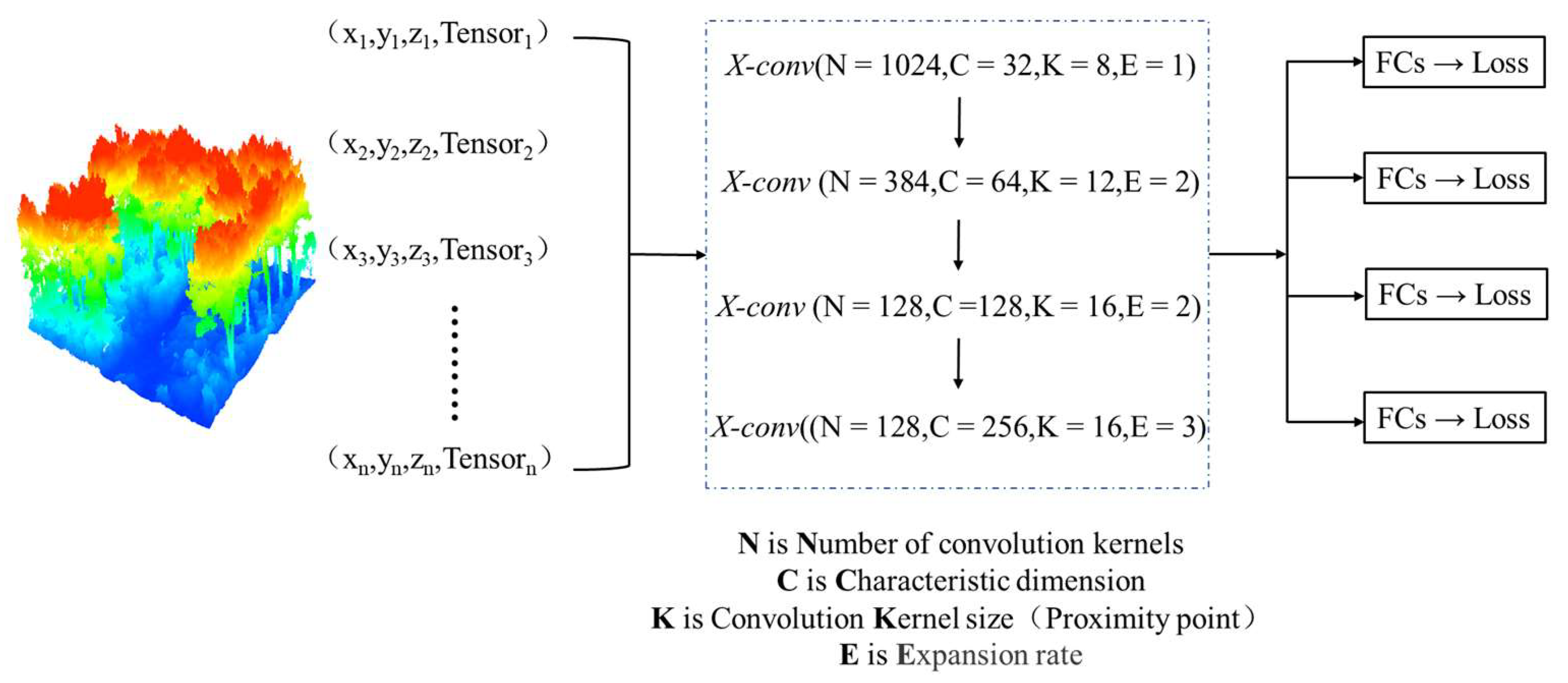

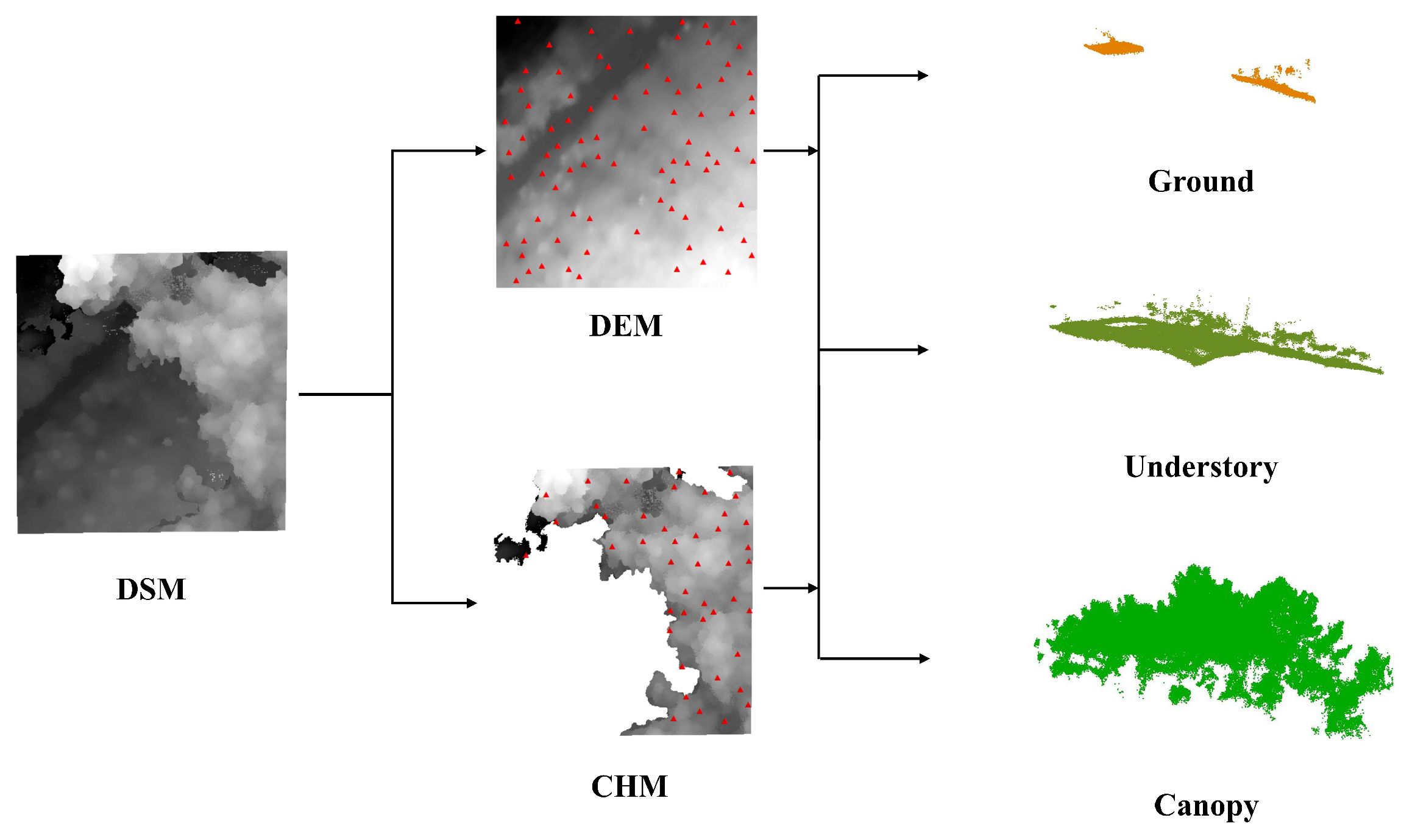

3.2.2. High-Precision Separation Method for Three-Dimensional Structure of Pinus massoniana Forest

3.2.3. Two-Dimensional Presentation and Quantification of Three-Dimensional Information of Forest Understory Vegetation
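This section title describes converting the three-dimensional understory point cloud into a two-dimensional representation from which cover is quantified. The algorithm itself is not reproduced here. The following is only a minimal sketch of one simple approach, assuming the understory points have already been separated from canopy and ground points. It drops the height coordinate, rasterizes the planimetric (x, y) positions onto a regular grid over the plot, and takes cover as the share of grid cells that contain at least one point. The function name `grid_cover_fraction`, the plot extent, and the cell size are hypothetical. This is not the authors' inverse projection algorithm.

```python
import numpy as np


def grid_cover_fraction(xy: np.ndarray, x_min: float, y_min: float,
                        width: float, height: float, cell: float) -> float:
    """Hypothetical helper: share of grid cells in a rectangular plot that
    contain at least one (already segmented) understory point.

    xy     -- (N, 2) array of planimetric coordinates; height (z) is ignored,
              i.e. points are projected vertically onto the horizontal plane
    x_min, y_min, width, height -- plot extent in the same units as xy
    cell   -- grid cell size (an assumed parameter, e.g. in metres)
    """
    nx = int(np.ceil(width / cell))
    ny = int(np.ceil(height / cell))
    # Column and row index of every point
    ix = np.floor((xy[:, 0] - x_min) / cell).astype(int)
    iy = np.floor((xy[:, 1] - y_min) / cell).astype(int)
    # Discard points that fall outside the plot extent
    inside = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    occupied = np.zeros((ny, nx), dtype=bool)
    occupied[iy[inside], ix[inside]] = True
    # Cover = occupied cells / all cells in the plot
    return float(occupied.mean())


if __name__ == "__main__":
    # Hypothetical understory points (x, y, z) in a 4 m x 4 m plot
    pts = np.array([[0.5, 0.5, 0.3], [1.5, 0.5, 0.5],
                    [0.5, 1.5, 0.2], [0.6, 0.4, 0.4]])
    print(grid_cover_fraction(pts[:, :2], 0.0, 0.0, 4.0, 4.0, 1.0))
```

Because cover is counted per occupied cell, the result depends strongly on the chosen cell size. Coarser cells tend to report higher cover from the same points, so the cell size would need to match the resolution of whatever reference cover it is compared against.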

3.2.4. Method of Calculating the Ground-Truthing Value of Understory Vegetation Cover

3.2.5. Method of Sample Site Information Statistics

4. Results

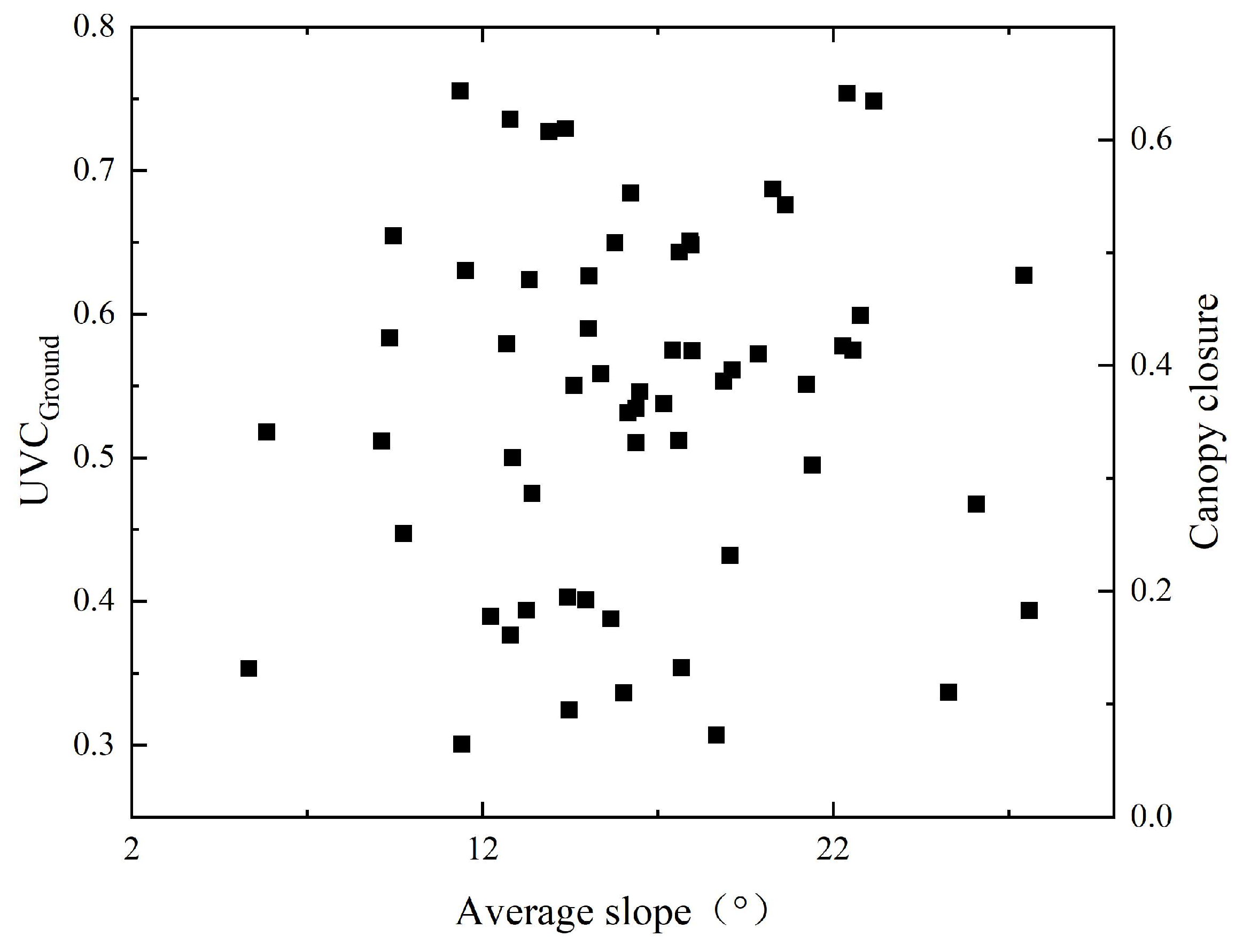

4.1. Field Measurements on Sample Site Topography, Canopy Closure, and Understory Vegetation Cover

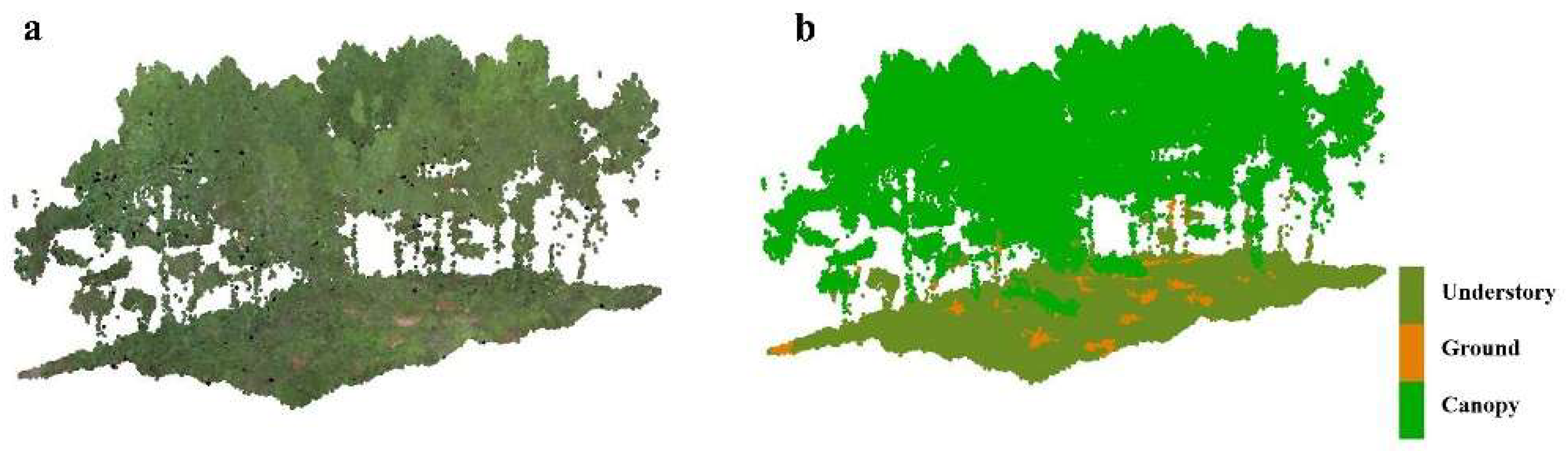

4.2. Three-Dimensional Structural Decomposition of Pinus massoniana Forest

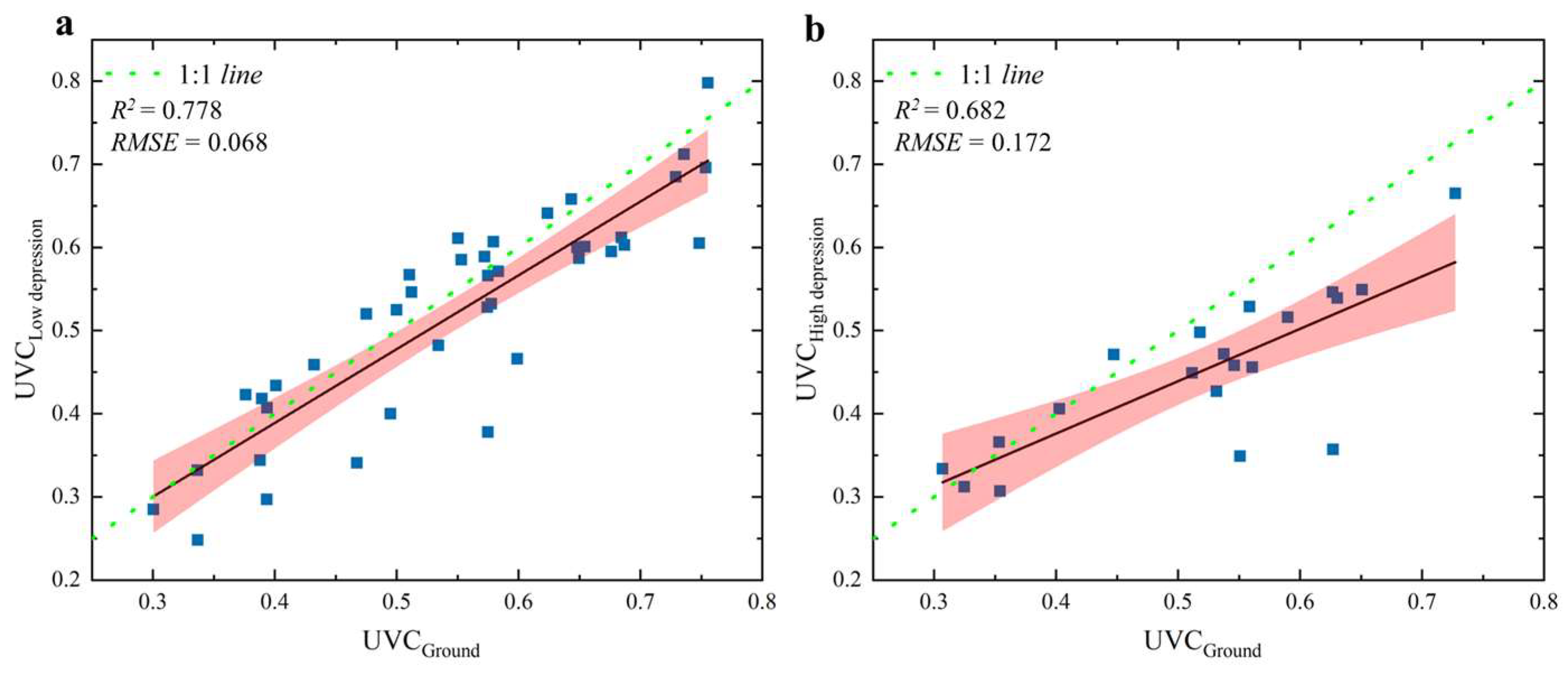

4.3. Combined Active and Passive Remote Sensing to Quantify Understory Vegetation Cover

5. Discussion

5.1. Effect of Point Cloud Segmentation Methods on Quantifying Understory Vegetation Cover

5.2. Influence of Point Cloud Inverse Projection Algorithm and Slope on Quantifying Understory Vegetation Cover

5.3. The Applicability of Quantitative Understory Vegetation Methods

5.4. Effect of Canopy Closure on Quantifying Understory Vegetation Cover

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Statistic | UVC_Ground | Average Slope (°) | Canopy Closure |
|---|---|---|---|
| mean | 0.539 | 16.466 | 0.36 |
| min | 0.301 | 5.341 | 0.1 |
| max | 0.755 | 27.596 | 0.6 |
| std | 0.124 | 4.791 | 0.119 |

| Canopy Closure Case (Digital Surface Model) | Legend |
|---|---|
| Low canopy closure (0.1–0.4) | |
| High canopy closure (0.4–0.7) | |

| Topographic Profile (Point Cloud) | Legend |
|---|---|
| Gentle slopes (6°–12°) | |
| Inclined slopes (13°–22°) | |
| Steep slopes (>22°) | |

| Method | Canopy Layer | Understory Vegetation | Ground | Overall Accuracy (%) |
|---|---|---|---|---|
| PointCNN | 80.5/86.9/82.2 | 72.1/77.8/75.0 | 69.4/73.3/71.9 | 76.2 |
| Cloth simulation filter (CSF) | 78.5/82.9/80.7 | 49.8/51.4/50.6 | 29.6/44.9/37.8 | 56.4 |
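The table reports an overall accuracy for each point cloud segmentation method. Overall accuracy is conventionally the share of points whose predicted class matches the reference class, that is, the trace of the confusion matrix divided by its total. The sketch below assumes a three-class confusion matrix (canopy layer, understory vegetation, ground) with reference classes in rows. It computes that figure together with standard per-class precision, recall, and F1. The source table does not label its three per-class numbers, so these metrics are not necessarily the ones reported there. The function name `segmentation_metrics` and the example counts are hypothetical.

```python
import numpy as np


def segmentation_metrics(cm) -> dict:
    """Hypothetical helper. cm[i][j] = number of points whose reference class
    is i and whose predicted class is j (e.g. canopy, understory, ground)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)                  # correctly classified points per class
    precision = tp / cm.sum(axis=0)   # column sums: all points predicted as the class
    recall = tp / cm.sum(axis=1)      # row sums: all reference points of the class
    f1 = 2 * precision * recall / (precision + recall)
    overall = tp.sum() / cm.sum()     # trace / total number of points
    return {"precision": precision, "recall": recall,
            "f1": f1, "overall_accuracy": float(overall)}


if __name__ == "__main__":
    # Hypothetical point counts, not taken from the study
    cm = [[80, 15, 5],
          [10, 70, 20],
          [0, 20, 80]]
    m = segmentation_metrics(cm)
    print(m["overall_accuracy"])
    print(m["precision"], m["recall"], m["f1"])
```

If a class is never predicted, its column sum is zero and its precision and F1 become NaN (NumPy emits a runtime warning rather than raising), so a real evaluation would need to decide how to report such classes.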

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, R.; Bao, T.; Tian, S.; Song, L.; Zhong, S.; Liu, J.; Yu, K.; Wang, F. Quantifying Understory Vegetation Cover of Pinus massoniana Forest in Hilly Region of South China by Combined Near-Ground Active and Passive Remote Sensing. Drones 2022, 6, 240. https://doi.org/10.3390/drones6090240
