Abstract

Mission planning for small uncrewed aerial systems (sUAS) as a platform for remote sensors goes beyond the traditional issues of selecting a sensor, flying altitude/speed, spatial resolution, and the date/time of operation. Unlike purchasing or contracting imagery collections from traditional satellite or manned airborne systems, the sUAS operator must carefully select launch and landing sites and flight paths that meet both the needs of the remote sensing collection and the requirements of federal, state, and local regulations. Mission planning for aerial drones must consider temporal and geographic changes in the environment, such as local weather conditions or changing tidal height. One key aspect of aerial drone missions is the visibility of the aircraft and communication with the aircraft. In this research, a visibility model for low-altitude aerial drone operations was designed using a GIS-based framework supported by high spatial resolution LiDAR data. In the example study, the locations at which an aerial drone used for water sampling at low altitudes (e.g., 2 m above ground level) would be visible to the operator were modeled at different levels of tidal height. Using geospatial data for a test-case environment at the Winyah Bay estuarine environment in South Carolina, we demonstrate the utility, challenges, and solutions for determining the visibility of a very low-altitude aerial drone used in water sampling.

1. Introduction

The opportunities for using aerial drones in research and management activities are continually expanding as the technical capabilities of aerial drones continue to evolve. The role of image or video collection by aerial drones is commonplace in a multitude of research applications. Moreover, the practical use of aerial drones with imaging cameras has been incorporated by numerous local, state, and federal agencies in their quick response or management activities [1,2]. The use of aerial drones for delivering products is commonly discussed and utilized in special circumstances [3,4]. Using an aerial approach for carrying non-imaging sensors, ranging from atmospheric/aquatic [5,6] and auditory sensors to fire suppression/ignition bomblets, is emerging as a practical management technique. The use of aerial drones in any of these applications requires careful planning of a mission to meet the regulatory, temporal, and geographic environmental conditions at the application site at the time of a mission. The mission operator must anticipate weather conditions (e.g., wind, precipitation), GNSS signal reception, drone-to-operator control transmission, and the visibility of the drone by the operator during the mission. To meet federal and most state regulations, the operator must consider launch/landing access, flight path in 3-D space, image/data privacy permissions, and the visibility of the aerial drone [7]. Moreover, the use of aerial drones can negatively impact wildlife in a study environment [8]. There are no commercial or research products available for aerial drone operations capable of meeting this multitude of criteria. This paucity of planning applications has led to our current research effort.

1.1. Flight Planning Applications/Research

Most aerial drone research has focused on the imagery or other data collected and processed for a product. Little research has focused on the critical first step—mission planning. Some focused research on optimizing flight paths for delivery [9], operation in an urban environment [10], an indoor environment [11], in mountainous terrain [12], for solar-powered flight constraints [13], and under windy conditions [14] has been conducted. Solutions for optimizing areal coverage using multiple aerial drones have also been provided [15,16]. Others have developed models for risk assessments in the use of aerial drones on construction sites [17]. While there are many online websites (e.g., B4UFLY in the USA, D-Link in the EU) to guide the user in the Federal restrictions for the aerial drone in the airspace, there are few sites/apps that will provide local restriction information (e.g., at the state, county, municipality levels). There are apps/websites to assist in weather prediction for the expected flight location and date/time (e.g., UAV Forecast). There are no known apps/models to guide the operator in planning a low-altitude mission in complex terrain, although limited research on low-altitude flights is progressing [18]. There are no known apps, models, or online resources to guide the aerial drone operator in the visibility of the aerial drone during an intended flight path.

The limited body of literature on sUAS water sampling approaches illuminates the need for planning fieldwork well in advance of sampling. Commercial sUAS mission planning applications are designed to lay out flight lines, photo centers, altitude, and look-angles for orthomosaics and 3-D surface models. Fundamentally, these applications assume the landscape is flat or low relief without surface cover that would impact flight paths or communication between the operator and aircraft. The input data include neither the terrain and surface cover that may impede or influence flight paths or imagery nor the dynamic nature of a water body. Planning for a launch/landing site to conduct water sampling is not a trivial problem, and almost no research has been conducted to date on modeling sUAS image/data collection scenarios in the multi-faceted geographic context. We are unaware of previous research related to the geographic context problem with sUAS [19] in a water sampling application. Previous research on optimizing sUAS aircraft trajectories is in the classical path planning research problem domain. Such work focuses on minimizing travel time and energy requirements, such as battery life for sUAS vehicles, caloric requirements for humans [20], coordinating multiple aerial assets [21], meteorological [22], or terrain characteristics [23] that would impact such operations.

1.2. Water Sampling from an Aerial Drone

In situ sampling from a small boat (e.g., skiff) involves a boat/motor, licensing, training (for the uninitiated), insurance, and either mooring or transporting/launching. The actual water sample collection with a small boat may introduce contamination to the sample by sediment or trace metals. At best, a skiff-based sampling approach over broad areas is relatively slow, resulting in samples collected at different moments in a tidal cycle, if not on different days. The placement of in situ sensors at fixed sites obviates the frequent need for a boat or skiff to visit locations. However, careful planning in site selection for permanent/semi-permanent sensors is required in the research design prior to positioning fixed sensors, effectively negating the possibility of situation-driven sampling. In addition, fixed sensors are subject to biofouling [24,25] and possible damage from anthropogenic and/or environmental causes. Both boat-based sampling and semi-permanent water sampling sensors may not detect transient changes (e.g., wrack, decomposing carcasses, anthropogenically deposited trash) in the surrounding environment as such causes are often not visible to the boat occupants, for example, even a few meters inland from a marsh bank.

The traditional approach to water sampling in large water bodies or estuarine environments is either with the use of fixed water sampling sensors or human travel to sites in a skiff. There are numerous advantages to the use of an sUAS-based approach for remote water sampling [26,27]; foremost are accessibility, synoptic view, safety, avoiding water contamination, responsiveness, and autonomous operation. Access to sampling sites is unimpeded by shallow water or convoluted water courses. The sUAS with a camera can provide a synoptic view of the sample site to document the context and other potential water quality contributing factors. Both the use of a human-operated skiff or aerial drone can be impacted by weather; however, skiff operations may pose additional safety hazards in the form of drowning or exposure to environmental contaminants. The sUAS approach virtually eliminates non-natural turbidity with only a small propeller-wind-driven wake on the surface. The sUAS and sensor can be moved rapidly for quick-response needs. Fixed site sensors need maintenance and are subject to biofouling. The sUAS-based sensor can be maintained easily, calibrated for peak performance, and modified/exchanged as needed. Finally, the sUAS can be operated in an autonomous mode for rapid and consistent sampling. An aerial drone approach would also provide in situ data for calibration and validation of cyanobacterial algal blooms in both inland and coastal water studies based on satellite remote sensing methods [28].

In coastal salt marshes, samples could be collected easily from shallow waters at tidal stages that prevent access by boat and over broader spatial scales at a single point in the tidal cycle (faster sampling than visiting each station by boat, even with access). Moreover, remote sampling with real-time data transmission permits testing potential sample sites for locations that may contain our analyte of interest.

Some good research efforts on the design and use of a sUAS for water sampling have been conducted [29,30,31,32]. Others have adopted the use of an aerial drone for measuring water temperature with a thermal camera and lowered temperature probe [33,34]. The use of laser-induced fluorescence from a manned aircraft [35] or sUAS [36,37,38] for water sampling has also been conducted.

In this research, we focus on the problem of the visibility of an aerial drone at very low altitudes as it conducts water sampling. The implementation approach uses a modified viewshed analysis approach with high spatial resolution data (in X-Y-Z), meeting the precise requirements of the aerial drone over the water surface. While viewshed analysis has a long history in the GIScience community, little research has focused on data precision impacts on the modeled viewshed results [39,40].

Visibility is very important for two primary reasons: (1) most countries/states require the operator to keep the drone in unaided visual sight (i.e., without aids such as binoculars) at all times during operation, and (2) it supports the electronic two-way transmission of drone control data and drone status/imagery. In this research, we develop and test a GIS-based visibility model for mapping locations where the aerial drone will be visible/hidden to the remote pilot. Our need in this research was to monitor the location of the aerial drone at very low altitudes (e.g., 2 m above water level) in a coastal wetland environment. The uniqueness of the sUAS planning model for water sampling is the highly dynamic nature of the governing factors and the fine-grain geospatial specificity required.

2. Methodology

2.1. Study Site

Prior to field testing of the sUAS, a visibility model was used to determine areas of visibility/invisibility for different tidal heights and for different altitudes above water level at the Winyah Bay National Estuarine Research Reserve. North Inlet is located at the Baruch Marine Field Laboratory (BMFL) and is part of the North Inlet—Winyah Bay National Estuarine Research Reserve (NERR) on the coast of South Carolina. For the modeling example, we assumed that the sUAS remote pilot would be standing on the raised platform (2.4 m elevation NAVD88) at Oyster Landing adjacent to BMFL in the North Inlet estuary (Figure 1). Airspace over this estuarine environment is class G (no flight restrictions below 122 m above ground level in the USA).

Figure 1.

Study environment at the Winyah Bay National Estuarine Research Reserve for collecting water samples in the estuarine environment. Oyster Landing is marked by the aircraft symbol.

2.2. Aerial Drone and Sensors

The problem of modeling aerial drone visibility at low altitudes is independent of the characteristics of a motor-powered aerial drone (fixed wing, quadcopter, helicopter) and, in general, the intended application for the drone. Our developed visibility model is generic and aerial drone agnostic. The unique problem of low-altitude operations and operator-to-drone visibility requirement for collecting water samples was our problem context. In this research, we customized an Aurelia X6 Standard hexacopter (six motors) to carry all sensors and related equipment (Figure 2). The Aurelia X6 Standard has a payload of 5.0 kg (excluding batteries), a flight time of about 20 min (when fully loaded), and an operational range of 2.4 km (1.5 mi). Fully loaded with sensors and water sample collections, the operational drone would weigh about 11.75 kg. The drone was customized to carry a laser fluorometer, conductivity and depth sensors, three 250 mL water sampling containers, and up to four micro pumps, an Arduino Nano 33 BLE Sense microcontroller to control the fluorescence sensor, and a Raspberry Pi 4B for controlling all flight equipment, pumps, and storing data. The fluorescence sensor operates at the 450 nm wavelength to excite chlorophyll in phytoplankton with return emittance in the 680 nm wavelength range. Water is drawn up through a 2 m tube into an aluminum housing containing the chlorophyll fluorescence sensor. The same water is then either stored in one of the three sampling containers or exhausted through a flushing line. The decision to store the water sample can be determined remotely from the real-time data stream. The stored water is returned to the laboratory for further analysis. The precise (i.e., a few cm) vertical height of the drone above water level can be determined when the drone is below 1.5 m height above the water by using an acoustic sensor. 
A more detailed presentation of the aerial drone design, modification, and integration of all sensors is found in [41]. Specifics on the laser fluorescence sensor and calibration/sampling are in [27].

Figure 2.

Water-sampling drone with laser fluorometer and water storage bottles hovering just over 2 m above water level. The siphon tube is 2 m in length and is discussed in [42].

2.3. Geospatial Data and Pre-Processing

To model the visibility of an aerial drone, geospatial data that are commensurate with the spatial resolution requirements (X-Y-Z) are required. For example, in our application operating at ~2 m above the water level, vertical elevation data with vertical accuracies better than 1 m (95% confidence level) were required. Moreover, the vertical elevation data must reflect the surface cover that would modify the visibility of the aerial drone. In many if not most cases, the elevation data would be analogous to a fine resolution digital surface model (DSM) of the vegetative and anthropogenic features rather than a digital elevation model (DEM) of the terrain. For our wetland environment at the North Inlet Estuary, there were no anthropogenic features, and the vegetative cover over the potential water sample sites in the estuary was dominated by smooth cordgrass. In lesser abundance are giant cordgrass, black needlerush, salt marsh bulrush, American three-square, soft-stem bulrush, cattails, and pickerel-weed. This vegetative cover typically grows in two varieties referred to as low and high marsh cover, with the high cover reaching about 1.5 to 1.8 m in height above the terrain. While modeling a viewshed is problematic in tree-covered vegetation [42], our targeted estuarine areas were covered almost exclusively by marsh vegetation rather than by tree foliage of variable height and density. Thus, the visibility modeling problem could be more easily solved with a simpler DSM of the marsh canopy.

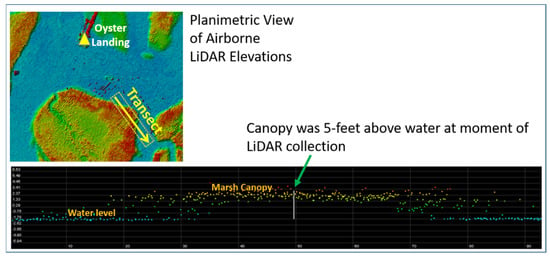

For the North Inlet Estuary study area, both fine resolution (~0.5 m) aerial imagery and airborne LiDAR were available. The vegetative surface cover would be modeled from the full point cloud of the LiDAR data (Figure 3). In general, the use of airborne LiDAR for mapping the terrain beneath wetland marsh grasses results in poor terrain representation as the number of ground returns is extremely low. However, for our use, we only needed to model the DSM represented by the tops of the marsh grasses. The problem of modeling any heterogeneous canopy height (e.g., forests, scrub/shrub, marsh) requires the definition of what is the canopy ‘top’. The traditional approach is to use the maximum LiDAR point elevation in a spatial neighborhood whose size reflects the spatial resolution requirements of the study. Using the maximum elevation in a small neighborhood (i.e., 2 m × 2 m) of first return LiDAR points, we generated a DSM of the entire study environment.
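The neighborhood-maximum rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' GIS workflow: the function name `max_height_dsm`, the use of NumPy, and the assumption that point coordinates are already in meters and already filtered to first returns are all hypothetical choices made for the example.

```python
import numpy as np

def max_height_dsm(x, y, z, cell=2.0):
    """Grid LiDAR points into square cells and keep the highest elevation per cell.

    x, y, z : 1-D sequences of point coordinates (assumed here to be in meters
              and already limited to first returns).
    cell    : cell size in the same units (2 m matches the neighborhood in the text).
    Returns a 2-D array in which row 0 is the southernmost row; cells that
    contain no points stay NaN.
    """
    x, y, z = (np.asarray(a, dtype=float) for a in (x, y, z))
    cols = np.floor((x - x.min()) / cell).astype(int)
    rows = np.floor((y - y.min()) / cell).astype(int)
    dsm = np.full((rows.max() + 1, cols.max() + 1), np.nan)
    # np.fmax ignores the NaN placeholder, and ufunc.at applies the update
    # once per point, so repeated cell indices accumulate a running maximum.
    np.fmax.at(dsm, (rows, cols), z)
    return dsm
```

Empty cells are left as NaN rather than interpolated, so any gap filling (for example over open water, where returns are sparse) remains a separate, explicit step.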

Figure 3.

Planimetric view of airborne LiDAR data collected over the Oyster Landing site at Winyah Bay National Estuarine Research Reserve. Points are colored for elevation. Point cloud demonstrates why aerial LiDAR is less useful for mapping terrain under marsh grass but can be used for grass height over a spatial neighborhood (e.g., a few meters).

A major challenge in our study was the varying height of the non-vegetative surface—the tidally-influenced water level. The tidal height at the time of airborne LiDAR collection dictates the lowest representative height of the DEM as no elevations lower than the water level at the collection time can be shown. Secondly, we needed to synthetically raise the water level to different scenario heights to model the visibility of the sUAS above the tidal scenario water level.

The airborne LiDAR data were collected in the late winter in early 2017 at expected low tidal levels. The coordinate system was the South Carolina State Plane International feet in the NAD83 (2011) horizontal datum and NAVD88 vertical datum. The ellipsoidal heights were transformed to NAVD88 heights with the GEOID12B model. The accuracy assessment conducted by the aeroservice contractor for the non-vegetated areas reported a 0.061 m root mean squared error (RMSE). The aggregated nominal point density (ANPD) was 5.26 points per m². To resolve local and NAVD88 tidal height, we used the same airborne LiDAR returns over the water surface to estimate the tidal height at LiDAR collection. It is well known that LiDAR pulses operating in the short infrared wavelengths are effectively absorbed by water, which makes mapping water bodies or surfaces covered by water problematic. Nevertheless, water surfaces with wave activity, however small, typically result in some low-intensity LiDAR returns. Some returns were detected by the airborne LiDAR sensor (e.g., Figure 3) and were used to estimate local tidal height in the NAVD88 datum. The tidal elevation at Oyster Landing during LiDAR collection was determined to be −0.55 m NAVD88. Mean low water (MLW) and mean high water (MHW) at Oyster Landing are −0.680 m and +0.625 m, respectively. Thus, the LiDAR collection was very near the mean low water (actually 13 cm higher than MLW). For our analysis, we assume the water elevation surface was MLW.

All of the returns over the water were used to build a DEM representing the water surface. The DSM for the non-water areas was constructed from the vegetative height surface described earlier. Two raster model strata were thus created: (1) the water surface elevations at near mean low water and (2) vegetative canopy elevations (i.e., the DSM). For scenarios of tidal heights (e.g., MLW, MHW, and those in-between), we simply added the prescribed tidal height to the baseline MLW DEM. Wherever the tidal height in a scenario rose above the mudflat or vegetated areas, the location was recorded as a water location. The apparent (but not actual) drop in vertical height of the aerial drone caused by earth curvature with increasing distance from the remote pilot was modeled with a latitude-dependent Earth curvature model.
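A minimal sketch of how such tidal scenarios might be generated from the two strata is given below. It assumes the strata are already merged into one elevation raster with a companion water mask, that the scenario water surface is flat, and that any cell lower than the scenario water level floods regardless of its connection to a channel. The function name `tidal_scenario` and these simplifications are hypothetical and are not taken from the study's implementation; the MLW and MHW values are those reported above.

```python
import numpy as np

MLW = -0.680  # mean low water at Oyster Landing, m NAVD88 (from the text)
MHW = 0.625   # mean high water at Oyster Landing, m NAVD88 (from the text)

def tidal_scenario(surface, is_water, rise):
    """Build one tidal scenario from the baseline MLW surface.

    surface  : 2-D array of elevations (water cells at MLW, other cells from the DSM).
    is_water : boolean array marking the baseline water cells.
    rise     : height of the scenario water level above MLW, in meters.
    Returns (scenario_surface, scenario_water_mask).
    """
    level = MLW + rise
    # Assumption: a flat water surface and no connectivity test, so every
    # cell below the scenario level is reclassified as water.
    flooded = is_water | (surface < level)
    scenario = np.where(flooded, level, surface)
    return scenario, flooded

baseline = np.array([[-0.68, -0.20],
                     [0.40, 1.50]])
water = np.array([[True, False],
                  [False, False]])
mhw_surface, mhw_water = tidal_scenario(baseline, water, MHW - MLW)
```

In this toy grid the mudflat cell at −0.20 m and the low marsh cell at 0.40 m become water at MHW, while the 1.50 m canopy cell stays dry and continues to act as a potential obstruction.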

2.4. Visibility of a Distant Object

Modeling the visibility of an aerial drone at distance from the operator is dependent on several parameters such as the size of the drone, illumination included (e.g., strobe), distance and curvature of the earth, atmospheric conditions (e.g., haze, low-lying clouds), and the operator’s eye condition. The condition of the operator’s eye is highly variable. To simplify the problem in actual operation, we assumed that the drone would be carrying a strobe illumination source that would also satisfy FAA legal requirements for visibility and obviate the size of the drone. The use of a strobe would also obviate the issues with varying operator’s eye condition and largely obviate the issues with a hazy atmosphere at an operational distance out to 2.0 km.

The consideration of the curvature of the Earth is, in general, not important for most drone visibility modeling applications at higher altitudes with less stringent precision requirements. However, for our operational conditions near 2 m above water level, the curvature of the earth should be considered. The general equation for the apparent drop in vertical height (vd) with distance (d) from the viewer and radius (r) of the earth at a specified latitude is:

vd = −1 × r × (1 − cos (0.009° × d))

where vd = apparent vertical drop of distant object in meters,

r = radius of earth at study area latitude in meters,

d = distance between observer and aerial drone in kilometers.

Our study area is at approximately 34 degrees north latitude, and so the earth radius is 6,378,489 m. At a distance of 2000 m from the viewer, the aerial drone would appear to be 0.31 m lower than its actual height.
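As a quick check of the curvature equation, the short Python sketch below evaluates it at several distances using the radius quoted in the text. The function and constant names are illustrative only. At 2 km the result rounds to the 0.31 m drop reported above, and at the 1.6 km range of the example mission it is roughly 0.20 m.

```python
import math

EARTH_RADIUS_M = 6_378_489.0  # radius quoted in the text for the study latitude
DEG_PER_KM = 0.009            # approximate arc angle per km, as in the equation

def apparent_drop_m(distance_km, radius_m=EARTH_RADIUS_M):
    """Apparent vertical drop (negative, in meters) of an object distance_km away."""
    angle = math.radians(DEG_PER_KM * distance_km)
    return -radius_m * (1.0 - math.cos(angle))

for d_km in (0.5, 1.0, 1.6, 2.0):
    print(f"{d_km:.1f} km: {apparent_drop_m(d_km):+.2f} m")
```

Because the drop grows roughly with the square of the distance, it is only a few centimeters within 500 m of the pilot but becomes comparable to the height margin of a drone flying 1 m above the water near the far end of the mission.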

The concept of refraction was not included in this initial model design as the effects over the operational distance of about 2000 m are relatively negligible, and incorporating the minimal refraction impacts would also require data on the atmospheric conditions at flight time.

3. Example Mission

In the example aerial mission, the sUAS would fly out to about 1600 m distance from the remote pilot standing on the boardwalk at Oyster Landing. The boardwalk is 2.4 m above mean sea level, and the pilot’s eye height above the boardwalk is assumed to be 1.6 m. We assume the aerial drone would carry an artificial strobe light enabling the remote pilot to see the strobe, even in daylight, up to the FAA required distance of 4800 m (3 miles), farther than our maximum distance of 1600 m. We include the effects of the Earth’s curvature.
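To make the geometry concrete, the following Python sketch tests a single sight line from the pilot's eye (2.4 m boardwalk + 1.6 m eye height = 4.0 m) to the drone, lowering every elevation by the curvature drop at its distance. It is a simplified, one-ray illustration and not the GIS viewshed tool used in the study. The function names, the profile format, and the sample elevations (a 1.2 m marsh canopy at 1500 m) are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_378_489.0  # radius quoted in the text for the study latitude

def drop_m(dist_m):
    """Apparent curvature drop (negative, in meters) at dist_m meters from the observer."""
    angle = math.radians(0.009 * dist_m / 1000.0)
    return -EARTH_RADIUS_M * (1.0 - math.cos(angle))

def drone_visible(profile, eye_elev, drone_elev):
    """Return True if no surface sample rises above the eye-to-drone sight line.

    profile    : list of (distance_m, surface_elev_m) pairs ordered by distance
                 from the observer; the last pair is the drone's location.
    eye_elev   : elevation of the observer's eye (same datum as the surface).
    drone_elev : elevation of the drone (water level plus height above water).
    """
    target_dist = profile[-1][0]
    target_slope = (drone_elev + drop_m(target_dist) - eye_elev) / target_dist
    for dist, surf in profile[:-1]:
        if dist <= 0:
            continue  # skip the observer's own cell
        slope = (surf + drop_m(dist) - eye_elev) / dist
        if slope >= target_slope:
            return False  # this sample blocks the line of sight
    return True

EYE = 2.4 + 1.6   # boardwalk elevation plus eye height, from the text
MLW = -0.680      # scenario water level, m NAVD88
open_water = [(500.0, MLW), (1000.0, MLW), (1500.0, MLW), (1600.0, MLW)]
marsh_edge = [(500.0, MLW), (1000.0, MLW), (1500.0, 1.2), (1600.0, MLW)]

print(drone_visible(open_water, EYE, MLW + 1.0))  # clear path over water
print(drone_visible(marsh_edge, EYE, MLW + 1.0))  # canopy near the drone blocks the view
print(drone_visible(marsh_edge, EYE, MLW + 2.0))  # a higher drone clears the canopy
```

A full viewshed repeats this test for every candidate drone cell, which is why canopy close to the target, rather than close to the pilot, tends to control visibility at such low flying heights.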

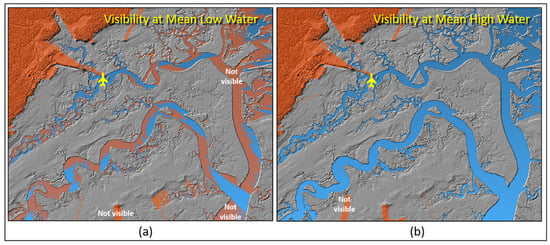

We first tested the deployment using a 2 m siphon tube lowered 1 m into the water. The result would be that the sUAS is 1 m above the water surface. The extreme water sample cases would be at MLW and MHW. The modeled visible/invisible portions of the wetland environment at 1 m above water level at MLW are shown in Figure 4a. In this scenario, the sUAS would be hidden in large portions of the estuarine creeks, almost exclusively because of the roughly 1 to 2 m height of the marsh grasses. Tree cover in the northwestern part of the environment would completely exclude visibility, although this portion of the environment does not contain estuarine waters and thus would not be sampled.

Figure 4.

(a) Modeled visible/invisible areas of the Winyah Bay wetland environment for an aerial drone at 1 m above water level at mean low water (MLW). (b) Modeled visible/invisible areas for an aerial drone at 1 m above water level at mean high water (MHW). The sUAS in any of the orange-colored areas would be invisible to the operator at the Oyster Landing location.

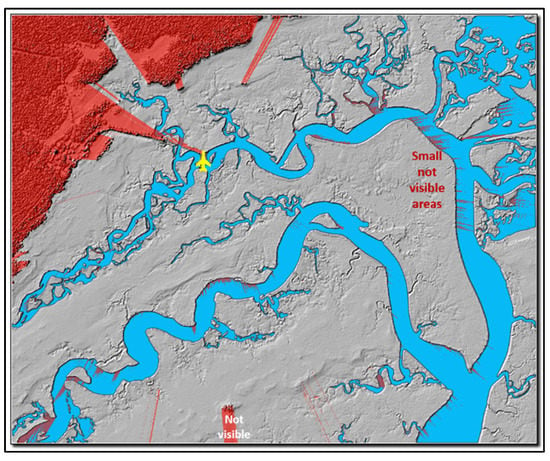

A second model tested the visibility during MHW (Figure 4b). The results of this modeling effort indicated that the sUAS at 1 m above the water would be visible in all of the water courses in the study environment. A final model examined the sUAS visibility at an altitude of 1.5 m above MLW, assuming the siphon tube would be lowered to only 0.5 m depth (or a longer siphon tube would be used). The results for the 1.5 m sUAS height indicated favorable visibility in virtually all of the environment except for a few locations along the main channel (Figure 5). Thus, the “invisible” locations would be avoided as water sample locations, and interim flight path segments would be elevated above 2 m.

Figure 5.

Modeled visible/invisible areas of the Winyah Bay wetland environment for an aerial drone at 1.5 m above water level at mean low water (MLW). The sUAS in any of the orange-colored areas would be invisible to the operator at the Oyster Landing location.

4. Discussion

A novel GIS-based sUAS planning model was designed and implemented to assist in planning the launch, flight, and collection of water observations/samples for an estuarine environment under varying tidal height scenarios. This visibility model permits modeling various scenarios under different sUAS heights and remote pilot locations to ensure both a safe and legal (flying within line-of-sight) operation while fulfilling the research objectives of water sample collections.

Unfortunately, the sUAS community and supporting companies have not yet designed and offered flight planning applications that treat features of the physical environment as impediments rather than as objects of investigation, particularly where the physical environment is highly temporal, such as with tidal or riverine variations. Moreover, the level of precision/accuracy for safe and successful flight operations requires geospatial data that are commensurate with the application requirements. In this study, the requirements were an approximately decimeter-level precision/accuracy in the vertical domain with a few meters level of precision/accuracy in the horizontal domain. The careful consideration of horizontal and vertical datums, waypoint coordinate sources, and precision geospatial data is essential for low-altitude autonomous operations over water surfaces. The required geospatial data for decimeter level (vertical domain) operations include not only the position of the drone in geographic space (e.g., using real-time kinematic GNSS or relative positioning with laser/sonic sensors) but also the environmental features that are impediments to the operation. Hardened features such as terrain surfaces and building faces may be collected with such precision/accuracy and may be available. However, other features, such as trees, light poles, and signage, are typically not recorded with such high accuracy, if they are recorded at all. Moreover, these features may be highly temporal or semi-transparent. For example, the aerial drone might be visible through openings between tree branches and leaves, particularly for trees whose lower branches are lost as the tree matures.

In our study in the estuarine environment, the significant challenge was building a digital surface model of the vegetation canopy. Since the cordgrass vegetation largely creates a visibly opaque surface (to the drone operator), the surface model was relatively simple in concept. Determining the tidal water levels for the DEM and subsequent scenarios is more challenging. A limitation of our approach for other environments would be in a vegetative environment with a broken canopy or leaf-off conditions where the drone may be visible through the foliage [40].

While the Federal legal constraints on aerial drone operation are well known, the local regulations are less known and often difficult to determine (e.g., county, park). Launch/landing and operational sites for the remote pilot are also regulated in most states [7]. Fine-grained geospatial data to support a GIS-based planning model solution are almost non-existent. Some of the geospatial data, such as the location of critical infrastructure with overflight restrictions, are not published for security/safety reasons. While current and near-term weather conditions are available at moderately coarse resolutions (e.g., nearest weather station), fine-scale data, such as the wind turbulence near vertical features (buildings, mountains, etc.), must be temporally modeled. Fine-scale data to support transient features, such as forest management, vehicles, or even new residential/commercial developments, can be problematic to acquire. Fluvial data to support tidal or riverine conditions can also be problematic to model between known monitoring stations and at fine resolution scales. For example, the water surface of riverine environments may be rough (e.g., rocks, debris) inclined surfaces whose height is dynamic with the upstream conditions. The multitude of physical processes that impact the operation of an aerial drone at very low altitudes is challenging, yet it is the domain of, and a challenge for, the aerial drone research community.

We are continuing both the visibility modeling research and the use of an aerial drone with exotic sensors (e.g., lasers) in other water and non-water applications. For example, a modified visibility modeling approach is being used to plan for repeat photography with aerial drones, such as repeating early 1900s ground and aerial photographic positions. After several operational tests with the fully outfitted drone, we are also modifying the system to carry other sensors.

Author Contributions

Conceptualization, M.E.H., M.L.M., N.I.V. and T.L.R.; modeling methodology, M.E.H. and M.D.; viewshed modeling, M.E.H.; laser fluorescence design/integration, M.L.M., C.E. and Z.K.; drone modification/control/integration, N.I.V., K.R.I.S., M.K. and B.K.; writing (review and editing), M.E.H., M.L.M., N.I.V., T.L.R., Z.K., C.E. and K.R.I.S.; project administration, M.L.M.; funding acquisition, M.L.M., M.E.H., N.I.V. and T.L.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded internally by the University of South Carolina ASPIRE II program, grant #130200-21-57352.

Data Availability Statement

LiDAR data for the study site can be obtained through the USGS LiDAR Explorer application or through the NOAA Digital Coast.

Acknowledgments

Design and implementation of the laser fluorometer were led by Michael L. Myrick and his research team of Caitlyn English and Zechariah Kitzhaber. The modification of the aerial drone and hardware/software integration with all sensors and command/control of the drone was led by Nikolaos I. Vitzilaios and his research team of Kazi Ragib I. Sanim, Michail Kalaitzakis, and Bhanuprakash Kosaraju. James L. Pinckney provided essential guidance on the study site and environmental issues with data collection. Silvia Piovan provided a careful review of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Quirk, B.; Haack, B. Federal government applications of UAS technology. In Applications of Small Unmanned Aircraft Systems; Sharma, J.B., Ed.; CRC Press: New York, NY, USA, 2019; pp. 81–113.

2. Walter, M. Drones in Urban Stormwater Management: A Review and Future Perspectives. Urban Water J. 2019, 16, 505–518.

3. Niglio, F.; Comite, P.; Cannas, A.; Pirri, A.; Tortora, G. Preliminary Clinical Validation of a Drone-Based Delivery System in Urban Scenarios Using a Smart Capsule for Blood. Drones 2022, 6, 195.

4. Kim, J.J.; Kim, I.; Hwang, J. A change of perceived innovativeness for contactless food delivery services using drones after the outbreak of COVID-19. Int. J. Hosp. Manag. 2020, 93, 102758.

5. Barbieri, L.; Kral, S.T.; Bailey, S.C.C.; Frazier, A.E.; Jacob, J.D.; Reuder, J.; Brus, D.; Chilson, P.B.; Crick, C.; Detweiler, C.; et al. Intercomparison of Small Unmanned Aircraft System (sUAS) Measurements for Atmospheric Science during the LAPSE-RATE Campaign. Sensors 2019, 19, 2179.

6. Schuyler, T.J.; Bailey, S.C.C.; Guzman, M.I. Monitoring Tropospheric Gases with Small Unmanned Aerial Systems (sUAS) during the Second CLOUDMAP Flight Campaign. Atmosphere 2019, 10, 434.

7. Hodgson, M.E.; Sella-Villa, D. State-level statutes governing unmanned aerial vehicle use in academic research in the United States. Int. J. Remote Sens. 2021, 42, 5366–5395.

8. Mulero-Pázmány, M.; Jenni-Eiermann, S.; Strebel, N.; Sattler, T.; Negro, J.J.; Tablado, Z. Unmanned aircraft systems as a new source of disturbance for wildlife: A systematic review. PLoS ONE 2017, 12, e0178448.

9. Liu, M.; Liu, X.; Zhu, M.; Zheng, F. Stochastic Drone Fleet Deployment and Planning Problem Considering Multiple-Type Delivery Service. Sustainability 2019, 11, 3871.

10. Chen, Y.; She, J.; Li, X.; Zhang, S.; Tan, J. Accurate and Efficient Calculation of Three-Dimensional Cost Distance. ISPRS Int. J. Geo-Inf. 2020, 9, 353.

11. Nägeli, T.; Meier, L.; Domahidi, A.; Alonso-Mora, J.; Hilliges, O. Real-time planning for automated multi-view drone cinematography. ACM Trans. Graph. 2017, 36, 1–10.

12. Kurdel, P.; Češkovič, M.; Gecejová, N.; Adamčík, F.; Gamcová, M. Local Control of Unmanned Air Vehicles in the Mountain Area. Drones 2022, 6, 54.

13. Le, W.; Xue, Z.; Chen, J.; Zhang, Z. Coverage Path Planning Based on the Optimization Strategy of Multiple Solar Powered Unmanned Aerial Vehicles. Drones 2022, 6, 203.

14. Jayaweera, H.M.P.C.; Hanoun, S. Path Planning of Unmanned Aerial Vehicles (UAVs) in Windy Environments. Drones 2022, 6, 101.

15. Nedjati, A.; Izbirak, G.; Vizvari, B.; Arkat, J. Complete Coverage Path Planning for a Multi-UAV Response System in Post-Earthquake Assessment. Robotics 2016, 5, 26.

16. Cho, S.-W.; Park, J.-H.; Park, H.-J.; Kim, S. Multi-UAV Coverage Path Planning Based on Hexagonal Grid Decomposition in Maritime Search and Rescue. Mathematics 2021, 10, 83.

17. Moud, H.I.; Flood, I.; Zhang, X.; Abbasnejad, B.; Rahgozar, P.; McIntyre, M. Qualitative and Quantitative Risk Analysis of Unmanned Aerial Vehicle Flights on Construction Job Sites: A Case Study. Int. J. Adv. Intell. Syst. 2019, 12, 135–146.

18. Melita, C.D.; Guastella, D.C.; Cantelli, L.; Di Marco, G.; Minio, I.; Muscato, G. Low-Altitude Terrain-Following Flight Planning for Multirotors. Drones 2020, 4, 26.

19. Pascarelli, C.; Marra, M.; Avanzini, G.; Corallo, A. Environment for Planning Unmanned Aerial Vehicles Operations. Aerospace 2019, 6, 51.

20. Cummings, M.; Marquez, J.; Roy, N. Human-automated path planning optimization and decision support. Int. J. Human-Computer Stud. 2012, 70, 116–128.

21. Stodola, P. Improvement in the model of cooperative aerial reconnaissance used in the tactical decision support system. J. Def. Model. Simul. Appl. Methodol. Technol. 2017, 14, 483–492.

22. Tokekar, P.; vander Hook, J.; Mulla, D.; Isler, V. Sensor Planning for a Symbiotic UAV and UGV System for Precision Agriculture. IEEE Trans. Robot. 2016, 32, 1498–1511.

23. Fikar, C.; Gronalt, M.; Hirsch, P. A decision support system for coordinated disaster relief distribution. Expert Syst. Appl. 2016, 57, 104–116.

24. Roesler, C.; Uitz, J.; Claustre, H.; Boss, E.; Xing, X.; Organelli, E.; Briggs, N.; Bricaud, A.; Schmechtig, C.; Poteau, A.; et al. Recommendations for obtaining unbiased chlorophyll estimates from in situ chlorophyll fluorometers: A global analysis of WET Labs ECO sensors. Limnol. Oceanogr. Methods 2017, 15, 572–585.

25. Olson, R.J.; Sosik, H. A submersible imaging-in-flow instrument to analyze nano-and microplankton: Imaging FlowCytobot. Limnol. Oceanogr. Methods 2007, 5, 195–203.

26. Kalaitzakis, M.; Cain, B.; Vitzilaios, N.; Rekleitis, I.; Moulton, J. A marsupial robotic system for surveying and inspection of freshwater ecosystems. J. Field Robot. 2020, 38, 121–138.

27. English, C.; Kitzhaber, Z.; Sanim, K.R.I.; Kalaitzakis, M.; Kosaraju, B.; Pinckney, J.L.; Hodgson, M.E.; Vitzilaios, N.I.; Richardson, T.L.; Myrick, M.L. Chlorophyll Fluorometer for Intelligent Water Sampling by a Small Uncrewed Aircraft System (sUAS). Appl. Spectrosc. 2022; in press.

28. Page, B.P.; Kumar, A.; Mishra, D.R. A novel cross-satellite based assessment of the spatio-temporal development of a cyanobacterial harmful algal bloom. Int. J. Appl. Earth Obs. Geoinf. ITC J. 2018, 66, 69–81.

29. Schwarzbach, M.; Laiacker, M.; Mulero-Pazmany, M.; Kondak, K. Remote water sampling using flying robots. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 72–76.

30. Ore, J.P.; Elbaum, S.; Burgin, A.; Detweiler, C. Autonomous aerial water sampling. J. Field Robot. 2015, 32, 1095–1113.

31. Castendyk, D.; Straight, B.; Voorhis, J.; Somogyi, M.; Jepson, W.; Kucera, B. Using aerial drones to select sample depths in pit lakes. In Proceedings of the 13th International Conference on Mine Closure, Australian Centre for Geomechanics, Perth, Australia, 3–5 September 2019; pp. 1113–1126.

32. Prior, E.M.; O’Donnell, F.C.; Brodbeck, C.; Donald, W.N.; Runion, G.B.; Shepherd, S.L. Measuring High Levels of Total Suspended Solids and Turbidity Using Small Unoccupied Aerial Systems (sUAS) Multispectral Imagery. Drones 2020, 4, 54.

33. Demario, A.; Lopez, P.; Plewka, E.; Wix, R.; Xia, H.; Zamora, E.; Gessler, D.; Yalin, A.P. Water Plume Temperature Measurements by an Unmanned Aerial System (UAS). Sensors 2017, 17, 306.

34. Fitch, K.; Kelleher, C.; Caldwell, S.; Joyce, I. Airborne thermal infrared videography of stream temperature anomalies from a small unoccupied aerial system. Hydrol. Process. 2018, 32, 2616–2619.

35. Kim, H.H. New Algae Mapping Technique by the Use of an Airborne Laser Fluorosensor. Appl. Opt. 1973, 12, 1454–1459.

36. Aruffo, E.; Chiuri, A.; Angelini, F.; Artuso, F.; Cataldi, D.; Colao, F.; Fiorani, L.; Menicucci, I.; Nuvoli, M.; Pistilli, M.; et al. Hyperspectral Fluorescence LIDAR Based on a Liquid Crystal Tunable Filter for Marine Environment Monitoring. Sensors 2020, 20, 410.

37. Grishin, M.Y.; Lednev, V.N.; Pershin, S.M.; Bunkin, A.F.; Kobylyanskiy, V.V.; Ermakov, S.A.; Kapustin, I.A.; Molkov, A.A. Laser remote sensing of an algal bloom in a freshwater reservoir. Laser Phys. 2016, 26, 125601.

38. Duan, Z.; Li, Y.; Wang, J.; Zhao, G.; Svanberg, S. Aquatic environment monitoring using a drone-based fluorosensor. Appl. Phys. A 2019, 125, 108.

39. Sander, H.A.; Manson, S.M. Heights and locations of artificial structures in viewshed calculation: How close is close enough? Landsc. Urban Plan. 2007, 82, 257–270.

40. Zong, X.; Wang, T.; Skidmore, A.K.; Heurich, M. The impact of voxel size, forest type, and understory cover on visibility estimation in forests using terrestrial laser scanning. GIScience Remote Sens. 2021, 58, 323–339.

41. Sanim, K.; Kalaitzakis, M.; Kosaraju, B.; Kitzhaber, Z.; English, C.; Vitzilaios, N.; Myrick, M.; Hodgson, M.; Richardson, T. Development of an Aerial Drone System for Water Analysis and Sampling. In Proceedings of the 2022 International Conference on Unmanned Aircraft Systems (ICUAS), Dubrovnik, Croatia, 21–24 June 2022; pp. 1601–1607.

42. Llobera, M. Modeling visibility through vegetation. Int. J. Geogr. Inf. Sci. 2007, 21, 799–810.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).