Design of a UAV for Autonomous RFID-Based Dynamic Inventories Using Stigmergy for Mapless Indoor Environments

Abstract

1. Introduction

2. Related Work

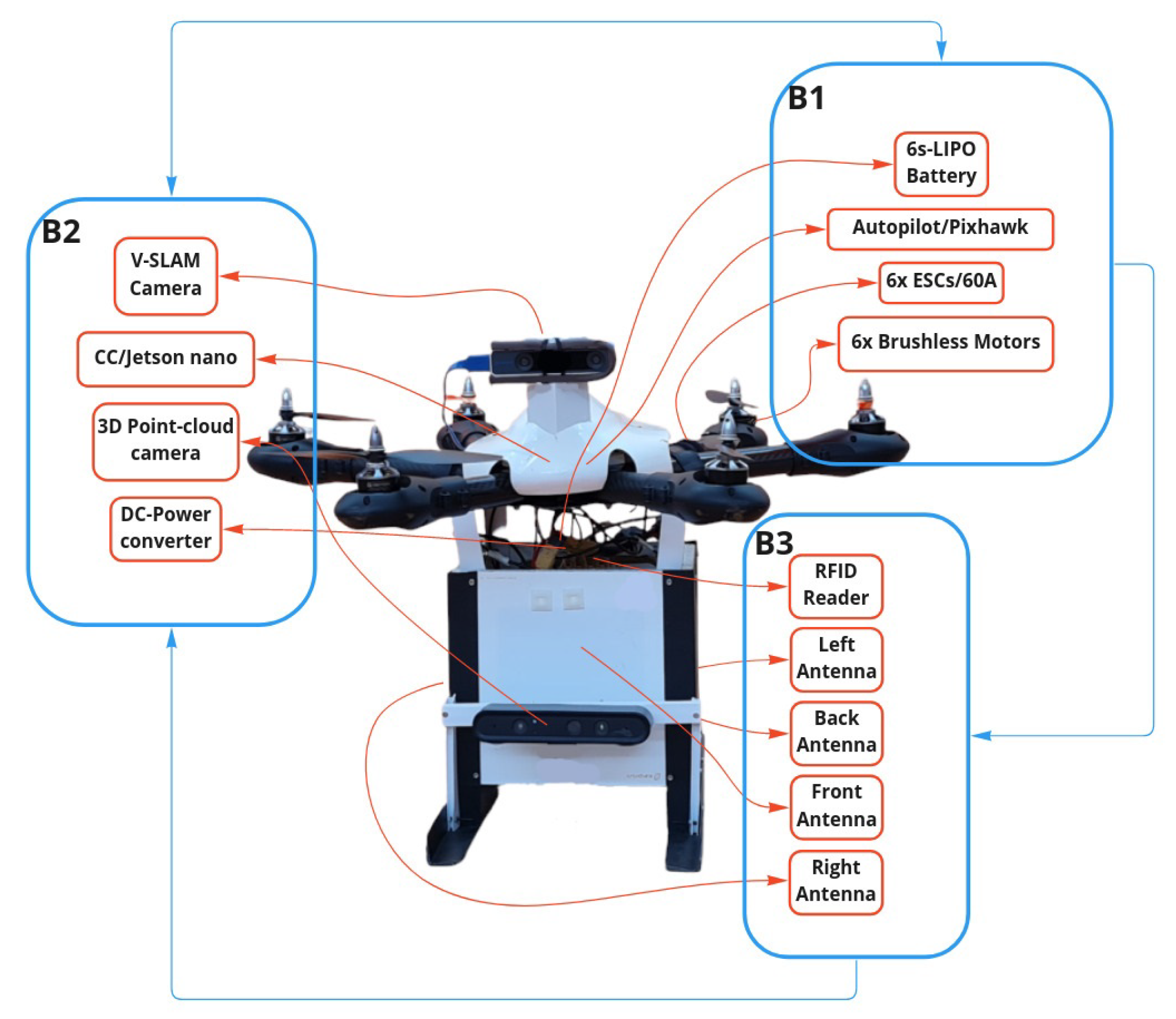

3. Hardware Design and Functionality

3.1. Main Flight System, B1

3.2. Sensors and Processing Units, B2

3.3. RFID-Payload, B3

4. RFID-SOAN Workflow

4.1. Part 1: Passive OA System

4.2. Part 2: The RFID Stigmergic Navigation Algorithm

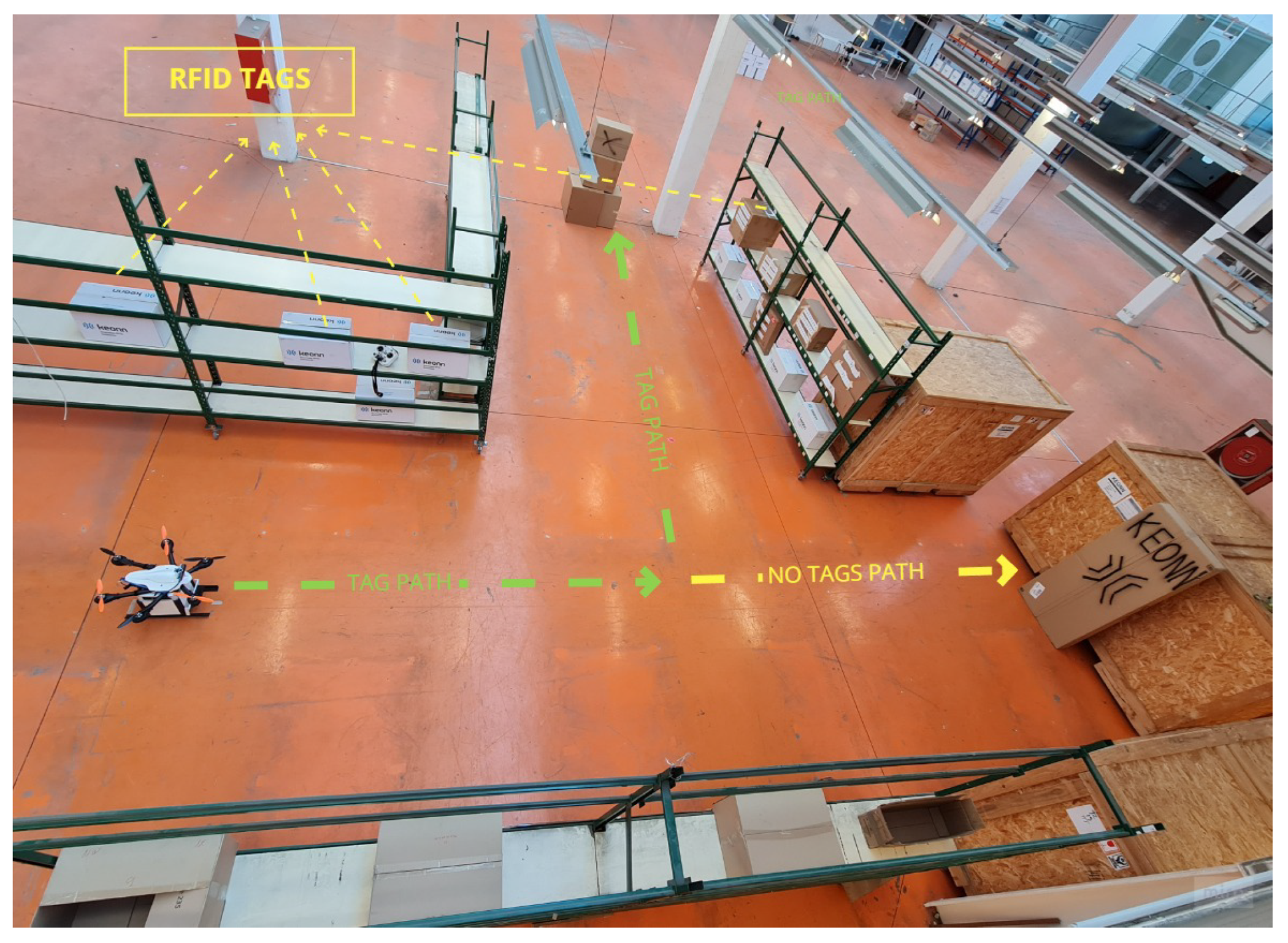

5. Experiments

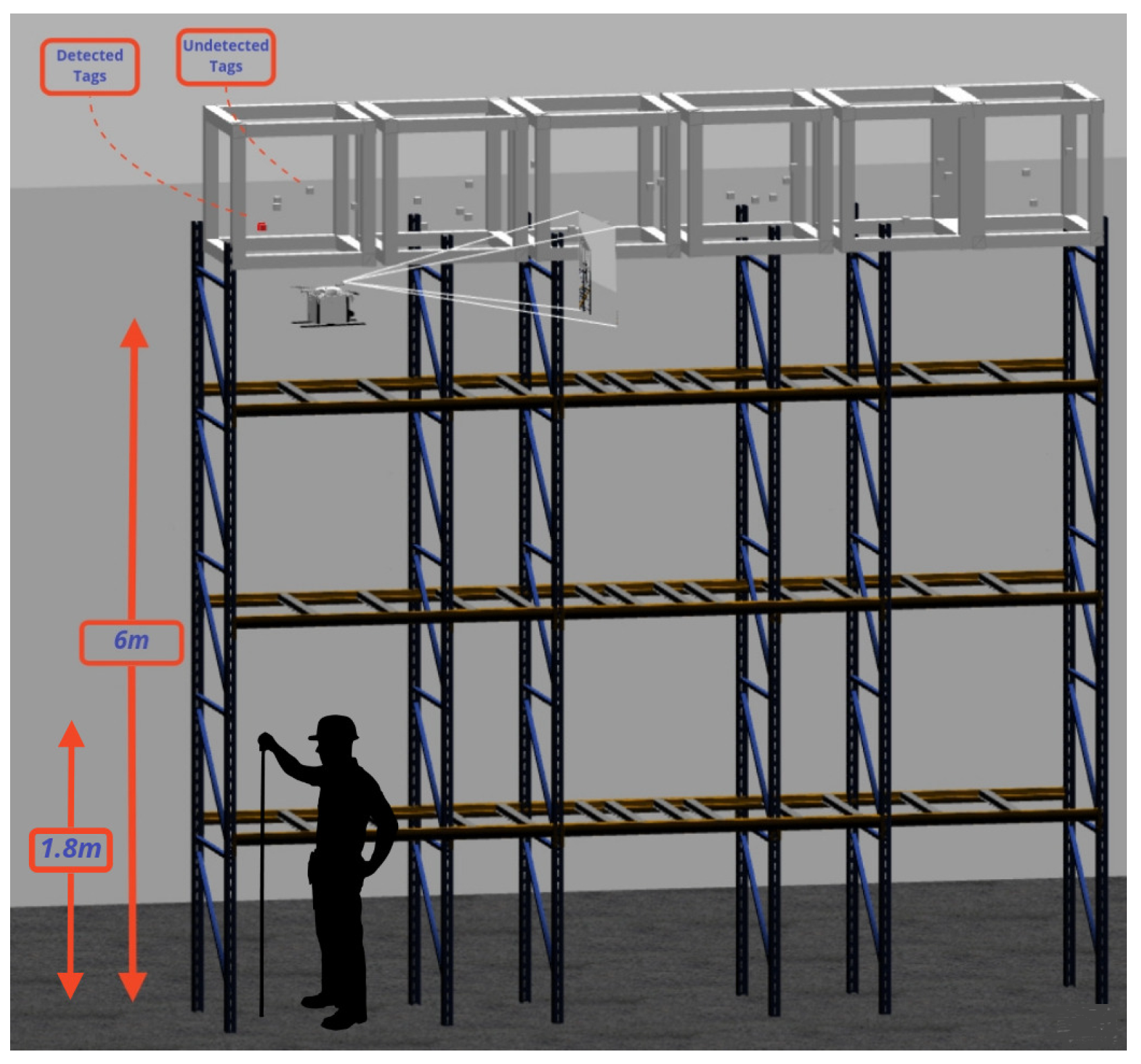

5.1. Scenario 1: One Side, One Aisle, 330 RFID Tags

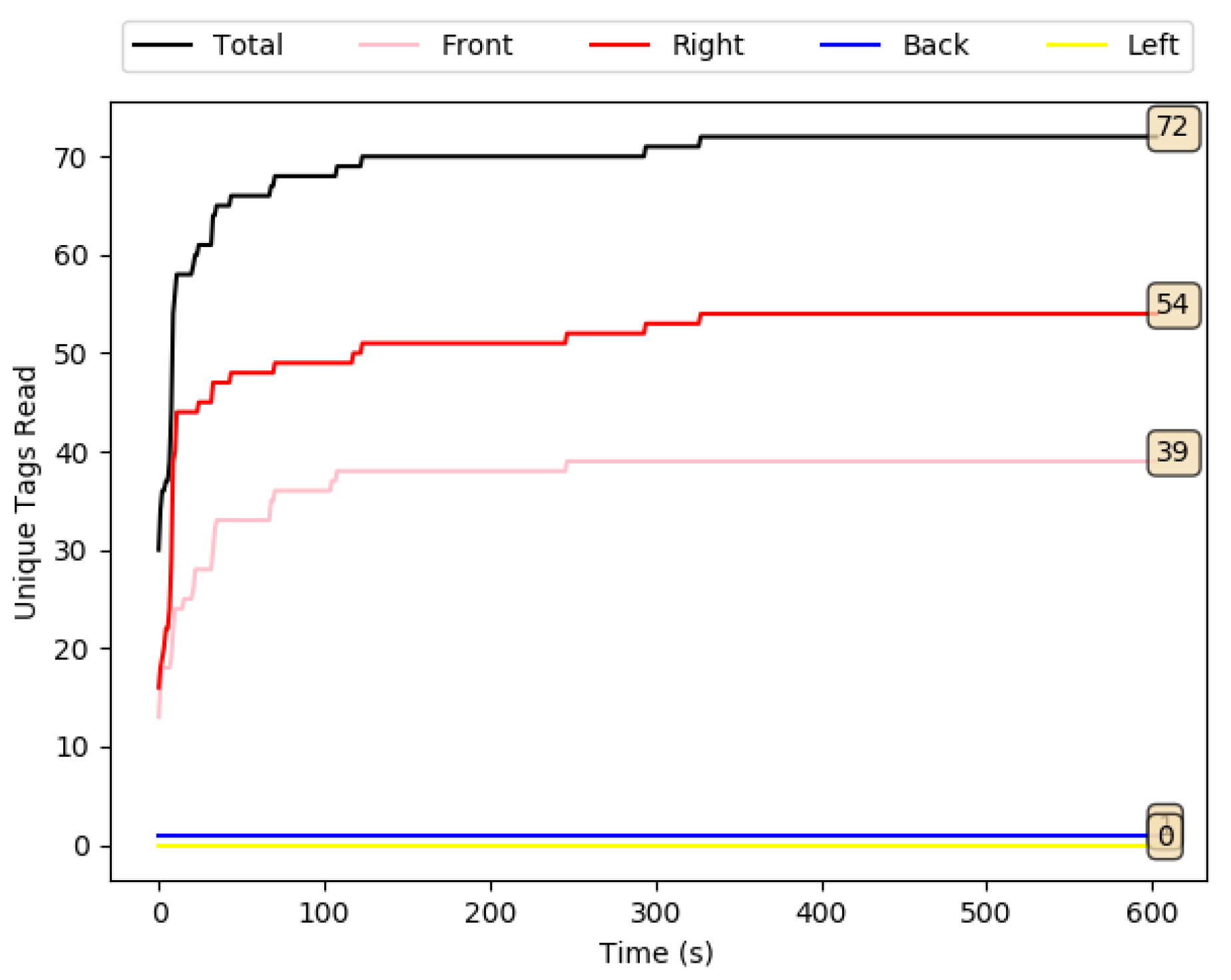

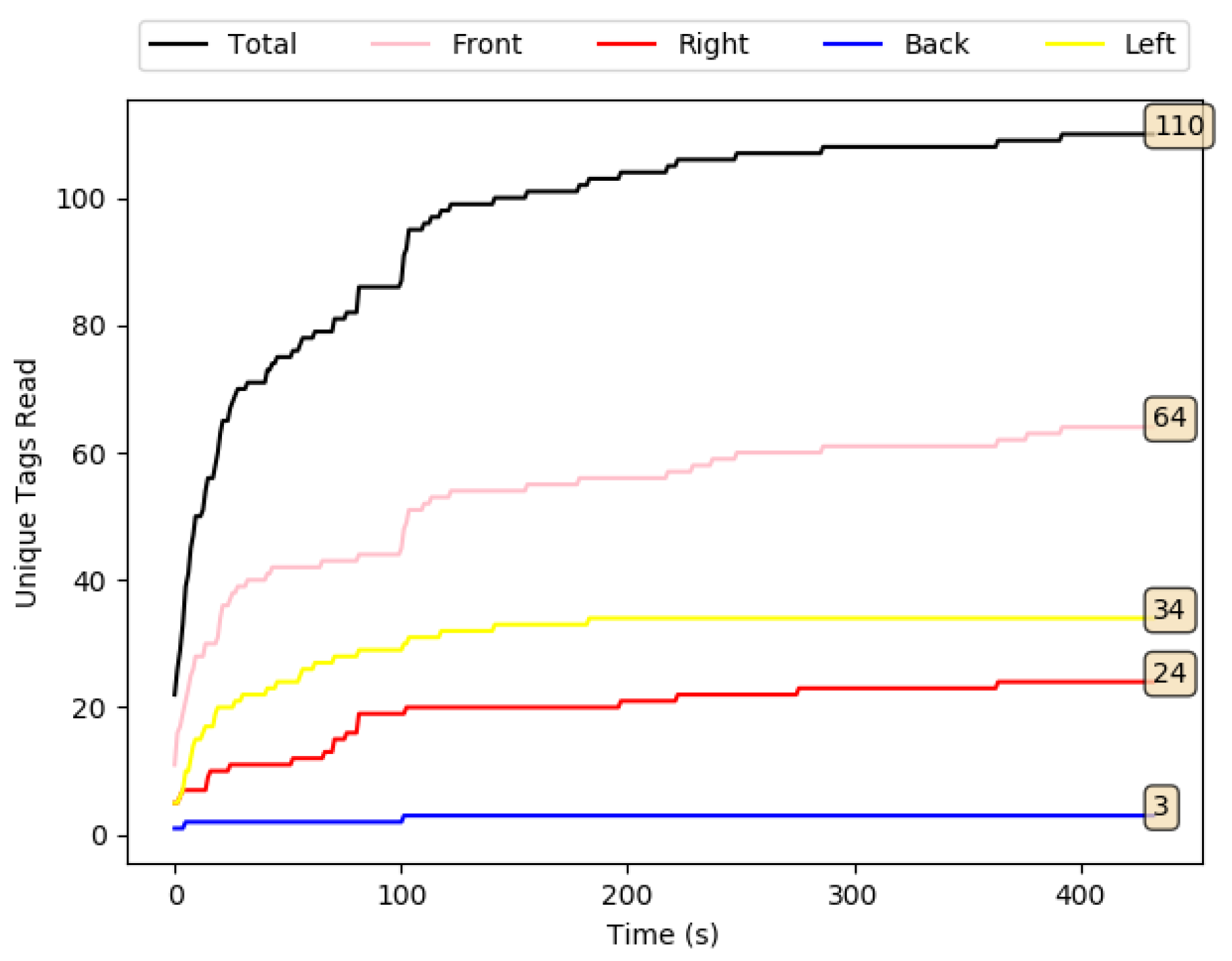

5.1.1. Experiment 1A: Scenario 1, UAV at a Static Position

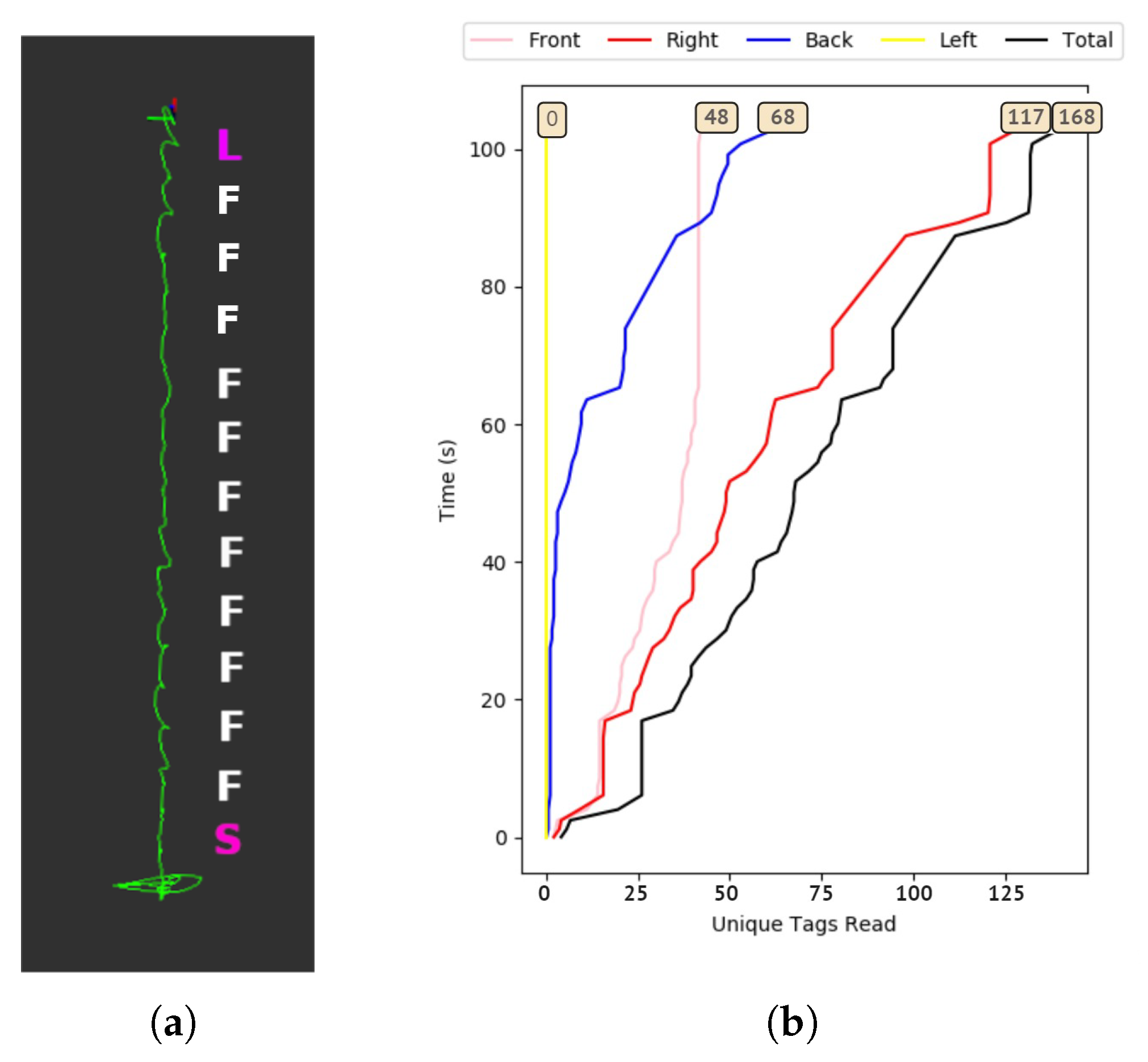

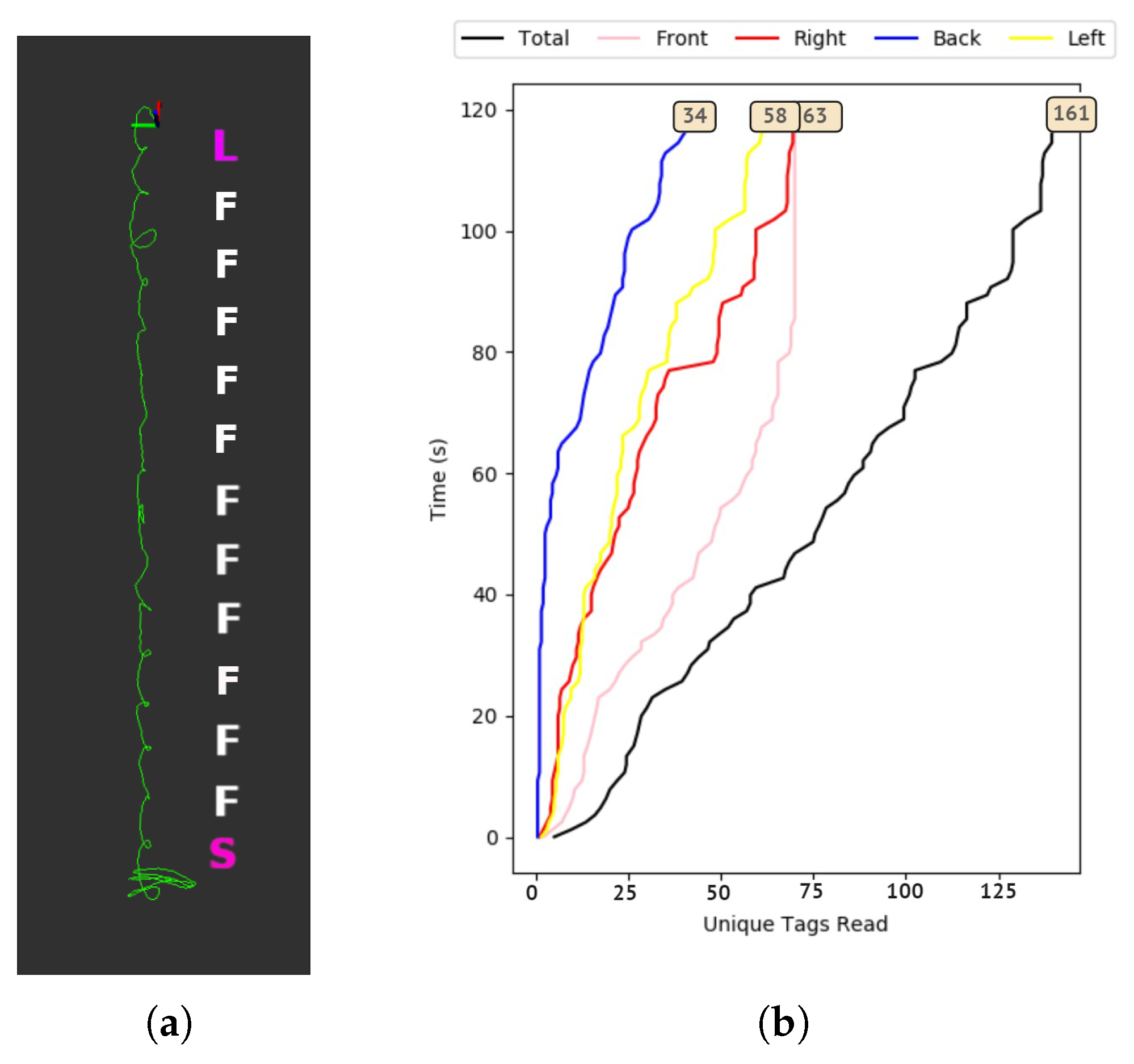

5.1.2. Experiment 1B: Scenario 1, UAV Using Dead Reckoning Navigation

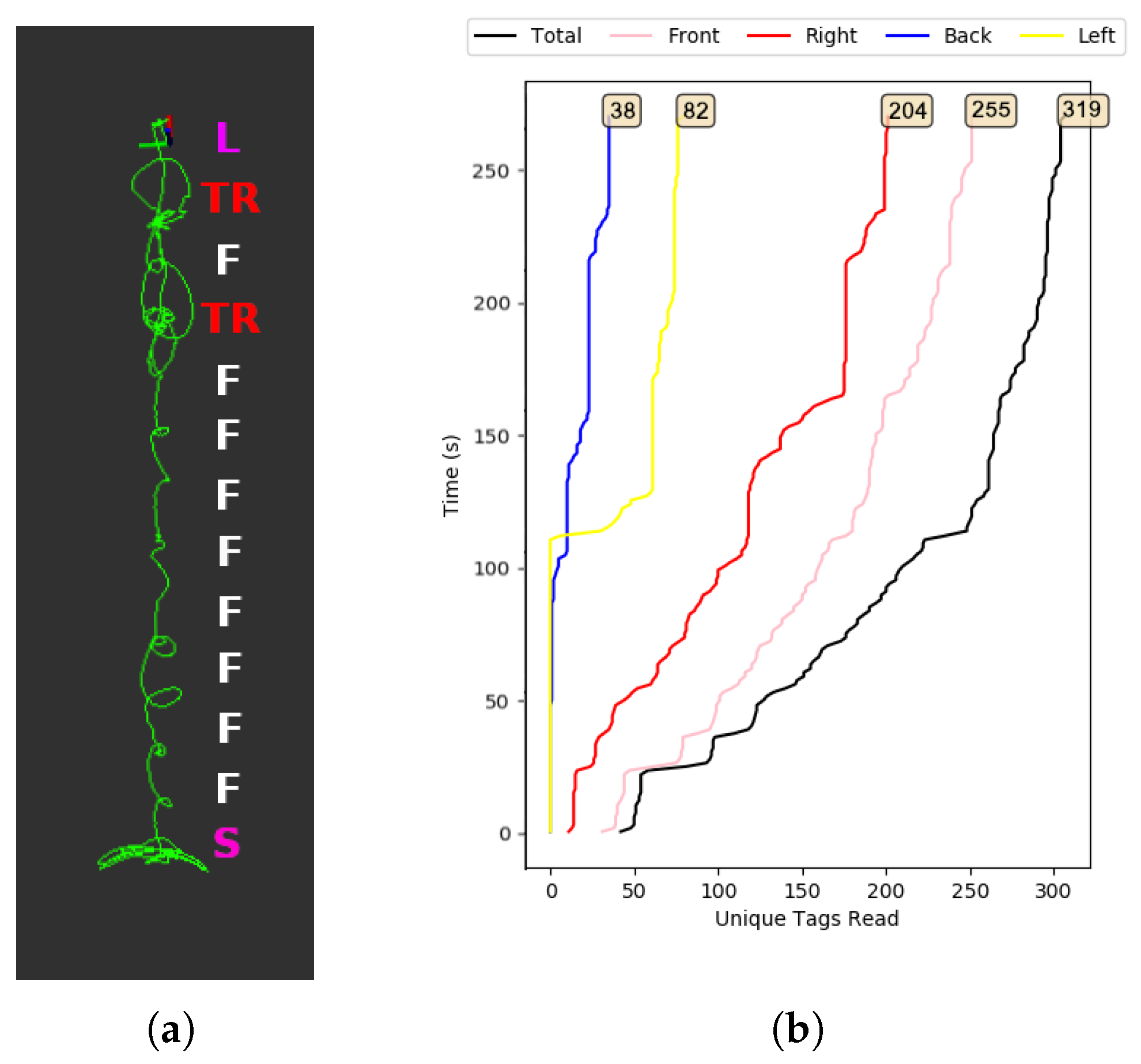

5.1.3. Experiment 1C: Scenario 1, UAV Using RFID-SOAN Navigation

5.2. Scenario 2: Two Sides, One Aisle, 330 Tags

5.2.1. Experiment 2A: Scenario 2, UAV at a Static Position

5.2.2. Experiment 2B: Scenario 2, UAV Using Dead Reckoning Navigation

5.2.3. Experiment 2C: Scenario 2, UAV Using RFID-SOAN Navigation

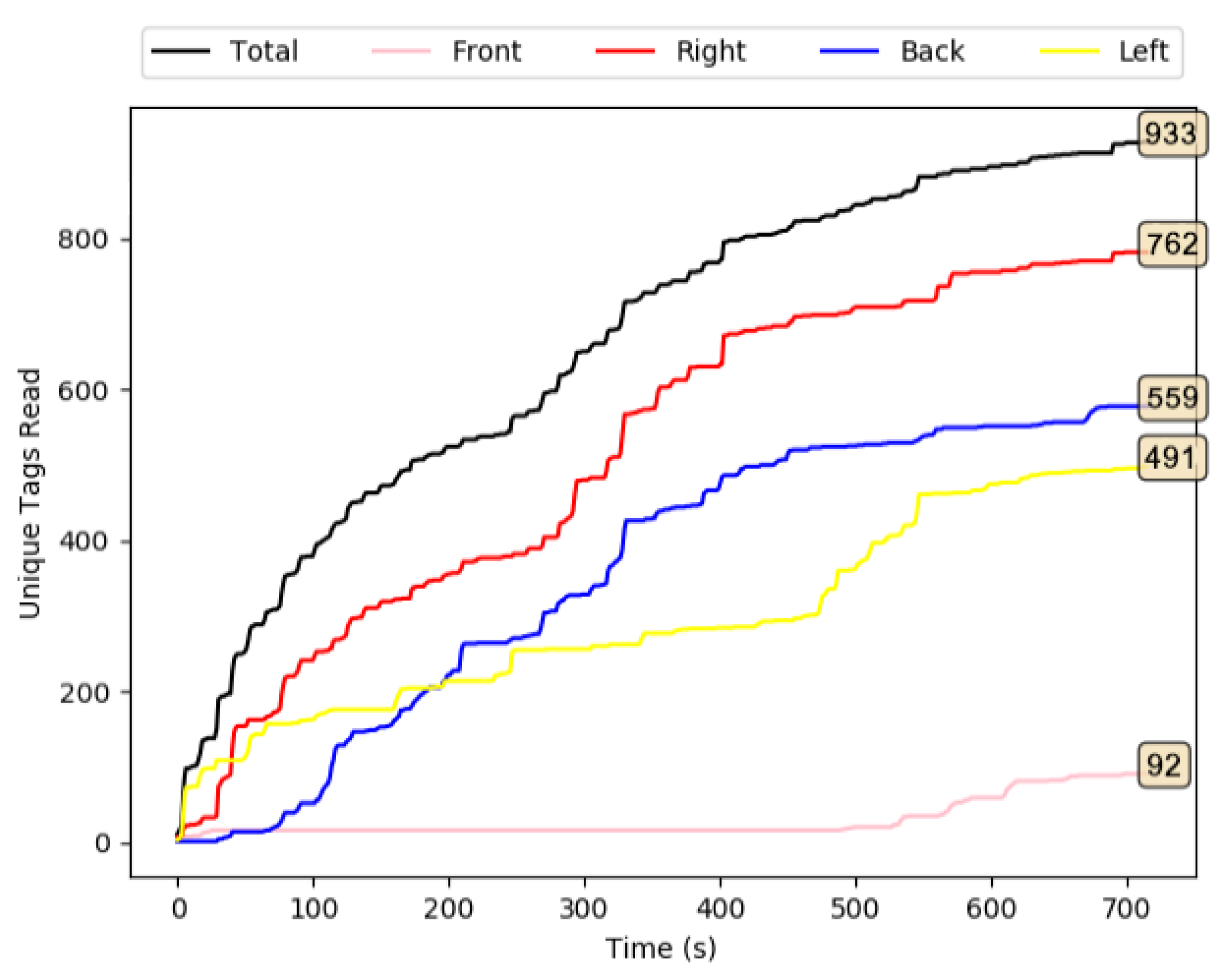

5.3. Scenario 3: Two Sides, One Aisle, 660 RFID Tags

5.3.1. Experiment 3A: Scenario 3, UAV at a Static Position

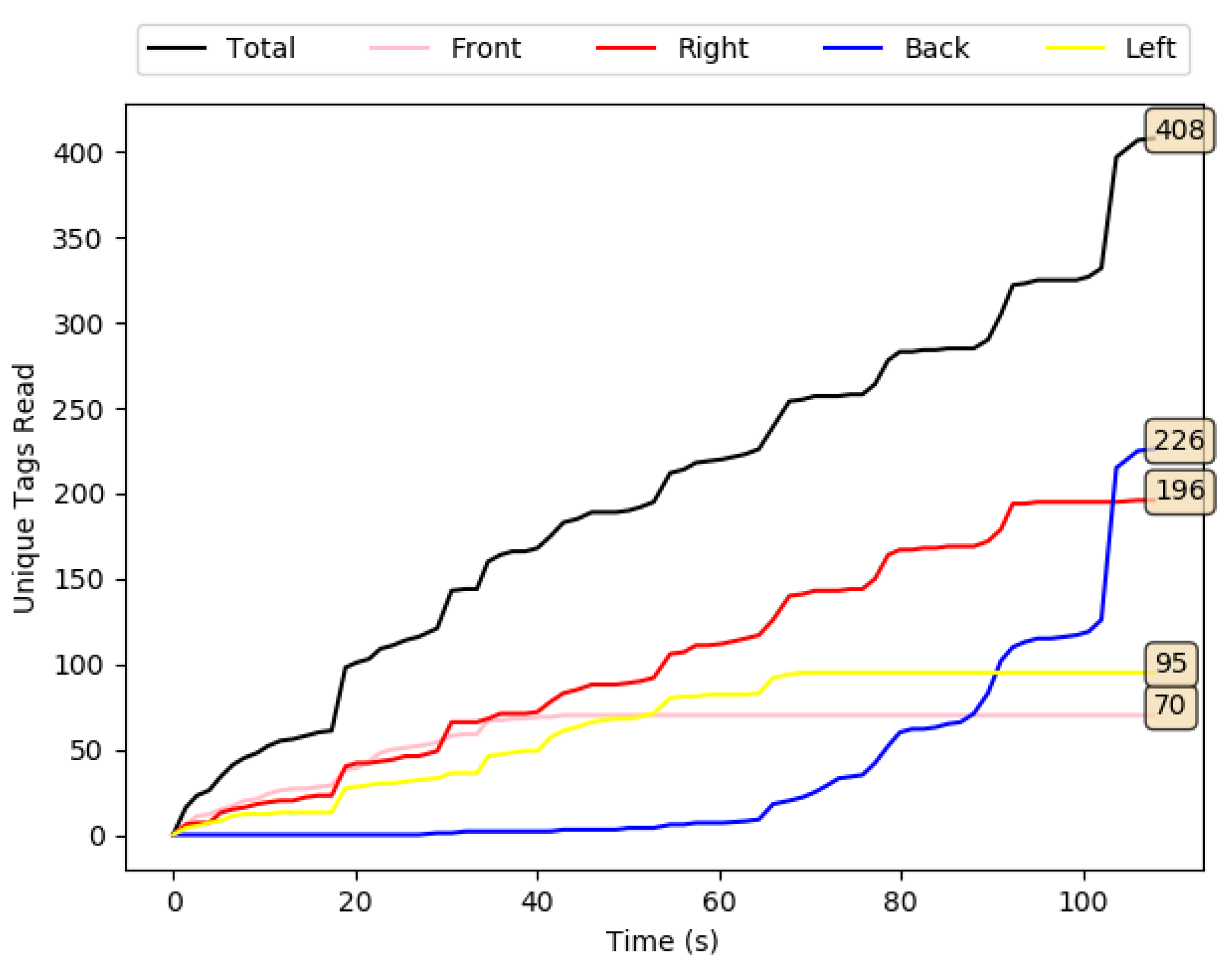

5.3.2. Experiment 3B: Scenario 3, UAV Using Dead Reckoning Navigation

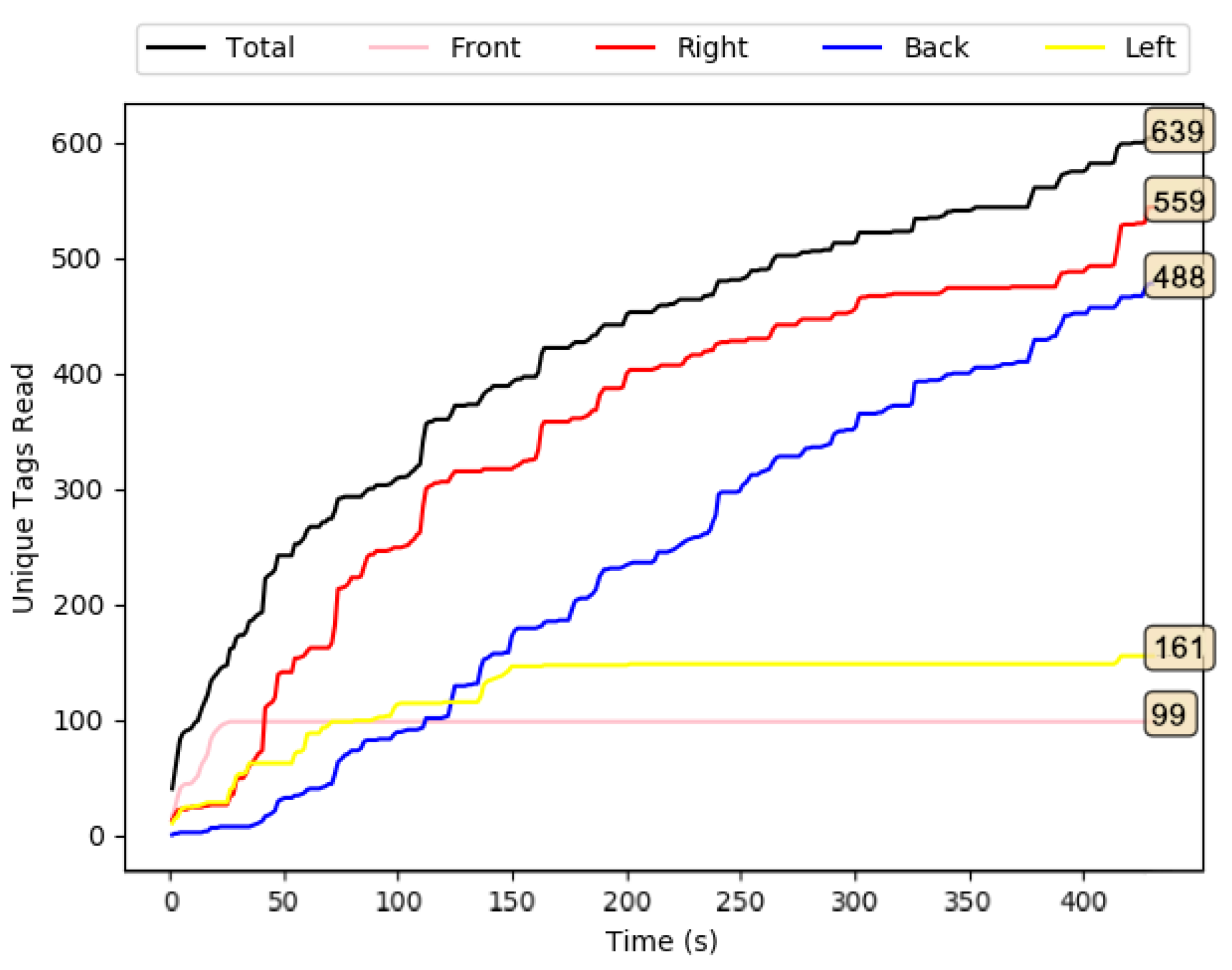

5.3.3. Experiment 3C: Scenario 3, UAV Using RFID-SOAN Navigation

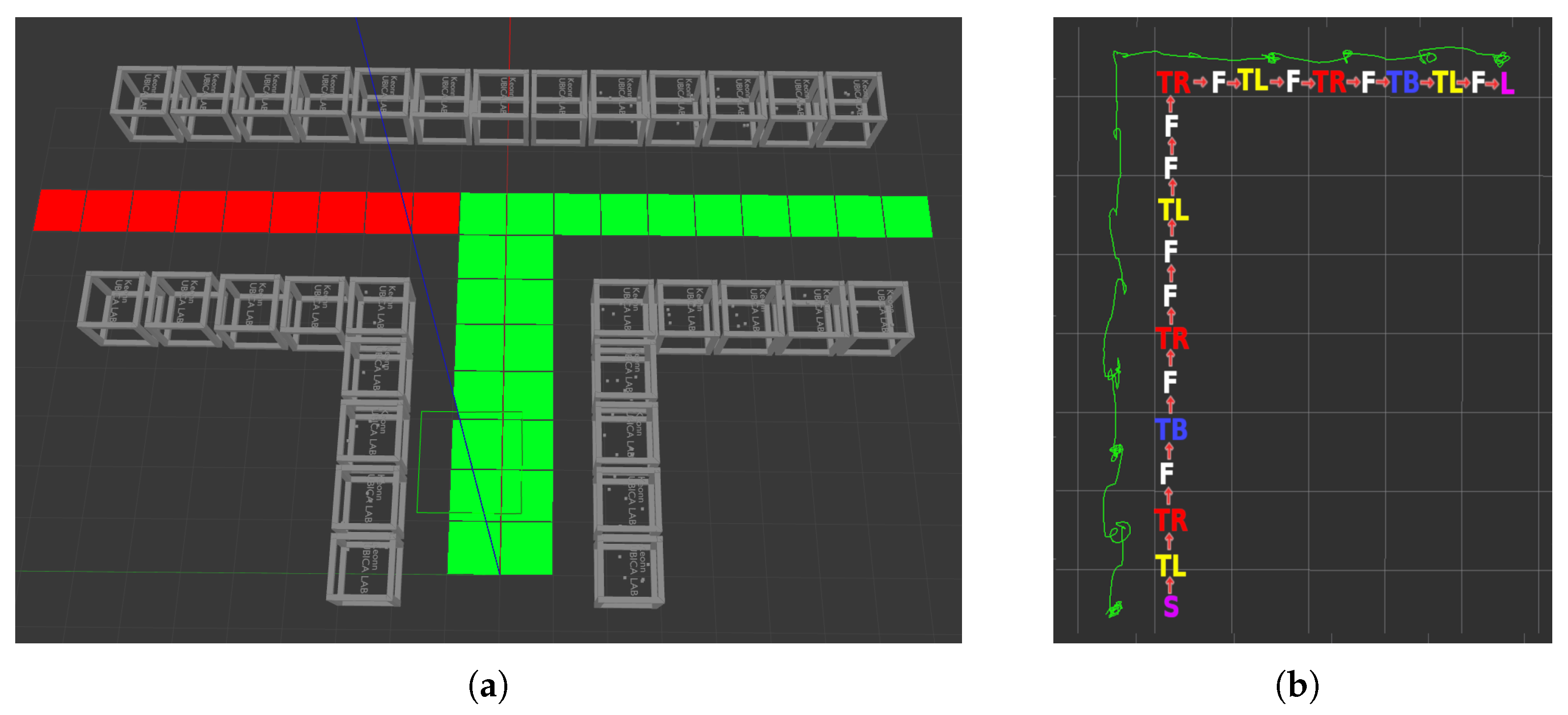

5.4. Scenario 4: Fixtures Forming Two Aisles with a T Shape, Varying Number of Tags

5.4.1. Experiment 4A: Scenario 4, RFID-SOAN Navigation, 300 RFID Tags

5.4.2. Experiment 4B: Scenario 4, RFID-SOAN Navigation, 480 RFID Tags

5.4.3. Experiment 4C: Scenario 4, RFID-SOAN Navigation, 960 RFID Tags

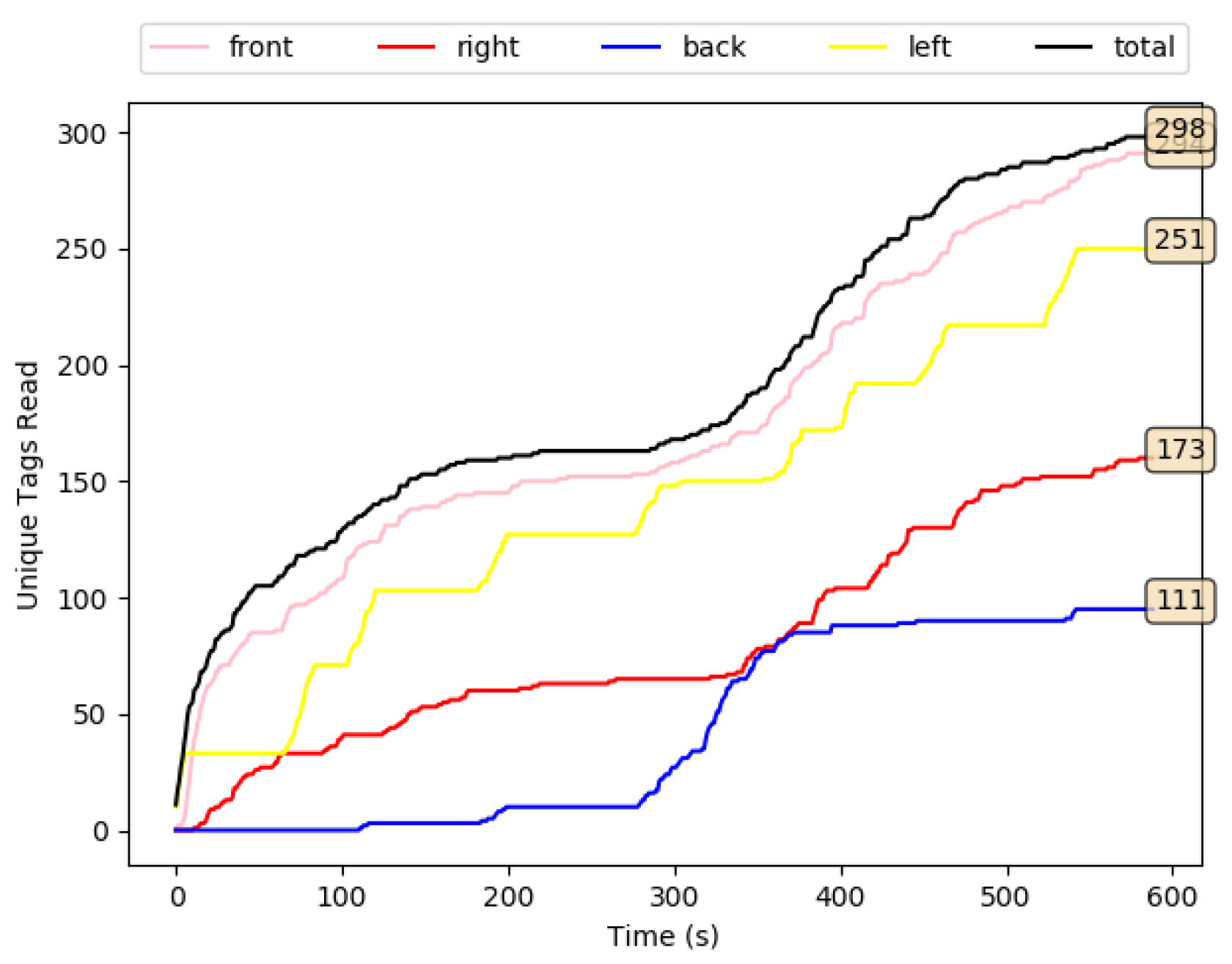

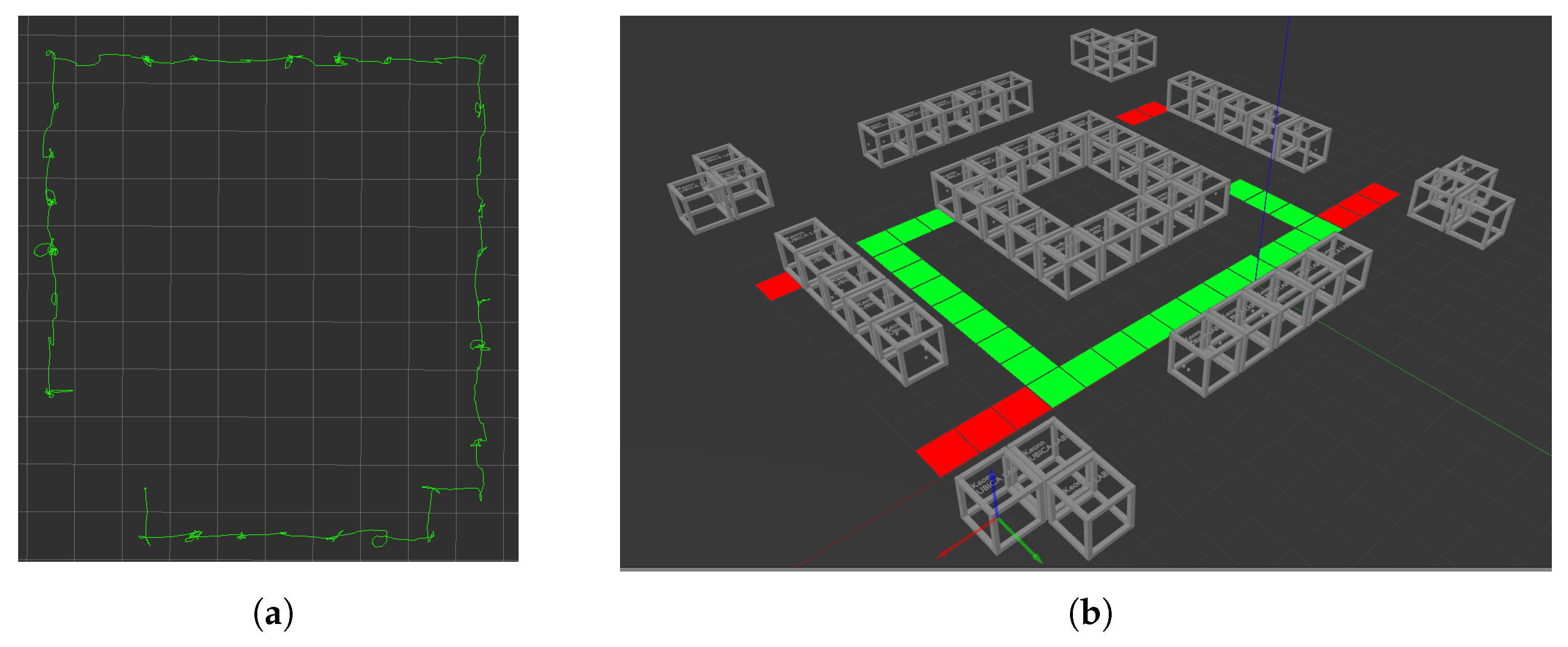

6. Scenario 5: Simulation

6.1. Experiment 5A: T-Shaped Map Layout

6.2. Experiment 5B: Square-Shaped Map Layout

7. Conclusions

8. Future Work

- (i) Extending the RFID-SOAN algorithm for 3D navigation. The proposed algorithm enabled the UAV to read more than 96% of the RFID tags in every scenario presented. However, the ground-truth tags were placed horizontally at a fixed height, which made it easy for the UAV to read most tags while flying at a fixed altitude. The RFID-SOAN algorithm should be extended to three dimensions so that the UAV can inventory tags at different heights. Without this extension, inventories with tags at different heights must be approached as consecutive 2D inventories at increasing heights. This may require more RFID antennas, with a consequent increase in cost and/or decrease in autonomy.

- (ii) Increasing flight time. Flight time is a major parameter for UAVs because of the limited size of the power source they can carry. To increase the flight time of the designed UAV, lighter antenna materials and more power-efficient RFID readers could be considered.

- (iii) Robust indoor positioning. We are currently working on making the designed UAV more robust in indoor navigation. The approach uses extended sensor fusion to further ensure accurate and stable obstacle avoidance while executing an inventory mission.
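The flight-time item above argues that lighter antennas and lower-power readers would extend flight time. A first-order endurance estimate makes that argument concrete. The sketch below is illustrative only and rests on these assumptions:

- Hover power follows ideal rotor momentum theory, so it grows with the 3/2 power of vehicle weight.
- All rotor, drivetrain and electrical losses are lumped into a single hypothetical efficiency factor.
- The RFID reader is modeled as a constant extra power draw.

The function names, parameter values and efficiency figures are placeholders, not measurements of the designed UAV.

```python
import math

G = 9.81     # gravitational acceleration, m/s^2
RHO = 1.225  # sea-level air density, kg/m^3 (assumed indoor conditions)


def hover_power_w(mass_kg: float, rotor_area_m2: float, efficiency: float = 0.6) -> float:
    """Hover power from ideal momentum theory, divided by a lumped efficiency.

    rotor_area_m2 is the TOTAL disk area of all rotors; for N equal rotors
    sharing the thrust, N * (T/N)**1.5 / sqrt(2*rho*A) == T**1.5 / sqrt(2*rho*N*A).
    The efficiency value is a hypothetical placeholder.
    """
    thrust_n = mass_kg * G
    ideal_w = thrust_n ** 1.5 / math.sqrt(2.0 * RHO * rotor_area_m2)
    return ideal_w / efficiency


def endurance_min(battery_wh: float, mass_kg: float, rotor_area_m2: float,
                  payload_w: float, usable_fraction: float = 0.8) -> float:
    """Estimated hover endurance in minutes: usable energy / total power draw."""
    total_w = hover_power_w(mass_kg, rotor_area_m2) + payload_w
    return battery_wh * usable_fraction / total_w * 60.0


# Hypothetical comparison: baseline vs. lighter antennas vs. a lower-power reader.
baseline = endurance_min(battery_wh=100.0, mass_kg=3.0, rotor_area_m2=0.25, payload_w=20.0)
lighter = endurance_min(battery_wh=100.0, mass_kg=2.8, rotor_area_m2=0.25, payload_w=20.0)
efficient_reader = endurance_min(battery_wh=100.0, mass_kg=3.0, rotor_area_m2=0.25, payload_w=10.0)
print(f"baseline {baseline:.1f} min, lighter {lighter:.1f} min, "
      f"efficient reader {efficient_reader:.1f} min")
```

Because hover power scales as weight to the 3/2 power, a given mass saving reduces power more than proportionally. The reader's draw, by contrast, adds linearly to the total.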

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Antenna Dir. | Vector | Weight |
|---|---|---|
| Front | | |
| Right | | |
| Back | | |
| Left | | |
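The antenna table above pairs each of the four antenna directions (front, right, back, left) with a vector and a weight, but its entries did not survive extraction. The sketch below shows one way such a table could drive a stigmergic heading. It makes the following hypothetical assumptions:

- Each antenna is associated with a body-frame unit vector.
- An antenna's weight is the number of tags it read for the first time in the latest reading window.
- The commanded direction is the normalized weighted sum of the vectors, which steers the UAV toward areas where unread tags are still being discovered.

This is an assumed reading of the table, not the published RFID-SOAN rule. `ANTENNA_VECTORS` and `heading_from_reads` are hypothetical names.

```python
import math
from typing import Dict, Optional, Tuple

# Hypothetical body-frame unit vectors (x forward, y to the left) per antenna.
ANTENNA_VECTORS: Dict[str, Tuple[float, float]] = {
    "front": (1.0, 0.0),
    "right": (0.0, -1.0),
    "back": (-1.0, 0.0),
    "left": (0.0, 1.0),
}


def heading_from_reads(new_reads: Dict[str, int]) -> Optional[Tuple[float, float]]:
    """Weighted sum of antenna vectors, weighted by newly read tag counts.

    Assumption: weight = tags read for the first time by that antenna.
    Returns a unit vector, or None if no new tags were read or the
    contributions cancel out (e.g. equal counts on front and back).
    """
    x = sum(vx * new_reads.get(name, 0) for name, (vx, _) in ANTENNA_VECTORS.items())
    y = sum(vy * new_reads.get(name, 0) for name, (_, vy) in ANTENNA_VECTORS.items())
    norm = math.hypot(x, y)
    if norm == 0.0:
        return None
    return (x / norm, y / norm)


# Example: most new tags ahead, equal counts on both sides -> keep flying forward.
print(heading_from_reads({"front": 6, "left": 2, "right": 2}))
```

Returning `None` when the weights cancel leaves the fallback behavior (for example, hovering or a recovery manoeuvre) to the caller, since the source does not specify it.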

| Experiment | Map Layout | Num. Tags in Map | RFID Path Exploration | Read Tags |
|---|---|---|---|---|
| 1C | 1-side/1-aisle | 330 | Successful | 96.66% |
| 2C | 2-sides/1-aisle | 330 | Successful | 97.27% |
| 3C | 2-sides/1-aisle | 660 | Successful | 96.81% |
| 4A | T-shape | 300 | Successful | 97.33% |
| 4B | T-shape | 480 | Successful | 97.29% |
| 4C | T-shape | 960 | Successful | 97.18% |
| 5A | T-shape | 300 | Successful | 99.33% |
| 5B | Square-shape | 1700 | Successful | 96.41% |
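The read rates above can be summarized separately for the flown experiments (Scenarios 1 to 4) and the simulated ones (Scenario 5, Experiments 5A and 5B, following the section headings). The short script below only reuses the values in the table and reports the minimum, maximum and mean read rate for each group.

```python
from statistics import mean

# Rows copied from the results table: (experiment, layout, tags in map, read tags %).
RESULTS = [
    ("1C", "1-side/1-aisle", 330, 96.66),
    ("2C", "2-sides/1-aisle", 330, 97.27),
    ("3C", "2-sides/1-aisle", 660, 96.81),
    ("4A", "T-shape", 300, 97.33),
    ("4B", "T-shape", 480, 97.29),
    ("4C", "T-shape", 960, 97.18),
    ("5A", "T-shape", 300, 99.33),
    ("5B", "Square-shape", 1700, 96.41),
]

# Experiments 5A and 5B belong to Scenario 5 (simulation); the rest were flown.
SIMULATED = {"5A", "5B"}


def summarize(rows):
    """Return (min, max, mean) of the read-tag percentages in rows."""
    rates = [rate for _, _, _, rate in rows]
    return min(rates), max(rates), mean(rates)


physical = [r for r in RESULTS if r[0] not in SIMULATED]
simulated = [r for r in RESULTS if r[0] in SIMULATED]

for label, rows in (("physical", physical), ("simulated", simulated), ("all", RESULTS)):
    low, high, avg = summarize(rows)
    print(f"{label:>9}: min {low:.2f}%  max {high:.2f}%  mean {avg:.2f}%")
```

The lowest read rate among the flown experiments is 96.66% (1C), and the lowest overall is 96.41% (simulated Experiment 5B). Every experiment stays above 96%.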

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alajami, A.A.; Moreno, G.; Pous, R. Design of a UAV for Autonomous RFID-Based Dynamic Inventories Using Stigmergy for Mapless Indoor Environments. Drones 2022, 6, 208. https://doi.org/10.3390/drones6080208
