3D AQI Mapping Data Assessment of Low-Altitude Drone Real-Time Air Pollution Monitoring

Abstract

1. Introduction

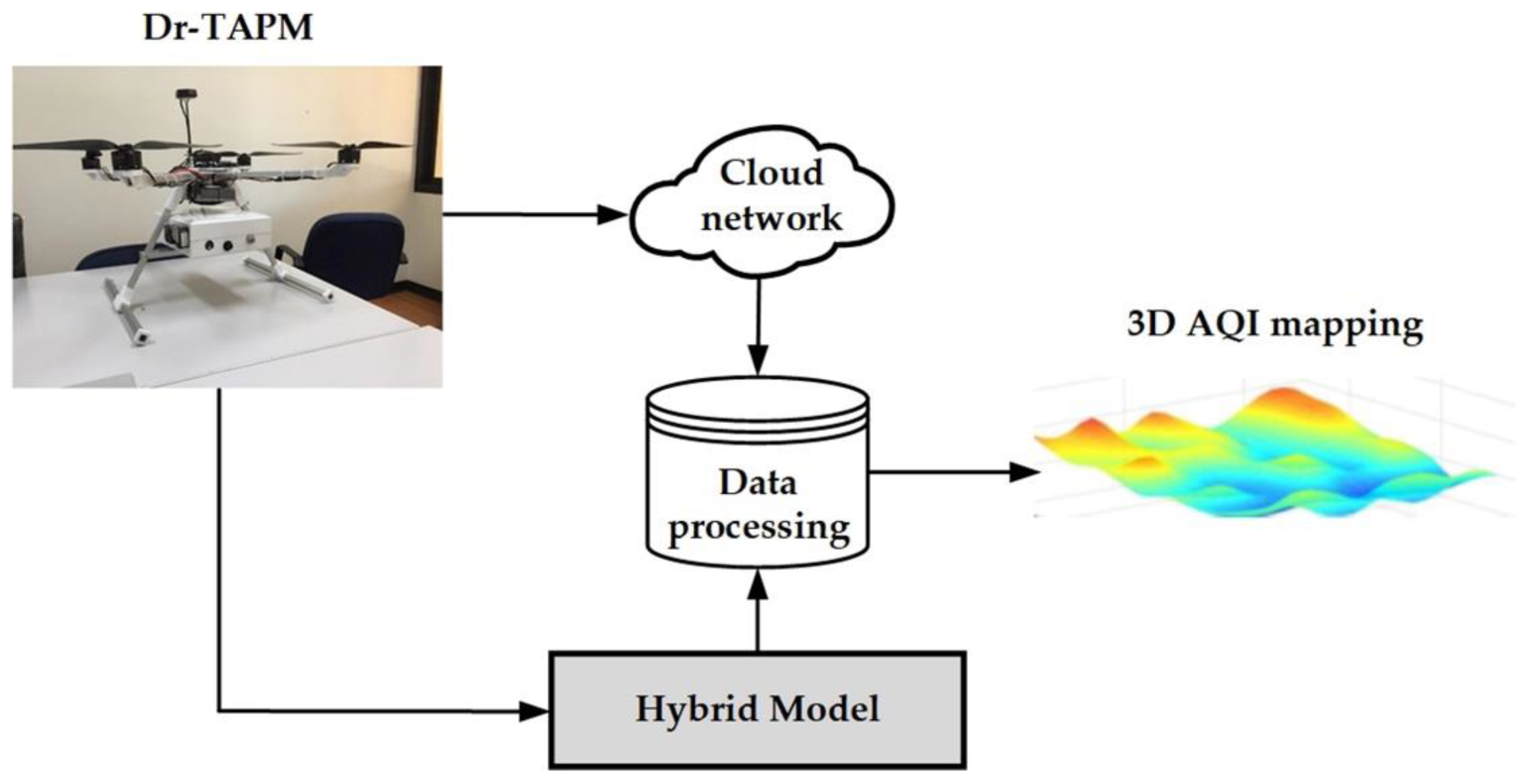

2. Design of Frameworks

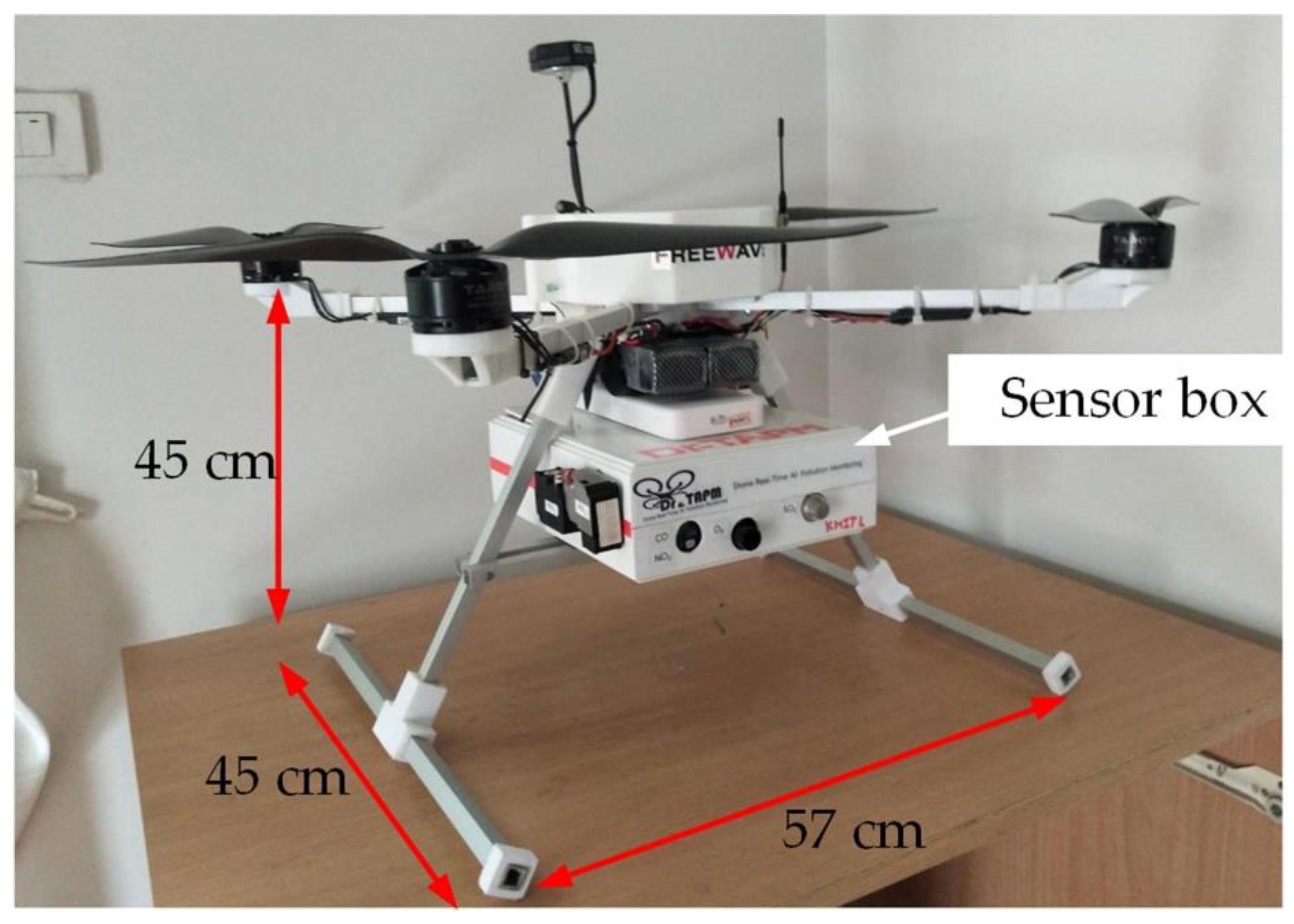

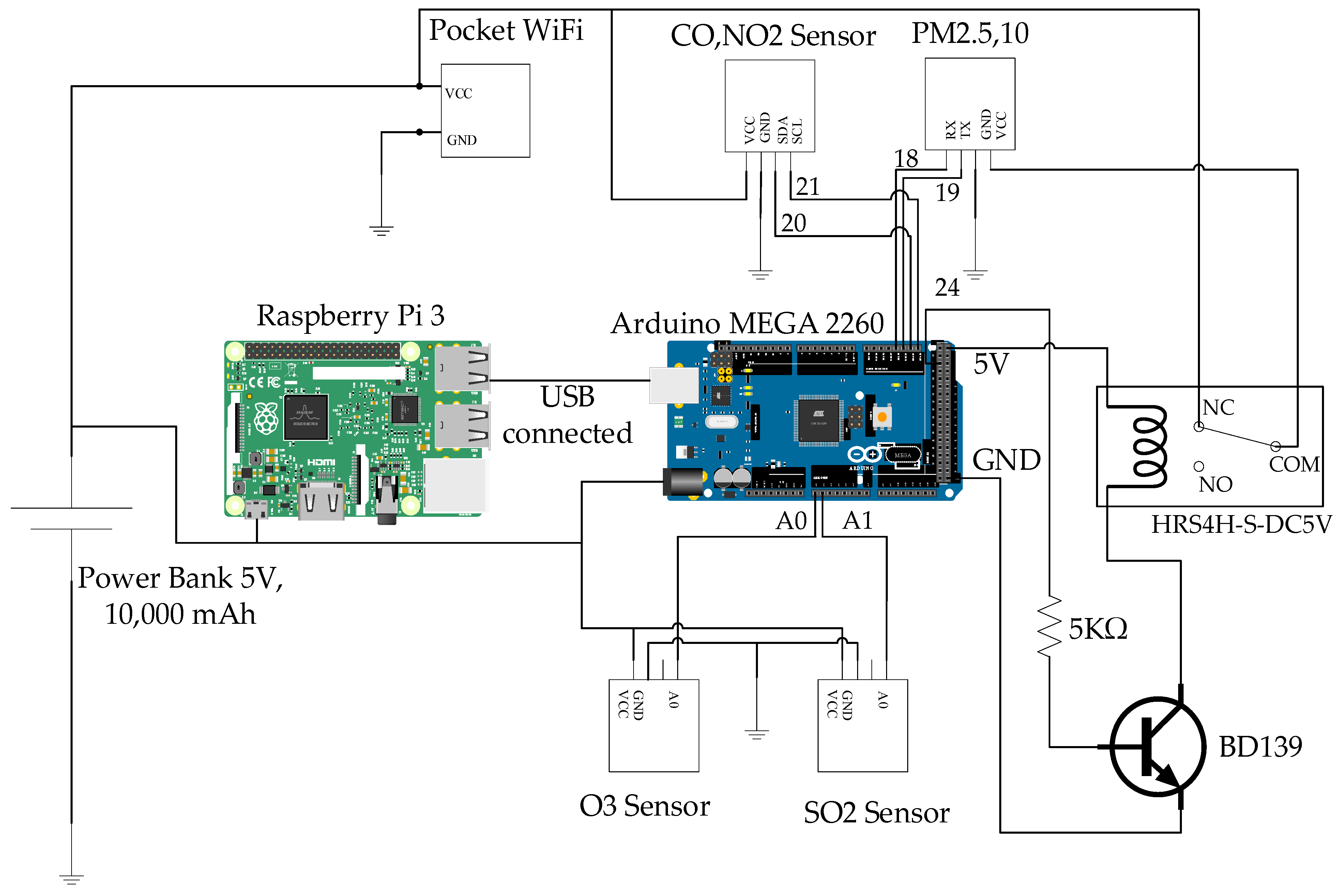

2.1. Dr-TAPM

2.2. Cloud Network

2.3. Data Processing

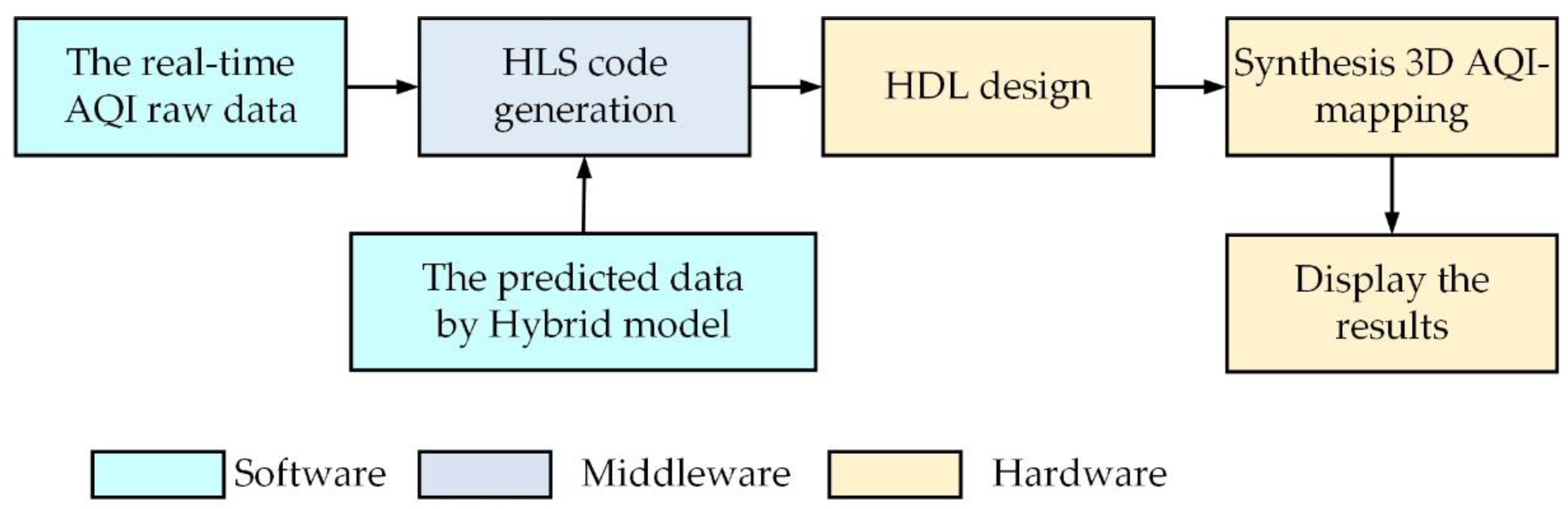

3. Methodology

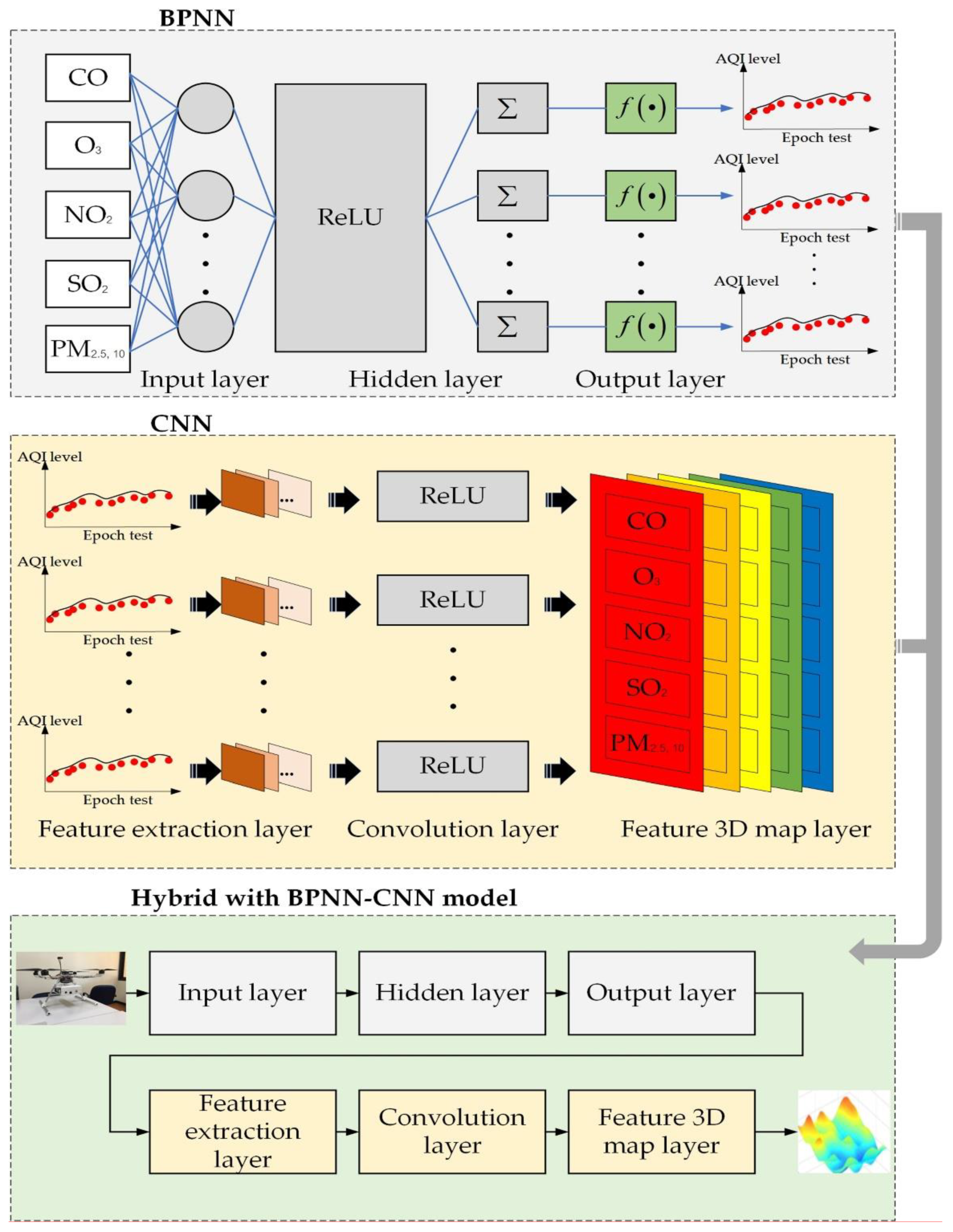

Hybrid Model

4. Data Assessment

4.1. Training Model

4.2. Experimental Setup in Case Study of Open Burning Smoke Detection

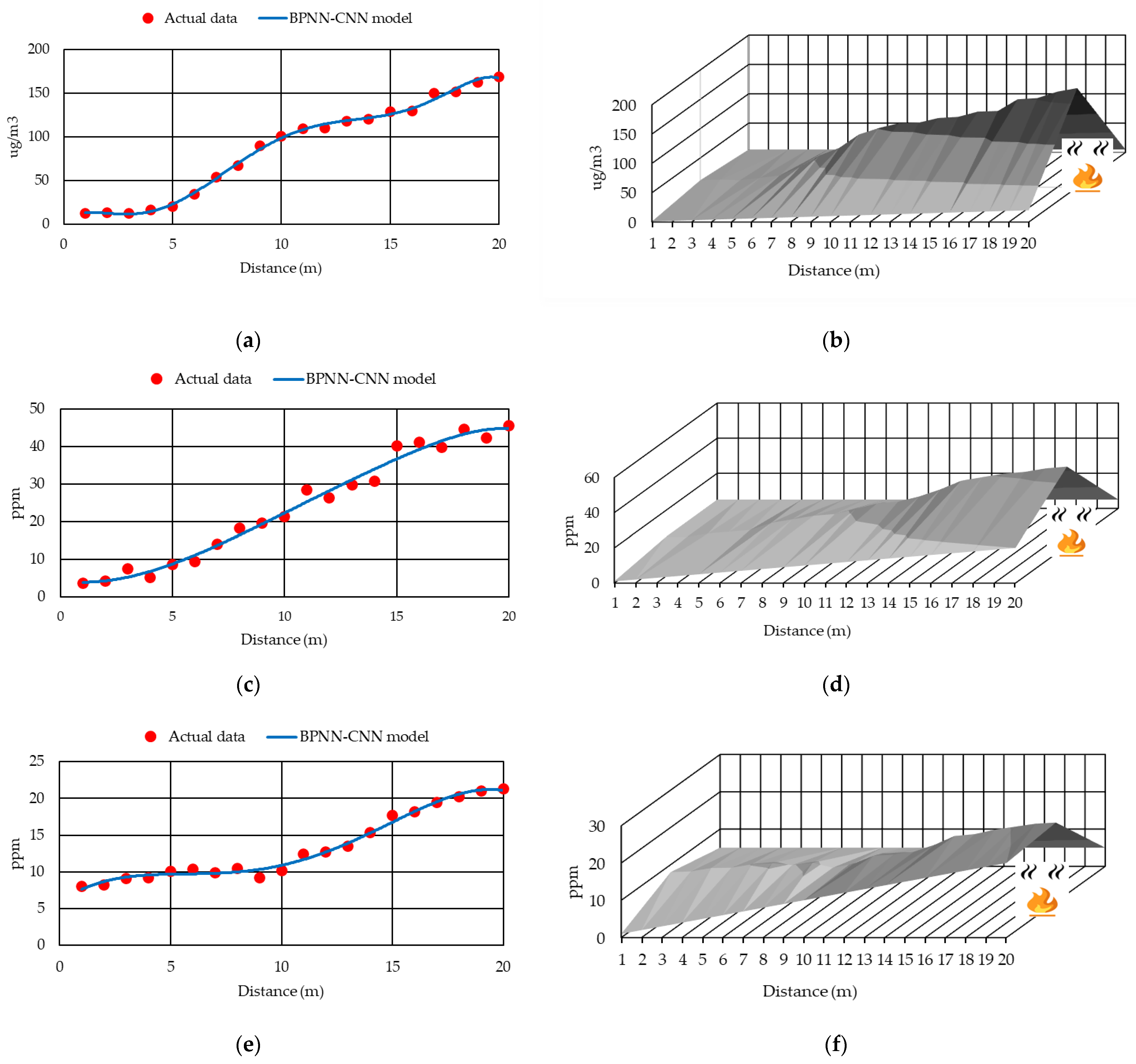

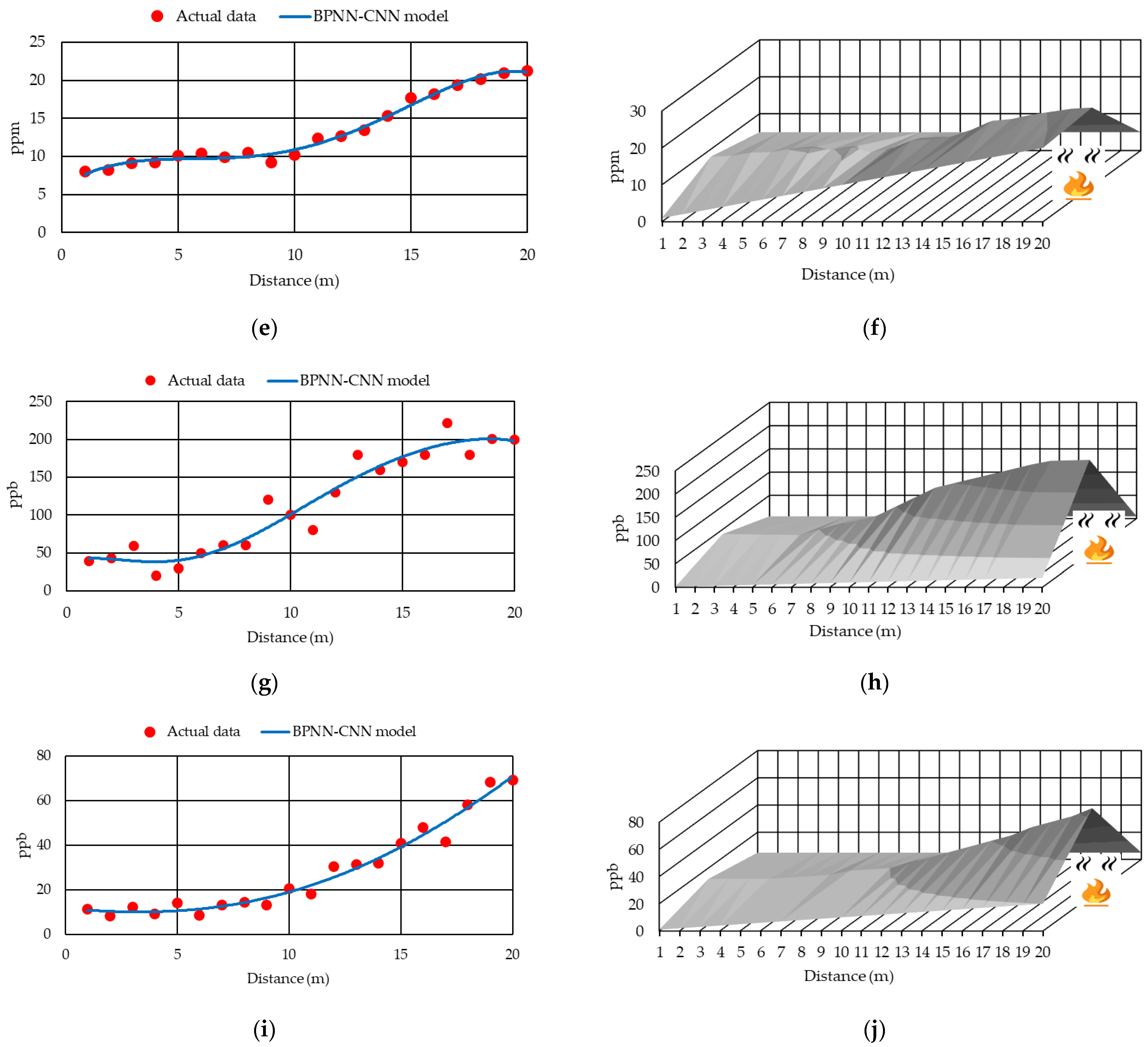

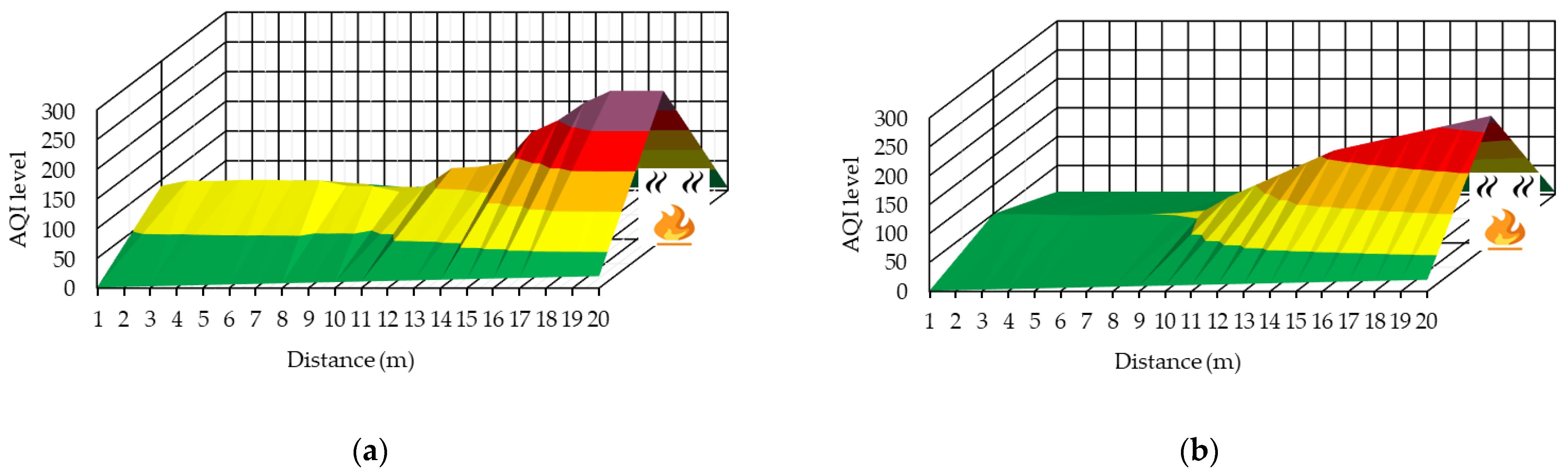

4.3. Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Parameters | Values |
|---|---|
| Number of K raw datasets | 1400 |
| Number of n | 5 |
| Number of m | 40 |
| Testing data | 20% |
| Training data | 80% |
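
The 80%/20% division of the 1400 raw datasets listed in the table above can be illustrated with a short sketch. It assumes a random shuffle before splitting, which the table does not state; the function name `split_indices` and the seed value are hypothetical. The parameters n and m are left out because their roles are not defined in the table.

```python
import random


def split_indices(num_samples, test_percent, seed=0):
    """Shuffle sample indices and split them into (train, test) lists.

    Shuffling is an assumption; the source only gives the 80/20 proportions.
    """
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)
    # Integer arithmetic avoids floating-point rounding in the split size.
    num_test = num_samples * test_percent // 100
    return indices[num_test:], indices[:num_test]


# K = 1400 raw datasets, 20% held out for testing (values from the table).
train_idx, test_idx = split_indices(1400, 20)
print(len(train_idx), len(test_idx))  # 1120 280
```

With these proportions, 1120 datasets would be used for training and 280 for testing.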

| Air Pollutant Parameters | MAE | RMSE | R2 |
|---|---|---|---|
| PM2.5,10 | 0.351 | 1.561 | 0.995 |
| CO | 0.510 | 2.054 | 0.983 |
| O3 | 0.352 | 1.565 | 0.994 |
| SO2 | 0.310 | 1.341 | 0.997 |
| NO2 | 0.250 | 1.118 | 0.991 |
| Average | 0.402 | 1.787 | 0.992 |

| Air Pollutant Parameters | MAE | RMSE | R2 |
|---|---|---|---|
| PM2.5,10 | 1.050 | 4.696 | 0.964 |
| CO | 0.950 | 4.248 | 0.968 |
| O3 | 1.100 | 4.919 | 0.961 |
| SO2 | 1.150 | 5.143 | 0.956 |
| NO2 | 0.850 | 3.801 | 0.979 |
| Average | 1.020 | 4.561 | 0.965 |
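
The MAE, RMSE, and R2 columns reported above are standard regression metrics. The sketch below shows how they are conventionally computed, assuming the usual textbook definitions (mean absolute error, root mean square error, and coefficient of determination); the source does not spell out its formulas, and the function name and sample values are hypothetical, not data from the study.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return (MAE, RMSE, R2) for paired observed and predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                 # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    return mae, rmse, r2


# Illustrative values only.
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [1.0, 2.0, 3.0, 6.0]
mae, rmse, r2 = regression_metrics(observed, predicted)
print(f"MAE={mae:.3f} RMSE={rmse:.3f} R2={r2:.3f}")  # MAE=0.500 RMSE=1.000 R2=0.200
```

Lower MAE and RMSE indicate smaller prediction errors, while R2 values closer to 1 indicate that the predictions explain more of the variance in the observations.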

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Duangsuwan, S.; Prapruetdee, P.; Subongkod, M.; Klubsuwan, K. 3D AQI Mapping Data Assessment of Low-Altitude Drone Real-Time Air Pollution Monitoring. Drones 2022, 6, 191. https://doi.org/10.3390/drones6080191
