A Hybrid Model and Data-Driven Vision-Based Framework for the Detection, Tracking and Surveillance of Dynamic Coastlines Using a Multirotor UAV

Abstract

1. Introduction

1.1. Related Literature

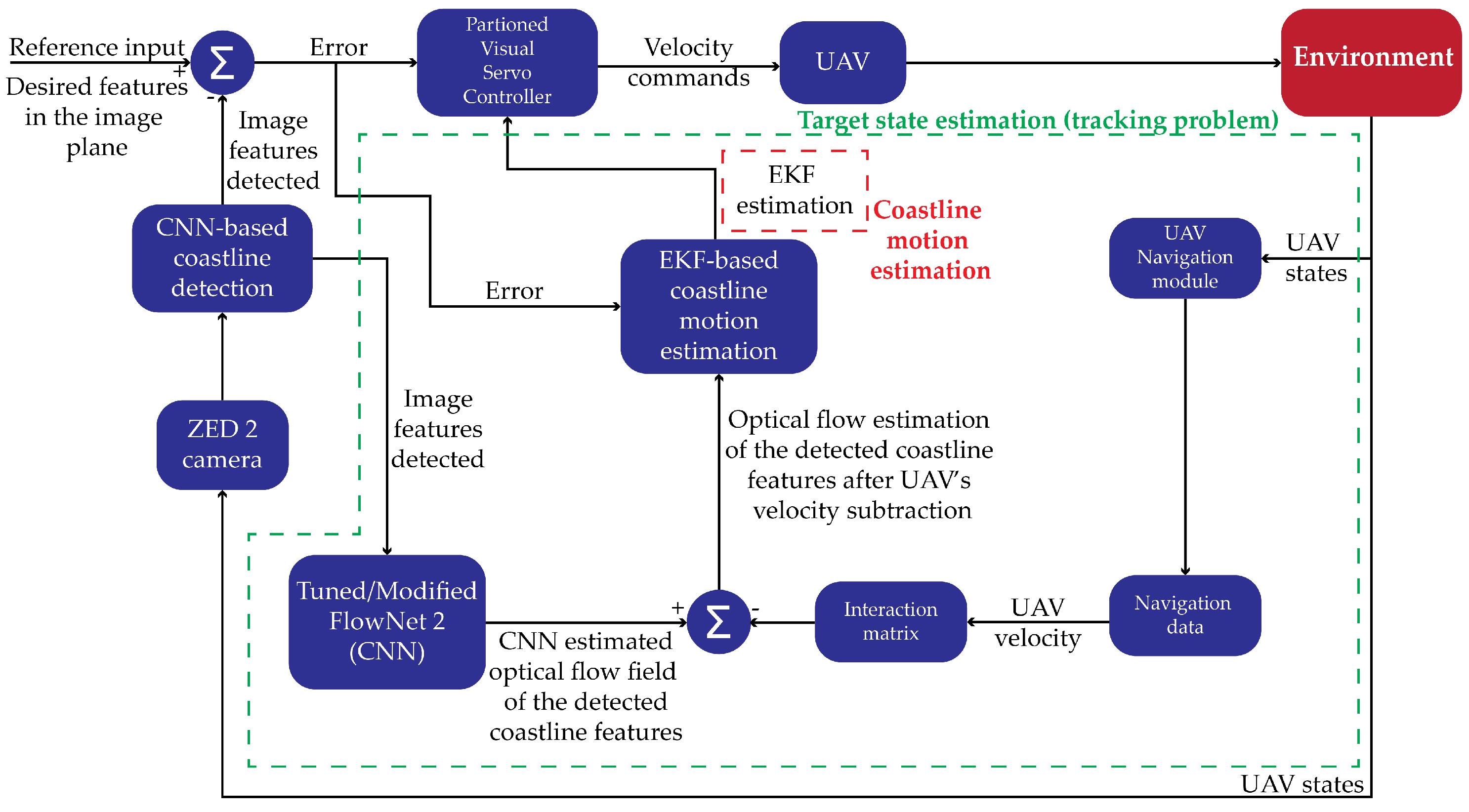

1.2. Contributions

- Implementation and training of a CNN for detecting shoreline features from raw camera images.

- Deployment of a CNN for the optical flow estimation of the detected coastline.

- Formulation of an EKF based on an approximate wave motion model, which provides an online estimate of the coastline motion in the image plane.

- Formulation of a neural-network-aided EKF that learns from data. This module combines the model-based EKF with the data-driven, CNN-based optical flow estimation to estimate the shoreline motion directly in the image plane, online.

- Development of a robust PVS control strategy for the autonomous navigation of an octocopter along a wavy shoreline, using the output of the neural-network-aided EKF (the fourth contribution above) as feedback while ensuring that the shoreline always remains inside the camera field of view.

1.3. Outline

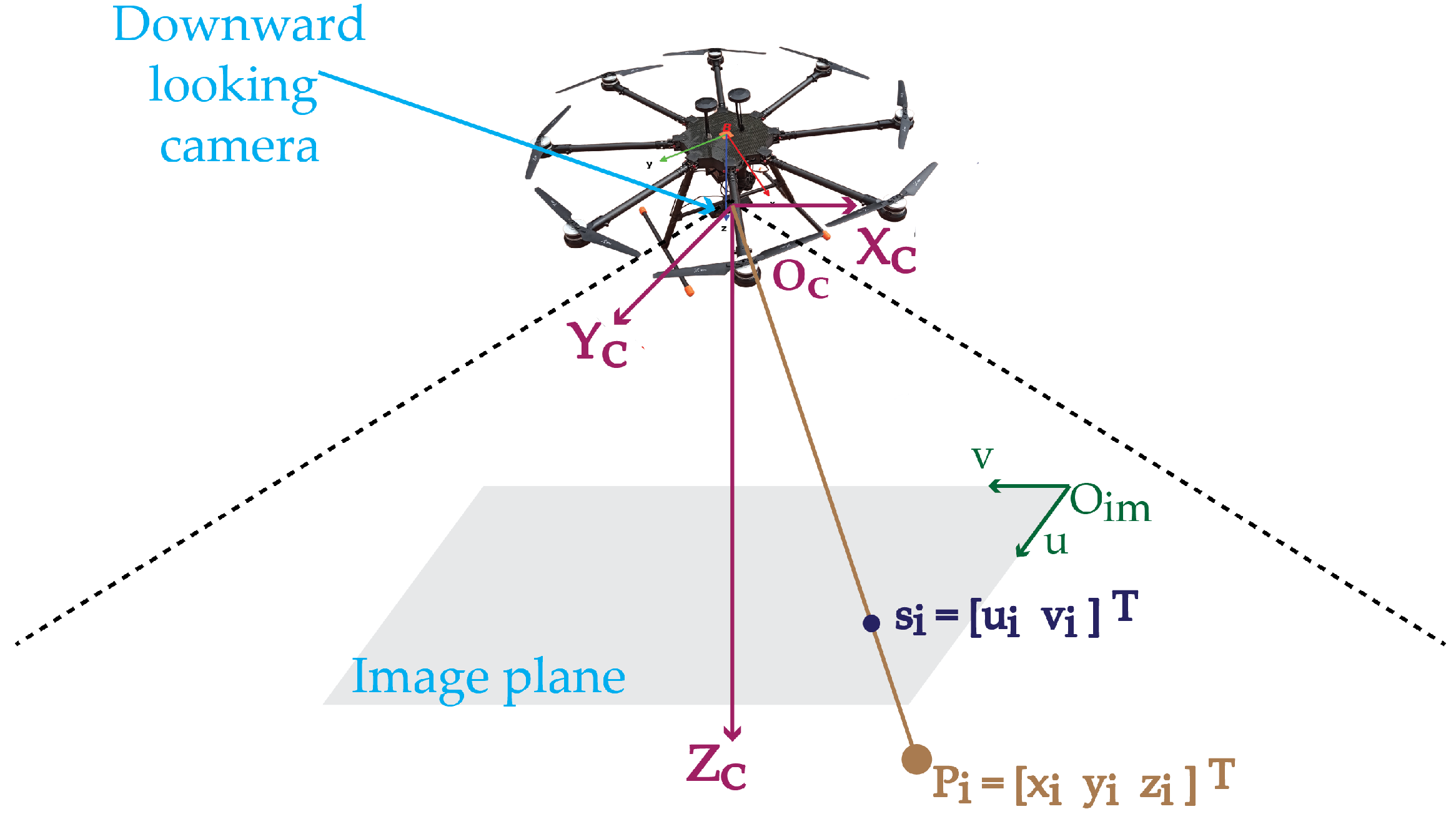

2. Preliminaries

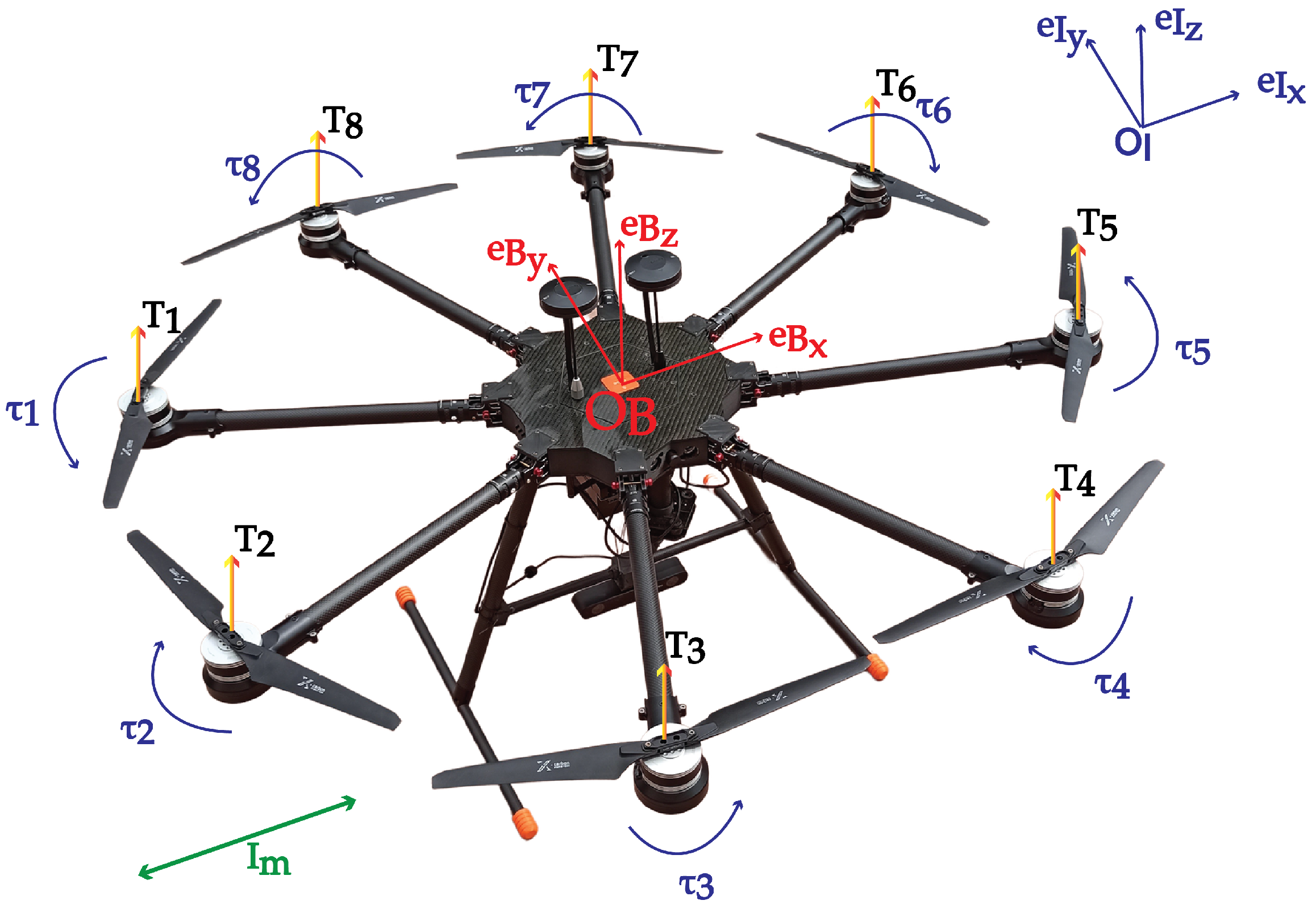

2.1. Multirotor Equations of Motion

2.2. Multirotor Low-Level Control

- an inner loop executing attitude control, which takes roll, pitch, yaw, and throttle references as input,

- an outer loop executing translational motion control, which takes the desired position or velocity as input references.
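
The two-loop structure can be illustrated with a minimal sketch. The proportional gains, the tilt limit, the small-angle mapping from desired acceleration to roll and pitch, the forward-left-up body frame convention, and the function names `outer_loop` and `inner_loop` are all assumptions made for illustration; they do not describe the autopilot's actual implementation.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]


def outer_loop(vel_ref, vel, yaw, kp=1.0, max_tilt=math.radians(20)):
    """Outer loop: horizontal velocity error -> roll/pitch references [rad].

    Assumes a forward-left-up body frame and small tilt angles.
    """
    # Desired horizontal acceleration in the world frame (P control, assumed gain).
    ax = kp * (vel_ref[0] - vel[0])
    ay = kp * (vel_ref[1] - vel[1])
    # Rotate the acceleration into the yaw-aligned (level) frame.
    ax_b = math.cos(yaw) * ax + math.sin(yaw) * ay
    ay_b = -math.sin(yaw) * ax + math.cos(yaw) * ay
    # Small-angle approximation: a_x ~ g * pitch, a_y ~ -g * roll.
    pitch_ref = max(-max_tilt, min(max_tilt, ax_b / G))
    roll_ref = max(-max_tilt, min(max_tilt, -ay_b / G))
    return roll_ref, pitch_ref


def inner_loop(att_ref, att, kp=4.0):
    """Inner loop: attitude error (roll, pitch, yaw) -> body-rate commands [rad/s]."""
    return tuple(kp * (r - a) for r, a in zip(att_ref, att))


# One control cycle: the velocity reference produces attitude references,
# which the faster inner loop then tracks.
roll_ref, pitch_ref = outer_loop((1.0, 0.0), (0.0, 0.0), yaw=0.0)
rate_cmd = inner_loop((roll_ref, pitch_ref, 0.0), (0.0, 0.0, 0.0))
```

In practice the inner loop runs at a higher rate than the outer loop, so the attitude references can be treated as nearly constant between outer-loop updates.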

3. Problem Statement

- Detection of the features belonging to the coastline through a CNN-based online estimator.

- Estimation of the feature flow caused by the wave-induced motion of the coastline, through the proposed hybrid model-based (MB)/data-driven (DD) real-time estimator.

- Development of a feature trajectory planning term in image space that is integrated into the overall control scheme and moves the vehicle along the shoreline.

- Formulation of a PVS tracking controller that drives the error close to zero despite camera calibration and depth measurement errors (i.e., the focal lengths and the feature depths are not precisely estimated).

4. Materials and Methods

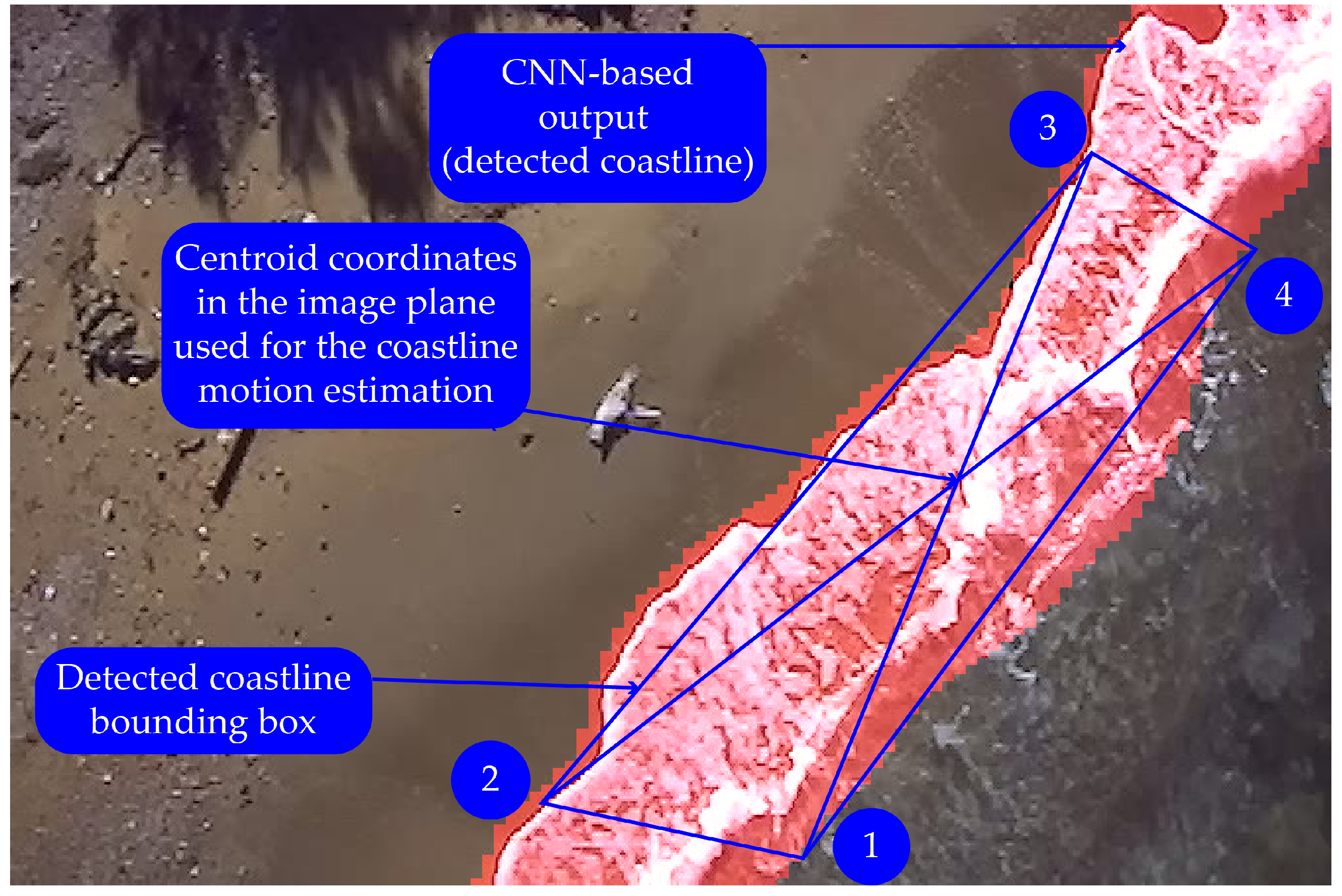

4.1. CNN-Based Coastline Detection

- The coastline was annotated with polygons during the labeling procedure.

- Binary masks were generated from the annotated features.

- The frames were resized from pixels to pixels.

- Two-class classification (Class 0: Sea and Ground as black background on the mask and Class 1: Coastline).

- The training and validation sets were enhanced using a variety of augmentation methods.
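
The data preparation steps above can be sketched as follows. This is a minimal NumPy-only illustration, not the authors' pipeline: the polygon coordinates, the image sizes, and the helper names `polygon_to_mask`, `resize_nearest`, and `flip_pair` are hypothetical, and a horizontal flip stands in for the unspecified augmentation methods.

```python
import numpy as np


def polygon_to_mask(polygon, height, width):
    """Rasterize a polygon [(x, y), ...] into a binary mask (even-odd rule).

    Returns a uint8 array with 1 = coastline and 0 = sea/ground background.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    px, py = xs + 0.5, ys + 0.5  # test pixel centres
    inside = np.zeros((height, width), dtype=bool)
    n = len(polygon)
    for i in range(n):
        x0, y0 = polygon[i]
        x1, y1 = polygon[(i + 1) % n]
        if y0 == y1:
            continue  # a horizontal edge never crosses a horizontal ray
        crosses = (y0 > py) != (y1 > py)
        x_at = x0 + (py - y0) * (x1 - x0) / (y1 - y0)
        inside ^= crosses & (px < x_at)
    return inside.astype(np.uint8)


def resize_nearest(img, new_h, new_w):
    """Nearest-neighbour resize; keeps mask labels binary."""
    rows = np.arange(new_h) * img.shape[0] // new_h
    cols = np.arange(new_w) * img.shape[1] // new_w
    return img[rows][:, cols]


def flip_pair(image, mask):
    """Apply the same horizontal flip to a frame and its mask."""
    return image[:, ::-1], mask[:, ::-1]


# Hypothetical 6x8 frame with a rectangular coastline annotation.
frame = np.arange(6 * 8).reshape(6, 8)
mask = polygon_to_mask([(2, 1), (6, 1), (6, 4), (2, 4)], height=6, width=8)
small_mask = resize_nearest(mask, 3, 4)
flipped_frame, flipped_mask = flip_pair(frame, mask)
```

Any geometric augmentation has to be applied identically to the frame and its mask, otherwise the labels no longer align with the pixels they describe.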

4.2. CNN-Based Coastline Optical Flow Estimation

4.3. EKF-Based Coastline Motion Estimation

- The bounding box centroid, which is part of the shoreline, is the projection of a water particle with a given rest location, and therefore follows the Gerstner wave model.

- We assume that a single dominant frequency determines the wave’s amplitude, while the other frequencies contribute negligibly and may thus be ignored.

- The waves’ direction is constant over time. Constant phase terms appear in the sinusoidal terms of the surface position components.
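
Under these assumptions, the position of a surface particle can be sketched with the standard single-frequency Gerstner (trochoidal) wave. The function name `gerstner_particle`, its parameter names, and the use of a single shared phase term are assumptions made for illustration; the state definition used by the EKF in this work may differ.

```python
import numpy as np


def gerstner_particle(rest_xy, t, amplitude, wavelength, direction, omega, phase=0.0):
    """Position (x, y, z) at time t of a particle whose rest location is rest_xy.

    Single dominant frequency, constant propagation direction.
    """
    rest = np.asarray(rest_xy, dtype=float)
    d = np.asarray(direction, dtype=float)
    d = d / np.linalg.norm(d)            # unit propagation direction
    kappa = 2.0 * np.pi / wavelength     # wavenumber
    theta = kappa * d.dot(rest) - omega * t + phase
    horiz = rest - d * amplitude * np.sin(theta)
    z = amplitude * np.cos(theta)
    return np.array([horiz[0], horiz[1], z])


# The particle moves on a circle of radius `amplitude` around its rest location.
p0 = gerstner_particle((0.0, 0.0), t=0.0, amplitude=0.5, wavelength=10.0,
                       direction=(1.0, 0.0), omega=2.0)
```

Because the horizontal and vertical displacements are a sine and a cosine of the same argument, the particle orbits its rest location rather than travelling with the wave, which is what makes the rest location a useful constant in a motion model.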

4.3.1. System Model

4.3.2. Measurement Model

4.3.3. State Update

4.4. Neural Network Aided Kalman Filtering for Coastline Motion Estimation

4.4.1. Preliminaries

- The distributions of the process and measurement noise signals are unknown.

- The state-evolution and measurement functions may only approximate the true underlying dynamics. Such approximations can arise from representing continuous-time dynamics in discrete time, acquiring data with misaligned sensors, and other types of mismatch.
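
For context, a generic EKF predict/update step is sketched below. It shows where the noise covariances `Q` and `R` enter the gain computation, which is the part that cannot be carried out reliably when the noise statistics are unknown; in a neural-network-aided filter of the KalmanNet type, the gain is produced by a trained network instead. The function name `ekf_step` and its argument names are hypothetical, and the sketch is not the specific filter formulated in this work.

```python
import numpy as np


def ekf_step(x, P, y, f, h, F_jac, H_jac, Q, R):
    """One EKF iteration with (possibly approximate) models f and h."""
    # Prediction with the state-evolution model.
    x_prior = f(x)
    F = F_jac(x)
    P_prior = F @ P @ F.T + Q
    # Update with the measurement model.
    H = H_jac(x_prior)
    innovation = y - h(x_prior)
    S = H @ P_prior @ H.T + R
    K = P_prior @ H.T @ np.linalg.inv(S)  # gain depends on Q and R
    x_post = x_prior + K @ innovation
    P_post = (np.eye(len(x)) - K @ H) @ P_prior
    return x_post, P_post, x_prior, K


# Scalar example with identity models.
x_post, P_post, x_prior, K = ekf_step(
    x=np.array([0.0]), P=np.eye(1), y=np.array([2.0]),
    f=lambda x: x, h=lambda x: x,
    F_jac=lambda x: np.eye(1), H_jac=lambda x: np.eye(1),
    Q=np.zeros((1, 1)), R=np.eye(1),
)
```

With equal prior and measurement uncertainty, the gain is 0.5 and the posterior lands halfway between the prediction and the measurement.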

4.4.2. Hybrid MB/DD Real-Time Estimator Formulation

4.4.3. Simulator

- Safety: increased risk of vehicle crash during the early testing of prototype autonomous flight algorithms.

- Logistics during rapid prototyping: experiments along the coast cannot be conducted frequently (e.g., every day).

- Navigation sensors (GPS, IMU, altimeter, etc.)

- Downward-looking stereo camera system (ZED 2), providing frame-based image data

- Position of the pixels belonging to the coastline, which results from the outcome of the CNN-based coastline detection module.

- Approximation of the vector, which results from the outcome of the CNN-based optical flow of the pixels belonging to the detected coastline after subtracting the vehicle velocity.
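
These two quantities can be extracted from the network outputs roughly as follows. This is a sketch under stated assumptions: `flow` is a dense optical flow field, `mask` is the binary coastline mask, `ego_flow` is the image-plane flow induced by the vehicle's own motion (how it is computed is not shown here), and the flow is averaged over the coastline pixels. The function name `coastline_measurements` is hypothetical.

```python
import numpy as np


def coastline_measurements(flow, mask, ego_flow):
    """Return coastline pixel positions (u, v) and their mean residual flow.

    flow:     (H, W, 2) dense optical flow in pixels per frame
    mask:     (H, W) binary coastline mask
    ego_flow: (2,) or (H, W, 2) flow induced by the vehicle motion
    """
    idx = mask.astype(bool)
    if not idx.any():
        return None  # no coastline detected in this frame
    pixels_uv = np.argwhere(idx)[:, ::-1]  # (row, col) -> (u, v)
    residual = flow - ego_flow             # remove vehicle-induced motion
    return pixels_uv, residual[idx].mean(axis=0)


# Hypothetical 4x5 frame with uniform flow and two coastline pixels.
flow = np.zeros((4, 5, 2))
flow[..., 0], flow[..., 1] = 3.0, 1.0
mask = np.zeros((4, 5), dtype=np.uint8)
mask[1, 2] = mask[2, 3] = 1
pixels_uv, coast_flow = coastline_measurements(flow, mask, np.array([1.0, 0.5]))
```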

4.4.4. Training & Deployment

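- Feature 1: The measurement difference, i.e., the difference between two successive measurements.

- Feature 2: The innovation difference, i.e., the difference between the current measurement and its prediction.

- Feature 3: The forward evolution difference, i.e., the difference between two successive posterior state estimates. Since the posterior at time instance t is not yet available when the feature is needed, the value from the previous time step is used.

- Feature 4: The forward update difference, i.e., the difference between the posterior state estimate and the prior state estimate, where the value from the previous time step is used for time step t.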
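
The four features can be computed as in the sketch below. The indexing follows the convention of the KalmanNet formulation, in which Features 3 and 4 are lagged by one step; the function name `gain_features` and the argument names are hypothetical, and the exact notation used in this work is not recoverable from the text.

```python
import numpy as np


def gain_features(y_t, y_prev, y_pred, x_post_prev, x_post_prev2, x_prior_prev):
    """Input features for the learned gain at time step t.

    y_t, y_prev:   measurements at t and t-1
    y_pred:        predicted measurement at t (from the prior state estimate)
    x_post_prev:   posterior state estimate at t-1
    x_post_prev2:  posterior state estimate at t-2
    x_prior_prev:  prior state estimate at t-1
    """
    f1 = y_t - y_prev                  # Feature 1: measurement difference
    f2 = y_t - y_pred                  # Feature 2: innovation difference
    f3 = x_post_prev - x_post_prev2    # Feature 3: forward evolution difference (lagged)
    f4 = x_post_prev - x_prior_prev    # Feature 4: forward update difference (lagged)
    return f1, f2, f3, f4


f1, f2, f3, f4 = gain_features(
    y_t=np.array([5.0]), y_prev=np.array([4.0]), y_pred=np.array([4.5]),
    x_post_prev=np.array([2.0, 1.0]), x_post_prev2=np.array([1.5, 1.0]),
    x_prior_prev=np.array([1.8, 0.9]),
)
```

Features 1 and 2 carry information about the measurement uncertainty, while Features 3 and 4 reflect the uncertainty of the state estimate, which is why such networks are typically fed a combination of them.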

4.5. PVS Control Strategy

4.5.1. Control Development

- Successful tracking of a dynamic coastline.

- Handling of the motion caused by the coastline waves.

- Maintenance of the coastline as close as possible to the center of the camera’s FoV.
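
The third objective can be illustrated with a generic partitioned image-based visual servoing step for a single point feature. This is a textbook-style sketch and not the robust PVS controller developed in this section: it uses the classical point-feature interaction matrix, assumes the depth `Z` and the focal lengths are known exactly, and ignores wave motion and trajectory planning. The function names and the vector `v_rest` (holding the remaining camera velocity components vz, wx, wy, wz) are hypothetical.

```python
import numpy as np


def point_interaction_matrix(x, y, Z):
    """Classical 2x6 interaction matrix of a normalized image point at depth Z."""
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ])


def partitioned_xy_velocity(uv, center, focal, Z, v_rest=None, gain=0.5):
    """Camera-frame (vx, vy) that drives the feature toward the image centre.

    The remaining velocity components are treated as given and compensated.
    """
    v_rest = np.zeros(4) if v_rest is None else np.asarray(v_rest, dtype=float)
    x = (uv[0] - center[0]) / focal[0]   # normalized image coordinates
    y = (uv[1] - center[1]) / focal[1]
    e = np.array([x, y])                 # desired feature = image centre
    L = point_interaction_matrix(x, y, Z)
    L_xy, L_rest = L[:, :2], L[:, 2:]
    # Impose e_dot = -gain * e and solve for the partitioned (vx, vy) part.
    return np.linalg.solve(L_xy, -gain * e - L_rest @ v_rest)


# Feature 50 px to the right of the centre, 10 m below the camera.
v_xy = partitioned_xy_velocity(uv=(370.0, 188.0), center=(320.0, 188.0),
                               focal=(500.0, 500.0), Z=10.0)
```

Here the camera is commanded to move toward the side on which the feature appears, which shifts the feature back toward the image centre.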

4.5.2. Stability Analysis

4.5.3. Implementation Details

Error Feedback

Level Frame Mapping

Quadrotor Under-Actuation

5. Results

5.1. Experimental Setup

5.2. Experimental Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | 1st Exp. Scenario | 2nd Exp. Scenario | 3rd Exp. Scenario |
|---|---|---|---|
| u-axis error fluctuation (in pixels) | 80–170 | 60–80 | 7–22 |
| u-axis error fluctuation (%) | 24–50 | 18–24 | 2–6 |
| v-axis error fluctuation (in pixels) | 8–35 | 8–20 | 2–8 |
| v-axis error fluctuation (%) | 10–20 | 6–14 | 1–4 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aspragkathos, S.N.; Karras, G.C.; Kyriakopoulos, K.J. A Hybrid Model and Data-Driven Vision-Based Framework for the Detection, Tracking and Surveillance of Dynamic Coastlines Using a Multirotor UAV. Drones 2022, 6, 146. https://doi.org/10.3390/drones6060146
