A Global Multi-Scale Channel Adaptation Network for Pine Wilt Disease Tree Detection on UAV Imagery by Circle Sampling

Abstract

1. Introduction

2. Materials and Methods

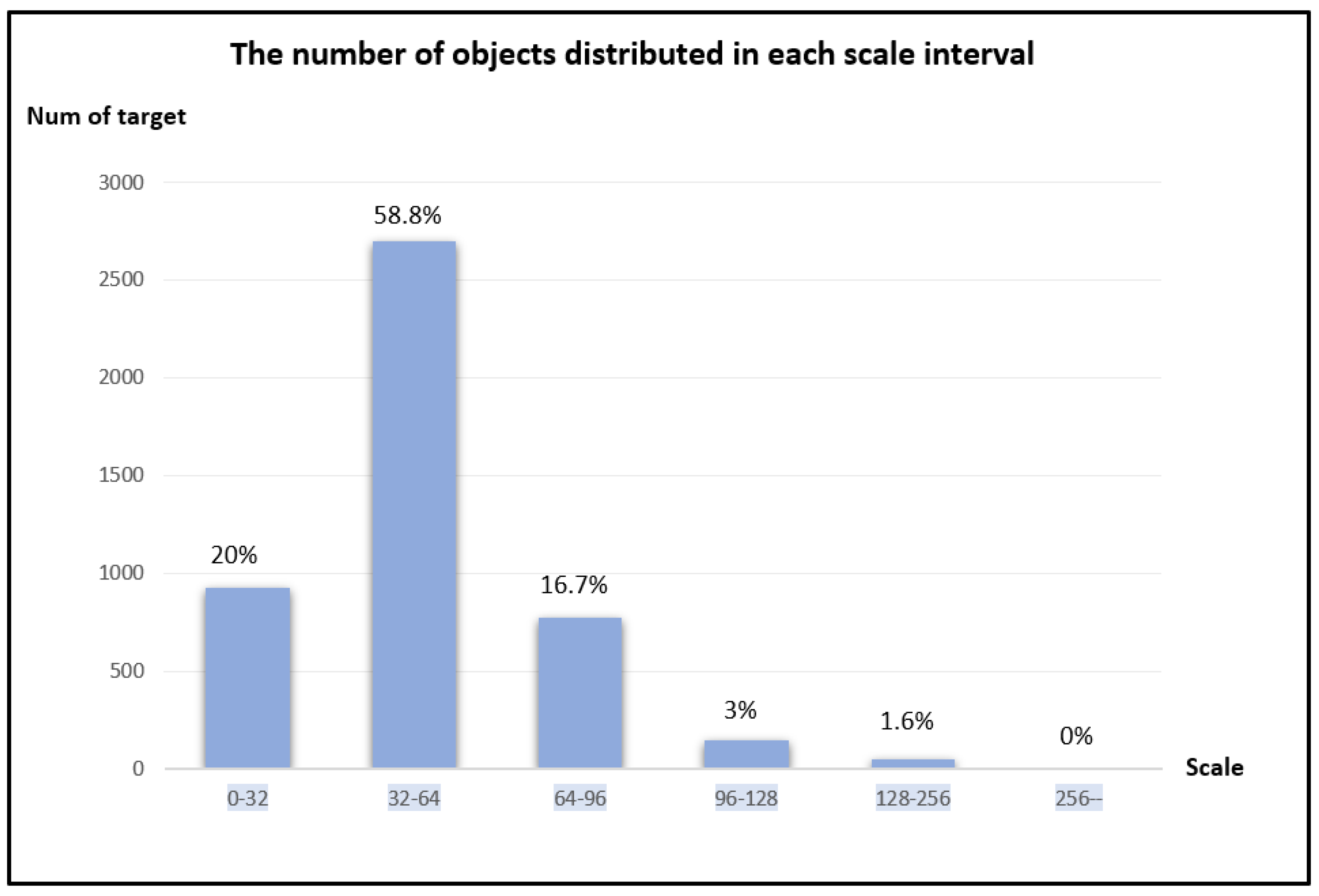

2.1. Data Acquisition and Dataset Production

2.2. Experimental Environment

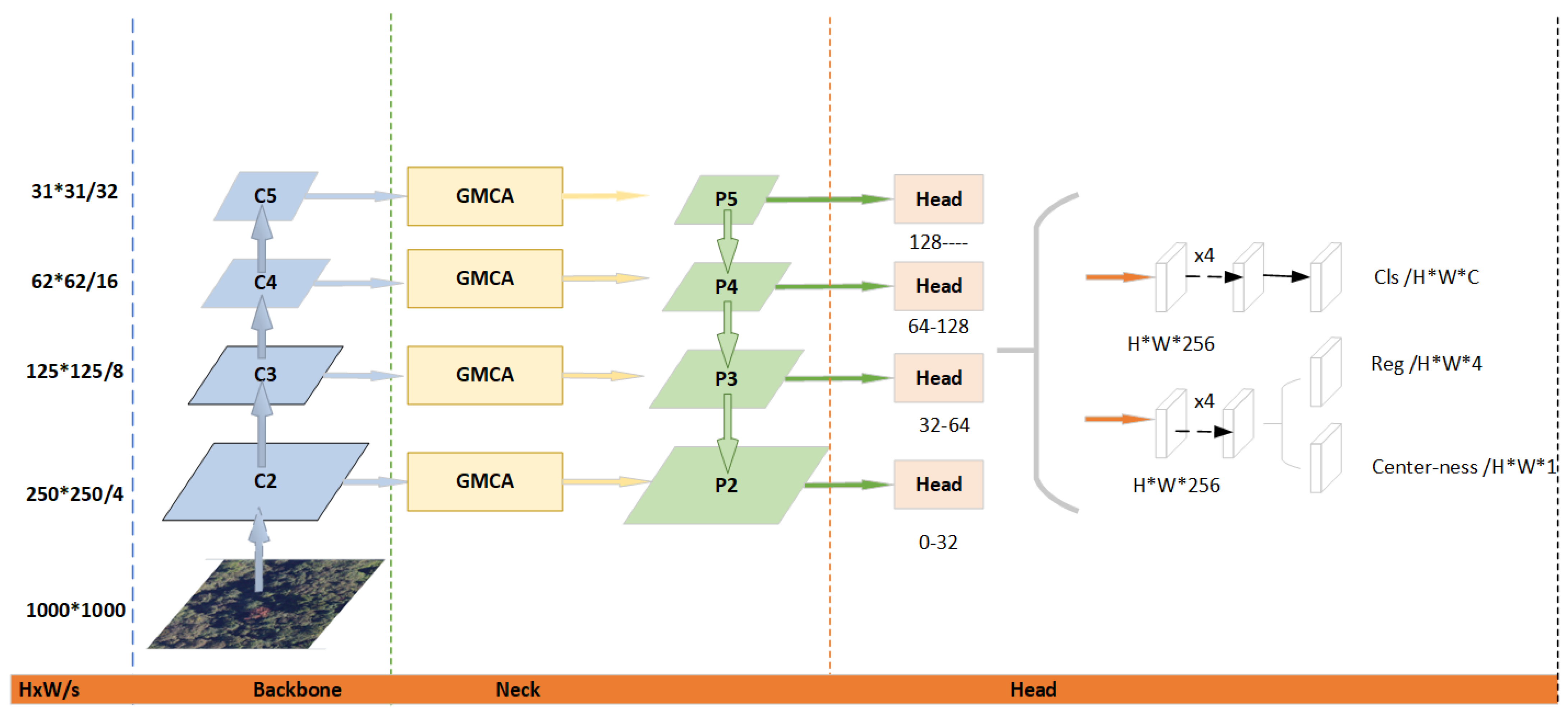

2.3. Detection Algorithm Model

2.3.1. The Global Multi-Scale Channel Attention Network

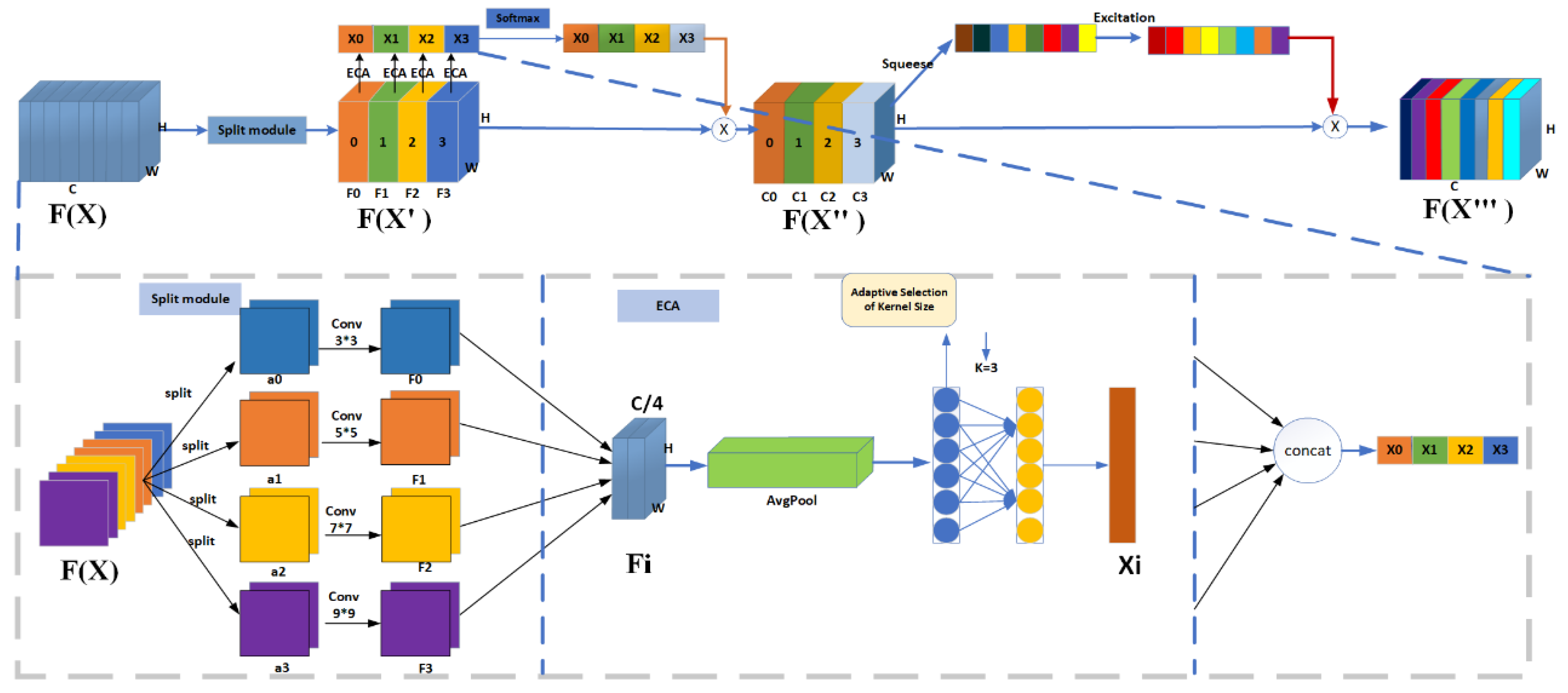

2.3.2. The Global Multi-Scale Channel Attention (GMCA)

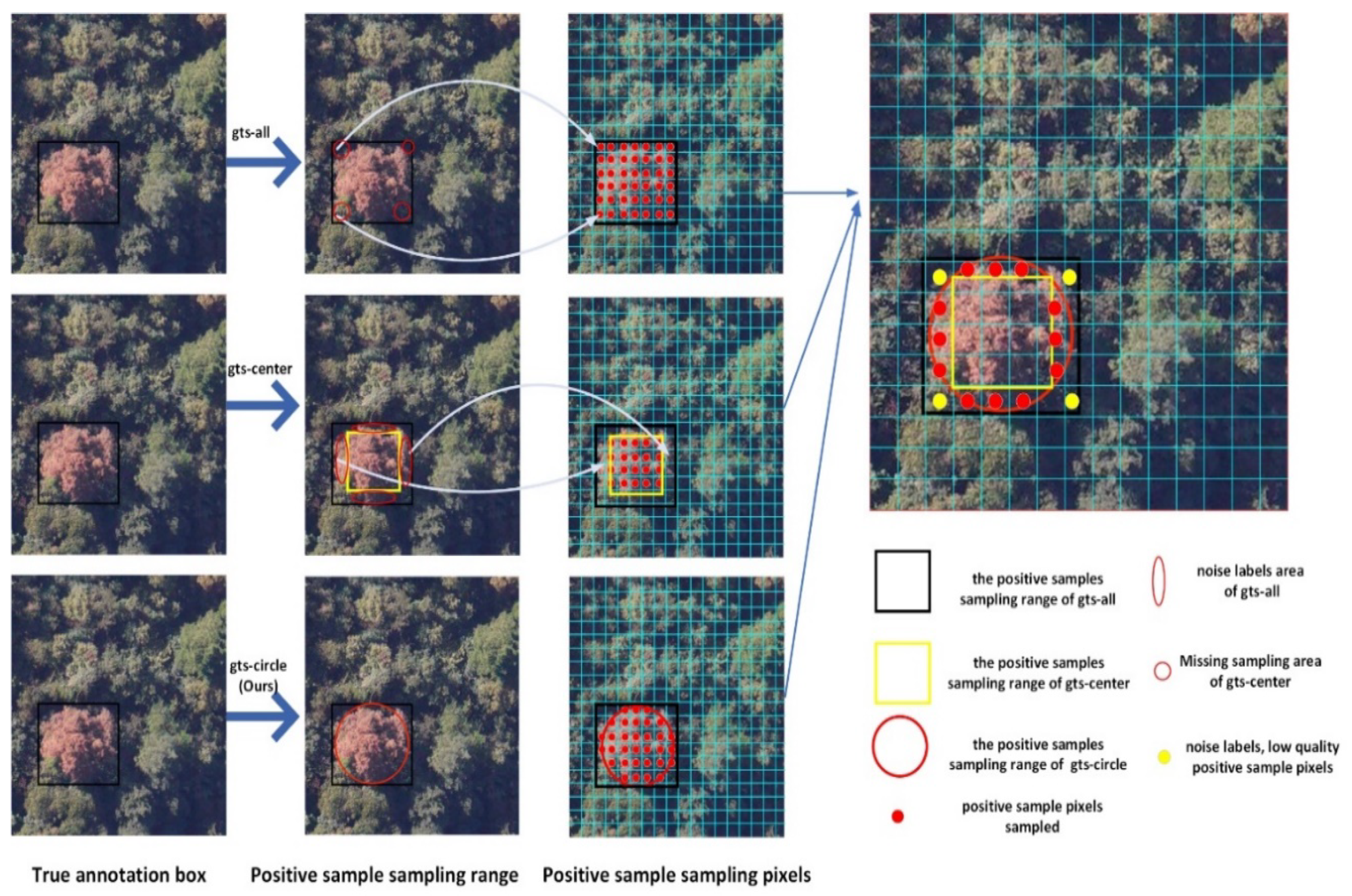

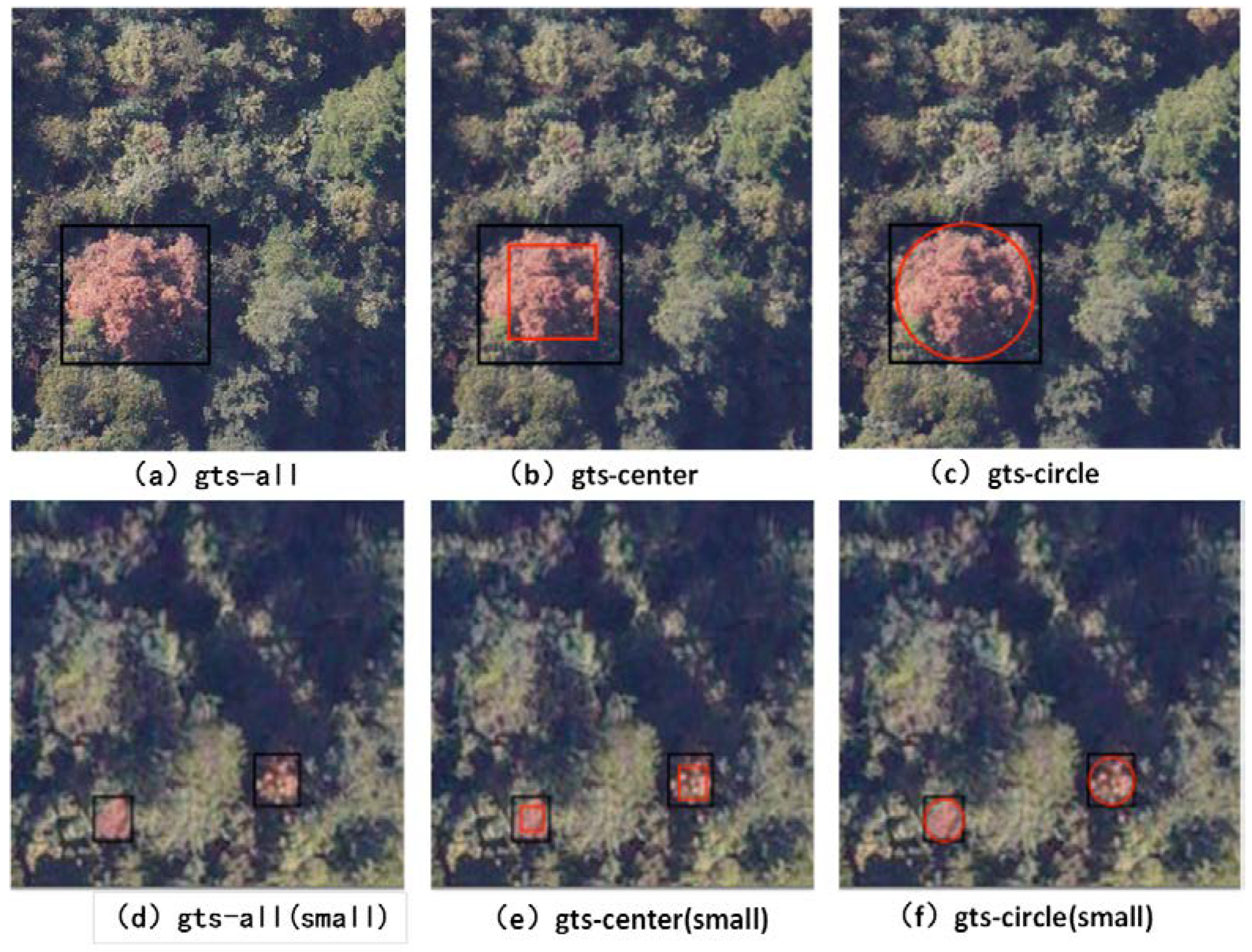

2.3.3. Gts-Circle Sampling

3. Results

3.1. Evaluation Metric

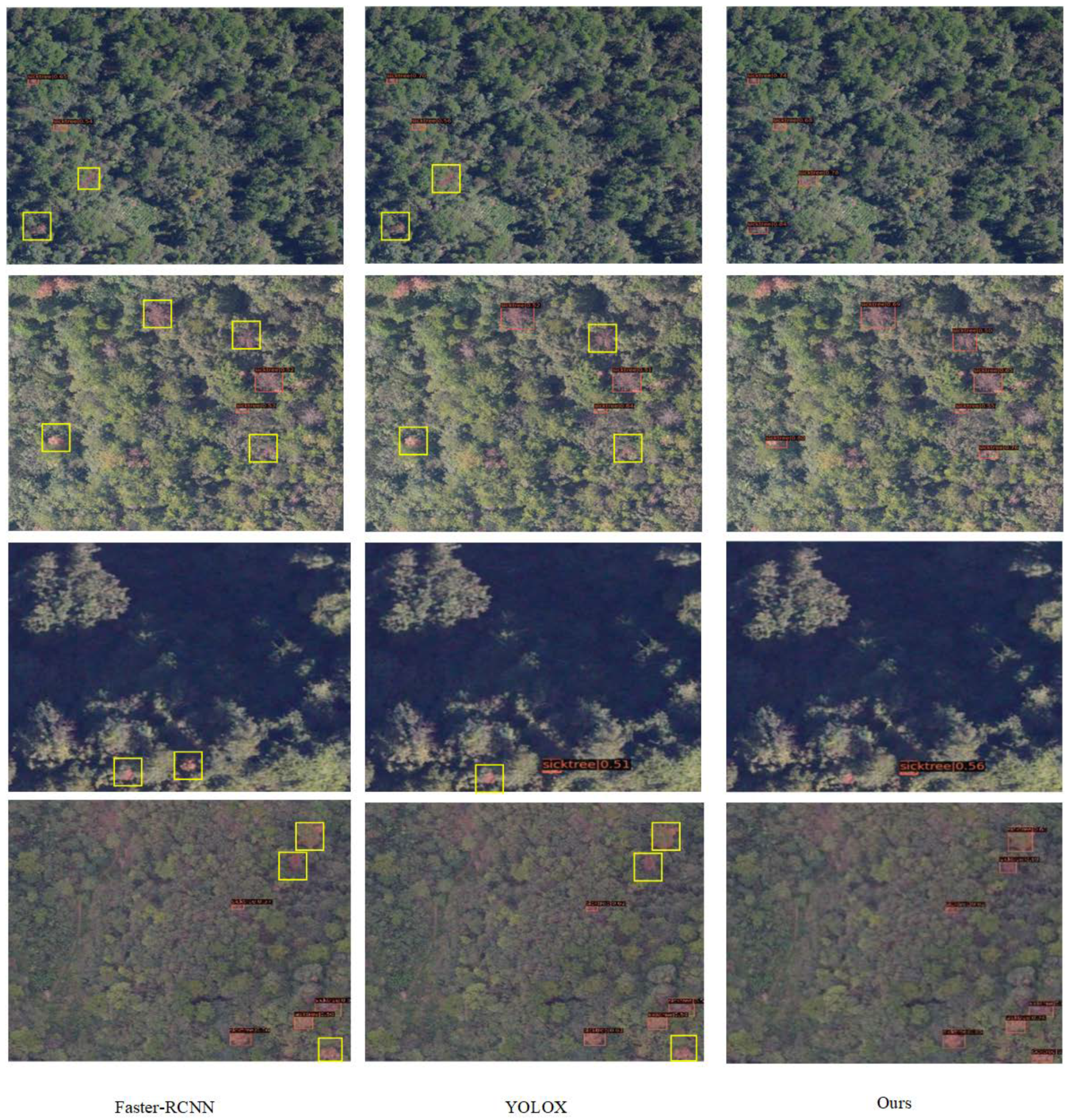

3.2. Comparative Experiments

3.3. Ablation Study

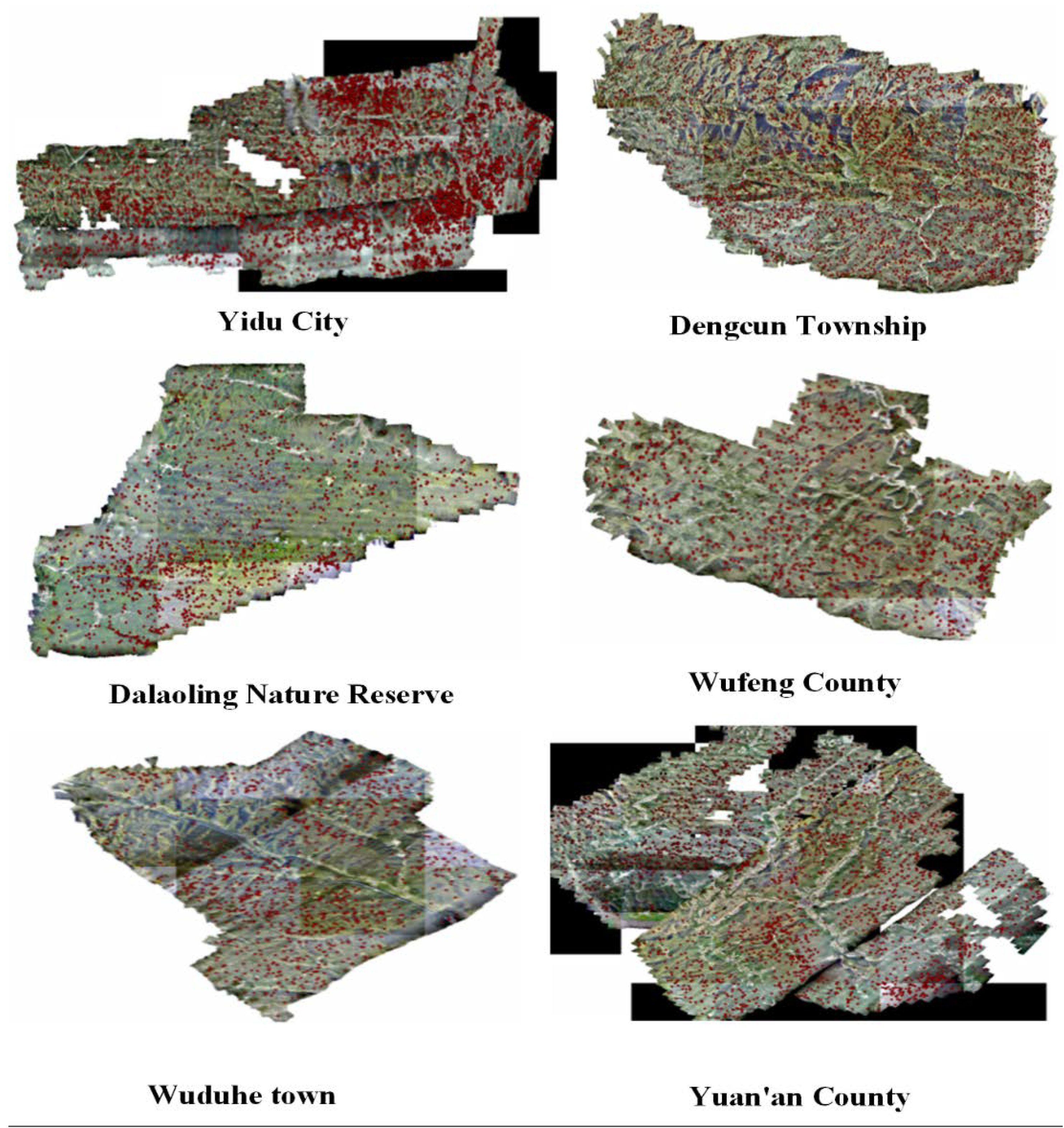

3.4. Application Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Dataset | Number of Pictures | GT Number | Average Target Number |
|---|---|---|---|
| Training set | 1612 | 3415 | 2.1 |
| Validation set | 200 | 380 | 1.9 |
| Test set | 202 | 427 | 2.1 |
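
The last column of the dataset table can be reproduced from the other two. The following is a minimal sketch, assuming that "Ave Target Number" means ground-truth (GT) boxes divided by pictures; the function name `average_targets` is hypothetical and used only for illustration.

```python
# Split sizes and ground-truth (GT) box counts copied from the table above.
splits = {
    "Training set": (1612, 3415),
    "Validation set": (200, 380),
    "Test set": (202, 427),
}


def average_targets(num_pictures: int, num_gt: int) -> float:
    """Mean number of GT boxes per picture, rounded to one decimal place."""
    return round(num_gt / num_pictures, 1)


# Per-split averages, which should match the table's last column.
averages = {name: average_targets(n, gt) for name, (n, gt) in splits.items()}

# Totals over all three splits.
total_pictures = sum(n for n, _ in splits.values())
total_gt = sum(gt for _, gt in splits.values())

for name, value in averages.items():
    print(f"{name}: {value} targets per picture")
print(f"Total: {total_pictures} pictures, {total_gt} GT boxes")
```

Under that assumption the three splits come out at roughly two targets per picture, so the validation and test sets have a similar target density to the training set.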

| Network (Year) | Backbone | Recall (Score = 0.5) | AP (IoU = 0.5) |
|---|---|---|---|
| CenterNet (2019) | ResNet18 | 71.6 | 77.5 |
| FoveaBox (2020) | ResNet50 | 80.3 | 78.7 |
| YOLOX (2021) | CSPDarknet53 | 83.1 | 79.5 |
| YOLOv5 (2020) | CSPDarknet53 | 79.0 | 78.5 |
| Faster-RCNN (2015) | ResNet50 | 84.7 | 79.2 |
| RetinaNet (2017) | ResNet50 | 82.5 | 77.6 |
| YOLOv6 (2022) | EfficientRep | 80.5 | 73.6 |
| Ours | ResNet50 | 86.6 | 79.8 |

| Network | Number of True Targets | Number Detected | Number Missed |
|---|---|---|---|
| Faster-RCNN | 427 | 358 | 69 |
| YOLOX | 427 | 353 | 74 |
| Ours | 427 | 373 | 54 |

| Network | L: Size > 96 × 96 | M: Size 32 × 32–96 × 96 | S: Size < 32 × 32 |
|---|---|---|---|
| Faster-RCNN | 4 | 12 | 53 |
| YOLOX | 16 | 26 | 32 |
| Ours | 0 | 8 | 46 |
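
The two tables above are linked: for each network, the per-size counts add up to the number of missed targets. The following is a minimal sketch that checks this. It assumes that S means smaller than 32 × 32 pixels and L means larger than 96 × 96 pixels, compared by box area in the COCO style; the helper `size_bucket` is hypothetical and not taken from the paper.

```python
TOTAL_TRUE = 427  # GT boxes in the test set, from the detection-count table

# Detected targets per network, copied from the detection-count table.
detected = {"Faster-RCNN": 358, "YOLOX": 353, "Ours": 373}

# Per-size counts, copied from the size table.
missed_by_size = {
    "Faster-RCNN": {"L": 4, "M": 12, "S": 53},
    "YOLOX": {"L": 16, "M": 26, "S": 32},
    "Ours": {"L": 0, "M": 8, "S": 46},
}


def size_bucket(width: float, height: float) -> str:
    """Assign a box to S/M/L by pixel area (assumed COCO-style thresholds)."""
    area = width * height
    if area < 32 * 32:
        return "S"
    if area > 96 * 96:
        return "L"
    return "M"


# Missed targets are the GT boxes that were not detected.
missed_total = {name: TOTAL_TRUE - n for name, n in detected.items()}

# Fraction of each network's misses that fall in the small bucket.
small_share = {}
for name, sizes in missed_by_size.items():
    # The per-size counts must account for every missed target.
    assert sum(sizes.values()) == missed_total[name]
    small_share[name] = sizes["S"] / missed_total[name]

for name, share in small_share.items():
    print(f"{name}: {missed_total[name]} missed, {share:.0%} of them small")
```

The check passes for all three networks, which indicates that the size table is a breakdown of the missed targets rather than of the detected ones.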

| Module | Recall | AP |
|---|---|---|
| FCOS (gts-all) | 83.1 | 77.6 |
| FCOS + gts-center | 80.2 | 78.3 |
| FCOS + gts-circle | 84.3 | 78.4 |
| FCOS + GMCA | 83.5 | 79.1 |
| FCOS + GMCA + gts-circle | 86.6 | 79.8 |

| Class | Faster-RCNN | Faster-RCNN + GMCA | FCOS | FCOS + GMCA |
|---|---|---|---|---|
| person | 79.9 | 77.0 | 80.2 | 80.5 |
| aeroplane | 79.1 | 79.6 | 79.4 | 79.8 |
| tvmonitor | 66.6 | 67.0 | 65.9 | 65.5 |
| train | 72.7 | 76.9 | 77.2 | 79.4 |
| boat | 52.7 | 51.1 | 49.3 | 51.2 |
| dog | 83.8 | 86.5 | 82.0 | 83.9 |
| chair | 50.5 | 51.5 | 52.1 | 52.6 |
| bird | 73.8 | 74.9 | 75.3 | 75.3 |
| bicycle | 73.6 | 75.9 | 72.4 | 72.7 |
| bottle | 50.8 | 50.6 | 51.9 | 53.6 |
| sheep | 72.6 | 71.4 | 71.6 | 70.8 |
| diningtable | 52.9 | 55.0 | 51.3 | 50.1 |
| horse | 74.3 | 79.7 | 76.3 | 77.4 |
| motorbike | 76.6 | 75.5 | 74.0 | 76.4 |
| sofa | 56.5 | 61.1 | 56.8 | 59.7 |
| cow | 67.8 | 70.2 | 62.1 | 64.8 |
| car | 69.7 | 69.8 | 71.4 | 70.8 |
| cat | 86.3 | 88.6 | 85.1 | 87.0 |
| bus | 76.2 | 76.8 | 78.0 | 76.8 |
| pottedplant | 41.9 | 40.2 | 42.7 | 43.5 |
| mAP | 67.9 | 69.0 | 67.8 | 68.6 |
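
The mAP row is the unweighted mean of the 20 per-class AP values in each column. The following is a minimal sketch that recomputes it from the table. The dictionary keys are shorthand labels for the four columns, and the 0.06 tolerance is an assumption that allows for the one-decimal rounding of the published values.

```python
from statistics import mean

# Per-class AP values copied from the table above, in row order
# (person, aeroplane, ..., pottedplant). The mAP row is kept separately.
per_class_ap = {
    "Faster": [79.9, 79.1, 66.6, 72.7, 52.7, 83.8, 50.5, 73.8, 73.6, 50.8,
               72.6, 52.9, 74.3, 76.6, 56.5, 67.8, 69.7, 86.3, 76.2, 41.9],
    "Faster + GMCA": [77.0, 79.6, 67.0, 76.9, 51.1, 86.5, 51.5, 74.9, 75.9, 50.6,
                      71.4, 55.0, 79.7, 75.5, 61.1, 70.2, 69.8, 88.6, 76.8, 40.2],
    "FCOS": [80.2, 79.4, 65.9, 77.2, 49.3, 82.0, 52.1, 75.3, 72.4, 51.9,
             71.6, 51.3, 76.3, 74.0, 56.8, 62.1, 71.4, 85.1, 78.0, 42.7],
    "FCOS + GMCA": [80.5, 79.8, 65.5, 79.4, 51.2, 83.9, 52.6, 75.3, 72.7, 53.6,
                    70.8, 50.1, 77.4, 76.4, 59.7, 64.8, 70.8, 87.0, 76.8, 43.5],
}

# mAP values as printed in the last row of the table.
reported_map = {"Faster": 67.9, "Faster + GMCA": 69.0,
                "FCOS": 67.8, "FCOS + GMCA": 68.6}

# mAP is the plain average over classes, with every class weighted equally.
computed_map = {name: mean(values) for name, values in per_class_ap.items()}

for name, value in computed_map.items():
    gap = abs(value - reported_map[name])
    status = "consistent" if gap < 0.06 else "check"
    print(f"{name}: computed {value:.2f}, reported {reported_map[name]} ({status})")
```

Because every class carries the same weight, a large gain on one class (for example horse or sofa in the first two columns) can offset small losses on several others.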

| Region | Number Detected | Area (km²) |
|---|---|---|
| Yidu City | 6159 | 211.94 |
| Dengcun Township | 6578 | 95.4 |
| Wuduhe Town | 3265 | 125 |
| Dalaoling Nature Reserve | 1468 | 86 |
| Wufeng County | 186 | 24 |
| Yuan’an County | 2448 | 127 |
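
The regions differ widely in area, so the raw counts are easier to compare as detections per square kilometre. The following is a minimal sketch of that normalisation. The density figure is a derived quantity that does not appear in the table, and it assumes the listed area is the surveyed area for each region.

```python
# (number detected, area in km^2) copied from the table above.
regions = {
    "Yidu City": (6159, 211.94),
    "Dengcun Township": (6578, 95.4),
    "Wuduhe town": (3265, 125.0),
    "Dalaoling Nature Reserve": (1468, 86.0),
    "Wufeng County": (186, 24.0),
    "Yuan’an County": (2448, 127.0),
}

# Detections per km^2 for each region (derived, not reported in the table).
density = {name: count / area for name, (count, area) in regions.items()}

# Totals across all six regions.
total_detected = sum(count for count, _ in regions.values())
total_area = sum(area for _, area in regions.values())

# List regions from highest to lowest detection density.
for name in sorted(density, key=density.get, reverse=True):
    print(f"{name}: {density[name]:.1f} detections per km^2")
print(f"Overall: {total_detected} detections over {total_area:.2f} km^2")
```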

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ren, D.; Peng, Y.; Sun, H.; Yu, M.; Yu, J.; Liu, Z. A Global Multi-Scale Channel Adaptation Network for Pine Wilt Disease Tree Detection on UAV Imagery by Circle Sampling. Drones 2022, 6, 353. https://doi.org/10.3390/drones6110353