Directional Statistics-Based Deep Metric Learning for Pedestrian Tracking and Re-Identification

Abstract

1. Introduction

- Introducing a directional statistical distribution to pedestrian tracking and re-identification.

- A new object similarity measure based on pedestrian re-identification.

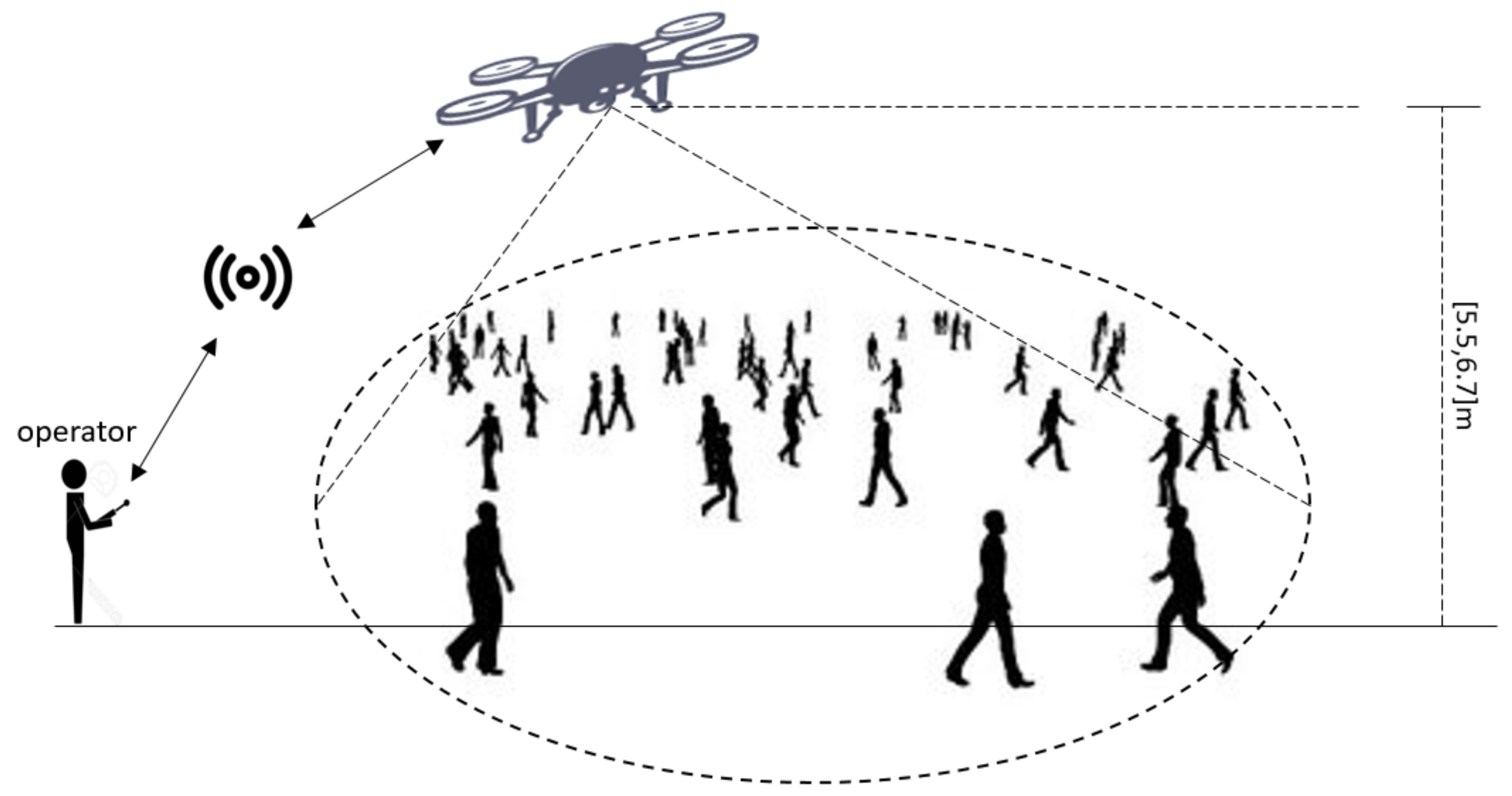

- An end-to-end framework for multiple object tracking and re-identification of pedestrians from aerial devices.

2. Related Work

2.1. Pedestrian Tracking

2.2. Tracking

2.2.1. Online Tracking Mode

2.2.2. Batch Tracking Mode

2.3. Deep Metric Learning

2.4. Pedestrian Re-Identification

2.5. Directional Statistics in Machine Learning

2.5.1. Von Mises-Fisher Distribution

2.5.2. Learning the Von Mises-Fisher Distribution

| Algorithm 1 vMF learning algorithm |
|---|
| 1. Initialize the CNN parameters. |
| 2. while (convergence not achieved) do: |
| 3. Estimate the mean directions using (8) and all the training data. |
| 4. Train the CNN for several iterations and update the CNN parameters. |
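Algorithm 1 alternates between re-estimating the class mean directions and training the network. The NumPy sketch below illustrates that alternation under several assumptions that are not taken from the text: the mean direction of each identity is computed as the normalized sum of its unit-norm features (the standard vMF maximum-likelihood estimate, which may differ in detail from Equation (8)), all classes share a single concentration `kappa`, and `embed` and `train_step` are hypothetical callables standing in for the CNN forward pass and its optimizer update.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Project the rows of x onto the unit hypersphere."""
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)


def estimate_mean_directions(features, labels, num_classes):
    """Per-class mean direction: normalized sum of unit-norm features."""
    unit = l2_normalize(features)
    sums = np.zeros((num_classes, features.shape[1]))
    np.add.at(sums, labels, unit)  # accumulate features class by class
    return l2_normalize(sums)


def vmf_class_log_posterior(features, means, kappa=16.0):
    """log p(class | x), assuming every class shares the concentration kappa.

    With a shared kappa the vMF normalizing constant cancels, leaving a
    softmax over kappa * cosine(x, mean direction).
    """
    logits = kappa * l2_normalize(features) @ means.T
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    return logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))


def vmf_learning(embed, train_step, images, labels, num_classes, rounds=3):
    """Alternate steps 3 and 4 of Algorithm 1 for a fixed number of rounds.

    embed(images) returns an (N, D) feature array; train_step(images, labels,
    means) updates the network in place. Both are hypothetical placeholders.
    """
    means = None
    for _ in range(rounds):  # fixed round count stands in for a convergence test
        means = estimate_mean_directions(embed(images), labels, num_classes)
        train_step(images, labels, means)
    return means
```

In a real training loop, `train_step` would minimize the negative of `vmf_class_log_posterior` for the true identity of each patch; the value of `kappa` here is purely illustrative.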

3. Dataset Description

4. Methodology

4.1. Multiple Object Tracking and Re-Identification Framework

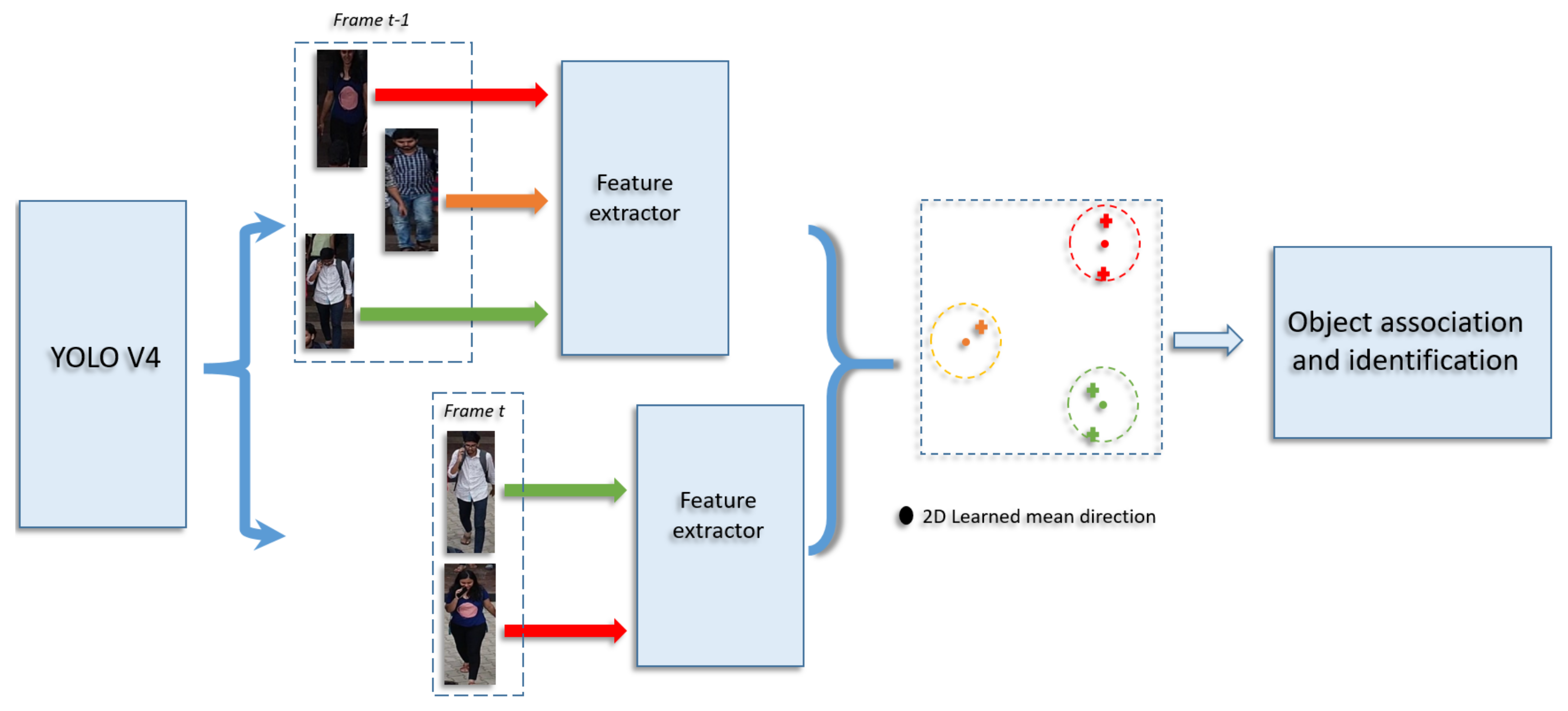

- We used YOLO V4 for real-time object detection. The YOLO V4 detector receives input videos frame by frame. As an output, the detection bounding boxes in each frame are obtained.

- Patches are cropped from each frame using the detected bounding boxes.

- These patches are resized to a unified shape (H, W, C), where H denotes the height, W the width, and C the number of channels. A feature vector is then generated for each detected object using the trained feature extractor.

- Using the feature vectors from frames t and , the object association and re-identification algorithm matches and recognizes the objects.

- YOLO V4: For each fold, we fine-tuned the pre-trained YOLO V4 architecture on the training set to detect only pedestrians. The detector's performance is monitored using the error on the validation set.

- Feature extractor: The feature extractor is composed of a base model and a header. We used Wide ResNet-50 (WRN) [38] as the base model and a header composed of two Fully connected Pooling Layers (FPL) of sizes [4096, 128], respectively. For each fold, training, validation, and test patch datasets are created from the training, validation, and test sets, respectively. The model is trained using the vMF learning algorithm (Algorithm 1). Figure 3 illustrates the training mechanism of the feature extractor.
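The cropping, resizing, and embedding steps listed above can be sketched as follows. This is only an illustration under stated assumptions: boxes are given as `(x1, y1, x2, y2)` pixel coordinates and overlap the frame, the target size `(256, 128)` is a hypothetical choice for (H, W), nearest-neighbor resizing is used to keep the example dependency-free, and `extractor` is a hypothetical callable standing in for the trained WRN-based feature extractor. Detection and the association step are not reproduced.

```python
import numpy as np


def crop_patch(frame, box):
    """Crop one detection; box = (x1, y1, x2, y2) in pixel coordinates."""
    h, w = frame.shape[:2]
    x1, y1, x2, y2 = (int(round(v)) for v in box)
    x1, x2 = max(0, x1), min(w, x2)  # clip the box to the frame
    y1, y2 = max(0, y1), min(h, y2)
    return frame[y1:y2, x1:x2]


def resize_nearest(patch, out_h, out_w):
    """Nearest-neighbor resize of a non-empty patch to (out_h, out_w, C)."""
    in_h, in_w = patch.shape[:2]
    rows = np.arange(out_h) * in_h // out_h  # source row for each output row
    cols = np.arange(out_w) * in_w // out_w  # source column for each output column
    return patch[rows[:, None], cols]


def frame_to_features(frame, boxes, extractor, size=(256, 128)):
    """Crop each box, resize to a unified (H, W, C), and embed the batch.

    extractor maps an (N, H, W, C) float array to (N, D) features and is a
    hypothetical placeholder. Features are L2-normalized so that they lie
    on the unit hypersphere.
    """
    if len(boxes) == 0:
        return np.empty((0, 0))
    out_h, out_w = size
    batch = np.stack(
        [resize_nearest(crop_patch(frame, b), out_h, out_w) for b in boxes]
    )
    feats = extractor(batch.astype(np.float32))
    return feats / np.linalg.norm(feats, axis=1, keepdims=True)
```

Calling `frame_to_features` on two consecutive frames yields the two sets of feature vectors that the association and re-identification stage compares.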

4.2. Object Association and Re-Identification

4.2.1. Similarity Measure

4.2.2. Object Detection Association

5. Experiments

5.1. Pedestrian Detection

5.2. Pedestrian Tracking

5.3. Long-Term Pedestrian Re-Identification

5.4. Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Suhr, J.K.; Jung, H.G. Rearview camera-based backover warning system exploiting a combination of pose-specific pedestrian recognitions. IEEE Trans. Intell. Transp. Syst. 2017, 19, 1122–1129.

- Camara, F.; Bellotto, N.; Cosar, S.; Nathanael, D.; Althoff, M.; Wu, J.; Ruenz, J.; Dietrich, A.; Fox, C. Pedestrian models for autonomous driving part I: Low-level models, from sensing to tracking. IEEE Trans. Intell. Transp. Syst. 2020, 22, 6131–6151.

- Han, S.; Huang, P.; Wang, H.; Yu, E.; Liu, D.; Pan, X.; Zhao, J. Mat: Motion-aware multi-object tracking. arXiv 2020, arXiv:2009.04794.

- Peng, J.; Wang, C.; Wan, F.; Wu, Y.; Wang, Y.; Tai, Y.; Wang, C.; Li, J.; Huang, F.; Fu, Y. Chained-tracker: Chaining paired attentive regression results for end-to-end joint multiple-object detection and tracking. In Computer Vision–ECCV 2020; Springer: Cham, Switzerland, 2020; pp. 145–161.

- Sun, S.; Akhtar, N.; Song, X.; Song, H.; Mian, A.; Shah, M. Simultaneous detection and tracking with motion modelling for multiple object tracking. In Computer Vision–ECCV 2020; Springer: Cham, Switzerland, 2020; pp. 626–643.

- Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards real-time multi-object tracking. In Computer Vision–ECCV 2020; Springer: Cham, Switzerland, 2020; pp. 107–122.

- Xu, Y.; Ban, Y.; Alameda-Pineda, X.; Horaud, R. Deepmot: A differentiable framework for training multiple object trackers. arXiv 2019, arXiv:1906.06618.

- Hornakova, A.; Henschel, R.; Rosenhahn, B.; Swoboda, P. Lifted disjoint paths with application in multiple object tracking. In Proceedings of the International Conference on Machine Learning, Virtual Event, 13–18 July 2020; pp. 4364–4375.

- Wen, L.; Du, D.; Li, S.; Bian, X.; Lyu, S. Learning non-uniform hypergraph for multi-object tracking. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8981–8988.

- Brasó, G.; Leal-Taixé, L. Learning a neural solver for multiple object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6247–6257.

- Zanfir, A.; Sminchisescu, C. Deep learning of graph matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2684–2693.

- Bouzid, A. Automatic Target Recognition with Deep Metric Learning. Master’s Thesis, University of Louisville, Louisville, KY, USA, 2020.

- Schroff, F.; Kalenichenko, D.; Philbin, J. Facenet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Rippel, O.; Paluri, M.; Dollar, P.; Bourdev, L. Metric learning with adaptive density discrimination. arXiv 2015, arXiv:1511.05939.

- Zhe, X.; Chen, S.; Yan, H. Directional statistics-based deep metric learning for image classification and retrieval. Pattern Recognit. 2019, 93, 113–123.

- Wang, B.H.; Wang, Y.; Weinberger, K.Q.; Campbell, M. Deep Person Re-identification for Probabilistic Data Association in Multiple Pedestrian Tracking. arXiv 2018, arXiv:1810.08565.

- Jiang, Y.F.; Shin, H.; Ju, J.; Ko, H. Online pedestrian tracking with multi-stage re-identification. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

- Bonetto, M.; Korshunov, P.; Ramponi, G.; Ebrahimi, T. Privacy in mini-drone based video surveillance. In Proceedings of the 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015; Volume 4, pp. 1–6.

- Hirzer, M.; Beleznai, C.; Roth, P.M.; Bischof, H. Person re-identification by descriptive and discriminative classification. In Scandinavian Conference on Image Analysis; Springer: Berlin/Heidelberg, Germany, 2011; pp. 91–102.

- Layne, R.; Hospedales, T.M.; Gong, S. Investigating open-world person re-identification using a drone. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 225–240.

- Singh, A.; Patil, D.; Omkar, S. Eye in the sky: Real-time Drone Surveillance System (DSS) for violent individuals identification using ScatterNet Hybrid Deep Learning network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1629–1637.

- Yang, F.; Chang, X.; Sakti, S.; Wu, Y.; Nakamura, S. Remot: A model-agnostic refinement for multiple object tracking. Image Vis. Comput. 2021, 106, 104091.

- Papakis, I.; Sarkar, A.; Karpatne, A. Gcnnmatch: Graph convolutional neural networks for multi-object tracking via sinkhorn normalization. arXiv 2020, arXiv:2010.00067.

- Berclaz, J.; Fleuret, F.; Turetken, E.; Fua, P. Multiple object tracking using k-shortest paths optimization. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1806–1819.

- Zamir, A.R.; Dehghan, A.; Shah, M. Gmcp-tracker: Global multi-object tracking using generalized minimum clique graphs. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2012; pp. 343–356.

- Zheng, W.S.; Gong, S.; Xiang, T. Person re-identification by probabilistic relative distance comparison. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 649–656.

- Dikmen, M.; Akbas, E.; Huang, T.S.; Ahuja, N. Pedestrian recognition with a learned metric. In Asian Conference on Computer Vision; Springer: Cham, Switzerland, 2010; pp. 501–512.

- Li, W.; Zhao, R.; Xiao, T.; Wang, X. Deepreid: Deep filter pairing neural network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 152–159.

- Yi, D.; Lei, Z.; Liao, S.; Li, S.Z. Deep metric learning for person re-identification. In Proceedings of the 22nd International Conference on Pattern Recognition, Washington, DC, USA, 24–28 August 2014; pp. 34–39.

- John, V.; Englebienne, G.; Kröse, B.J. Solving Person Re-identification in Non-overlapping Camera using Efficient Gibbs Sampling. In Proceedings of the BMVC, Bristol, UK, 9–13 September 2013.

- Avraham, T.; Gurvich, I.; Lindenbaum, M.; Markovitch, S. Learning implicit transfer for person re-identification. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2012; pp. 381–390.

- Koestinger, M.; Hirzer, M.; Wohlhart, P.; Roth, P.M.; Bischof, H. Large scale metric learning from equivalence constraints. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2288–2295.

- Kumar, S.A.; Yaghoubi, E.; Das, A.; Harish, B.; Proença, H. The p-destre: A fully annotated dataset for pedestrian detection, tracking, and short/long-term re-identification from aerial devices. IEEE Trans. Inf. Forensics Secur. 2020, 16, 1696–1708.

- Prokudin, S.; Gehler, P.; Nowozin, S. Deep directional statistics: Pose estimation with uncertainty quantification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 534–551.

- Hasnat, M.; Bohné, J.; Milgram, J.; Gentric, S.; Chen, L. von mises-fisher mixture model-based deep learning: Application to face verification. arXiv 2017, arXiv:1706.04264.

- Straub, J.; Chang, J.; Freifeld, O.; Fisher, J., III. A Dirichlet process mixture model for spherical data. In Proceedings of the Artificial Intelligence and Statistics, San Diego, CA, USA, 9–12 May 2015; pp. 930–938.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Zagoruyko, S.; Komodakis, N. Wide residual networks. arXiv 2016, arXiv:1605.07146.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region-based fully convolutional networks. arXiv 2016, arXiv:1605.06409.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Computer Vision–ECCV 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Bernardin, K.; Stiefelhagen, R. Evaluating multiple object tracking performance: The clear mot metrics. EURASIP J. Image Video Process. 2008, 2008, 246309.

- Bergmann, P.; Meinhardt, T.; Leal-Taixe, L. Tracking without bells and whistles. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 941–951.

- Bochinski, E.; Senst, T.; Sikora, T. Extending IOU based multi-object tracking by visual information. In Proceedings of the IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6.

- Bochinski, E.; Eiselein, V.; Sikora, T. High-speed tracking-by-detection without using image information. In Proceedings of the 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

- Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 4690–4699.

- Subramaniam, A.; Nambiar, A.; Mittal, A. Co-segmentation inspired attention networks for video-based person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 562–572.

| Statistic | Value |
|---|---|
| Total number of videos | 75 |
| Frames per second (fps) | 30 |
| Total number of identities | 269 |
| Total number of annotated instances | 318,745 |
| Camera range distance | [5.5–6.7] m |

| Method | Backbone | AP |
|---|---|---|
| RetinaNet [39] | ResNet-50 | 63.10% ± 1.64% |
| R-FCN [40] | ResNet-101 | 59.29% ± 1.31% |
| SSD [41] | Inception-V2 | 55.63% ± 2.93% |
| YOLO V4 [37] | CSPResNext50 | 65.70% ± 2.40% |

| Method | MOTA | MOTP | F-1 |
|---|---|---|---|
| TracktorCv [43] | 56.00% ± 3.70% | 55.90% ± 2.60% | 87.40% ± 2.00% |
| V-IOU [44] | 47.90% ± 5.10% | 51.10% ± 5.80% | 83.30% ± 8.40% |
| IOU [45] | 38.27% ± 8.42% | 39.68% ± 4.92% | 74.29% ± 6.87% |
| Ours (based on vMF) | 59.30% ± 3.50% | 56.10% ± 5.61% | 92.10% ± 3.20% |
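For readers unfamiliar with the MOTA column, the sketch below computes it from per-frame error counts following the CLEAR MOT definition [42]: one minus the ratio of total misses, false positives, and identity switches to the total number of ground-truth objects. The function name and argument layout are illustrative choices, and the sketch does not reproduce the evaluation code used to produce the table.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """CLEAR MOT accuracy from per-frame counts (sequences of equal length)."""
    total_gt = sum(num_gt)
    if total_gt == 0:
        raise ValueError("MOTA is undefined without ground-truth objects")
    errors = sum(false_negatives) + sum(false_positives) + sum(id_switches)
    return 1.0 - errors / total_gt
```

MOTA can become negative when the tracker makes more errors than there are ground-truth objects, which is why it is usually reported alongside MOTP and an F-1 score.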

| Method | mAP | Rank-1 | Rank-20 | Mean Direction |
|---|---|---|---|---|
| ArcFace [46] + COSAM [47] | 34.90% ± 6.43% | 49.88% ± 8.01% | 70.10% ± 11.25% | - |
| vMF identifier | 37.85% ± 3.42% | 53.81% ± 4.50% | 74.61% ± 8.50% | 64.45% ± 3.90% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bouzid, A.; Sierra-Sosa, D.; Elmaghraby, A. Directional Statistics-Based Deep Metric Learning for Pedestrian Tracking and Re-Identification. Drones 2022, 6, 328. https://doi.org/10.3390/drones6110328