1. Introduction

Unmanned Aerial Vehicles (UAVs), owing to their cost-effectiveness and high efficiency, have found widespread applications in numerous critical domains, including military reconnaissance, security surveillance, and precision agriculture [1,2]. However, the unauthorized or excessive operation of UAVs has concurrently raised significant security and privacy concerns [3], posing a substantial threat to low-altitude airspace security [4]. Consequently, the detection of airborne UAVs is a critical component in safeguarding low-altitude airspace and mitigating potential threats.

Presently, anti-UAV object detection methodologies predominantly draw upon advancements in the general object detection field [4] and can be broadly categorized into traditional computer vision techniques and deep learning-based approaches. Early traditional methods, such as those based on background subtraction or on handcrafted features like Haar [5] and HOG [6], could achieve preliminary UAV detection in scenarios with minimal background interference. However, these methods rely on empirically designed features with limited representational power. They struggle to adapt to real-world scenarios involving complex backgrounds, varying illumination, or rapid UAV motion, which results in suboptimal detection accuracy and robustness.

To support the development and fair comparison of anti-UAV detection methods, several public benchmark datasets have been released in recent years. Among them, DUT-Anti-UAV [

7] is a representative large-scale dataset featuring a high proportion of small UAV targets captured in complex outdoor environments, while Det-Fly [

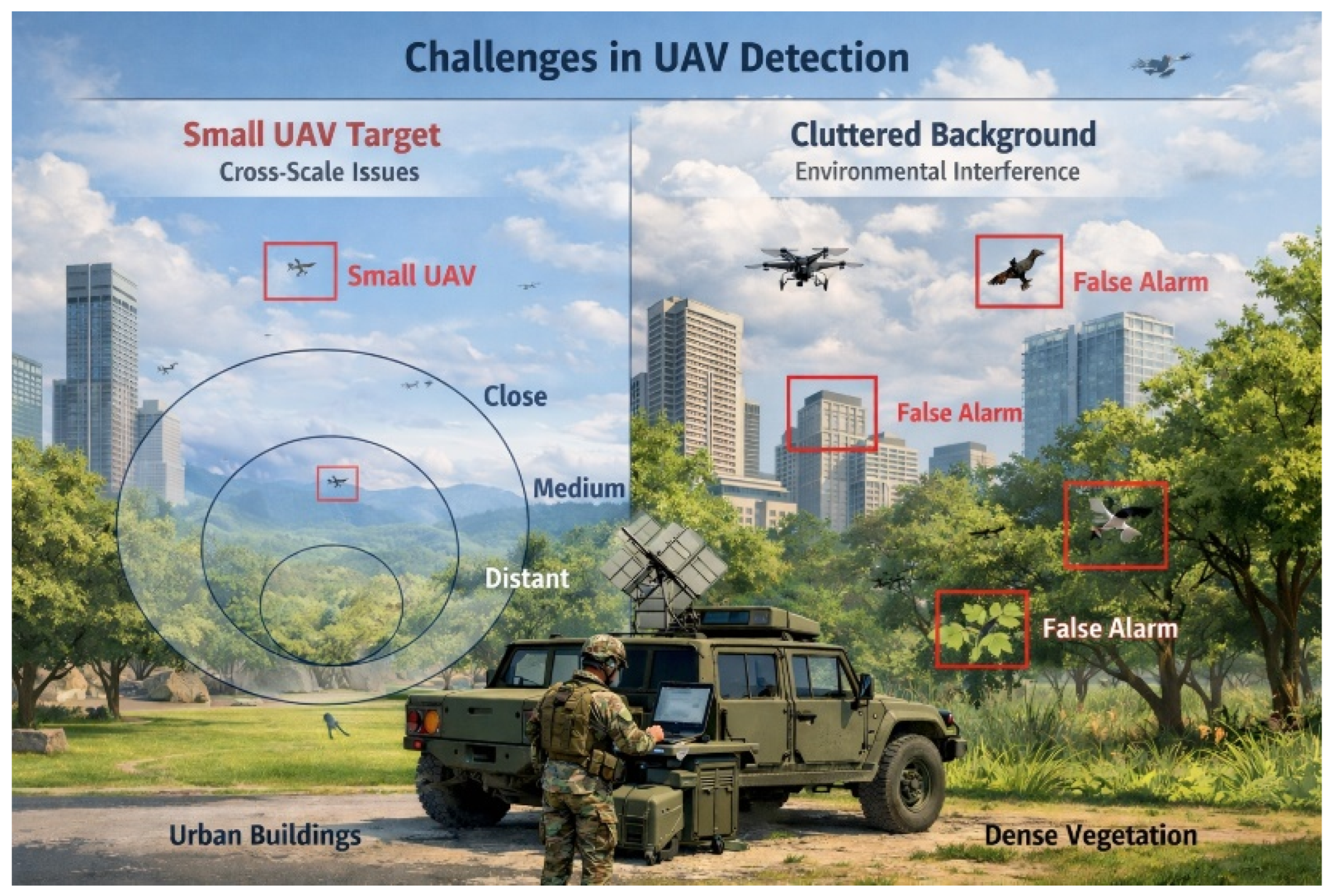

8] provides diverse UAV instances under varying viewpoints and background conditions. These benchmarks offer standardized evaluation settings and serve as important testbeds for validating detection performance in realistic scenarios;Nevertheless, UAV detection in such real-world environments remains highly challenging due to extreme scale variation of small targets and severe interference from cluttered backgrounds (e.g., urban buildings, dense vegetation, and complex sky scenes). As illustrated in

Figure 1, small UAVs often occupy only a few pixels and share similar visual characteristics with surrounding background structures, which makes accurate detection particularly difficult under complex outdoor conditions.

With the advent of deep learning, Convolutional Neural Network (CNN)-based object detectors have demonstrated significant potential and progressively become mainstream in anti-UAV detection tasks. This applies particularly to single-stage algorithms represented by the YOLO series (e.g., YOLOv8 [9], YOLOv10 [10], and YOLOv12 [11]). Because UAV targets exhibit variable scales and are often subject to interference from complex backgrounds, researchers have modified these general frameworks from several perspectives. On the one hand, to address the scale variation of UAV targets, some works fuse features from different scales to enhance the visual representation of UAVs [12], thereby improving detection of UAVs of varying sizes. To this end, efficient multi-scale feature fusion modules such as FPN [13], PANet [14], and BiFPN [15] have been designed. Zhai et al. [16] incorporated multi-scale prediction layers into YOLOv3, using the last four feature maps for prediction, which enhanced detection performance for small targets. Jiang et al. [17] designed a Reparameterized Cross-stage Efficient Layer Aggregation Network (RCELAN) and a Bidirectional Feature Pyramid Network (BiFPN) to strengthen multi-scale feature processing, thereby improving detection accuracy. Huang et al. [18] improved the YOLOv8 model by employing Deformable Convolutional Networks v2 (DCNv2) to adaptively handle local details and scale variations of UAVs. Zhang et al. [19] significantly enhanced the ability of YOLOv10 to detect extremely small UAVs by introducing bidirectional scale attention to fuse information across scales. Gao et al. [20] improved YOLOv11 by incorporating Haar wavelet downsampling and adding a dedicated small-object detection head, markedly boosting detection accuracy for small UAVs.

In addition to CNN-based detectors, Transformer-based approaches have recently attracted increasing attention in UAV detection due to their strong capability in modeling global context and long-range dependencies. Several studies have introduced Vision Transformer or DETR-style frameworks into UAV detection and tracking, demonstrating improved robustness under occlusion, cluttered backgrounds, and target re-appearance. However, most existing Transformer-based methods primarily perform non-local modeling within a single feature scale, which limits their ability to effectively capture cross-scale semantic relationships that are crucial for detecting small UAVs with significant scale variations.

On the other hand, to tackle complex background interference, some studies enhance features to extract more useful contextual information [21], thereby suppressing interference and improving overall scene understanding. Various attention mechanisms, such as CBAM [22] and SimAM [23], have been integrated into anti-UAV detection models. Huang et al. [24] proposed a Dual Semantic Feature Extraction mechanism (DS-RPN) to generate target candidate boxes, eliminating complex background interference through the extracted semantic features. Zhao et al. [25] integrated Transformer encoders, global attention, and coordinate attention into the C3 module of YOLOv5, improving detection accuracy and robustness for UAV targets in complex environments. Ma et al. [26] proposed the LA-YOLO network based on YOLOv5, which integrates the SimAM attention mechanism and a normalized Wasserstein distance fusion block, enhancing detection accuracy against low-altitude backgrounds. Bo et al. [27] adjusted prior box sizes and introduced a Get-and-Send module into YOLOv7, improving object detection accuracy in complex backgrounds. Shi et al. [28] introduced graph attention to retain more foreground information while reducing the impact of the background.

Despite recent advancements, the detection accuracy of existing methods often deteriorates in challenging real-world environments such as urban districts and parks. The main causes are the inherently small scale of UAV targets and severe interference from cluttered backgrounds such as buildings and vegetation [29]. We attribute this degradation to two underlying limitations.

(1) Insufficient cross-scale information interaction: UAVs exhibit diverse scales at different distances. Yet existing multi-scale feature fusion methods often rely on simple addition or concatenation and fail to adequately exploit the correlations and complementarity between feature maps at different scales. For example, widely adopted architectures such as FPN [13], PANet [14], and BiFPN [15] perform cross-scale fusion mainly through element-wise addition or channel concatenation along top-down and/or bottom-up pathways. They therefore lack effective modeling of cross-scale global dependencies, i.e., explicit interactions among feature representations at different spatial resolutions. This limits the model's ability to distinguish targets of varying scales and particularly affects the robustness of small-object detection. The proposed CNFI module is designed to model exactly this capability.

(2) Inadequate capability to counteract complex environmental interference: complex backgrounds (e.g., clouds, building contours, tree branches, and moving vegetation) often contain textures or shapes similar to UAVs, causing severe interference. Even when existing models incorporate CNNs or Transformers to enhance contextual modeling, they often fail to distinguish fine-grained details within the image. Consequently, they struggle to suppress background noise and to accurately differentiate targets from distractors.

To address these challenges, this paper introduces an anti-UAV object detection method founded on non-local feature learning. This principle underlies the design of the two proposed modules, Cross-Scale Non-local Feature Interaction (CNFI) and Non-local Feature Enhancement (NFE), which are embedded in an end-to-end object detection framework. The core idea is to overcome the limitations of traditional approaches in modeling cross-scale global dependencies and in enhancing target feature representations. Specifically, we design two key modules:

(1) The CNFI module: existing non-local attention mechanisms are typically confined to intra-scale interactions, whereas CNFI explicitly models long-range dependencies between feature maps at different scales. By computing correlations between pairs of feature pixels across scales, CNFI integrates global contextual information, suppresses interference from background regions at different scales, and enhances the perception of small targets.

(2) The NFE module: to further improve the discriminability of UAV features amid complex background interference, NFE fuses global contextual information, obtained via non-local attention, with low-level structural cues such as image gradients. This combination allows the model to attend not only to semantic information but also to the boundary and shape details of the target. Consequently, in scenarios with complex backgrounds and weak target features, it reinforces the feature representation of UAVs and improves detection precision.

The major contributions of this study are summarized as follows:

We propose a Cross-scale Non-local Feature Interaction (CNFI) module to facilitate deep interactions among multi-scale features, thereby enhancing the model’s adaptability to significant target scale variations.

We design a Non-local Feature Enhancement (NFE) module that integrates global semantic context with gradient-based structural cues, effectively improving robustness against complex background interference.

Extensive experiments on the DUT-Anti-UAV and Det-Fly datasets demonstrate that the proposed method consistently outperforms state-of-the-art anti-UAV detection approaches.

The remainder of this paper is organized as follows. Section 2 reviews related work. Section 3 details the overall architecture of the proposed model. Section 4 describes the experimental setup, evaluation metrics, and results. Section 5 discusses the limitations of our method and outlines future directions. Finally, Section 6 concludes the paper.

2. Literature Review

UAV detection has become an essential research topic in recent years due to the rapid proliferation of drones in civil, industrial, and military environments. Early anti-UAV detection methods predominantly relied on handcrafted visual features such as Haar [5] and HOG [6], which suffer from limited robustness under illumination variation, cluttered backgrounds, and rapid UAV motion. Traditional background subtraction and motion-based approaches also struggle in complex scenes because their handcrafted assumptions break down in dynamic environments.

With the advancement of deep learning, CNN-based object detectors have become the dominant choice for UAV detection. YOLO-series networks and their variants, including YOLOv3, YOLOv11, and YOLOv12 [11,20,30], have significantly improved detection accuracy and efficiency. However, these models often fail to capture fine-grained details of small UAV targets, especially when targets occupy less than 1% of the image area. To address scale variation, multi-scale feature fusion structures such as FPN [13], PANet [14], BiFPN [15], and their numerous variants have been widely adopted, and several UAV-specific studies extend these architectures further. For example, Zhai et al. [16] extended multi-layer prediction for better small-target detection, while Jiang et al. [17] introduced a reparameterized feature aggregation design to enhance multi-scale robustness. Zhang et al. [19] improved YOLOv10 with bidirectional scale attention to better capture multi-resolution dependencies.

In parallel, local attention mechanisms have shown notable progress in enhancing feature representation quality. Lightweight attention modules such as CBAM [22] and SimAM [23] have been widely integrated into UAV detectors to suppress background noise and highlight discriminative regions. Ma et al. [26] combined SimAM with a normalized Wasserstein distance fusion block for low-altitude background suppression, while Bo et al. [27] improved YOLOv7 by refining its spatial focus. Additionally, graph attention and context-aware modules [21,28] have been explored to enhance foreground feature retention.

Recent advances in Transformers [31,32] have introduced powerful global context modeling capabilities. Yu et al. [33] build their anti-UAV tracker around a Transformer-based local tracker. They augment it with Transformer-style global re-detection and background-aware alignment modules, enabling attention to maintain target–context associations under occlusion, drift, and re-appearance. Zhu et al. [34] adapt the DETR paradigm to infrared small-UAV-swarm detection. They improve how queries attend to small targets, via enhanced cross-level feature aggregation and geometry-aware attention, so that global self-attention better separates dense, low-contrast instances. However, these methods compute non-local attention only within a single scale, which restricts their ability to capture semantic relationships across scales. UAV features vary drastically across scales: lower-resolution maps contain global semantic cues, while higher-resolution maps retain boundary details. Purely intra-scale non-local modeling is therefore insufficient for robust UAV detection.

To address challenges posed by complex backgrounds, several studies incorporate gradient or structure priors. Gradient-based enhancement methods [35,36] and edge-aware convolution modules [37,38] boost contour clarity and improve localization accuracy. Multi-branch convolution designs have also been proposed to enrich multi-receptive-field representations [39,40], although they lack the ability to capture global context. Recent works integrate global and local structural cues via hybrid attention [23], but few studies explicitly couple gradient features with non-local modeling.

Anti-UAV detection also faces challenges in dynamic outdoor environments. Small UAVs often appear in highly cluttered scenes such as foliage, urban areas, and sky regions with varying illumination. Studies have reported that complex backgrounds contribute heavily to false positives, while small-scale targets lead to significant false negatives [29]. Video-based tracking approaches can alleviate some of these issues, yet single-frame detectors remain preferable in many real-time surveillance applications because of their lower computational complexity.

In summary, existing research provides valuable progress in multi-scale fusion, attention-based enhancement, and contextual modeling. However, two critical limitations remain: (1) multi-scale fusion largely relies on local operations and lacks explicit cross-scale non-local interaction; (2) non-local attention methods seldom incorporate gradient cues, limiting boundary-awareness. These deficiencies directly motivate the design of our CNFI and NFE modules, which address the limitations of current methods by reinforcing cross-scale semantic relationships and enhancing gradient-aware structural cues, respectively.

3. Method

Currently, anti-UAV object detection faces two primary challenges: first, insufficient cross-scale information interaction, and second, inadequate robustness against complex environmental interference. To address these challenges, this paper proposes: (1) a Cross-scale Non-local Feature Interaction (CNFI) module to resolve the issue of inadequate cross-scale information interaction, and (2) a Non-local Feature Enhancement (NFE) module to bolster target feature representation and suppress complex background interference. These modules are integrated into an end-to-end detection system, in which non-local feature learning is employed as the core mechanism to model cross-scale dependencies and enhance feature representations.

The overall architecture of the network is illustrated in Figure 2a. Given an input image, the network first extracts foundational features through the initial convolutional layers of the backbone. These features are then progressively enhanced by multi-stage NFE modules to reinforce the representation of target boundaries and shapes. During this process, features from different levels are combined via upsampling/downsampling and concatenation operations to form a multi-scale feature pyramid. Next, the CNFI module facilitates deep interaction among features from different scales, thereby strengthening the detection of small targets. Finally, the enhanced multi-scale features are fed into three detection branches to generate detection results at different scales.

Specifically, the network first employs a backbone similar to that of YOLOv12 [11] for multi-scale feature extraction. Unlike YOLOv12 [11], this paper replaces the original C3f modules with the designed NFE modules to enhance feature representation and counteract interference from complex backgrounds. The extracted multi-scale features are then processed by the CNFI module for fusion and interaction, enabling comprehensive learning of cross-scale information to adaptively handle multi-scale targets. After CNFI processing, these features are progressively upsampled and fused with the multi-scale features extracted by the backbone. They are then further enhanced by NFE modules at different scales. Finally, detection is performed using a detection head consistent with that of YOLOv12 to obtain the final results.
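The following sketch tracks the spatial size of each pyramid level to make the data flow above concrete. It is a minimal illustration. It assumes a 640 × 640 input and the stride-8/16/32 levels commonly used by YOLO-style three-branch heads. Neither value is given in this section, and `pyramid_shapes` and `cnfi_factors` are hypothetical helpers. The printed factor is the downsampling each level would need for CNFI to unify all scales to the coarsest resolution.

```python
# Hypothetical shape bookkeeping for the three-branch pipeline.
# Assumptions (not stated in the paper): a square 640 x 640 input and
# stride-8/16/32 pyramid levels, as commonly used by YOLO-style heads.

INPUT_SIZE = 640
STRIDES = (8, 16, 32)  # fine -> coarse; one detection branch per level


def pyramid_shapes(input_size, strides):
    """Spatial size (H, W) of each pyramid level for a square input."""
    return [(input_size // s, input_size // s) for s in strides]


def cnfi_factors(shapes):
    """Downsampling factor that maps each level onto the coarsest level."""
    coarsest = min(h for h, _ in shapes)
    return [h // coarsest for h, _ in shapes]


shapes = pyramid_shapes(INPUT_SIZE, STRIDES)
factors = cnfi_factors(shapes)
for s, (h, w), f in zip(STRIDES, shapes, factors):
    print(f"stride {s}: {h}x{w} map, CNFI downsampling factor {f}, one detection branch")
```

Under these assumptions the three levels are 80 × 80, 40 × 40, and 20 × 20, which need factors of 4, 2, and 1, respectively.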

3.1. Cross-Scale Non-Local Feature Interaction (CNFI)

Traditional feature fusion methods, such as FPN [13], typically employ simple addition or concatenation operations, which often fail to adequately capture the complex correlations between features at different scales. Existing non-local attention mechanisms are also primarily confined to feature interactions within a single scale [32,41,42,43], making it difficult to establish global dependencies across scales. UAV targets exhibit variable sizes in images, and features from different scales contain complementary information [43]: small-scale feature maps carry more global semantic information, while large-scale feature maps retain richer spatial details. Effective integration of this cross-scale information is therefore crucial for small-object detection, particularly in anti-UAV scenarios. To this end, this paper proposes the CNFI module, which facilitates interaction among multi-scale features through a cross-scale attention mechanism that efficiently integrates cross-scale information.

In the CNFI module, multi-scale features are encoded as scale-specific queries $Q_i$, while a shared key–value memory is constructed by concatenating all encoded features, i.e., $K = V = \mathrm{Concat}(Q_1, Q_2, Q_3)$. Each query attends to the same K–V bank, enabling explicit cross-scale non-local interaction. Compared with pairwise cross-scale attention, this shared K–V design provides a unified global context and reduces computational overhead. This is particularly beneficial for small-UAV detection under extreme scale variation and cluttered backgrounds.

The detailed structure of the CNFI module is depicted in Figure 2b. The module receives three feature maps ($X_1$, $X_2$, $X_3$) from different feature levels as input. Each input feature map is processed by an encoder (EnC = Conv + DP) to generate the query features $Q_1$, $Q_2$, $Q_3$:

$$Q_i = \mathrm{EnC}_i(X_i), \quad i = 1, 2, 3,$$

where $\mathrm{EnC}_i$ denotes the feature encoding operation for the $i$-th scale, implemented via a convolution (Conv) layer followed by a downsampling (DP) operation. To enable interaction across scales, the resolutions of these features are unified by downsampling all of them to the resolution of the smallest feature map, which also reduces computational complexity. Consequently, the encoders for different scales employ distinct downsampling factors.
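The resolution-unification step can be sketched as follows. This sketch assumes that DP is k × k average pooling with stride k. The paper does not specify the operator, which could equally be a strided convolution. The sketch also omits the Conv part of EnC and uses toy single-channel square maps. `avg_pool2d` and `unify_resolution` are hypothetical names.

```python
# Hypothetical sketch of the "DP" (downsampling) step in CNFI's encoder.
# Assumption: DP is k x k average pooling with stride k; the paper only states
# that each scale uses its own downsampling factor. Maps are assumed square.

def avg_pool2d(grid, k):
    """Average-pool a 2-D list `grid` (H x W) with kernel = stride = k."""
    h, w = len(grid), len(grid[0])
    if h % k or w % k:
        raise ValueError("H and W must be divisible by the pooling factor")
    out = []
    for r in range(0, h, k):
        row = []
        for c in range(0, w, k):
            block = [grid[r + i][c + j] for i in range(k) for j in range(k)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def unify_resolution(maps):
    """Downsample every (square) map to the spatial size of the smallest one."""
    target = min(len(m) for m in maps)
    return [avg_pool2d(m, len(m) // target) for m in maps]


# Toy single-channel maps at three scales (8x8, 4x4, 2x2).
fine = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
mid = [[1.0] * 4 for _ in range(4)]
coarse = [[2.0, 3.0], [4.0, 5.0]]
unified = unify_resolution([fine, mid, coarse])
print([(len(m), len(m[0])) for m in unified])  # [(2, 2), (2, 2), (2, 2)]
```

The factors here are 4, 2, and 1, so each scale uses its own downsampling factor, as stated above.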

After encoding the input features at different scales, they are concatenated along the channel dimension to form a shared key K and value V representation across scales. This design allows the key and value to jointly encode complementary information from multiple scales, while the query preserves scale-specific characteristics. As a result, the query from each individual scale computes attention with the same K–V pair, enabling explicit cross-scale information interaction. In this way, the Cross-scale Non-local Attention module constructs a correlation matrix that captures dependencies among features from different scales.

For the $i$-th scale, the attention weights are computed as:

$$A_i = \mathrm{Softmax}\left(\frac{Q_i K^{\top}}{\sqrt{d}}\right),$$

where $d$ denotes the feature dimensionality used for normalization. This operation measures the similarity between the scale-specific query $Q_i$ and the shared key $K$, producing attention weights $A_i$ that reflect the relative importance of features from different scales.

Based on the obtained attention weights $A_i$, cross-scale contextual information is aggregated from the shared value $V$ as:

$$F_i = A_i V.$$

Finally, the aggregated feature $F_i$ is transformed back to the original scale through a decoder and combined with the input feature $X_i$ via a residual connection to produce the output feature:

$$Y_i = X_i + \mathrm{DeC}_i(F_i),$$

where $\mathrm{DeC}_i$ denotes the decoding operation for the $i$-th scale, implemented by a convolution layer followed by an upsampling operation. A more intuitive description of the overall procedure is provided in Algorithm 1.

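Algorithm 1 Cross-scale Non-local Feature Interaction (CNFI)

- 1: for each scale $i$ do
- 2: $Q_i \leftarrow \mathrm{EnC}_i(X_i)$
- 3: end for
- 4: $K \leftarrow \mathrm{Concat}(Q_1, \dots, Q_S)$
- 5: $V \leftarrow \mathrm{Concat}(Q_1, \dots, Q_S)$
- 6: for each scale $i$ do
- 7: $A_i \leftarrow \mathrm{Softmax}\left(Q_i K^{\top} / \sqrt{d}\right)$
- 8: end for
- 9: for each scale $i$ do
- 10: $F_i \leftarrow A_i V$
- 11: end for
- 12: for each scale $i$ do
- 13: $Y_i \leftarrow X_i + \mathrm{DeC}_i(F_i)$
- 14: end for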
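Algorithm 1 can be sketched in pure Python for toy inputs. This is not the authors' implementation. $\mathrm{EnC}_i$ is taken as the identity. $K$ is formed by channel concatenation (width $S \cdot d$) while each $Q_i$ has width $d$. A parameter-free mean over the $S$ channel slices therefore stands in for whatever learned projection makes $Q_i K^{\top}$ well defined. The same mean stands in for $\mathrm{DeC}_i$. The function `cnfi` and its helpers are hypothetical.

```python
import math

# Hypothetical sketch of CNFI's shared key-value attention in pure Python.
# Assumptions (not from the paper):
#   * EnC_i is the identity: inputs are already unified to N tokens of width d,
#     so Q_i = X_i;
#   * K = V = channel-wise concat of all scales (width S*d); a parameter-free
#     "chunk mean" stands in for the learned projection that Q_i K^T needs
#     when K is wider than Q_i;
#   * DeC_i (conv + upsampling) is replaced by the same chunk mean.


def chunk_mean(row, s):
    """Average an (s*d)-wide vector into a d-wide one, slice by slice."""
    d = len(row) // s
    return [sum(row[k * d + j] for k in range(s)) / s for j in range(d)]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cnfi(xs):
    """xs: list of S matrices, each N x d. Returns (outputs Y_i, attention maps A_i)."""
    s, n, d = len(xs), len(xs[0]), len(xs[0][0])
    # Shared K-V bank: concatenate all scales along the channel axis -> N x (S*d).
    kv = [[v for q in xs for v in q[t]] for t in range(n)]
    keys = [chunk_mean(row, s) for row in kv]  # hypothetical projection to width d
    outputs, maps = [], []
    for q in xs:
        # A_i = Softmax(Q_i K^T / sqrt(d)): one N x N map per query scale.
        a = [softmax([sum(x * k for x, k in zip(q[t], keys[u])) / math.sqrt(d)
                      for u in range(n)]) for t in range(n)]
        # F_i = A_i V: every query scale aggregates the same multi-scale values.
        f = [[sum(a[t][u] * kv[u][c] for u in range(n)) for c in range(s * d)]
             for t in range(n)]
        # Y_i = X_i + DeC_i(F_i), with chunk_mean standing in for DeC_i.
        outputs.append([[x + g for x, g in zip(q[t], chunk_mean(f[t], s))]
                        for t in range(n)])
        maps.append(a)
    return outputs, maps


# Three scales, N = 2 tokens, d = 2 channels (toy values).
xs = [[[1.0, 0.0], [0.0, 1.0]],
      [[0.5, 0.5], [0.2, 0.8]],
      [[0.0, 1.0], [1.0, 0.0]]]
ys, maps = cnfi(xs)
print(len(ys), len(ys[0]), len(ys[0][0]))  # 3 2 2
```

Every scale's query attends to the same concatenated bank. As a result, each row of every attention map aggregates values that carry information from all scales at once, which is the property the shared K–V design relies on.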

Complexity analysis of Algorithm 1: Let denote the multi-scale input features, where . In CNFI, all encoded features are spatially unified to the smallest resolution via downsampling, and we denote the number of spatial tokens by . After encoding, each query feature is reshaped as , where d is the embedding dimension. The shared key and value are constructed by channel concatenation across scales, leading to with S scales (here ). We analyze the time and memory complexity of Algorithm 1 below.

(1) Time complexity: The dominant computation in Algorithm 1 is the cross-scale attention and aggregation. For each scale i, the matrix multiplication costs , and the aggregation costs . Thus, the overall attention-related cost is , which becomes for . The encoder/decoder (Conv + Down/Up sampling) contribute lower-order terms that are linear in N. Specifically, a convolution mapping costs at the unified resolution, and the total convolutional overhead across scales is .

(2) Memory complexity: The main memory consumption comes from storing attention maps , which requires memory per scale. Therefore, the total memory complexity for S scales is , i.e., when . Importantly, by downsampling all scales to the smallest feature resolution, CNFI keeps N relatively small, making the additional overhead practically manageable.
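The memory of the attention maps can be computed directly, since each $A_i$ has $N \times N$ entries. The sketch below uses illustrative values that are not taken from the paper:

- a 640 × 640 input, with the coarsest level at stride 32;
- three scales and a single attention head;
- float32 storage.

It also compares the shared design against a hypothetical pairwise variant in which every (query scale, key scale) pair keeps its own map. This is one way to read the overhead reduction claimed for the shared K–V design. `cnfi_attention_memory` is a hypothetical helper.

```python
# Hypothetical estimate of CNFI's attention-map memory for one image.
# Assumptions (not from the paper): a 640 x 640 input, the coarsest level at
# stride 32, S = 3 scales, a single attention head, and float32 entries.


def cnfi_attention_memory(input_size=640, coarsest_stride=32, scales=3,
                          bytes_per_entry=4):
    side = input_size // coarsest_stride
    n = side * side                    # N: tokens after unifying to the coarsest map
    per_scale = n * n                  # one N x N map A_i per query scale
    shared = scales * per_scale        # S * N^2 with a single shared K-V bank
    # S^2 * N^2 if each (query scale, key scale) pair kept its own map
    pairwise = scales * scales * per_scale
    return {"N": n, "shared_entries": shared, "pairwise_entries": pairwise,
            "shared_MiB": shared * bytes_per_entry / 2 ** 20}


print(cnfi_attention_memory())
```

Under these assumptions, N = 400. The shared design stores 480,000 attention entries (about 1.83 MiB), whereas the pairwise variant stores 1,440,000.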

3.2. Non-Local Feature Enhancement (NFE)

In complex backgrounds, UAVs often appear as small targets with weak features that are easily overwhelmed by background interference with similar textures [37], a common challenge in anti-UAV detection. Traditional CNN-based detection methods rely primarily on convolutional operations to extract local features [38,44,45], making it difficult to establish relationships between distant pixels. Conversely, Transformer-based detection methods capture global dependencies via non-local attention [31] but often overlook crucial details such as target boundaries and shapes. To enhance the perception of both global context and local target details, the NFE module integrates non-local attention with gradient enhancement to jointly model global semantic context and local structural cues. Non-local attention captures long-range dependencies to strengthen semantic discrimination between UAV targets and complex backgrounds, while gradient enhancement emphasizes contour-related boundary information. This complementary design improves boundary awareness and localization accuracy, particularly for small UAV targets in cluttered scenes.

The NFE module comprises two core sub-modules: Non-local Gradient Attention (NGA) and Gradient Enhancement Conv Block (GEC). These two modules are detailed below.

3.2.1. Non-Local Gradient Attention (NGA)

The NGA sub-module focuses on enhancing the global gradient information of the image, a critical low-level cue for object recognition and localization. Gradient information inherently captures edge and structural details. These details are vital for distinguishing targets from complex backgrounds [35,36], particularly small or weakly textured targets such as UAVs. Traditional convolutional operations have limited receptive fields and may struggle to model long-range dependencies between these structural elements. The NGA module addresses this by integrating gradient-derived features with a non-local attention mechanism. Gradient information is used explicitly to provide the attention mechanism with robust structural cues. This allows the module to capture global contextual relationships between important edge and texture details. It can then selectively enhance gradient features that are globally consistent with the target structure while suppressing irrelevant background clutter. This approach is particularly beneficial when local information is ambiguous, as the global context provided by non-local attention on gradients can help disambiguate and reinforce true target features.

The specific details of NGA are illustrated in Figure 2d. An input feature map $X$ is first rearranged through a regrouping operation ($\mathrm{R}$) to obtain the query $Q$. To capture edge and structural details, a regrouping and gradient extraction operation ($\mathrm{RG}$) extracts gradient information from the feature map to generate the key $K$ and value $V$. This can be expressed as:

$$Q = \mathrm{R}(X), \qquad K = V = \mathrm{RG}(X),$$

where $X$ represents the input feature, $\mathrm{R}(\cdot)$ denotes an operation that directly rearranges the features, and $\mathrm{RG}(\cdot)$ represents an operation that extracts gradients (e.g., from the 8-neighborhood) and then rearranges them.

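Given an input feature map $X \in \mathbb{R}^{C \times H \times W}$, for each channel $c$ and spatial location $(h, w)$, we define the 8-neighborhood offset set

$$\Omega = \{(\delta_h, \delta_w) \mid \delta_h, \delta_w \in \{-1, 0, 1\},\ (\delta_h, \delta_w) \neq (0, 0)\}.$$

The neighbor-to-center residual in direction $\delta = (\delta_h, \delta_w) \in \Omega$ is computed by

$$G_{\delta}(c, h, w) = X(c, h + \delta_h, w + \delta_w) - X(c, h, w),$$

and the RG response is obtained by concatenating these residuals along the channel dimension:

$$\mathrm{RG}(X) = \mathrm{Concat}_{\delta \in \Omega}\left(G_{\delta}\right),$$

where $\mathrm{Concat}$ denotes the channel concatenation operation.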
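The RG operation defined above can be sketched in pure Python as follows. The text does not specify border handling, so this sketch assumes replicate padding. The offset ordering in `OFFSETS` and the function name `rg` are hypothetical choices, and the subsequent rearrangement step is omitted.

```python
# Hypothetical sketch of the RG (8-neighbourhood gradient) operation.
# Assumptions: replicate padding at the borders (not specified in the paper)
# and a fixed, direction-major channel ordering of the eight residual maps.

OFFSETS = [(-1, -1), (-1, 0), (-1, 1),
           (0, -1),           (0, 1),
           (1, -1),  (1, 0),  (1, 1)]  # the 8-neighbourhood, centre excluded


def rg(x):
    """x: C x H x W nested lists -> 8C x H x W neighbour-minus-centre residuals."""
    out = []
    for dh, dw in OFFSETS:  # concatenate directions along the channel axis
        for ch in x:
            h, w = len(ch), len(ch[0])
            out.append([[ch[min(max(r + dh, 0), h - 1)][min(max(c + dw, 0), w - 1)]
                         - ch[r][c]
                         for c in range(w)] for r in range(h)])
    return out


x = [[[0.0, 0.0, 0.0],
      [0.0, 9.0, 0.0],
      [0.0, 0.0, 0.0]]]  # one channel with a bright centre pixel
g = rg(x)
print(len(g))      # 8
print(g[0][1][1])  # -9.0 (top-left neighbour minus centre)
```

A constant region yields all-zero residuals, so only edges and structure produce non-zero responses in the key and value.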

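Subsequently, non-local attention is computed as:

$$A = \mathrm{Softmax}\left(\frac{Q K^{\top}}{\sqrt{d}}\right),$$

where $d$ denotes the feature dimensionality used for normalization. The value matrix $V$ is then weighted by the attention weights $A$, and the resulting weighted features are added to the original features to produce the output feature:

$$Y = X + A V.$$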
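A toy end-to-end version of NGA is sketched below. It is an illustration under stated assumptions, not the authors' module. First, regrouping is taken to be flattening into H·W tokens. Second, RG produces eight residual maps per channel, so their per-channel mean is used for K and V. This mean is a parameter-free stand-in for a learned projection, chosen so that $QK^{\top}$ and the residual addition are dimensionally consistent. The names `nga`, `tokens`, and `mean_gradient` are hypothetical.

```python
import math

# Hypothetical sketch of Non-local Gradient Attention (NGA) in pure Python.
# Assumptions (not specified in the paper):
#   * regrouping R flattens C x H x W into H*W tokens of width C;
#   * K = V come from the 8-neighbour residuals, averaged per channel
#     (a parameter-free stand-in for a learned projection), i.e.
#     (mean of neighbours) - centre;
#   * replicate padding at the borders.

OFFSETS = [(dh, dw) for dh in (-1, 0, 1) for dw in (-1, 0, 1) if (dh, dw) != (0, 0)]


def tokens(x):
    """R: C x H x W -> list of H*W token vectors of width C."""
    h, w = len(x[0]), len(x[0][0])
    return [[ch[r][c] for ch in x] for r in range(h) for c in range(w)]


def mean_gradient(x):
    """Average of the eight neighbour-minus-centre residuals, per channel."""
    out = []
    for ch in x:
        h, w = len(ch), len(ch[0])
        out.append([[sum(ch[min(max(r + dh, 0), h - 1)][min(max(c + dw, 0), w - 1)]
                         - ch[r][c] for dh, dw in OFFSETS) / len(OFFSETS)
                     for c in range(w)] for r in range(h)])
    return out


def softmax(xs):
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]


def nga(x):
    """Returns Y = X + A V as H*W tokens of width C."""
    q = tokens(x)
    kv = tokens(mean_gradient(x))
    d = len(q[0])
    out = []
    for qt in q:
        a = softmax([sum(qi * ki for qi, ki in zip(qt, kt)) / math.sqrt(d) for kt in kv])
        agg = [sum(a[u] * kv[u][c] for u in range(len(kv))) for c in range(d)]
        out.append([v + g for v, g in zip(qt, agg)])  # residual connection
    return out


flat = [[[2.0] * 3 for _ in range(3)]]  # constant single-channel 3x3 map
print(nga(flat)[0])                     # [2.0]
```

With a constant input, all gradients vanish, so the attention term is zero and the output equals the input. In general, the added term is driven entirely by gradient (structural) content.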

3.2.2. Gradient Enhancement Conv Block (GEC)

GEC is motivated by the need to efficiently capture multi-scale contextual information. This is a critical requirement for robust anti-UAV detection, where targets exhibit significant scale variations and are often embedded in complex visual environments [46]. Drawing inspiration from multi-branch architectures [39,47], GEC employs parallel convolutional pathways operating on distinct channel groups [40]. Each pathway extracts features with a different receptive field. The module can therefore analyze, at the same time, the fine-grained details crucial for small or distant UAVs and the broader contextual cues needed to discriminate targets from background clutter. These scale-specific features are then aggregated and refined through a residual connection, yielding a more comprehensive and discriminative representation. This strategy enables GEC to learn a rich set of features tailored to varying target appearances, improving the model's ability to discern UAVs under challenging conditions while remaining efficient.

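Details of GEC are shown in Figure 2c. GEC first divides the input feature $X \in \mathbb{R}^{C \times H \times W}$ into four channel groups, each comprising one quarter of the channels:

$$[X_1, X_2, X_3, X_4] = \mathrm{Split}(X), \quad X_j \in \mathbb{R}^{\frac{C}{4} \times H \times W}.$$

Each group is then processed by a parallel convolution branch with a distinct kernel size to capture multi-scale receptive fields. Specifically, the four branches adopt convolution kernels of , , , and , respectively:

$$\hat{X}_j = \mathrm{Conv}_j(X_j), \quad j = 1, 2, 3, 4,$$

where $\mathrm{Conv}_j$ denotes the convolution of the $j$-th branch. Finally, the features processed by all branches are fused via channel concatenation and added to the original input:

$$Y = X + \mathrm{Concat}(\hat{X}_1, \hat{X}_2, \hat{X}_3, \hat{X}_4).$$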
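The GEC pattern (split, parallel branches, concatenate, residual) is sketched below. The kernel sizes are not given here, so `KERNELS = (1, 3, 5, 7)` is a placeholder. Each branch is a depthwise k × k mean filter with replicate padding rather than a learned convolution. `gec` and `box_filter` are hypothetical names.

```python
# Hypothetical sketch of GEC's split -> parallel branches -> concat -> residual flow.
# Assumptions: KERNELS are placeholder sizes (the actual sizes are not given
# here), and each branch is a depthwise k x k mean filter with replicate
# padding instead of a learned convolution.

KERNELS = (1, 3, 5, 7)  # hypothetical, one per channel group


def box_filter(ch, k):
    """k x k mean filter over one H x W channel, replicate-padded ("same" size)."""
    h, w, p = len(ch), len(ch[0]), k // 2
    return [[sum(ch[min(max(r + i, 0), h - 1)][min(max(c + j, 0), w - 1)]
                 for i in range(-p, p + 1) for j in range(-p, p + 1)) / (k * k)
             for c in range(w)] for r in range(h)]


def gec(x):
    """x: C x H x W nested lists with C divisible by 4; returns X + Concat(branches)."""
    c = len(x)
    if c % 4:
        raise ValueError("channel count must be divisible by 4")
    g = c // 4
    groups = [x[i * g:(i + 1) * g] for i in range(4)]      # Split into 4 groups
    branches = [[box_filter(ch, k) for ch in grp]           # one kernel size per group
                for grp, k in zip(groups, KERNELS)]
    merged = [ch for b in branches for ch in b]             # channel concatenation
    return [[[a + b for a, b in zip(ra, rb)] for ra, rb in zip(ca, cb)]
            for ca, cb in zip(x, merged)]                   # residual addition


x = [[[1.0] * 5 for _ in range(5)] for _ in range(4)]  # 4 channels, 5x5, constant
y = gec(x)
print(y[3][2][2])  # 2.0: mean filter keeps the constant, residual doubles it
```

Splitting channels before filtering means each kernel size touches only C/4 channels. This is why the grouped multi-kernel design stays cheaper than applying every kernel to all channels.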

Through the aforementioned design, the NFE module leverages non-local attention mechanisms to capture global contextual information while simultaneously preserving target boundary and shape details via gradient enhancement. Consequently, it effectively enhances the discriminability of target features in scenarios with complex backgrounds and weak target characteristics, thereby improving detection precision for the anti-UAV detection task.

4. Experiments and Analysis

In this section, we conduct experiments on publicly available datasets to validate the effectiveness of the proposed method. We first describe the datasets and implementation details, then analyze the results of the ablation studies, and finally validate the detection performance of the proposed method through comparisons with the baseline and other YOLO-series models.

4.1. Datasets and Implementation Details

All experiments were conducted on a Linux server equipped with four NVIDIA GeForce RTX 4090 GPUs. The proposed method was implemented in PyTorch (v1.8.0) with CUDA (v11.8), using Python (v3.10.0) under Ubuntu (v18.04). We conducted experiments on the DUT-Anti-UAV and Det-Fly datasets. Following common practice, all images were resized to during both training and inference. During training, we used the Adam optimizer with a batch size of 24 for 300 epochs, an input image size of , and an initial learning rate of 0.01. The learning rate was scheduled using cosine decay with a warm-up of 15 epochs. Unless otherwise stated, all hyper-parameters were kept identical across ablation settings to ensure a fair evaluation. For efficiency analysis, we additionally report the number of parameters, FLOPs (at ), and inference speed in FPS under the same hardware settings.
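The training schedule can be sketched as a per-epoch learning-rate function. Only the initial rate, the warm-up length, and the use of cosine decay are stated. The linear warm-up shape and the final learning rate of 0 are assumptions. `lr_at` is a hypothetical helper; in PyTorch, a similar schedule could be expressed with the built-in `LambdaLR` scheduler.

```python
import math

# Hypothetical sketch of the schedule described above: 300 epochs, initial
# learning rate 0.01, 15 warm-up epochs, then cosine decay.
# Assumptions: linear warm-up and a cosine floor of 0; the paper does not
# state the warm-up shape or the final learning rate.

BASE_LR, EPOCHS, WARMUP = 0.01, 300, 15
MIN_LR = 0.0  # hypothetical floor


def lr_at(epoch):
    """Learning rate for a 0-indexed epoch."""
    if epoch < WARMUP:
        return BASE_LR * (epoch + 1) / WARMUP
    progress = (epoch - WARMUP) / (EPOCHS - WARMUP)  # 0 -> ~1 over the decay phase
    return MIN_LR + 0.5 * (BASE_LR - MIN_LR) * (1 + math.cos(math.pi * progress))


for e in (0, 14, 15, 150, 299):
    print(e, round(lr_at(e), 6))
```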

The DUT-Anti-UAV dataset comprises 5243 training images, 2245 validation images, and 2621 test images. Its most significant characteristic is the high proportion of small targets. The average target area ratio is 0.013, the minimum is $1.9 \times 10^{-6}$, and the largest target occupies 0.7 of the entire image. Most targets occupy less than 0.05 of the image area.

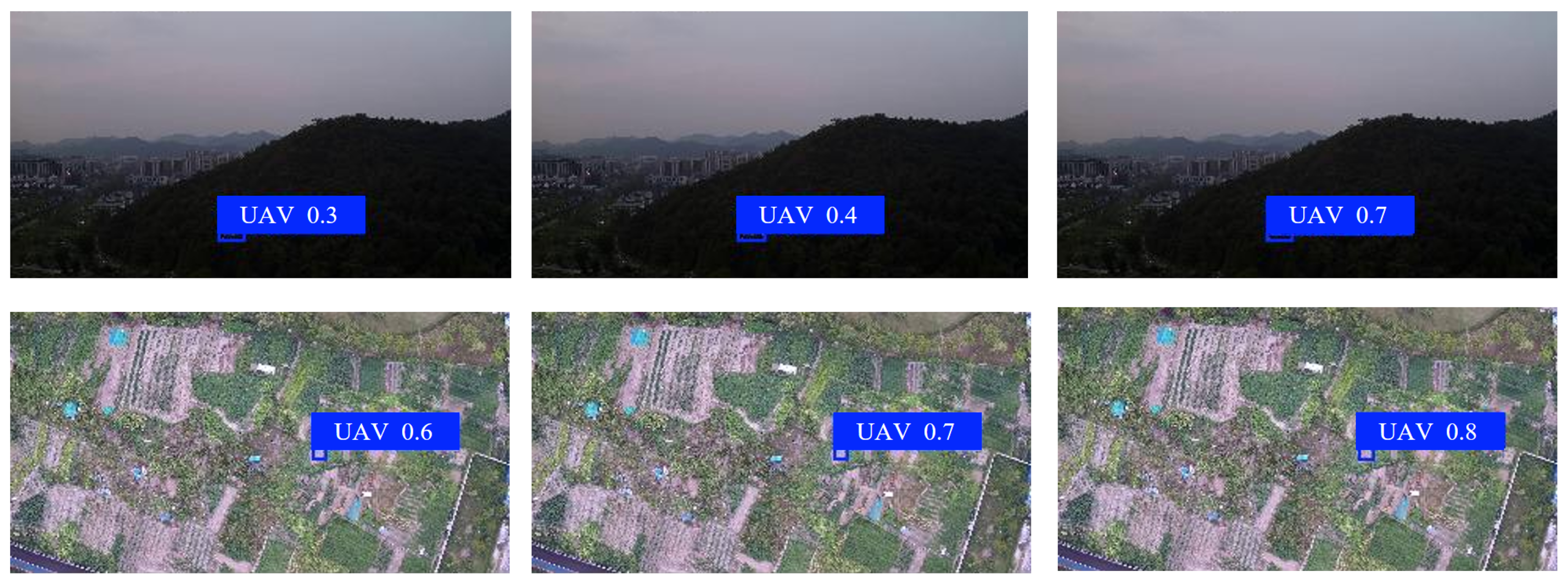

Figure 3 illustrates several typical scenarios from the DUT-Anti-UAV dataset.

In this paper, the performance of the anti-UAV detection model is evaluated using common evaluation metrics. Recall (R) is the fraction of ground-truth targets that are correctly detected, Precision (P) is the fraction of predicted detections that are correct, and mean Average Precision (mAP) summarizes overall detection accuracy. These metrics are calculated as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN},$$

$$AP = \int_{0}^{1} P(R)\, dR, \qquad mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i,$$

where TP, FP, FN, and AP represent True Positives, False Positives, False Negatives, and Average Precision, respectively, and N is the number of object categories. Following common practice, mAP50 (also written mAP@0.5) is computed at an IoU threshold of 0.5, and mAP50-95 averages mAP over IoU thresholds from 0.5 to 0.95 in steps of 0.05.
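To make these definitions concrete, the sketch below computes precision, recall, and AP for a single class from a ranked list of detections. It assumes each detection has already been matched to ground truth at a fixed IoU threshold (e.g., 0.5 for mAP@0.5) and uses all-point interpolation of the precision envelope. The paper does not state which interpolation scheme it uses (COCO-style evaluation, for instance, samples 101 recall points), so values may differ slightly from reported ones. Function and argument names are hypothetical.

```python
import numpy as np

def precision_recall_ap(scores, is_tp, num_gt):
    """Single-class precision/recall curve and AP (all-point interpolation).

    scores: confidence of each detection.
    is_tp:  1 if the detection matched a not-yet-matched ground-truth box at the
            chosen IoU threshold (e.g. 0.5), else 0.
    num_gt: number of ground-truth targets (TP + FN).
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")  # high to low
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum = np.cumsum(tp)            # TP count at each confidence cut-off
    fp_cum = np.cumsum(1.0 - tp)      # FP count at each confidence cut-off
    recall = tp_cum / num_gt                  # R = TP / (TP + FN)
    precision = tp_cum / (tp_cum + fp_cum)    # P = TP / (TP + FP)

    # Pad the curve, then make precision monotonically non-increasing (the envelope).
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([1.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    # Sum rectangle areas wherever recall changes: this approximates the integral of P(R).
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    ap = float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))
    return precision, recall, ap

# Hypothetical example: 4 detections, 4 ground-truth UAVs.
precision, recall, ap = precision_recall_ap([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 1], num_gt=4)
```

With a single UAV class, mAP@0.5 equals this AP; mAP@0.5:0.95 would repeat the matching and AP computation at each of the ten IoU thresholds and average the results.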

4.2. Ablation Study

To validate the design of the proposed model, a series of ablation studies was conducted on the DUT-Anti-UAV dataset; detailed experimental results are presented in Table 1. Using the YOLOv12n [11] model as the baseline, the experiments evaluated the effectiveness of the CNFI and NFE modules. Introducing CNFI alone yielded a slight improvement: Precision, Recall, and mAP50 increased by 0.30%, 0.33%, and 0.71%, respectively. Introducing NFE alone yielded larger gains in Precision and Recall (0.51% and 0.66%, respectively) and a comparable gain of 0.63% in mAP50. When both CNFI and NFE were incorporated, the model achieved the best performance, improving over the baseline by 0.93% in Precision, 1.09% in Recall, and 2.12% in mAP50. This combined mAP50 gain exceeds the sum of the individual gains (0.71% + 0.63% = 1.34%), suggesting that the two modules are complementary: CNFI provides explicit cross-scale non-local interaction, and NFE provides structure-aware enhancement. Unlike conventional FPN/PAN/BiFPN-style add/concat fusion or scale-wise gating, which remains largely local, our design directly models long-range cross-scale dependencies (via a shared multi-scale key–value) and enables boundary-sensitive discrimination, which is particularly relevant for anti-UAV detection.
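To illustrate what a shared multi-scale key–value means, the following NumPy sketch lets every pyramid level attend to tokens pooled from all levels. It is a simplified, hypothetical illustration rather than the authors' CNFI: it assumes all levels have already been projected to the same channel count C (as FPN/PAN necks typically do with lateral convolutions), uses single-head scaled dot-product attention with random projection matrices `w_q`, `w_k`, and `w_v`, and adds the attended features back through a residual connection.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_scale_nonlocal(feats, w_q, w_k, w_v):
    """feats: list of (C, H_i, W_i) maps; returns updated maps with the same shapes."""
    # Shared key-value bank: flatten every scale into tokens and concatenate them.
    tokens = np.concatenate([f.reshape(f.shape[0], -1).T for f in feats], axis=0)  # (N, C)
    keys = tokens @ w_k      # (N, d)
    values = tokens @ w_v    # (N, C)
    scale = np.sqrt(keys.shape[1])
    out = []
    for f in feats:
        c, h, w = f.shape
        queries = f.reshape(c, -1).T @ w_q                  # (h*w, d), queries from this scale
        attn = softmax(queries @ keys.T / scale, axis=-1)   # (h*w, N), each row sums to 1
        attended = attn @ values                            # (h*w, C), mixes all scales
        out.append(f + attended.T.reshape(c, h, w))         # residual connection
    return out

rng = np.random.default_rng(0)
C, d = 8, 4
feats = [rng.standard_normal((C, s, s)) for s in (16, 8, 4)]  # illustrative fine-to-coarse levels
w_q = rng.standard_normal((C, d))
w_k = rng.standard_normal((C, d))
w_v = rng.standard_normal((C, C))
outputs = cross_scale_nonlocal(feats, w_q, w_k, w_v)
```

Because the key–value bank here holds 16² + 8² + 4² = 336 tokens, every query position directly sees every location at every scale. The cost grows with the total token count, which is consistent with the computational overhead acknowledged in the Conclusions.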

Although the numerical improvement of 2.12% may appear moderate, it is practically meaningful in real-world anti-UAV surveillance scenarios. UAV targets are typically extremely small, exhibit significant scale variation with distance, and are often embedded in cluttered backgrounds such as vegetation, building contours, and clouds. Under such high-risk, low-tolerance conditions, a consistent gain in mAP@0.5 indicates a better precision–recall trade-off across confidence thresholds, meaning that the detector captures more challenging UAV instances while still localizing them with an IoU of at least 0.5.

To further validate the effectiveness of CNFI, detection results for targets at different scales were compared. As shown in Figure 4, without CNFI the model primarily detected large-scale targets and was less accurate on small targets, whereas with CNFI it detected targets across various scales. These comparative results indicate that the proposed CNFI, by facilitating thorough interaction among multi-scale features, effectively captures targets of different sizes and is robust in small-target scenarios.

To verify the efficacy of NFE, detection results before and after its introduction were compared in complex scenarios. Figure 5 illustrates that in complex scenes, the baseline model's detection accuracy was suboptimal because the details of UAV targets were indistinct, whereas incorporating NFE significantly improved detection accuracy. This demonstrates that the proposed NFE enhances detection performance in complex scenarios.

Moreover, to further investigate the effectiveness of the proposed CNFI and NFE modules, we conduct ablation studies on the Det-Fly [48] dataset using an incremental validation approach. The quantitative results are summarized in Table 2.

Using YOLOv12n [11] without any proposed modules as the baseline, we first evaluate the individual contribution of the CNFI module (model-3). Interestingly, while Precision improves from 84.21% to 86.00%, Recall and mAP@0.5 decrease slightly, from 50.24% to 50.01% and from 55.65% to 55.52%, respectively. This suggests that CNFI alone mainly reduces false positives, but may require a complementary mechanism to maintain recall.

The benefit of CNFI becomes fully apparent when it is combined with NFE in our final model. As shown in Figure 6, the standalone CNFI in model-3 detects targets across multiple scales better than the baseline, particularly small UAVs that occupy less than 5% of the image area. The synergy between CNFI and NFE is most evident in complex scenarios: as illustrated in the second row of Figure 6, while model-3 with CNFI shows better scale adaptation, the complete model excels in challenging environments with complex backgrounds. The NFE module strengthens feature representation in these settings and complements CNFI's multi-scale fusion, yielding robust detection across the diverse environmental conditions of the Det-Fly dataset.

These results demonstrate that CNFI provides the foundation for multi-scale target detection, while its full potential is realized only in combination with NFE; together, the two modules address both scale variation and environmental complexity in aerial UAV detection.

To further validate the proposed NFE design and explicitly analyze the contribution of its core components, we conduct an ablation study with dedicated control variants on the Det-Fly dataset. Specifically, we construct a variant (model-4) that incorporates only the non-local gradient aggregation module (NGA) without the gradient enhancement convolution (GEC), with model-3 serving as the baseline.

As reported in Table 3, introducing NGA alone significantly improves detection performance over the baseline, indicating the importance of non-local semantic aggregation for suppressing background interference. More importantly, when GEC is further fused with NGA, the full NFE module achieves consistent and substantial gains across all metrics, including Precision, mAP@0.5, and mAP@0.5–0.9. In particular, the joint design improves mAP@0.5 from 64.62 to 72.12, demonstrating that combining gradient-aware structural cues with non-local semantic context is more effective than using either component in isolation. These results confirm that the performance improvement of NFE stems from the synergistic fusion of gradient and non-local features rather than from simply adding extra modules, thereby validating the core design motivation of NFE.
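The idea of fusing gradient-based structural cues with non-local context can be illustrated with a toy example. The NumPy sketch below is hypothetical and is not the authors' NFE: it uses a fixed Sobel operator as a stand-in for a learned gradient enhancement convolution, a single global self-attention step with identity projections as a stand-in for non-local aggregation, and a simple additive fusion with an assumed weight `gamma`.

```python
import numpy as np

def sobel_magnitude(x):
    """Per-channel Sobel gradient magnitude of a (C, H, W) map, with edge padding."""
    p = np.pad(x, ((0, 0), (1, 1), (1, 1)), mode="edge")
    # Horizontal gradient: right column minus left column of each 3x3 window.
    gx = (p[:, :-2, 2:] + 2 * p[:, 1:-1, 2:] + p[:, 2:, 2:]) - (
        p[:, :-2, :-2] + 2 * p[:, 1:-1, :-2] + p[:, 2:, :-2])
    # Vertical gradient: bottom row minus top row of each 3x3 window.
    gy = (p[:, 2:, :-2] + 2 * p[:, 2:, 1:-1] + p[:, 2:, 2:]) - (
        p[:, :-2, :-2] + 2 * p[:, :-2, 1:-1] + p[:, :-2, 2:])
    return np.sqrt(gx ** 2 + gy ** 2)

def nonlocal_context(x):
    """Global self-attention over all spatial positions (identity projections for brevity)."""
    c, h, w = x.shape
    t = x.reshape(c, -1).T                      # (HW, C) tokens
    logits = t @ t.T / np.sqrt(c)
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    attn = np.exp(logits)
    attn /= attn.sum(axis=1, keepdims=True)      # each row sums to 1
    return (attn @ t).T.reshape(c, h, w)

def gradient_nonlocal_fusion(x, gamma=0.5):
    """Hypothetical additive fusion: input + non-local context + weighted edge cues."""
    return x + nonlocal_context(x) + gamma * sobel_magnitude(x)

x = np.zeros((1, 8, 8))
x[:, :, 4:] = 1.0  # vertical step edge between columns 3 and 4
edges = sobel_magnitude(x)
fused = gradient_nonlocal_fusion(x)
```

On this step edge the gradient branch responds only at the boundary columns, which is the kind of boundary and shape information the text attributes to the gradient component, while the non-local branch contributes image-wide context at every position.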

4.3. Comparative Experiments

To validate the performance of the proposed model, comparative experiments were conducted against YOLOv7-tiny [

49], YOLOv8n [

9], EDTC [

50], Complex-GS [

27], DMA-YOLO [

51], and YOLOv12n [

11] on the DUT-Anti-UAV dataset. The results in

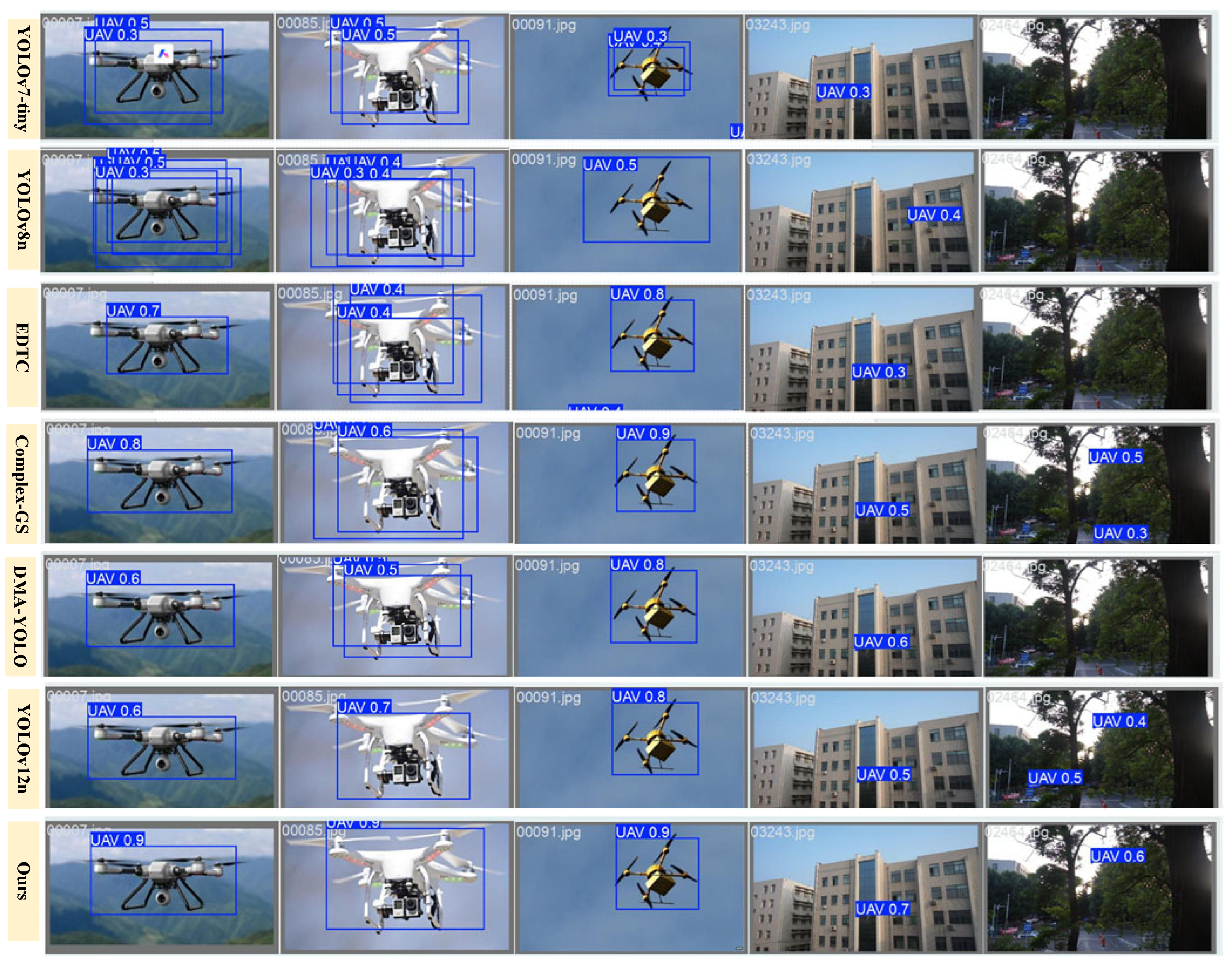

Table 4 indicate that the proposed model exhibits a significant advantage in terms of object detection performance. Specifically, the proposed model achieved the best results across four key metrics: Precision, Recall, mAP50, and mAP50-95. Notably, the proposed model demonstrated a remarkable performance advantage on the mAP0.5 metric, surpassing the suboptimal model by 2.12%. As for computational efficiency, our method achieves a moderate level of runtime performance, with its FPS, parameter count, and FLOPs ranking in the mid-range among the compared approaches.
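FPS values such as those compared in Table 4 are typically obtained by timing repeated forward passes after a warm-up phase. The paper does not describe its timing protocol, so the harness below is an assumption: `infer` is a hypothetical callable wrapping the detector, and batch size, pre-/post-processing, and numeric precision all affect the measured figure.

```python
import time

def measure_fps(infer, inputs, warmup=10, runs=100, sync=None):
    """Average calls per second of `infer(inputs)` over `runs` calls after `warmup` calls.

    `sync` is an optional callable that blocks until queued device work has finished
    (e.g. torch.cuda.synchronize on a GPU); without it, asynchronously launched GPU
    kernels would make the timing look faster than it really is.
    """
    for _ in range(warmup):
        infer(inputs)          # warm-up: caches, lazy initialization, clock ramp-up
    if sync is not None:
        sync()
    start = time.perf_counter()
    for _ in range(runs):
        infer(inputs)
    if sync is not None:
        sync()
    elapsed = time.perf_counter() - start
    return runs / elapsed

# Stand-in "model" that takes about 10 ms per call.
fps = measure_fps(lambda _: time.sleep(0.01), None, warmup=2, runs=20)
```

Reporting FPS for every model with the same harness, input size, and GPU, as the paper states it does for hardware, is what makes the mid-range ranking above comparable across methods.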

To demonstrate the effectiveness of our model in anti-UAV detection more comprehensively, Figure 7 presents comparative results with other models across several different scenarios. Against backgrounds such as steel towers and forests, our model achieves higher confidence scores, whereas against interfering backgrounds such as residential buildings, most other models failed to detect the UAV targets. This indicates that our model performs better in anti-UAV detection under complex background conditions.

Besides the DUT-Anti-UAV dataset, we further conduct comparisons on the Det-Fly dataset to validate the generalizability of our method under more challenging conditions. As summarized in Table 5 and Figure 8, our approach achieves the best overall detection accuracy, yielding the highest Precision, Recall, and mAP among all compared methods, and remains robust under extreme conditions such as ultra-tiny objects. In particular, the consistent improvement over strong baselines (e.g., YOLOv12n) indicates that the proposed design is effective both at suppressing background-induced false alarms and at recovering weak true positives that are easily missed when UAV targets occupy only a few pixels.

The experimental results above show that the proposed model better fuses feature information from targets at different scales and simultaneously enhances target clarity through feature augmentation, thereby significantly improving detection accuracy. As a result, it outperforms the compared models in small UAV target detection.

6. Conclusions

This paper addresses the challenge of anti-UAV detection under extreme target scale variation and complex background interference. To this end, we propose an anti-UAV detection framework based on non-local feature learning, which integrates two key components: a Cross-scale Non-local Feature Interaction (CNFI) module and a Non-local Feature Enhancement (NFE) module.

The CNFI module explicitly establishes long-range dependencies across multi-scale feature maps via cross-scale non-local attention, enabling more effective global information interaction and improving the detection of small UAV targets. Meanwhile, the NFE module enhances feature discriminability in cluttered environments by fusing global non-local context with gradient-based structural cues, thereby reinforcing boundary and shape information.

Experimental results on the DUT-Anti-UAV and Det-Fly benchmark datasets demonstrate the effectiveness of the proposed method. Compared with the YOLOv12n baseline, our approach achieves improvements of 0.93% in Precision, 1.09% in Recall, and 2.12% in mAP@0.5 on DUT-Anti-UAV, and gains of +4.21% Precision, +15.87% Recall, and +16.47% mAP@0.5 on the Det-Fly dataset, confirming its robustness and generalization capability in diverse scenarios.

Despite these gains, the cross-scale non-local attention introduces additional computational overhead, and the current evaluation is limited to static image datasets. Future work will focus on lightweight attention designs to improve efficiency, and on extending the proposed framework to video-based anti-UAV detection and edge-device deployment.