Resuming Experiences in Human-Centered Design of Computer-Assisted Knowledge Work Processes

Abstract

1. Multi-Agent Systems

2. Relevant Findings from Sociotechnical Systems Research

- be practicable and reasonable,

- not do any harm or impair well-being, and

- particularly enhance the human working capacity—as an epitome of experience, capabilities and learning—as a specific human strength to be developed.

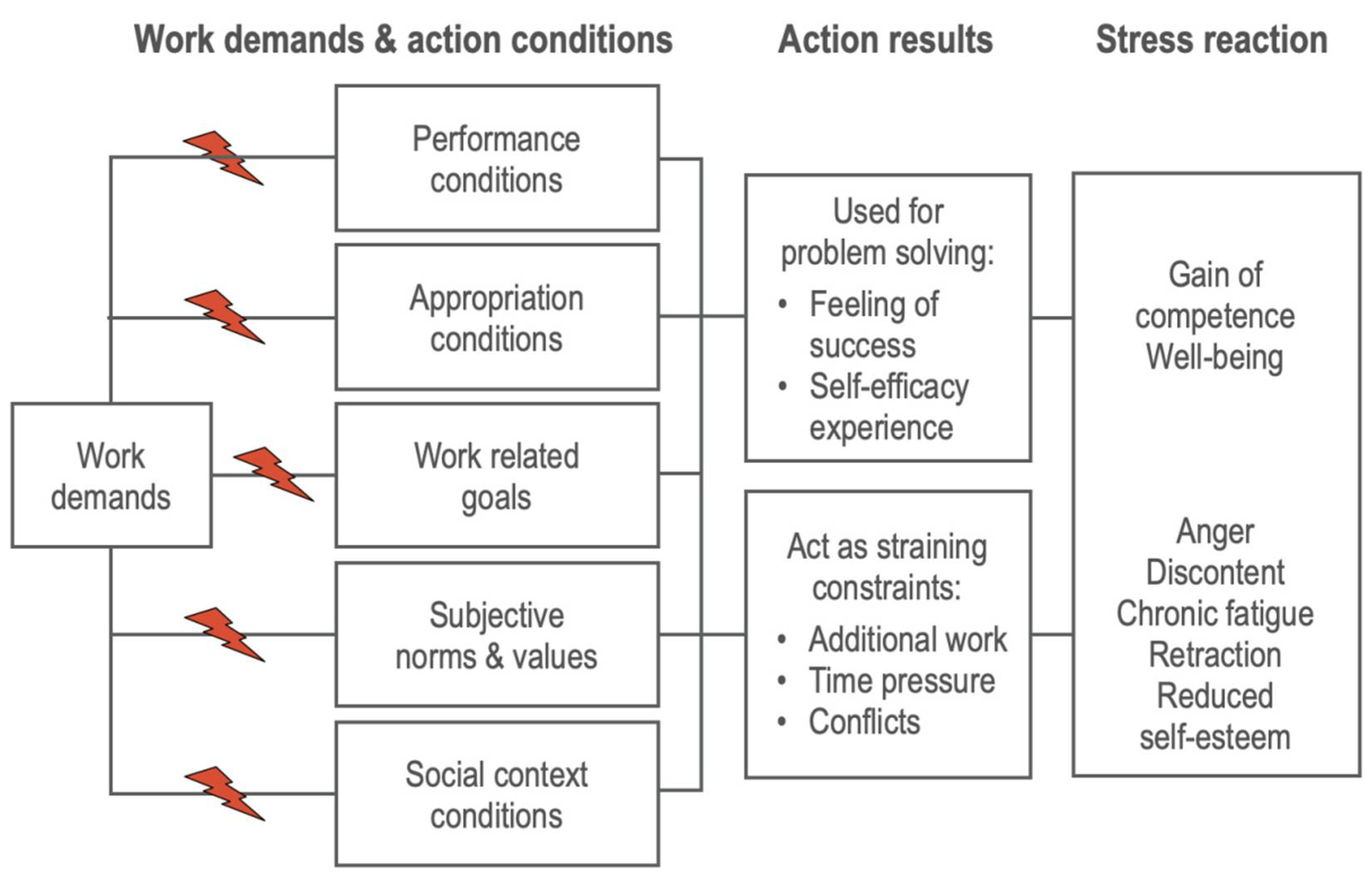

3. Contradictory Work Demands Produce Mental Stress

- High demands for coping with given complex tasks are persistently disturbed by uncertain system reactions.

- It is difficult or impossible for the workers to retrace and, hence, to understand the systems’ situated reactions.

- Workers are thus hindered from learning from experience, from sufficiently appropriating the system’s functionalities, and from enhancing their skills.

- Frequently, workers are held accountable for failures and the resulting damage, despite their loss of control.

- These uncomfortable situations regularly subject workers to the pressure of contradictory work demands, causing mental stress or even disorders.

- Contradictions between tasks and executing conditions restrict action regulation and learning options, because inadequate tools cause additional effort.

- Conflicts between tasks and learning conditions hinder workers from obtaining the necessary knowledge of the artifact’s technical functions.

- Contradicting project objectives put workers in »double loyalty« conflicts between different but equally important expectations to act.

- Contradictions between work-related and individual values put workers in conflict between project objectives and professional behavior or standards.

- non-transparent system behavior,

- hardly explainable system behavior,

- uncertain results due to biased input data whose quality cannot be assessed,

- misguided or unrealistic expectations of system performance.

- being compelled to blindly trust the systems’ outcomes without any chance to assess them for themselves;

- being hindered from fully appropriating the systems’ functions for unrestricted instrumental use, which violates the basic »expectation conformity« requirement (EN ISO 9241-11);

- often being left in the dark about who is accountable for possible failures.

4. Conclusions

- In order to avoid contradictory demands, the implementation and use of adaptive systems should be concentrated on tasks that can reasonably be fully automated—the results of which, however, need to be blindly trusted.

- In operation with skilled knowledge workers, the use of adaptive systems should be avoided for the reasons explicated—as long as self-explaining systems are missing.

- Research efforts for adaptive systems should be concentrated on the development and implementation of facilities to explain questionable system behavior on demand.

- Like other high-risk technologies, adaptive systems should be submitted to publicly controlled certification procedures before deployment.

- In cases of failure, accountability regulations and practices need to be based on comprehensive scrutiny rather than on simply ascribing the failure to human error.

- Consequently, capacities for comprehensively scrutinizing system failures need to be implemented.

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Brödner, P. Resuming Experiences in Human-Centered Design of Computer-Assisted Knowledge Work Processes. Proceedings 2022, 81, 80. https://doi.org/10.3390/proceedings2022081080