2. Shannon’s Equation Revisited

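Shannon’s equation and a consolidated tradition have led many scientists and laymen to consider information as some kind of physical, additive quantity. The standard formula that all schoolboys learn is the familiar sum of products between probabilities and their logarithms [1]:

$$H(X) = -\sum_{i=1}^{N} p_i \log p_i \qquad (1)$$

where $p_i$ is the probability of the $i$-th of the $N$ outcomes of the random variable $X$.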
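As a baseline for the reformulations that follow, Equation (1) can be evaluated directly. This is a minimal Python sketch that assumes base-2 logarithms (so $H$ is in bits). The helper name `shannon_entropy` is hypothetical.

```python
import math

def shannon_entropy(probs):
    """Equation (1): H(X) = -sum_i p_i * log2(p_i), in bits.

    Terms with p_i == 0 are skipped, following the convention 0 * log 0 = 0.
    """
    return -sum(p * math.log2(p) for p in probs if p > 0)

print(shannon_entropy([0.5, 0.5]))  # 1.0
print(shannon_entropy([0.25] * 4))  # 2.0
```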

The aim of this paper is to show that the above function (1) can be rewritten in terms of standard probability theory, and that it therefore does not entail any ontological commitment. Namely, it is possible to show that:

$$H(X) = -\log \sqrt[M]{\prod_{j=1}^{M} q_j} \qquad (2)$$

where $(q_1, \ldots, q_M)$ maps the probabilities, $(p_1, \ldots, p_N)$, of the random variable, $X$, using a method that will be described below. It can be shown that Equations (1) and (2) are mathematically equivalent, in the sense that they return the same value of $H$ for every distribution with rational probabilities. Since Equation (2) is a function of a product of probabilities, it follows that $H$ expresses, logarithmically, the probability of independent events, i.e., $P(AB) = P(A)P(B)$. Thus, by Ockham’s principle, if Equation (2) were valid, it would drain Equation (1) of all ontological commitments: information as a real quantity could be dismissed.
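A short worked identity makes the link with independent events explicit. It assumes base-2 logarithms and takes Equation (2) in the form $H(X) = -\frac{1}{M}\log_2 \prod_{j=1}^{M} q_j$. For independent events, the logarithm turns a product of probabilities into a sum. Exponentiating Equation (2) therefore recovers a plain product of probabilities:

$$-\log_2 P(AB) = -\log_2\big(P(A)\,P(B)\big) = -\log_2 P(A) - \log_2 P(B)$$

$$\prod_{j=1}^{M} q_j = 2^{-M\,H(X)}$$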

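A few steps show how Equation (2) can be derived from Equation (1). First, each $p_i$ can be moved into the exponent ($p_i \log p_i = \log p_i^{p_i}$), and thus the sum can be reformulated as the logarithm of a product with exponents:

$$H(X) = -\log \prod_{i=1}^{N} p_i^{p_i} \qquad (3)$$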
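The equivalence of the sum form and the product form can be checked numerically. This is a minimal sketch that assumes base-2 logarithms. The helper name `entropy_as_product` is hypothetical.

```python
import math

def entropy_as_product(probs):
    """Equation (3): H(X) = -log2( prod_i p_i ** p_i ), in bits.

    Uses p * log(p) == log(p ** p) and log(a) + log(b) == log(a * b),
    so the sum of Equation (1) collapses into one logarithm of a product.
    Zero probabilities are skipped, matching the convention 0 * log 0 = 0.
    """
    return -math.log2(math.prod(p ** p for p in probs if p > 0))

probs = [0.5, 0.25, 0.25]
direct = -sum(p * math.log2(p) for p in probs)   # Equation (1)
print(direct, entropy_as_product(probs))          # both ≈ 1.5
```

The product inside the logarithm equals $2^{-H(X)}$. It therefore always lies between $1/N$ and $1$.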

Equation (3) begins to take some of the spell away. The next step consists of removing exponents and suggesting suitable independent events. Let

d be the greatest common factor among all

(assuming

,

,

d is the greatest rational, such that

). By means of

d, Equation (3) can then be rewritten as (

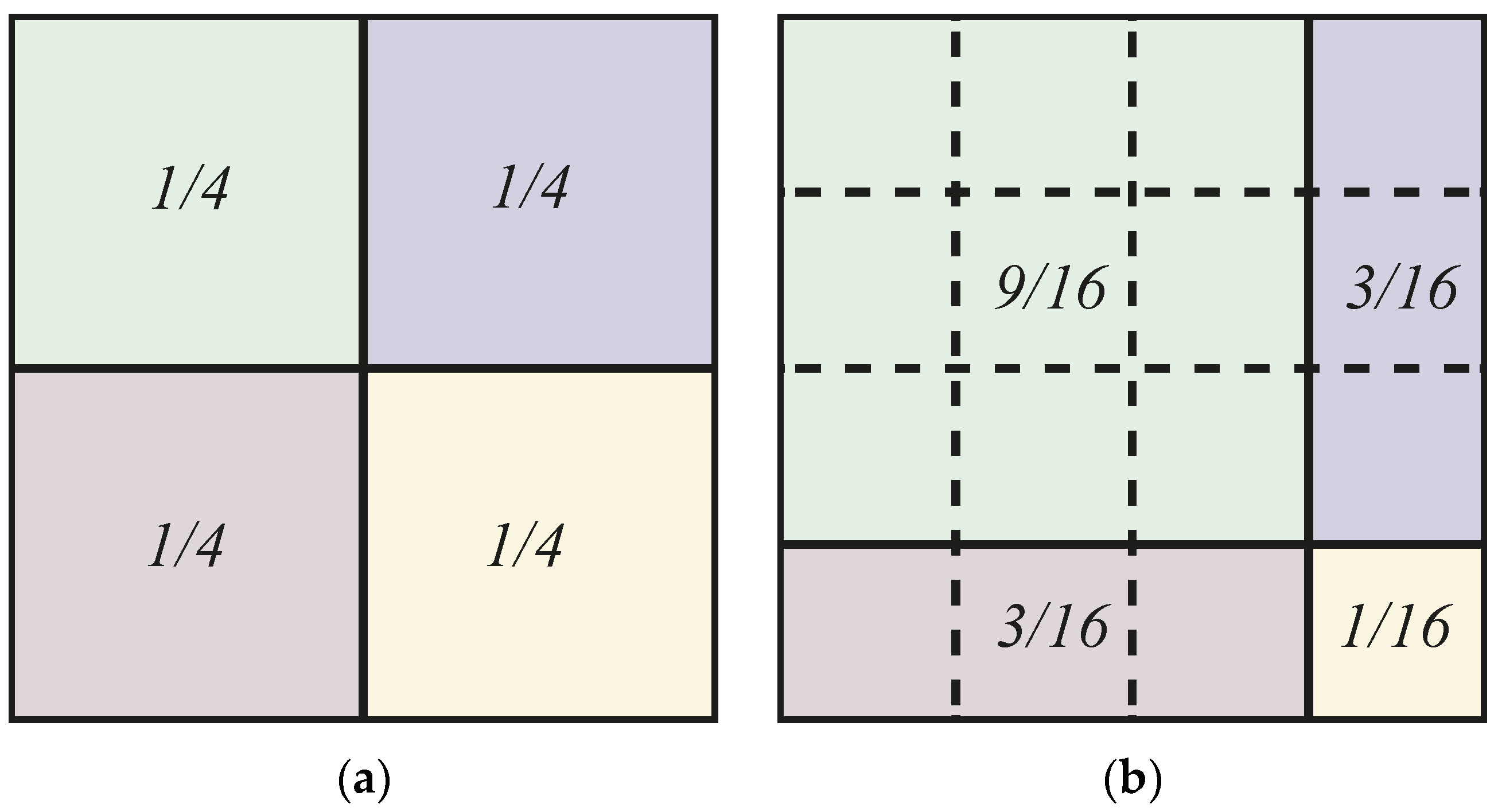

Figure 1):
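Finding $d$ is ordinary arithmetic on fractions. Bring all $p_i$ to a common denominator, then take the greatest common divisor of the numerators. This sketch assumes that all probabilities are positive rationals, given as Python `Fraction`s. The helper `greatest_common_factor` is hypothetical, and `math.lcm` and multi-argument `math.gcd` need Python 3.9 or later.

```python
from fractions import Fraction
import math

def greatest_common_factor(probs):
    """Return (d, ks): the greatest rational d with p_i = k_i * d, k_i positive integers.

    Assumes every p_i is a positive Fraction.
    """
    # Common denominator of all p_i, so that p_i = n_i / den with integer n_i
    den = math.lcm(*(p.denominator for p in probs))
    nums = [p.numerator * (den // p.denominator) for p in probs]
    # d = gcd(n_1, ..., n_N) / den is the greatest rational dividing every p_i
    g = math.gcd(*nums)
    return Fraction(g, den), [n // g for n in nums]

print(greatest_common_factor([Fraction(3, 4), Fraction(1, 4)]))
# (Fraction(1, 4), [3, 1])
```

The probabilities sum to 1, so the returned $k_i$ always sum to $1/d$.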

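Here, each factor $p_i^{k_i}$ is rewritten as a product, $p_i^{k_i} = \prod_{j=1}^{k_i} p_i$, i.e., $p_i$ multiplied by itself $k_i = p_i/d$ times. Of course, if $k_i = 1$, then $p_i^{k_i} = p_i$. Thus, each $p_i^{k_i}$ can be reinterpreted as, and thus substituted with, a series of $k_i$ independent events, $e_j$, each having a probability $q_j = p_i$. The set of probabilities, $Q = (q_1, \ldots, q_M)$, is built by replicating each $p_i$ exactly $k_i$ times, so that it contains $M = \sum_{i=1}^{N} k_i$ elements. Equation (3) can be rewritten as:

$$H(X) = -d \log \prod_{j=1}^{M} q_j \qquad (5)$$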
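The replication step can be sketched in Python with exact fractions. The sketch assumes $d$ is already known; here $d = 1/4$ for the distribution $(3/4, 1/4)$, used for illustration. `remap_to_independent_events` is a hypothetical helper name. The code builds $Q$, checks that it has $M = 1/d$ elements, and evaluates $H = -d\log_2\prod_j q_j$.

```python
from fractions import Fraction
import math

def remap_to_independent_events(probs, d):
    """Build Q by replicating each p_i exactly k_i = p_i / d times.

    Assumes d divides every p_i exactly (e.g. d is their greatest common factor).
    """
    q = []
    for p in probs:
        k = p / d
        if k.denominator != 1:
            raise ValueError(f"d = {d} does not divide p = {p}")
        q.extend([p] * k.numerator)
    return q

probs = [Fraction(3, 4), Fraction(1, 4)]
d = Fraction(1, 4)
q = remap_to_independent_events(probs, d)
print(q)  # [Fraction(3, 4), Fraction(3, 4), Fraction(3, 4), Fraction(1, 4)]

M = len(q)
assert d == Fraction(1, M)        # M = sum_i k_i = 1 / d

prod_q = math.prod(q)             # exact: Fraction(27, 256)
h = -d * (math.log2(prod_q.numerator) - math.log2(prod_q.denominator))
print(h)                          # ≈ 0.8113 bits
```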

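Additionally, since $\sum_{i} p_i = d \sum_{i} k_i = dM = 1$, i.e., $d = 1/M$, it is trivial to obtain Equation (2). The derivation from Equation (1) to Equation (2) might appear complicated, but it is not. For instance, if the elements are equiprobable symbols ($p_i = 1/N$), then $d = 1/N$, every $k_i = 1$, $M = N$ and $\prod_{j} q_j = N^{-N}$. Hartley’s familiar expression, $H = \log N$, follows [2]. An important caveat: $\prod_{i=1}^{N} p_i^{p_i} = \sqrt[M]{\prod_{j=1}^{M} q_j}$ does not correspond to the probability of any event, whereas $\prod_{j=1}^{M} q_j$ does. In fact, $\prod_{j=1}^{M} q_j$ is the probability of any single sequence of $M$ independent events whose frequencies match exactly those of the outcomes of $X$, i.e., in which each outcome $x_i$ occurs exactly $k_i$ times (Figure 2). In the future, it would be interesting to check whether this proposal might be extended to the continuous case, which would make the argument even more compelling.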
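The whole pipeline, from the $p_i$ to Equation (2), fits in a few lines. This sketch assumes positive rational probabilities given as Python `Fraction`s and base-2 logarithms. The names `entropy_eq1` and `entropy_eq2` are hypothetical. The code compares Equation (2) with Equation (1) on two distributions, then reproduces Hartley’s expression for eight equiprobable symbols.

```python
from fractions import Fraction
import math

def entropy_eq1(probs):
    """Equation (1): H(X) = -sum_i p_i log2 p_i."""
    return -sum(float(p) * math.log2(float(p)) for p in probs)

def entropy_eq2(probs):
    """Equation (2): H(X) = -(1/M) log2 prod_j q_j, i.e. -log2 of the M-th root.

    Assumes positive rational probabilities (Fractions) that sum to 1.
    """
    # d = greatest common factor of the p_i, computed on a common denominator
    den = math.lcm(*(p.denominator for p in probs))
    nums = [p.numerator * (den // p.denominator) for p in probs]
    g = math.gcd(*nums)
    ks = [n // g for n in nums]   # k_i = p_i / d
    m = sum(ks)                   # M = sum_i k_i = 1 / d
    # Q replicates each p_i exactly k_i times; multiply its elements exactly
    q = [p for p, k in zip(probs, ks) for _ in range(k)]
    prod_q = math.prod(q)         # a Fraction, so no rounding yet
    # log2 of numerator and denominator separately avoids float underflow for large M
    return -(math.log2(prod_q.numerator) - math.log2(prod_q.denominator)) / m

for probs in ([Fraction(3, 4), Fraction(1, 4)],
              [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]):
    print(entropy_eq1(probs), entropy_eq2(probs))   # equal up to float rounding

# Hartley's case: N equiprobable symbols give log2 N
print(entropy_eq2([Fraction(1, 8)] * 8))   # 3.0
```

The code keeps the product of $Q$ as an exact `Fraction`. A floating-point product of many probabilities would underflow to zero for large $M$, even though its logarithm is perfectly well defined.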

Consider a handful of numerical examples.

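First, suppose there are two outcomes with probabilities $p_1$ and $p_2$. Then $d$ is their greatest common factor, i.e., the greatest rational number such that $p_1 = k_1 d$ and $p_2 = k_2 d$ with $k_1, k_2 \in \mathbb{N}$. In fact, $-p_1\log p_1 - p_2\log p_2 = -d\log\left(p_1^{k_1}p_2^{k_2}\right)$.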

Second, suppose there are three outcomes, $x_1, x_2, x_3$, with probabilities $p_1, p_2, p_3$, N = 3. Their greatest common factor is $d = 1/6$. Replicating each element according to the ratio $k_i = p_i/d$ yields a set, $Q$, of $M = 1/d = 6$ elements, each having the probability of the outcome it replicates. Equation (1) and Equation (2) then return the same value of $H$.

Finally, suppose there are two outcomes with probabilities $(3/4, 1/4)$, N = 2. Then, $d = 1/4$, M = 4, $Q = (3/4, 3/4, 3/4, 1/4)$ and $\prod_{j=1}^{4} q_j = 27/256$. This is the probability of any single sequence of M outcomes of the kind 0001, 0010, 0100, or 1000 (or whatever matches X’s profile).
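The claim that $\prod_j q_j$ is the probability of any single sequence matching X’s profile can be checked by brute force for the third example. The sketch assumes independent draws, with outcome 0 having probability 3/4 and outcome 1 having probability 1/4. It enumerates all $2^4$ binary sequences and keeps those with three 0s and one 1. For each kept sequence, it multiplies the per-symbol probabilities.

```python
from fractions import Fraction
import itertools
import math

# Third example: P(0) = 3/4, P(1) = 1/4, hence d = 1/4, M = 4, k = (3, 1)
p = {"0": Fraction(3, 4), "1": Fraction(1, 4)}
M = 4
profile = {"0": 3, "1": 1}   # how many times each outcome must occur (the k_i)

matching = []
for seq in itertools.product("01", repeat=M):
    if all(seq.count(s) == k for s, k in profile.items()):
        # independent draws: the sequence probability is the product of per-symbol probabilities
        matching.append(("".join(seq), math.prod(p[s] for s in seq)))

for s, prob in matching:
    print(s, prob)
# 0001 27/256
# 0010 27/256
# 0100 27/256
# 1000 27/256

print("any of them:", sum(prob for _, prob in matching))   # any of them: 27/64
```

Each matching sequence has probability 27/256, which equals $\prod_j q_j$. The event "some sequence with this profile occurs" has the larger probability 27/64. The product therefore refers to one particular sequence.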

3. Discussion

Is information something real or not? In many fields, the notion that information is a physical quantity has led scientists to debatable conclusions about both its nature and its role [3,4]. Many arguments have been advanced both in favour of and against the reality of information. It is fair to maintain that information cannot be measured directly, as other, less problematic physical quantities (say, mass and charge) can be. It is impossible to tell how much information is contained in a physical system unless more knowledge about the relation between that physical system and another system is available. It is also disputable whether information has any real causal efficacy, or whether it is an epiphenomenal notion, as is the centre of mass. The well-known argument in favour of information as a physical quantity has not reached any definitive conclusion [5]. In this context, much has been derived from Equation (1), which, as Shannon himself stated, was introduced mostly because “the logarithmic measure is more convenient […] it is practically more useful […] it is mathematically more suitable […] it is nearer to our intuitive feelings” [1]. Shannon hardly provides conclusive arguments for the physical existence of information.

An interesting outcome of Equation (2) is that it is achieved by remapping events with different probabilities over multiple equiprobable events. This suggests that, as happens with entropy, there are no intrinsically more probable configurations. Rather, there are many indistinguishable configurations that are mapped onto convenient “more probable” states. Therefore, Equation (2), together with the intermediate steps that lead to it, is a way to unpack one’s limited knowledge or perspective about physical states. It represents those states in a neutral way in which each state is just as probable.
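A standard identity makes the remapping onto equiprobable events concrete. It is offered here as an illustrative gloss, not as part of the original derivation. Since $p_i = k_i d = k_i/M$, one can imagine $M$ equiprobable micro-events of probability $1/M$, of which $k_i$ are lumped into outcome $i$. Then:

$$H(X) = -\sum_{i=1}^{N} p_i \log \frac{k_i}{M} = \log M - \sum_{i=1}^{N} p_i \log k_i$$

The first term is the entropy of $M$ equiprobable micro-events. The second is the average logarithm of the number of indistinguishable micro-events mapped onto each observed outcome. For $(3/4, 1/4)$, with $M = 4$ and $k = (3, 1)$, this gives $H = 2 - \tfrac{3}{4}\log_2 3 \approx 0.811$ bits. This is the same value Equation (1) gives.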

This is not to say that the notion of information is not a very successful and useful one. Yet the existence of an alternative formula that requires no ontological commitment to information makes a strong case against its existence: the existence of information does not seem to make any difference. Equations (1) and (2) causally overdetermine what a system does. Since Equation (1) is ontologically less parsimonious, Equation (2) is to be preferred by Ockham’s razor.

In this contribution, Equation (2) expresses H in terms of the product of the probabilities of independent events. Therefore, if Equation (2) were used in place of Equation (1), despite being more cumbersome, there would be no need to add any new entity: information is simply a convenient way to express the probability of different configurations of a physical system. Equation (2) is mathematically equivalent to Equation (1) and requires nothing but probabilities. It follows that Equation (2) provides a mathematical proof that information does not exist except as a useful conceptual tool.