Abstract

In this work, we propose an autonomous system for monitoring the daily routine of an elderly person. The system, named SARDAM (Service Assistant Robot for Daily Activity Monitoring), uses a humanoid robot as the key element that interacts directly with the user. The purpose of SARDAM is to keep the user active for as long as possible by suggesting and monitoring a series of daily tasks and healthy habits prescribed by a health professional, in order to delay the onset of cognitive and motor impairment. The current version of SARDAM uses the NAO humanoid robot, which interacts naturally with the user through vision and speech libraries. To ensure that the user’s daily tasks are carried out appropriately, a module for emotion detection has been incorporated so that corrective tasks can be proposed according to the detected emotion. SARDAM was tested in a scenario with a real user, yielding successful results and positive feedback from the user that encourage further work.

1. Introduction

Socially Assistive Robotics (SAR) is a rapidly growing field, driven by the need to care for the increasing number of elderly people and people who need special treatment. One of the main goals of SAR is to help users maintain an independent lifestyle for as long as possible with the help of a robot. Robots of this type provide physical therapy and daily assistance, as well as emotional support to the user, among other functions [1]. In elderly care, SAR robots provide physical and cognitive care, monitoring, and training to improve people’s quality of life [2].

There are currently several relevant projects in this field, such as Pearl [3], from the Nursebot project, which mainly reminds the user of the daily activities to be carried out and guides him/her through the environment. Another SAR system is ENRICHME [4], which uses a customized version of the TIAGo robot; its main goal is to provide home assistance, health monitoring, complementary care, and social support to improve the quality of life of the elderly person.

SARDAM, the prototype proposed in this paper, is a monitoring system of the daily routine of an elderly person that uses the NAO robot. It is based on a set of activities that are executed following a daily schedule, which is customized for the user, and which is organized depending on a set of priorities as established by a health professional. SARDAM’s main goal is to remind the user of the activities he/she has to do at the right time, emphasising those with the highest priority, and using other activities as a resource to maintain an optimal routine. SARDAM suggests the activities according to the user’s emotional state, adding, when necessary, some special activities to improve it and encourage the user to follow the proper routine.

2. SARDAM’s Architecture

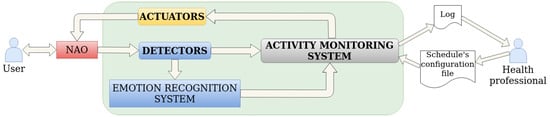

Figure 1 shows SARDAM’s architecture, which consists of five main modules. Starting from the top, the user and the NAO robot interact with each other. From this interaction, the robot’s sensor data are passed to the detectors, which process the information. These detectors cover people detection and recognition, speech recognition, face emotion recognition, touch detection, and object detection. The processed data are then used by the activity monitoring system and the emotion recognition system. The activity monitoring system executes the daily schedule and records data about the person’s activity in a log file. During the execution of the activities, it sends commands to the robot’s actuators, depending on the interaction required. These actuators are in charge of NAO’s behaviour. The suggestion of each activity depends on the person’s emotional state. If the system detects that the user is not feeling well enough to carry out the activity, it tries to encourage him/her with a corrective activity for a short period of time.

Figure 1. SARDAM’s architecture.

2.1. The Emotion Recognition System

The emotion recognition system predicts the user’s mood and provides it to the activity monitoring system whenever it is needed. To obtain this mood prediction, it merges the results of emotion recognition on video frames with those obtained from the speech detected in an audio recording. Face emotion recognition is performed with the FaceEmotion_ID library [5], while speech is detected in the audio with the Python library SpeechRecognition [6], through the Google Web Speech API.

To obtain the results from video, 10 frames are extracted and an emotion is labelled on each of them using FaceEmotion_ID. Once these labels (“Good mood”, “Normal” and “Bad mood”) and their confidence values are obtained, they are merged to give the final face emotion result. For the audio, speech recognition is performed first. The next step is to label each detected word with one of the same three labels. To do this, a bag of words was established for each label. Confidence values are also calculated for these labels.
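The two per-modality steps described above can be illustrated with a minimal sketch. It is not SARDAM's actual implementation: the word lists, the default to “Normal” for unknown words, the use of a mean to merge frames, and the function names `merge_frames`, `label_word` and `speech_confidences` are all assumptions made for illustration. The per-frame `(label, confidence)` pairs are assumed to have been produced already by the face emotion library.

```python
from collections import Counter

LABELS = ("Good mood", "Normal", "Bad mood")

# Hypothetical bags of words: the paper does not list the real vocabularies.
BAGS = {
    "Good mood": {"happy", "great", "good", "fine"},
    "Bad mood": {"sad", "tired", "bad", "alone"},
}


def merge_frames(frame_results):
    """Merge per-frame (label, confidence) pairs into one score per label.

    Assumption: the merge is a mean over all frames; a label that never
    appears gets a score of 0.0.
    """
    totals = {label: 0.0 for label in LABELS}
    for label, confidence in frame_results:
        totals[label] += confidence
    n = len(frame_results)
    return {label: (totals[label] / n if n else 0.0) for label in LABELS}


def label_word(word):
    """Return the label whose bag contains the word, else 'Normal' (assumed)."""
    cleaned = word.lower().strip(".,!?")
    for label, bag in BAGS.items():
        if cleaned in bag:
            return label
    return "Normal"


def speech_confidences(transcript):
    """Score each label as the fraction of transcript words assigned to it."""
    words = transcript.split()
    if not words:
        return {label: 0.0 for label in LABELS}
    counts = Counter(label_word(w) for w in words)
    return {label: counts[label] / len(words) for label in LABELS}


if __name__ == "__main__":
    frames = [("Good mood", 0.8), ("Good mood", 0.6), ("Normal", 0.5), ("Bad mood", 0.1)]
    print(merge_frames(frames))
    print(speech_confidences("I feel sad and tired"))
```

Both functions return a dictionary with one score per label, so the video and audio results share the same shape and can be combined in a later step.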

Finally, the definitive label that qualifies the user’s emotional state is calculated from the average of the results obtained from the video and the audio.
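As a hedged illustration of this final step, the sketch below averages the per-label scores of the two modalities and selects the label with the highest average. Equal weighting of video and audio and the function name `fuse_mood` are assumptions; the paper states only that an average is used.

```python
LABELS = ("Good mood", "Normal", "Bad mood")


def fuse_mood(video_conf, audio_conf):
    """Average video and audio scores per label and return the best label.

    Assumption: both modalities are weighted equally. A missing label in
    either input counts as 0.0.
    """
    averaged = {
        label: (video_conf.get(label, 0.0) + audio_conf.get(label, 0.0)) / 2
        for label in LABELS
    }
    best = max(LABELS, key=lambda label: averaged[label])
    return best, averaged


if __name__ == "__main__":
    video = {"Good mood": 0.2, "Normal": 0.5, "Bad mood": 0.3}
    audio = {"Good mood": 0.0, "Normal": 0.2, "Bad mood": 0.8}
    label, scores = fuse_mood(video, audio)
    print(label, scores)
```

In this example the face alone would suggest “Normal”, but the spoken words shift the averaged result towards “Bad mood”, which is the kind of disagreement between modalities that the fusion is meant to resolve.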

2.2. The Activity Monitoring System

The activity monitoring system is in charge of loading and executing the schedule containing the activities of the user’s daily routine, which is written by a health professional in a configuration file. Currently, the schedule is composed of 18 activities of a typical daily routine for an elderly person, organized into three levels of priority. For instance, tasks of the highest priority include “wash up”, “eating” and “physical activity”, while tasks of intermediate priority include “clean the house” and “calling a friend”.
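To make the idea of a prioritized schedule file concrete, the following sketch loads a small schedule and orders it for execution. The JSON layout, the field names (`name`, `time`, `priority`), the times, and the convention that 1 is the highest of the three priority levels are assumptions; the paper does not describe the actual file format.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    name: str
    time: str      # "HH:MM" on a 24-hour clock (assumed format)
    priority: int  # 1 = highest, 3 = lowest (assumed convention)


# Hypothetical excerpt of a schedule written by a health professional.
EXAMPLE_CONFIG = """
[
  {"name": "eating", "time": "08:30", "priority": 1},
  {"name": "calling a friend", "time": "11:00", "priority": 2},
  {"name": "physical activity", "time": "10:00", "priority": 1},
  {"name": "wash up", "time": "08:00", "priority": 1}
]
"""


def load_schedule(text):
    """Parse the schedule and sort it by time, then by priority."""
    activities = [Activity(**entry) for entry in json.loads(text)]
    for activity in activities:
        if activity.priority not in (1, 2, 3):
            raise ValueError(f"invalid priority for {activity.name!r}")
    # Zero-padded "HH:MM" strings sort chronologically as plain text.
    return sorted(activities, key=lambda a: (a.time, a.priority))


if __name__ == "__main__":
    for activity in load_schedule(EXAMPLE_CONFIG):
        print(activity.time, activity.name, f"(priority {activity.priority})")
```

Keeping the schedule in a plain data file of this kind would let a health professional adjust times and priorities without touching the monitoring code.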

3. Experiment on a Real Environment

SARDAM was tested in a real environment through a one-day monitoring experiment in the home of a woman in her 60s. First, the daily activities were set in the schedule configuration file. Once the system started, the schedule was executed as follows. SARDAM tells the user which task is to be carried out; for example, “you should go to have breakfast”. If the task is performed, nothing happens until the next one in the schedule. However, if the user does not perform it, SARDAM uses the emotion recognition system to decide which corrective activity to propose. Once this special activity is finished, the previously postponed task is proposed again.
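The execution flow for a single task can be sketched as follows. This is an illustrative outline under stated assumptions, not the system's code: the corrective activities in `CORRECTIVE`, the cap on retries (`max_retries`), and the callables `say`, `was_performed` and `detect_mood` are hypothetical stand-ins for the robot's speech output, the activity detectors and the emotion recognition system.

```python
# Hypothetical mapping from detected mood to a corrective activity.
CORRECTIVE = {
    "Good mood": "listen to a song",
    "Normal": "have a short chat",
    "Bad mood": "hear a joke",
}


def run_task(task, say, was_performed, detect_mood, max_retries=1):
    """Propose a task; if it is not done, run a corrective activity and retry.

    Returns a list of (event, detail) tuples, mimicking a log file.
    """
    log = []
    for attempt in range(max_retries + 1):
        say(f"You should {task}.")
        log.append(("proposed", task))
        if was_performed(task):
            log.append(("done", task))
            return log
        if attempt < max_retries:
            # Choose the corrective activity from the detected mood.
            corrective = CORRECTIVE[detect_mood()]
            say(f"Let's {corrective} first.")
            log.append(("corrective", corrective))
    log.append(("skipped", task))
    return log


if __name__ == "__main__":
    answers = iter([False, True])  # task ignored once, then performed
    events = run_task(
        "go to have breakfast",
        say=print,
        was_performed=lambda task: next(answers),
        detect_mood=lambda: "Bad mood",
    )
    print(events)
```

Passing the robot-facing operations in as callables keeps the control flow testable without a robot; bounding the number of retries is a design choice of this sketch so that one ignored task cannot block the rest of the schedule.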

As the experiment lasted a whole day, it was possible to test how the system deals with different user responses. Overall, we obtained successful results, although in some cases the detected mood did not match the one actually expressed by the user. At the end of the day, we asked the user about her experience with the robot. She said that she felt the robot really cared for her, and that it had been really useful to have such a partner reminding her to follow her daily routine.

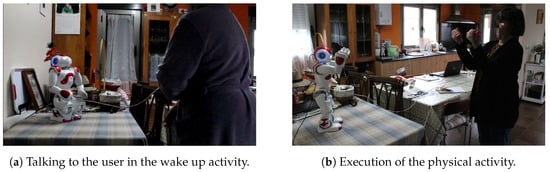

Figure 2 shows two images captured during the experiment. Further examples of SARDAM’s performance are available at https://www.youtube.com/watch?v=Rz10ANdjpy8.

Figure 2. Images retrieved from the execution of the experiment with a real user.

4. Conclusions and Future Work

SARDAM is a Socially Assistive Robotics system that monitors the daily routine of an elderly person using emotion recognition. Preliminary results have been encouraging, although many improvements can be made in the future, such as improving the emotion recognition system and incorporating a schedule replanning module that adapts to the user’s preferences.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Feil-Seifer, D.; Matarić, M. Defining Socially Assistive Robotics. In Proceedings of the 9th International Conference on Rehabilitation Robotics, 2005 (ICORR 2005), Chicago, IL, USA, 28 June–1 July 2005; Volume 2005, pp. 465–468.

- Broekens, J.; Heerink, M.; Rosendal, H. Assistive social robots in elderly care: A review. Gerontechnology 2009, 8, 94–103.

- Pollack, M.; Brown, L.; Colbry, D.; Orosz, C.; Peintner, B.; Ramakrishnan, S.; Engberg, S.; Matthews, J.; Dunbar-Jacob, J.; Mccarthy, C.; et al. Pearl: A Mobile Robotic Assistant for the Elderly. In Proceedings of the AAAI Workshop on Automation as Eldercare, Edmonton, AB, Canada, 28 July 2002.

- Coşar, S.; Fernández-Carmona, M.; Agrigoroaie, R.; Pages, J.; Ferland, F.; Zhao, F.; Yue, S.; Bellotto, N.; Tapus, A. ENRICHME: Perception and Interaction of an Assistive Robot for the Elderly at Home. Int. J. Soc. Robot. 2020, 12, 779–805.

- FaceEmotion_ID. Available online: https://github.com/abhijeet3922/FaceEmotion_ID (accessed on 23 July 2020).

- SpeechRecognition. Available online: https://pypi.org/project/SpeechRecognition/ (accessed on 23 July 2020).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).