Computer Vision Aided Structural Identification: Feature Tracking Using Particle Tracking Velocimetry versus Optical Flow

Abstract

1. Introduction

2. Methods

2.1. Feature Tracking Methods

2.1.1. Particle Tracking Velocimetry (PTV)
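As a minimal sketch of the frame-to-frame linking step in PTV, the Python example below pairs particle centroids detected in two consecutive frames. It assumes that 2D centroids have already been extracted from the images and uses a greedy nearest-neighbour rule with a maximum-displacement threshold. Full 3D-PTV systems additionally rely on multi-camera calibration, epipolar matching and multi-frame trajectory prediction, so this is a simplification for illustration, not the tracking algorithm used in this study. The function name `link_particles`, the parameter `max_disp` and the sample coordinates are hypothetical.

```python
import numpy as np


def link_particles(prev_pts, next_pts, max_disp):
    """Hypothetical helper: greedy nearest-neighbour linking between two frames.

    prev_pts, next_pts: arrays of shape (n, 2) and (m, 2) with (x, y) in pixels.
    max_disp: largest displacement (pixels) accepted between the two frames.
    Returns sorted (i, j, dx, dy) tuples: particle i in the first frame is
    linked to particle j in the second frame with displacement (dx, dy).
    """
    prev_pts = np.asarray(prev_pts, dtype=float)
    next_pts = np.asarray(next_pts, dtype=float)
    # Pairwise Euclidean distances, shape (n, m)
    dist = np.linalg.norm(prev_pts[:, None, :] - next_pts[None, :, :], axis=2)

    links, used_prev, used_next = [], set(), set()
    # Visit all candidate pairs from the shortest to the longest distance
    for flat_idx in np.argsort(dist, axis=None):
        i, j = (int(k) for k in np.unravel_index(flat_idx, dist.shape))
        if dist[i, j] > max_disp:
            break  # every remaining candidate is even farther apart
        if i in used_prev or j in used_next:
            continue  # keep the matching one-to-one
        used_prev.add(i)
        used_next.add(j)
        dx, dy = next_pts[j] - prev_pts[i]
        links.append((i, j, float(dx), float(dy)))
    return sorted(links)


# Usage with made-up centroids (pixels)
frame0 = [[10.0, 10.0], [50.0, 50.0], [90.0, 20.0]]
frame1 = [[51.0, 49.5], [10.5, 10.2], [200.0, 200.0]]
links = link_particles(frame0, frame1, max_disp=5.0)
print(links)  # particle 2 has no partner within max_disp and stays unlinked
```

Sorting all candidate pairs globally before accepting any of them prevents a particle from being claimed by a farther neighbour first; with dense seeding or large inter-frame motion, a velocity-based predictor is often added on top of this rule.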

2.1.2. Lucas-Kanade Method for Optical Flow
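The core of the Lucas-Kanade method can be sketched for a single window: assuming brightness constancy and a displacement small enough for a first-order Taylor expansion, each pixel contributes one equation I_x·u + I_y·v + I_t = 0, and all pixels in the window are solved together in the least-squares sense. The NumPy example below is an illustrative single-level, non-iterative version, tested on a synthetic Gaussian blob shifted by a known sub-pixel amount. The names `lucas_kanade_window` and `blob` are hypothetical, and this is not the tracker configuration used in this study.

```python
import numpy as np


def lucas_kanade_window(frame0, frame1):
    """Estimate a single displacement (u, v) in pixels for a whole image window.

    Brightness constancy plus a first-order Taylor expansion give
    Ix*u + Iy*v + It = 0 at every pixel; all pixels are solved together
    in the least-squares sense through the 2x2 normal equations.
    """
    f0 = np.asarray(frame0, dtype=float)
    f1 = np.asarray(frame1, dtype=float)
    # Spatial gradients of the mean frame; np.gradient returns d/d(row) = d/dy first
    Iy, Ix = np.gradient(0.5 * (f0 + f1))
    It = f1 - f0  # temporal derivative for a unit frame interval
    A = np.array([[np.sum(Ix * Ix), np.sum(Ix * Iy)],
                  [np.sum(Ix * Iy), np.sum(Iy * Iy)]])
    b = -np.array([np.sum(Ix * It), np.sum(Iy * It)])
    u, v = np.linalg.solve(A, b)  # A must be well conditioned (textured window)
    return float(u), float(v)


# Usage: a Gaussian blob moved by (+0.3, -0.2) pixels between two frames
y, x = np.mgrid[0:64, 0:64].astype(float)


def blob(cx, cy, s=6.0):
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * s ** 2))


u, v = lucas_kanade_window(blob(32.0, 32.0), blob(32.3, 31.8))
print(f"u = {u:.3f} px, v = {v:.3f} px")
```

In practice, features are tracked with small windows around corner-like points, and the estimate is refined iteratively over an image pyramid to handle larger motions, as in OpenCV's `cv2.calcOpticalFlowPyrLK`.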

2.1.3. Phase-Based Motion Magnification (PBMM)
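Phase-based methods rest on the observation that a small translation of an image structure appears as a shift in the local phase of a band-pass complex filter response. PBMM typically decomposes each frame with a complex steerable pyramid and amplifies the temporal phase variations in selected bands. The sketch below only illustrates the underlying measurement in 1D: it uses a single Gabor filter instead of a steerable pyramid and estimates a sub-sample shift rather than magnifying it. The function names and the filter parameters `omega0` and `sigma` are hypothetical choices for illustration.

```python
import numpy as np


def gabor_response(signal, omega0, sigma):
    """Complex (quadrature) response of a 1D Gabor filter tuned to omega0 rad/sample."""
    half = int(4 * sigma)  # truncate the Gaussian envelope at 4 sigma
    taps = np.arange(-half, half + 1, dtype=float)
    kernel = np.exp(-taps ** 2 / (2 * sigma ** 2)) * np.exp(1j * omega0 * taps)
    return np.convolve(signal, kernel, mode="same")


def phase_shift_estimate(sig0, sig1, omega0=0.5, sigma=8.0):
    """Estimate the sub-sample shift d in sig1(x) = sig0(x - d) from local phase.

    Shifting the signal by d changes the local phase of the filter response by
    about -phi_x * d, where phi_x is the spatial phase gradient. d is found by an
    amplitude-weighted least-squares fit of dphi = -phi_x * d over all samples.
    """
    r0 = gabor_response(np.asarray(sig0, dtype=float), omega0, sigma)
    r1 = gabor_response(np.asarray(sig1, dtype=float), omega0, sigma)
    dphi = np.angle(r1 * np.conj(r0))             # phase change, wrapped to (-pi, pi]
    phi_x = np.gradient(np.unwrap(np.angle(r0)))  # local spatial frequency
    w = np.abs(r0) * np.abs(r1)                   # weak responses carry noisy phase
    return float(-np.sum(w * phi_x * dphi) / np.sum(w * phi_x ** 2))


# Usage: a windowed cosine texture centred at sample 100, shifted by 0.3 samples
x = np.arange(200, dtype=float)


def pattern(shift):
    return np.exp(-((x - 100 - shift) / 20) ** 2) * np.cos(0.5 * (x - shift))


d_est = phase_shift_estimate(pattern(0.0), pattern(0.3))
print(f"estimated shift: {d_est:.4f} samples")
```

Motion magnification applies the same idea in reverse: the temporal phase change is multiplied by an amplification factor before the frames are reconstructed from the pyramid.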

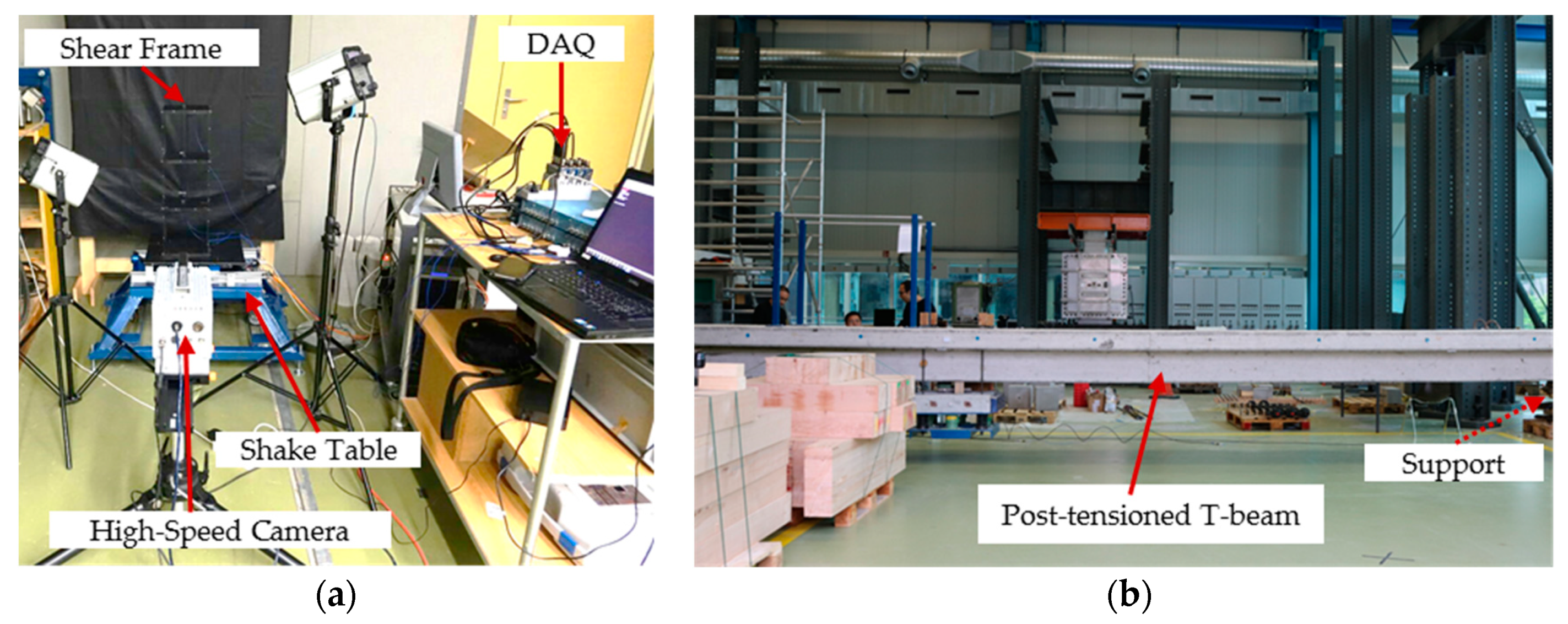

2.2. Experimental Setup Description

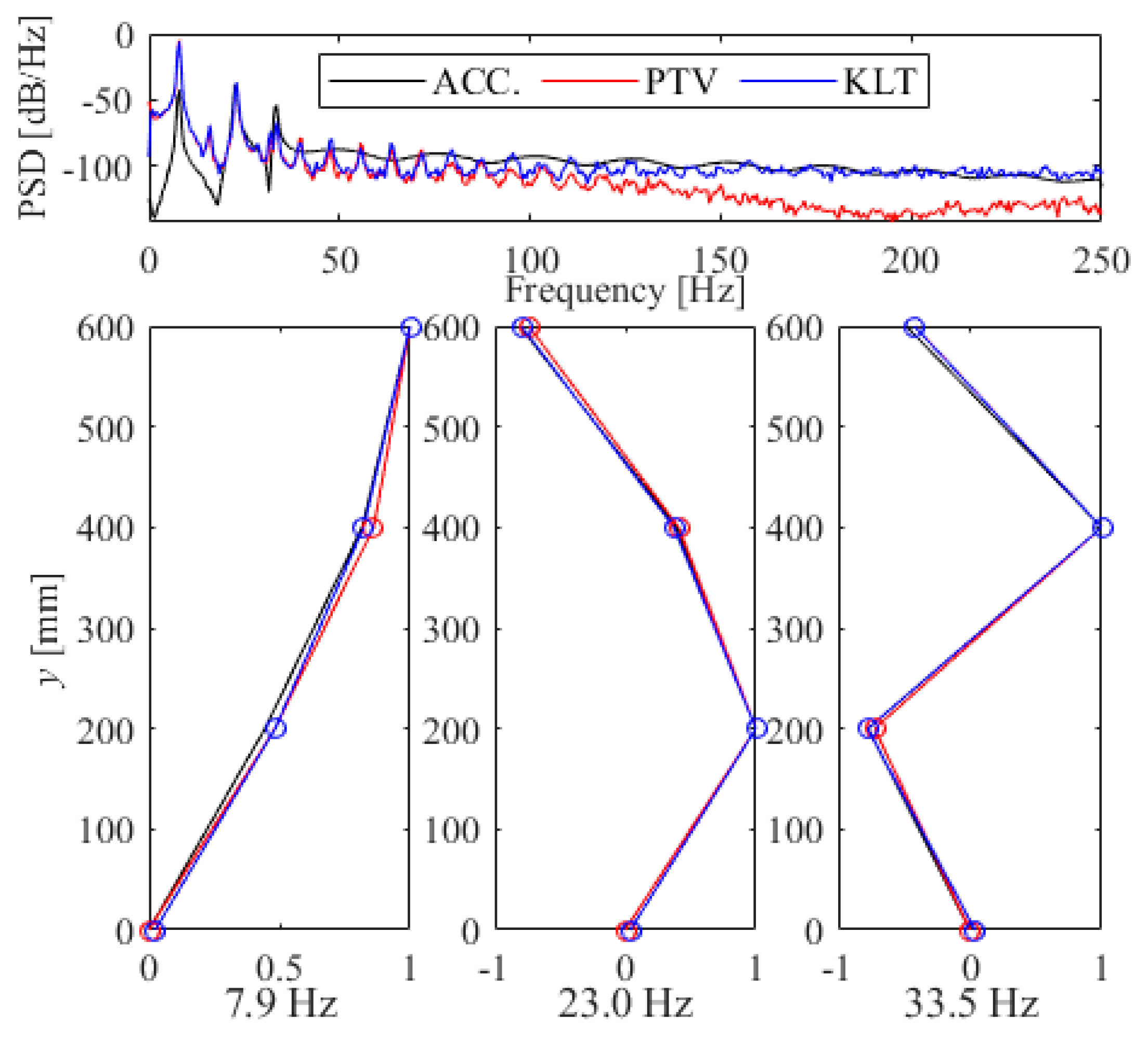

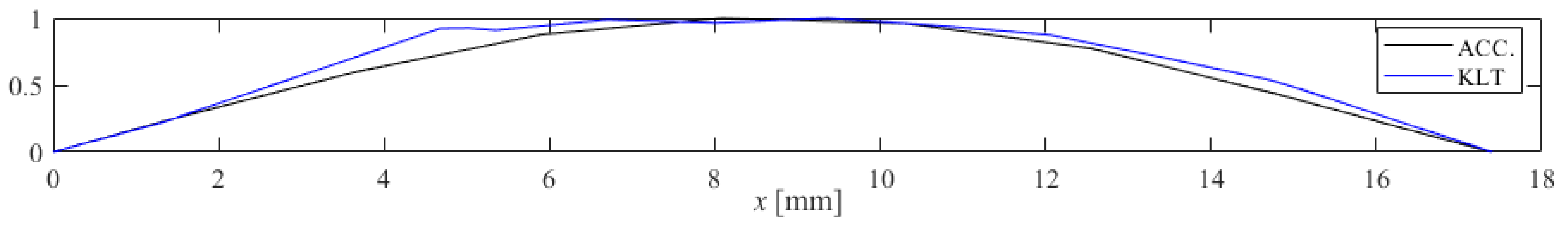

3. Results and Discussion

4. Conclusions and Outlook

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Harmanci, Y.E.; Lai, Z.; Gülan, U.; Holzner, M.; Chatzi, E. Computer Vision Aided Structural Identification: Feature Tracking Using Particle Tracking Velocimetry versus Optical Flow. Proceedings 2019, 4, 33. https://doi.org/10.3390/ecsa-5-05750