1. Introduction

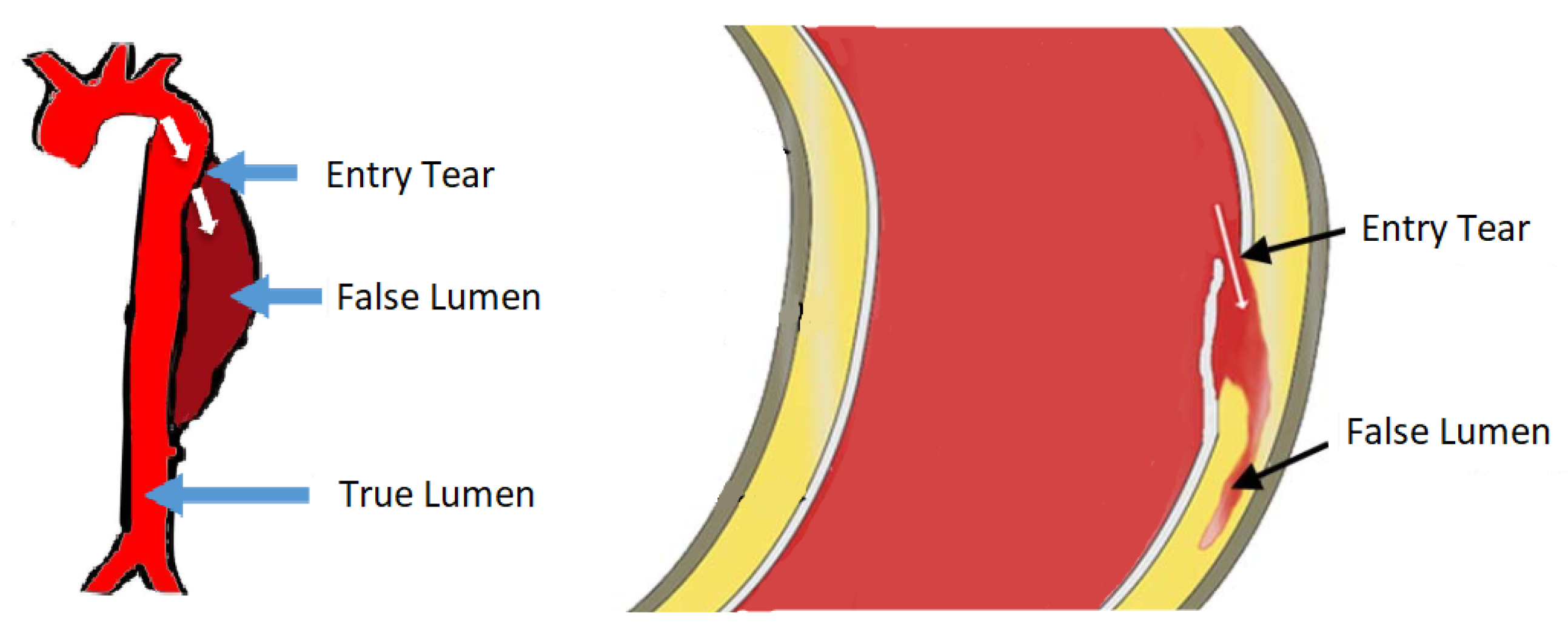

The largest blood vessel in the human body is the aorta. The wall of the aorta is made of aortic tissue, a layered composition of muscle cells, collagen, elastin fibres, etc. In Aortic Dissection (AD), a tear in the innermost layer of the aortic wall permits blood to flow in between the layers, effectively forcing the layers apart and deforming the geometry of the aorta. As a consequence, AD impairs blood circulation ([1], p. 459). This pathology is illustrated in Figure 1.

The condition AD is often acute and requires immediate treatment, but diagnosis is difficult. Physicians use a variety of imaging techniques to diagnose AD, among which are Magnetic Resonance Tomography (MRT), Computed Tomography (CT) and Echocardiography, the latter of which is based on an ultrasound device [

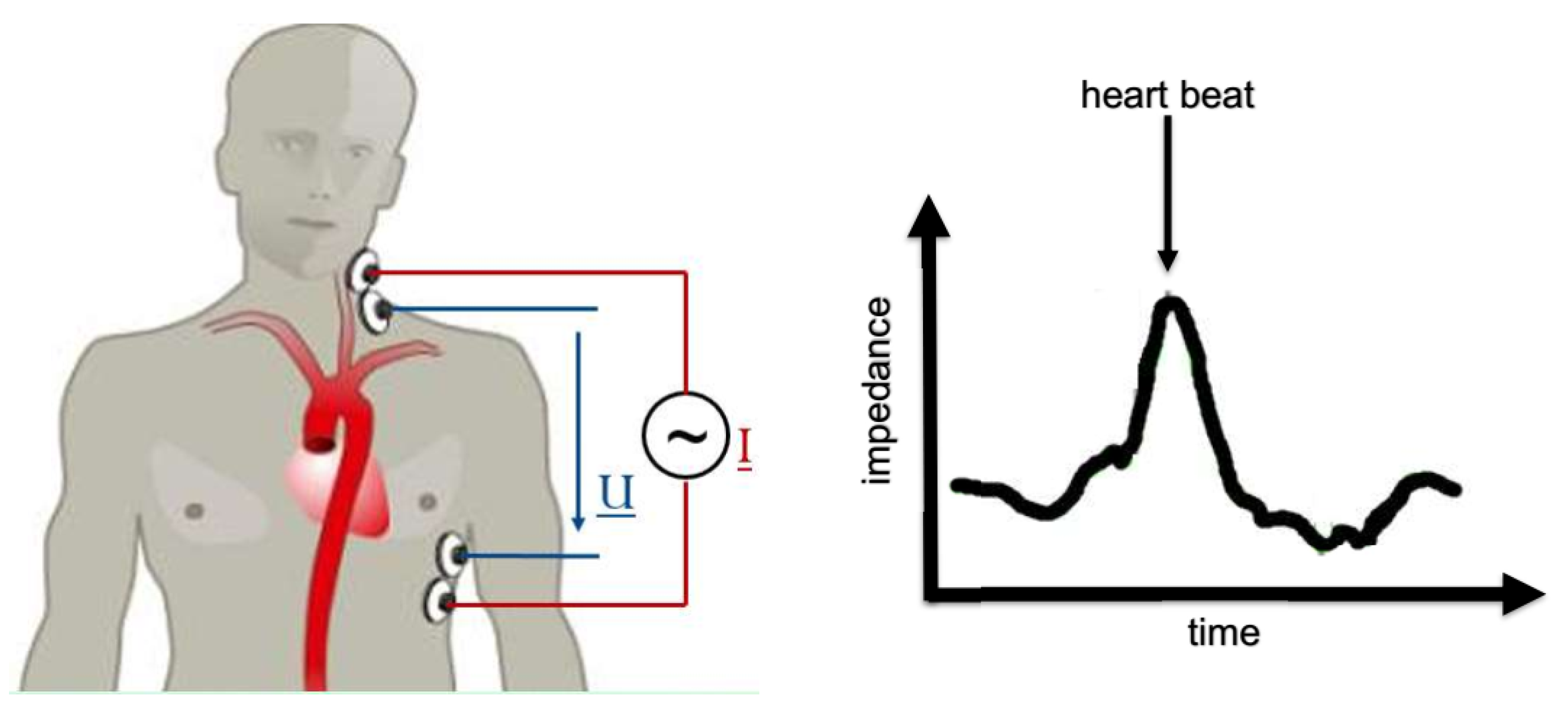

3]. Ultrasound devices are comparably cheap, fast and easy to handle. But if wave propagation is obfuscated by, e.g., the rib cage, the technique is not applicable. CT and MRT do not have this limitation due to full radiation penetration of the body, but show a number of drawbacks: long measurement times, radiation exposure, high costs, require specialized personnel (radiologists) and most importantly, MRT/CT is not available on a whim. A fast response, and a fast diagnosis, hence, is key to the treatment of AD patients. In this work, we analyse the proposal of [

4] to use impedance cardiography (ICG) [

5] for AD diagnosis. In ICG, one places a pair of electrodes on the thorax (upper body), injects a defined low-amplitude, alternating electric current into the body and measures the voltage drop. The generic experimental setup is illustrated in

Figure 2. The specific al resistance (impedance) of blood is much lower than that of muscle, fat or bone [

6]. Electric current seeks the path of least resistance, and thus the current propagates through the aorta rather than through, e.g., the spine. If blood is redistributed within the body due to AD, the path of least resistance is expected to change, and so the overall resistance of the body. ICG is bad to distinguish between different types of blood redistribution, e.g., AD or lung edema [

7]. Still, ICG yields yet another clue in a physician’s diagnostic procedure. ICG is fast, cheap, available on a whim and does not require specialized personnel. A new medical detection device based on ICG would thus close a gap left open by existing procedures.

To develop such a device, it is necessary to perform experiments which are extraordinarily difficult, both technically and ethically. One would need ICG measurements as well as high-quality tomography data from the same person, before and after the onset of AD. That kind of data is not available, and we resort to Finite Element (FE) simulations [8] instead. These simulations have a number of input parameters which are well-defined, but usually neither known precisely nor accessible in the clinical setting. For example, a patient’s blood conductivity varies from day to day. The input parameters are thus afflicted with uncertainty, and this uncertainty propagates through the simulation to the output, which here is the measured impedance. If we want to make a meaningful statement on the condition of the aorta, we therefore need to quantify the uncertainty in the measurement. This is an inverse problem, which requires solving the forward Uncertainty Quantification (UQ) problem first. UQ has become an established field of its own in the engineering community. A voluminous but quite comprehensive collection of reviews on the various aspects of UQ can be found in Reference [9]. A Bayesian perspective is discussed in [10,11,12].

UQ usually requires considerable computational effort, depending on the number of uncertain parameters and the computational cost of a single simulation. If this computational effort is prohibitively large, one may use a surrogate model. The two most widely used surrogate models are Polynomial Chaos Expansion (PCE) [13,14,15,16] and Gaussian Process Regression (GPR) [17,18]. PCE is particularly widespread within the engineering community, while GPR has recently had its renaissance within the machine learning community [19,20].

This work is inspired by the article of Kennedy and O’Hagan from 2000 [21]. They performed UQ by making use of a computer simulation with different levels of ‘sophistication’ or ‘fidelity’. In other words, a cheap simplified simulation serves as a surrogate. Koutsourelakis later followed up on this idea [22]. While UQ in general has fully arrived in the Biomedical Engineering community [23], the Bayesian approach has not. Biehler et al. [24] were, to the best of the authors’ knowledge, the first to apply a Bayesian Multi-Fidelity Scheme in the context of computational biomechanics.

In Section 2, we build a physical model of an impedance cardiography measurement applied to the described physiological system. In Section 3, we develop a Bayesian Multi-Fidelity scheme, which is then used for Uncertainty Quantification of the physical model. The results, i.e., the uncertainty bands of the ICG signal, are presented and discussed in Section 4. We draw our conclusions and suggest possible future improvements in Section 5.

2. The Physical Model

We start from the Maxwell’s equations and recognize that one cardiac cycle, i.e., the time span between two heart beats, is on the order of one second, and the frequency of the injected current is on the order of a hundred kilo-Hertz. Thus we can assume the electric field to be quasi-static [

4], and the Maxwell equations boil down to Laplace’s equation (in complex notation),

with electric potential

V, electrical conductivity

, angular frequency

, permittivity

and imaginary unit

i. Equation (

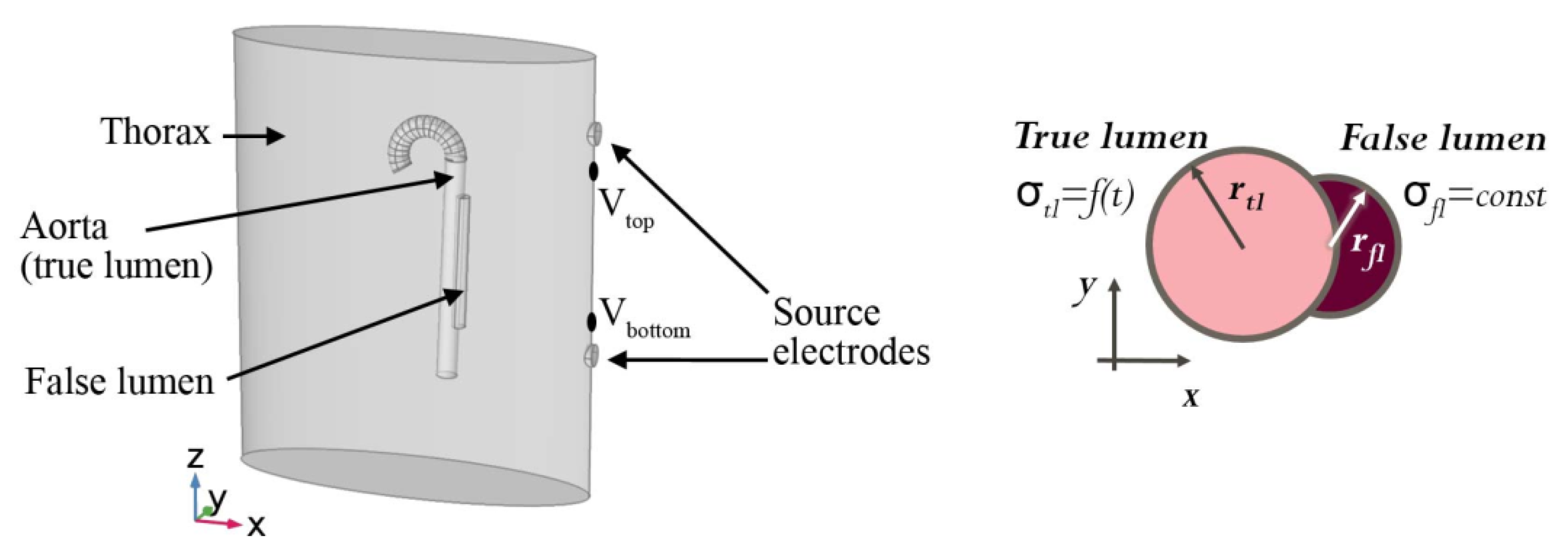

1) is then to be solved on the geometry as depicted in

Figure 3 and described as follows. The thorax (upper body) is modelled by an elliptic cylinder with a spatially homogeneous conductivity and permittivity. The aorta is an up-side-down umbrella stick. We consider the whole organ to be filled with blood and neglect the vessel walls. In clinical parlance, the blood-filled cavity caused by the aortic dissection is called “false lumen”, while the anatomically correct cavity is called “true lumen”. The true lumen is modelled as a circular cylinder, and the false lumen is a holed out circular cylinder attached to the true lumen. The dynamics are modelled via a time-dependent true and false lumen radius, which arises from pressure waves in a pulsatile flow. Further, the blood conductivity depends on the blood flow velocity, and is thus time-dependent in the true lumen, but constant in the false lumen due to a negligible flow velocity [

4,

25]. The boundary conditions are specified by the body surface and two source electrodes. The two source electrodes are modelled by two patches (top and bottom) on the left-hand side of the patient. For the top patch, the injection current is held constant at 4 mA and a frequency of 100 kHz via a constant surface integral of the current density. The bottom patch is defined as ground, i.e., a constant voltage

V. Considering the relatively low conductivity of air, we assume the rest of the body surface to be perfectly insulating. Equation (

1) is then discretized in space and solved with the Finite Elements method [

8]. The quality and fidelity of the space discretization, colloquially termed as “the mesh”, is crucial to the quality of the solution, but also to the amount of computational effort. We use a rather coarse mesh of low fidelity, and a rather detailed mesh of high fidelity, with two examples illustrated in

Figure 4.
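To illustrate how the quasi-static equation with conductivity $\sigma$, permittivity $\varepsilon$ and angular frequency $\omega$ turns an injected current into a measured voltage drop, the following sketch evaluates its one-dimensional special case: a stack of homogeneous slabs in series, for which the impedance has the closed form $Z = \sum_k d_k / [(\sigma_k + i\omega\varepsilon_k) A]$. This is not the three-dimensional thorax model solved with finite elements. The slab thicknesses, the cross-section, the conductivities and the relative permittivities are illustrative placeholders that are not taken from the text; only the 4 mA current and the 100 kHz frequency are.

```python
import math

EPS0 = 8.8541878128e-12  # vacuum permittivity in F/m


def admittivity(sigma, eps_r, freq_hz):
    """Complex admittivity sigma + i*omega*eps of a homogeneous material."""
    omega = 2.0 * math.pi * freq_hz
    return complex(sigma, omega * eps_r * EPS0)


def slab_stack_impedance(layers, area_m2, freq_hz):
    """Impedance of slabs in series: sum of thickness / (admittivity * area)."""
    z = 0j
    for thickness_m, sigma, eps_r in layers:
        z += thickness_m / (admittivity(sigma, eps_r, freq_hz) * area_m2)
    return z


# Hypothetical (thickness in m, conductivity in S/m, relative permittivity)
# for a tissue / blood / tissue stack. Placeholder values, not from the paper.
layers = [(0.10, 0.2, 1.0e4), (0.03, 0.7, 5.0e3), (0.10, 0.2, 1.0e4)]

current_a = 4e-3   # injected current from the text
freq_hz = 100e3    # injection frequency from the text

z = slab_stack_impedance(layers, area_m2=0.05, freq_hz=freq_hz)
voltage = current_a * z  # complex voltage drop across the stack
print(f"|Z| = {abs(z):.2f} ohm, |V| = {abs(voltage) * 1e3:.2f} mV")
```

Replacing part of the poorly conducting slabs with the better conducting one lowers $|Z|$, which is the one-dimensional analogue of the blood redistribution effect that ICG is meant to pick up.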

We distinguish observable and unobservable (uncertain) parameters (also termed hidden or latent variables). An obvious observable parameter is time $t$. From the plethora of unobservable parameters, we choose the false lumen radius, $r_{\mathrm{FL}}$, and perform a number of simulations with sensible values within the physiological and physical range, i.e., 5.0–25.0 mm with a step size of

mm. The physical lower boundary would be 0 mm, yet below 5 mm meshing problems occur in the LoFi model, i.e., badly shaped elements become frequent, geometry is approximated badly and thus space discretization fails. This is not surprising, since the LoFi model’s size of finite elements is on the order of

mm, and therefore cannot resolve finer features. For any simulation, the voltage drop between any two points can now be measured. In the clinical setting, there would be a number of probe electrodes attached to the patient’s chest, back, neck and/or limbs, and voltage drops would be measured between the many pairs of probe electrodes. Here, we limit ourselves to just one pair of probe electrodes, with one probe electrode right beneath the upper injection electrode, and one probe electrode right above the lower injection electrode. The positions of the probe electrodes, relative to the injection electrodes, are indicated in Figure 3 by $V_{\mathrm{top}}$ and $V_{\mathrm{bottom}}$.

We used the COMSOL Multiphysics software to perform the modelling [26].

3. Bayesian Multi-Fidelity Scheme

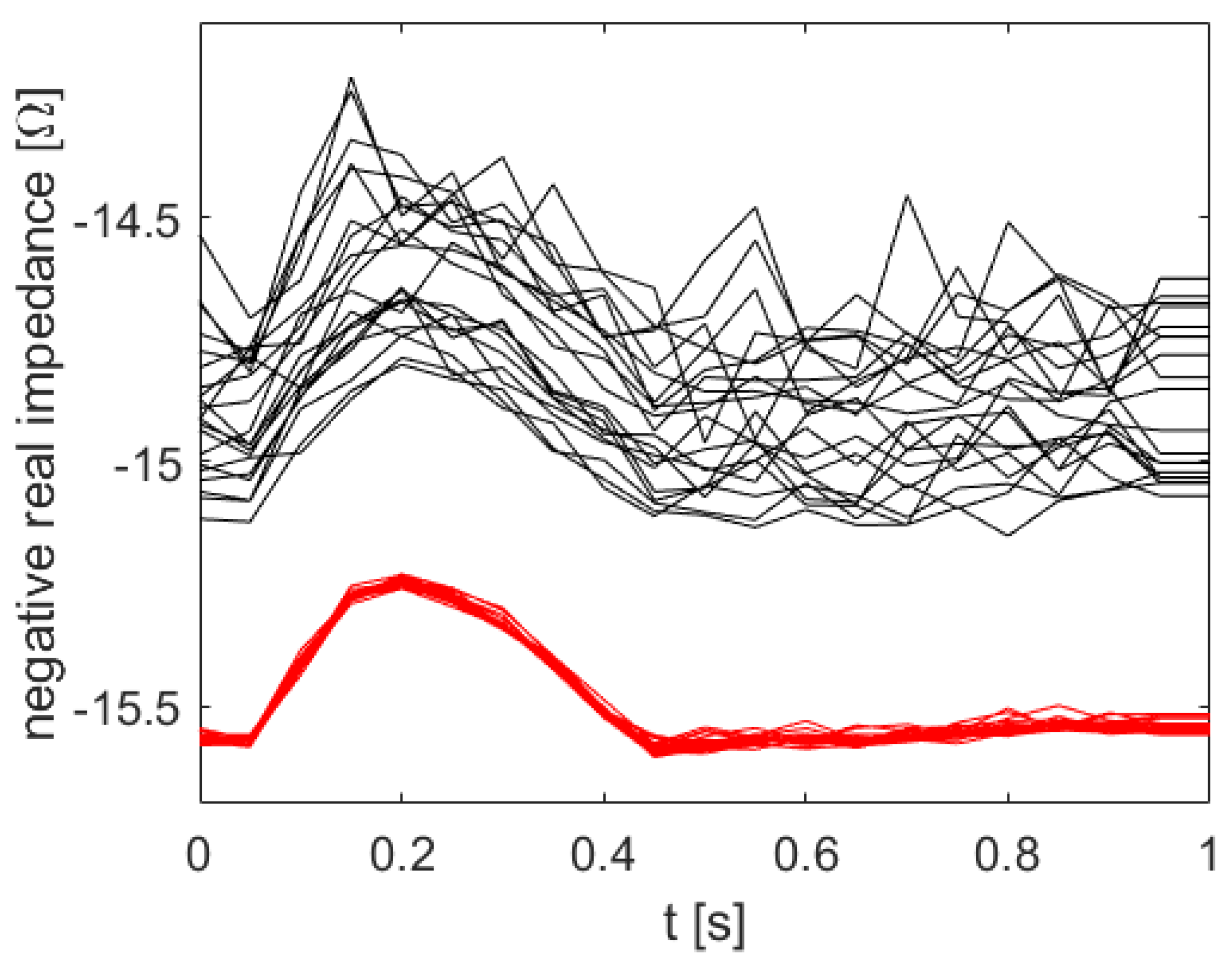

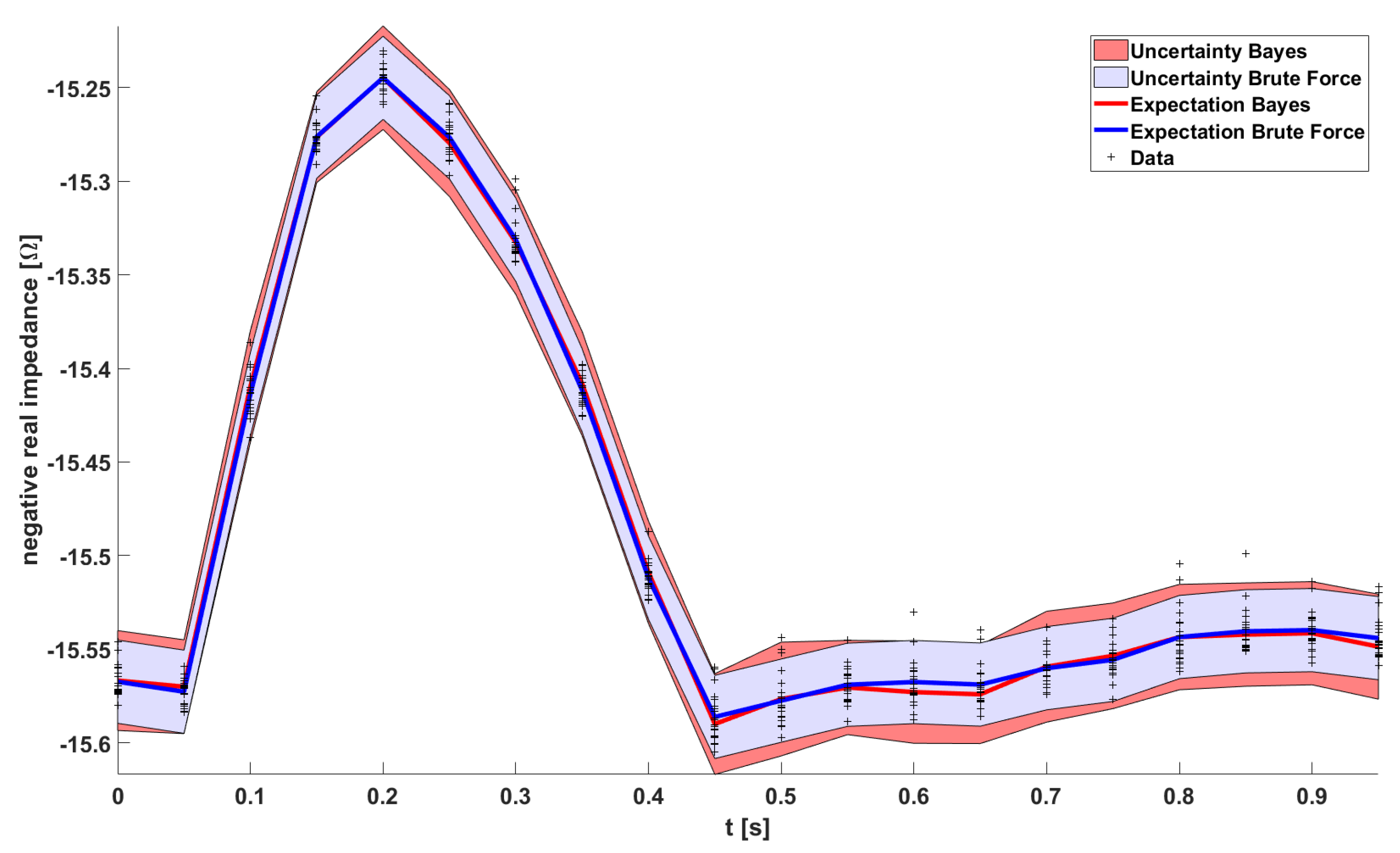

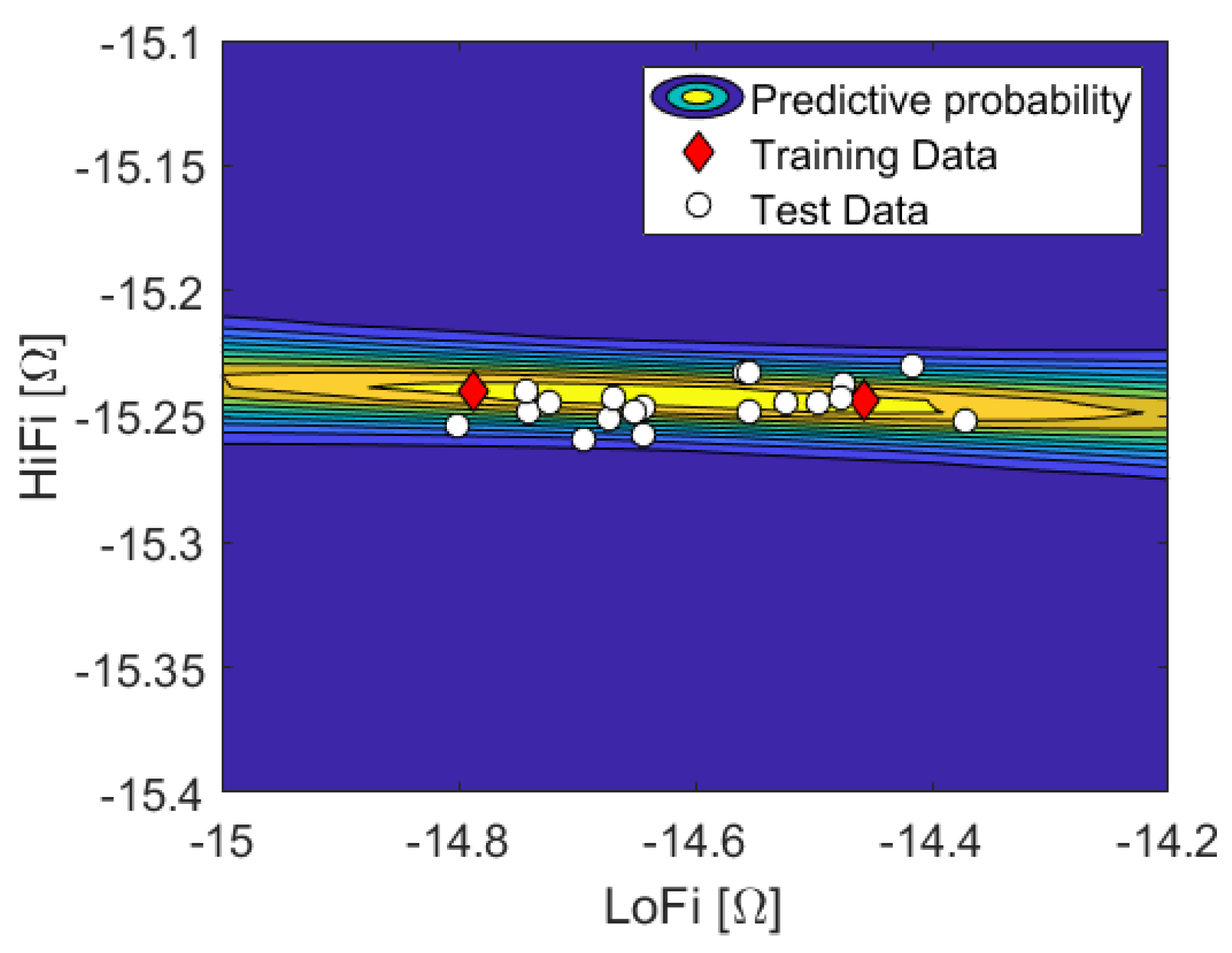

Once all the HiFi impedance time series (red) in Figure 5 have been computed, the uncertainty quantification is essentially complete. The uncertainty bands of the Brute Force HiFi data in Figure 6 (blue) are inferred from a Gaussian Process model with a squared exponential kernel. In the following paragraphs, we will instead use the LoFi impedance time series (black) and only a few of the data points of the HiFi impedance time series (red). In other words, in order to compute the uncertainty bands, we run all the LoFi simulations but only a few HiFi simulations, instead of all the HiFi simulations.
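As a minimal sketch of how uncertainty bands can be obtained from a Gaussian Process with a squared exponential kernel, the following example regresses a noisy signal over time and reports a band of two posterior standard deviations around the posterior mean. The sinusoidal signal is synthetic and only stands in for an impedance time series, and the hyperparameters (`amplitude`, `length_scale`, `noise_std`) are fixed by hand here rather than inferred; how the GP was set up for Figure 6 is not specified in the text, so all of this is an assumption.

```python
import numpy as np


def sq_exp_kernel(xa, xb, amplitude, length_scale):
    """Squared exponential kernel k(x, x') = A^2 exp(-(x - x')^2 / (2 l^2))."""
    diff = xa[:, None] - xb[None, :]
    return amplitude**2 * np.exp(-0.5 * (diff / length_scale) ** 2)


def gp_posterior(x_train, y_train, x_test, amplitude, length_scale, noise_std):
    """Posterior mean and std of the latent function of a zero-mean GP."""
    k_tt = sq_exp_kernel(x_train, x_train, amplitude, length_scale)
    k_tt = k_tt + noise_std**2 * np.eye(len(x_train))
    k_st = sq_exp_kernel(x_test, x_train, amplitude, length_scale)
    chol = np.linalg.cholesky(k_tt)
    # alpha = (K + noise^2 I)^{-1} y via two triangular-factor solves
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_st @ alpha
    v = np.linalg.solve(chol, k_st.T)
    var = amplitude**2 - np.sum(v**2, axis=0)  # prior variance minus reduction
    return mean, np.sqrt(np.maximum(var, 0.0))


# Synthetic stand-in for one cardiac cycle (not data from the paper).
rng = np.random.default_rng(0)
t_train = np.linspace(0.0, 1.0, 25)
y_train = np.sin(2.0 * np.pi * t_train) + 0.05 * rng.standard_normal(t_train.size)
t_test = np.linspace(0.0, 1.0, 50)

mean, std = gp_posterior(t_train, y_train, t_test,
                         amplitude=1.0, length_scale=0.2, noise_std=0.05)
lower, upper = mean - 2.0 * std, mean + 2.0 * std  # two-sigma band
```

The Cholesky factorisation is used instead of an explicit matrix inverse because it is the numerically more stable way to solve with the kernel matrix.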

We distinguish the observable physical parameter time, $t$, from unobservable (uncertain) physical parameters $x$. We consider two disjoint data sets, $D_L$ and $D_H$. $D_L$ is a large set of input parameters, $x_i$, $t_j$, and corresponding (noisy) outputs of the LoFi model, $y^L_{ij}$, where each individual output $y^L_{ij}$ is the voltage drop with parameter $x_i$ at time instance $t_j$. The time instances $t_j$ cover the time series of one whole cardiac cycle. This means that $D_L$ contains one complete time series per parameter value $x_i$. In principle, the solver is deterministic, yet the solution depends on the mesh. Since the false lumen radius is treated as a random variable, the geometry is random as well, and each mesh is a specific realization of it. We then choose a small subset of the outputs of $D_L$, of size $N_H \ll N_L$ with $N_L$ the size of $D_L$, for which we additionally compute the (noisy) HiFi solution. This subset is chosen such that the range of the LoFi outputs is appropriately covered. Given input parameters $x_k$, $t_j$, the corresponding output of the HiFi model is $y^H_{kj}$. We gather these tuples of LoFi output and corresponding HiFi output in data set $D_H$. In other words, $D_H$ shall be a small subset of $D_L$ which “in hindsight” is augmented with the corresponding HiFi data.

We acknowledge that time $t$ is observable in our experiment, but the latent variables $x$ are not. Let $z_H$ and $z_L$ be the true values of the high fidelity and low fidelity model, respectively, corresponding to given input parameters (latent variables) $x$ and a time instance $t$. Note the distinction of the true values $z_H$ and $z_L$ from the noisy observations in the data sets $D_H$ and $D_L$. Let $\mathcal{I}$ be the conditional complex. We want to compute the uncertainty bands of the ICG signal in Figure 5, meaning the posterior pdf of $z_H$ given $t$, and introduce $z_L$ via marginalisation

$$p(z_H \mid t, D_L, D_H, \mathcal{I}) = \int \mathrm{d}z_L \; p(z_H \mid z_L, t, D_H, \mathcal{I}) \; p(z_L \mid t, D_L, \mathcal{I}), \tag{2}$$

where we constructed the data sets such that we can partially cross them out here: $D_L$ drops out of the first term and $D_H$ out of the second. For the sake of easier notation, we will omit the conditional complex $\mathcal{I}$ from here on.

Let us first discuss the first term in the integral. It implies a one-dimensional regression problem $z_L \mapsto z_H$. This is convenient since the original problem, $(x, t) \mapsto z_H$, is usually multi-dimensional, and will come in handy once we scale up the number of uncertain parameters. We need to choose a regression function $f$, acknowledge noise induced by the discretization error with $\eta$, and marginalize $f$’s hyperparameters $\theta$, i.e.,

$$p(z_H \mid z_L, t, D_H) = \int \mathrm{d}\theta \; p(z_H \mid z_L, f, \theta, t, D_H) \; p(\theta \mid f, t, D_H). \tag{3}$$

Note that $f$ is actually included in the conditional complex $\mathcal{I}$, but explicitly written out here. In Equation (3), we find a belated, formal justification for replacing the original regression problem $(x, t) \mapsto z_H$. In the conditional pdf in Equation (3), $z_H = f(z_L; \theta) + \eta$ implies that the knowledge of $f$, $\theta$, and $z_L$ already determines $z_H$ apart from noise $\eta$, and thus $D_H$ is superfluous.

By looking at the data, we recognize that a linear regression function $f(z_L) = a z_L + b$ of the LoFi value $z_L$ will capture the salient features, and is hence sufficient for all time instances. Thus the prior reads

with slope $a$, constant offset $b$ and $\Theta$ being the Heaviside function. We find no apparent outliers in the data, and the likelihood shall be Gaussian with constant noise level, which was estimated from the data to

.
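The regression step described above can be sketched as a Bayesian straight-line fit from LoFi to HiFi values with Gaussian noise of known, constant standard deviation. The sketch below assumes a flat prior on slope and offset, which is a simplification and not the prior used in the text, and it uses synthetic LoFi/HiFi pairs and a placeholder `noise_std`. Under these assumptions the posterior over $(a, b)$ is Gaussian, and marginalising the two hyperparameters gives a Gaussian predictive distribution for the HiFi value.

```python
import numpy as np


def fit_line_posterior(z_lo, z_hi, noise_std):
    """Gaussian posterior (mean, covariance) of (slope, offset), flat prior."""
    design = np.column_stack([z_lo, np.ones_like(z_lo)])
    precision = design.T @ design / noise_std**2
    cov = np.linalg.inv(precision)
    mean = cov @ design.T @ z_hi / noise_std**2
    return mean, cov


def predict_hifi(z_lo_new, mean, cov, noise_std):
    """Predictive mean and std of the HiFi value, slope and offset marginalised."""
    design = np.column_stack([z_lo_new, np.ones_like(z_lo_new)])
    pred_mean = design @ mean
    # noise variance plus the parameter uncertainty propagated through the line
    param_var = np.einsum("ij,jk,ik->i", design, cov, design)
    return pred_mean, np.sqrt(noise_std**2 + param_var)


# Synthetic LoFi/HiFi pairs; all numbers are placeholders, not paper data.
rng = np.random.default_rng(1)
noise_std = 0.1
z_lo = np.linspace(20.0, 30.0, 6)
z_hi = 1.1 * z_lo - 2.0 + noise_std * rng.standard_normal(z_lo.size)

post_mean, post_cov = fit_line_posterior(z_lo, z_hi, noise_std)
pred_mean, pred_std = predict_hifi(np.array([24.0, 27.5]), post_mean, post_cov, noise_std)
```

Because only the scalar LoFi output enters the fit, the same few HiFi runs serve the regression regardless of how many uncertain parameters feed the LoFi model.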

The second term in Equation (2) is approximated by weighted samples, and the integral reduces to a discrete sum over these.
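Written out, the discrete sum and the resulting first two moments of the HiFi value take the following form. The notation is an assumption made for this illustration and is not taken from the text: $z_H$ and $z_L$ denote the HiFi and LoFi values, $z_L^{(k)}$ are the LoFi samples with normalised weights $w_k$, and $\mu_k$ and $s_k^2$ are the mean and variance of the regression pdf evaluated at sample $k$.

```latex
\begin{align}
p(z_H \mid t, D_L, D_H)
  &\approx \sum_{k=1}^{K} w_k \, p\bigl(z_H \mid z_L^{(k)}, t, D_H\bigr),
  \qquad \sum_{k=1}^{K} w_k = 1, \\
\langle z_H \rangle
  &\approx \sum_{k=1}^{K} w_k \, \mu_k, \\
\operatorname{Var}(z_H)
  &\approx \sum_{k=1}^{K} w_k \bigl( s_k^2 + \mu_k^2 \bigr) - \langle z_H \rangle^2 .
\end{align}
```

The variance expression is the usual mixture result: it combines the regression noise within each sample with the spread of the predicted means across samples, and uncertainty bands can be read off from the mean and the square root of this variance.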

5. Conclusions & Outlook

We have set up a framework to systematically study the uncertainties of theoretical impedance cardiography signals associated with aortic dissection. Since the computational effort is expected to grow steeply as the model is extended, we employed a Bayesian multi-fidelity scheme rather than brute force. We performed first experiments as a proof of principle, and computed the uncertainty bands of the simulation given an unknown false lumen radius. We achieved a solution quantitatively comparable to the reference solution, while reducing the computational effort by roughly a factor of 3.5, which is close to the theoretical limit of 4. With increasing computational effort per simulation, we expect the reduction factor to increase.
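The text does not spell out the cost model behind these two figures. One simple model that is consistent with them, offered here purely as an assumption, counts the brute-force cost as all runs at HiFi cost and the multi-fidelity cost as all runs at LoFi cost plus a few runs at HiFi cost. The run counts and the cost ratio below are hypothetical values chosen to reproduce the quoted numbers.

```python
def speedup(n_total, n_hifi, cost_ratio):
    """Brute-force cost divided by multi-fidelity cost.

    cost_ratio is the cost of one LoFi run relative to one HiFi run.
    Costs are measured in units of one HiFi run.
    """
    brute_force = float(n_total)
    multi_fidelity = n_total * cost_ratio + n_hifi
    return brute_force / multi_fidelity


# Hypothetical counts: HiFi is run for one quarter of all parameter values.
n_total, n_hifi = 40, 10

limit = speedup(n_total, n_hifi, cost_ratio=0.0)        # free LoFi runs
observed = speedup(n_total, n_hifi, cost_ratio=1 / 28)  # LoFi 28x cheaper
print(f"limit = {limit:.2f}, with LoFi cost = {observed:.2f}")
```

In this model the limit is the ratio of total runs to HiFi runs, and the factor approaches it as the LoFi runs become cheaper relative to the HiFi runs, which matches the expectation stated above for more expensive simulations.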

The physical model will be improved by adding organs (e.g., lungs, heart) to the model one by one, and dispersion effects will be investigated by varying the injection current frequency (i.e., the boundary conditions). In terms of data analysis, the next step is to make further use of the pronounced structure of the signal, and to sparsify the simulation runs of the HiFi model in the time domain. This could be done by, e.g., interpreting each time series as a sample drawn from a Gaussian Process, i.e., by imposing a GP prior onto the signal. Ultimately, we want to solve the inverse problem, “Is the aorta healthy or dissected?”, and thus need to compute the evidences. We strongly believe that this question can only be answered unambiguously by considering the signals of multiple electrodes at different positions on the human body at once.