Abstract

Active learning is very useful for classification problems in which acquiring class labels for the training data is difficult or time-consuming. The classification of overnight polysomnography (PSG) records into sleep stages is an example of such an application, because an expert has to annotate a large number of segments of a record. Active learning methods make it possible to iteratively select only the most informative instances for manual classification, so the total effort of the expert is reduced. However, the process may be insufficiently initialised because of the high dimensionality of PSG data, which puts the fast convergence of active learning at risk. To prevent this, we propose a variant of the query-by-committee active learning scenario which takes all features of the data into account, so that the feature space need not be reduced while the process is still quickly initialised. The proposed method is compared with random sampling and with margin uncertainty sampling, another well-known active learning method. During the crucial first iterations of the process, the proposed variant of query-by-committee achieved the best results among the tested strategies in most cases.

1. Introduction

Although a large number of machine learning techniques exist and can be adopted for numerous applications, in more and more real-world settings we encounter the problem that a large amount of data can be gathered, but the process of annotating them (i.e., assigning each instance to a specific class so that it can be used for training a classifier) is expensive and time-consuming.

The classification of overnight polysomnography (PSG) records into sleep stages is a good example of this problem. In practice, a physician or a trained annotator has to go through the whole PSG record, which is several hours long and has previously been split into 30-second segments, and manually assign each segment to one of the sleep stages [1]. Nowadays, the classification of sleep stages provided by the American Academy of Sleep Medicine (AASM) is used: sleep is divided into five stages, namely wake, REM, N1, N2 and N3, where N1, N2 and N3 are subdivisions of non-REM sleep [2]. The whole process is clearly very time-demanding and it would be desirable to automate it further; on the other hand, the information about sleep stages is used for the patient’s diagnosis, so review by an expert is crucial.

The solution lies in adopting semi-supervised methods such as active learning, which is used to choose the instances that are sufficient for learning an adequately good classifier [3].

2. Active Learning

Let X be the observation space and Y the space of classes. At the beginning of semi-supervised methods, there are two sets of instances: a small set of labeled instances, which contains observations whose class is known, and a large set of unlabeled instances, about whose classes we have no information. The active learning process can be divided into the following steps [3]:

1. Learn a classifier c on the set of labeled instances.

2. Assign the instances from the set of unlabeled instances to classes by using the learnt classifier c.

3. Use a query strategy to select an instance from the set of unlabeled instances.

4. Ask an “oracle” for the class to which the selected instance belongs. The “oracle” is usually an expert, i.e., a human annotator with expertise in the given field.

5. Add the newly classified instance to the set of labeled instances (and remove it from the set of unlabeled instances).

6. Repeat steps 1–5 until a terminal condition is met (e.g., a given number of iterations is reached, the error has attained a specified threshold, etc.).
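The steps above can be sketched as a generic pool-based loop. This is only a minimal illustration under stated assumptions, not the implementation used in this work: `fit`, `query` and `oracle` are hypothetical callables supplied by the caller, and the toy nearest-class-mean classifier and random query strategy exist only to make the example runnable.

```python
import random

def active_learning(labeled, unlabeled, fit, query, oracle, n_iterations):
    """Generic pool-based active learning loop (illustrative sketch).

    labeled   -- list of (x, y) pairs, the set of labeled instances
    unlabeled -- list of x, the set of unlabeled instances
    fit       -- hypothetical callable: labeled -> classifier c, with c(x) -> y
    query     -- hypothetical callable: (c, unlabeled) -> index of chosen instance
    oracle    -- hypothetical callable: x -> true class (the human expert)
    """
    labeled, unlabeled = list(labeled), list(unlabeled)
    for _ in range(n_iterations):            # terminal condition: iteration budget
        if not unlabeled:
            break
        c = fit(labeled)                     # step 1: learn the classifier
        idx = query(c, unlabeled)            # steps 2-3: predict and select
        x = unlabeled.pop(idx)               # step 5: remove from the pool
        labeled.append((x, oracle(x)))       # steps 4-5: ask the oracle, add
    return fit(labeled), labeled, unlabeled

def fit_nearest_mean(labeled):
    """Toy 1-D classifier: predict the class whose mean is closest."""
    groups = {}
    for x, y in labeled:
        groups.setdefault(y, []).append(x)
    means = {y: sum(v) / len(v) for y, v in groups.items()}
    return lambda x: min(means, key=lambda y: abs(x - means[y]))

_rng = random.Random(0)

def query_random(c, unlabeled):
    """Placeholder strategy: ignore the classifier and pick at random."""
    return _rng.randrange(len(unlabeled))

# Toy usage: 1-D instances whose class is given by their sign.
pool = [-3.0, -2.0, -1.5, 1.0, 2.5, 3.0]
seed = [(-1.0, "neg"), (1.5, "pos")]
oracle = lambda x: "pos" if x > 0 else "neg"
clf, lab, unl = active_learning(seed, pool, fit_nearest_mean, query_random, oracle, 4)
print(len(lab), len(unl))  # 6 2
```

Any query strategy with the same signature can be passed as `query` without touching the loop itself.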

2.1. Query Strategies

In this section, we discuss the third step of the active learning process listed above. The most crucial part of active learning is to determine how instances will be selected for classification by the “oracle”. Settles [3] surveyed a large number of commonly used methods. We mention two of them which are, in our opinion, among the most popular: margin uncertainty sampling (MUS) [4] and query-by-committee (QBC) [5]. These two methods are often compared in the literature [6,7].

In the margin uncertainty sampling scenario, the instance whose class the classifier c is least certain of is queried [8]. Formally, the observation $x^{*}_{\mathrm{MUS}}$ is chosen for which

$$x^{*}_{\mathrm{MUS}} = \arg\min_{x} \left[ P(\hat{y}_1 \mid x) - P(\hat{y}_2 \mid x) \right],$$

where $P(y \mid x)$ is the conditional probability of class $y$ when the instance $x$ is observed, $\hat{y}_1$ stands for the most probable class of $x$ and $\hat{y}_2$ is the second most probable class of $x$.
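The margin criterion can be illustrated with a short sketch. It assumes that the classifier's posterior probabilities are already available as plain lists; the function names and the toy probabilities are illustrative only.

```python
def margin(probabilities):
    """Difference between the two largest class probabilities of one instance."""
    top_two = sorted(probabilities, reverse=True)[:2]
    return top_two[0] - top_two[1]

def margin_uncertainty_query(posteriors):
    """Return the index of the instance with the smallest margin.

    posteriors -- list of per-instance lists of class probabilities P(y | x)
    """
    return min(range(len(posteriors)), key=lambda i: margin(posteriors[i]))

posteriors = [
    [0.90, 0.05, 0.05],   # confident prediction, margin 0.85
    [0.40, 0.35, 0.25],   # ambiguous prediction, margin 0.05
    [0.60, 0.30, 0.10],   # margin 0.30
]
print(margin_uncertainty_query(posteriors))  # 1
```

The second instance is queried because its two most probable classes are nearly tied.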

The second approach uses an ensemble of classifiers which represent competing hypotheses [9]. All models are learnt on the set of labeled instances, and the instance about which the classifiers disagree the most is selected for labeling. To measure the level of disagreement we use the vote entropy [10]; the instance $x^{*}_{\mathrm{VE}}$ is queried for which

$$x^{*}_{\mathrm{VE}} = \arg\max_{x} \left( - \sum_{i=1}^{n} \frac{V(y_i)}{p} \log \frac{V(y_i)}{p} \right),$$

where n is the number of classes, p is the number of models in the committee and $V(y_i)$ represents how many classifiers decided that instance x belongs to class $y_i$.
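A minimal sketch of the vote entropy follows, assuming the committee's predictions are given as one list of class labels per instance. Classes that receive no vote contribute zero to the sum, so they are simply skipped; the sleep-stage labels are toy data.

```python
import math
from collections import Counter

def vote_entropy(votes):
    """Vote entropy of one instance; votes holds one predicted class per member."""
    p = len(votes)
    return -sum((v / p) * math.log(v / p) for v in Counter(votes).values())

def vote_entropy_query(committee_votes):
    """Return the index of the instance with the largest vote entropy."""
    return max(range(len(committee_votes)),
               key=lambda i: vote_entropy(committee_votes[i]))

committee_votes = [
    ["N2", "N2", "N2", "N2"],      # full agreement, entropy 0
    ["N1", "N2", "REM", "wake"],   # full disagreement, entropy log(4)
    ["N2", "N2", "N3", "N3"],      # split vote, entropy log(2)
]
print(vote_entropy_query(committee_votes))  # 1
```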

Finally, let us mention the third query strategy, which we use as a baseline for comparing the performance of the described strategies: random sampling (RS). As the name suggests, in each iteration of the algorithm a randomly selected instance is queried.

2.2. Advantages and Disadvantages of Active Learning

In this section we discuss a few advantages and disadvantages that can be encountered when active learning is used.

- Advantages of Active Learning

  - Saving of time and money: there is no need to annotate a large amount of data; it is sufficient to label only the most informative instances.

  - Online adaptation of the classifier: the classifier is automatically retrained when new, unseen instances are available.

- Disadvantages of Active Learning

  - Application-dependent selection of the query strategy: the query strategy has to be chosen carefully according to, e.g., the chosen classifier (margin uncertainty sampling is suitable when the classifier computes posterior probabilities [3]) or some specific relationship among data instances in the observation space (in which case density-weighted methods are useful [11]).

  - Sensitivity to the initialisation: when the process is not properly initialised, the performance of the chosen classifier is insufficient during the first several iterations (the so-called “cold start problem” [12]), which can result in slower convergence of the learning process.

3. Dataset

In our work, a dataset consisting of 36 full-night PSG recordings was used. Eighteen healthy individuals and 18 insomniac patients were examined using the standard 10–20 EEG montage [13] at the National Institute of Mental Health, Czech Republic. Although EOG and EMG were also recorded, only EEG signals were used in this study because of the varying quality of both the EOG and EMG recordings. A detailed specification of the measured group is provided in Table 1.

Table 1.

Information about the PSG recordings of the tested group of healthy individuals and insomniac patients.

All records were split into 30-second segments without overlap. Twenty-one features were extracted from each of the EEG derivations used (namely: Fp1, Fp2, F3, F4, C3, C4, P3, P4, F7, F8, T3, T4, T5, T6, Fz, Cz, Pz, O1, O2), i.e., the total number of features was $21 \times 19 = 399$. The list of all computed features is shown in Table 2. The continuous wavelet transform (CWT) was used to obtain the frequency spectrum from which features 7–21 were computed.

Table 2.

List of extracted features.

The features listed in Table 2 were aggregated by taking their median value over all EEG derivations. As a result, every 30-second segment was described by 21 features.
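The aggregation step can be sketched as follows, assuming that the features of one segment are stored as one list per EEG channel. The function name and the toy numbers are illustrative; the real data would have 19 channels and 21 features per channel.

```python
from statistics import median

def aggregate_over_channels(segment_features):
    """Collapse a (channels x features) table into a single feature vector.

    segment_features -- list of per-channel feature lists for one segment
    Returns one value per feature: its median over all channels.
    """
    return [median(column) for column in zip(*segment_features)]

# Toy segment with 3 channels and 2 features.
segment = [
    [1.0, 10.0],
    [2.0, 30.0],
    [9.0, 20.0],
]
print(aggregate_over_channels(segment))  # [2.0, 20.0]
```

Compared with the mean, the median is less affected by a single channel with an outlying value, such as 9.0 in the first feature above.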

4. Proposed Method

As we mentioned in Section 2.2, active learning often suffers from the cold start problem. It should be noted that at the beginning of the active learning process the initial set of labeled instances contains only a few instances described by relatively many features, which can lead to overfitting. In our previous work [14] we proposed a method which enlarges the initial set of labeled instances by means of a 1-nearest neighbour classifier without any additional information about the classes of the selected instances.

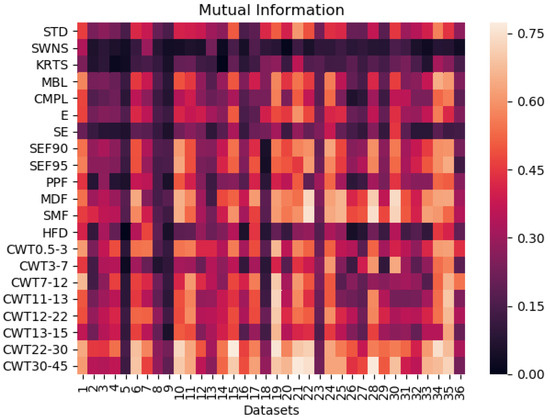

It is also possible to tackle this problem by reducing the feature space. This can be done, e.g., by calculating the mutual information between features and labels [15], i.e., by detecting the features which describe the classes of instances best. The values of the mutual information between individual features and labels for all datasets are shown in Figure 1. At first sight it is clear that skewness, kurtosis and spectral entropy do not describe the classes of instances well (the values of the mutual information for these features approach zero for all datasets). Apart from that, there is no obvious pattern indicating that some features describe the labels better than others, so a smaller subset of features cannot be reliably selected.
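As a rough illustration of such a feature ranking, the mutual information between one feature and the labels can be estimated from a histogram. The equal-width binning and the function name are assumptions made for this sketch; the estimator actually used for Figure 1 is not specified in the text.

```python
import math
from collections import Counter

def mutual_information(feature, labels, n_bins=10):
    """Histogram estimate of I(feature; label) in nats.

    The feature is discretised into n_bins equal-width bins; this binning
    is an illustrative assumption, not the estimator used in the paper.
    """
    lo, hi = min(feature), max(feature)
    width = (hi - lo) / n_bins or 1.0        # guard against constant features
    bins = [min(int((v - lo) / width), n_bins - 1) for v in feature]
    n = len(feature)
    joint = Counter(zip(bins, labels))       # counts of (bin, class) pairs
    count_x = Counter(bins)
    count_y = Counter(labels)
    return sum(
        (c / n) * math.log(c * n / (count_x[b] * count_y[y]))
        for (b, y), c in joint.items()
    )

labels = ["wake"] * 4 + ["N2"] * 4
informative = [0.1, 0.2, 0.3, 0.2, 0.9, 1.0, 1.1, 1.0]    # separates the classes
uninformative = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]  # independent of the class

print(mutual_information(informative, labels, n_bins=2))    # log(2), about 0.693
print(mutual_information(uninformative, labels, n_bins=2))  # 0.0
```

A feature whose value approaches zero in this measure, as reported for skewness, kurtosis and spectral entropy, carries almost no information about the class.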

Figure 1.

Mutual information between features and corresponding labels for all datasets.

Our idea was to utilise a property of the query-by-committee framework. We created an ensemble of simple linear classifiers, each of which was learnt on only one feature. The instance about whose class the classifiers disagreed the most was queried, classified, and moved to the set of labeled instances. If several instances had the same level of disagreement, one of them was randomly selected and queried. We hypothesise that with the proposed version of query-by-committee the error on testing data will be smaller (i.e., the classifier will be well adapted to the data) in the crucial first iterations of the algorithm.
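The idea of a committee with one member per feature can be sketched as below. The text specifies only "simple linear classifiers", so the nearest-class-mean rule used for each member is a stand-in chosen for this illustration, and all function names are hypothetical. Disagreement is measured by the vote entropy and ties are broken at random, as described above.

```python
import math
import random
from collections import Counter

def fit_one_feature_member(labeled, j):
    """Nearest-class-mean rule on feature j (stand-in for a simple linear classifier)."""
    by_class = {}
    for x, y in labeled:
        by_class.setdefault(y, []).append(x[j])
    means = {y: sum(v) / len(v) for y, v in by_class.items()}
    return lambda x: min(means, key=lambda y: abs(x[j] - means[y]))

def vote_entropy(votes):
    """Vote entropy of the committee's predictions for one instance."""
    p = len(votes)
    return -sum((v / p) * math.log(v / p) for v in Counter(votes).values())

def qbc_query(labeled, unlabeled, rng=random):
    """Index of the unlabeled instance on which the per-feature committee disagrees most."""
    n_features = len(unlabeled[0])
    committee = [fit_one_feature_member(labeled, j) for j in range(n_features)]
    scores = [vote_entropy([member(x) for member in committee]) for x in unlabeled]
    best = max(scores)
    # Instances with the same level of disagreement are tie-broken at random.
    candidates = [i for i, s in enumerate(scores) if math.isclose(s, best)]
    return rng.choice(candidates)

# Toy data with two features and two classes.
labeled = [((0.0, 0.0), "wake"), ((1.0, 1.0), "N2")]
unlabeled = [(0.1, 0.2), (0.9, 0.1), (0.8, 0.9)]
print(qbc_query(labeled, unlabeled))  # 1
```

Only the second instance splits the committee (feature 0 votes N2, feature 1 votes wake), so it is the one queried. Because each member sees a single feature, every member has few parameters to fit even when the set of labeled instances is very small.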

5. Experiments and Results

We split each dataset into a training and a testing subset; the training set always contained 60% of all instances of a dataset. The training data were divided into the set of unlabeled data and the set of labeled data in such a way that five instances of each class were randomly chosen and added to the set of labeled instances; the set of unlabeled instances was formed by the rest of the training instances. A linear classifier was learnt on the training data and then used to estimate the test error E on the testing data. E is defined in terms of the percentage of incorrectly classified testing instances of each class.
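The data split and initialisation described above can be sketched as follows. The text does not say whether the split is stratified, so this sketch assumes a plain random split; the function name, the seed and the toy labels are illustrative.

```python
import random

def split_and_initialise(labels, train_fraction=0.6, per_class=5, seed=0):
    """Split into training/testing indices and seed the set of labeled instances.

    Returns (labeled, unlabeled, test) as lists of indices into labels.
    Assumes a plain (non-stratified) random split.
    """
    rng = random.Random(seed)
    indices = list(range(len(labels)))
    rng.shuffle(indices)
    n_train = round(train_fraction * len(indices))
    train, test = indices[:n_train], indices[n_train:]

    labeled = []
    for cls in sorted(set(labels[i] for i in train)):
        members = [i for i in train if labels[i] == cls]
        # Five randomly chosen instances of each class (fewer if the class is rare).
        labeled.extend(rng.sample(members, min(per_class, len(members))))
    chosen = set(labeled)
    unlabeled = [i for i in train if i not in chosen]
    return labeled, unlabeled, test

# Toy recording with two classes of 50 segments each.
labels = ["wake"] * 50 + ["N2"] * 50
labeled, unlabeled, test = split_and_initialise(labels)
print(len(labeled), len(unlabeled), len(test))  # 10 50 40
```

Repeating the experiment with a different initialisation then amounts to calling the function with a different `seed`.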

The whole process followed the previously mentioned steps of active learning (see Section 2). Note that E was computed in each iteration.

In order to get more reliable results, the whole process was repeated ten times (each time with a different initialisation) and the estimates of E for each iteration were averaged.

We compared three query strategies: random sampling, margin uncertainty sampling and our version of query-by-committee. Table 3, Table 4 and Table 5 show the mean values of E acquired in the 5th, 10th and 50th iterations, respectively.

Table 3.

Values of the average of E obtained in the 5th iteration of the algorithm for all strategies. The smallest value of E within query strategies is in bold.

Table 4.

Values of the average of E obtained in the 10th iteration of the algorithm for all strategies. The smallest value of E within query strategies is in bold.

Table 5.

Values of the average of E obtained in the 50th iteration of the algorithm for all strategies. The smallest value of E within query strategies is in bold.

Let us summarise the achieved results. Except for Dataset 7, both active learning strategies reached a smaller test error than random sampling in the 5th iteration. Furthermore, the query-by-committee framework outperformed margin uncertainty sampling in 30 cases. In the 10th iteration, random sampling acquired the smallest test error only on Dataset 18, while the query-by-committee scenario reached the best results in 18 cases. Finally, in the 50th iteration, this framework beat the other strategies in 9 cases.

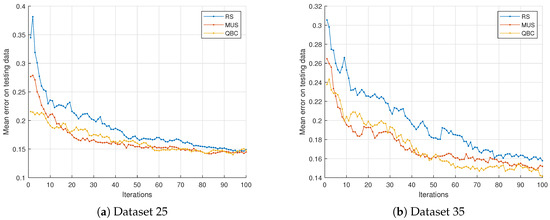

Examples of typical results are shown in Figure 2, where the averaged test error during the first 100 iterations is plotted. In both cases, the error of query-by-committee is smaller than that of the other strategies in the first several iterations; then the results are in favour of margin uncertainty sampling.

Figure 2.

Mean test error during 100 iterations of the algorithm for all used strategies.

6. Conclusions and Discussion

In this paper, we adopted the query-by-committee framework, which consists in training an ensemble of basic linear classifiers (each classifier is learnt on one feature) on the set of labeled data. The instance about whose class the classifiers disagree the most is then chosen, annotated and added to the set of labeled instances.

The results showed that the test error in the first several iterations is indeed smaller when query-by-committee is used than with margin uncertainty sampling, but margin uncertainty sampling in most cases converges faster in the following iterations. The hypothesis that the proposed variant of the query-by-committee framework can help the classifier adapt faster to high-dimensional data was thus confirmed. This leads to the conclusion that the proposed variant of the query-by-committee scenario helps to mitigate the cold start problem. Note that random sampling almost always achieved the worst results, which supports the use of active learning strategies.

The contribution of margin uncertainty sampling is invaluable, but the use of this method is often limited, because it requires a suitable classifier (as mentioned above, only classifiers which estimate posterior probabilities can be used). On the other hand, query-by-committee is more robust, as was shown, e.g., in [6]. Furthermore, our proposed method handles both the selection of the most informative instance and high-dimensional data.

This raises the question of combining both tested query strategies: the variant of query-by-committee at the beginning and then margin uncertainty sampling in the subsequent iterations. This will be tested in future work, as will the use of the proposed method on different data.

Author Contributions

Conceptualization, N.G. and M.M.; Methodology, N.G.; Software, N.G. and M.M.; Validation, N.N.; Investigation, N.G.; Writing—Original Draft Preparation, N.G.; Supervision, M.M.

Funding

The research has been supported by CVUT institutional resources (SGS grant No. SGS17/216/OHK4/3T/37).

Acknowledgments

We would like to acknowledge Martin Brunovsky, Jana Koprivova, Daniela Dudysova, and Alice Heuschneiderova from the National Institute of Mental Health, Czech Republic, and Vaclav Gerla from the Czech Institute of Informatics, Czech Technical University in Prague, Czech Republic, for their assistance, discussions, and contribution to the acquisition and evaluation of the PSG data.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| AASM | American Academy of Sleep Medicine |
| CWT | Continuous wavelet transform |
| EEG | Electroencephalography |
| EMG | Electromyography |
| EOG | Electrooculography |
| MUS | Margin uncertainty sampling |
| PSD | Power spectral density |
| PSG | Polysomnography |
| QBC | Query-by-committee |
| RS | Random sampling |

References

1. Gerla, V. Automatic Analysis of Long-Term EEG Signals. Ph.D. thesis, Czech Technical University, Prague, Czech Republic, 2012.

2. Duce, B.; Rego, C.; Milosavljevic, J.; Hukins, C. The AASM recommended and acceptable EEG montages are comparable for the staging of sleep and scoring of EEG arousals. J. Clin. Sleep Med. 2014, 10, 803.

3. Settles, B. Active Learning Literature Survey; Computer Sciences Technical Report 1648; University of Wisconsin–Madison: Madison, WI, USA, 2009.

4. Scheffer, T.; Decomain, C.; Wrobel, S. Active hidden markov models for information extraction. In Proceedings of the International Symposium on Intelligent Data Analysis, Cascais, Portugal, 13–15 September 2001; Springer: Berlin/Heidelberg, Germany, 2001; pp. 309–318.

5. Seung, H.S.; Opper, M.; Sompolinsky, H. Query by Committee. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory, COLT ’92, Pittsburgh, PA, USA, 27–29 July 1992; ACM: New York, NY, USA, 1992; pp. 287–294.

6. Ramirez-Loaiza, M.E.; Sharma, M.; Kumar, G.; Bilgic, M. Active learning: An empirical study of common baselines. Data Min. Knowl. Discov. 2017, 31, 287–313.

7. Schein, A.I.; Ungar, L.H. Active learning for logistic regression: An evaluation. Mach. Learn. 2007, 68, 235–265.

8. Lewis, D.D.; Catlett, J. Heterogeneous Uncertainty Sampling for Supervised Learning. In Proceedings of the Eleventh International Conference on Machine Learning, New Brunswick, NJ, USA, 10–13 July 1994; pp. 148–156.

9. Tomanek, K. Resource-aware Annotation Through Active Learning. Ph.D. thesis, Technical University Dortmund, Dortmund, Germany, 2010.

10. Dagan, I.; Engelson, S.P. Committee-Based Sampling For Training Probabilistic Classifiers. In Proceedings of the Twelfth International Conference on Machine Learning, Tahoe City, CA, USA, 9–12 July 1995; pp. 150–157.

11. Settles, B.; Craven, M. An Analysis of Active Learning Strategies for Sequence Labeling Tasks. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, EMNLP ’08, Honolulu, HI, USA, 25–27 October 2008; Association for Computational Linguistics: Stroudsburg, PA, USA, 2008; pp. 1070–1079.

12. Attenberg, J.; Provost, F. Why label when you can search? Alternatives to active learning for applying human resources to build classification models under extreme class imbalance. In Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Washington, DC, USA, 24–28 July 2010; pp. 423–432.

13. Klem, G.H.; Lüders, H.O.; Jasper, H.; Elger, C. The ten-twenty electrode system of the International Federation. Electroencephalogr. Clin. Neurophysiol. 1999, 52, 3–6.

14. Grimova, N.; Macas, M.; Gerla, V. Addressing the Cold Start Problem in Active Learning Approach Used For Semi-automated Sleep Stages Classification. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2249–2253.

15. Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information: criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 8, 1226–1238.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).