Applying Pedagogical Usability for Designing a Mobile Learning Application that Support Reading Comprehension †

Abstract

1. Introduction

- It is difficult for students to develop a constructed response using mobile learning applications, mainly because of HCI problems related to the screen’s size and interaction mode.

- It is difficult for the teacher to evaluate all the answers developed by students.

- It is difficult to monitor the progress of the students’ work: how many have responded, and whether they responded correctly.

- It is difficult to manage the interactions inside the classroom in order to achieve learning.

2. Technical Usability Issues in RedCoApp

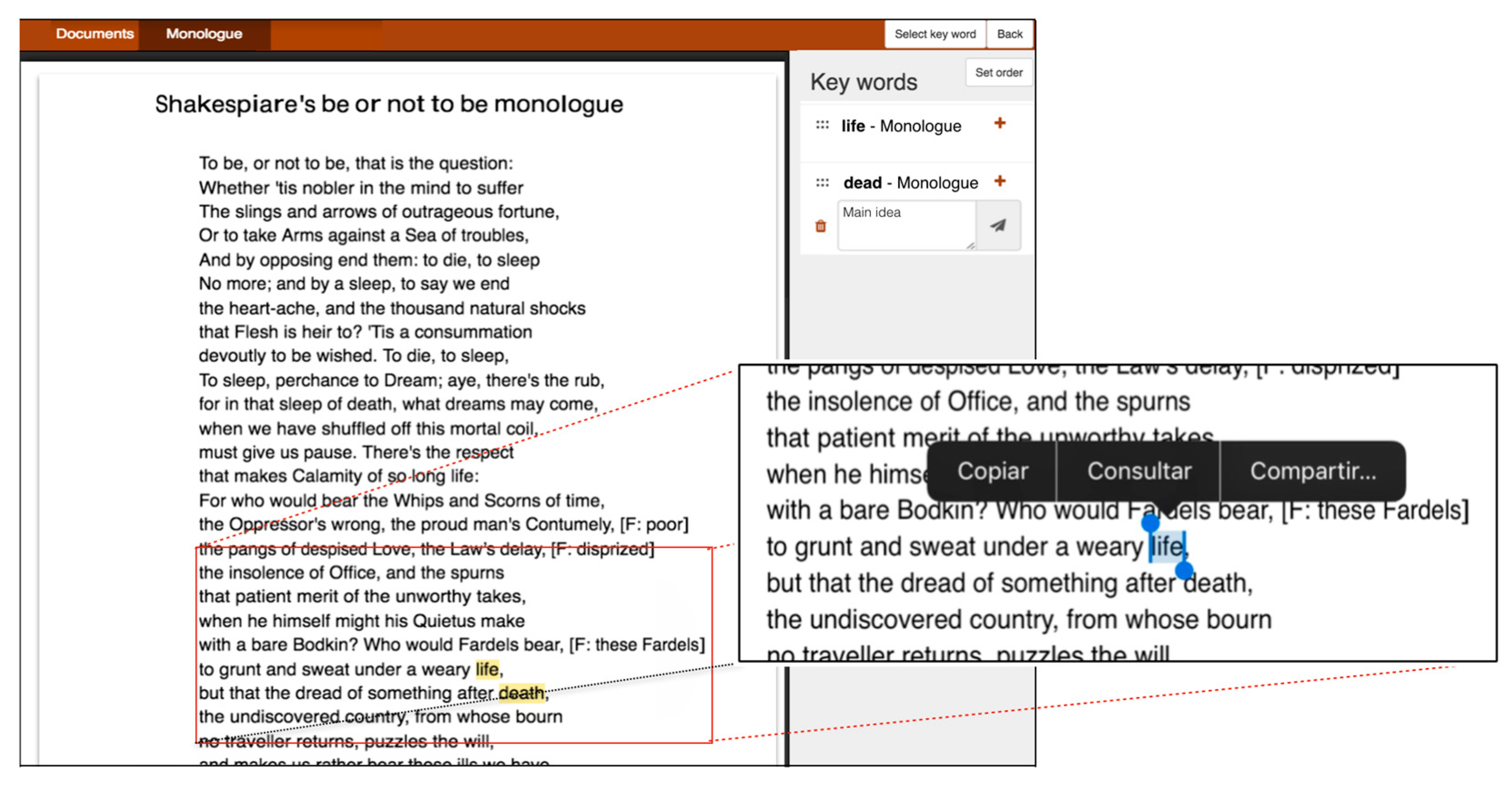

3. Brief Description of the New Version of RedCoMulApp

4. Usability for Mobile Applications in Learning Environments

4.1. Usability in the Context of Teaching and Learning

4.2. Pedagogical Usability

- Learner Control. Working memory load should be minimized, as users have limited memory capacity, usually 7 ± 2 units. Control of the technology should be taken away from the teachers and instructional designers and given to the learners. Information must be presented in meaningful, interconnected entities, and not in separate pieces that are hard to understand.

- Learner Activity. A teacher’s role depends on underlying pedagogic assumptions. Learning material should gain learners’ attention. Learners should feel that they own the goals of action and thus the results.

- Cooperative/Collaborative Learning. The constructivist view is based on social learning and knowledge sharing via collaborative tasks. Learners are able to discuss and negotiate various approaches to the learning task. Tools might support asynchronous or synchronous social navigation.

- Goal Orientation. Instructivists emphasize a few clearly defined goals; constructivist goals should also be clear, but set by the learners themselves. Task decomposition can be inductive or deductive.

- Applicability. Authentic activities and contexts: examples should be taken from authentic situations. Learned knowledge or skills should be transferable to other contexts. Human development should be considered, so that the material is relevant for the target population’s developmental stage.

- Added Value. When mobile devices and digital learning material are used in a learning situation, it is expected that this is done to introduce identifiable added value to the learning in comparison to, for example, printed material, and material produced by the teacher or the students themselves.

- Motivation. Motivation affects the whole learning process. Intrinsic (need for deep understanding) and extrinsic (need for high grades) motivation should be considered.

- Valuation of previous knowledge. Prerequisites: what is needed to accomplish the learning tasks. Meaningful encoding (elaboration): the learner is encouraged to make use of his/her previous knowledge.

- Flexibility. Pretesting and diagnostics help adapt learning material for different learners. Task decomposition into small and flexible learning units is required.

- Feedback. The computer-based application or learning material used should provide the student with encouraging and immediate feedback. Encouraging feedback increases learning motivation; immediate feedback helps the student understand the problematic parts in his/her learning.

- Instruction. Whether the application’s instructions are clear or whether a lecturer’s intervention is needed.

- Learning content relevance. The extent to which the content of the application supports students in their learning.

- Learning content structure. The degree to which the content of the application is organized in a clear, consistent and coherent way that supports learning.

- Tasks. The extent to which the tasks performed on the application help students achieve their learning goals.

- Learner variables. The degree to which learner variables are considered in the application.

- Collaborative learning. The extent to which the application allows students to study in groups.

- Ease of use. The provision by the application of clear directions and descriptions of what students should do at every stage when answering the questions of the mobile class activities.

- Learner control. The characteristics of the application that allow students to make instructional choices. The extent to which the learning material is broken down into meaningful units. The extent to which the learning material in the application is so interesting to students that it compels them to participate.

- Motivation. The degree to which the application motivates students.

4.3. Metrics for Pedagogical Usability Applied to RedCoMulApp

5. Applying Pedagogical Usability to RedCoMulApp

5.1. Subjects and Settings

5.2. Procedure

| Pedagogical Usability | |
|---|---|
| Metrics | Questions |
| M1. Learner Control | Q1. When I worked on this assignment, I felt that I, not the application, held the responsibility for my own learning. Q2. The application does not present information in a format that makes it easy to learn. Q3. Seeing anonymously the answers of my classmates and their justifications makes it easier for me to be sure of my answer, and/or correct it. |
| M2. Learner Activity | Q4. Reviewing my answers at the end of the activity does not allow me to be aware of my learning progress. Q5. Being able to answer again a question I answered erroneously supports my learning. Q6. Elaborating justifications for my answers does not allow me to be sure of the answers that I select. |
| M3. Collaborative learning | Q7. When I am using the application, it lets me know what other users are doing. Q8. Exchanging points of view, opinions and comments face-to-face with classmates does not support my learning. Q9. This application lets me talk with my classmates. Q10. This application does not support teamwork. Q11. It is pleasant to use the application with another student, using my own device. |
| M4. Goal Orientation | Q12. The application does not evaluate my learning achievements. Q13. This application tells me how much progress I have made in my learning. |
| M5. Applicability | Q14. I feel that in the future, I could not use what I learned with the support of this application. |
| M6. Add value | Q15. I learn more with this application than with other conventional methods. |
| M7. Motivation | Q16. I do not try to achieve a score as high as I can in this application. |
| M8. Feedback | Q17. This application provides me with immediate feedback on my activities. |
| M9. Learning content relevance | Q18. The text that should be read in the application does not facilitate my task of correctly answering multiple-choice questions. Q19. The multiple-choice questions are organized in a clear and coherent way, and support my learning. |
| M10. Learning content structure | Q20. Following the sequence of three phases of the application does not allow me to answer multiple-choice questions effectively. Q21. Being able to revise my answers and having the possibility of changing them together with their justification facilitates the achievement of my learning. |
| M11. Ease of use | Q22. The application clearly does not support me in what I have to do at each phase of the learning activity. Q23. In the application it is easy to follow the sequence of the “individual” phase, followed by the “anonymous” and “teamwork” collaborative re-elaboration phases. Q24. The multiple-choice questions to be answered and the justifications to be made have an appropriate format. |
| M12. Instruction | Q25. The indications to use the application are clear, and therefore, the intervention of the teacher is not necessary to be able to carry out the learning activity. Q26. In general, the task performed with the application was hard to complete. |

5.3. Activity Description

5.4. Instruments

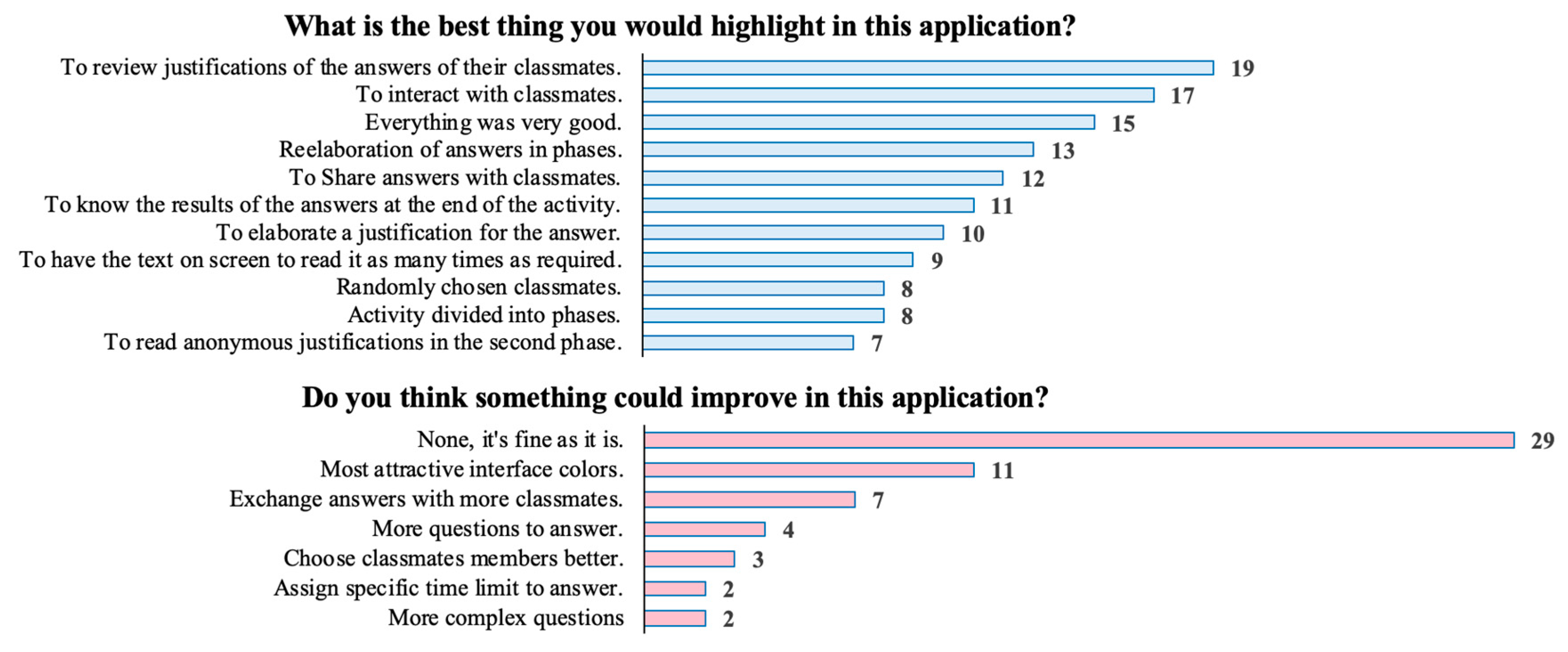

5.5. Results

- (a) M3. Collaborative Learning (4.71). Teamwork was perceived as the most outstanding aspect. In particular, questions Q8, Q9 and Q11 have the highest averages, which indicates that students strongly agree with exchanging points of view and opinions through face-to-face conversations.

- (b) M6. Add value (4.65). Answering multiple-choice questions similar to those they will have to answer in the PSU test was perceived as a relevant benefit. Students also appreciated that in the following two phases they could learn more, by reworking their answers based on the comments on the answers of others (“anonymous” phase), and by reworking their answers collaboratively with their peers (“teamwork” phase). Students usually prepare for the PSU by taking tests that consist only of answering multiple-choice questions. That is, RedCoMulApp turns a summative activity into a formative one.

- (c) M1. Learner Control (4.63). This means that, according to students, the application enables them to manage the information required to facilitate their learning process.

- (d) M11. Ease of use (4.61). According to the students’ opinion, it is easy to perform the tasks of answering the multiple-choice questions and justifying their answers during the three phases of the activity.

- (e) M7. Motivation (4.56), and M4. Goal Orientation (4.56). This evaluation shows that students are highly motivated to perform the activity and obtain the highest possible results. Students perceive that RedCoMulApp supports them in their effort to obtain correct results, informing them about the progress level achieved at the end of the learning activity.

- (a) When creating the educational activity, the teacher can add as many questions as wanted.

- (b) In addition to the random criterion for forming the teams, other criteria can be used: based on the responses collected in the “individual” phase, grouping either members with the same answers or members with different answers; or based on the academic performance of the students.

- (c) The teacher can verbally inform the students of the time they have in each phase. Once this time has elapsed, the teacher activates the next phase. The application does not implement a functionality that sets a time limit for answering the questions in each of the phases.

- (d) The teacher chooses the complexity of the activity when creating it.
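The team-formation criteria in item (b) can be illustrated with a minimal sketch. It is not RedCoMulApp's implementation: the function `form_teams`, its parameters, and the student names are hypothetical, and the academic-performance criterion is left out because the text does not say how performance is measured.

```python
import random
from collections import defaultdict
from itertools import zip_longest
from typing import Dict, List, Optional


def form_teams(answers: Dict[str, str], size: int,
               criterion: str = "random",
               seed: Optional[int] = None) -> List[List[str]]:
    """Split students into teams of up to `size` members.

    `answers` maps a (hypothetical) student id to the option chosen in
    the "individual" phase.
    """
    students = sorted(answers)  # deterministic starting order
    if criterion == "random":
        random.Random(seed).shuffle(students)
        ordered = students
    else:
        # Bucket students by the answer they gave.
        by_answer = defaultdict(list)
        for s in students:
            by_answer[answers[s]].append(s)
        groups = [by_answer[a] for a in sorted(by_answer)]
        if criterion == "same":
            # Students with the same answer end up next to each other.
            ordered = [s for g in groups for s in g]
        elif criterion == "different":
            # Take one student per answer in turn, so neighbours differ
            # for as long as several answers remain.
            ordered = [s for row in zip_longest(*groups)
                       for s in row if s is not None]
        else:
            raise ValueError(f"unknown criterion: {criterion}")
    # Cut the ordered list into consecutive teams.
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]


answers = {"ana": "A", "ben": "B", "caro": "A",
           "dani": "C", "eli": "B", "fran": "A"}
print(form_teams(answers, 3, "same"))
print(form_teams(answers, 3, "different"))
```

Because teams are cut from an ordered list, the "same" and "different" criteria are only satisfied as far as the distribution of answers allows; a team at a boundary may still mix or repeat answers.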

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

Likert scale response frequencies (columns 1 to 5), mean per question (Mean Q.) and mean per metric (Mean Mt.):

| Metrics | Q. | 1 | 2 | 3 | 4 | 5 | Mean Q. | Mean Mt. |
|---|---|---|---|---|---|---|---|---|
| M1. Learner Control | Q1. | 0 | 0 | 14 | 17 | 47 | 4.42 | 4.63 |
| | Q2. | 52 | 21 | 5 | 0 | 0 | 4.60 | |
| | Q3. | 0 | 0 | 2 | 7 | 69 | 4.86 | |
| M2. Learner Activity | Q4. | 52 | 21 | 5 | 0 | 0 | 4.60 | 4.55 |
| | Q5. | 0 | 2 | 7 | 22 | 47 | 4.46 | |
| | Q6. | 49 | 21 | 3 | 1 | 0 | 4.59 | |
| M3. Collaborative Learning | Q7. | 0 | 1 | 4 | 17 | 55 | 4.64 | 4.71 |
| | Q8. | 65 | 11 | 2 | 0 | 0 | 4.81 | |
| | Q9. | 0 | 0 | 1 | 16 | 61 | 4.77 | |
| | Q10. | 53 | 22 | 3 | 0 | 0 | 4.64 | |
| | Q11. | 0 | 0 | 3 | 16 | 59 | 4.72 | |
| M4. Goal Orientation | Q12. | 46 | 27 | 4 | 1 | 0 | 4.51 | 4.56 |
| | Q13. | 0 | 0 | 2 | 27 | 49 | 4.60 | |
| M5. Applicability | Q14. | 51 | 19 | 5 | 3 | 0 | 4.51 | 4.51 |
| M6. Add Value | Q15. | 0 | 1 | 6 | 12 | 59 | 4.65 | 4.65 |
| M7. Motivation | Q16. | 47 | 28 | 3 | 0 | 0 | 4.56 | 4.56 |
| M8. Feedback | Q17. | 0 | 0 | 7 | 26 | 45 | 4.49 | 4.49 |
| M9. Learning Content Relevance | Q18. | 40 | 21 | 12 | 3 | 2 | 4.21 | 4.21 |
| | Q19. | 0 | 2 | 18 | 19 | 39 | 4.22 | |
| M10. Learning Content Structure | Q20. | 42 | 28 | 8 | 0 | 0 | 4.44 | 4.52 |
| | Q21. | 0 | 0 | 6 | 19 | 53 | 4.60 | |
| M11. Ease of use | Q22. | 61 | 16 | 1 | 0 | 0 | 4.77 | 4.61 |
| | Q23. | 0 | 1 | 6 | 21 | 50 | 4.54 | |
| | Q24. | 48 | 22 | 8 | 0 | 0 | 4.51 | |
| M12. Instruction | Q25. | 0 | 0 | 9 | 16 | 53 | 4.56 | 4.54 |
| | Q26. | 49 | 21 | 8 | 0 | 0 | 4.53 | |
| Total | | | | | | | | 4.55 |
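As a worked check of the arithmetic in the table, the sketch below recomputes the means for M1. Learner Control from the response frequencies. It assumes that negatively worded items such as Q2 are reverse-scored (a response of 1 counts as 5, and so on). That assumption is inferred from the reported values and is not stated in the table itself; the function name `likert_mean` is hypothetical.

```python
from typing import Sequence


def likert_mean(freq: Sequence[int], reverse: bool = False) -> float:
    """Mean score from response frequencies for scale points 1..len(freq).

    With reverse=True the item is treated as negatively worded, so the
    lowest scale point receives the highest score (an assumption).
    """
    n_points = len(freq)
    points = range(1, n_points + 1)
    scores = [n_points + 1 - p for p in points] if reverse else list(points)
    total = sum(freq)  # number of respondents for this question
    return sum(s * f for s, f in zip(scores, freq)) / total


# Frequencies copied from the rows of M1. Learner Control.
q1 = likert_mean([0, 0, 14, 17, 47])               # positively worded
q2 = likert_mean([52, 21, 5, 0, 0], reverse=True)  # negatively worded
q3 = likert_mean([0, 0, 2, 7, 69])                 # positively worded

# The metric mean is the unweighted average of its question means.
m1 = (q1 + q2 + q3) / 3
print(f"{q1:.2f} {q2:.2f} {q3:.2f} {m1:.2f}")  # prints "4.42 4.60 4.86 4.63"
```

Under this reading, a high mean on a negatively worded question expresses disagreement with the negative statement, so all means in the table point in the same direction.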

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zurita, G.; Baloian, N.; Peñafiel, S.; Jerez, O. Applying Pedagogical Usability for Designing a Mobile Learning Application that Support Reading Comprehension. Proceedings 2019, 31, 6. https://doi.org/10.3390/proceedings2019031006
