Abstract

Timing requirements are present in many current context-aware and ambient intelligent applications. Such applications usually demand timely responses to context changes and user interactions. The current work introduces an approach that combines knowledge-driven and data-driven methods to check these requirements in the area of human activity recognition. Such recognition is traditionally based on machine learning classification algorithms. Since these algorithms are highly time-consuming, alternative approaches are needed when timing requirements are tight. In this case, the main idea consists of taking advantage of semantic ontology models that maintain an acceptable level of accuracy during the recognition process while achieving the required response times. The experiments performed, reported in the final section of the work, support this idea: the timing requirements were checked while acceptable recognition levels were kept.

1. Introduction

Context-aware and ambient intelligent environments are increasingly present in our daily lives. These environments and their related applications usually introduce timing requirements associated with the need to provide a suitable response when context changes and user interactions occur. This is particularly true in the case of human activity recognition (HAR) tasks, which require sensing and detecting specific user states in short periods of time. The current work introduces an approach to check these requirements in the HAR area. Such an approach is focused on the possibilities offered by wearable devices (smartwatches, smart-bands or mobile phones) to gather multiple sensor data and process them [1,2]. However, these devices commonly provide very different computational capabilities, with low performance in some cases, and they can concurrently run a number of applications (e.g., background apps) that overload their processing power. This makes it difficult to obtain timely recognition results when user activities change quickly. Moreover, the classification algorithms involved in HAR tasks are commonly based on machine learning (ML) methods, which have a high computational cost and are highly time-consuming. Therefore, timing requirements have to be carefully balanced against acceptable recognition levels in this HAR context.

There are multiple initiatives related to the recognition of activities based on the sensor values obtained from mobile and wearable devices, which are reported in reviews and surveys [3,4,5]. Mobile phones or smartwatches provide a rich set of sensing capabilities coming from embedded sensors such as accelerometers, compasses, gyroscopes, GPS, microphones, or cameras. In particular, accelerometer data has been used to classify several basic human movements in real time [6,7]. These proposals offer accurate results in recognition tasks, but they are not directly concerned with the need to check timing requirements when monitoring human activities in this context. HAR systems therefore have to be designed both to recognize activities suitably and to fulfill specific temporal constraints. Lara and Labrador [8] proposed a mobile platform for real-time HAR that evaluated the response times of recognition tasks. MobiRAR [9] is another real-time human activity recognition system based on mobile devices. Predictions using wearable sensor data were also analyzed to check their latency times [10].

Approaches used for sensor-based activity recognition have traditionally been divided into two main categories [11]: data-driven methods, which are based on ML techniques, and knowledge-driven methods, which introduce prior semantic domain knowledge to carry out such recognition. Some authors such as Riboni et al. [12] stated that knowledge-driven approaches were weak in dealing with temporal information, so alternative solutions such as dynamic sensor event segmentation techniques [13] or the analysis of temporal sub-windows of the dataset [14] could be applied. Moreover, there are also hybrid proposals that combine both types of approaches in order to obtain a balance between dealing with temporal constraints and classification accuracy. The current work is based on a framework that builds smaller training models, which can be applied in statistical classification algorithms, and also takes advantage of ontology models that enable accurate recognition levels while adjusting their timing responses.

The remainder of the document is structured as follows. Section 2 reviews works that combine data-driven classification algorithms with ontology models with the purpose of improving user activity recognition. Section 3 describes the approach proposed in the current work, beginning with the basic concepts that underlie it and providing an overview of its global architecture. Section 4 introduces an example case study to show the application of the approach, presenting several scenarios in which HAR timing and accuracy requirements can be assessed. These scenarios are tested in Section 5 by measuring response times and recognition levels. Finally, Section 6 discusses some conclusions and further work.

2. Related Work

This section reviews studies in which sensor-based HAR methods combine different perspectives such as sensor data processing, knowledge-driven models or temporal checking mechanisms. Such references are based on hybrid approaches that try to take advantage of the capabilities available in each perspective. COSAR [15] is a good example of a hybrid reasoning framework for context-aware activity recognition that ontologically refines statistical predictions. Chen et al. [16] also presented an ontology-based hybrid approach that models user activities by learning from data about specific user profiles. A reverse approach was proposed by Azkune et al. [17] to extend knowledge-driven activity models through data-driven techniques.

One of the main problems in these hybrid approaches is time management and how they can deal with timing requirements in activity recognition areas. Riboni et al. [18] proposed the unsupervised recognition of interleaved activities by using time-aware inference rules and considering temporal constraints. The approach of Salguero et al. [19] was focused on a time-based pre-segmentation process that dynamically defined window sizes, and the Knowledge-based Collaborative Active Learning proposal [20] addressed the possibility of segmenting the flows of sensor events in real time. OSCAR [21] is another hybrid framework of knowledge-driven techniques based on ontological constructs and temporal formalisms by means of segmentation processes, complemented with data-driven algorithms for the recognition of parallel and interleaved activities. The semantics-based approach to real-time sensor data segmentation proposed by Triboan et al. [22] is also a good example of combining several perspectives in activity recognition, as is the proposal by Liu et al. [23] for timely daily activity recognition from incomplete streams of sensor events. Therefore, there are several proposals focused on combining different approaches to improve the efficiency of traditional classification algorithms with the support of semantic models while also checking timing requirements in the HAR area.

3. Approach

3.1. Approach Fundamentals

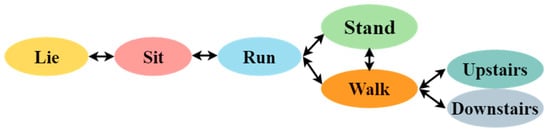

The current approach addresses the processing of mobile sensor information in order to recognize user activities and their associated states in specific HAR domains. So, it is necessary to define a set of basic concepts that can be used through the different stages or classification processes that characterize human activity recognition. The first concept of interest is the so-called user state, which represents those features that can be associated with the actions or physical activities performed by individuals. Table 1 shows a list of common user states along with a short description and a label that identifies every state. These user states can represent static features (e.g., sitting or lying) or dynamic actions such as running or going upstairs. State transitions are shown in Figure 1 as a graph displaying the potential connection among the considered user states.

Table 1. User states overview.

Figure 1. Transition states graph.

Then, activity events are used to represent any kind of circumstance that is occurring at a given time in the context of human activities. In the current approach, these events are usually related to user states $a_i$, which are part of a set of activities labeled as $A$:

$$A = \{a_1, a_2, \ldots, a_n\}$$

and they can be recognized as the activity progresses or even be attached to transitions between them. Moreover, a special kind of activity event can consist of the action of a user who selects which type of state is expected or triggered by them. In addition, $T$ represents the timestamps corresponding to the set of monitored activities $A$:

$$T = \{t_1, t_2, \ldots, t_n\}$$

An instance of an activity event is defined as follows, linking each user state with a timestamp $t_i$:

$$(a_i, t_i), \quad a_i \in A, \; t_i \in T$$

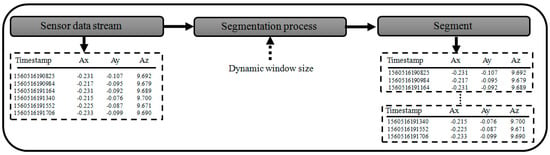

Activity recognition systems involve several types of sensors to obtain streams of data that can be used to classify users’ activities and determine their associated user states. In this sense, the sensor stream may be segmented into several data windows to improve its processing. Such windows can have static or dynamic sizes (measured in time units), and consecutive windows may overlap, sharing sensor samples as the window “slides” over time. This concept of a sliding window is widely employed in HAR processes, and several ranges of window sizes can be used. Windowing techniques have shown their effectiveness for the recognition of static as well as dynamic human activities [24]. In the current work, a time-based windowing method is used to segment the data gathered from an accelerometer sensor into time windows. This method merges sensor data that are collected during a specific time interval. Instead of defining static windows of a fixed length, it is possible to dynamically adjust the window size. Figure 2 shows a schema of such a windowing mechanism.

Figure 2. Overview of segmentation stages.
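The time-based windowing described above can be illustrated with a minimal Python sketch (ARApp itself is written in Java; Python is used here only for brevity). The function name `sliding_windows`, the `overlap` parameter and the sample layout are assumptions made for illustration and are not taken from the ARApp implementation.

```python
from typing import List, Tuple

# One accelerometer reading: (timestamp in seconds, x, y, z).
Sample = Tuple[float, float, float, float]


def sliding_windows(samples: List[Sample], window_s: float,
                    overlap: float = 0.0) -> List[List[Sample]]:
    """Segment timestamp-ordered samples into time-based windows.

    window_s is the window length in seconds; overlap is the fraction
    (0 <= overlap < 1) shared by two consecutive windows.
    """
    if window_s <= 0 or not 0 <= overlap < 1:
        raise ValueError("window_s must be > 0 and overlap in [0, 1)")
    if not samples:
        return []
    step = window_s * (1 - overlap)   # how far the window slides each time
    start, last = samples[0][0], samples[-1][0]
    windows = []
    while start <= last:
        window = [s for s in samples if start <= s[0] < start + window_s]
        if window:                    # skip intervals with no readings
            windows.append(window)
        start += step
    return windows


# Hypothetical 1 Hz readings covering six seconds (device at rest).
readings = [(float(t), 0.0, 0.0, 9.8) for t in range(6)]
static = sliding_windows(readings, window_s=2.0)                 # no overlap
sliding = sliding_windows(readings, window_s=2.0, overlap=0.5)   # 50% overlap
```

Because the window length is an ordinary argument, a caller could shorten it when timing requirements are tight and lengthen it when accuracy matters more, which is the kind of dynamic adjustment the text refers to.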

Another basic concept in the current approach is the time similarity that measures the distance among sensor samples. This measurement simT is based on computing intervals bounded by timestamps associated with the occurrence of such samples and using the next function:

where and are the associated timestamps of the sensed sample and , respectively. In order to support the process of inferring user states, it is possible to assign weights to the occurrence of each semantic activity inference. This mechanism focuses on the majority of inferences to determine the optimal activity label for a specific user state. In this case, the relative frequency for the semantic activity inferences j ∈ = {set of semantic activity inferences} is defined as follows:

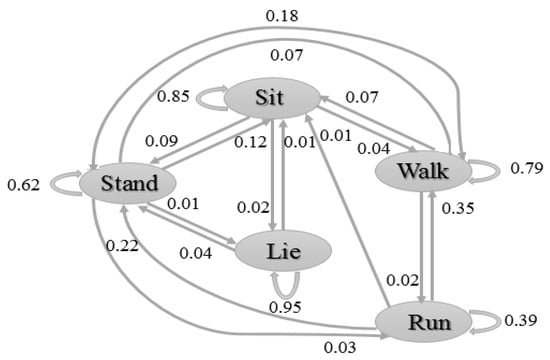

where comprises the total amount of semantic activity inferences till an instant t and is the number of times the semantic activity inference j has appeared during the interval in which is computed. The use of statistical-based techniques combined with semantic classification methods may lead to different activity inferences (user states). Hence, a transition probability matrix (TPM) [25] is applied to refine statistical activity inference through the probability of transition occurrence in human activities. A TPM is generated by evaluating the activity decisions taken in the previous time interval by a classifier algorithm. Figure 3 shows a graphical representation called the transitional probability graph (TPG) of an example of TPM that displays the transitional probabilities of different user’s activities. Using the TPM information, it is possible to infer the final user state from the available current statistical and semantic activity labels by tracing the probabilities of transition from the previous inferred activity label which occurred at to each available current activity state performed at time .

Figure 3. Transition probability graph (TPG) for five human activities. The oriented arcs between activity nodes represent the transition probability weights.
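As a rough illustration of how a TPM of this kind could be obtained, the following Python sketch estimates transition probabilities by counting consecutive label pairs in a sequence of past classifier decisions. This counting scheme is an assumption made for illustration; the construction actually used follows [25] and may differ. The function name and the label sequence are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def transition_probability_matrix(labels: List[str]) -> Dict[str, Dict[str, float]]:
    """Estimate P(current | previous) from a sequence of activity labels."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    # Count every (previous, current) pair of consecutive decisions.
    for prev, curr in zip(labels, labels[1:]):
        counts[prev][curr] += 1
    tpm = {}
    for prev, row in counts.items():
        total = sum(row.values())
        # Normalize each row so that its probabilities sum to 1.
        tpm[prev] = {curr: n / total for curr, n in row.items()}
    return tpm


# Hypothetical sequence of classifier decisions, one per time window.
decisions = ["sit", "sit", "sit", "stand", "stand", "walk", "walk", "sit"]
tpm = transition_probability_matrix(decisions)
```

Each row of the resulting nested dictionary corresponds to the outgoing arcs of one node in a TPG, with transitions that were never observed simply absent from the row.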

3.2. Framework Description

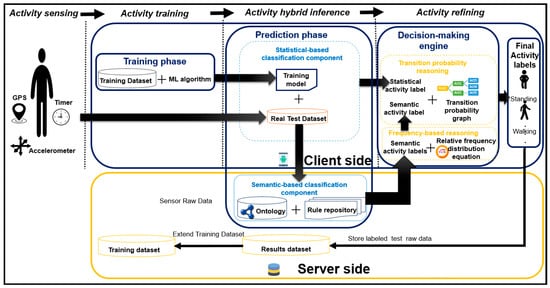

Based on the previous concepts, a framework called sensor-based human activity recognition (SHAR) is proposed, combining data-driven and knowledge-driven methods to recognize human physical activities. SHAR allows handling the classification uncertainty and improving the accuracy of recognition processes by reducing the considerable amount of training data coming from several sensor sources and by using semantic inferences. Figure 4 shows an architecture overview of the SHAR framework, which is divided into the following components: (i) Activity Sensing, (ii) Activity Training, (iii) Activity Inference, and (iv) Activity Refining. These components are organized according to a versatile client-server architecture, in the sense that they can be allocated either on the client side (e.g., a smartphone) or in a server environment, depending on the timing or context-aware requirements. First, the Activity Sensing component is used for gathering data from inertial and motion sensors embedded in smartphones or wearable devices during user activity such as movements, posture changes or gestures. This component adopts the sliding window mechanism described before in order to achieve a trade-off between recognition responsiveness and the accuracy required according to user circumstances and their surrounding environment. It also has to take into account the usage of computational resources and the energy consumed during this sensing phase. In this sense, different ranges of window sizes can be considered to obtain suitable timing responses.

Figure 4. Framework architecture.

The Activity Training component addresses one of the critical stages in the HAR process, acting as a preparation step to define an appropriate activity pattern for every physical activity that is annotated in the training set. To complete the training phase, this component applies statistical algorithms such as Random Forest (RF), Decision Tree (DT) or K-Nearest Neighbor (KNN). The output of this phase consists of a specific model that is trained when running the selected algorithm over a specific dataset. The Activity Inference component represents the prediction phase that is performed under two different classification approaches in order to achieve a timely activity recognition process. These approaches are based on two main methods:

- A statistical-based classification that runs as a conventional prediction stage in human activity recognition processes using supervised statistical learning methods. In this classification approach, the activity patterns, previously stored as a training model, are exploited to recognize user states by deriving statistical inference.

- A semantic-based classification that uses a human activity recognition ontology and knowledge-driven mechanisms to carry out such recognition processes even with a small amount of training data. This type of method analyses and detects the performed user activity according to contextual knowledge by applying a set of potential semantic inferences.

The Activity Refining component is a fundamental part of the HAR process and contains two reasoning routines described as follows:

- Frequency-based reasoning is in charge of handling the heterogeneity of the outputs derived from the semantic-based classification method. It offers a way to reason on these activity outputs in order to select the most appropriate inferred semantic activity by counting the occurrences of each semantic activity.

- Transitional probabilities reasoning is the final stage and it is used to match the statistical activity label inferred from supervised statistical machine learning with a semantic activity label in order to refine the prediction outcome of the current activity. Such refinement aims at adjusting the prediction through transition probability weights, which give the probability of moving from the previous state to the current one based on a TPG (see Figure 3).
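The frequency-based reasoning routine can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the tie-breaking behaviour and the sample list of inferences are hypothetical and are not taken from the SHAR implementation.

```python
from collections import Counter
from typing import Dict, List, Optional


def relative_frequencies(inferences: List[str]) -> Dict[str, float]:
    """Relative frequency of each semantic inference: occurrences / total."""
    total = len(inferences)
    if total == 0:
        return {}
    return {label: n / total for label, n in Counter(inferences).items()}


def most_frequent_inference(inferences: List[str]) -> Optional[str]:
    """Pick the inference with the highest relative frequency.

    Ties are resolved in favour of the label that appeared first,
    which is an arbitrary choice made for this sketch.
    """
    freqs = relative_frequencies(inferences)
    if not freqs:
        return None
    return max(freqs, key=freqs.get)


# Hypothetical outputs of several semantic inferences for one time window.
semantic_outputs = ["stand", "sit", "stand", "stand"]
frequencies = relative_frequencies(semantic_outputs)
selected = most_frequent_inference(semantic_outputs)
```

With these hypothetical outputs, "stand" accounts for three of the four inferences and is therefore the label passed on to the transitional probabilities reasoning.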

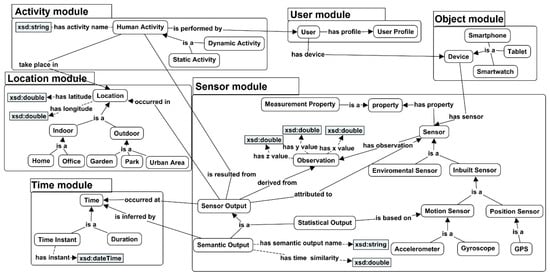

The SHAR framework relies on a HAR ontology called Modular Activity Recognition Ontology (MAROlogy), which is usually accessed by means of server-side RESTful Web services. However, it is also possible to have a client-side version of MAROlogy containing a minimal set of rules in case the server is not accessible. The MAROlogy model is described in terms of interrelated modules representing different concepts related to physical activity, user state, sensor devices, location, time, or objects. Figure 5 shows an abstract overview of the MAROlogy model divided into its ontology modules and including their general relationships. Table 2 shows a short description of these basic ontology modules.

Figure 5. Overview of the Modular Activity Recognition Ontology (MAROlogy) model including its main modules.

Table 2. Ontology module descriptions.

3.3. Inference Rules

The proposed framework aims at improving the activity recognition process by refining statistical classification tasks and trying to minimize the computational time of these tasks when strict timing requirements are demanded. Thus, the proposed ontology is complemented with reasoning rules that deal with the transition and temporal relationships among human activities. They can be divided into two different types: (i) transition-aware rules and (ii) time-aware rules. In the first case, special dependencies among different activities performed by humans in daily life can be considered. For example, the “walking” state might not occur immediately after a “sitting” state. With this purpose, transition-aware rules are defined to establish the potential relationship between previous and current activities derived from sensor data in the context of a transition event. In the second case, time-aware rules assume that users do not continuously change their activities over time. Instead, users tend to perform the same activity for a certain time period before moving to a new activity or state, although the application of these rules mostly depends on the domain in which such activities are carried out. Table 3 shows an excerpt of a transition-aware rule where a “sitting” state has to be followed only by the “sitting”, “standing” or “lying” activity states.

Table 3. Transition-aware rule representation (excerpt).
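To make the two rule types more concrete, the following Python sketch encodes them as plain functions. It is not the ontology rule syntax used in MAROlogy. Only the successors of the “sitting” state come from the text; the function names, the use of an absolute timestamp difference as time similarity and the dwell threshold are assumptions made for illustration.

```python
from typing import Dict, Set

# Transition-aware rule: allowed successors of "sitting", as stated in the text.
ALLOWED_NEXT: Dict[str, Set[str]] = {
    "sitting": {"sitting", "standing", "lying"},
}


def transition_allowed(previous: str, current: str,
                       rules: Dict[str, Set[str]] = ALLOWED_NEXT) -> bool:
    """Return True if `current` may follow `previous`.

    States without an explicit rule are left unconstrained in this sketch.
    """
    allowed = rules.get(previous)
    return True if allowed is None else current in allowed


def time_similarity(t_i: float, t_j: float) -> float:
    """Assumed form of time similarity: absolute timestamp difference (s)."""
    return abs(t_i - t_j)


def time_aware_label(previous_label: str, previous_t: float,
                     current_label: str, current_t: float,
                     min_dwell_s: float) -> str:
    """Time-aware rule: keep the previous activity if the new label arrives
    before the (hypothetical) minimum dwell time has elapsed."""
    if time_similarity(previous_t, current_t) < min_dwell_s:
        return previous_label
    return current_label


# "walking" is rejected right after "sitting"; "standing" is accepted.
rejected = transition_allowed("sitting", "walking")
accepted = transition_allowed("sitting", "standing")
# A label change only 0.5 s after the previous one is smoothed away.
kept = time_aware_label("standing", 10.0, "walking", 10.5, min_dwell_s=2.0)
```

A suitable dwell threshold would depend on the application domain, in line with the remark above that the applicability of time-aware rules is domain-dependent.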

4. Case Study

In order to show the application of the proposed approach, an experiment was carried out based on the MAROlogy model and the inference rules defined before, along with certain statistical classification methods. The main goals of the experiment were, on the one hand, to study the level of enhancement obtained with our hybrid approach for human activity recognition and, on the other hand, to assess how this enhancement affects recognition accuracy and transition delay with respect to existing HAR approaches. According to these goals, a prototype mobile application called ARApp was developed to allow the testing of the SHAR framework. First, the implementation of the ARApp prototype is described and, secondly, the building of the training model is explained. Finally, some user scenarios are presented to show how they can be used to assess the current prototype in terms of checking accuracy and timing requirements when human activities have to be recognized.

4.1. ARApp Description

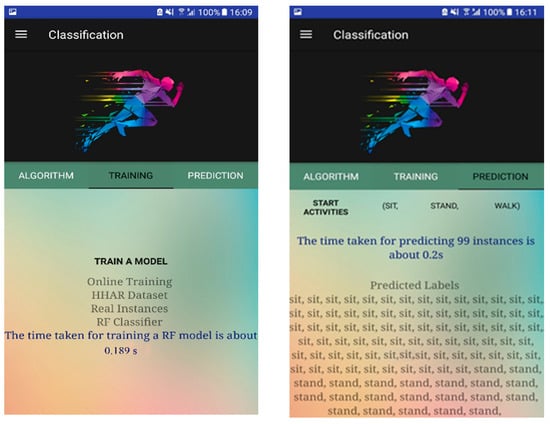

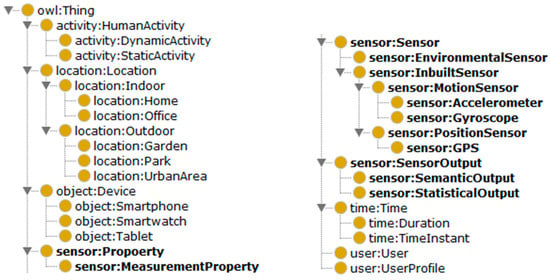

ARApp was developed in Java using the Android Studio tool and it integrates WEKA [26], a machine learning library used to classify the gathered sensor data with various ML algorithms. Figure 6 shows two sample screenshots, which display some of the ARApp functionalities such as using training datasets, selecting classification algorithms or performing recognition tasks. For the purpose of statistical classification, ARApp uses several published datasets, obtained from the UCI machine learning repository [27], as inputs for the training, classification and prediction processes. In order to validate the semantic classification process, ARApp includes a modular HAR ontology, implemented in OWL-DL using the Protégé tool as an ontology editor. Figure 7 shows an excerpt of the MAROlogy implementation.

Figure 6. ARApp mobile application prototype.

Figure 7. MAROlogy implemented with Protégé.

4.2. Training Model and Activity Recognition Scenarios

With the purpose of obtaining more reliable experimental results in the ARApp application, published datasets such as HHAR [28] provide an appropriate way to train classification algorithms. The HHAR dataset involves motion data gathered using a tri-axis accelerometer and a tri-axis gyroscope, which are pre-installed in mobile phones and smartwatches. This sensor data describes six different human activities: sitting, standing, walking, biking, stair up and stair down.

To complete the experimental stage, data were exclusively obtained from an accelerometer sensor. Moreover, the proposed application was applied in a specific HAR context. Accordingly, the experiments were limited to a small set of simple activities, because these activities produce more stable output results. Thus, a limited number of user-significant states such as sitting, standing and walking were selected from the HHAR dataset.

Two basic scenarios dealing with the recognition of these specific user states and their transitions are described. In the first scenario, a transition from the “sitting” state to the “stand” state was examined. Such a transition was sometimes difficult to detect because it could be confused with other actions (e.g., “go to lie”). In the second scenario, a “stand to walk” transition, the transition was easier to detect since it implied a movement; this circumstance could be checked in the TPG of Figure 3, in which the “stand to walk” transition had higher probability values. In every case, an activity event was triggered by the user to input the starting state, and the ARApp application had to generate a final event with the recognized state. Timestamps could be associated with such events in order to compute the delay times in these transition scenarios.

5. Results

This section describes the assessment of the proposed hybrid approach in the activity recognition area and particularly focuses on the addressed ontology model. In a previous work [29], several supervised machine learning methods were used to detect user activity by means of statistical classification algorithms. Hence, the results of applying a detection refinement along with improving state transition delays in the case study introduced before are reported.

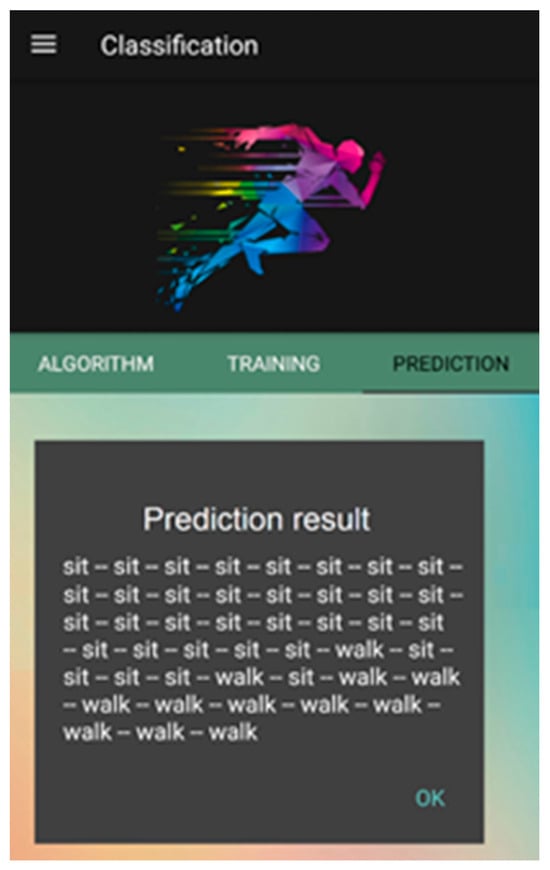

5.1. Assessment of Activity Prediction

To assess the accuracy of activity state prediction, the first scenario, in which a simple “sit-to-stand” transition occurs, is considered. Figure 8 shows a screenshot of the ARApp application that displays a test where the detected user states are distorted. The screenshot displays a sequence of “walk” labels after a “sit” state when classic ML algorithms are applied. Instead, a “stand” state should be detected, and such a distortion produced by the data-driven classification algorithms can be mitigated with the use of the proposed hybrid approach. First, the semantic-based classification, which uses ontology knowledge and reasoning rules, applies the transition-aware rule to the previous activity carried out by the user and suggests that the current user activity is “sitting” or “stand”. Independently of these semantic prediction results, the classification process then exploits the time-aware rule and puts forward a new suggestion, namely that the current user activity is equivalent to the standing activity, by measuring the time similarity between these two activities and checking whether it falls under a given threshold. Next, in order to overcome the high label imbalance at the level of the semantic classification, an activity frequency distribution is obtained to weight the occurrence of the different semantic activity outputs. These mechanisms select the “stand” state as the optimal semantic activity inference for this scenario. Finally, the most appropriate activity state from both kinds of classification processes is selected by exploiting the transition probability graph (TPG) depicted in Figure 3. This graph shows that the probability of the “sit-to-stand” transition is approximately 0.09, while the probability of the “sit-to-walk” transition is 0.04. The definitive activity inference using the refined approach in the first scenario is therefore a “sit-to-stand” transition. This example shows the effectiveness of the refinement, which reduces the classification distortion caused by the preceding data-driven approach [29] and allows for better accuracy in the prediction of activity states.

Figure 8. Misrecognition of a sit-to-stand transition in our preceding data-driven approach.
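The final refinement step of this scenario can be reproduced with a short Python sketch. Only the two transition probabilities quoted above (0.09 for “sit-to-stand” and 0.04 for “sit-to-walk”) come from the text; the function name `refine_with_tpm`, the nested-dictionary layout of the TPM and the tie-breaking behaviour are assumptions made for illustration.

```python
from typing import Dict, List


def refine_with_tpm(previous: str, candidates: List[str],
                    tpm: Dict[str, Dict[str, float]]) -> str:
    """Choose the candidate label with the highest transition probability
    from the previously inferred state.

    Transitions missing from the TPM are treated as probability 0.0, and
    ties keep the candidate listed first (an arbitrary choice here).
    """
    row = tpm.get(previous, {})
    return max(candidates, key=lambda label: row.get(label, 0.0))


# Partial TPM row holding only the two values quoted in the text.
tpm = {"sit": {"stand": 0.09, "walk": 0.04}}

statistical_label = "walk"   # distorted output of the data-driven classifier
semantic_label = "stand"     # output of the semantic classification
refined = refine_with_tpm("sit", [statistical_label, semantic_label], tpm)
```

Since 0.09 is greater than 0.04, the sketch selects “stand”, which matches the “sit-to-stand” inference reported for the first scenario.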

5.2. Improvement of Transition Delays

As is known, transitions between physical activities are usually overlooked in many approaches [2]. In this case, the presence of activity transitions is addressed in order to check their timing responsiveness and how these results can also be improved in terms of accurate detection. Transition delays were analyzed in the previous data-driven approach [29]. For the current analysis, the first scenario was tested by checking the delay in the “sit to stand” transition under a window size of 10 s. The transition delay specifies the amount of time between the activity event triggered by users when they start their activity (e.g., the “sit” state) and the event associated with the transition recognition (e.g., the final “stand” state). As can be observed in Table 4, the proposed approach improves transition detection, with an average delay value between 0.145 and 0.344 s depending on the classification algorithm used. In contrast, the preceding data-driven approach performs worse, providing an average delay value between 0.379 and 0.519 s.

Table 4. Transition delays for “sit-to-stand”.
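The delay computation described above amounts to subtracting the timestamps of two activity events. A minimal Python sketch follows; the `ActivityEvent` class, the function name and all timestamp values are hypothetical and serve only to illustrate the calculation, not to reproduce the measurements in Table 4.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class ActivityEvent:
    """A user state linked to the timestamp (in seconds) at which it occurred."""
    state: str
    timestamp: float


def transition_delay(start: ActivityEvent, recognized: ActivityEvent) -> float:
    """Time elapsed between the user-triggered start event and the event
    generated when the transition is recognized."""
    delay = recognized.timestamp - start.timestamp
    if delay < 0:
        raise ValueError("recognition event precedes the start event")
    return delay


# Two hypothetical trials of a "sit" to "stand" transition.
trials = [
    (ActivityEvent("sit", 100.0), ActivityEvent("stand", 100.25)),
    (ActivityEvent("sit", 200.0), ActivityEvent("stand", 200.5)),
]
delays = [transition_delay(start, end) for start, end in trials]
average_delay = mean(delays)   # average over the hypothetical trials
```

Averaging such per-trial delays for each classification algorithm would yield figures of the kind summarized in Table 4.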

According to these results, data-driven approaches could take more time to capture a transition due to the volume of the trained models, which are based on a large amount of sensor data, as well as the fluctuation in the prediction during transitions. Therefore, the proposed hybrid approach can not only obtain better activity detection results but also achieve them with lower response times, which leaves more time available for improving detection. This improvement is largely due to the fact that a smaller trained model enables faster activity detection and a lower use of computational resources. In addition, the combination of low-cost data-driven approaches with HAR ontology models allows refined prediction with fewer fluctuations.

6. Conclusions

The current work has presented a hybrid approach that combines knowledge-driven and data-driven methods to check timing and accuracy requirements in the context of human activity recognition. Such a combination allows the building of smaller training models, which favor a higher responsiveness of mobile applications that can then run complex classification algorithms faster. Moreover, the use of ontology models such as the one proposed in this research work, together with their associated inference rules, has enabled an improvement in the capability to detect user states and also the transitions among them. The final checking of the response times in the transition delay computation within a sample scenario has shown the usefulness of the approach in this sense. Further work will test new scenarios and case studies in which additional examples of user states can be accurately recognized and transitions can be detected in a timely manner.

References

1. Lane, N.D.; Miluzzo, E.; Lu, H.; Peebles, D.; Choudhury, T.; Campbell, A.T. A survey of mobile phone sensing. IEEE Commun. Mag. 2010, 48, 140–150.

2. Lara, O.D.; Labrador, M.A. A Survey on Human Activity Recognition using Wearable Sensors. IEEE Commun. Surv. Tutor. 2012, 15, 1192–1209.

3. Incel, O.D.; Kose, M.; Ersoy, C. A Review and Taxonomy of Activity Recognition on Mobile Phones. BioNanoScience 2013, 3, 145–171.

4. Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J. A Survey of Online Activity Recognition Using Mobile Phones. Sensors 2015, 15, 2059–2085.

5. Cornacchia, M.; Zheng, Y.; Velipasalar, S.; Ozcan, K. A Survey on Activity Detection and Classification Using Wearable Sensors. IEEE Sens. J. 2016, 17, 386–403.

6. Brezmes, T.; Gorricho, J.-L.; Cotrina, J. Activity Recognition from Accelerometer Data on a Mobile Phone. In Proceedings of the International Work-Conference on Artificial Neural Networks, Salamanca, Spain, 10–12 June 2009; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5518, pp. 796–799.

7. Kwapisz, J.R.; Weiss, G.M.; Moore, S.A. Activity recognition using cell phone accelerometers. ACM SIGKDD Explor. Newsl. 2011, 12, 74.

8. Lara, O.D.; Labrador, M.A. A mobile platform for real-time human activity recognition. In Proceedings of the 2012 IEEE Consumer Communications and Networking Conference (CCNC), Las Vegas, NV, USA, 14–17 January 2012; pp. 667–671.

9. Pham, C. MobiRAR: Real-Time Human Activity Recognition Using Mobile Devices. In Proceedings of the 2015 Seventh International Conference on Knowledge and Systems Engineering (KSE), HoChiMinh City, Vietnam, 8–10 October 2015; pp. 144–149.

10. Mascret, Q.; Bielmann, M.; Fall, C.-L.; Bouyer, L.J.; Gosselin, B. Real-Time Human Physical Activity Recognition with Low Latency Prediction Feedback Using Raw IMU Data. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 17–21 July 2018; pp. 239–242.

11. Chen, L.; Hoey, J.; Nugent, C.D.; Cook, D.J.; Yu, Z. Sensor-based activity recognition. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2012, 42, 790–808.

12. Riboni, D.; Pareschi, L.; Radaelli, L.; Bettini, C. Is ontology-based activity recognition really effective? In Proceedings of the 2011 IEEE International Conference on Pervasive Computing and Communications Workshops (PERCOM Workshops), Seattle, WA, USA, 21–25 March 2011; pp. 427–431.

13. Wan, J.; O’Grady, M.J.; O’Hare, G.M. Dynamic sensor event segmentation for real-time activity recognition in a smart home context. Pers. Ubiquitous Comput. 2015, 19, 287–301.

14. Espinilla, M.; Medina, J.; Hallberg, J.; Nugent, C. A new approach based on temporal sub-windows for online sensor-based activity recognition. J. Ambient. Intell. Humaniz. Comput. 2018, 1–13.

15. Riboni, D.; Bettini, C. COSAR: Hybrid reasoning for context-aware activity recognition. Pers. Ubiquitous Comput. 2011, 15, 271–289.

16. Chen, L.; Nugent, C.; Okeyo, G. An Ontology-Based Hybrid Approach to Activity Modeling for Smart Homes. IEEE Trans. Hum.-Mach. Syst. 2013, 44, 92–105.

17. Azkune, G.; Almeida, A.; Lopez-De-Ipina, D.; Chen, L. Extending knowledge-driven activity models through data-driven learning techniques. Expert Syst. Appl. 2015, 42, 3115–3128.

18. Riboni, D.; Sztyler, T.; Civitarese, G.; Stuckenschmidt, H. Unsupervised recognition of interleaved activities of daily living through ontological and probabilistic reasoning. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, UbiComp ’16, Heidelberg, Germany, 12–16 September 2016; pp. 1–12.

19. Salguero, A.G.; Espinilla, M.; Delatorre, P.; Medina, J. Using Ontologies for the Online Recognition of Activities of Daily Living. Sensors 2018, 18, 1202.

20. Civitarese, G.; Bettini, C.; Sztyler, T.; Riboni, D.; Stuckenschmidt, H. NECTAR: Knowledge-based Collaborative Active Learning for Activity Recognition. In Proceedings of the 2018 IEEE International Conference on Pervasive Computing and Communications (PerCom), Athens, Greece, 19–23 March 2018; pp. 1–10.

21. Safyan, M.; Qayyum, Z.U.; Sarwar, S.; García-Castro, R.; Ahmed, M. Ontology-driven semantic unified modelling for concurrent activity recognition (OSCAR). Multimedia Tools Appl. 2019, 78, 2073–2104.

22. Triboan, D.; Chen, L.; Chen, F.; Wang, Z. A semantics-based approach to sensor data segmentation in real-time Activity Recognition. Future Gener. Comput. Syst. 2019, 93, 224–236.

23. Liu, Y.; Wang, X.; Zhai, Z.; Chen, R.; Zhang, B.; Jiang, Y. Timely daily activity recognition from headmost sensor events. ISA Trans. 2019, in press.

24. Banos, O.; Galvez, J.-M.; Damas, M.; Pomares, H.; Rojas, I. Window Size Impact in Human Activity Recognition. Sensors 2014, 14, 6474–6499.

25. Mannini, A.; Sabatini, A.M. Machine Learning Methods for Classifying Human Physical Activity from On-Body Accelerometers. Sensors 2010, 10, 1154–1175.

26. Frank, E.; Holmes, G.; Pfahringer, B.; Reutemann, P.; Witten, I.H.; Hall, M. The WEKA data mining software: An update. ACM SIGKDD Explor. Newsl. 2009, 11, 10.

27. UCI Repository. Available online: http://archive.ics.uci.edu/ml/datasets.html (accessed on 21 March 2019).

28. Stisen, A.; Blunck, H.; Bhattacharya, S.; Prentow, T.S.; Kjærgaard, M.B.; Dey, A.; Jensen, M.M. Smart devices are different: Assessing and mitigating mobile sensing heterogeneities for activity recognition. In Proceedings of the 13th ACM Conference on Embedded Networked Sensor Systems, Seoul, Korea, 1–4 November 2015; ACM: New York, NY, USA, 2015; pp. 127–140.

29. Jabla, R.; Braham, A.; Buendía, F.; Khemaja, M. A Computing Framework to Check Real-Time Requirements in Ambient Intelligent Systems. In Ambient Intelligence – Software and Applications, 10th International Symposium on Ambient Intelligence; ISAmI. Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2019; pp. 19–26.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).