1. Introduction

Ancient documents are valuable sources of history, culture, and heritage information. Nevertheless, when the ancient manuscripts are written on both pages (recto and verso) of the sheet, often the ink penetrates through the page due to aging, humidity, ink chemical property or paper porosity, and becomes visible as degradation on the other side. This type of degradation is termed as bleed-through, and impairs legibility and interpretation of the document contents. In the past, physical restoration techniques were applied to remove the bleed-through degradation, but unfortunately they are costly, invasive, and can cause permanent damage to the document.

Nowadays, ancient documents are usually archived in the form of digital images for preservation, distribution, retrieval, or analysis. Thus, digital image processing techniques have become a standard for their ability to perform any number of alterations to the document appearance, while leaving the original intact, and have been used with impressive results to remove bleed-through. Indeed, besides being necessary to improve readability, the removal of such degradation is a critical preprocessing step in many tasks such as optical character recognition (OCR), feature extraction, segmentation and automatic transcription.

Generally, bleed-through restoration is handled as a classification problem, where image pixels are labeled as either background, bleed-through, or foreground (original text) [

1]. Broadly speaking, the approaches to bleed-through restoration can be divided into two categories: blind or single-sided and non-blind or double-sided. In blind methods only one side of the document is used, whereas non-blind methods exploit accurately pre-registered recto and verso images of the document. Most of the earlier blind methods involve an intensity based thresholding step [

2]. However, thresholding is not suitable when the aim is to preserve the original appearance of the document. In order to remove bleed-through selectively, in [

3] an independent component analysis (ICA) based method is applied to an RGB image to separate the foreground, background, and bleed-through layers. A dual-layer Markov random field (MRF) is suggested in [

4], whereas in [

5] a conditional random field (CRF) based method is proposed. A non-blind ICA based method is outlined in [

6]. Other methods of this category are proposed in [

7,

8,

9].

A common drawback of all the methods above is the search for an optimal way to replace the bleed-through pattern to be removed. In some of those papers, as a preliminary step, a “clean” background for the entire image is estimated (e.g., [

4,

9]), but this is usually a very laborious task.

In this paper, we propose a two-step method to address bleed-through document restoration from pre-registered recto and verso images. According to the point of view of document appearance preservation, the two steps consist in the selective identification of the bleed-through pattern, followed by its inpainting with the texture of the close background. Bleed-through identification can be performed using, in principle, any of the methods proposed in the literature for this purpose. Here we adopt the bleed-through cancellation algorithm described in [

10], which is simple and very fast. Although efficient in locating bleed-through, also this algorithm suffers from the lack of a proper strategy to replace the unwanted pixels. Indeed, these are assigned with the predominant graylevel of the background. This causes imprints of the bleed-through pattern that are visible in the restored image. An interpolation based inpainting technique for such imprints is presented in [

11], but the filled-in areas are mostly smooth. Here, in the second step of our method, we use a sparse image representation based inpainting to find a befitting fill-in for the bleed-through imprints, according to their neighborhood. This inpainting step, which constitutes the main contribution of the paper, enhances the quality of the bleed-through restored image and preserves the natural paper texture.

The rest of this paper is organized as follows. Next section briefly introduces sparse image representation and dictionary learning. The non-blind bleed-through identification is presented in

Section 3.

Section 4 describes the proposed inpainting technique and the comparative results are discussed in

Section 5.

Section 6 presents some future prospects.

2. Sparse Dictionary Learning

Sparse representation based modeling emerges as a powerful tool in the image processing field [

12], with applications in compression, restoration, inpainting, clustering, recognition, and more. The underlying assumption in this direction is that images contain highly redundant information, therefore they can be approximated by sparse representations of prototype signal-atoms such that very few samples are sufficient to reconstruct a whole image [

13]. The objective in this case is to find a basis, called dictionary, which can be used to represent the given image data by a sparse combination of a few elements of the learned dictionary. Formally, for a given set of signals

where

, the sparse representation consists in learning a suitable dictionary,

( with

), and the sparse vectors with minimal number of non-zero coefficients,

, such that

, where

. In this setting, the sparse decomposition can be formulated as an optimization problem of the following form:

where

is the desired sparsity level in the coefficients

and

is the

norm, with

. An iterative-alternate approach is a natural way to find a solution, by minimizing with respect to one set of variables while keeping the other fixed until some convergence condition is fullfilled [

13,

14].

The sparse vectors indicate the dictionary atoms that are used to generate each signal. Finding the exact sparse representation for signals, given a dictionary, is a NP-hard problem [

15], however using approximate solutions has proven to be a good choice. Commonly used sparse approximation algorithms are Matching Pursuit (MP) [

16], Basis Pursuits (BP) [

17], Focal Undetermined System Solver (FOCUSS) [

18] and Orthogonal Matching Pursuit (OMP) [

19].

The dictionary that can lead to sparse decomposition can either be selected from a set of pre-defined transforms like Wavelets, Fourier, Cosine etc., or can be adaptively learned from the set of training signals [

13,

20]. Although working with pre-defined dictionaries may be simple and fast, their performance might be not good for every case due to their global-adaptivity nature. Instead, learned dictionaries are adaptive to both the signals and the processing task at hand, thus resulting in far better performances [

21,

22].

For a given set of signals

and dictionary

, the dictionary learning algorithms generate a representation of signal

as a sparse linear combination of the atoms

for

,

Dictionary learning algorithms distinguish themselves from traditional model-based methods by the fact that, in addition to

, they also train the dictionary

to better fit the data set

. The solution is generated by iteratively alternating between the sparse coding stage

for

and the dictionary update stage for the

obtained from the sparse coding stage

Dictionary learning algorithms are often sensitive to the choice of

. The update step can either be sequential as in [

14] or parallel as in [

23]. In sequential dictionary learning, the dictionary update minimization problem Equation (

4) is split into

K sequential minimizations, optimizing the cost function Equation (

4) for each individual atom while keeping fixed the remaining atoms. In the method proposed in [

14], which has become a benchmark in dictionary learning, each column

of

and its corresponding row of coefficients

are updated based on a rank-1 matrix approximation of the error for all the signals when

is removed

where

. The singular value decomposition (SVD) of

is used to find the closest rank-1 matrix approximation of

. The

update is taken as the first column of

and the

update is taken as the first column of

multiplied by the first element of

. To avoid the loss of sparsity in

that will be created by the direct application of the SVD on

, in [

14] it was proposed to modify only the non-zero entries of

resulting from the sparse coding stage. This is achieved by taking into account only the signals

that use the atom

in Equation (

5) or by taking the SVD of

, where

and

is the

submatrix of the

identity matrix obtained by retaining only those columns whose index numbers are in

, instead of the SVD of

.

3. Bleed-Through Identification

Provided that the two sides of the manuscript have been written with the same ink and at two close moments, in the majority of the cases the bleed-through text is almost everywhere lighter than the foreground text. Nonetheless, the intensity of bleed-through is usually very variable, and this makes hard to find suitable thresholds to selectively identify and remove it. Indeed, once again we stress the fact that thresholding would likely destroy also the background texture and other slight document marks that genuinely belong to the side at hand. Thus, in [

10] we propose to selectively identify bleed-through and discriminate it from other desired features by looking for those pixels that are lighter than the corresponding pixels in the opposite side.

Three main exceptions to this basic rule are however accounted for. The first one concerns the occlusions, i.e., those areas where the two texts overlap, and that should not be identified as bleed-through in none of the two sides. In these pixels the intensity (graylevel) is the lowest and almost equal in the two corresponding pixels of the recto and verso sides. Therefore, at present we identify the occlusion areas with the aid of suitable thresholds. A specular situation occurs for pixels of background in both sides. Here the intensity is the highest and almost equal in the two sides. Again, proper thresholds can help to discriminate this situation. A third case generates from the typical phenomenon of diffusion of the ink that penetrates through the paper. It may thus happen that, especially at the border of the bleed-through characters, the pixel intensity is lower than that of the (background) pixel in the opposite side, since the text causing the degradation is sharper. To cope with this effect, rather than simply compare the two recto and verso intensities, we compare the intensity in one side with the “smeared” version of the intensity in the opposite side.

Therefore, at each pixel except those identified as occlusions or background, we apply the following formulas to compute the two “seeping levels”

and

:

where the subscripts

indicate recto and verso, respectively,

is the intensity at pixel

,

b is an estimate of the predominant graylevel in the background, and the small constant

is included to avoid indeterminacies or infinity. These formulas comprise two Point Spread Functions (PSF) of unit volume,

and

, which describe the smearing of the seeping ink, thus allowing a pattern in a side to better match the corresponding one in the opposite side. We assume

and

as two Gaussian functions, with size and standard deviation approximately estimated from the extent of the character smearing. At each pixel, we retain the smallest between the two “seeping levels” computed with Equation (

6), to indicate a bleed-through pixel in the related side, and set to zero the other, to indicate a foreground pixel in the opposite side.

In [

10], the strategy described above is applied, with similar performance, using the optical density rather than the intensity, where the optical density is interpreted as a sort of “quantity” of ink, and with reference to a linear additive, non-stationary model to describe the two observed recto and verso densities. The restoration algorithm is completed by substituting the pixels having positive “seeping level” with the background predominant graylevel

b, and leaving unchanged the areas where

. The experimental results proved the efficiency of the algorithm in removing bleed-through while leaving unaltered other salient marks, such as stamps, pencil annotations, paper textures, etc. These marks can be of paramount importance for the scholar studying the document contents and its origin.

4. Bleed-Through Imprint Inpainting

To each side separately, a dictionary based inpainting method is applied to find a suitable fill-in to replace the identified bleed-through pixels. Our aim is to preserve the background texture to ensure the original look of the document. A patch based sparse representation model is used for image inpainting, with a learned dictionary.

Mathematically, the image inpainting problem is to reconstruct the underlying complete image (lexicographically ordered)

from its observed incomplete version

, where

. We assume a sparse representation of

over a dictionary

:

. The incomplete image

and the complete image

are related through

, where

represents the layout of the missing pixels. The image inpainting problem can be formulated as:

Assuming that a well trained dictionary is available, the problem boils down to the estimation of sparse coefficients such that the underlying complete image is given by . To learn the dictionary a training set is created by extracting overlapping patches of size from the image at location , where P is the total number of patches. Then we have , where is an operator that extracts the patch from the image , and its transpose, denoted by , is able to put back a patch into the jth position in the reconstructed image. Considering that patches are overlapped, the recovery of from {} can be obtained by averaging all the overlapped patches.

We learned a dictionary

from the training set

, using the method described in

Section 2. For optimization we used only complete patches from

, i.e., the patches with no bleed-through imprint pixels. This choice speeds up the training process and excludes the ’

’ imprint pixels. For each patch

to be inpainted, we first search for its mutual similar patches in a

bounded neighborhood window. Similar to [

24] we used block matching technique with Euclidean distance metric as similarity criterion. The similar patches are grouped together in a matrix

, where

is the total number of similar patches. Using the learned dictionary

and

, a grouped-based sparse representation method similar to [

25] is used to find the befitting fill-in for the bleed-through imprints. The use of the similar patch group

incorporates the local information in the sparse estimation of the patch and preserves the natural look of the document.

5. Experimental Results

We compared the proposed method with other state-of-the-art methods for images of the database of 25 manuscript recto-verso pairs, presented in [

26,

27]. The input image with bleed-through is first processed for bleed-through detection and removal as discussed in

Section 3.

For imprints inpainting, the training data set

is constructed by selecting all the overlapping patches of size 8 × 8 from the input image. We learned a dictionary

of size

from

, with the sparsity level

and overcomplete DCT as initial dictionary. For each patch to be inpainted, the sparse coefficients are estimated under the learned dictionaries and the similar patches in its

neighbourhood window, as discussed in

Section 4. The estimated sparse coefficient vector of each patch is denoted by

, where

j indicates the number of the patch. The reconstructed patch is then obtained as

.

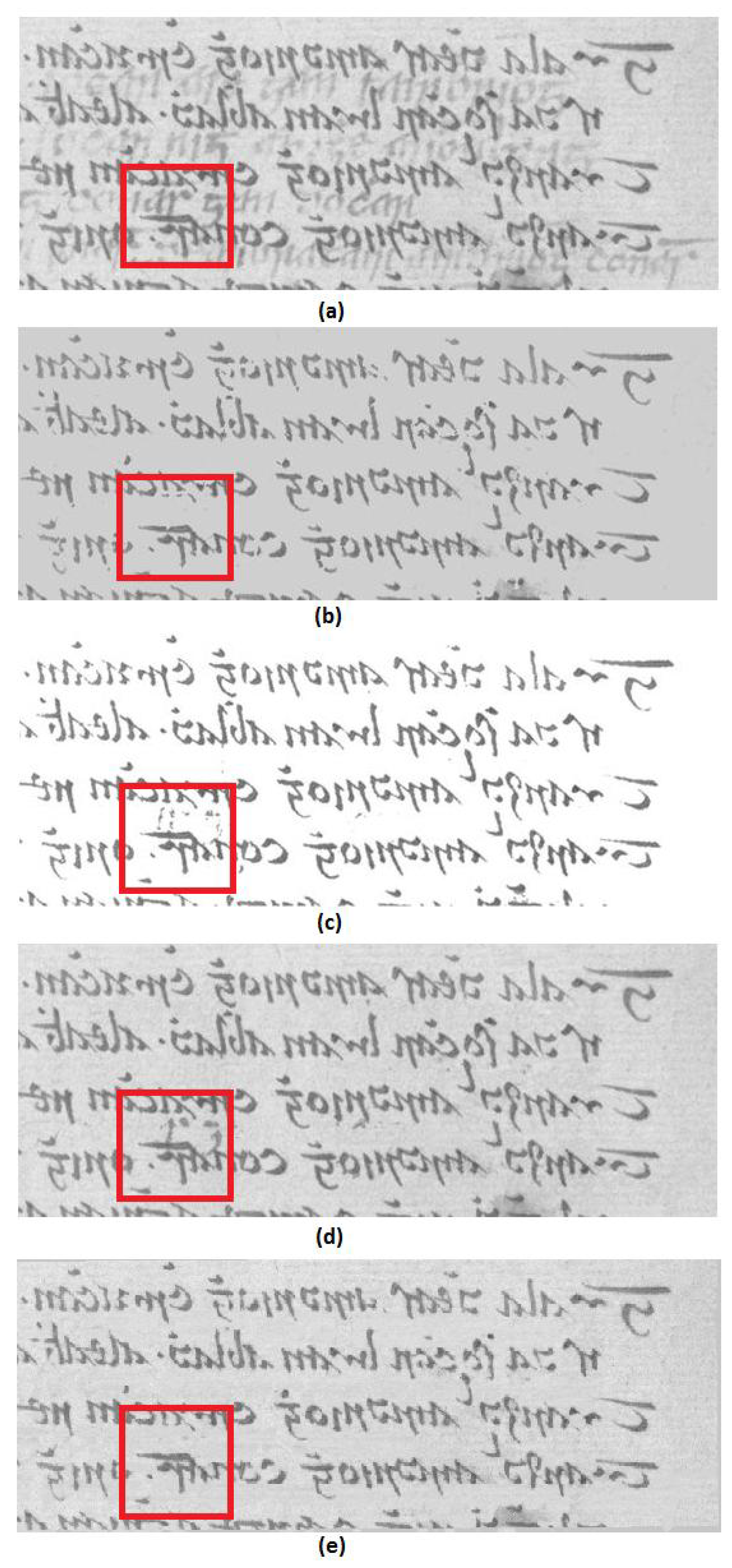

In bleed-through restoration the efficacy is generally compared qualitatively, as in most cases the original clean image is not available. A visual comparision of the proposed method with other state-of-the-art methods is presented in

Figure 1. As can be seen, the proposed method (

Figure 1e) produces a better result compared with the other methods, especially in the area bounded by the red rectangle. The dual-layer MRF approach with user trained likelihood of [

7] (

Figure 1b) flattens the background and removes the original text in the overlapping cases. The wavelet based method in [

8] (

Figure 1c) produces a binary image and fails to handle darker bleed-through. The non-parametric method of [

9] (

Figure 1d) produces better results but again fails in some dark bleed-through regions. The proposed method copes very well with bleed-through removal and the dictionary based inpainting preserves the original look of the document.