A Convolutional Network for the Classification of Sleep Stages †

Abstract

1. Introduction

2. Materials

3. Method

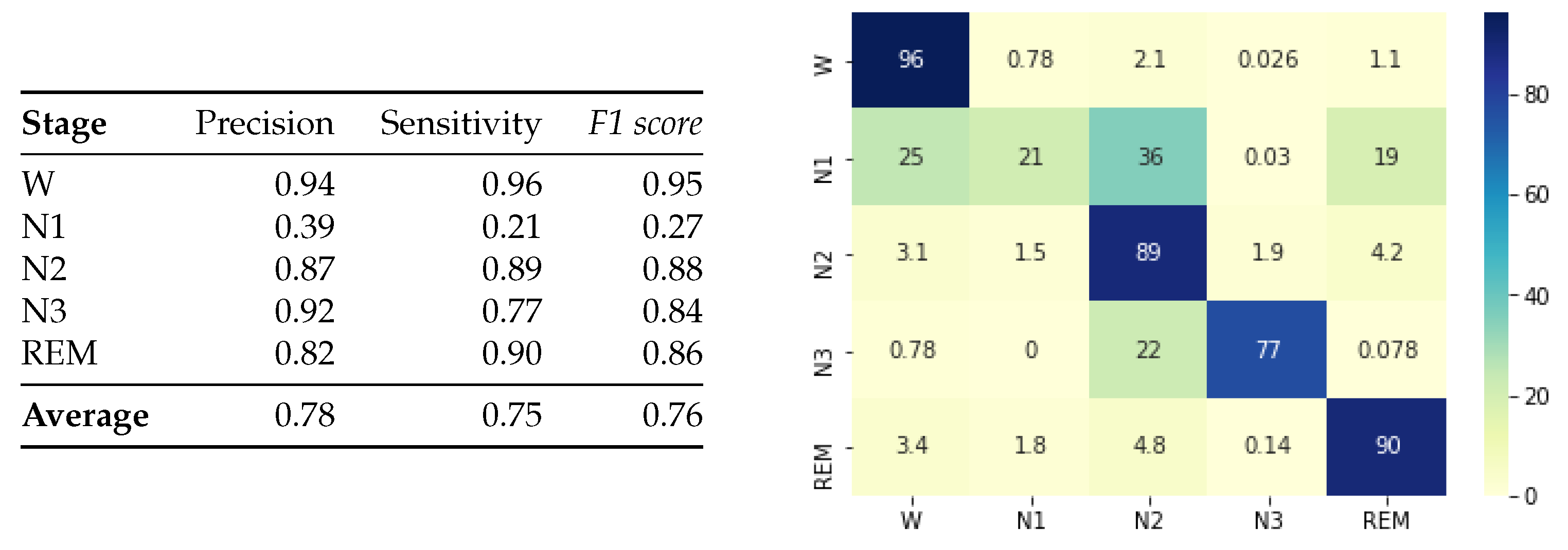

4. Results

5. Discussion and Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

1. Ohayon, M.M.; Sagales, T. Prevalence of insomnia and sleep characteristics in the general population of Spain. Sleep Med. 2010, 11, 1010–1018.

2. Marin, J.; Gascon, J.M.; Carrizo, S.; Gispert, J. Prevalence of sleep apnoea syndrome in the Spanish adult population. Int. J. Epidemiol. 1997, 26, 381–386.

3. Stepnowsky, C.; Levendowski, D.; Popovic, D.; Ayappa, I.; Rapoport, D.M. Scoring accuracy of automated sleep staging from a bipolar electroocular recording compared to manual scoring by multiple raters. Sleep Med. 2013, 14, 1199–1207.

4. Liang, J.; Lu, R.; Zhang, C.; Wang, F. Predicting Seizures from Electroencephalography Recordings: A Knowledge Transfer Strategy. In Proceedings of the 2016 IEEE International Conference on Healthcare Informatics (ICHI 2016), Chicago, IL, USA, 4–7 October 2016; pp. 184–191.

5. Hassan, A.R.; Bhuiyan, M.I.H. A decision support system for automatic sleep staging from EEG signals using tunable Q-factor wavelet transform and spectral features. J. Neurosci. Meth. 2016, 271, 107–118.

6. Sharma, R.; Pachori, R.B.; Upadhyay, A. Automatic sleep stages classification based on iterative filtering of electroencephalogram signals. Neural Comput. Appl. 2017, 28, 2959–2978.

7. Lajnef, T.; Chaibi, S.; Ruby, P.; Aguera, P.E.; Eichenlaub, J.B.; Samet, M.; Kachouri, A.; Jerbi, K. Learning machines and sleeping brains: Automatic sleep stage classification using decision-tree multi-class support vector machines. J. Neurosci. Meth. 2015, 250, 94–105.

8. Huang, C.S.; Lin, C.L.; Ko, L.W.; Liu, S.Y.; Su, T.P.; Lin, C.T. Knowledge-based identification of sleep stages based on two forehead electroencephalogram channels. Front. Neurosci. 2014, 8, 263.

9. Längkvist, M.; Karlsson, L.; Loutfi, A. Sleep Stage Classification Using Unsupervised Feature Learning. Adv. Artif. Neural Syst. 2012, 2012, 1–9.

10. Tsinalis, O.; Matthews, P.M.; Guo, Y.; Zafeiriou, S. Automatic Sleep Stage Scoring with Single-Channel EEG Using Convolutional Neural Networks. arXiv 2016, arXiv:1610.01683.

11. Supratak, A.; Dong, H.; Wu, C.; Guo, Y. DeepSleepNet: A Model for Automatic Sleep Stage Scoring Based on Raw Single-Channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1998–2008.

12. Biswal, S.; Kulas, J.; Sun, H.; Goparaju, B.; Westover, M.B.; Bianchi, M.T.; Sun, J. SLEEPNET: Automated Sleep Staging System via Deep Learning. arXiv 2017, arXiv:1707.08262.

13. Sors, A.; Bonnet, S.; Mirek, S.; Vercueil, L.; Payen, J.F. A convolutional neural network for sleep stage scoring from raw single-channel EEG. Biomed. Signal Process. Control 2018, 42, 107–114.

14. Quan, S.F.; Howard, B.V.; Iber, C.; Kiley, J.P.; Nieto, F.J.; O’Connor, G.T.; Rapoport, D.M.; Redline, S.; Robbins, J.; Samet, J.M.; et al. The Sleep Heart Health Study: Design, Rationale, and Methods. Sleep 1997, 20, 1077–1085.

15. Bonnet, M.H.; Carley, D.; Carskadon, M. EEG arousals: Scoring rules and examples: a preliminary report from the Sleep Disorders Atlas Task Force of the American Sleep Disorders Association. Sleep 1992, 15, 173–184.

16. Fernández-Varela, I.; Alvarez-Estevez, D.; Hernández-Pereira, E.; Moret-Bonillo, V. A simple and robust method for the automatic scoring of EEG arousals in polysomnographic recordings. Comput. Biol. Med. 2017, 87, 77–86.

17. Tsinalis, O.; Matthews, P.M.; Guo, Y. Automatic Sleep Stage Scoring Using Time-Frequency Analysis and Stacked Sparse Autoencoders. Ann. Biomed. Eng. 2016, 44, 1587–1597.

| Work | Dataset | Kappa | F1 (W) | F1 (N1) | F1 (N2) | F1 (N3) | F1 (REM) |
|---|---|---|---|---|---|---|---|
| Biswal et al. [12] | Massachusetts General Hospital, 1000 recordings | 0.77 | 0.81 | 0.70 | 0.77 | 0.83 | 0.92 |
| Längkvist et al. [9] | St Vincent’s University Hospital, 25 recordings | 0.63 | 0.73 | 0.44 | 0.65 | 0.86 | 0.80 |
| Sors et al. [13] | SHHS, 1730 recordings | 0.81 | 0.91 | 0.43 | 0.88 | 0.85 | 0.85 |
| Supratak et al. [11] | MASS dataset, 62 recordings | 0.80 | 0.87 | 0.60 | 0.90 | 0.82 | 0.89 |
| Supratak et al. [11] | SleepEDF, 20 recordings | 0.76 | 0.85 | 0.47 | 0.86 | 0.85 | 0.82 |
| Tsinalis et al. [10] | SleepEDF, 39 recordings | 0.71 | 0.72 | 0.47 | 0.85 | 0.84 | 0.81 |
| Tsinalis et al. [17] | SleepEDF, 39 recordings | 0.66 | 0.67 | 0.44 | 0.81 | 0.85 | 0.76 |
| This work | SHHS, 500 recordings | 0.83 | 0.95 | 0.27 | 0.88 | 0.84 | 0.86 |
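
The table compares works by Cohen's kappa and per-stage F1 score. As a reading aid, the sketch below shows how both metrics are commonly derived from a confusion matrix. It is not the authors' evaluation code: the function names `cohen_kappa` and `f1_per_class` and the toy matrix are hypothetical, and the matrix values are invented for illustration, not taken from any of the listed works.

```python
def cohen_kappa(cm):
    """Cohen's kappa from a square confusion matrix.

    cm[i][j] counts epochs whose reference stage is i and predicted stage is j.
    Assumes at least two stages occur, so chance agreement is below 1.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    # Observed agreement: fraction of epochs on the diagonal.
    observed = sum(cm[i][i] for i in range(n)) / total
    row_sums = [sum(row) for row in cm]
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    # Agreement expected by chance from the marginal distributions.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)
    return (observed - expected) / (1 - expected)


def f1_per_class(cm):
    """One-vs-rest F1 score for each class of a square confusion matrix."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # class k epochs that were missed
        fp = sum(cm[i][k] for i in range(n)) - tp   # epochs wrongly labelled as k
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return scores


# Invented toy matrix (rows: reference, columns: predicted), NOT data from the paper.
stages = ["W", "N1", "N2", "N3", "REM"]
toy_cm = [
    [90, 3, 4, 0, 3],
    [10, 8, 12, 0, 5],
    [4, 3, 150, 8, 5],
    [0, 0, 10, 40, 0],
    [3, 2, 6, 0, 60],
]

print(f"kappa = {cohen_kappa(toy_cm):.2f}")
for stage, score in zip(stages, f1_per_class(toy_cm)):
    print(f"F1({stage}) = {score:.2f}")
```

Kappa corrects overall agreement for chance, so a dominant stage inflates it less than it inflates plain accuracy, while the per-stage F1 columns show which stages drive the errors; N1 has the lowest F1 in every row of the table.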

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fernández-Varela, I.; Hernández-Pereira, E.; Moret-Bonillo, V. A Convolutional Network for the Classification of Sleep Stages. Proceedings 2018, 2, 1174. https://doi.org/10.3390/proceedings2181174