General Theory of Information and Mindful Machines

Abstract

1. Introduction

- Highlight limitations of scaling-driven approaches to intelligence;

- Introduce the Mindful Machines paradigm, grounded in the General Theory of Information (GTI), which encodes goals, structure, and ethical constraints directly into the system architecture;

- Demonstrate feasibility through working prototypes in distributed computing and medical decision support.

2. The Emergent AI Paradigm

3. Mindful Machines: Foundations of a Post-Turing Cognition

4. Real-World Prototypes: Demonstrating the Feasibility

5. Conclusions

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Explanations

- Large Language Model (LLM): A transformer-based probabilistic program modeling P(next token ∣ context) over large vocabularies, learned from internet-scale corpora. Generates text/code via conditional sampling; has no intrinsic world model, goals, or persistent episodic memory unless extended.
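To make "conditional sampling" concrete, the sketch below shows autoregressive generation in miniature. It is a toy that rests on stated assumptions:

- The transformer is replaced by a hand-written table of P(next token ∣ context).
- The table conditions only on the last token, which is a bigram simplification.
- The vocabulary and probabilities are invented.

Each call to `generate` starts from a fresh context. This mirrors the point that a bare model keeps no persistent episodic memory between calls.

```python
import random

# Hypothetical toy model: P(next token | last token). A real LLM conditions on
# the whole context window through a transformer; this table is only a stand-in.
NEXT_TOKEN_PROBS = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"machine": 0.5, "model": 0.5},
    "a": {"machine": 0.7, "model": 0.3},
    "machine": {"learns": 0.6, "</s>": 0.4},
    "model": {"learns": 0.5, "</s>": 0.5},
    "learns": {"</s>": 1.0},
}


def sample_next(context: list[str], rng: random.Random) -> str:
    """Draw one token from P(next token | context), using only the last token."""
    dist = NEXT_TOKEN_PROBS[context[-1]]
    tokens = list(dist)
    weights = [dist[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def generate(rng: random.Random, max_len: int = 10) -> list[str]:
    """Autoregressive loop: append sampled tokens until </s> or max_len."""
    context = ["<s>"]  # fresh context on every call: no memory carried over
    while len(context) < max_len:
        token = sample_next(context, rng)
        context.append(token)
        if token == "</s>":
            break
    return context


if __name__ == "__main__":
    print(" ".join(generate(random.Random(0))))
```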

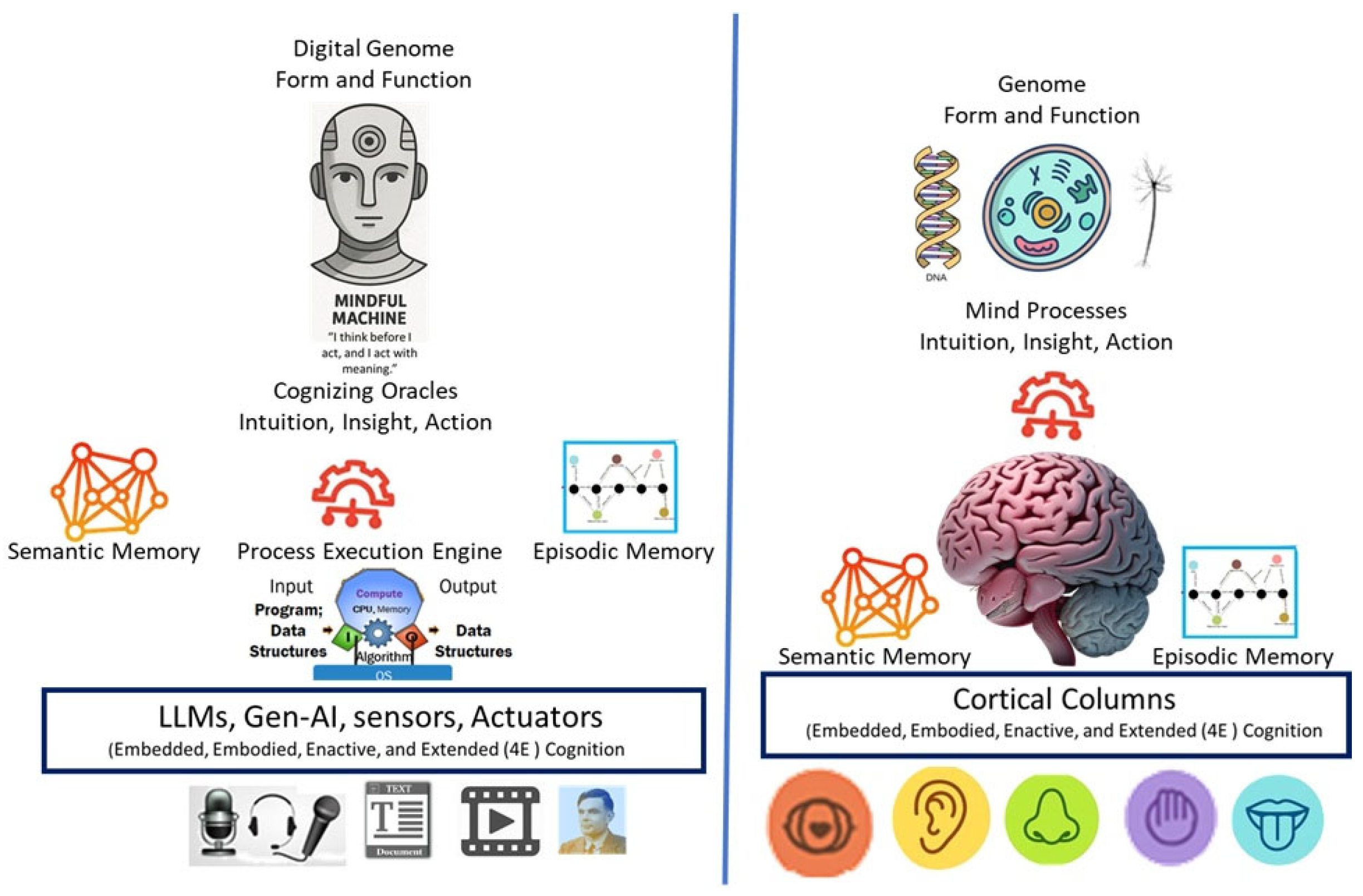

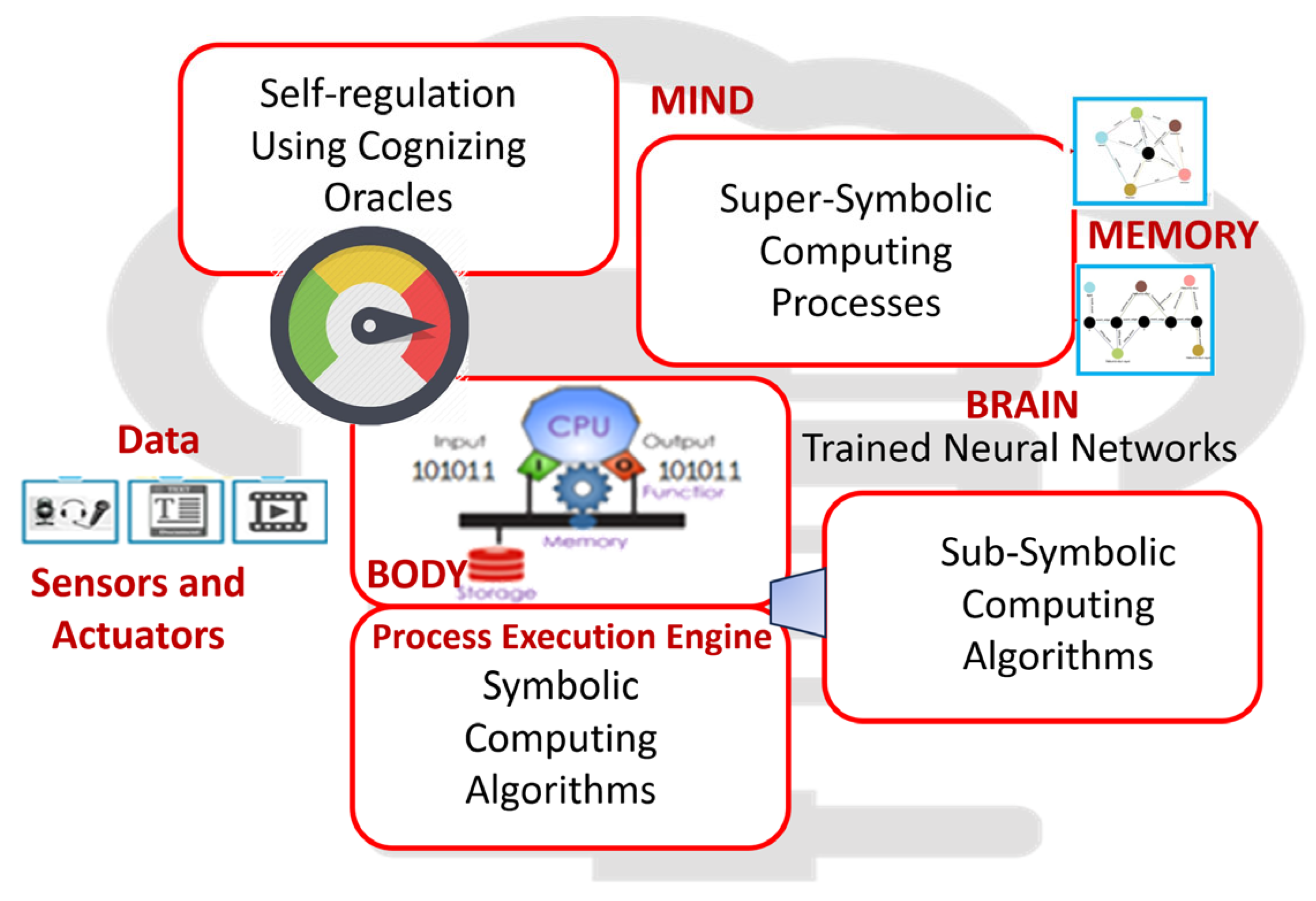

- Cognizing Oracles: Runtime epistemic middleware that bridges goals/policies and execution. Reads Digital Genome + telemetry; consults memory and causal models; chooses and justifies actions (place/move/scale/stop services; adjust configs) to satisfy goals with minimum cost/energy; writes back outcomes for learning.

- Unity of the Computer and the Computed (Figure 1): Achieved by co-specifying function, form, and policy in the Digital Genome; compiling them into live graphs via Structural Machines; orchestrating them continuously with Cognizing Oracles; executing them with Autopoietic Managers; and closing the learning loop through integrated semantic/episodic memory.

- Digital Genome (DG) and Non-Digital Genome: DG = versioned, executable knowledge graph encoding teleological goals, functional specs, non-functional intents, structural patterns, lifecycle policies, and model/memory references. Non-Digital Genomes = biological (chemical) prescriptive blueprints; DG is the engineered, digital counterpart.

- Autopoietic Systems (Figure 2) vs. von Neumann Self-Reproduction: von Neumann self-reproduction is the syntactic replication of a description by a universal constructor in an abstract lattice, with no goals or semantics.

- Autopoietic Mindful Systems: sustain and adapt their organization in real environments. Their replication, healing, and migration are goal-driven, informed by memory and semantics, energy-aware, and optimized against service-level objectives (SLOs). They couple operational closure with environmental adaptation and learn from history.
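The division of labor between the Digital Genome and a Cognizing Oracle can be illustrated with a minimal sketch. All names and numbers here are hypothetical:

- `DigitalGenome` keeps only a version, a goal, and one latency bound. The bound stands in for the non-functional intents.
- Each candidate `Action` carries an invented latency prediction, used in place of a causal model, and an invented energy cost.

The oracle works in four steps:

1. It keeps only the actions predicted to meet the bound.
2. It picks the lowest-energy one.
3. It returns a justification.
4. It writes the observed outcome back to an episodic memory list.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DigitalGenome:
    """Hypothetical, heavily simplified Digital Genome (DG) record."""
    version: int
    goal: str
    max_latency_ms: float  # one non-functional intent, expressed as an SLO bound


@dataclass(frozen=True)
class Action:
    """Hypothetical candidate action (e.g. scale, move, stop a service)."""
    name: str
    predicted_latency_ms: float  # stand-in for a causal-model prediction
    energy_cost: float           # arbitrary energy units


@dataclass
class CognizingOracle:
    """Toy oracle: choose the cheapest action predicted to satisfy the DG goal."""
    genome: DigitalGenome
    episodic_memory: list = field(default_factory=list)

    def decide(self, telemetry_latency_ms: float, candidates: list[Action]) -> tuple[Action, str]:
        # Keep only actions whose predicted latency meets the DG's SLO bound.
        feasible = [a for a in candidates if a.predicted_latency_ms <= self.genome.max_latency_ms]
        if not feasible:
            raise RuntimeError("no candidate action satisfies the SLO")
        # Among feasible actions, minimize energy cost.
        choice = min(feasible, key=lambda a: a.energy_cost)
        reason = (
            f"latency {telemetry_latency_ms} ms vs. SLO {self.genome.max_latency_ms} ms; "
            f"'{choice.name}' is the lowest-energy feasible action"
        )
        return choice, reason

    def record(self, action: Action, observed_latency_ms: float) -> None:
        """Write the outcome back so later decisions can draw on history."""
        self.episodic_memory.append(
            {"dg_version": self.genome.version, "action": action.name,
             "observed_latency_ms": observed_latency_ms}
        )


if __name__ == "__main__":
    dg = DigitalGenome(version=3, goal="serve requests", max_latency_ms=200.0)
    oracle = CognizingOracle(dg)
    actions = [
        Action("do_nothing", predicted_latency_ms=350.0, energy_cost=0.0),
        Action("scale_out_one_replica", predicted_latency_ms=180.0, energy_cost=5.0),
        Action("migrate_to_larger_node", predicted_latency_ms=120.0, energy_cost=9.0),
    ]
    chosen, why = oracle.decide(telemetry_latency_ms=340.0, candidates=actions)
    print(chosen.name, "|", why)
    oracle.record(chosen, observed_latency_ms=175.0)
```

Picking the cheapest feasible action is only one plausible reading of "satisfy goals with minimum cost/energy". A fuller oracle would presumably also weigh semantic memory and richer policies.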
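The contrast between von Neumann self-reproduction and autopoietic maintenance can be sketched in a few lines. `von_neumann_copy` duplicates a description unconditionally. `AutopoieticManager.reconcile` works differently:

- It derives a replica count from a goal: expected load divided by an assumed per-replica capacity.
- It heals only the gap left by failed replicas.
- It releases surplus replicas to save energy.
- It appends each decision to a history list.

This desired-state reconciliation loop is a common orchestration pattern, chosen here for illustration. The names and the capacity rule are hypothetical and are not the author's Autopoietic Manager.

```python
import math
from dataclasses import dataclass, field


def von_neumann_copy(description: str) -> tuple[str, str]:
    """Syntactic self-reproduction: copy the description; no goals or semantics."""
    return description, description


def desired_replicas(expected_rps: float, rps_per_replica: float) -> int:
    """Hypothetical goal rule: enough replicas to carry the expected load (at least 1)."""
    return max(1, math.ceil(expected_rps / rps_per_replica))


@dataclass
class AutopoieticManager:
    """Toy manager that maintains a goal-derived replica set and logs its decisions."""
    rps_per_replica: float
    history: list = field(default_factory=list)

    def reconcile(self, healthy: set[str], expected_rps: float) -> set[str]:
        target = desired_replicas(expected_rps, self.rps_per_replica)
        replicas = set(healthy)
        next_id = 0
        # Heal: create replacement replicas only for the missing gap.
        while len(replicas) < target:
            name = f"replica-{next_id}"
            next_id += 1
            replicas.add(name)  # no-op if that name is already healthy
        # Energy-aware: release surplus replicas (highest name first).
        while len(replicas) > target:
            replicas.remove(sorted(replicas)[-1])
        self.history.append({"healthy_before": len(healthy), "target": target, "after": len(replicas)})
        return replicas


if __name__ == "__main__":
    manager = AutopoieticManager(rps_per_replica=100.0)
    # replica-1 has failed; an expected load of 250 rps calls for 3 replicas.
    print(sorted(manager.reconcile({"replica-0", "replica-2"}, expected_rps=250.0)))
    # prints ['replica-0', 'replica-1', 'replica-2']
```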

Appendix B. Hardware Limitations and Energy Utilization


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mikkilineni, R. General Theory of Information and Mindful Machines. Proceedings 2025, 126, 3. https://doi.org/10.3390/proceedings2025126003
