Axiology and the Evolution of Ethics in the Age of AI: Integrating Ethical Theories via Multiple-Criteria Decision Analysis

Abstract

1. Introduction

2. Theoretical Foundations

2.1. Responsible AI: From Principles to Practice

2.2. Digital Humanism: A Philosophical Foundation

2.3. Axiology and Ethical Pluralism

- Intrinsic Values: Dignity, fairness, and autonomy—valued for their own sake.

- Instrumental Values: Accuracy, efficiency, scalability—valued as means to an end.

2.4. Multi-Criteria Decision Analysis (MCDA) in Ethical AI

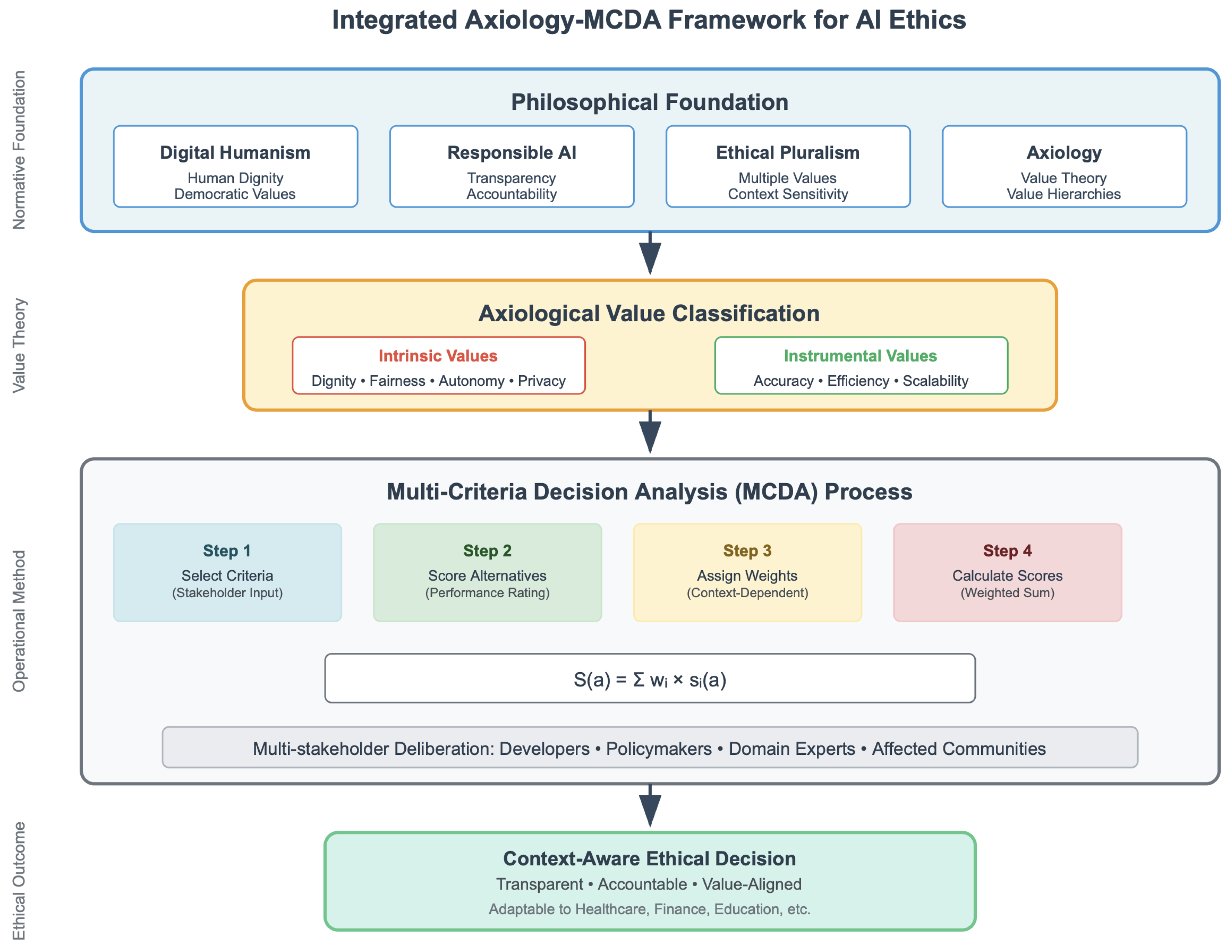

3. Integrating the Axiology–MCDA Framework

3.1. Normative Foundation

3.2. Value Classification

3.3. From Values to Action: MCDA Operational Method

- Select Criteria: Stakeholders collaboratively identify relevant ethical values (e.g., accuracy, privacy, fairness, dignity).

- Score Alternatives: Each AI system is rated on a common scale (e.g., 1–4) for each criterion.

- Assign Weights: Stakeholders assign relative importance to each criterion based on context.

- Calculate Integrated Score: Mathematical aggregation is used to combine weighted performance scores across all ethical criteria.
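The aggregation step can be sketched in a few lines. The text only says "mathematical aggregation", so the weighted sum used here is an assumption (it is one common MCDA rule, not necessarily the only one the framework admits). The function name `integrated_score` and the example criteria are hypothetical.

```python
from typing import Mapping


def integrated_score(scores: Mapping[str, float],
                     weights: Mapping[str, float]) -> float:
    """Combine per-criterion scores into one value.

    Assumption: a plain weighted sum, normalized by the total weight so
    the result stays on the same scale as the input scores.
    """
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same criteria")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[c] * scores[c] for c in scores) / total_weight


# Hypothetical example: two criteria scored on a 1-4 scale, equal weights.
example_scores = {"accuracy": 4, "privacy": 2}
example_weights = {"accuracy": 0.5, "privacy": 0.5}
print(integrated_score(example_scores, example_weights))  # 3.0
```

Normalizing by the total weight lets stakeholders express importance on any convenient scale (for example 7 and 3 instead of 0.7 and 0.3) without changing the ranking.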

3.4. Ethical Outcome

4. Illustrative Scenario: Ethical Evaluation of AI Diagnostics in Healthcare

4.1. Scenario and System Alternatives

- System A: This system is optimized for diagnostic accuracy, using extensive patient data (e.g., genomics, family history) to enhance precision.

- System B: This system is designed for privacy, using consent-driven data minimization and differential privacy techniques to protect patient information.

- Physicians prioritize accuracy to support clinical effectiveness, especially in high-stakes environments such as emergency care.

- Patient advocates emphasize privacy, autonomy, and informed consent, reflecting broader societal concerns about data protection and patient dignity.

- Public health officials seek a balanced approach, valuing both clinical performance and community trust, particularly in population-level health initiatives.

4.2. Applying the MCDA Framework

- In routine outpatient care, System B is clearly preferred (3.5 vs. 3.0 under equal weights), reflecting the importance of patient autonomy, informed consent, and data minimization in routine clinical interactions.

- In emergency care, System A slightly outperforms System B (3.4 vs. 3.3), demonstrating that while diagnostic accuracy is paramount, privacy remains ethically relevant even under clinical urgency.

- In community health, System B significantly outperforms System A (3.7 vs. 2.6), emphasizing the importance of trust, privacy, and social accountability in public health initiatives targeting vulnerable populations.
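The narrow margin in emergency care suggests asking where the preference flips. The sketch below solves for the accuracy weight at which two systems tie, assuming a two-criterion weighted sum with weights `w` and `1 - w`. The per-criterion scores (System A: accuracy 4, privacy 2; System B: accuracy 3, privacy 4) are not stated in the text; they are assumed values on the 1–4 scale that happen to reproduce the reported scenario scores. The function name is hypothetical.

```python
from typing import Optional, Tuple


def accuracy_weight_at_tie(system_a: Tuple[float, float],
                           system_b: Tuple[float, float]) -> Optional[float]:
    """Return the accuracy weight w at which both systems score equally.

    Each system is (accuracy_score, privacy_score) and the assumed rule is
    S = w * accuracy + (1 - w) * privacy. Returns None when the two score
    lines are parallel, i.e. no single crossover exists.
    """
    a_acc, a_priv = system_a
    b_acc, b_priv = system_b
    denom = (a_acc - a_priv) - (b_acc - b_priv)
    if denom == 0:
        return None
    return (b_priv - a_priv) / denom


# Assumed scores, chosen to be consistent with the reported results.
w_tie = accuracy_weight_at_tie((4, 2), (3, 4))
print(round(w_tie, 3))  # 0.667
```

Under these assumptions System A is preferred only when accuracy carries more than about two thirds of the total weight, which is why a 0.7/0.3 weighting favors it by a small margin while 0.5/0.5 and 0.3/0.7 favor System B.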

4.3. Insights

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Scenario | Weights (Accuracy/Privacy) | System A Score | System B Score | Preferred System |
|---|---|---|---|---|
| Routine Outpatient Clinic | 0.5/0.5 | 3.0 | 3.5 | B |
| Emergency Department | 0.7/0.3 | 3.4 | 3.3 | A |
| Community Health | 0.3/0.7 | 2.6 | 3.7 | B |
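The table can be reproduced with a short script, which also makes the arithmetic auditable. The scenario weights come from the table; the per-criterion scores (System A: accuracy 4, privacy 2; System B: accuracy 3, privacy 4) are an assumption, chosen because a weighted sum over them yields exactly the tabulated values. All names below are hypothetical.

```python
# Assumed per-criterion scores on a 1-4 scale (not given in the table).
SYSTEMS = {
    "A": {"accuracy": 4, "privacy": 2},
    "B": {"accuracy": 3, "privacy": 4},
}

# Scenario weights as listed in the table (accuracy/privacy).
SCENARIOS = {
    "Routine Outpatient Clinic": {"accuracy": 0.5, "privacy": 0.5},
    "Emergency Department": {"accuracy": 0.7, "privacy": 0.3},
    "Community Health": {"accuracy": 0.3, "privacy": 0.7},
}


def weighted_score(scores, weights):
    """Weighted sum of criterion scores (assumed aggregation rule)."""
    return sum(weights[c] * scores[c] for c in weights)


def evaluate(systems, scenarios):
    """Return {scenario: (scores_by_system, preferred_system)}."""
    results = {}
    for scenario, weights in scenarios.items():
        totals = {name: round(weighted_score(scores, weights), 1)
                  for name, scores in systems.items()}
        preferred = max(totals, key=totals.get)
        results[scenario] = (totals, preferred)
    return results


for scenario, (totals, preferred) in evaluate(SYSTEMS, SCENARIOS).items():
    print(f"{scenario}: A={totals['A']}, B={totals['B']}, preferred={preferred}")
```

Changing a weight or a score and rerunning shows how sensitive each recommendation is to stakeholder input, which is the main practical benefit of keeping the evaluation explicit.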

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, F.; Isovic, D.; Dodig-Crnkovic, G. Axiology and the Evolution of Ethics in the Age of AI: Integrating Ethical Theories via Multiple-Criteria Decision Analysis. Proceedings 2025, 126, 17. https://doi.org/10.3390/proceedings2025126017