Insights Gained from Using AI to Produce Cases for Problem-Based Learning

Abstract

1. Introduction

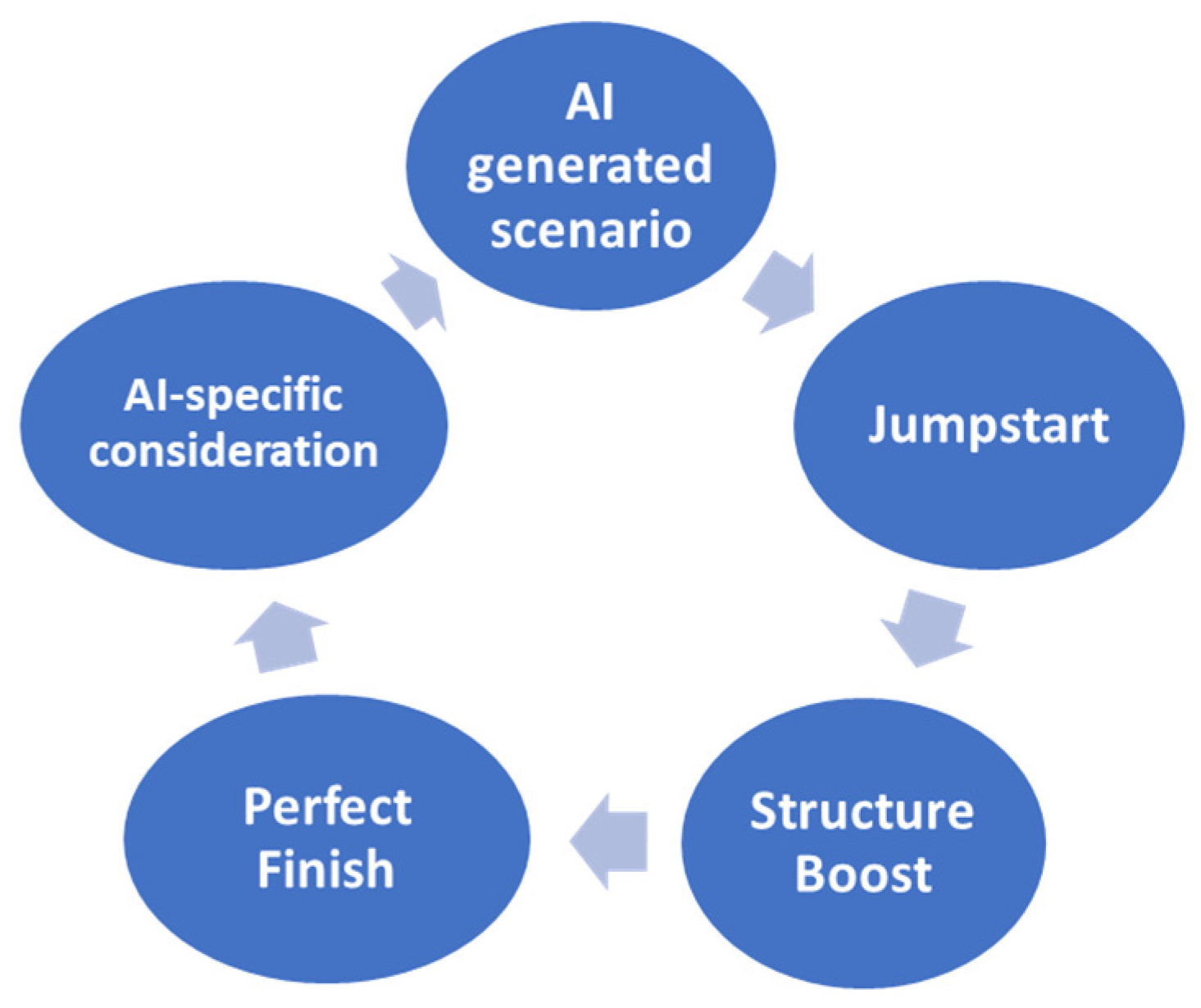

2. Practical Considerations

2.1. A Jumpstart: Prompt Development and Objectives

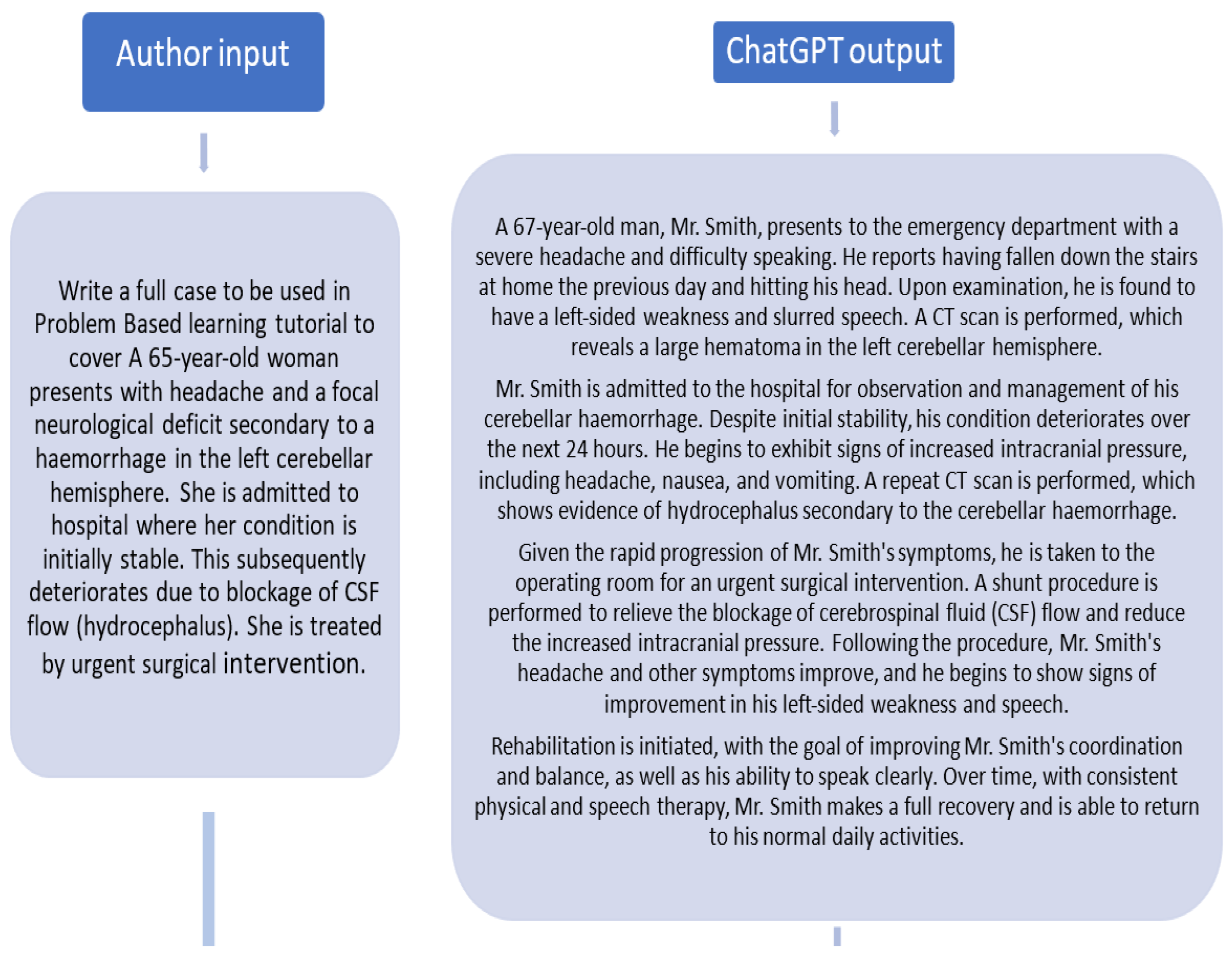

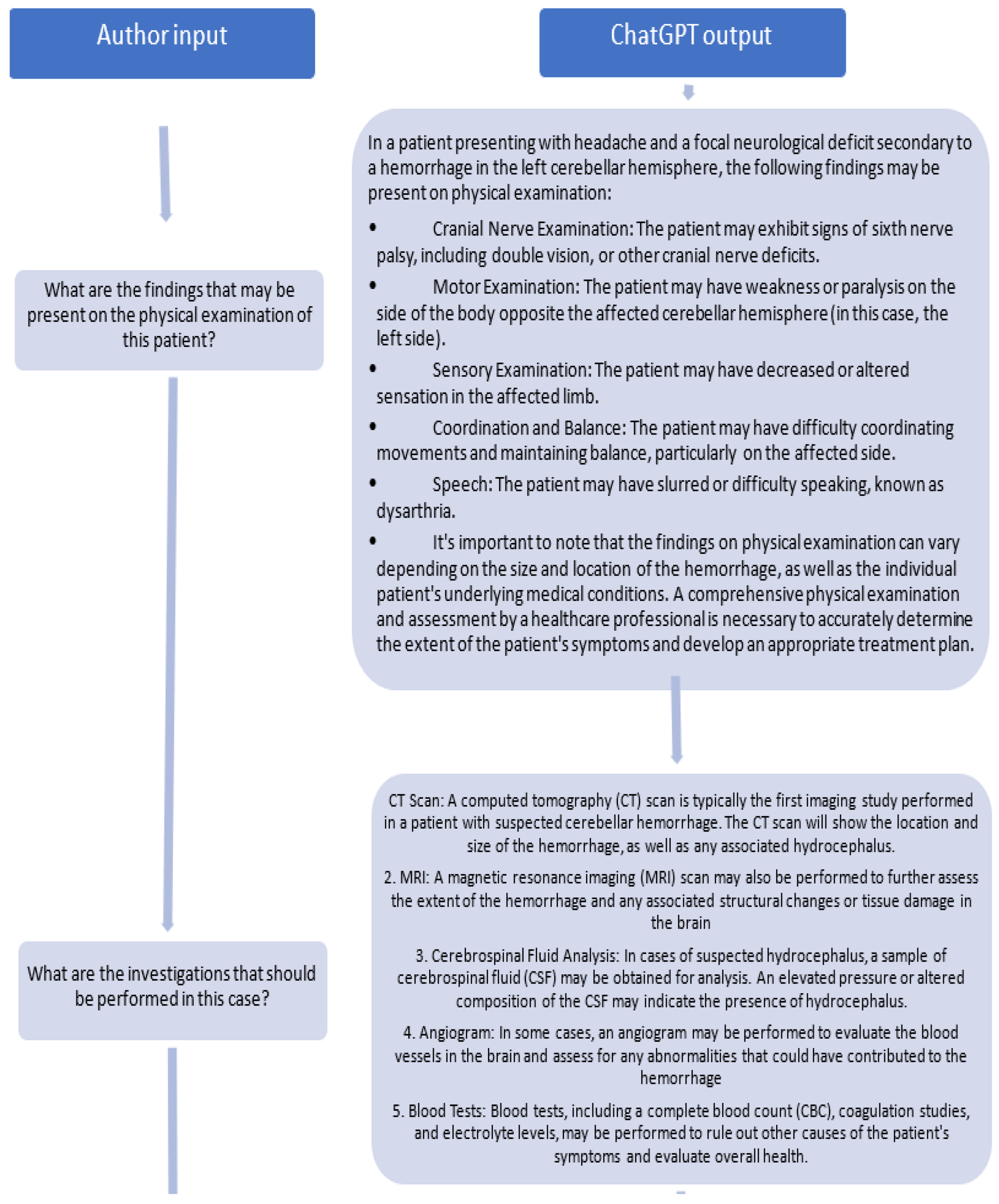

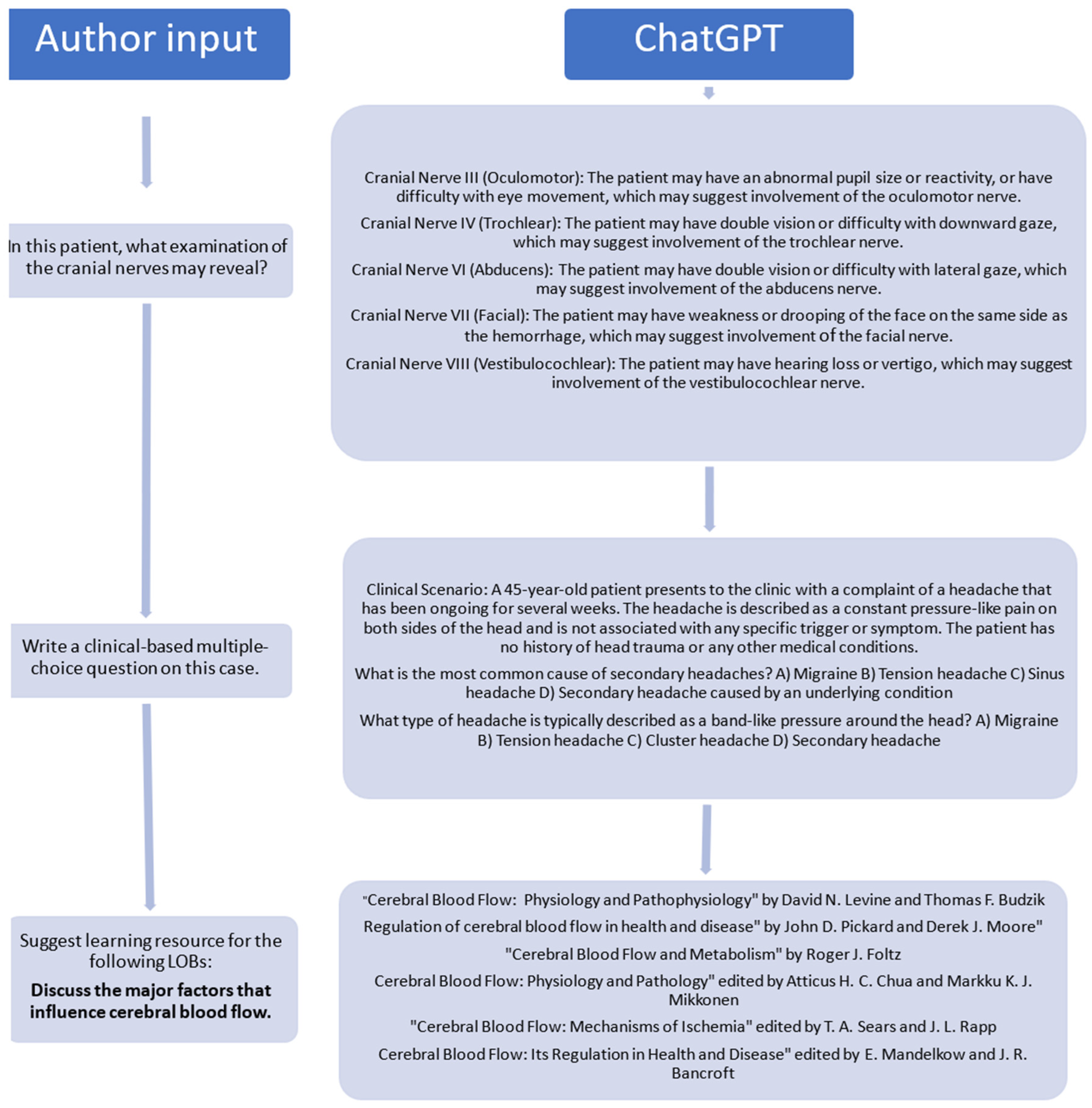

2.1.1. Prompt Development

2.1.2. Predefined Objectives

2.2. Structure Boost: Augmenting Your Case Study Framework

2.2.1. Use of Template

2.2.2. Specify the Working Protocol/Region

2.2.3. Add Sources for Scans/Radiological Input

2.3. Perfect Finish: Quality Assurance and Refinement

2.3.1. Use of References

2.3.2. Review Panel

2.3.3. Do Not Underestimate the Importance of Interdisciplinary Collaboration

2.4. AI-Specific Considerations

2.4.1. Remember That ChatGPT Is Not a Human

2.4.2. Avoid False Confidence

2.4.3. Train Your Chatbot

2.4.4. Supplementary Tools: Plugins for Diagrams, Pictures, and Videos

3. Implications

4. Ethical Issues in Artificial Intelligence

5. Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Abouzeid, E.; Harris, P. Insights Gained from Using AI to Produce Cases for Problem-Based Learning. Proceedings 2025, 114, 5. https://doi.org/10.3390/proceedings2025114005
